Code download: https://github.com/tazhigang/big-data-github.git

I. Requirement: write the statistics to separate output files according to the province each phone number belongs to (partitioning)

II. Data preparation

- Input data: phoneData.txt from Case 2

- Records are partitioned by the first three digits of the phone number

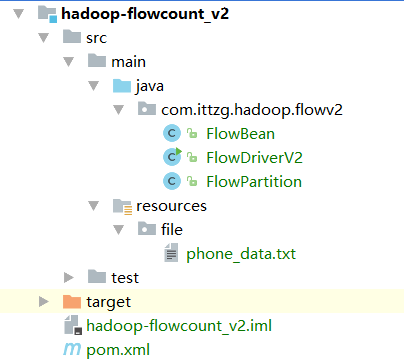
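The prefix rule can be tried out without a Hadoop cluster. The following is a minimal sketch in plain Java, assuming the mapping used in this case (135, 136 and 137 go to partitions 0, 1 and 2, everything else to partition 3). The class name `PrefixPartitionSketch`, the helper `partitionFor` and the sample phone numbers are made up for illustration and are not part of the project.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Standalone sketch (no Hadoop dependency) of the prefix-to-partition rule.
final class PrefixPartitionSketch {

    // Prefixes handled explicitly; any other prefix falls into DEFAULT_PARTITION.
    private static final Map<String, Integer> PREFIX_TO_PARTITION = new LinkedHashMap<>();
    private static final int DEFAULT_PARTITION = 3;

    static {
        PREFIX_TO_PARTITION.put("135", 0);
        PREFIX_TO_PARTITION.put("136", 1);
        PREFIX_TO_PARTITION.put("137", 2);
    }

    // Hypothetical helper: returns the partition index for a phone number.
    static int partitionFor(String phoneNumber) {
        // Keys shorter than three characters cannot match any prefix.
        if (phoneNumber == null || phoneNumber.length() < 3) {
            return DEFAULT_PARTITION;
        }
        String prefix = phoneNumber.substring(0, 3);
        return PREFIX_TO_PARTITION.getOrDefault(prefix, DEFAULT_PARTITION);
    }

    public static void main(String[] args) {
        // Made-up phone numbers, for illustration only.
        String[] samples = {"13500000001", "13600000002", "13700000003", "13900000004"};
        for (String phone : samples) {
            System.out.println(phone + " -> partition " + partitionFor(phone));
        }
    }
}
```

Keeping the mapping in a lookup table means a new prefix is one extra `put` call, at the cost of a little more code than an if/else chain.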

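III. Create the Maven project

- Project structure

- Because this case builds on Case 2, only the newly added FlowPartition.java and the modified FlowDriverV2.java are shown here. To see the complete code for this case, download it from the repository linked above.

- Code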

- FlowPartition.java

```java
package com.ittzg.hadoop.flowv2;

import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Partitioner;

/**
 * Routes each record to a partition based on the first three digits of the phone number.
 *
 * @email: tazhigang095@163.com
 * @author: ittzg
 * @date: 2019/7/6 14:19
 */
public class FlowPartition extends Partitioner<Text, FlowBean> {

    @Override
    public int getPartition(Text text, FlowBean flowBean, int numPartitions) {
        // The map output key is the phone number; its first three digits select the partition.
        String phoneNum = text.toString().substring(0, 3);

        // Any prefix other than 135, 136 or 137 goes to partition 3.
        int partition = 3;
        if ("135".equals(phoneNum)) {
            partition = 0;
        } else if ("136".equals(phoneNum)) {
            partition = 1;
        } else if ("137".equals(phoneNum)) {
            partition = 2;
        }
        return partition;
    }
}
```

- FlowDriverV2.java

```java
package com.ittzg.hadoop.flowv2;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;
import java.net.URI;
import java.net.URISyntaxException;

/**
 * @email: tazhigang095@163.com
 * @author: ittzg
 * @date: 2019/7/1 22:49
 * @describe: flow statistics job with output partitioned by phone number prefix
 */
public class FlowDriverV2 {

    public static class FlowMapper extends Mapper<LongWritable, Text, Text, FlowBean> {

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            // Read one line of input
            String line = value.toString();

            // Split the line into tab-separated fields
            String[] split = line.split("\t");
            long upFlow = Long.parseLong(split[split.length - 3]);
            long downFlow = Long.parseLong(split[split.length - 2]);

            // Build the FlowBean and emit it keyed by phone number (second field)
            FlowBean flowBean = new FlowBean(upFlow, downFlow);
            context.write(new Text(split[1]), flowBean);
        }
    }

    public static class FlowReduce extends Reducer<Text, FlowBean, Text, FlowBean> {

        @Override
        protected void reduce(Text key, Iterable<FlowBean> values, Context context) throws IOException, InterruptedException {
            // Sum the upstream and downstream traffic for one phone number
            long upFlow = 0;
            long downFlow = 0;
            for (FlowBean flowBean : values) {
                upFlow += flowBean.getUpFlow();
                downFlow += flowBean.getDownFlow();
            }
            FlowBean flowBean = new FlowBean(upFlow, downFlow);
            context.write(new Text(key), flowBean);
        }
    }

    public static void main(String[] args) throws IOException, URISyntaxException, InterruptedException, ClassNotFoundException {
        // Input and output paths
        String input = "hdfs://hadoop-ip-101:9000/user/hadoop/flow/input";
        String output = "hdfs://hadoop-ip-101:9000/user/hadoop/flow/v2output";

        Configuration conf = new Configuration();
        conf.set("mapreduce.app-submission.cross-platform", "true");
        Job job = Job.getInstance(conf);

        // Path to the packaged job jar; adjust it to your local build output
        job.setJar("/big-data-github/hadoop-parent/hadoop-flowcount-v2/target/hadoop-flowcount-v2-1.0-SNAPSHOT.jar");

        job.setMapperClass(FlowMapper.class);
        job.setReducerClass(FlowReduce.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(FlowBean.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(FlowBean.class);

        // Delete the output directory if it already exists, otherwise the job refuses to start
        FileSystem fs = FileSystem.get(new URI("hdfs://hadoop-ip-101:9000"), conf, "hadoop");
        Path outPath = new Path(output);
        if (fs.exists(outPath)) {
            fs.delete(outPath, true);
        }

        // Set the custom partitioner (new in this version)
        job.setPartitionerClass(FlowPartition.class);

        // Set the number of reduce tasks (new in this version)
        job.setNumReduceTasks(4);

        FileInputFormat.addInputPath(job, new Path(input));
        FileOutputFormat.setOutputPath(job, outPath);

        boolean bool = job.waitForCompletion(true);
        System.exit(bool ? 0 : 1);
    }
}
```
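To see what the mapper and reducer compute without submitting a job, here is a rough sketch that does the same parsing and summing with plain Java collections. It assumes tab-separated lines where the phone number is the second field and the upstream and downstream traffic are the third-to-last and second-to-last fields, as in the mapper above. The class `FlowSumSketch` and the sample records are hypothetical and are not taken from phoneData.txt.

```java
import java.util.Map;
import java.util.TreeMap;

// Standalone sketch of the map and reduce steps using plain collections.
final class FlowSumSketch {

    // "Map" step: extract {phone, upFlow, downFlow} from one tab-separated record.
    static String[] parse(String line) {
        String[] fields = line.split("\t");
        String phone = fields[1];
        String upFlow = fields[fields.length - 3];
        String downFlow = fields[fields.length - 2];
        return new String[] {phone, upFlow, downFlow};
    }

    // "Reduce" step: sum upstream (index 0) and downstream (index 1) traffic per phone.
    static Map<String, long[]> aggregate(String[] lines) {
        Map<String, long[]> totals = new TreeMap<>();
        for (String line : lines) {
            String[] rec = parse(line);
            long[] sum = totals.computeIfAbsent(rec[0], k -> new long[2]);
            sum[0] += Long.parseLong(rec[1]);
            sum[1] += Long.parseLong(rec[2]);
        }
        return totals;
    }

    public static void main(String[] args) {
        // Hypothetical records: id, phone, host, upFlow, downFlow, status.
        String[] lines = {
            "1\t13500000001\texample.com\t100\t200\t200",
            "2\t13500000001\texample.org\t50\t25\t200",
            "3\t13600000002\texample.net\t10\t20\t200"
        };
        aggregate(lines).forEach((phone, sum) ->
            System.out.println(phone + "\t" + sum[0] + "\t" + sum[1]));
    }
}
```

In the real job the grouping by phone number is done by the shuffle phase, so the reducer only sees the values for one key at a time.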

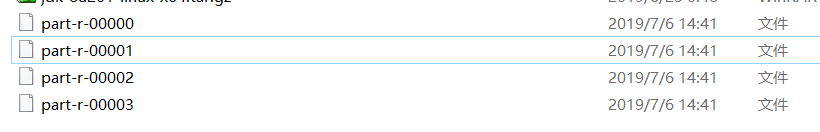
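The reduce-task count of 4 is chosen to match the four partition indices (0 to 3) returned by FlowPartition. To my understanding of Hadoop's behavior, other counts lead to the outcomes summarized in the sketch below. It is only a decision table written as plain Java, not Hadoop code, and the class `ReduceCountSketch` and its `outcome` helper are made up for illustration.

```java
// Standalone sketch: what to expect for a given reduce-task count when the
// partitioner returns indices 0..maxIndex (maxIndex is 3 in this case).
final class ReduceCountSketch {

    static String outcome(int maxIndex, int numReduceTasks) {
        if (numReduceTasks == 0) {
            // No reduce phase at all, so nothing is partitioned.
            return "map-only job, partitioner not used";
        }
        if (numReduceTasks == 1) {
            // With a single reducer every record goes to partition 0.
            return "one reducer, custom partitioner not used";
        }
        if (maxIndex >= numReduceTasks) {
            // An index outside 0..numReduceTasks-1 is rejected at runtime.
            return "fails once a key maps to an out-of-range partition";
        }
        int unused = numReduceTasks - (maxIndex + 1);
        return numReduceTasks + " reducers, " + unused + " never receive data";
    }

    public static void main(String[] args) {
        for (int n : new int[] {1, 2, 4, 5}) {
            System.out.println("numReduceTasks=" + n + ": " + outcome(3, n));
        }
    }
}
```

In short, the count should be at least the largest partition index plus one; any extra reducers just produce empty output files.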

IV. Final results

- Browsing in the web UI

- Downloaded files

- File contents

- part-r-00000 and part-r-00002

- part-r-00001 and part-r-00003
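Each reducer writes one file, named after its partition index padded to five digits. The sketch below shows which file each phone prefix should end up in under the partition rule used in this case. The class `OutputNameSketch` and its `fileFor` helper are hypothetical and only mimic the naming pattern; they do not call Hadoop.

```java
import java.util.Locale;

// Standalone sketch of the reducer output file naming pattern.
final class OutputNameSketch {

    // Builds the file name for a partition index: "part-r-" plus five digits.
    static String fileFor(int partition) {
        return String.format(Locale.ROOT, "part-r-%05d", partition);
    }

    public static void main(String[] args) {
        // Prefix labels in partition order, following the rule used in this case.
        String[] prefixes = {"135", "136", "137", "other"};
        for (int partition = 0; partition < prefixes.length; partition++) {
            System.out.println(prefixes[partition] + " -> " + fileFor(partition));
        }
    }
}
```

So numbers starting with 135 are expected in the first file, and all prefixes other than 135, 136 and 137 in the fourth.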
