Deploying Mahout 0.10 on Hadoop 2.3.0 and Testing Single-Machine and Distributed Personalized Recommendation Programs

1 Deploying the Hadoop 2.3.0 and Mahout 0.10 jars in Eclipse

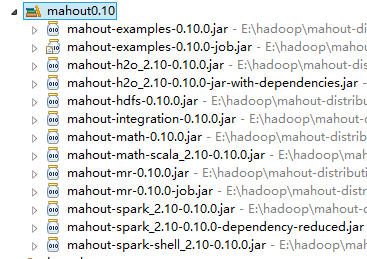

Hadoop2以上需要使用Mahout0.10以上版本才可以直接运行,否则需要重新编译Mahout相关jar包。本文直接使用Mahout0.10版本,执行前在Eclipse中分别倒入Hadoop2.3.0和Mahout0.10相关jar包即可。Eclipse中Hadoop2.3.0jar包部署见上篇文章:eclipse中hadoop2.3.0环境部署及在eclipse中直接提交mapreduce任务,Eclipse中Mahout0.10jar包部署如下图所示:
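Once the jars are imported, a quick classpath check can confirm that Eclipse actually sees them before any recommender code is run. The following is a minimal sketch, not part of the original setup: the class name `ClasspathCheck` and its helper `isOnClasspath` are made up for illustration, and the three class names it probes are simply the ones imported by the programs in sections 2 and 3.

```java
public class ClasspathCheck {

    /** Returns true if the named class can be located on the current classpath. */
    static boolean isOnClasspath(String className) {
        try {
            // initialize=false: only locate the class, do not run its static initializers
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Classes used by the single-machine and distributed examples in this article
        String[] required = {
            "org.apache.hadoop.conf.Configuration",
            "org.apache.mahout.cf.taste.impl.model.file.FileDataModel",
            "org.apache.mahout.cf.taste.hadoop.item.RecommenderJob"
        };
        for (String name : required) {
            System.out.println((isOnClasspath(name) ? "found   " : "MISSING ") + name);
        }
    }
}
```

If any line is reported as MISSING, the corresponding jar is probably not on the project's build path.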

2 Single-machine personalized recommendation source code

import java.io.File;
import java.io.IOException;
import java.util.List;

import org.apache.mahout.cf.taste.common.TasteException;
import org.apache.mahout.cf.taste.eval.IRStatistics;
import org.apache.mahout.cf.taste.eval.RecommenderBuilder;
import org.apache.mahout.cf.taste.eval.RecommenderIRStatsEvaluator;
import org.apache.mahout.cf.taste.impl.common.LongPrimitiveIterator;
import org.apache.mahout.cf.taste.impl.eval.GenericRecommenderIRStatsEvaluator;
import org.apache.mahout.cf.taste.impl.model.file.FileDataModel;
import org.apache.mahout.cf.taste.impl.neighborhood.NearestNUserNeighborhood;
import org.apache.mahout.cf.taste.impl.recommender.GenericUserBasedRecommender;
import org.apache.mahout.cf.taste.impl.similarity.EuclideanDistanceSimilarity;
import org.apache.mahout.cf.taste.impl.similarity.PearsonCorrelationSimilarity;
import org.apache.mahout.cf.taste.model.DataModel;
import org.apache.mahout.cf.taste.neighborhood.UserNeighborhood;
import org.apache.mahout.cf.taste.recommender.RecommendedItem;
import org.apache.mahout.cf.taste.recommender.Recommender;
import org.apache.mahout.cf.taste.similarity.UserSimilarity;

// Sample input, one preference per line: user ID, product ID, rating
// 1,101,5.0
// 1,102,3.0
// 1,103,2.5
// 2,101,2.0
// 2,102,2.5
// 2,103,5.0
// 2,104,2.0
// 3,101,2.5
// 3,104,4.0
// 3,105,4.5
// 3,107,5.0
// 4,101,5.0
// 4,103,3.0
// 4,104,4.5
// 4,106,4.0
// 5,101,4.0
// 5,102,3.0
// 5,103,2.0
// 5,104,4.0
// 5,105,3.5
// 5,106,4.0

/**
 * Product recommendation, single-machine mode.
 *
 * @author hadoop
 */
public class UserCF {

    static final int NEIGHBORHOOD_NUM = 2; // number of nearest users used when computing similarity
    static final int RECOMMENDER_NUM = 3;  // number of products recommended to each user

    /**
     * DataModel stores and serves the user, item, and preference data the computation needs.
     * UserSimilarity provides user-to-user similarity measures based on a chosen algorithm.
     * UserNeighborhood defines the set of users similar to a given user.
     * Recommender combines all of these components to produce recommendations for a user,
     * and also provides a number of related methods.
     *
     * @param args
     * @throws IOException
     * @throws TasteException
     */
    public static void main(String[] args) throws IOException, TasteException {
        String file = "E:/hadoop/mahout0.9_1jars/mahout_in1.txt"; // path to the data file; may be a compressed file
        DataModel model = new FileDataModel(new File(file)); // load the data
        UserSimilarity user = new EuclideanDistanceSimilarity(model); // user similarity; weights fall in (0,1]
        NearestNUserNeighborhood neighbor = new NearestNUserNeighborhood(
                NEIGHBORHOOD_NUM, user, model); // find the most similar users
        Recommender r = new GenericUserBasedRecommender(model, neighbor, user);

        // Print the recommendations for every user in the model
        LongPrimitiveIterator iter = model.getUserIDs();
        while (iter.hasNext()) {
            long uid = iter.nextLong();
            List<RecommendedItem> list = r.recommend(uid, RECOMMENDER_NUM);
            System.out.printf("uid:%s", uid);
            for (RecommendedItem ritem : list) {
                System.out.printf("(%s,%f)", ritem.getItemID(), ritem.getValue());
            }
            System.out.println();
        }

        // Evaluate the recommendation results
        RecommenderIRStatsEvaluator evaluator = new GenericRecommenderIRStatsEvaluator();
        RecommenderBuilder recommenderBuilder = new RecommenderBuilder() {
            @Override
            public Recommender buildRecommender(DataModel model) throws TasteException {
                UserSimilarity similarity = new PearsonCorrelationSimilarity(model);
                UserNeighborhood neighborhood = new NearestNUserNeighborhood(2, similarity, model);
                return new GenericUserBasedRecommender(model, neighborhood, similarity);
            }
        };
        // Precision and recall at 2, evaluated on 100% of the users
        IRStatistics stats = evaluator.evaluate(recommenderBuilder, null,
                model, null, 2,
                GenericRecommenderIRStatsEvaluator.CHOOSE_THRESHOLD, 1.0);
        System.out.println("Precision: " + stats.getPrecision());
        System.out.println("Recall: " + stats.getRecall());
    }
}

Run output:

uid:1(104,4.274336)(106,4.000000)
uid:2(105,4.055916)
uid:3(103,3.360987)(102,2.773169)
uid:4(102,3.000000)
uid:5
Precision: 0.75
Recall: 1.0
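To make the roles of the similarity, neighborhood, and recommender components more concrete, here is a dependency-free sketch of the same idea in plain Java. It is not Mahout's implementation: the class `UserCFSketch` and its helpers are hypothetical, and the similarity formula `1 / (1 + d / sqrt(n))` (with `d` the Euclidean distance over the `n` co-rated items) is an assumption about how the Euclidean similarity is scaled into (0,1]. The neighbors of user 1 are hard-coded as users 4 and 5, which are the two most similar users under this formula.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.Map;

public class UserCFSketch {

    /** Builds a preference map from alternating (itemId, rating) values. */
    static Map<Long, Double> prefs(double... itemThenRating) {
        Map<Long, Double> m = new HashMap<>();
        for (int i = 0; i + 1 < itemThenRating.length; i += 2) {
            m.put((long) itemThenRating[i], itemThenRating[i + 1]);
        }
        return m;
    }

    /** Similarity in (0,1] over co-rated items (assumed formula); 0 if nothing is shared. */
    static double similarity(Map<Long, Double> a, Map<Long, Double> b) {
        double sumSq = 0.0;
        int common = 0;
        for (Map.Entry<Long, Double> e : a.entrySet()) {
            Double other = b.get(e.getKey());
            if (other != null) {
                double diff = e.getValue() - other;
                sumSq += diff * diff;
                common++;
            }
        }
        if (common == 0) {
            return 0.0;
        }
        return 1.0 / (1.0 + Math.sqrt(sumSq) / Math.sqrt(common));
    }

    /** Similarity-weighted average of the neighbors' ratings; NaN if no neighbor rated the item. */
    static double predict(Map<Long, Double> user, Iterable<Map<Long, Double>> neighbors, long itemId) {
        double weighted = 0.0;
        double total = 0.0;
        for (Map<Long, Double> n : neighbors) {
            Double rating = n.get(itemId);
            if (rating != null) {
                double s = similarity(user, n);
                weighted += s * rating;
                total += s;
            }
        }
        return total == 0.0 ? Double.NaN : weighted / total;
    }

    public static void main(String[] args) {
        // Users 1, 4 and 5 from the sample data above
        Map<Long, Double> u1 = prefs(101, 5.0, 102, 3.0, 103, 2.5);
        Map<Long, Double> u4 = prefs(101, 5.0, 103, 3.0, 104, 4.5, 106, 4.0);
        Map<Long, Double> u5 = prefs(101, 4.0, 102, 3.0, 103, 2.0, 104, 4.0, 105, 3.5, 106, 4.0);
        System.out.println("sim(1,4) = " + similarity(u1, u4));
        System.out.println("sim(1,5) = " + similarity(u1, u5));
        System.out.println("estimate(user 1, item 104) = " + predict(u1, Arrays.asList(u4, u5), 104L));
    }
}
```

With the sample data, the estimate for user 1 and item 104 comes out at roughly 4.2743, in line with the first entry of the run output, which suggests the assumed formula is close to what the library computes. The sketch skips details such as neighbor selection and any filtering the real recommender applies.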

3 Distributed personalized recommendation source code

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.util.ToolRunner;
import org.apache.mahout.cf.taste.hadoop.item.RecommenderJob;
import org.apache.mahout.math.hadoop.similarity.cooccurrence.measures.CityBlockSimilarity;
import org.apache.mahout.math.hadoop.similarity.cooccurrence.measures.CooccurrenceCountSimilarity;
import org.apache.mahout.math.hadoop.similarity.cooccurrence.measures.CosineSimilarity;
import org.apache.mahout.math.hadoop.similarity.cooccurrence.measures.EuclideanDistanceSimilarity;
import org.apache.mahout.math.hadoop.similarity.cooccurrence.measures.LoglikelihoodSimilarity;
import org.apache.mahout.math.hadoop.similarity.cooccurrence.measures.TanimotoCoefficientSimilarity;

public class MahoutJobTest {

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        // "fs.default.name" is the deprecated alias of "fs.defaultFS" in Hadoop 2
        conf.set("fs.default.name", "hdfs://192.168.1.100:9000");
        conf.set("hadoop.job.user", "hadoop");
        conf.set("mapreduce.framework.name", "yarn");
        conf.set("mapreduce.jobtracker.address", "192.168.1.101:9001");
        conf.set("yarn.resourcemanager.hostname", "192.168.1.101");
        conf.set("yarn.resourcemanager.admin.address", "192.168.1.101:8033");
        conf.set("yarn.resourcemanager.address", "192.168.1.101:8032");
        conf.set("yarn.resourcemanager.resource-tracker.address", "192.168.1.101:8031");
        conf.set("yarn.resourcemanager.scheduler.address", "192.168.1.101:8030");

        String[] str = {
            "-i", "hdfs://192.168.1.100:9000/data/test_in/mahout_in1.csv",
            "-o", "hdfs://192.168.1.100:9000/data/test_out/mahout_out_CityBlockSimilarity/rec001",
            "-n", "3",
            "-b", "false",
            // Similarity measures that ship with Mahout:
            // SIMILARITY_COOCCURRENCE(CooccurrenceCountSimilarity.class),
            // SIMILARITY_LOGLIKELIHOOD(LoglikelihoodSimilarity.class),
            // SIMILARITY_TANIMOTO_COEFFICIENT(TanimotoCoefficientSimilarity.class),
            // SIMILARITY_CITY_BLOCK(CityBlockSimilarity.class),
            // SIMILARITY_COSINE(CosineSimilarity.class),
            // SIMILARITY_PEARSON_CORRELATION(PearsonCorrelationSimilarity.class),
            // SIMILARITY_EUCLIDEAN_DISTANCE(EuclideanDistanceSimilarity.class);
            "-s", "SIMILARITY_CITY_BLOCK",
            "--maxPrefsPerUser", "70",
            "--minPrefsPerUser", "2",
            "--maxPrefsInItemSimilarity", "70",
            "--outputPathForSimilarityMatrix", "hdfs://192.168.1.100:9000/data/test_out/mahout_out_CityBlockSimilarity/matrix/rec001",
            "--tempDir", "hdfs://192.168.1.100:9000/data/test_out/mahout_out_CityBlockSimilarity/temp/rec001"
        };
        ToolRunner.run(conf, new RecommenderJob(), str);
    }
}
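The argument array above repeats the same HDFS prefix and run name several times, which makes it easy to mistype a path when switching similarity measures. One possible way to reduce the repetition is a small helper that assembles the array from a few parameters. This is only a sketch: `RecommenderArgs` and `build` are hypothetical names, the option values are copied from the listing above, and the helper itself does not touch Hadoop or Mahout.

```java
public class RecommenderArgs {

    /**
     * Builds the RecommenderJob argument array used in this article.
     *
     * @param fsUri      HDFS URI prefix, e.g. "hdfs://192.168.1.100:9000"
     * @param inputPath  absolute input path on HDFS
     * @param outputDir  absolute output directory on HDFS for this similarity measure
     * @param similarity name of the similarity measure, e.g. "SIMILARITY_CITY_BLOCK"
     * @param runId      sub-directory name for this run, e.g. "rec001"
     */
    static String[] build(String fsUri, String inputPath, String outputDir,
                          String similarity, String runId) {
        String out = fsUri + outputDir;
        return new String[] {
            "-i", fsUri + inputPath,
            "-o", out + "/" + runId,
            "-n", "3",        // recommendations per user, as in the listing above
            "-b", "false",    // input carries preference values
            "-s", similarity,
            "--maxPrefsPerUser", "70",
            "--minPrefsPerUser", "2",
            "--maxPrefsInItemSimilarity", "70",
            "--outputPathForSimilarityMatrix", out + "/matrix/" + runId,
            "--tempDir", out + "/temp/" + runId
        };
    }

    public static void main(String[] args) {
        String[] jobArgs = build("hdfs://192.168.1.100:9000",
                "/data/test_in/mahout_in1.csv",
                "/data/test_out/mahout_out_CityBlockSimilarity",
                "SIMILARITY_CITY_BLOCK", "rec001");
        System.out.println(String.join(" ", jobArgs));
    }
}
```

The result can be passed straight to `ToolRunner.run(conf, new RecommenderJob(), jobArgs)` in place of the hand-written array; changing the similarity measure or the run name then only requires changing one argument.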
