This series of experiments tests two new features in Hadoop 2.2.0: NameNode HA and Federation.

This article covers setting up the base environment.

1: Virtual machine plan

Server:

productserver

192.168.100.200 (DNS, NFS, Oracle, MySQL, Cluster Monitor)

Hadoop cluster 1:

product201

192.168.100.201 (NN1)

product202

192.168.100.202 (NN2, zookeeper1, JournalNode1)

product203

192.168.100.203 (DN1, zookeeper2, JournalNode2)

product204

192.168.100.204 (DN2, zookeeper3, JournalNode3)

Hadoop cluster 2:

product211

192.168.100.211 (NN1)

product212

192.168.100.212 (NN2, zookeeper1)

product213

192.168.100.213 (DN1, zookeeper2)

product214

192.168.100.214 (DN2, zookeeper3)

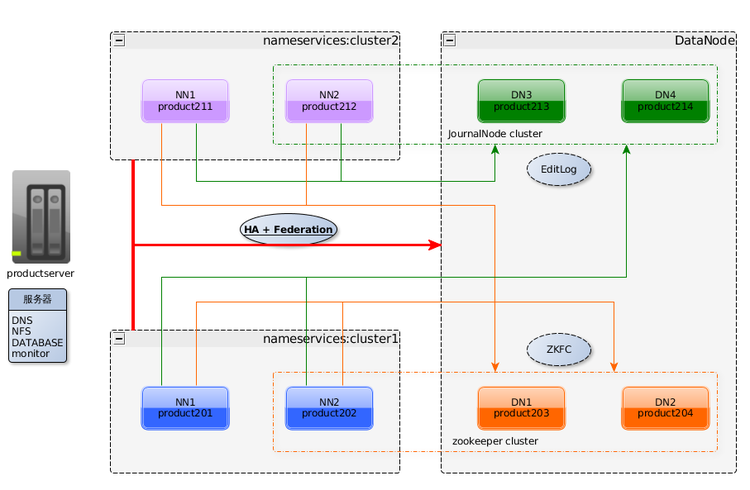

2: Experiment plan

A: HA + JournalNode + zookeeper

DNS: productserver

HA: product201, product202

DN: product203, product204

JournalNode: product202, product203, product204

zookeeper: product202, product203, product204

This is shown by the blue lines in the figure below.

B: HA + NFS + zookeeper

DNS, NFS: productserver

HA: product211, product212

DN: product213, product214

zookeeper: product212, product213, product214

This is shown by the orange lines in the figure above.

C: HA + Federation

DNS: productserver

HA1 (nameservices is cluster1): product201, product202

HA2 (nameservices is cluster2): product211, product212

DN: product203, product204, product213, product214

JournalNode: product212, product213, product214

zookeeper: product202, product203, product204

This is shown in the figure below.

3: Server setup

A: DNS setup

[root@productserver ~]# yum install bind-libs bind bind-utils

[root@productserver ~]# vi /etc/named.conf

[root@productserver ~]# cat /etc/named.conf

options {
    listen-on port 53 { any; };
    listen-on-v6 port 53 { ::1; };
    directory "/var/named";
    dump-file "/var/named/data/cache_dump.db";
    statistics-file "/var/named/data/named_stats.txt";
    memstatistics-file "/var/named/data/named_mem_stats.txt";
    allow-query { any; };
    recursion yes;
    forwarders { 202.101.172.35; };
    dnssec-enable yes;
    dnssec-validation yes;
    dnssec-lookaside auto;
    /* Path to ISC DLV key */
    bindkeys-file "/etc/named.iscdlv.key";
    managed-keys-directory "/var/named/dynamic";
};
logging {
    channel default_debug {
        file "data/named.run";
        severity dynamic;
    };
};
zone "." IN {
    type hint;
    file "named.ca";
};
include "/etc/named.rfc1912.zones";
include "/etc/named.root.key";

[root@productserver ~]# vi /etc/named.rfc1912.zones

[root@productserver ~]# cat /etc/named.rfc1912.zones

zone "localhost.localdomain" IN {
    type master;
    file "named.localhost";
    allow-update { none; };
};
zone "localhost" IN {
    type master;
    file "named.localhost";
    allow-update { none; };
};
// Comment out the following lines
//zone "1.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.ip6.arpa" IN {
//    type master;
//    file "named.loopback";
//    allow-update { none; };
//};
//zone "1.0.0.127.in-addr.arpa" IN {
//    type master;
//    file "named.loopback";
//    allow-update { none; };
//};
zone "0.in-addr.arpa" IN {
    type master;
    file "named.empty";
    allow-update { none; };
};
zone "product" IN {
    type master;
    file "product.zone";
};
zone "100.168.192.in-addr.arpa" IN {
    type master;
    file "100.168.192.zone";
};

[root@productserver ~]# vi /var/named/product.zone

[root@productserver ~]# cat /var/named/product.zone

$TTL 86400
@ IN SOA product. root.product. (
        2013122801 ; serial (d. adams)
        3H ; refresh
        15M ; retry
        1W ; expiry
        1D ) ; minimum
@ IN NS productserver.
productserver IN A 192.168.100.200
; forward lookup records
product201 IN A 192.168.100.201
product202 IN A 192.168.100.202
product203 IN A 192.168.100.203
product204 IN A 192.168.100.204
product211 IN A 192.168.100.211
product212 IN A 192.168.100.212
product213 IN A 192.168.100.213
product214 IN A 192.168.100.214

[root@productserver ~]# vi /var/named/100.168.192.zone

[root@productserver ~]# cat /var/named/100.168.192.zone

$TTL 86400
@ IN SOA productserver. root.productserver. (
        2013122801 ; serial (d. adams)
        3H ; refresh
        15M ; retry
        1W ; expiry
        1D ) ; minimum
@ IN NS productserver.
200 IN PTR productserver.product.
; reverse lookup records
201 IN PTR product201.product.
202 IN PTR product202.product.
203 IN PTR product203.product.
204 IN PTR product204.product.
211 IN PTR product211.product.
212 IN PTR product212.product.
213 IN PTR product213.product.
214 IN PTR product214.product.
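The A and PTR records in the two zone files follow one pattern (host product<N> at 192.168.100.<N>), so they can be generated rather than typed by hand. The following is a minimal sketch under that assumption; the function names gen_a_records and gen_ptr_records and the NODES variable are hypothetical helpers, not part of BIND.

```shell
#!/bin/sh
# Hypothetical helpers: print zone-file records for the nodes in this plan.
# Assumes every node is named product<N> and has address 192.168.100.<N>.
NODES="201 202 203 204 211 212 213 214"

# Forward (A) records for product.zone.
gen_a_records() {
    for n in $NODES; do
        printf 'product%s IN A 192.168.100.%s\n' "$n" "$n"
    done
}

# Reverse (PTR) records for 100.168.192.zone.
gen_ptr_records() {
    for n in $NODES; do
        printf '%s IN PTR product%s.product.\n' "$n" "$n"
    done
}
```

The output can be pasted below the SOA and NS lines of each zone file. The productserver records are not generated because that hostname does not follow the pattern.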

[root@productserver ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth0

[root@productserver ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0

DNS1=192.168.100.200
DNS2=202.101.172.35

***************************************************************

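TIPS:

The forward and reverse zone files are easy to get wrong; check them with "named-checkzone <zone name> <zone file>".

Reconfigure DNS on productserver so that DNS1=192.168.100.200 and DNS2 is the Internet DNS server; otherwise productserver cannot resolve its own name, forward or reverse.

From a DNS client on the local network, use nslookup or dig to test that the DNS service works.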

***************************************************************
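The checks in the tips can be wrapped into a few small functions. This is a sketch, assuming the zone names and file paths configured above and that named-checkzone and dig are installed (both ship with the BIND packages); the function names are hypothetical.

```shell
#!/bin/sh
# Derive the reverse zone name from a /24 network prefix,
# for example 192.168.100 becomes 100.168.192.in-addr.arpa.
reverse_zone_name() {
    echo "$1" | awk -F. '{ printf "%s.%s.%s.in-addr.arpa\n", $3, $2, $1 }'
}

# Validate both zone files; run this on productserver.
check_zones() {
    named-checkzone product /var/named/product.zone &&
    named-checkzone "$(reverse_zone_name 192.168.100)" /var/named/100.168.192.zone
}

# Ask the local DNS server for one node, forward then reverse.
# $1 is the last octet, for example 201.
check_lookup() {
    dig +short @192.168.100.200 "product$1.product" A
    dig +short @192.168.100.200 -x "192.168.100.$1"
}
```

For example, run check_zones on productserver after editing a zone file, then check_lookup 201 from any client on the 192.168.100.0/24 network.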

B: NFS setup

**************************************************************************

Use the following commands to check whether the packages NFS requires are already installed:

[root@productserver ~]# rpm -qa |grep rpcbind

[root@productserver ~]# rpm -qa |grep nfs

If they are not installed, install them with:

[root@productserver ~]# yum install nfs-utils

**************************************************************************

After installation, enable the NFS services at boot and start them:

[root@productserver ~]# chkconfig rpcbind on

[root@productserver ~]# chkconfig nfs on

[root@productserver ~]# chkconfig nfslock on

[root@productserver ~]# service rpcbind restart

[root@productserver ~]# service nfs restart

[root@productserver ~]# service nfslock restart

Create the shared directories:

[root@productserver ~]# mkdir -p /share/cluster1

[root@productserver ~]# mkdir -p /share/cluster2

[root@productserver ~]# groupadd hadoop

[root@productserver ~]# useradd -g hadoop hadoop

[root@productserver ~]# chown -R hadoop:hadoop /share/cluster1

[root@productserver ~]# chown -R hadoop:hadoop /share/cluster2

[root@productserver ~]# setfacl -m u:hadoop:rwx /share/cluster1

[root@productserver ~]# setfacl -m u:hadoop:rwx /share/cluster2

Edit the exports configuration file:

[root@productserver ~]# vi /etc/exports

[root@productserver ~]# cat /etc/exports

/share/cluster1 192.168.100.0/24(rw)
/share/cluster2 192.168.100.0/24(rw)

Apply the settings in /etc/exports:

[root@productserver ~]# exportfs -arv
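After re-exporting, it helps to confirm that both directories are actually exported. The sketch below reads the output of exportfs -v on standard input and prints any expected directory that is absent; missing_exports is a hypothetical helper name, and the two paths are the ones created above.

```shell
#!/bin/sh
# Read `exportfs -v` output on stdin and print each expected
# directory that does not appear as an exported path.
missing_exports() {
    listing=$(cat)
    for dir in /share/cluster1 /share/cluster2; do
        # exportfs may print the client list on the line after a long
        # path, so match on the first field only.
        printf '%s\n' "$listing" |
            awk -v d="$dir" '$1 == d { found = 1 } END { exit !found }' ||
            echo "$dir"
    done
}
```

On productserver this would be used as exportfs -v | missing_exports; no output means both directories are exported.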

4: Node setup

A: Create the hadoop user and its SSH key pair on all nodes

[root@product201 ~]# groupadd hadoop

[root@product201 ~]# useradd -g hadoop hadoop

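Note: if a node will act as an NFS client, its hadoop user must have the same UID and GID as the hadoop user on the server. Otherwise file access permissions will be inconsistent.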
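One way to keep the IDs aligned is to read them on productserver and pass them explicitly when creating the account on each node. A minimal sketch follows, assuming the account has not been created on the node yet; local_ids and matching_account_cmds are hypothetical helper names, while the -g and -u options are the standard groupadd and useradd options for a numeric GID and UID.

```shell
#!/bin/sh
# Print "uid:gid" for a local user; run on productserver as: local_ids hadoop
local_ids() {
    printf '%s:%s\n' "$(id -u "$1")" "$(id -g "$1")"
}

# Given the server's "uid:gid", print the commands that create a
# matching hadoop group and user on a node (run them there as root).
matching_account_cmds() {
    uid=${1%%:*}
    gid=${1##*:}
    printf 'groupadd -g %s hadoop\n' "$gid"
    printf 'useradd -u %s -g hadoop hadoop\n' "$uid"
}
```

The printed commands replace the plain groupadd and useradd calls above on nodes that will mount the NFS share.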

[root@product201 ~]# su - hadoop

[hadoop@product201 ~]$ ssh-keygen -t rsa

If ~/.ssh/ already contains files, delete them all first, then regenerate the key pair.

[hadoop@product201 ~]$ exit

[root@product201 ~]# vi /etc/ssh/sshd_config

[root@product201 ~]# cat /etc/ssh/sshd_config

RSAAuthentication yes
PubkeyAuthentication yes
AuthorizedKeysFile .ssh/authorized_keys
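Since this edit is repeated on every node, a quick check that the three directives are present and uncommented can catch a missed node. This is a sketch only; missing_sshd_keys is a hypothetical helper, and it checks only that each directive appears, not its value.

```shell
#!/bin/sh
# Print each of the three directives that has no uncommented line
# in the given sshd_config (default: /etc/ssh/sshd_config).
missing_sshd_keys() {
    file=${1:-/etc/ssh/sshd_config}
    for key in RSAAuthentication PubkeyAuthentication AuthorizedKeysFile; do
        grep -Eq "^[[:space:]]*$key[[:space:]]" "$file" || echo "$key"
    done
}
```

No output from missing_sshd_keys means all three directives are set. sshd reads its configuration at startup, so the service has to be restarted before the change takes effect.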

B: DNS settings on all nodes

[root@product201 ~]# vi /etc/nsswitch.conf

[root@product201 ~]# cat /etc/nsswitch.conf

hosts: dns files

[root@product201 ~]# vi /etc/sysconfig/network-scripts/ifcfg-eth0

[root@product201 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0

C: Install the Hadoop files on product201 and product211

[root@product201 ~]# mkdir -p /app/hadoop

[root@product201 ~]# chown -R hadoop:hadoop /app/hadoop

[root@product201 ~]# cd /app/hadoop

[root@product201 hadoop]# tar zxf /app/hadoop-2.2.0.tar.gz

[root@product201 hadoop]# mv hadoop-2.2.0 hadoop220

[root@product201 hadoop]# chown -R hadoop:hadoop hadoop220

D: Install zookeeper on product201 and product211

[root@product201 hadoop]# tar zxf /app/zookeeper-3.4.5.tar.gz

[root@product201 hadoop]# mv zookeeper-3.4.5 zookeeper345

[root@product201 hadoop]# chown -R hadoop:hadoop zookeeper345
