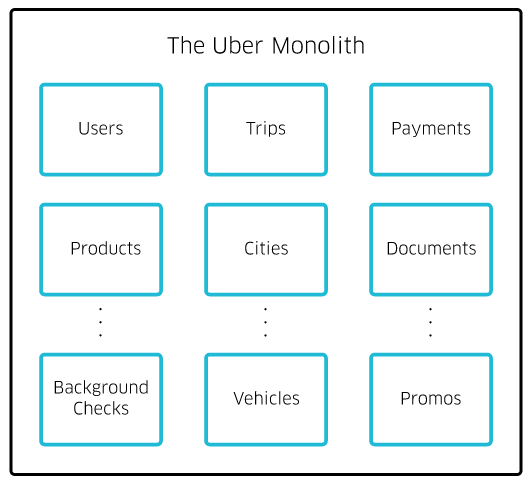

像很多初创型公司一样,Uber的架构一开始也是一整块的,或者说是整体的、不可分割的,服务端部署在一个城市,对外整体上是单个节点。这个也迎合了当时服务范围和功能选项有限的业务场景。可执行代码部署在单个节点,对于这种场景下,可以说是简洁、易管理的,而且直接上来说,满足了我们的业务需求:简单的连接司机和乘客,出账单,支付。在这种“小而美”的场景下,将Uber的这些简单的业务逻辑放在一起,也是很有道理、很有实际操作性、很有性价比的:)。但是,当我们的业务迅速拓展到多个城市,并且产品也不再那么单一的时候,问题就来了。

首先,核心业务模型在拓展,新的产品特性在增加,而我们的代码中,代码中的那些组件,确实紧耦合的,对于代码逻辑的过度封装在一起,我们想把业务逻辑上的关注点分开,也变的困难重重。不断有程序员向模块中集成新的代码,这样带来的问题很明显,每一次的部署,都意味着部署所有的可执行代码,容错性,灵活性,几乎没有。我们的开发工程师团队,也经历了从几个人到几十个人的蜕变,分化出多个相对独立的子团队,代码的提交、修改、到部署的频率迅速增加。每次增加新的业务逻辑或者说新的产品功能,bug修复,解决代码的缺陷,优化代码结构(笑),都是在一个代码库中进行,使得整体协调变得尤为困难。这种情况,要么采用更轻捷更松散的代码架构,以适应上面所说的种种变化,要么建立类似微软开发Windows那样庞大而强势的管理团队(这貌似不可能)。

那么下面就要介绍这种架构了:SOA

我们决定跟随业务上具有高增长特性的那些企业:Amazon, Netflix, SoundCloud, Twitter, 还有其他企业,借鉴他们的做法,将一整块代码模块/结构拆分为多个代码模块,构建SOA(面向服务)的代码架构。SOA的概念比较宽泛,涵盖的内容很多,我们主要提取采用了其中的微服务架构。所谓的微服务架构,就是一种设计模式,它强调将代码设计称为一个个小的服务,而每一个小的服务都对应了一个特定的、完好封装的领域模型/业务模型。每一个小服务都可以用适合自身的编程语言、技术框架、甚至有自己的数据库。

从一整块代码结构演变到分布式的SOA,能解决很多问题,但是也带来了不少问题,主要在下面三个方面:

1.可见性

2.安全性

3.恢复能力

可见性:

当服务达到500+,找到合适的服务就会变得困难,即使找到了服务,也不知道如何合理的使用,因为每个服务都是用自身的方式组织架构的。比如以REST或者RPC方式提供的网关接口(在局域网内就能直接访问和使用接口的功能),这些接口往往存在一个弱协议性的问题:每个接口遵循的协议/约定都是不一样的。你也可以尝试,REST接口强加JSON结构,确实能够提高安全性和优化服务相关的处理能力,但是这个JSON化的过程将变的琐碎和麻烦。最终,这个解决方式反而使得容错方面和延迟方面,无法再能提供保证。另外,对于客户端,很明显要去对进行服务的异常处理,而且要面对的是一连串多个服务,每个服务都可能出现超时和结果异常,如何处理多个异常?一个服务的结果的缺失是否会导致一连串的服务结果的缺失?如何处理?系统的健壮性,会受到这些缺陷的严重影响。我们的工程师开玩笑说:“我们只是将一整块的APIs转变成了分布式的但是还是一整块的APIs”。

经过这样的分析,问题就慢慢清楚了:我们需要一个统一的标准的接口通信方式,保证类型安全性,合法性验证,和容错机制。其他的目标:1)客户端的接口库简单化2)跨语言支持3)可调的超时时间和超时之后的重试次数4)能高效的开发和测试

在我们的业务高速增长的阶段,Uber的工程师们持续的评估我们采用的技术框架和工具(对比某些企业外包项目,什么时髦用什么,什么牛逼用什么,笑:))。一种我们想到的思路,就是一开始,我们就使用成熟的接口定义语言(IDL),同时也能使用它们内建的工具套件,这个是最理想的。

我们评估了现有的工具组件,并且发现Apache Thrift(因为FaceBook和Twitter的使用而名声大噪)能够最好地满足我们的需求。Thrift是专门为构建可拓展、跨语言服务而发明的,它包含了代码库,和工具组件。具体实现上:数据类型和服务接口定义在语言无差别文件(language agnostic file)中,然后生成指定语言的代码,这份代码对通讯方式和RPC消息的编码进行了抽象定义。

除了Thrift以外,对于接口的生命周期的管理,我们还使用了生命周期管理软件,把这些客户端接口提交到包管理系统,比如python的pip,node的npm。从而使的发现/定位/确定和贡献/开发这些接口,就成了一个可管理的问题。 服务客户端,在这种管理方式下,能够提供客户端接口声明的源码下载,从而多了一种学习接口定义的方式,而不是仅仅通过文档和wiki。

安全性:

对于Thrift工具,最大的挑战就是安全性,Thrift通过让接口绑定严格的协议的方式,提供安全性。但是这种方法限制了太多的细节:它定义了服务程序的调用方式,哪些输入,哪些输出。比如,下面就是一个Thrift IDL,在这个接口中,我们定义了一个ZOO服务,这个服务下面有方法makeSound,参数是animalName,返回是String或抛出异常,我们来看看这个IDL:

<span style="font-size:18px;">struct Animal {

1: i32 id

2: string name

3: string sound

}

exception NotFoundException {

1: i32 what

2: string why

}

service Zoo {

/**

* Returns the sound the given animal makes.

*/

string makeSound(1: string animalName) throws (

1: NotFoundException noAnimalFound

)

}</span>恢复性/容错性/稳定性

最后对于容错性和延迟处理,我们参(chao)考(xi)了面临同样问题的公司的解决办法,具体就是Netflix的Hystrix库和Twitter的Finagle库,来处理恢复(resiliency)问题。基于这两个库,我们自己写了一个库,使得在客户端能够成功处理这些场景。

利弊权衡和展望

没有任何解决方案是完美的,都是有利有弊,比如开发人员关心的少,但是灵活性可能变差。不得不吐槽的是Thrift这个工具集并不是那么成熟和十全十美,对于python和node还有很多待完善的地方。如果直接拿来主义的使用,可能还需要投入人力时间成本去补充没有被完善的底层组件。此外,一些细节的缺陷,比如没有在高层次上支持header。对于权限和跨服务的执行流追踪,比较麻烦。

对我们以前建立的一整块的的架构的分解工作已经很长时间了。这个工作非常重要,而且保证了我们之后的业务能飞速的增长。

对于2015年余下来的工作就是完全去除之前那个代码仓库,同时通过微服务架构,推进清晰的权限管理关系,提供更好的组织上的拓展性,提供更好的可恢复性,容错性。

原文:

Like many startups, Uber began its journey with a monolithic architecture, built for a single offering in a single city. At the time, all of Uber was our UberBLACK option and our “world” was San Francisco. Having one codebase seemed “clean” at the time, and solved our core business problems, which included connecting drivers with riders, billing, and payments. It was reasonable back then to have all of Uber’s business logic in one place. As we rapidly expanded into more cities and introduced new products, this quickly changed.

As core domain models grew and new features were introduced, our components became tightly coupled, and enforcing encapsulation made separation of concerns difficult. Continuous integration turned into a liability because deploying the codebase meant deploying everything at once. Our engineering team experienced rapid growth and scaling, which not only meant handling more requests but also handling a significant increase in developer activity. Adding new features, fixing bugs, and resolving technical debt all in a single repo became extremely difficult. Tribal knowledge was required before attempting to make a single change.

Moving to a SOA

We decided to follow the lead of other hyper-growth companies—Amazon, Netflix, SoundCloud, Twitter, and others—and break up the monolith into multiple codebases to form a service-oriented architecture (SOA). Specifically, since the term SOA tends to mean a variety of different things, we adopted a microservice architecture. This design pattern enforces the development of small services dedicated to specific, well-encapsulated domain areas. Each service can be written in its own language or framework, and can have its own database or lack thereof.

Migrating from a monolithic codebase to a distributed SOA solved many of our problems, but it created a few new ones as well. These problems fall into three main areas:

- Obviousness

- Safety

- Resilience

Obviousness

With 500+ services, finding the appropriate service becomes arduous. Once identified, how to utilize the service is not obvious, since each microservice is structured in its own way. Services providing REST or RPC endpoints (where you can access functionality within that domain) typically offer weak contracts, and in our case these contracts vary greatly between microservices. Adding JSON Schema to a REST API can improve safety and the process of developing against the service, but it is not trivial to write or maintain. Finally, these solutions do not provide any guarantees regarding fault tolerance or latency. There’s no standard way to handle client-side timeouts and outages, or ensure an outage of one service does not cause cascading outages. The overall resiliency of the system would be negatively impacted by these weaknesses. As one developer put it, we “converted our monolithic API into a distributed monolithic API”.

It has become clear we need a standard way of communication that provides type safety, validation, and fault tolerance. Other goals include:

- Simple ways to provide client libraries

- Cross language support

- Tunable default timeouts and retry policies

- Efficient testing and development

At this stage in our hyper-growth, Uber engineers continue to evaluate technologies and tools to fit our goals. One thing we do know is that using an existing Interface Definition Language(IDL) that provides lots of pre-built tooling from day one is ideal.

We evaluated the existing tools and found that Apache Thrift (made popular by Facebook and Twitter) met our needs best. Thrift is a set of libraries and tools for building scalable cross-language services. To accomplish this, datatypes and service interfaces are defined in a language agnostic file. Then, code is generated to abstract the transport and encoding of RPC messages between services written in all of the languages we support (Python, Node, Go, etc.)

In addition to Thrift, we’re creating lifecycle tooling to publish these clients to packaging systems (such as pip for Python and npm for Node). Discovering and contributing to the service then becomes a manageable task. Service clients also act as learning tools, in addition to docs and wikis.

Safety

The most compelling argument for Thrift is its safety. Thrift guarantees safety by binding services to use strict contracts. The contract describes how to interact with that service including how to call service procedures, what inputs to provide, and what output to expect. In the following Thrift IDL we have defined a service Zoo with a function makeSound that takes a string animalName and returns a string or throws an exception.

Adhering to a strict contract means less time is spent figuring out how to communicate with a service and dealing with serialization. In addition, as a microservice evolves we do not have to worry about interfaces changing suddenly, and are able to deploy services independently from consumers. This is very good news for Uber engineers. We’re able to move on to other projects and tools since Thrift solves the problem of safety out of the box.

Resilience

Lastly, we drew inspiration from fault tolerance and latency libraries in other companies facing similar challenges, such as Netflix’s Hystrix library and Twitter’s Finagle library, to tackle the problem of resiliency. With those libraries in mind, we wrote libraries that ensure clients are able to deal with failure scenarios successfully (which will be discussed in more detail in a future post).

Tradeoffs and Where We’re Headed

Of course, no solution is perfect and all solutions have challenges. Unfortunately, Thrift’s toolset is relatively young and tools for Python and Node are not abundant. There is a risk that a lot of time will be invested in creating these tools. Additionally, there is no higher-level support for headers. Authentication and cross-service tracing, for example, are two challenging problems since higher level meta-data would be passed in every time.

Dismantling our well-worn monolith has been a long time coming. While it has been a key component that enabled our explosive growth in the past, it has grown cumbersome and difficult to scale further and maintain.

Our goal for the remainder of 2015 is to get rid of this repo entirely—promoting clear ownership, offering better organizational scalability, and providing more resilience and fault tolerance through our commitment to microservices.

4980

4980

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?