See:

http://www.jianshu.com/p/5f4be94630a3

http://blog.csdn.net/xu470438000/article/details/50512442

https://hub.docker.com/r/sequenceiq/hadoop-docker/

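1: Start one container on each machine (the address before > is the host on which to run the command)

10.177.3.93>sudo docker run --name hadoop0 --hostname hadoop0 --restart=always -d --net=host -P -p 8070:50070 -p 8088:8088 daocloud.io/hrsapac/hadoop:2.7.0

10.177.1.33>sudo docker run --name hadoop1 --hostname hadoop1 --restart=always -d --net=host -P daocloud.io/hrsapac/hadoop:2.7.0

Note: with --net=host the container shares the host's network stack, so Docker ignores -P and every -p mapping. The daemons listen on the host's own ports. For example, the NameNode web UI is on port 50070 of 10.177.3.93, not on 8070.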

Master: hadoop0 (10.177.3.93)

Slave: hadoop1 (10.177.1.33)
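The later steps address the nodes by name (ssh-copy-id hadoop1, hdfs://hadoop0:9000), so hadoop0 and hadoop1 must resolve to the addresses above on both machines. If no DNS provides this (an assumption about your network), one option is a hosts-file entry. Below is a minimal sketch. The function name add_cluster_hosts is hypothetical, and it takes the hosts file as an argument so it can be tried on a scratch file first:

```shell
# Hypothetical helper: append hadoop0/hadoop1 entries to a hosts file.
# Addresses are the ones used in this article; adjust them for your network.
CLUSTER_HOSTS="10.177.3.93 hadoop0
10.177.1.33 hadoop1"

add_cluster_hosts() {
  hosts_file="${1:-/etc/hosts}"
  printf '%s\n' "$CLUSTER_HOSTS" | while read -r ip name; do
    # Skip names already present, so running the helper twice adds nothing
    if ! grep -qw "$name" "$hosts_file" 2>/dev/null; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}
```

Source the file, then run add_cluster_hosts as root to edit /etc/hosts, or add_cluster_hosts /tmp/hosts.test to preview the result.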

2: Set up passwordless SSH login

Run the following on hadoop0:

cd ~

mkdir -p .ssh

cd .ssh

ssh-keygen -t rsa (press Enter at every prompt)

ssh-copy-id -i ~/.ssh/id_rsa.pub localhost

ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop0

ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop1

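Run the following on hadoop1:

cd ~

mkdir -p .ssh

cd .ssh

ssh-keygen -t rsa (press Enter at every prompt)

ssh-copy-id -i ~/.ssh/id_rsa.pub localhost

ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop0

ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop1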
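The commands above are the same on both nodes, so each node can run them as a single loop. Below is a minimal sketch, assuming the default key path ~/.ssh/id_rsa. In it, ssh-keygen -N "" creates the key with an empty passphrase, which is the same as pressing Enter at every prompt. setup_ssh_trust and the DRY_RUN switch are hypothetical names, and DRY_RUN=1 only prints the commands:

```shell
# Hypothetical helper: passwordless SSH from this node to every cluster node.
# Node list from this article; DRY_RUN=1 prints the commands instead of running them.
NODES="localhost hadoop0 hadoop1"

setup_ssh_trust() {
  key="$HOME/.ssh/id_rsa"
  run=""
  [ "${DRY_RUN:-0}" = 1 ] && run="echo"
  $run mkdir -p "$HOME/.ssh"
  # Generate a key only if none exists; -N "" means an empty passphrase
  if [ ! -f "$key" ]; then
    $run ssh-keygen -t rsa -N "" -f "$key"
  fi
  # Copy the public key to each node (asks for that node's password once)
  for host in $NODES; do
    $run ssh-copy-id -i "$key.pub" "$host"
  done
}
```

Run DRY_RUN=1 setup_ssh_trust first to review the commands, then setup_ssh_trust on hadoop0 and again on hadoop1.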

3: On both hadoop0 and hadoop1, edit the Hadoop configuration files. The files must be identical on both nodes. Go to /usr/local/hadoop/etc/hadoop and edit hadoop-env.sh, core-site.xml, hdfs-site.xml, yarn-site.xml and mapred-site.xml.

(1) hadoop-env.sh

export JAVA_HOME=/usr/local/jdk1.7

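You can also rewrite the export line with sed instead of editing hadoop-env.sh by hand. This sketch rests on two assumptions. First, the file already contains an `export JAVA_HOME=` line (Hadoop 2.x ships one). Second, /usr/local/jdk1.7 is where the JDK sits in this image. set_java_home is a hypothetical name, and sed -i is the GNU form found in CentOS-based images:

```shell
# Hypothetical helper: point JAVA_HOME in hadoop-env.sh at a fixed JDK path.
set_java_home() {
  env_file="${1:-/usr/local/hadoop/etc/hadoop/hadoop-env.sh}"
  jdk="${2:-/usr/local/jdk1.7}"
  # Warn if the JDK directory is missing, since the daemons would not start
  [ -d "$jdk" ] || echo "warning: $jdk does not exist" >&2
  # Replace the whole existing export line; '|' as delimiter avoids escaping '/'
  sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=$jdk|" "$env_file"
}
```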

(2) core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop0:9000</value> <!-- on every node, point to hadoop0 -->

</property>

</configuration>

(3) hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.permissions.enabled</name> <!-- Hadoop 2 name of the deprecated dfs.permissions key -->

<value>false</value>

</property>

</configuration>

(4) yarn-site.xml

<configuration>

<property>

<description>The hostname of the RM.</description>

<name>yarn.resourcemanager.hostname</name>

<value>hadoop0</value> <!-- on every node, point to hadoop0 -->

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.application.classpath</name>

<value>/usr/local/hadoop/etc/hadoop, /usr/local/hadoop/share/hadoop/common/*, /usr/local/hadoop/share/hadoop/common/lib/*, /usr/local/hadoop/share/hadoop/hdfs/*, /usr/local/hadoop/share/hadoop/hdfs/lib/*, /usr/local/hadoop/share/hadoop/mapreduce/*, /usr/local/hadoop/share/hadoop/mapreduce/lib/*, /usr/local/hadoop/share/hadoop/yarn/*, /usr/local/hadoop/share/hadoop/yarn/lib/*</value>

</property>

<property>

<name>yarn.nodemanager.delete.debug-delay-sec</name>

<value>600</value>

</property>

</configuration>

(5) mapred-site.xml: Hadoop ships only a template for this file, so rename it first and then edit it:

mv mapred-site.xml.template mapred-site.xml

vi mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>
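Keeping the four files identical on both nodes is easier if one script generates them and you run it on each node. Below is a minimal sketch. write_site_xml and write_cluster_config are hypothetical names, and the values are the ones listed in (2) to (5). The hdfs-site.xml it writes uses dfs.permissions.enabled, the Hadoop 2 name for the deprecated dfs.permissions key. Because the script writes mapred-site.xml directly, you no longer need to rename the template. It overwrites existing files, so back them up first:

```shell
# Hypothetical helper: write a Hadoop *-site.xml from NAME VALUE pairs.
# Usage: write_site_xml FILE NAME1 VALUE1 [NAME2 VALUE2 ...]
write_site_xml() {
  out="$1"; shift
  {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    while [ "$#" -ge 2 ]; do
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
      shift 2
    done
    echo '</configuration>'
  } > "$out"
}

# Hypothetical wrapper: the values from steps (2) to (5); run it on both nodes.
write_cluster_config() {
  conf="${1:-/usr/local/hadoop/etc/hadoop}"
  hp=/usr/local/hadoop
  # Same classpath entries, in the same order, as the yarn-site.xml above
  classpath="$hp/etc/hadoop"
  for comp in common hdfs mapreduce yarn; do
    classpath="$classpath,$hp/share/hadoop/$comp/*,$hp/share/hadoop/$comp/lib/*"
  done
  write_site_xml "$conf/core-site.xml" fs.defaultFS hdfs://hadoop0:9000
  write_site_xml "$conf/hdfs-site.xml" dfs.replication 1 dfs.permissions.enabled false
  write_site_xml "$conf/yarn-site.xml" \
    yarn.resourcemanager.hostname hadoop0 \
    yarn.nodemanager.aux-services mapreduce_shuffle \
    yarn.application.classpath "$classpath" \
    yarn.nodemanager.delete.debug-delay-sec 600
  write_site_xml "$conf/mapred-site.xml" mapreduce.framework.name yarn
}
```

write_cluster_config with no argument targets /usr/local/hadoop/etc/hadoop. write_cluster_config /tmp/conf-preview lets you inspect the output first.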

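(6) Format HDFS

On hadoop0, change to the /usr/local/hadoop directory.

1. Run the format command:

bin/hdfs namenode -format

Note: the command may fail because the image lacks the which command. Install it, then run the format command again:

yum install -y which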
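Re-running the format on a NameNode that already holds data wipes the HDFS metadata. DataNodes registered under the old cluster ID then refuse to join. Below is a hedged sketch of a guard. It assumes the default dfs.namenode.name.dir, which in Hadoop 2.x resolves to /tmp/hadoop-<user>/dfs/name unless hadoop.tmp.dir is changed. format_once is a hypothetical name, and DRY_RUN=1 only prints the command:

```shell
# Hypothetical guard: format the NameNode only if it has never been formatted.
# Assumes the default name dir /tmp/hadoop-<user>/dfs/name (Hadoop 2.x defaults).
format_once() {
  name_dir="${1:-/tmp/hadoop-$(id -un)/dfs/name}"
  hadoop_home="${HADOOP_HOME:-/usr/local/hadoop}"
  run=""
  [ "${DRY_RUN:-0}" = 1 ] && run="echo"
  # A formatted name dir contains current/VERSION
  if [ -f "$name_dir/current/VERSION" ]; then
    echo "already formatted: $name_dir" >&2
    return 1
  fi
  $run "$hadoop_home/bin/hdfs" namenode -format
}
```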

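(10) Edit Hadoop's etc/hadoop/slaves file, which lists the nodes that run a DataNode and a NodeManager

(1) On hadoop0, delete all of its existing contents and replace them with:

hadoop1

(2) Make the same change to etc/hadoop/slaves on hadoop1:

hadoop1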
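The slaves edit can be done without an editor. Below is a minimal sketch. write_slaves is a hypothetical name that takes the configuration directory followed by the worker hostnames:

```shell
# Hypothetical helper: replace etc/hadoop/slaves with the given worker hostnames.
# Usage: write_slaves CONF_DIR HOST [HOST ...]
write_slaves() {
  conf="$1"; shift
  if [ "$#" -eq 0 ]; then
    echo "usage: write_slaves CONF_DIR HOST [HOST ...]" >&2
    return 1
  fi
  # '>' truncates the file first, i.e. "delete all existing contents"
  printf '%s\n' "$@" > "$conf/slaves"
}
```

On both nodes, this would be write_slaves /usr/local/hadoop/etc/hadoop hadoop1.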

(11) On hadoop0, copy the Hadoop installation to the slave node:

scp -rq /usr/local/hadoop hadoop1:/usr/local

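You can read the target hosts from the slaves file instead of hard-coding hostnames. This keeps the copy step in sync with the list that start-all.sh uses. The sketch below assumes the paths used in this article. distribute_hadoop and DRY_RUN are hypothetical names:

```shell
# Hypothetical helper: scp the Hadoop directory to every host in the slaves file.
distribute_hadoop() {
  hadoop_home="${1:-/usr/local/hadoop}"
  slaves="${2:-$hadoop_home/etc/hadoop/slaves}"
  run=""
  [ "${DRY_RUN:-0}" = 1 ] && run="echo"
  # Skip blank lines and '#' comments; </dev/null keeps scp off the loop's stdin
  grep -v -e '^[[:space:]]*$' -e '^[[:space:]]*#' "$slaves" | while read -r host; do
    $run scp -rq "$hadoop_home" "$host:$(dirname "$hadoop_home")" < /dev/null
  done
}
```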

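(12) Start the distributed Hadoop cluster

On hadoop0, in /usr/local/hadoop, run sbin/start-all.sh. Hadoop 2 marks this script as deprecated in favor of sbin/start-dfs.sh followed by sbin/start-yarn.sh, but it still starts both HDFS and YARN.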


Note: this may fail because the slave node (hadoop1) lacks the which command. Install it on hadoop1:

yum install -y which


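Then start the cluster again. If it is already running, stop it first with sbin/stop-all.sh. Run this only on hadoop0. start-all.sh starts the daemons on hadoop1 over SSH, so nothing needs to be started there by hand:

sbin/start-all.sh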

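In Hadoop 2, start-all.sh prints a deprecation notice and then calls sbin/start-dfs.sh and sbin/start-yarn.sh. The sketch below calls them directly, in the same order. start_cluster and DRY_RUN are hypothetical names, and HADOOP_HOME defaults to the /usr/local/hadoop path used here:

```shell
# Hypothetical wrapper: the two scripts that start-all.sh runs in Hadoop 2.
start_cluster() {
  hadoop_home="${HADOOP_HOME:-/usr/local/hadoop}"
  run=""
  [ "${DRY_RUN:-0}" = 1 ] && run="echo"
  # HDFS first (NameNode, SecondaryNameNode, DataNodes), then YARN
  $run "$hadoop_home/sbin/start-dfs.sh" || return 1
  $run "$hadoop_home/sbin/start-yarn.sh"
}
```

As with start-all.sh, run it on hadoop0 only.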

(13) Verify the cluster

First check the running Java processes with jps.

hadoop0 should show these processes:

[root@hadoop0 hadoop]# jps

4643 Jps

4073 NameNode

4216 SecondaryNameNode

4381 ResourceManager

hadoop1 should show these processes:

[root@hadoop1 hadoop]# jps

715 NodeManager

849 Jps

645 DataNode

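A script can check the jps listings above instead of a person reading them. Below is a minimal sketch. check_daemons is a hypothetical name that reads jps output on stdin and takes the expected daemon names as arguments:

```shell
# Hypothetical check: verify that jps output lists every expected daemon.
# Prints each missing daemon and returns non-zero if any is missing.
check_daemons() {
  jps_out=$(cat)
  missing=0
  for daemon in "$@"; do
    # jps prints "<pid> <MainClass>", so compare the class name in field 2 exactly
    if ! printf '%s\n' "$jps_out" | awk -v d="$daemon" '$2 == d {found=1} END {exit !found}'; then
      echo "missing: $daemon"
      missing=1
    fi
  done
  return "$missing"
}
```

On hadoop0: jps | check_daemons NameNode SecondaryNameNode ResourceManager. On hadoop1: jps | check_daemons DataNode NodeManager. The exact match on the second column matters, because a plain grep NameNode would also match SecondaryNameNode.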

Then verify the services with a test job.

Create a local file a.txt with the following two lines:

vi a.txt

hello you

hello me


Upload a.txt to HDFS:

hdfs dfs -put a.txt /


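Run the wordcount example. The jar name carries the Hadoop version, which is 2.7.0 for this image. The output directory /out must not exist yet:

cd /usr/local/hadoop/share/hadoop/mapreduce

hadoop jar hadoop-mapreduce-examples-2.7.0.jar wordcount /a.txt /out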


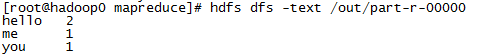

Check the job's output. If the word counts are correct, the cluster is working.
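You can compute the same counts locally to see what a correct result looks like. The sketch below uses only standard Unix tools. local_wordcount is a hypothetical name. Splitting on spaces and tabs only approximates the example's tokenizer, which also splits on other whitespace such as \r and \f:

```shell
# Hypothetical reference: count words in a local file with standard tools,
# printing "word<TAB>count" sorted by word, the layout of the wordcount output.
local_wordcount() {
  # Split on spaces/tabs, drop empty lines, sort bytewise (LC_ALL=C), count runs
  tr -s ' \t' '\n' < "$1" | grep -v '^$' | LC_ALL=C sort | uniq -c |
    awk '{ printf "%s\t%s\n", $2, $1 }'
}
```

With the default single reducer, wordcount writes its result to /out/part-r-00000. So on hadoop0, hdfs dfs -cat /out/part-r-00000 > hdfs.txt followed by local_wordcount a.txt | diff - hdfs.txt should print nothing.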

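Access the cluster services from a browser

Because hadoop0 runs with --net=host, its daemons listen directly on the host's ports. The NameNode web UI is at http://10.177.3.93:50070 and the ResourceManager web UI at http://10.177.3.93:8088. The docker ps line below comes from a bridge-network container (image crxy/centos-ssh-root-jdk-hadoop) in which 50070 and 8088 were published to the same host ports: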

adb9eba7142b crxy/centos-ssh-root-jdk-hadoop "/usr/sbin/sshd -D" About an hour ago Up About an hour 0.0.0.0:8088->8088/tcp, 0.0.0.0:50070->50070/tcp, 0.0.0.0:32770->22/tcp hadoop0
