Mobile H5 Conversational UI

Conversational UI has always been a reach goal for technologists. Its sheer presence in science-fiction movies alone is an indicator of how much we as a society value this mode of interaction. There are many reasons for this. From an early age, we’re taught how to interact with each other via conversation – wouldn’t it be great if computers could understand us instead of us learning how to use them?

This notion of “smart bots” came quickly this year, and came to stay. Facebook released their own bot platform, Microsoft has a platform for building them, and we’re starting to see independent vendors pop up like wit.ai and api.ai. Developers want to create them, and users want to use them.

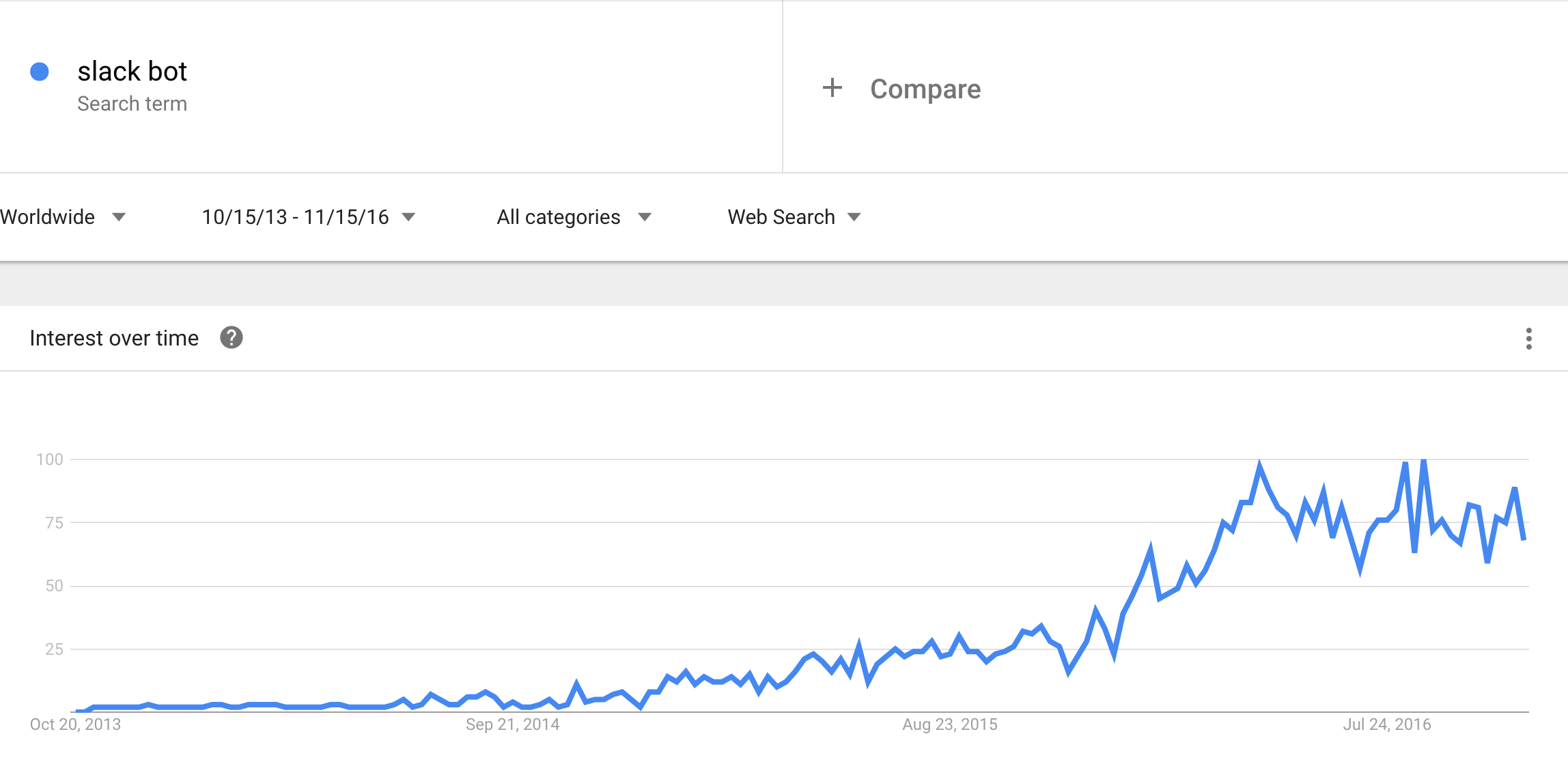

Perhaps Slack was an instigator of this trend too. It has had open APIs allowing users to create bots since its inception, and with its recent surge in popularity, it’s no wonder everyone is “bot thirsty.”

So where does this leave us? With a rise in popularity and increased interest in creating bots, what are the best ways to do so?

Unfortunately, there’s no clear-cut answer to this one. Everyone is releasing their own proprietary platform with unique, incompatible APIs and a different trade-off between ease of use and customizability. At the end of the day, creating bots and natural conversation flows is an incredibly challenging process. There’s a reason why the most used Siri feature is setting timers and the most used Alexa feature is playing music. Creating richer experiences beyond simple command-and-respond actions is quite difficult.

And despite the plethora of frameworks and platforms, none of them presents a compelling way to debug your bot-conversation (botversation™) on a system level. How do we determine what happened when you ask your bot about “music” and it responds with answers about “mac and cheese”?

When something goes wrong, we want to gain introspection into the system, which (hopefully) will allow us to debug it more easily. We don’t want to see only “you said this, so we said that” but rather a whole linked set of asynchronous events that follows your speech utterance and intent lifecycle, letting us see exactly what happened behind the scenes and pinpoint exactly what went wrong.
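
To make the idea of a linked event lifecycle concrete, here is a minimal sketch in Python. It is not the tool described in this post: the `Event` and `Trace` classes, the event kinds, and the "Goodbye!" reply are all hypothetical names chosen for illustration. The only assumption is that each recorded event keeps a link to the event that caused it, so we can walk back from a response to the utterance that started it.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Event:
    """One step in the lifecycle of an utterance (hypothetical structure)."""
    event_id: int
    kind: str                 # e.g. "utterance", "intent_resolved", "response"
    detail: str
    parent_id: Optional[int] = None


class Trace:
    """Records events and links each one to the event that caused it."""

    def __init__(self) -> None:
        self.events: List[Event] = []

    def record(self, kind: str, detail: str, parent: Optional[Event] = None) -> Event:
        event = Event(
            event_id=len(self.events) + 1,
            kind=kind,
            detail=detail,
            parent_id=parent.event_id if parent else None,
        )
        self.events.append(event)
        return event

    def chain(self, event: Event) -> List[Event]:
        """Walk parent links back to the root, returning root-first order."""
        by_id = {e.event_id: e for e in self.events}
        path = [event]
        while path[-1].parent_id is not None:
            path.append(by_id[path[-1].parent_id])
        return list(reversed(path))


trace = Trace()
said = trace.record("utterance", "hello")
intent = trace.record("intent_resolved", "SessionEndedRequest", parent=said)
reply = trace.record("response", "Goodbye!", parent=intent)  # made-up reply text

steps = [f"{e.kind}: {e.detail}" for e in trace.chain(reply)]
print(" -> ".join(steps))
```

Starting from any response, `chain` recovers every step that led to it, which is the difference between a transcript and a trace you can actually debug.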

Utilizing a tool of this nature will solve common pain points developers often face when setting up intricate conversation workflows. The fewer pain points there are, the faster users can get up and running to create awesome conversational bots.

Enough talk. Let’s look at a tool we’ve developed to address this. We’ll walk through a common issue faced when setting up a conversation: “I wanted my bot to respond with ___ but instead it responded with ___.”

I submit that while the following might be pretty and a good record of your interactions with a system, it wouldn’t help you figure out what went wrong:

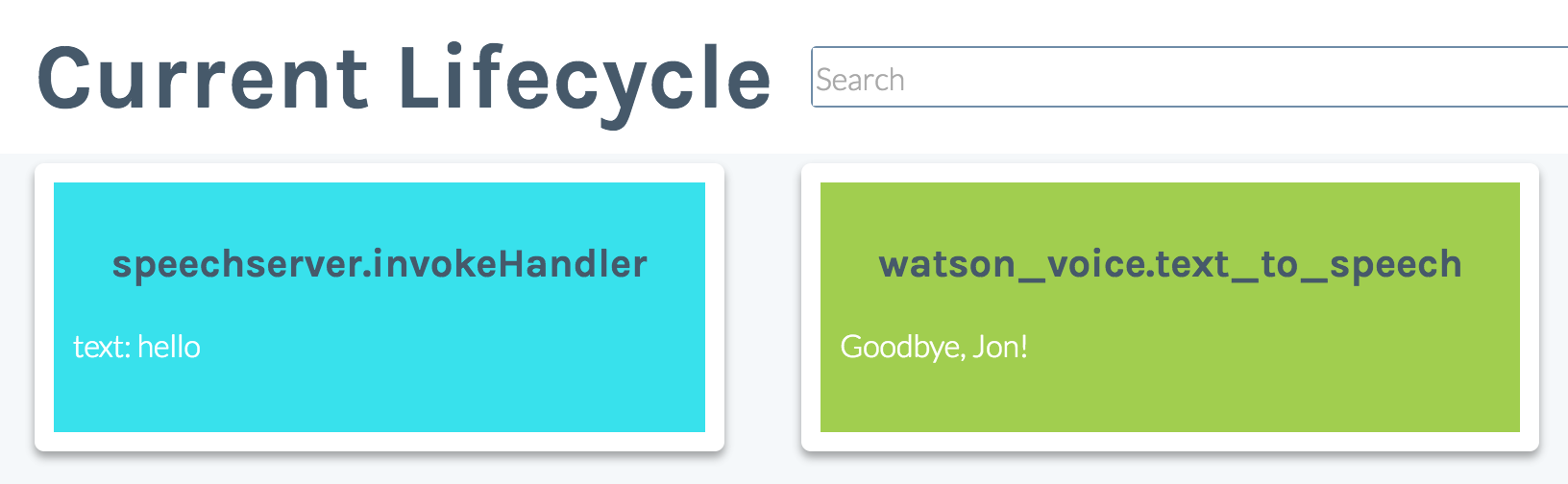

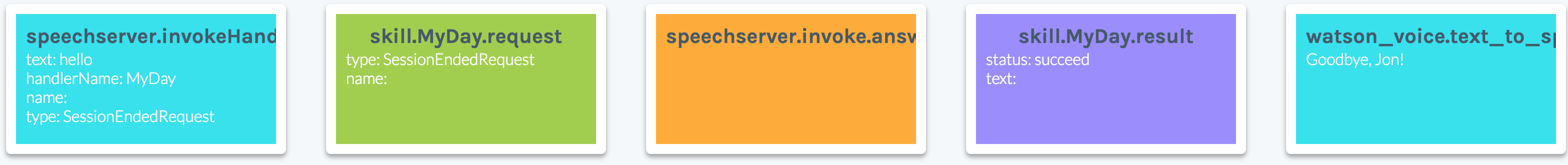

If instead we saw an event lifecycle indicating to us what actions the input text invoked, we would have a greater sense of why the system produced that output text:

With this new information we can see that for some reason our input “hello” text triggered a SessionEndedRequest. This is no good! What if we could retrain the system from this view to tell it “hey! don’t do that! when I say this, I actually want you to invoke something else!” This looks like:

This allows us to select the correct skill and intent for our desired “hello” response:

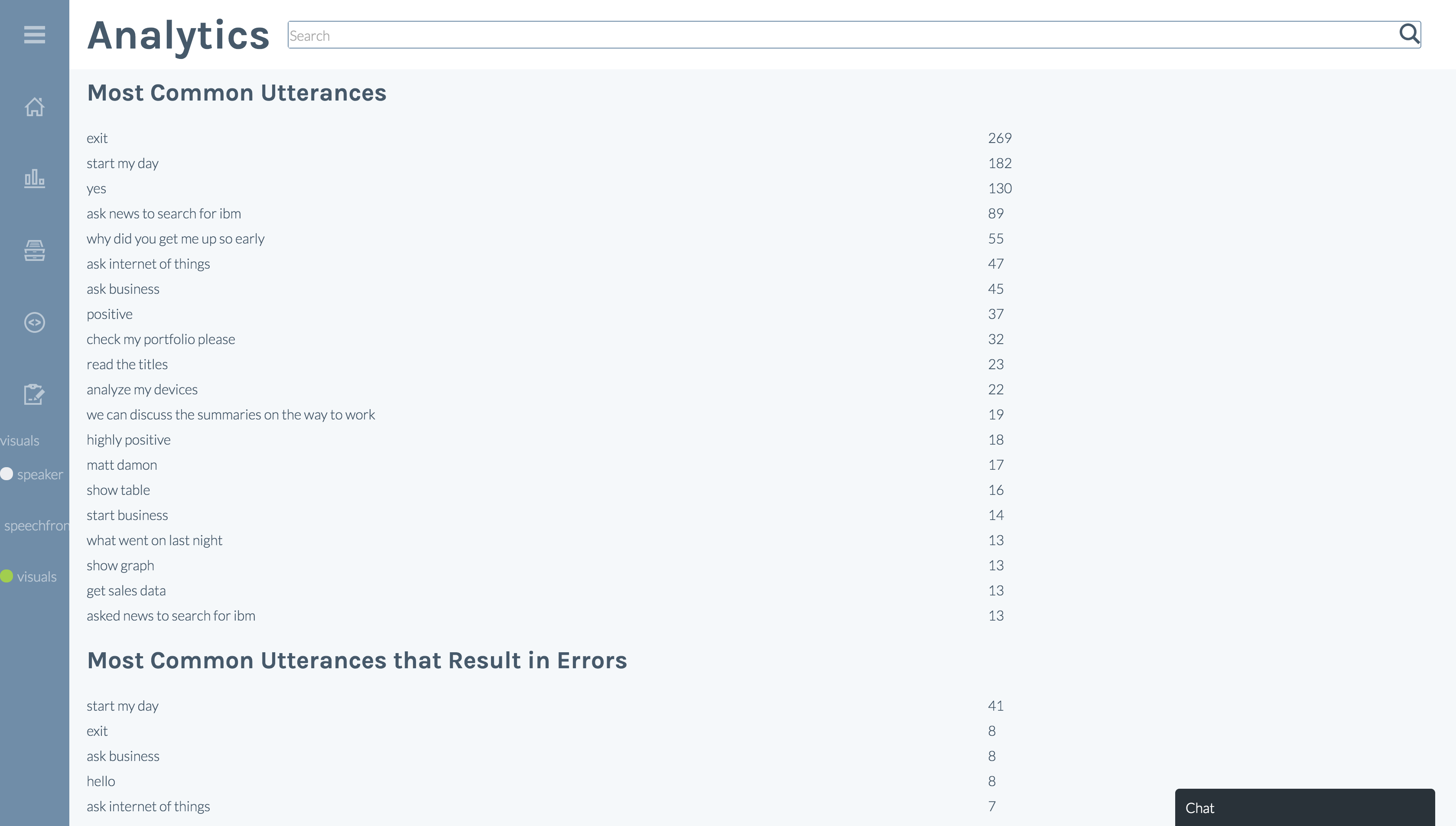
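
The correction step can be approximated in a few lines of Python. This sketch is a plain lookup override layered on top of an existing classifier, not real model retraining, and it is not how the tool above is implemented. `IntentRouter`, `flawed_model`, and `GreetingIntent` are hypothetical names; only `SessionEndedRequest` comes from the example in this post.

```python
from typing import Callable, Dict


class IntentRouter:
    """Wraps an intent classifier and applies human corrections first."""

    def __init__(self, model: Callable[[str], str]) -> None:
        self.model = model                      # hypothetical: text -> intent name
        self.corrections: Dict[str, str] = {}   # normalized utterance -> intent

    @staticmethod
    def _normalize(text: str) -> str:
        # Lowercase and collapse whitespace so "Hello" and " hello " match.
        return " ".join(text.lower().split())

    def correct(self, text: str, intent: str) -> None:
        """Record that this utterance should map to the given intent."""
        self.corrections[self._normalize(text)] = intent

    def resolve(self, text: str) -> str:
        key = self._normalize(text)
        if key in self.corrections:
            return self.corrections[key]
        return self.model(text)


def flawed_model(text: str) -> str:
    # Stand-in for a misbehaving classifier that ends the session for any input.
    return "SessionEndedRequest"


router = IntentRouter(flawed_model)
before = router.resolve("hello")
router.correct("Hello", "GreetingIntent")
after = router.resolve("  hello ")
print(before, "->", after)
```

An override table like this only fixes the exact utterances someone has corrected. A production system would more likely feed those corrections back in as training examples so that similar phrasings benefit too.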

Once we have this framework in place, we need a more convenient way of finding out what went wrong. We can run statistics and analytics on our recorded lifecycle dataset to identify the most common utterances and which of them resulted in the most errors. We can also compute similar metrics for skill sets and actions.
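
As a rough illustration of that kind of analysis, the sketch below counts utterances and errors over a small, made-up list of lifecycle records. The record fields (`utterance`, `intent`, `error`) and every intent name other than `SessionEndedRequest` are assumptions for the example, not the schema of any real dataset.

```python
from collections import Counter

# Made-up lifecycle records; "error" marks a response the user flagged as wrong.
records = [
    {"utterance": "hello", "intent": "SessionEndedRequest", "error": True},
    {"utterance": "play music", "intent": "PlayMusicIntent", "error": False},
    {"utterance": "hello", "intent": "SessionEndedRequest", "error": True},
    {"utterance": "music", "intent": "RecipeIntent", "error": True},
    {"utterance": "hello", "intent": "GreetingIntent", "error": False},
]

# How often each utterance was said, and how often it went wrong.
said = Counter(r["utterance"] for r in records)
failed = Counter(r["utterance"] for r in records if r["error"])

# Error rate per utterance, so rare-but-always-broken inputs stand out too.
error_rate = {utterance: failed[utterance] / count for utterance, count in said.items()}
worst = max(error_rate, key=error_rate.get)

# The same counting works for intents (or skills and actions).
failed_intents = Counter(r["intent"] for r in records if r["error"])

print("most common:", said.most_common(1))
print("most errors:", failed.most_common(1))
print("highest error rate:", worst)
```

Looking at both the raw error count and the error rate matters: "hello" fails most often here, but "music" fails every time it is said.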

Translated from: https://www.pybloggers.com/2016/11/debugging-the-conversational-ui/
