Steps:

1. Screen recording

Approach: a worker thread records by taking screenshots over and over, and avilib writes the captured images into a video file.

Reference: "Using the avilib library" (avilib库的使用), Ron's personal page, OSCHINA (a Chinese open-source technology community).

Recording in the worker thread is done by the ToAviThread class introduced earlier:

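```cpp
void ToAviThread::run()
{
    QDesktopWidget *desktopWidget = QApplication::desktop();
    QRect screenRect = desktopWidget->screenGeometry();

    // Open the output file at the configured path
    out_fd = AVI_open_output_file(toAviFilePath.toLocal8Bit().data());
    if (out_fd == NULL)
    {
        qDebug() << "open file error";
        return; // nothing to write to; continuing would crash in AVI_set_video
    }

    // Set the size to suit your own case. Here it is a previously saved value that
    // equals screenRect.size(); it must match the size of the grabbed frames.
    QSize size = GNConfig::getInstance()->getMainSize();

    // avilib: frame size, frame rate and codec of the video file
    AVI_set_video(out_fd, size.width(), size.height(), 6, "mjpg");

    while (!stopFlag)
    {
        // Pause logic: block until resume() wakes pauseCond.
        // stop() must also wake pauseCond, or a paused thread never exits.
        sync.lock();
        while (is_pause && !stopFlag)
            pauseCond.wait(&sync);
        sync.unlock();
        if (stopFlag)
            break;

        // Grab the screen, including the mouse cursor
        QPixmap map = this->grabWindow((HWND)QApplication::desktop()->winId(), 0, 0, screenRect.width(), screenRect.height());

        // Encode the pixmap as JPEG (quality 50) into a QByteArray
        QByteArray ba;
        QBuffer bf(&ba);
        bf.open(QIODevice::WriteOnly);
        if (!map.save(&bf, "jpg", 50))
        {
            qDebug() << "jpeg encode error";
            continue; // skip this frame; exit() here would terminate the whole application
        }
        frameBuffer = ba.data();
        bytes = ba.length();

        // Each screenshot is one frame; MJPEG frames are all keyframes
        if (AVI_write_frame(out_fd, frameBuffer, bytes, 1) < 0)
        {
            qDebug() << "write error";
        }
        frameBuffer = NULL; // ba goes out of scope, so never keep its pointer
        bytes = 0;
    }

    // Recording finished: close the file and write out the AVI
    AVI_close(out_fd);
}
```

After the thread stops, AVI_close writes the video to the path set earlier, and the recording is done. For example, if AVI_open_output_file was given e:\test.avi, the finished file is there once AVI_close has returned.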
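The pause logic in run() relies on pause, resume and stop methods that are not shown here. The sketch below is a standard-C++ version of the same pattern, using std::mutex and std::condition_variable in place of QMutex and QWaitCondition. CaptureControl and its methods are hypothetical names. The main design point is that stop() also notifies the condition. That way, a thread that is paused when recording stops still wakes up and reaches AVI_close.

```cpp
#include <condition_variable>
#include <mutex>

// Hypothetical pause/resume/stop control for a capture loop.
class CaptureControl {
public:
    void pause() {
        std::lock_guard<std::mutex> lock(mutex_);
        paused_ = true;
    }

    void resume() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            paused_ = false;
        }
        cond_.notify_all();
    }

    void stop() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            stopped_ = true;
        }
        cond_.notify_all(); // also wakes a loop that is currently paused
    }

    // Call at the top of each loop iteration: blocks while paused and
    // returns false once stop() has been requested (even while paused).
    bool waitWhilePaused() {
        std::unique_lock<std::mutex> lock(mutex_);
        cond_.wait(lock, [this] { return !paused_ || stopped_; });
        return !stopped_;
    }

private:
    std::mutex mutex_;
    std::condition_variable cond_;
    bool paused_ = false;
    bool stopped_ = false;
};
```

In the worker thread, the loop becomes `while (control.waitWhilePaused()) { grab and write one frame }`, followed by AVI_close. The UI thread only calls pause(), resume() and stop().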

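PS: here is why

AVI_set_video(out_fd, size.width(), size.height(), 6, "mjpg");

sets 6 frames per second. Grabbing the screen manages about 6 frames per second at most, so with a higher value the duration of the video would no longer match the real recording time.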
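The mismatch happens because the fps stored in the AVI header sets the playback speed. Frames captured at any other rate therefore play back too fast or too slow. The sketch below shows two standard-C++ helpers, both hypothetical. effectiveFps measures the rate actually achieved, which could guide the value passed to AVI_set_video. FramePacer spaces captures 1/fps apart, so a fast grab does not run ahead of the header rate.

```cpp
#include <cassert>
#include <chrono>
#include <thread>

// Frames actually written per second of wall-clock time. If this differs
// from the fps passed to AVI_set_video, playback runs faster or slower than
// the real recording.
double effectiveFps(long frames, double elapsedSeconds) {
    assert(elapsedSeconds > 0.0);
    return frames / elapsedSeconds;
}

// Hypothetical fixed-rate pacer: waitForNextFrame() sleeps until the next
// slot on a schedule spaced 1/fps apart. If a capture overruns its slot, the
// following calls return immediately until the schedule is caught up.
class FramePacer {
public:
    explicit FramePacer(double fps)
        : interval_(std::chrono::duration_cast<std::chrono::steady_clock::duration>(
              std::chrono::duration<double>(1.0 / fps))),
          next_(std::chrono::steady_clock::now()) {}

    void waitForNextFrame() {
        next_ += interval_;
        std::this_thread::sleep_until(next_);
    }

    std::chrono::steady_clock::duration interval() const { return interval_; }

private:
    std::chrono::steady_clock::duration interval_;
    std::chrono::steady_clock::time_point next_;
};
```

In the capture loop, you would construct `FramePacer pacer(6);` before the loop and call `pacer.waitForNextFrame()` after each AVI_write_frame. The written rate then stays at the header value as long as each grab fits in its slot.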

The grab function is also a custom implementation, adapted from code found online, because QScreen::grabWindow does not capture the mouse cursor. The code:

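```cpp
QPixmap ToAviThread::grabWindow(HWND winId, int x, int y, int w, int h)
{
    RECT r;
    GetClientRect(winId, &r);
    if (w < 0) w = r.right - r.left;
    if (h < 0) h = r.bottom - r.top;

    // Copy the screen contents into a memory bitmap
    HDC display_dc = GetDC(winId);
    HDC bitmap_dc = CreateCompatibleDC(display_dc);
    HBITMAP bitmap = CreateCompatibleBitmap(display_dc, w, h);
    HGDIOBJ null_bitmap = SelectObject(bitmap_dc, bitmap);
    BitBlt(bitmap_dc, 0, 0, w, h, display_dc, x, y, SRCCOPY | CAPTUREBLT);

    // BitBlt does not include the cursor, so draw it on top if it is visible
    CURSORINFO ci;
    ci.cbSize = sizeof(CURSORINFO);
    if (GetCursorInfo(&ci) && (ci.flags & CURSOR_SHOWING)
        && ci.ptScreenPos.x >= x && ci.ptScreenPos.y >= y
        && ci.ptScreenPos.x < x + w && ci.ptScreenPos.y < y + h)
    {
        // ptScreenPos is the hotspot; DrawIcon expects the icon's top-left corner
        ICONINFO ii;
        int hotX = 0, hotY = 0;
        if (GetIconInfo(ci.hCursor, &ii))
        {
            hotX = ii.xHotspot;
            hotY = ii.yHotspot;
            // GetIconInfo creates these bitmaps; the caller must delete them
            if (ii.hbmMask) DeleteObject(ii.hbmMask);
            if (ii.hbmColor) DeleteObject(ii.hbmColor);
        }
        DrawIcon(bitmap_dc, ci.ptScreenPos.x - x - hotX, ci.ptScreenPos.y - y - hotY, ci.hCursor);
    }

    // clean up all but bitmap
    ReleaseDC(winId, display_dc);
    SelectObject(bitmap_dc, null_bitmap);
    DeleteDC(bitmap_dc);

    QPixmap pixmap = QtWin::fromHBITMAP(bitmap); // from QtWinExtras
    DeleteObject(bitmap);
    return pixmap;
}
```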
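The cursor handling in grabWindow() is just coordinate arithmetic, so it can be checked without Windows. In this sketch, the Point struct and cursorDrawPosition are hypothetical, and the hotspot corresponds to ICONINFO::xHotspot/yHotspot from GetIconInfo. The cursor counts as inside when its hotspot lies in the half-open rectangle [x, x+w) by [y, y+h). The icon is drawn so that its hotspot, not its top-left corner, lands on the cursor position.

```cpp
#include <cassert>

struct Point {
    int x;
    int y;
};

// Hypothetical helper: where DrawIcon should put the cursor icon inside a
// bitmap captured from the screen rectangle (x, y, w, h). Returns false if
// the cursor hotspot is outside the captured area.
bool cursorDrawPosition(Point cursor, Point hotspot,
                        int x, int y, int w, int h, Point *out) {
    assert(w > 0 && h > 0 && out != nullptr);
    // Half-open rectangle: left/top edges are inside, right/bottom are not
    const bool inside = cursor.x >= x && cursor.y >= y &&
                        cursor.x < x + w && cursor.y < y + h;
    if (!inside) {
        return false;
    }
    // Convert to bitmap coordinates, then shift so the hotspot
    // (not the icon's top-left corner) lands on the cursor position
    out->x = cursor.x - x - hotspot.x;
    out->y = cursor.y - y - hotspot.y;
    return true;
}
```

For the standard arrow the hotspot is at or near the icon's corner, so the shift is barely visible. For cursors such as the I-beam, the hotspot sits in the middle of the icon, and skipping the shift draws the cursor visibly offset.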

2. Audio recording

Approach: QAudioInput is used to test the microphone, and QAudioRecorder does the actual recording.

First, the audio device test.

Dialog code:

```cpp
#ifndef GNDEVICEDLG_H
#define GNDEVICEDLG_H

#include "GNIODevice.h"
#include <QMouseEvent>
#include <QWidget>
#include <QAudioRecorder>
#include <QAudioInput>

namespace Ui {
class GNDeviceDlg;
}

class GNDeviceDlg : public QWidget
{
    Q_OBJECT

public:
    explicit GNDeviceDlg(QWidget *parent = nullptr);
    ~GNDeviceDlg();

    void showDeviceTest(QWidget* parent);

private slots:
    void on_btnStartTest_clicked();
    void onSkip();
    void on_btnOK_clicked();
    void on_btnReTest_clicked();
    void refreshDisplay();
    void onGotoResultPage();

signals:
    void showRecordView(const QString& deviceName);

protected:
    void mousePressEvent(QMouseEvent *event) override;
    void mouseReleaseEvent(QMouseEvent *event) override;
    void mouseMoveEvent(QMouseEvent *event) override;

private:
    void initAudioRecorder();

    // Pages of ui->stackedWidget
    enum {
        PAGE_DEFAULT,
        PAGE_TESTING,
        PAGE_PASS,
        PAGE_WRONG
    };

private:
    Ui::GNDeviceDlg *ui;
    bool bPressFlag = false;                 // true while dragging the frameless window
    QPoint beginDrag;                        // press position relative to the window
    QAudioRecorder *audioRecorder = nullptr; // used here to list the input devices
    QAudioInput* m_audioInput = nullptr;     // feeds microphone data into m_device
    GNIODevice* m_device = nullptr;          // measures the input level
    QAudioFormat formatAudio;
    bool bTestPass = false;
};

#endif // GNDEVICEDLG_H
```

```cpp
#include "GNDeviceDlg.h"
#include "ui_GNDeviceDlg.h"
#include <GNTipMessageBox.h>
#include <QDebug>
#include <QFileInfo>
#include <QListView>
#include <QTimer>

GNDeviceDlg::GNDeviceDlg(QWidget *parent) :
    QWidget(parent),
    ui(new Ui::GNDeviceDlg)
{
    ui->setupUi(this);
    setWindowFlags(Qt::Tool | Qt::FramelessWindowHint | Qt::WindowStaysOnTopHint | Qt::Dialog);
    setWindowModality(Qt::ApplicationModal);
    this->setAttribute(Qt::WA_TranslucentBackground);
    this->setAttribute(Qt::WA_DeleteOnClose);
    connect(ui->labelSkip, &GNClickableLabel::clicked, this, &GNDeviceDlg::onSkip);
    connect(ui->labelSkip_2, &GNClickableLabel::clicked, this, &GNDeviceDlg::onSkip);
    ui->stackedWidget->setCurrentIndex(PAGE_DEFAULT);

    qDebug() << "[GNDeviceDlg] new QAudioRecorder";
    audioRecorder = new QAudioRecorder(this);

    // Audio format: 8 kHz, mono, 16-bit signed little-endian PCM
    formatAudio.setSampleRate(8000);
    formatAudio.setChannelCount(1);
    formatAudio.setSampleSize(16);
    formatAudio.setSampleType(QAudioFormat::SignedInt);
    formatAudio.setByteOrder(QAudioFormat::LittleEndian);
    formatAudio.setCodec("audio/pcm");

    QAudioDeviceInfo defaultInfo = QAudioDeviceInfo::defaultInputDevice();
    // Input object used to test the audio device
    m_audioInput = new QAudioInput(defaultInfo, formatAudio, this);
    m_device = new GNIODevice(formatAudio, this);
    connect(m_device, SIGNAL(update()), SLOT(refreshDisplay()));

    ui->comboBox->setEnabled(true);
    ui->comboBox->setView(new QListView()); // the combo box takes ownership of the view
}

GNDeviceDlg::~GNDeviceDlg()
{
    delete ui;
}

void GNDeviceDlg::initAudioRecorder()
{
    // Fill the combo box with the microphones QAudioRecorder reports
    QStringList inputs = audioRecorder->audioInputs();
    qDebug() << "initAudioRecorder inputs:" << inputs;
    ui->comboBox->clear();
    ui->comboBox->addItems(inputs);
}

// "Start test" button
void GNDeviceDlg::on_btnStartTest_clicked()
{
    if (ui->comboBox->currentText().isEmpty())
    {
        // Custom message box; QMessageBox works just as well
        GNTipMessageBox tipBox;
        tipBox.updateMsg(tr("tip"), tr("No microphone device found. Please plug one in!"), tr("ok"));
        tipBox.exec();
        return;
    }

    ui->comboBox->setEnabled(false);
    qDebug() << "start test device name:" << ui->comboBox->currentText();

    // Find the selected device among the audio input devices;
    // fall back to the default input if no name matches
    QAudioDeviceInfo testInfo = QAudioDeviceInfo::defaultInputDevice();
    const QList<QAudioDeviceInfo> infos = QAudioDeviceInfo::availableDevices(QAudio::AudioInput);
    for (const QAudioDeviceInfo &info : infos) {
        qDebug() << "info:" << info.deviceName(); // microphones present on this computer
        if (info.deviceName() == ui->comboBox->currentText())
        {
            testInfo = info;
            break;
        }
    }

    // Recreate the input for the selected device
    if (m_audioInput)
    {
        m_audioInput->stop();
        delete m_audioInput;
    }
    m_audioInput = new QAudioInput(testInfo, formatAudio, this);

    m_device->start();
    m_audioInput->start(m_device);
    ui->stackedWidget->setCurrentIndex(PAGE_TESTING);
    bTestPass = false;
    // Listen for 5 seconds, then show the result
    QTimer::singleShot(5000, this, SLOT(onGotoResultPage()));
}

// Skip the test
void GNDeviceDlg::onSkip()
{
    this->hide();
    m_device->stop();
    m_audioInput->stop();
    emit showRecordView(ui->comboBox->currentText());
}

void GNDeviceDlg::on_btnOK_clicked()
{
    this->hide();
    m_device->stop();
    m_audioInput->stop();
    // Show the recording window
    emit showRecordView(ui->comboBox->currentText());
}

void GNDeviceDlg::on_btnReTest_clicked()
{
    on_btnStartTest_clicked();
}

void GNDeviceDlg::onGotoResultPage()
{
    m_device->stop();
    m_audioInput->stop();
    ui->comboBox->setEnabled(true);
    if (bTestPass)
        ui->stackedWidget->setCurrentIndex(PAGE_PASS);  // test passed
    else
        ui->stackedWidget->setCurrentIndex(PAGE_WRONG); // test failed
}

// Called for every buffer GNIODevice receives
void GNDeviceDlg::refreshDisplay()
{
    int value = m_device->level() * 100; // level is 0.0-1.0, the progress bar is 0-100
    qDebug() << "level:" << m_device->level() << " value:" << value;
    ui->progressBar->setValue(value);
    if (value > 0)
        bTestPass = true; // any audible input during the test counts as a pass
}

// Show the dialog centered horizontally, 20 px above the button that opened it
void GNDeviceDlg::showDeviceTest(QWidget* parentBtn)
{
    initAudioRecorder();
    QPoint GlobalPoint(parentBtn->mapToGlobal(QPoint(0, 0)));
    qWarning() << "parentBtn pos:" << parentBtn->pos() << " GlobalPoint:" << GlobalPoint;
    int x = GlobalPoint.x() - this->width() / 2 + parentBtn->width() / 2;
    int y = GlobalPoint.y() - this->height() - 20;
    this->move(x, y);
    ui->stackedWidget->setCurrentIndex(PAGE_DEFAULT);
    ui->comboBox->setEnabled(true);
    this->show();
}

// Frameless window: drag it with the mouse
void GNDeviceDlg::mousePressEvent(QMouseEvent *event)
{
    bPressFlag = true;
    beginDrag = event->pos();
    QWidget::mousePressEvent(event);
}

void GNDeviceDlg::mouseReleaseEvent(QMouseEvent *event)
{
    bPressFlag = false;
    QWidget::mouseReleaseEvent(event);
}

void GNDeviceDlg::mouseMoveEvent(QMouseEvent *event)
{
    if (bPressFlag)
    {
        QPoint relaPos(QCursor::pos() - beginDrag);
        move(relaPos);
    }
    QWidget::mouseMoveEvent(event);
}
```
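The pass/fail rule in refreshDisplay() is easy to miss. level() is scaled by 100 and truncated to an int, so any buffer whose peak reaches about 1% of full scale marks the test as passed. After that, bTestPass stays true for the rest of the 5-second window. The sketch below restates that rule in standard C++. levelToPercent and updatePassFlag are hypothetical names. The thresholdPercent parameter is an added assumption showing how the test could be made less sensitive to background noise; the default of 0 reproduces the original behavior.

```cpp
// Scale a 0.0-1.0 level to the 0-100 progress-bar value, truncating like
// `int value = m_device->level()*100;` in refreshDisplay().
int levelToPercent(double level) {
    return static_cast<int>(level * 100);
}

// Once a buffer exceeds the threshold the flag stays set (like bTestPass).
// thresholdPercent = 0 matches the original `value > 0` check.
bool updatePassFlag(bool passedSoFar, double level, int thresholdPercent = 0) {
    return passedSoFar || levelToPercent(level) > thresholdPercent;
}
```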

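Measuring the microphone volume with QAudioInput comes down to subclassing QIODevice and computing the volume of the incoming audio data in the overridden writeData() virtual function, as follows: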

```cpp
#ifndef GNIODEVICE_H
#define GNIODEVICE_H

#include <QtCore/QIODevice>
#include <QAudioFormat>

// Write-only QIODevice that QAudioInput writes microphone data into.
// It only measures the peak level of each buffer and discards the data.
class GNIODevice : public QIODevice
{
    Q_OBJECT

public:
    GNIODevice(const QAudioFormat &format, QObject *parent);
    ~GNIODevice();

    void start();
    void stop();

    qreal level() const { return m_level; }

protected:
    qint64 readData(char *data, qint64 maxlen) override;
    qint64 writeData(const char *data, qint64 len) override;

private:
    const QAudioFormat m_format;
    quint32 m_maxAmplitude;
    qreal m_level; // 0.0 <= m_level <= 1.0

signals:
    void update();
};

#endif // GNIODEVICE_H
```

```cpp
#include "GNIODevice.h"
#include <QDebug>
#include <QtEndian>

GNIODevice::GNIODevice(const QAudioFormat &format, QObject *parent)
    : QIODevice(parent)
    , m_format(format)
    , m_maxAmplitude(0)
    , m_level(0.0)
{
    // Largest sample magnitude for this format, used to normalize the level to 0..1
    switch (m_format.sampleSize()) {
    case 8:
        switch (m_format.sampleType()) {
        case QAudioFormat::UnSignedInt: m_maxAmplitude = 255; break;
        case QAudioFormat::SignedInt:   m_maxAmplitude = 127; break;
        default: break;
        }
        break;
    case 16:
        switch (m_format.sampleType()) {
        case QAudioFormat::UnSignedInt: m_maxAmplitude = 65535; break;
        case QAudioFormat::SignedInt:   m_maxAmplitude = 32767; break;
        default: break;
        }
        break;
    case 32:
        switch (m_format.sampleType()) {
        case QAudioFormat::UnSignedInt: m_maxAmplitude = 0xffffffff; break;
        case QAudioFormat::SignedInt:   m_maxAmplitude = 0x7fffffff; break;
        case QAudioFormat::Float:
            m_maxAmplitude = 0x7fffffff; // float samples are scaled to this range in writeData()
            break;
        default: break;
        }
        break;
    default:
        break;
    }
}

GNIODevice::~GNIODevice()
{
}

void GNIODevice::start()
{
    open(QIODevice::WriteOnly);
}

void GNIODevice::stop()
{
    close();
}

// Called by QAudioInput with each block of audio data: find the peak sample,
// store it in m_level and notify the dialog.
qint64 GNIODevice::writeData(const char *data, qint64 len)
{
    if (m_maxAmplitude) {
        Q_ASSERT(m_format.sampleSize() % 8 == 0);
        const int channelBytes = m_format.sampleSize() / 8;
        const int sampleBytes = m_format.channelCount() * channelBytes;
        Q_ASSERT(len % sampleBytes == 0);
        const int numSamples = len / sampleBytes;

        quint32 maxValue = 0;
        const unsigned char *ptr = reinterpret_cast<const unsigned char *>(data);

        for (int i = 0; i < numSamples; ++i) {
            for (int j = 0; j < m_format.channelCount(); ++j) {
                quint32 value = 0;
                const bool le = m_format.byteOrder() == QAudioFormat::LittleEndian;
                if (m_format.sampleSize() == 8 && m_format.sampleType() == QAudioFormat::UnSignedInt) {
                    value = *reinterpret_cast<const quint8*>(ptr);
                } else if (m_format.sampleSize() == 8 && m_format.sampleType() == QAudioFormat::SignedInt) {
                    value = qAbs(*reinterpret_cast<const qint8*>(ptr));
                } else if (m_format.sampleSize() == 16 && m_format.sampleType() == QAudioFormat::UnSignedInt) {
                    value = le ? qFromLittleEndian<quint16>(ptr) : qFromBigEndian<quint16>(ptr);
                } else if (m_format.sampleSize() == 16 && m_format.sampleType() == QAudioFormat::SignedInt) {
                    value = qAbs(le ? qFromLittleEndian<qint16>(ptr) : qFromBigEndian<qint16>(ptr));
                } else if (m_format.sampleSize() == 32 && m_format.sampleType() == QAudioFormat::UnSignedInt) {
                    value = le ? qFromLittleEndian<quint32>(ptr) : qFromBigEndian<quint32>(ptr);
                } else if (m_format.sampleSize() == 32 && m_format.sampleType() == QAudioFormat::SignedInt) {
                    value = qAbs(le ? qFromLittleEndian<qint32>(ptr) : qFromBigEndian<qint32>(ptr));
                } else if (m_format.sampleSize() == 32 && m_format.sampleType() == QAudioFormat::Float) {
                    value = qAbs(*reinterpret_cast<const float*>(ptr) * 0x7fffffff); // assumes samples in [-1.0, 1.0]
                }
                maxValue = qMax(value, maxValue);
                ptr += channelBytes;
            }
        }

        maxValue = qMin(maxValue, m_maxAmplitude);
        m_level = qreal(maxValue) / m_maxAmplitude;
    }

    emit update();
    return len; // report all data as consumed
}

// Write-only device: there is nothing to read
qint64 GNIODevice::readData(char *data, qint64 maxlen)
{
    Q_UNUSED(data)
    Q_UNUSED(maxlen)
    return 0;
}
```

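Here, m_level is the volume, more precisely the peak amplitude of the most recent buffer, and it is used directly to detect sound. Note that it ranges from 0 to 1.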
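The core of GNIODevice::writeData() is a peak measurement. For every sample it takes the absolute value and keeps the maximum. It then divides that maximum by the format's largest amplitude. For the format set up in GNDeviceDlg (8000 Hz, mono, 16-bit signed little-endian), this reduces to the sketch below. peakLevelS16LE is a hypothetical name, and it covers only this one format rather than every branch of the original. In this format, the input delivers 8000 × 2 = 16000 bytes per second, and each pair of bytes is one sample.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>

// Peak level (0.0 to 1.0) of 16-bit signed little-endian mono PCM, following
// the SignedInt/16-bit branch of GNIODevice::writeData().
double peakLevelS16LE(const unsigned char *data, std::size_t len) {
    assert(len % 2 == 0); // whole 2-byte samples only
    const std::uint32_t maxAmplitude = 32767;
    std::uint32_t maxValue = 0;
    for (std::size_t i = 0; i + 1 < len; i += 2) {
        // Assemble the little-endian sample byte by byte, independent of host byte order
        const std::uint16_t raw = static_cast<std::uint16_t>(data[i] | (data[i + 1] << 8));
        const int sample = static_cast<std::int16_t>(raw);
        const std::uint32_t value = static_cast<std::uint32_t>(std::abs(sample));
        if (value > maxValue) maxValue = value;
    }
    // |-32768| = 32768 exceeds 32767, so clamp as the original qMin() does
    if (maxValue > maxAmplitude) maxValue = maxAmplitude;
    return static_cast<double>(maxValue) / maxAmplitude;
}
```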

3. Finally, merging

Approach: use ffmpeg to merge the audio and video.

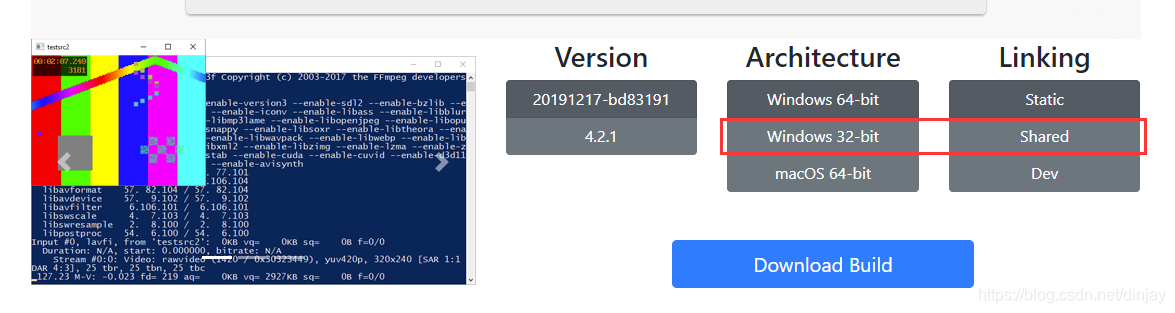

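This step is fairly simple. First download ffmpeg from https://ffmpeg.zeranoe.com/builds/ (Zeranoe stopped publishing builds in 2020; the download page on ffmpeg.org lists other Windows builds).

The Shared build is enough. It contains a prebuilt ffmpeg, and we use its merge feature by calling ffmpeg.exe on the command line.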

Merge code:

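```cpp
void GNCompressView::startCompress(QString wav, QString avi, QString outName)
{
    QString program = qApp->applicationDirPath();
    program += "/compress/ffmpeg.exe";

    // Allocate on the heap: a QProcess on the stack is destroyed (and ffmpeg
    // killed) as soon as this function returns, so finished() would never arrive
    QProcess *process = new QProcess(this);
    connect(process, static_cast<void(QProcess::*)(int, QProcess::ExitStatus)>(&QProcess::finished),
            this, &GNCompressView::onFinished);
    // Free the QProcess once ffmpeg has exited
    connect(process, static_cast<void(QProcess::*)(int, QProcess::ExitStatus)>(&QProcess::finished),
            process, &QObject::deleteLater);

    QStringList arguments;
    if (!wav.isEmpty())
    {
        arguments << "-i" << wav << "-i" << avi << outName; // arguments passed to ffmpeg.exe
    }
    else
    {
        // No audio: just convert the AVI to MP4
        arguments << "-i" << avi << "-vcodec" << "mpeg4" << outName;
    }
    process->start(program, arguments);
}

void GNCompressView::onFinished(int exitCode, QProcess::ExitStatus exitStatus)
{
    qDebug() << "onFinished exitCode:" << exitCode << " exitStatus:" << exitStatus;
    isFinished = true;
    // finished() is also emitted when ffmpeg fails or crashes
    if (exitStatus == QProcess::NormalExit && exitCode == 0)
        emit compressSuccessed();
    else
        qWarning() << "ffmpeg failed, exit code:" << exitCode;
}
```

This simply runs the command line. Copy the contents of the bin directory from the downloaded Shared build into the application's run directory (the code above expects them in a compress subfolder), and the code then starts ffmpeg.exe through QProcess.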

The merge command is simply ffmpeg -i my.wav -i my.avi out.mp4, which combines the AVI and the WAV into one MP4. For other encoding settings, look up ffmpeg's usage; they are not covered here.
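The argument list passed to QProcess can be built and checked without Qt. This sketch reproduces the two cases in startCompress() using std::string; buildFfmpegArgs is a hypothetical name. It also adds ffmpeg's -y option (overwrite output files without asking), which the original does not use. Without -y, if outName already exists, ffmpeg asks for confirmation on standard input. A process started from QProcess may then sit waiting for an answer that never comes.

```cpp
#include <string>
#include <vector>

// Hypothetical helper mirroring the two cases in startCompress().
std::vector<std::string> buildFfmpegArgs(const std::string &wav,
                                         const std::string &avi,
                                         const std::string &outName) {
    if (!wav.empty()) {
        // Audio + video inputs; ffmpeg picks the output format from the
        // file extension (e.g. .mp4) and uses that format's default encoders.
        return {"-y", "-i", wav, "-i", avi, outName};
    }
    // No audio: re-encode the AVI's video stream with the mpeg4 encoder.
    return {"-y", "-i", avi, "-vcodec", "mpeg4", outName};
}
```

In the Qt code, each element would be appended to the QStringList with QString::fromStdString before process->start(program, arguments).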

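Summary: this completes the audio device test and audio recording, the screen video recording, and the final merge. I searched a lot along the way. At first I wanted to build the ffmpeg code directly into the project, but environment issues led me to call ffmpeg as an external program instead, and that still gets the job done. Comments and discussion are welcome. If you know a more efficient way to record the screen at a higher frame rate, please let me know.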
