Model training code

(Note: this is only the training code. To actually train the model you also need load_and_process.py, cnn.py and the other project files.)

from keras.callbacks import CSVLogger, ModelCheckpoint, EarlyStopping

from keras.callbacks import ReduceLROnPlateau

from keras.preprocessing.image import ImageDataGenerator

from load_and_process import load_fer2013

from load_and_process import preprocess_input

from models.cnn import XCEPTION

from sklearn.model_selection import train_test_split

Define the parameters

# Parameters

batch_size = 32

num_epochs = 10000

input_shape = (48, 48, 1)

validation_split = .2

verbose = 1

num_classes = 7

patience = 50

base_path = 'models/'

Build, compile and print the model

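# 1. Use the XCEPTION model defined in models/cnn.py.

# 2. compile: the Model method that configures the model for training.

# Argument 1: optimizer, here Adam.

# Argument 2: loss, the multi-class log loss.

# categorical_crossentropy (a Keras backend function) is the categorical cross-entropy between the output tensor and the target tensor.

# Argument 3: metrics, the metrics the model evaluates during training and testing.

# 3. model.summary(): prints an overview of the model. Internally it calls keras.utils.print_summary.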
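You can check the "Param #" column that model.summary() prints by hand.

The sketch below assumes the convolutions carry no bias terms. The numbers in the summary suggest this: 3·3·1·32 = 288 for block1_conv1, with nothing extra. The helper names are hypothetical and are not part of Keras.

```python
# Hypothetical helpers that reproduce Keras "Param #" values for the Xception layers.
# Assumption: Conv2D / SeparableConv2D layers are built without biases.

def conv2d_params(k, c_in, c_out):
    # one k x k x c_in kernel per output channel
    return k * k * c_in * c_out

def separable_conv2d_params(k, c_in, c_out):
    # depthwise: one k x k filter per input channel; pointwise: 1x1 conv c_in -> c_out
    return k * k * c_in + c_in * c_out

def batchnorm_params(c):
    # gamma and beta are trainable; moving mean and moving variance are not
    return 4 * c

def dense_params(n_in, n_out):
    # weight matrix plus one bias per output unit
    return n_in * n_out + n_out

block1_conv1 = conv2d_params(3, 1, 32)                 # 288
block2_sepconv1 = separable_conv2d_params(3, 64, 128)  # 8768
middle_sepconv = separable_conv2d_params(3, 728, 728)  # 536536
shortcut_conv2d_1 = conv2d_params(1, 64, 128)          # 8192
block1_conv1_bn = batchnorm_params(32)                 # 128
predictions = dense_params(2048, 7)                    # 14343

# Channel widths of every BatchNormalization layer in the summary, in order
bn_channels = ([32, 64, 128, 128, 128, 256, 256, 256, 728, 728, 728]
               + [728] * 24              # blocks 5-12: three BN layers each
               + [728, 1024, 1024, 1536, 2048])
non_trainable = sum(2 * c for c in bn_channels)        # moving mean + moving variance
print(non_trainable)  # 54528
```

The "Non-trainable params: 54,528" line in the summary consists entirely of BatchNormalization moving statistics.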

model = XCEPTION(input_shape, num_classes)

model.compile(optimizer='adam',                    # Adam optimizer
              loss='categorical_crossentropy',     # multi-class log loss
              metrics=['accuracy'])
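For a one-hot target, categorical cross-entropy reduces to minus the log of the probability the model assigns to the true class.

The NumPy sketch below first normalizes the predictions, then clips them with a small epsilon. This follows what the Keras TensorFlow backend does when the model outputs probabilities. Here eps=1e-7 is assumed to match Keras's default epsilon. Keras then averages the per-sample values over the batch.

```python
import numpy as np

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Per-sample categorical cross-entropy: -sum(y_true * log(y_pred))."""
    y_pred = y_pred / y_pred.sum(axis=-1, keepdims=True)  # make each row sum to 1
    y_pred = np.clip(y_pred, eps, 1.0 - eps)              # avoid log(0)
    return -np.sum(y_true * np.log(y_pred), axis=-1)

y_true = np.array([[0, 1, 0, 0, 0, 0, 0]], dtype='float32')    # one-hot, class 1
confident = np.array([[0.05, 0.7, 0.05, 0.05, 0.05, 0.05, 0.05]])
uniform = np.full((1, 7), 1 / 7)

print(categorical_crossentropy(y_true, confident))  # about 0.357, i.e. -ln(0.7)
print(categorical_crossentropy(y_true, uniform))    # about 1.946, i.e. ln(7)
```

A model that guesses uniformly over the 7 classes scores ln 7 ≈ 1.95. The epoch-1 training loss of 1.6659 in the training log is therefore already better than chance.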

model.summary()

Output: the printed model summary is as follows

WARNING:tensorflow:From C:\Users\asus\Anaconda3\envs\Face\lib\site-packages\tensorflow\python\framework\op_def_library.py:263: colocate_with (from tensorflow.python.framework.ops) is deprecated and will be removed in a future version.

Instructions for updating:

Colocations handled automatically by placer.

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) (None, 48, 48, 1) 0

__________________________________________________________________________________________________

block1_conv1 (Conv2D) (None, 23, 23, 32) 288 input_1[0][0]

__________________________________________________________________________________________________

block1_conv1_bn (BatchNormaliza (None, 23, 23, 32) 128 block1_conv1[0][0]

__________________________________________________________________________________________________

block1_conv1_act (Activation) (None, 23, 23, 32) 0 block1_conv1_bn[0][0]

__________________________________________________________________________________________________

block1_conv2 (Conv2D) (None, 21, 21, 64) 18432 block1_conv1_act[0][0]

__________________________________________________________________________________________________

block1_conv2_bn (BatchNormaliza (None, 21, 21, 64) 256 block1_conv2[0][0]

__________________________________________________________________________________________________

block1_conv2_act (Activation) (None, 21, 21, 64) 0 block1_conv2_bn[0][0]

__________________________________________________________________________________________________

block2_sepconv1 (SeparableConv2 (None, 21, 21, 128) 8768 block1_conv2_act[0][0]

__________________________________________________________________________________________________

block2_sepconv1_bn (BatchNormal (None, 21, 21, 128) 512 block2_sepconv1[0][0]

__________________________________________________________________________________________________

block2_sepconv2_act (Activation (None, 21, 21, 128) 0 block2_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block2_sepconv2 (SeparableConv2 (None, 21, 21, 128) 17536 block2_sepconv2_act[0][0]

__________________________________________________________________________________________________

block2_sepconv2_bn (BatchNormal (None, 21, 21, 128) 512 block2_sepconv2[0][0]

__________________________________________________________________________________________________

conv2d_1 (Conv2D) (None, 11, 11, 128) 8192 block1_conv2_act[0][0]

__________________________________________________________________________________________________

block2_pool (MaxPooling2D) (None, 11, 11, 128) 0 block2_sepconv2_bn[0][0]

__________________________________________________________________________________________________

batch_normalization_1 (BatchNor (None, 11, 11, 128) 512 conv2d_1[0][0]

__________________________________________________________________________________________________

add_1 (Add) (None, 11, 11, 128) 0 block2_pool[0][0]

batch_normalization_1[0][0]

__________________________________________________________________________________________________

block3_sepconv1_act (Activation (None, 11, 11, 128) 0 add_1[0][0]

__________________________________________________________________________________________________

block3_sepconv1 (SeparableConv2 (None, 11, 11, 256) 33920 block3_sepconv1_act[0][0]

__________________________________________________________________________________________________

block3_sepconv1_bn (BatchNormal (None, 11, 11, 256) 1024 block3_sepconv1[0][0]

__________________________________________________________________________________________________

block3_sepconv2_act (Activation (None, 11, 11, 256) 0 block3_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block3_sepconv2 (SeparableConv2 (None, 11, 11, 256) 67840 block3_sepconv2_act[0][0]

__________________________________________________________________________________________________

block3_sepconv2_bn (BatchNormal (None, 11, 11, 256) 1024 block3_sepconv2[0][0]

__________________________________________________________________________________________________

conv2d_2 (Conv2D) (None, 6, 6, 256) 32768 add_1[0][0]

__________________________________________________________________________________________________

block3_pool (MaxPooling2D) (None, 6, 6, 256) 0 block3_sepconv2_bn[0][0]

__________________________________________________________________________________________________

batch_normalization_2 (BatchNor (None, 6, 6, 256) 1024 conv2d_2[0][0]

__________________________________________________________________________________________________

add_2 (Add) (None, 6, 6, 256) 0 block3_pool[0][0]

batch_normalization_2[0][0]

__________________________________________________________________________________________________

block4_sepconv1_act (Activation (None, 6, 6, 256) 0 add_2[0][0]

__________________________________________________________________________________________________

block4_sepconv1 (SeparableConv2 (None, 6, 6, 728) 188672 block4_sepconv1_act[0][0]

__________________________________________________________________________________________________

block4_sepconv1_bn (BatchNormal (None, 6, 6, 728) 2912 block4_sepconv1[0][0]

__________________________________________________________________________________________________

block4_sepconv2_act (Activation (None, 6, 6, 728) 0 block4_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block4_sepconv2 (SeparableConv2 (None, 6, 6, 728) 536536 block4_sepconv2_act[0][0]

__________________________________________________________________________________________________

block4_sepconv2_bn (BatchNormal (None, 6, 6, 728) 2912 block4_sepconv2[0][0]

__________________________________________________________________________________________________

conv2d_3 (Conv2D) (None, 3, 3, 728) 186368 add_2[0][0]

__________________________________________________________________________________________________

block4_pool (MaxPooling2D) (None, 3, 3, 728) 0 block4_sepconv2_bn[0][0]

__________________________________________________________________________________________________

batch_normalization_3 (BatchNor (None, 3, 3, 728) 2912 conv2d_3[0][0]

__________________________________________________________________________________________________

add_3 (Add) (None, 3, 3, 728) 0 block4_pool[0][0]

batch_normalization_3[0][0]

__________________________________________________________________________________________________

block5_sepconv1_act (Activation (None, 3, 3, 728) 0 add_3[0][0]

__________________________________________________________________________________________________

block5_sepconv1 (SeparableConv2 (None, 3, 3, 728) 536536 block5_sepconv1_act[0][0]

__________________________________________________________________________________________________

block5_sepconv1_bn (BatchNormal (None, 3, 3, 728) 2912 block5_sepconv1[0][0]

__________________________________________________________________________________________________

block5_sepconv2_act (Activation (None, 3, 3, 728) 0 block5_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block5_sepconv2 (SeparableConv2 (None, 3, 3, 728) 536536 block5_sepconv2_act[0][0]

__________________________________________________________________________________________________

block5_sepconv2_bn (BatchNormal (None, 3, 3, 728) 2912 block5_sepconv2[0][0]

__________________________________________________________________________________________________

block5_sepconv3_act (Activation (None, 3, 3, 728) 0 block5_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block5_sepconv3 (SeparableConv2 (None, 3, 3, 728) 536536 block5_sepconv3_act[0][0]

__________________________________________________________________________________________________

block5_sepconv3_bn (BatchNormal (None, 3, 3, 728) 2912 block5_sepconv3[0][0]

__________________________________________________________________________________________________

add_4 (Add) (None, 3, 3, 728) 0 block5_sepconv3_bn[0][0]

add_3[0][0]

__________________________________________________________________________________________________

block6_sepconv1_act (Activation (None, 3, 3, 728) 0 add_4[0][0]

__________________________________________________________________________________________________

block6_sepconv1 (SeparableConv2 (None, 3, 3, 728) 536536 block6_sepconv1_act[0][0]

__________________________________________________________________________________________________

block6_sepconv1_bn (BatchNormal (None, 3, 3, 728) 2912 block6_sepconv1[0][0]

__________________________________________________________________________________________________

block6_sepconv2_act (Activation (None, 3, 3, 728) 0 block6_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block6_sepconv2 (SeparableConv2 (None, 3, 3, 728) 536536 block6_sepconv2_act[0][0]

__________________________________________________________________________________________________

block6_sepconv2_bn (BatchNormal (None, 3, 3, 728) 2912 block6_sepconv2[0][0]

__________________________________________________________________________________________________

block6_sepconv3_act (Activation (None, 3, 3, 728) 0 block6_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block6_sepconv3 (SeparableConv2 (None, 3, 3, 728) 536536 block6_sepconv3_act[0][0]

__________________________________________________________________________________________________

block6_sepconv3_bn (BatchNormal (None, 3, 3, 728) 2912 block6_sepconv3[0][0]

__________________________________________________________________________________________________

add_5 (Add) (None, 3, 3, 728) 0 block6_sepconv3_bn[0][0]

add_4[0][0]

__________________________________________________________________________________________________

block7_sepconv1_act (Activation (None, 3, 3, 728) 0 add_5[0][0]

__________________________________________________________________________________________________

block7_sepconv1 (SeparableConv2 (None, 3, 3, 728) 536536 block7_sepconv1_act[0][0]

__________________________________________________________________________________________________

block7_sepconv1_bn (BatchNormal (None, 3, 3, 728) 2912 block7_sepconv1[0][0]

__________________________________________________________________________________________________

block7_sepconv2_act (Activation (None, 3, 3, 728) 0 block7_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block7_sepconv2 (SeparableConv2 (None, 3, 3, 728) 536536 block7_sepconv2_act[0][0]

__________________________________________________________________________________________________

block7_sepconv2_bn (BatchNormal (None, 3, 3, 728) 2912 block7_sepconv2[0][0]

__________________________________________________________________________________________________

block7_sepconv3_act (Activation (None, 3, 3, 728) 0 block7_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block7_sepconv3 (SeparableConv2 (None, 3, 3, 728) 536536 block7_sepconv3_act[0][0]

__________________________________________________________________________________________________

block7_sepconv3_bn (BatchNormal (None, 3, 3, 728) 2912 block7_sepconv3[0][0]

__________________________________________________________________________________________________

add_6 (Add) (None, 3, 3, 728) 0 block7_sepconv3_bn[0][0]

add_5[0][0]

__________________________________________________________________________________________________

block8_sepconv1_act (Activation (None, 3, 3, 728) 0 add_6[0][0]

__________________________________________________________________________________________________

block8_sepconv1 (SeparableConv2 (None, 3, 3, 728) 536536 block8_sepconv1_act[0][0]

__________________________________________________________________________________________________

block8_sepconv1_bn (BatchNormal (None, 3, 3, 728) 2912 block8_sepconv1[0][0]

__________________________________________________________________________________________________

block8_sepconv2_act (Activation (None, 3, 3, 728) 0 block8_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block8_sepconv2 (SeparableConv2 (None, 3, 3, 728) 536536 block8_sepconv2_act[0][0]

__________________________________________________________________________________________________

block8_sepconv2_bn (BatchNormal (None, 3, 3, 728) 2912 block8_sepconv2[0][0]

__________________________________________________________________________________________________

block8_sepconv3_act (Activation (None, 3, 3, 728) 0 block8_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block8_sepconv3 (SeparableConv2 (None, 3, 3, 728) 536536 block8_sepconv3_act[0][0]

__________________________________________________________________________________________________

block8_sepconv3_bn (BatchNormal (None, 3, 3, 728) 2912 block8_sepconv3[0][0]

__________________________________________________________________________________________________

add_7 (Add) (None, 3, 3, 728) 0 block8_sepconv3_bn[0][0]

add_6[0][0]

__________________________________________________________________________________________________

block9_sepconv1_act (Activation (None, 3, 3, 728) 0 add_7[0][0]

__________________________________________________________________________________________________

block9_sepconv1 (SeparableConv2 (None, 3, 3, 728) 536536 block9_sepconv1_act[0][0]

__________________________________________________________________________________________________

block9_sepconv1_bn (BatchNormal (None, 3, 3, 728) 2912 block9_sepconv1[0][0]

__________________________________________________________________________________________________

block9_sepconv2_act (Activation (None, 3, 3, 728) 0 block9_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block9_sepconv2 (SeparableConv2 (None, 3, 3, 728) 536536 block9_sepconv2_act[0][0]

__________________________________________________________________________________________________

block9_sepconv2_bn (BatchNormal (None, 3, 3, 728) 2912 block9_sepconv2[0][0]

__________________________________________________________________________________________________

block9_sepconv3_act (Activation (None, 3, 3, 728) 0 block9_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block9_sepconv3 (SeparableConv2 (None, 3, 3, 728) 536536 block9_sepconv3_act[0][0]

__________________________________________________________________________________________________

block9_sepconv3_bn (BatchNormal (None, 3, 3, 728) 2912 block9_sepconv3[0][0]

__________________________________________________________________________________________________

add_8 (Add) (None, 3, 3, 728) 0 block9_sepconv3_bn[0][0]

add_7[0][0]

__________________________________________________________________________________________________

block10_sepconv1_act (Activatio (None, 3, 3, 728) 0 add_8[0][0]

__________________________________________________________________________________________________

block10_sepconv1 (SeparableConv (None, 3, 3, 728) 536536 block10_sepconv1_act[0][0]

__________________________________________________________________________________________________

block10_sepconv1_bn (BatchNorma (None, 3, 3, 728) 2912 block10_sepconv1[0][0]

__________________________________________________________________________________________________

block10_sepconv2_act (Activatio (None, 3, 3, 728) 0 block10_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block10_sepconv2 (SeparableConv (None, 3, 3, 728) 536536 block10_sepconv2_act[0][0]

__________________________________________________________________________________________________

block10_sepconv2_bn (BatchNorma (None, 3, 3, 728) 2912 block10_sepconv2[0][0]

__________________________________________________________________________________________________

block10_sepconv3_act (Activatio (None, 3, 3, 728) 0 block10_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block10_sepconv3 (SeparableConv (None, 3, 3, 728) 536536 block10_sepconv3_act[0][0]

__________________________________________________________________________________________________

block10_sepconv3_bn (BatchNorma (None, 3, 3, 728) 2912 block10_sepconv3[0][0]

__________________________________________________________________________________________________

add_9 (Add) (None, 3, 3, 728) 0 block10_sepconv3_bn[0][0]

add_8[0][0]

__________________________________________________________________________________________________

block11_sepconv1_act (Activatio (None, 3, 3, 728) 0 add_9[0][0]

__________________________________________________________________________________________________

block11_sepconv1 (SeparableConv (None, 3, 3, 728) 536536 block11_sepconv1_act[0][0]

__________________________________________________________________________________________________

block11_sepconv1_bn (BatchNorma (None, 3, 3, 728) 2912 block11_sepconv1[0][0]

__________________________________________________________________________________________________

block11_sepconv2_act (Activatio (None, 3, 3, 728) 0 block11_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block11_sepconv2 (SeparableConv (None, 3, 3, 728) 536536 block11_sepconv2_act[0][0]

__________________________________________________________________________________________________

block11_sepconv2_bn (BatchNorma (None, 3, 3, 728) 2912 block11_sepconv2[0][0]

__________________________________________________________________________________________________

block11_sepconv3_act (Activatio (None, 3, 3, 728) 0 block11_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block11_sepconv3 (SeparableConv (None, 3, 3, 728) 536536 block11_sepconv3_act[0][0]

__________________________________________________________________________________________________

block11_sepconv3_bn (BatchNorma (None, 3, 3, 728) 2912 block11_sepconv3[0][0]

__________________________________________________________________________________________________

add_10 (Add) (None, 3, 3, 728) 0 block11_sepconv3_bn[0][0]

add_9[0][0]

__________________________________________________________________________________________________

block12_sepconv1_act (Activatio (None, 3, 3, 728) 0 add_10[0][0]

__________________________________________________________________________________________________

block12_sepconv1 (SeparableConv (None, 3, 3, 728) 536536 block12_sepconv1_act[0][0]

__________________________________________________________________________________________________

block12_sepconv1_bn (BatchNorma (None, 3, 3, 728) 2912 block12_sepconv1[0][0]

__________________________________________________________________________________________________

block12_sepconv2_act (Activatio (None, 3, 3, 728) 0 block12_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block12_sepconv2 (SeparableConv (None, 3, 3, 728) 536536 block12_sepconv2_act[0][0]

__________________________________________________________________________________________________

block12_sepconv2_bn (BatchNorma (None, 3, 3, 728) 2912 block12_sepconv2[0][0]

__________________________________________________________________________________________________

block12_sepconv3_act (Activatio (None, 3, 3, 728) 0 block12_sepconv2_bn[0][0]

__________________________________________________________________________________________________

block12_sepconv3 (SeparableConv (None, 3, 3, 728) 536536 block12_sepconv3_act[0][0]

__________________________________________________________________________________________________

block12_sepconv3_bn (BatchNorma (None, 3, 3, 728) 2912 block12_sepconv3[0][0]

__________________________________________________________________________________________________

add_11 (Add) (None, 3, 3, 728) 0 block12_sepconv3_bn[0][0]

add_10[0][0]

__________________________________________________________________________________________________

block13_sepconv1_act (Activatio (None, 3, 3, 728) 0 add_11[0][0]

__________________________________________________________________________________________________

block13_sepconv1 (SeparableConv (None, 3, 3, 728) 536536 block13_sepconv1_act[0][0]

__________________________________________________________________________________________________

block13_sepconv1_bn (BatchNorma (None, 3, 3, 728) 2912 block13_sepconv1[0][0]

__________________________________________________________________________________________________

block13_sepconv2_act (Activatio (None, 3, 3, 728) 0 block13_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block13_sepconv2 (SeparableConv (None, 3, 3, 1024) 752024 block13_sepconv2_act[0][0]

__________________________________________________________________________________________________

block13_sepconv2_bn (BatchNorma (None, 3, 3, 1024) 4096 block13_sepconv2[0][0]

__________________________________________________________________________________________________

conv2d_4 (Conv2D) (None, 2, 2, 1024) 745472 add_11[0][0]

__________________________________________________________________________________________________

block13_pool (MaxPooling2D) (None, 2, 2, 1024) 0 block13_sepconv2_bn[0][0]

__________________________________________________________________________________________________

batch_normalization_4 (BatchNor (None, 2, 2, 1024) 4096 conv2d_4[0][0]

__________________________________________________________________________________________________

add_12 (Add) (None, 2, 2, 1024) 0 block13_pool[0][0]

batch_normalization_4[0][0]

__________________________________________________________________________________________________

block14_sepconv1 (SeparableConv (None, 2, 2, 1536) 1582080 add_12[0][0]

__________________________________________________________________________________________________

block14_sepconv1_bn (BatchNorma (None, 2, 2, 1536) 6144 block14_sepconv1[0][0]

__________________________________________________________________________________________________

block14_sepconv1_act (Activatio (None, 2, 2, 1536) 0 block14_sepconv1_bn[0][0]

__________________________________________________________________________________________________

block14_sepconv2 (SeparableConv (None, 2, 2, 2048) 3159552 block14_sepconv1_act[0][0]

__________________________________________________________________________________________________

block14_sepconv2_bn (BatchNorma (None, 2, 2, 2048) 8192 block14_sepconv2[0][0]

__________________________________________________________________________________________________

block14_sepconv2_act (Activatio (None, 2, 2, 2048) 0 block14_sepconv2_bn[0][0]

__________________________________________________________________________________________________

avg_pool (GlobalAveragePooling2 (None, 2048) 0 block14_sepconv2_act[0][0]

__________________________________________________________________________________________________

predictions (Dense) (None, 7) 14343 avg_pool[0][0]

==================================================================================================

Total params: 20,875,247

Trainable params: 20,820,719

Non-trainable params: 54,528

__________________________________________________________________________________________________

Some definitions in my project

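# Define the callbacks (some of the lower-level functions that Callbacks use).

# Callbacks let you inspect the model's internal state and statistics during training.

# base_path = 'models/'. Why is the _emotion_training file not being written (0 KB)? Is it stuck in some intermediate buffer?

# (Most likely not: CSVLogger creates models/_emotion_training.log when training starts but only writes a row at the end of each epoch, so the file stays at 0 KB until the first epoch finishes.)

# 1. Define the log file path.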

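# 2. CSVLogger: a callback that streams the results of each epoch to a CSV file.

# Argument 1: filename=log_file_path, the name of the CSV file, e.g. 'run/log.csv'.

# Argument 2: append=False overwrites an existing file.

# 3. EarlyStopping: stops training once the monitored quantity stops improving.

# Argument 1: monitor='val_loss', the quantity to monitor.

# Argument 2: patience, the number of epochs with no improvement after which training is stopped.

# 4. ReduceLROnPlateau: reduces the learning rate when the monitored metric stops improving.

# Argument 1: monitor='val_loss', the quantity to monitor.

# Argument 2: factor=0.1, the factor by which the learning rate is reduced: new_lr = lr * factor.

# Argument 3: patience=int(patience/4), i.e. int(50/4) = 12, the number of epochs with no improvement after which the learning rate is reduced.

# Argument 4: verbose, an integer. 0: quiet, 1: print update messages.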

log_file_path = base_path + '_emotion_training.log'

csv_logger = CSVLogger(log_file_path, append=False)

early_stop = EarlyStopping('val_loss', patience=patience)

reduce_lr = ReduceLROnPlateau('val_loss', factor=0.1,
                              patience=int(patience/4),
                              verbose=1)
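EarlyStopping(patience=50) and ReduceLROnPlateau(patience=12, factor=0.1) both watch val_loss. You can see how they interact by replaying a val_loss history through a simplified version of their bookkeeping.

This is a sketch, not the Keras source, and it rests on several assumptions:
- Cooldown and min_lr are ignored.
- Adam's default initial learning rate is taken to be 0.001.
- min_delta is 0 for EarlyStopping and 1e-4 for ReduceLROnPlateau, which are believed to be the Keras 2.x defaults.

The function name replay_callbacks is hypothetical.

```python
def replay_callbacks(val_losses, es_patience=50, lr_patience=12, factor=0.1,
                     lr=0.001, lr_min_delta=1e-4):
    """Simplified replay of EarlyStopping + ReduceLROnPlateau on a val_loss history.

    Returns (lrs, stop_epoch): lrs[i] is the learning rate in effect after epoch i+1
    ends; stop_epoch is the 1-based epoch at which EarlyStopping would fire, or None.
    The default lr=0.001 is an assumption (Adam's default); cooldown and min_lr are ignored.
    """
    es_best = lr_best = float('inf')
    es_wait = lr_wait = 0
    lrs = []
    for epoch, loss in enumerate(val_losses, start=1):
        # ReduceLROnPlateau: an improvement must beat the best value by lr_min_delta
        if loss < lr_best - lr_min_delta:
            lr_best, lr_wait = loss, 0
        else:
            lr_wait += 1
            if lr_wait >= lr_patience:
                lr *= factor
                lr_wait = 0
        lrs.append(lr)
        # EarlyStopping with min_delta=0: any decrease counts as an improvement
        if loss < es_best:
            es_best, es_wait = loss, 0
        else:
            es_wait += 1
            if es_wait >= es_patience:
                return lrs, epoch
    return lrs, None


# val_loss for the 23 epochs shown in the training log further down
logged_val_loss = [1.9674, 3.1111, 1.3311, 1.4253, 1.1800, 1.4678, 1.2607, 2.4143,
                   1.1573, 4.0526, 1.1778, 1.1783, 1.0890, 1.0631, 1.0599, 1.0576,
                   1.0592, 1.1557, 1.0356, 1.0222, 1.0285, 1.1531, 1.0245]
lrs, stop_epoch = replay_callbacks(logged_val_loss)
print(lrs[-1], stop_epoch)  # 0.001 None
```

In the logged run, the longest stretch without a val_loss improvement is 3 epochs. Neither callback comes close to firing, so the learning rate is never reduced. A completely flat history would lower the learning rate every 12 epochs and stop training at epoch 51.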

# Parameter definitions for ModelCheckpoint: where the model is saved and how the files are named

trained_models_path = base_path + 'XCEPTION'

model_names = trained_models_path + '.{epoch:02d}-{val_acc:.2f}.hdf5'  # 'val_acc' is the key in Keras 2.2.x; TF 2.x logs it as 'val_accuracy'
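ModelCheckpoint fills the placeholders in model_names with Python's str.format. It passes the 1-based epoch number and the metrics logged for that epoch.

The sketch below reproduces the first checkpoint name from the training log using plain str.format. It also shows why the same template breaks when the logged keys are named the TF 2.x way.

```python
base_path = 'models/'
trained_models_path = base_path + 'XCEPTION'
model_names = trained_models_path + '.{epoch:02d}-{val_acc:.2f}.hdf5'

# Epoch-1 metrics from the training log; Keras counts epochs from 0 internally
# and formats the path with epoch + 1 plus the logged metrics
keras22_logs = {'loss': 1.6659, 'acc': 0.3397, 'val_loss': 1.9674, 'val_acc': 0.3526}
filename = model_names.format(epoch=0 + 1, **keras22_logs)
print(filename)  # models/XCEPTION.01-0.35.hdf5

# With TF 2.x key names there is nothing to fill the {val_acc} placeholder
tf2_logs = {'loss': 1.6659, 'accuracy': 0.3397, 'val_loss': 1.9674, 'val_accuracy': 0.3526}
try:
    model_names.format(epoch=1, **tf2_logs)
    missing_key = None
except KeyError as err:
    missing_key = err.args[0]
print(missing_key)  # val_acc
```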

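# 1. ModelCheckpoint parameters (used in callbacks): ModelCheckpoint saves the model after every epoch.

# Argument 1: filepath=model_names, a string, the path to save the model to.

# Argument 2: monitor='val_loss', the quantity to monitor.

# Argument 3: verbose (0 or 1), verbosity mode.

# Argument 4: save_best_only=True saves a model only when the monitored quantity improves, so the latest best model is never overwritten by a worse one.

# 2. callbacks: a list of callback functions invoked at specific stages of training.

# callbacks is passed as an argument to fit_generator when training the model.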

model_checkpoint = ModelCheckpoint(model_names, 'val_loss', verbose=1,
                                   save_best_only=True)

callbacks = [model_checkpoint, csv_logger, early_stop, reduce_lr]

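# Load the dataset with load_fer2013(), already defined in load_and_process.py,

# and preprocess it with preprocess_input(faces), also defined in load_and_process.py.

faces, emotions = load_fer2013()

faces = preprocess_input(faces)

num_samples, num_classes = emotions.shape  # data dimensions; emotions is one-hot with shape (num_samples, 7)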
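The post does not show load_fer2013() or preprocess_input().

The sketch below substitutes random images for FER2013. It uses a hypothetical preprocess_input_sketch that scales pixels to [-1, 1]. That is a common choice for this kind of project, but it is an assumption here: the project's own function may differ.

What the sketch is meant to show are the shapes the surrounding lines rely on:
- The images are 48×48×1.
- The labels must be one-hot with shape (num_samples, 7). That is what lets emotions.shape unpack into two values and what categorical_crossentropy expects.

```python
import numpy as np

def preprocess_input_sketch(x, v2=True):
    # Hypothetical stand-in for preprocess_input(): uint8 pixels -> [0, 1] -> [-1, 1]
    x = x.astype('float32') / 255.0
    if v2:
        x = (x - 0.5) * 2.0
    return x

# Random data in place of FER2013: 5 grayscale 48x48 faces with integer labels 0..6
rng = np.random.default_rng(0)
faces = rng.integers(0, 256, size=(5, 48, 48, 1), dtype=np.uint8)
labels = np.array([0, 3, 6, 3, 1])
emotions = np.eye(7, dtype='float32')[labels]   # one-hot labels, shape (5, 7)

faces = preprocess_input_sketch(faces)
num_samples, num_classes = emotions.shape       # requires 2-D one-hot labels
print(faces.shape, faces.min() >= -1.0, faces.max() <= 1.0, num_samples, num_classes)
```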

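# Split into training and test sets; the test set is passed to fit_generator as validation_data.

xtrain, xtest, ytrain, ytest = train_test_split(faces, emotions, test_size=0.2, shuffle=True)

# Data augmentation: an image generator that augments the data batch by batch, enlarging the effective size of the dataset.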

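# ImageDataGenerator generates batches of tensor image data with real-time data augmentation. The data is looped over indefinitely, in batches.

# Argument 1: featurewise_center, boolean. Sets the input mean to 0 over the dataset, feature-wise.

# Argument 2: featurewise_std_normalization, boolean. Divides inputs by the standard deviation of the dataset, feature-wise.

# Argument 3: rotation_range, integer. Degree range for random rotations.

# Argument 4: width_shift_range, float, 1-D array-like or integer.

# As a float: a fraction of the total width if < 1, or a number of pixels if >= 1.

# Argument 5: height_shift_range, float, 1-D array-like or integer (same semantics, applied to the height).

# Argument 6: zoom_range, float or [lower, upper]. Range for random zoom. If a float, [lower, upper] = [1 - zoom_range, 1 + zoom_range].

# Argument 7: horizontal_flip, boolean. Randomly flips inputs horizontally.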
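For 48×48 inputs, the augmentation arguments above translate into concrete ranges.

The sketch below converts the settings into pixel and zoom ranges. It then draws one random parameter set, roughly the way ImageDataGenerator samples them. This is a simplification: Keras then combines these values into a single affine transform, which is not reproduced here.

```python
import numpy as np

input_shape = (48, 48, 1)
rotation_range = 10
width_shift_range = 0.1
height_shift_range = 0.1
zoom_range = .1

h, w = input_shape[:2]
# Float shift ranges < 1 are fractions of the image size
max_dx = width_shift_range * w    # about 4.8 px
max_dy = height_shift_range * h   # about 4.8 px
# A float zoom_range z means zoom factors are drawn from [1 - z, 1 + z]
zoom_lo, zoom_hi = 1 - zoom_range, 1 + zoom_range

# Draw one random set of transform parameters, uniform within each range
rng = np.random.default_rng(42)
theta = rng.uniform(-rotation_range, rotation_range)   # rotation in degrees
dx = rng.uniform(-max_dx, max_dx)
dy = rng.uniform(-max_dy, max_dy)
zx, zy = rng.uniform(zoom_lo, zoom_hi, size=2)
flip = rng.random() < 0.5                              # horizontal_flip: 50% chance

# A horizontal flip of an H x W x C image just reverses the width axis
img = np.arange(h * w, dtype='float32').reshape(h, w, 1)
out = img[:, ::-1, :] if flip else img
print(round(theta, 2), round(dx, 2), round(dy, 2), round(zx, 3), round(zy, 3), flip)
```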

data_generator = ImageDataGenerator(
    featurewise_center=False,
    featurewise_std_normalization=False,
    rotation_range=10,
    width_shift_range=0.1,
    height_shift_range=0.1,
    zoom_range=.1,
    horizontal_flip=True)

Note: to plot the accuracy and loss curves later, remember to assign the result of training to the history variable.

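# Training with data augmentation, batch_size = 32

# fit_generator trains the model batch by batch on data yielded by a generator. The generator runs in parallel with the model for efficiency.

# Argument 1: data_generator.flow(xtrain, ytrain, batch_size), a generator that yields (inputs, targets) tuples of batch_size samples each.

# Argument 2: steps_per_epoch, the total number of steps (batches) drawn from the generator before one epoch is declared finished and the next one starts.

# It should normally be the number of training samples divided by the batch size, rounded up to an integer: ceil(len(xtrain) / batch_size).

# Argument 3: epochs, an integer, the total number of epochs to train. One epoch is one pass over the entire data provided.

# epochs=num_epochs (num_epochs = 10000); EarlyStopping is expected to end training long before that.

# Argument 4: verbose, 0, 1 or 2. Logging mode: 0 = silent, 1 = progress bar, 2 = one line per epoch. verbose=1 shows a progress bar.

# Argument 5: callbacks, a list of keras.callbacks.Callback instances to call during training.

# Argument 6: validation_data=(xtest, ytest), data on which the loss and any model metrics are evaluated at the end of each epoch. The model is not trained on this data.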
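The arithmetic behind steps_per_epoch can be worked through explicitly. This assumes load_fer2013() returns all 35,887 FER2013 images. That count is an assumption, since the loader is not shown.

```python
import math

n_total = 35887   # assumption: all FER2013 images (load_fer2013 is not shown)
test_size = 0.2
batch_size = 32

# sklearn's train_test_split rounds the test count up and gives the rest to training
n_test = math.ceil(n_total * test_size)
n_train = n_total - n_test

steps_float = n_train / batch_size           # what len(xtrain) / batch_size evaluates to
steps_int = math.ceil(n_train / batch_size)  # whole batches needed to cover every sample once
print(n_train, n_test, steps_float, steps_int)  # 28709 7178 897.15625 898
```

Keras keeps drawing batches while its step counter is below steps_per_epoch. A float of 897.16 therefore yields 898 batches per epoch, while the progress bar prints the target as 897. This is most likely where the "898/897" in the training log comes from. Passing an integer avoids the mismatch.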

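# (In TF 2.x, fit_generator is deprecated; model.fit accepts the generator directly.)
history = model.fit_generator(data_generator.flow(xtrain, ytrain, batch_size),
                              # Integer ceiling of len(xtrain) / batch_size. The original run passed
                              # the float value, which is most likely why the log shows 898/897.
                              steps_per_epoch=(len(xtrain) + batch_size - 1) // batch_size,
                              epochs=num_epochs,
                              verbose=1, callbacks=callbacks,
                              validation_data=(xtest, ytest))

Output: the training log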

WARNING:tensorflow:From C:\Users\asus\Anaconda3\envs\Face\lib\site-packages\tensorflow\python\ops\math_ops.py:3066: to_int32 (from tensorflow.python.ops.math_ops) is deprecated and will be removed in a future version.

Instructions for updating:

Use tf.cast instead.

Epoch 1/10000

898/897 [==============================] - 253s 282ms/step - loss: 1.6659 - acc: 0.3397 - val_loss: 1.9674 - val_acc: 0.3526

Epoch 00001: val_loss improved from inf to 1.96741, saving model to models/XCEPTION.01-0.35.hdf5

Epoch 2/10000

898/897 [==============================] - 232s 258ms/step - loss: 1.4373 - acc: 0.4454 - val_loss: 3.1111 - val_acc: 0.3649

Epoch 00002: val_loss did not improve from 1.96741

Epoch 3/10000

898/897 [==============================] - 232s 258ms/step - loss: 1.3367 - acc: 0.4948 - val_loss: 1.3311 - val_acc: 0.5010

Epoch 00003: val_loss improved from 1.96741 to 1.33113, saving model to models/XCEPTION.03-0.50.hdf5

Epoch 4/10000

898/897 [==============================] - 234s 260ms/step - loss: 1.2640 - acc: 0.5266 - val_loss: 1.4253 - val_acc: 0.5006

Epoch 00004: val_loss did not improve from 1.33113

Epoch 5/10000

898/897 [==============================] - 234s 261ms/step - loss: 1.2270 - acc: 0.5398 - val_loss: 1.1800 - val_acc: 0.5614

Epoch 00005: val_loss improved from 1.33113 to 1.17996, saving model to models/XCEPTION.05-0.56.hdf5

Epoch 6/10000

898/897 [==============================] - 232s 258ms/step - loss: 1.1928 - acc: 0.5538 - val_loss: 1.4678 - val_acc: 0.5587

Epoch 00006: val_loss did not improve from 1.17996

Epoch 7/10000

898/897 [==============================] - 233s 259ms/step - loss: 1.2171 - acc: 0.5520 - val_loss: 1.2607 - val_acc: 0.5194

Epoch 00007: val_loss did not improve from 1.17996

Epoch 8/10000

898/897 [==============================] - 235s 262ms/step - loss: 1.2409 - acc: 0.5437 - val_loss: 2.4143 - val_acc: 0.4160

Epoch 00008: val_loss did not improve from 1.17996

Epoch 9/10000

898/897 [==============================] - 235s 262ms/step - loss: 1.1859 - acc: 0.5568 - val_loss: 1.1573 - val_acc: 0.5697

Epoch 00009: val_loss improved from 1.17996 to 1.15731, saving model to models/XCEPTION.09-0.57.hdf5

Epoch 10/10000

898/897 [==============================] - 237s 263ms/step - loss: 1.1067 - acc: 0.5838 - val_loss: 4.0526 - val_acc: 0.4299

Epoch 00010: val_loss did not improve from 1.15731

Epoch 11/10000

898/897 [==============================] - 237s 264ms/step - loss: 1.0921 - acc: 0.5913 - val_loss: 1.1778 - val_acc: 0.5471

Epoch 00011: val_loss did not improve from 1.15731

Epoch 12/10000

898/897 [==============================] - 237s 264ms/step - loss: 1.0740 - acc: 0.5936 - val_loss: 1.1783 - val_acc: 0.5600

Epoch 00012: val_loss did not improve from 1.15731

Epoch 13/10000

898/897 [==============================] - 236s 262ms/step - loss: 1.0592 - acc: 0.6000 - val_loss: 1.0890 - val_acc: 0.5977

Epoch 00013: val_loss improved from 1.15731 to 1.08896, saving model to models/XCEPTION.13-0.60.hdf5

Epoch 14/10000

898/897 [==============================] - 234s 261ms/step - loss: 1.0431 - acc: 0.6058 - val_loss: 1.0631 - val_acc: 0.5954

Epoch 00014: val_loss improved from 1.08896 to 1.06306, saving model to models/XCEPTION.14-0.60.hdf5

Epoch 15/10000

898/897 [==============================] - 234s 261ms/step - loss: 1.0290 - acc: 0.6111 - val_loss: 1.0599 - val_acc: 0.6004

Epoch 00015: val_loss improved from 1.06306 to 1.05987, saving model to models/XCEPTION.15-0.60.hdf5

Epoch 16/10000

898/897 [==============================] - 234s 261ms/step - loss: 1.0108 - acc: 0.6183 - val_loss: 1.0576 - val_acc: 0.6030

Epoch 00016: val_loss improved from 1.05987 to 1.05763, saving model to models/XCEPTION.16-0.60.hdf5

Epoch 17/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.9956 - acc: 0.6226 - val_loss: 1.0592 - val_acc: 0.6127

Epoch 00017: val_loss did not improve from 1.05763

Epoch 18/10000

898/897 [==============================] - 235s 262ms/step - loss: 0.9908 - acc: 0.6273 - val_loss: 1.1557 - val_acc: 0.5561

Epoch 00018: val_loss did not improve from 1.05763

Epoch 19/10000

898/897 [==============================] - 235s 262ms/step - loss: 0.9916 - acc: 0.6267 - val_loss: 1.0356 - val_acc: 0.6177

Epoch 00019: val_loss improved from 1.05763 to 1.03557, saving model to models/XCEPTION.19-0.62.hdf5

Epoch 20/10000

898/897 [==============================] - 232s 259ms/step - loss: 0.9775 - acc: 0.6308 - val_loss: 1.0222 - val_acc: 0.6134

Epoch 00020: val_loss improved from 1.03557 to 1.02222, saving model to models/XCEPTION.20-0.61.hdf5

Epoch 21/10000

898/897 [==============================] - 233s 259ms/step - loss: 0.9570 - acc: 0.6389 - val_loss: 1.0285 - val_acc: 0.6223

Epoch 00021: val_loss did not improve from 1.02222

Epoch 22/10000

898/897 [==============================] - 234s 260ms/step - loss: 0.9470 - acc: 0.6449 - val_loss: 1.1531 - val_acc: 0.5963

Epoch 00022: val_loss did not improve from 1.02222

Epoch 23/10000

898/897 [==============================] - 234s 260ms/step - loss: 0.9268 - acc: 0.6508 - val_loss: 1.0245 - val_acc: 0.6297

Epoch 00023: val_loss did not improve from 1.02222

Epoch 24/10000

898/897 [==============================] - 233s 260ms/step - loss: 0.9150 - acc: 0.6570 - val_loss: 0.9913 - val_acc: 0.6385

Epoch 00024: val_loss improved from 1.02222 to 0.99130, saving model to models/XCEPTION.24-0.64.hdf5

Epoch 25/10000

898/897 [==============================] - 232s 258ms/step - loss: 0.9081 - acc: 0.6595 - val_loss: 0.9774 - val_acc: 0.6383

Epoch 00025: val_loss improved from 0.99130 to 0.97737, saving model to models/XCEPTION.25-0.64.hdf5

Epoch 26/10000

898/897 [==============================] - 233s 259ms/step - loss: 0.8984 - acc: 0.6598 - val_loss: 1.0867 - val_acc: 0.6198

Epoch 00026: val_loss did not improve from 0.97737

Epoch 27/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.8808 - acc: 0.6669 - val_loss: 1.0477 - val_acc: 0.6183

Epoch 00027: val_loss did not improve from 0.97737

Epoch 28/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.8728 - acc: 0.6712 - val_loss: 0.9732 - val_acc: 0.6396

Epoch 00028: val_loss improved from 0.97737 to 0.97319, saving model to models/XCEPTION.28-0.64.hdf5

Epoch 29/10000

898/897 [==============================] - 233s 259ms/step - loss: 0.8654 - acc: 0.6722 - val_loss: 1.1426 - val_acc: 0.6031

Epoch 00029: val_loss did not improve from 0.97319

Epoch 30/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.8746 - acc: 0.6709 - val_loss: 0.9778 - val_acc: 0.6411

Epoch 00030: val_loss did not improve from 0.97319

Epoch 31/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.8348 - acc: 0.6852 - val_loss: 0.9817 - val_acc: 0.6461

Epoch 00031: val_loss did not improve from 0.97319

Epoch 32/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.8343 - acc: 0.6871 - val_loss: 0.9618 - val_acc: 0.6467

Epoch 00032: val_loss improved from 0.97319 to 0.96177, saving model to models/XCEPTION.32-0.65.hdf5

Epoch 33/10000

898/897 [==============================] - 233s 259ms/step - loss: 0.8274 - acc: 0.6890 - val_loss: 0.9642 - val_acc: 0.6402

Epoch 00033: val_loss did not improve from 0.96177

Epoch 34/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.8166 - acc: 0.6934 - val_loss: 0.9959 - val_acc: 0.6365

Epoch 00034: val_loss did not improve from 0.96177

Epoch 35/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.8075 - acc: 0.6965 - val_loss: 1.5719 - val_acc: 0.5956

Epoch 00035: val_loss did not improve from 0.96177

Epoch 36/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.8008 - acc: 0.6971 - val_loss: 1.0286 - val_acc: 0.6360

Epoch 00036: val_loss did not improve from 0.96177

Epoch 37/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.7892 - acc: 0.7050 - val_loss: 0.9681 - val_acc: 0.6471

Epoch 00037: val_loss did not improve from 0.96177

Epoch 38/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.7820 - acc: 0.7065 - val_loss: 0.9752 - val_acc: 0.6442

Epoch 00038: val_loss did not improve from 0.96177

Epoch 39/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.7679 - acc: 0.7153 - val_loss: 0.9893 - val_acc: 0.6402

Epoch 00039: val_loss did not improve from 0.96177

Epoch 40/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.7551 - acc: 0.7129 - val_loss: 1.1149 - val_acc: 0.6375

Epoch 00040: val_loss did not improve from 0.96177

Epoch 41/10000

898/897 [==============================] - 234s 260ms/step - loss: 0.7500 - acc: 0.7208 - val_loss: 0.9677 - val_acc: 0.6566

Epoch 00041: val_loss did not improve from 0.96177

Epoch 42/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.7416 - acc: 0.7189 - val_loss: 0.9999 - val_acc: 0.6480

Epoch 00042: val_loss did not improve from 0.96177

Epoch 43/10000

898/897 [==============================] - 234s 260ms/step - loss: 0.7380 - acc: 0.7241 - val_loss: 1.0890 - val_acc: 0.6470

Epoch 00043: val_loss did not improve from 0.96177

Epoch 44/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.7204 - acc: 0.7316 - val_loss: 0.9864 - val_acc: 0.6489

Epoch 00044: val_loss did not improve from 0.96177

Epoch 00044: ReduceLROnPlateau reducing learning rate to 0.00010000000474974513.

Epoch 45/10000

898/897 [==============================] - 233s 259ms/step - loss: 0.6523 - acc: 0.7562 - val_loss: 0.9394 - val_acc: 0.6734

Epoch 00045: val_loss improved from 0.96177 to 0.93938, saving model to models/XCEPTION.45-0.67.hdf5

Epoch 46/10000

898/897 [==============================] - 233s 259ms/step - loss: 0.6199 - acc: 0.7698 - val_loss: 0.9427 - val_acc: 0.6764

Epoch 00046: val_loss did not improve from 0.93938

Epoch 47/10000

898/897 [==============================] - 233s 260ms/step - loss: 0.6029 - acc: 0.7766 - val_loss: 0.9580 - val_acc: 0.6746

Epoch 00047: val_loss did not improve from 0.93938

Epoch 48/10000

898/897 [==============================] - 233s 260ms/step - loss: 0.5912 - acc: 0.7782 - val_loss: 0.9617 - val_acc: 0.6764

Epoch 00048: val_loss did not improve from 0.93938

Epoch 49/10000

898/897 [==============================] - 233s 260ms/step - loss: 0.5886 - acc: 0.7799 - val_loss: 0.9505 - val_acc: 0.6794

Epoch 00049: val_loss did not improve from 0.93938

Epoch 50/10000

898/897 [==============================] - 233s 260ms/step - loss: 0.5864 - acc: 0.7809 - val_loss: 0.9720 - val_acc: 0.6751

Epoch 00050: val_loss did not improve from 0.93938

Epoch 51/10000

898/897 [==============================] - 233s 260ms/step - loss: 0.5719 - acc: 0.7861 - val_loss: 0.9792 - val_acc: 0.6732

Epoch 00051: val_loss did not improve from 0.93938

Epoch 52/10000

898/897 [==============================] - 233s 260ms/step - loss: 0.5659 - acc: 0.7892 - val_loss: 0.9878 - val_acc: 0.6726

Epoch 00052: val_loss did not improve from 0.93938

Epoch 53/10000

898/897 [==============================] - 233s 260ms/step - loss: 0.5587 - acc: 0.7914 - val_loss: 1.0260 - val_acc: 0.6702

Epoch 00053: val_loss did not improve from 0.93938

Epoch 54/10000

898/897 [==============================] - 234s 260ms/step - loss: 0.5618 - acc: 0.7908 - val_loss: 0.9862 - val_acc: 0.6729

Epoch 00054: val_loss did not improve from 0.93938

Epoch 55/10000

898/897 [==============================] - 233s 260ms/step - loss: 0.5492 - acc: 0.7957 - val_loss: 0.9880 - val_acc: 0.6760

Epoch 00055: val_loss did not improve from 0.93938

Epoch 56/10000

898/897 [==============================] - 233s 260ms/step - loss: 0.5530 - acc: 0.7917 - val_loss: 0.9726 - val_acc: 0.6783

Epoch 00056: val_loss did not improve from 0.93938

Epoch 57/10000

898/897 [==============================] - 233s 260ms/step - loss: 0.5460 - acc: 0.7957 - val_loss: 1.0289 - val_acc: 0.6711

Epoch 00057: val_loss did not improve from 0.93938

Epoch 00057: ReduceLROnPlateau reducing learning rate to 1.0000000474974514e-05.

Epoch 58/10000

898/897 [==============================] - 233s 260ms/step - loss: 0.5254 - acc: 0.8039 - val_loss: 1.0095 - val_acc: 0.6753

Epoch 00058: val_loss did not improve from 0.93938

Epoch 59/10000

898/897 [==============================] - 233s 260ms/step - loss: 0.5281 - acc: 0.8030 - val_loss: 1.0123 - val_acc: 0.6755

Epoch 00059: val_loss did not improve from 0.93938

Epoch 60/10000

898/897 [==============================] - 233s 260ms/step - loss: 0.5275 - acc: 0.8037 - val_loss: 1.0019 - val_acc: 0.6771

Epoch 00060: val_loss did not improve from 0.93938

Epoch 61/10000

898/897 [==============================] - 233s 260ms/step - loss: 0.5301 - acc: 0.8027 - val_loss: 1.0042 - val_acc: 0.6767

Epoch 00061: val_loss did not improve from 0.93938

Epoch 62/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5250 - acc: 0.8060 - val_loss: 1.0349 - val_acc: 0.6716

Epoch 00062: val_loss did not improve from 0.93938

Epoch 63/10000

898/897 [==============================] - 235s 261ms/step - loss: 0.5296 - acc: 0.8023 - val_loss: 1.0028 - val_acc: 0.6757

Epoch 00063: val_loss did not improve from 0.93938

Epoch 64/10000

898/897 [==============================] - 235s 261ms/step - loss: 0.5255 - acc: 0.8042 - val_loss: 1.0151 - val_acc: 0.6751

Epoch 00064: val_loss did not improve from 0.93938

Epoch 65/10000

898/897 [==============================] - 235s 261ms/step - loss: 0.5244 - acc: 0.8045 - val_loss: 1.0187 - val_acc: 0.6730

Epoch 00065: val_loss did not improve from 0.93938

Epoch 66/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5204 - acc: 0.8068 - val_loss: 1.0198 - val_acc: 0.6721

Epoch 00066: val_loss did not improve from 0.93938

Epoch 67/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5224 - acc: 0.8070 - val_loss: 1.0318 - val_acc: 0.6707

Epoch 00067: val_loss did not improve from 0.93938

Epoch 68/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5231 - acc: 0.8062 - val_loss: 1.0202 - val_acc: 0.6722

Epoch 00068: val_loss did not improve from 0.93938

Epoch 69/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5227 - acc: 0.8071 - val_loss: 1.0177 - val_acc: 0.6723

Epoch 00069: val_loss did not improve from 0.93938

Epoch 00069: ReduceLROnPlateau reducing learning rate to 1.0000000656873453e-06.

Epoch 70/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5209 - acc: 0.8068 - val_loss: 1.0175 - val_acc: 0.6734

Epoch 00070: val_loss did not improve from 0.93938

Epoch 71/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5187 - acc: 0.8069 - val_loss: 1.0146 - val_acc: 0.6737

Epoch 00071: val_loss did not improve from 0.93938

Epoch 72/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5187 - acc: 0.8070 - val_loss: 1.0090 - val_acc: 0.6743

Epoch 00072: val_loss did not improve from 0.93938

Epoch 73/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5169 - acc: 0.8086 - val_loss: 1.0193 - val_acc: 0.6725

Epoch 00073: val_loss did not improve from 0.93938

Epoch 74/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5190 - acc: 0.8039 - val_loss: 1.0111 - val_acc: 0.6746

Epoch 00074: val_loss did not improve from 0.93938

Epoch 75/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5277 - acc: 0.8041 - val_loss: 1.0150 - val_acc: 0.6747

Epoch 00075: val_loss did not improve from 0.93938

Epoch 76/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5196 - acc: 0.8078 - val_loss: 1.0149 - val_acc: 0.6737

Epoch 00076: val_loss did not improve from 0.93938

Epoch 77/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5190 - acc: 0.8070 - val_loss: 1.0311 - val_acc: 0.6708

Epoch 00077: val_loss did not improve from 0.93938

Epoch 78/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5117 - acc: 0.8096 - val_loss: 1.0127 - val_acc: 0.6744

Epoch 00078: val_loss did not improve from 0.93938

Epoch 79/10000

898/897 [==============================] - 234s 260ms/step - loss: 0.5156 - acc: 0.8066 - val_loss: 1.0194 - val_acc: 0.6722

Epoch 00079: val_loss did not improve from 0.93938

Epoch 80/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5245 - acc: 0.8001 - val_loss: 1.0184 - val_acc: 0.6712

Epoch 00080: val_loss did not improve from 0.93938

Epoch 81/10000

898/897 [==============================] - 234s 260ms/step - loss: 0.5150 - acc: 0.8093 - val_loss: 1.0117 - val_acc: 0.6744

Epoch 00081: val_loss did not improve from 0.93938

Epoch 00081: ReduceLROnPlateau reducing learning rate to 1.0000001111620805e-07.

Epoch 82/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5241 - acc: 0.8075 - val_loss: 1.0180 - val_acc: 0.6733

Epoch 00082: val_loss did not improve from 0.93938

Epoch 83/10000

898/897 [==============================] - 234s 260ms/step - loss: 0.5098 - acc: 0.8085 - val_loss: 1.0125 - val_acc: 0.6746

Epoch 00083: val_loss did not improve from 0.93938

Epoch 84/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5175 - acc: 0.8051 - val_loss: 1.0328 - val_acc: 0.6700

Epoch 00084: val_loss did not improve from 0.93938

Epoch 85/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5203 - acc: 0.8064 - val_loss: 1.0094 - val_acc: 0.6755

Epoch 00085: val_loss did not improve from 0.93938

Epoch 86/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5158 - acc: 0.8060 - val_loss: 1.0068 - val_acc: 0.6744

Epoch 00086: val_loss did not improve from 0.93938

Epoch 87/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5209 - acc: 0.8076 - val_loss: 1.0155 - val_acc: 0.6736

Epoch 00087: val_loss did not improve from 0.93938

Epoch 88/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5183 - acc: 0.8058 - val_loss: 1.0295 - val_acc: 0.6707

Epoch 00088: val_loss did not improve from 0.93938

Epoch 89/10000

898/897 [==============================] - 234s 260ms/step - loss: 0.5206 - acc: 0.8086 - val_loss: 1.0127 - val_acc: 0.6744

Epoch 00089: val_loss did not improve from 0.93938

Epoch 90/10000

898/897 [==============================] - 233s 260ms/step - loss: 0.5220 - acc: 0.8059 - val_loss: 1.0149 - val_acc: 0.6739

Epoch 00090: val_loss did not improve from 0.93938

Epoch 91/10000

898/897 [==============================] - 233s 260ms/step - loss: 0.5212 - acc: 0.8060 - val_loss: 1.0130 - val_acc: 0.6743

Epoch 00091: val_loss did not improve from 0.93938

Epoch 92/10000

898/897 [==============================] - 233s 260ms/step - loss: 0.5156 - acc: 0.8068 - val_loss: 1.0169 - val_acc: 0.6732

Epoch 00092: val_loss did not improve from 0.93938

Epoch 93/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5128 - acc: 0.8084 - val_loss: 1.0116 - val_acc: 0.6740

Epoch 00093: val_loss did not improve from 0.93938

Epoch 00093: ReduceLROnPlateau reducing learning rate to 1.000000082740371e-08.

Epoch 94/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5259 - acc: 0.8028 - val_loss: 1.0192 - val_acc: 0.6730

Epoch 00094: val_loss did not improve from 0.93938

Epoch 95/10000

898/897 [==============================] - 234s 261ms/step - loss: 0.5207 - acc: 0.8085 - val_loss: 1.0285 - val_acc: 0.6709

Epoch 00095: val_loss did not improve from 0.93938

Training curves

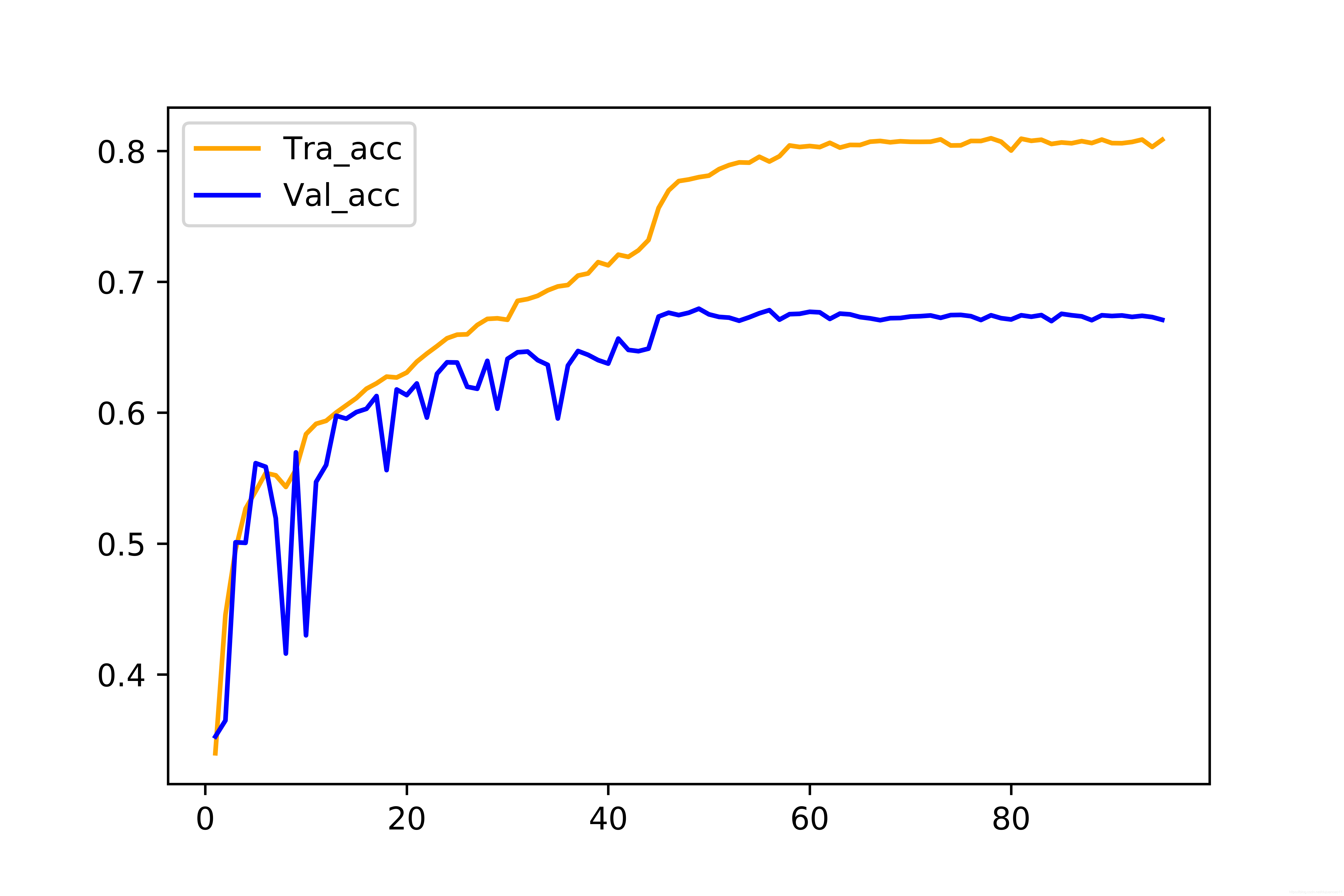

import matplotlib.pyplot as plt  # required

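history_dict = history.history  # `history` is assumed to hold the return value of the training call (model.fit_generator(...))

acc = history_dict['acc']  # this key is 'accuracy' in TensorFlow 2.x Keras

val_acc = history_dict['val_acc']  # 'val_accuracy' in TensorFlow 2.x Keras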

epochs = range(1, len(val_acc) + 1)

plt.plot(epochs, acc, color='orange', label='Tra_acc')  # training curve in orange; the old 'b' format string conflicted with color=

plt.plot(epochs, val_acc, 'b', label='Val_acc')

plt.legend()

plt.savefig('E:/dujuan_papers/result/X1000_acc.png',dpi=1000)

plt.show()

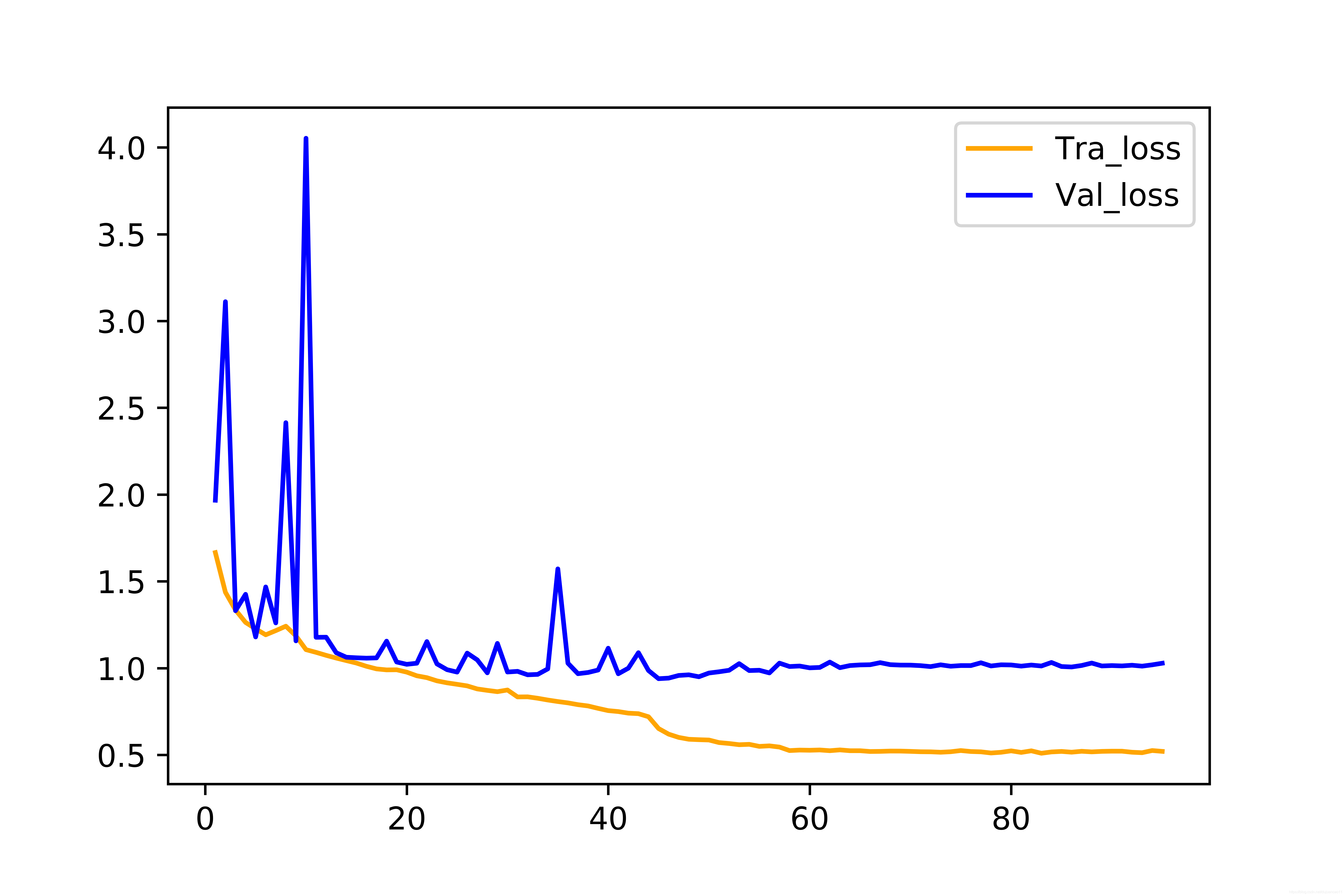

loss = history_dict['loss']

val_loss = history_dict['val_loss']

epochs = range(1, len(loss) + 1)

plt.plot(epochs, loss, color='orange', label='Tra_loss')  # training curve in orange; the old 'b' format string conflicted with color=

plt.plot(epochs, val_loss, 'b', label='Val_loss')

plt.legend()

plt.savefig('E:/dujuan_papers/result/X1000_loss.png',dpi=1000)

plt.show()

#### End
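The curves show training accuracy still climbing at the end of the run (0.8085 at epoch 95) while validation accuracy levels off around 0.67 (0.6709 at epoch 95). Rather than reading the best epoch off the plot, it can be pulled from the same `history_dict`. The helper below is a minimal sketch. `summarize_history` is a hypothetical name, not a Keras function. It assumes the dict has the `'loss'`, `'val_loss'`, `'acc'` and `'val_acc'` keys seen in this log. With TensorFlow 2.x Keras, pass `acc_key='accuracy'` instead.

```python
def summarize_history(history_dict, acc_key='acc'):
    """Report the epoch with the lowest val_loss from a Keras History.history dict.

    Hypothetical helper. Assumes equal-length per-epoch lists under
    'val_loss', acc_key and 'val_' + acc_key (index 0 is epoch 1).
    """
    val_loss = history_dict['val_loss']
    # Index of the smallest validation loss (first one on ties). With
    # ModelCheckpoint(monitor='val_loss', save_best_only=True), as the log
    # suggests, this is the epoch of the last checkpoint written.
    best_idx = min(range(len(val_loss)), key=val_loss.__getitem__)
    train_acc = history_dict[acc_key]
    val_acc = history_dict['val_' + acc_key]
    return {
        'best_epoch': best_idx + 1,          # 1-based, like the log
        'best_val_loss': val_loss[best_idx],
        'best_val_acc': val_acc[best_idx],
        # Final train/val accuracy gap; a large gap signals overfitting.
        'final_acc_gap': train_acc[-1] - val_acc[-1],
    }


if __name__ == '__main__':
    # Tiny hand-made history, only to show the call.
    demo = {'loss': [1.0, 0.8, 0.7], 'val_loss': [1.1, 0.9, 0.95],
            'acc': [0.5, 0.6, 0.7], 'val_acc': [0.45, 0.55, 0.5]}
    print(summarize_history(demo))
```

For the run logged above, this should point to epoch 45 (val_loss about 0.9394, val_acc about 0.6734). That matches the last checkpoint written, `models/XCEPTION.45-0.67.hdf5`. The final gap comes out at roughly 0.14.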

Results
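The log explains why training ended at epoch 95 even though `num_epochs = 10000`. The last improvement in val_loss came at epoch 45 (0.93938). Epoch 95 is exactly `patience = 50` epochs later, which is when EarlyStopping fires. The learning-rate drops at epochs 44, 57, 69, 81 and 93 are 12 epochs apart. Each drop divides the rate by 10. This fits a ReduceLROnPlateau with patience `int(patience / 4) = 12` and factor 0.1. The callback definitions are not shown in this section, so those two values are inferred from the log.

The sketch below is a simplified, hypothetical re-implementation of the two callbacks' waiting logic, not Keras's own code. It leaves out cooldown and `min_lr`. The `min_delta` values are assumed to match the Keras 2.x defaults: 1e-4 for ReduceLROnPlateau and 0 for EarlyStopping.

```python
def simulate_callbacks(val_losses, first_epoch=1, es_patience=50,
                       lr_patience=12, factor=0.1, initial_lr=1e-3,
                       lr_min_delta=1e-4, es_min_delta=0.0):
    """Replay a val_loss sequence through simplified EarlyStopping and
    ReduceLROnPlateau logic (monitor='val_loss', mode='min').

    Returns (reductions, stop_epoch): reductions is a list of
    (epoch, new_lr) pairs; stop_epoch is None if training never stops.
    """
    lr = initial_lr
    lr_best = es_best = float('inf')
    lr_wait = es_wait = 0
    reductions = []
    for epoch, loss in enumerate(val_losses, start=first_epoch):
        # ReduceLROnPlateau: an epoch counts as an improvement only if it
        # beats the best value by more than lr_min_delta.
        if loss < lr_best - lr_min_delta:
            lr_best, lr_wait = loss, 0
        else:
            lr_wait += 1
            if lr_wait >= lr_patience:
                lr *= factor
                reductions.append((epoch, lr))
                lr_wait = 0  # the wait counter restarts after each drop
        # EarlyStopping: stop once es_patience epochs pass without improvement.
        if loss < es_best - es_min_delta:
            es_best, es_wait = loss, 0
        else:
            es_wait += 1
            if es_wait >= es_patience:
                return reductions, epoch
    return reductions, None


if __name__ == '__main__':
    # Synthetic sequence with the same shape as epochs 32-95 of the log:
    # a best value at epoch 32, 12 worse epochs, a new best at epoch 45,
    # then 50 epochs that never beat it.
    pattern = [0.96177] + [1.0] * 12 + [0.93938] + [1.0] * 50
    reductions, stop = simulate_callbacks(pattern, first_epoch=32)
    print([epoch for epoch, _ in reductions], stop)
    # prints: [44, 57, 69, 81, 93] 95
```

Feeding in the real val_loss values for epochs 32-95 gives the same result. None of epochs 33-44 beats 0.96177, and nothing after epoch 45 beats 0.93938. Once the rate falls to around 1e-6 and below, the per-epoch numbers barely change. A smaller EarlyStopping patience would have ended this run much earlier without losing the best checkpoint.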
