上篇中我们获取了重庆的一二级区(Scrapy爬取重庆安居客二手房并存入mysql数据库(上)),这一篇我们根据二级区获取相应的二手房信息。

初始化数据库

创建二手房信息数据库表,house表存放二手房信息,house_price存放价格(定期获取分析价格趋势):

CREATE TABLE `house` (

`id` int UNSIGNED AUTO_INCREMENT,

`area_id` int NOT NULL DEFAULT 0,

`area_code` varchar(255) DEFAULT NULL,

`title` varchar(2000) DEFAULT NULL,

`unit_price` decimal(19,4) NOT NULL DEFAULT 0,

`total_price` decimal(19,4) NOT NULL DEFAULT 0,

`code` varchar(255) DEFAULT NULL,

`community` varchar(255) DEFAULT NULL,

`location` varchar(255) DEFAULT NULL,

`build_years` varchar(255) DEFAULT NULL,

`floor` varchar(255) DEFAULT NULL,

`layout` varchar(255) DEFAULT NULL,

`size` varchar(255) DEFAULT NULL,

`picture_url` varchar(255) DEFAULT NULL,

`url` varchar(1024) DEFAULT NULL,

`created_on` timestamp DEFAULT current_timestamp,

`updated_on` timestamp DEFAULT current_timestamp on update current_timestamp,

PRIMARY KEY (id)

) ENGINE=INNODB DEFAULT CHARSET=utf8;

CREATE TABLE `house_price` (

`id` int UNSIGNED AUTO_INCREMENT,

`house_id` int NOT NULL DEFAULT 0,

`house_code` varchar(255) DEFAULT NULL,

`unit_price` decimal(19,4) NOT NULL DEFAULT 0,

`total_price` decimal(19,4) NOT NULL DEFAULT 0,

`created_on` timestamp DEFAULT current_timestamp,

`updated_on` timestamp DEFAULT current_timestamp on update current_timestamp,

PRIMARY KEY (id)

) ENGINE=INNODB DEFAULT CHARSET=utf8;创建项目

创建二手房scrapy项目:

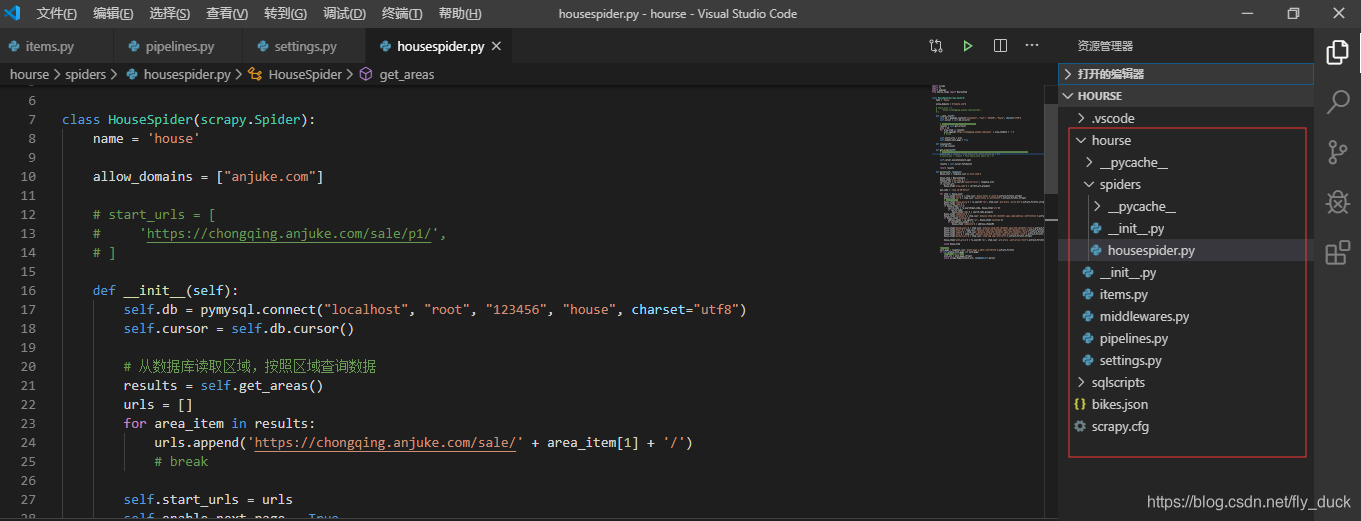

scrapy startproject hourse项目创建成功后,目录结构如下:

定义item

打开items.py文件,定义二手房信息item:

import scrapy

class HourseItem(scrapy.Item):

area_code = scrapy.Field()

title = scrapy.Field()

unit_price = scrapy.Field()

total_price = scrapy.Field()

code = scrapy.Field()

community = scrapy.Field()

location = scrapy.Field()

build_years = scrapy.Field()

floor = scrapy.Field()

layout = scrapy.Field()

size = scrapy.Field()

picture_url = scrapy.Field()

url = scrapy.Field()开发spider

在spiders目录中新建二手房爬虫的spider文件housespider.py,根据页面结构使用css选择器解析处理数据:

import scrapy

import re

import pymysql

from hourse.items import HourseItem

class HouseSpider(scrapy.Spider):

name = 'house'

allow_domains = ["anjuke.com"]

# start_urls = [

# 'https://chongqing.anjuke.com/sale/p1/',

# ]

def __init__(self):

self.db = pymysql.connect("localhost", "root", "123456", "house", charset="utf8")

self.cursor = self.db.cursor()

# 从数据库读取区域,按照区域查询数据

results = self.get_areas()

urls = []

for area_item in results:

urls.append('https://chongqing.anjuke.com/sale/' + area_item[1] + '/')

# break

self.start_urls = urls

self.enable_next_page = True

def close(self):

self.db.close()

def get_areas(self):

# 区域数量太多,会触发人机校验,请求连接会跳转到人工操作页面,这里添加条件只获取部分区域

select_sql = "select * from house_area where parent_id > 0"

# select_sql = "select * from house_area where id = 1"

self.cursor.execute(select_sql)

results = self.cursor.fetchall()

return results

def parse(self, response):

house_list = response.css('li.list-item')

house_item = HourseItem()

house_item['area_code'] = ''

current_url = re.search('sale/([^/]+)/', response.url)

if current_url:

house_item['area_code'] = current_url.group(1)

pat_code = '/([a-zA-Z0-9]+)\?'

for item in house_list:

house_item['title'] = item.css('.house-title a::text').extract_first().strip()

house_item['url'] = item.css('.house-title a::attr(href)').extract_first().strip()

# 总价万为单位

house_item['total_price'] = re.search('\d+', item.css('.pro-price .price-det').extract_first().strip()).group()

house_item['code'] = ''

if house_item['url']:

search_code = re.search(pat_code, house_item['url'])

if search_code:

house_item['code'] = search_code.group(1)

house_item['community'] = ''

house_item['location'] = item.css('.details-item:nth-child(3) span.comm-address::attr(title)').extract_first().replace(u'\xa0', u' ').strip()

if house_item['location']:

address_items = re.split('\s+', house_item['location'])

if len(address_items) > 1:

house_item['community'] = address_items[0]

house_item['build_years'] = item.css('.details-item:nth-child(2) span:nth-child(7)::text').extract_first().strip()

house_item['floor'] = item.css('.details-item:nth-child(2) span:nth-child(5)::text').extract_first().strip()

house_item['layout'] = item.css('.details-item:nth-child(2) span:nth-child(1)::text').extract_first().strip()

house_item['size'] = item.css('.details-item:nth-child(2) span:nth-child(3)::text').extract_first().strip()

house_item['picture_url'] = item.css('.item-img img::attr(src)').extract_first().strip()

house_item['unit_price'] = re.search('\d+', item.css('.pro-price .unit-price::text').extract_first().strip()).group()

yield house_item

#分页操作

next_page = response.css('.multi-page a.aNxt::attr(href)').extract_first()

if self.enable_next_page and next_page:

#构建新的Request对象

next_url = next_page.strip()

yield scrapy.Request(next_url, callback=self.parse)开发pipeline

打开pipelines.py文件,将spider中爬取处理的数据存入数据库:

import pymysql

class HoursePipeline(object):

def __init__(self):

self.db = pymysql.connect("localhost", "root", "123456", "house", charset="utf8")

self.cursor = self.db.cursor()

def process_item(self, item, spider):

select_area_sql = "select id from house_area where code='%s'" % item['area_code']

is_area_exist = self.cursor.execute(select_area_sql)

house_area = self.cursor.fetchone()

area_id = 0

if house_area:

area_id = house_area[0]

select_sql = "select id from house where code='%s'" % item['code']

already_save = self.cursor.execute(select_sql)

house_item = self.cursor.fetchone()

self.db.commit()

if already_save == 1:

# 更新信息

house_id = house_item[0]

update_sql = "update house set title='%s', unit_price='%d', total_price='%d', url='%s' where id='%d'" % (item['title'],int(item['unit_price']),int(item['total_price']),item['url'],int(house_id))

self.cursor.execute(update_sql)

self.db.commit()

# 插入价格

self.add_price(house_id, item['code'], item['unit_price'], item['total_price'])

else:

# 插入信息

sql = "insert into house(area_id,area_code,title,unit_price,total_price,code,community,location,build_years,floor,layout,size,picture_url,url)\

values('%d','%s','%s','%d','%d','%s','%s','%s','%s','%s','%s','%s','%s','%s')"\

%(area_id,item['area_code'],item['title'],int(item['unit_price']),int(item['total_price']),item['code'],item['community'],item['location'],\

item['build_years'],item['floor'], item['layout'],item['size'],item['picture_url'],item['url'])

self.cursor.execute(sql)

house_id = int(self.db.insert_id())

self.db.commit()

# 插入价格

self.add_price(house_id, item['code'], item['unit_price'], item['total_price'])

return item

def add_price(self, house_id, house_code, unit_price, total_price):

sql = "insert into house_price(house_id, house_code, unit_price, total_price)\

values('%d','%s','%d','%d')" % (int(house_id), house_code, int(unit_price), int(total_price))

self.cursor.execute(sql)

self.db.commit()

def __del__(self):

self.db.close()

设置settings

打开settings.py文件,这只项目的USER_AGENT和ITEM_PIPELINES,其余设置保持不变:

USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.97 Safari/537.36'

ITEM_PIPELINES = {

'hourse.pipelines.HoursePipeline': 300,

}启动爬虫

在项目根目录下,在命令行中运行如下命令开始爬虫程序(博主在vscode中项目下直接运行的命令):

scrapy crawl house至此使用scrapy获取重庆的二手房数据已经完成,博主才入坑python,通过该项目学习了scrapy框架和一些基础的python语法。如果有不妥的笛梵,欢迎大家提意见。

项目的Github地址:https://github.com/liuhuifly/scrapy_cqanjuke,数据库初始化脚本在hourse项目的sqlscripts目录下。

声明:本项目仅仅供学习使用,使用该项目从事的一切商业行为与博主无关,自行承担责任。

1158

1158

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?