
For color space conversion, see: https://blog.csdn.net/keith_bb/article/details/53470170

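1. Common filters:

- bilateralFilter(): bilateral filter
- blur(): smoothing filter (normalized box filter)
- boxFilter(): box smoothing filter
- filter2D(): image convolution
- GaussianBlur(): Gaussian smoothing
- medianBlur(): median filter for denoising and smoothing
- Laplacian(): Laplacian (second-order) derivative operator
- Sobel(): first-, second-, and higher-order derivative operator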

2. Filter parameters and usage

bilateralFilter()

C++: void cv::bilateralFilter(InputArray src, OutputArray dst, int d, double sigmaColor, double sigmaSpace, int borderType = BORDER_DEFAULT)

Python: dst = cv.bilateralFilter(src, d, sigmaColor, sigmaSpace[, dst[, borderType]])

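The function applies bilateral filtering to the input image, as described in http://www.dai.ed.ac.uk/CVonline/LOCAL_COPIES/MANDUCHI1/Bilateral_Filtering.html. bilateralFilter can reduce unwanted noise very well while keeping edges fairly sharp. However, it is very slow compared to most filters.

Sigma values: for simplicity, you can set the two sigma values to be the same. If they are small (< 10), the filter will not have much effect, whereas if they are large (> 150), they will have a very strong effect, making the image look "cartoonish".

Filter size: large filters (d > 5) are very slow, so d = 5 is recommended for real-time applications, and perhaps d = 9 for offline applications that need heavy noise filtering.

This filter does not work in place.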

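Parameters

| Parameter | Description |
|---|---|
| src | Source 8-bit or floating-point, 1-channel or 3-channel image. |
| dst | Destination image of the same size and type as src. |
| d | Diameter of each pixel neighborhood used during filtering. If it is non-positive, it is computed from sigmaSpace. |
| sigmaColor | Filter sigma in the color space. A larger value means that farther colors within the pixel neighborhood (see sigmaSpace) are mixed together, producing larger areas of semi-equal color. |
| sigmaSpace | Filter sigma in the coordinate space. A larger value means that farther pixels influence each other as long as their colors are close enough (see sigmaColor). When d > 0, d specifies the neighborhood size regardless of sigmaSpace. Otherwise, d is proportional to sigmaSpace. |
| borderType | Border mode used to extrapolate pixels outside of the image. |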
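
To make the roles of d, sigmaColor, and sigmaSpace more concrete, here is a minimal sketch of a bilateral filter on a 1-D signal in plain C++. It is not OpenCV's implementation: the function name bilateral1D, the Gaussian form of both weights, and the choice to skip out-of-range samples are assumptions made for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Illustrative 1-D bilateral filter (hypothetical helper, not an OpenCV API).
// d is the neighborhood diameter; sigmaColor and sigmaSpace control the two weights.
std::vector<double> bilateral1D(const std::vector<double>& src, int d,
                                double sigmaColor, double sigmaSpace)
{
    assert(d > 0 && sigmaColor > 0.0 && sigmaSpace > 0.0);
    const int radius = d / 2;
    const int n = static_cast<int>(src.size());
    std::vector<double> dst(src.size());
    for (int i = 0; i < n; ++i) {
        double weightedSum = 0.0, weightTotal = 0.0;
        for (int k = -radius; k <= radius; ++k) {
            const int j = i + k;
            if (j < 0 || j >= n) continue;  // assumption: ignore samples outside the signal
            const double colorDiff = src[j] - src[i];
            // Spatial weight decays with distance, range weight with intensity difference.
            const double w =
                std::exp(-(k * k) / (2.0 * sigmaSpace * sigmaSpace)) *
                std::exp(-(colorDiff * colorDiff) / (2.0 * sigmaColor * sigmaColor));
            weightedSum += w * src[j];
            weightTotal += w;
        }
        dst[i] = weightedSum / weightTotal;  // the k == 0 term keeps weightTotal >= 1
    }
    return dst;
}
```

With a small sigmaColor, a step from 10 to 200 survives almost unchanged because samples across the step receive a near-zero weight. With a very large sigmaColor the range weight is close to 1 everywhere, so the filter behaves like an ordinary Gaussian blur and the step is smeared.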

Example

#include <iostream>

#include "opencv2/imgproc.hpp"

#include "opencv2/imgcodecs.hpp"

#include "opencv2/highgui.hpp"

using namespace std;

using namespace cv;

int DELAY_CAPTION = 1500;

int DELAY_BLUR = 100;

int MAX_KERNEL_LENGTH = 31;

Mat src; Mat dst;

char window_name[] = "Smoothing Demo";

int display_caption( const char* caption );

int display_dst( int delay );

int main( int argc, char ** argv )

{

namedWindow( window_name, WINDOW_AUTOSIZE );

const char* filename = argc >=2 ? argv[1] : "../data/lena.jpg";

src = imread( filename, IMREAD_COLOR );

if(src.empty())

{

printf(" Error opening image\n");

printf(" Usage: ./Smoothing [image_name -- default ../data/lena.jpg] \n");

return -1;

}

if( display_caption( "Original Image" ) != 0 )

{

return 0;

}

dst = src.clone();

if( display_dst( DELAY_CAPTION ) != 0 )

{

return 0;

}

if( display_caption( "Homogeneous Blur" ) != 0 )

{

return 0;

}

for ( int i = 1; i < MAX_KERNEL_LENGTH; i = i + 2 )

{

blur( src, dst, Size( i, i ), Point(-1,-1) );

if( display_dst( DELAY_BLUR ) != 0 )

{

return 0;

}

}

if( display_caption( "Gaussian Blur" ) != 0 )

{

return 0;

}

for ( int i = 1; i < MAX_KERNEL_LENGTH; i = i + 2 )

{

GaussianBlur( src, dst, Size( i, i ), 0, 0 );

if( display_dst( DELAY_BLUR ) != 0 )

{

return 0;

}

}

if( display_caption( "Median Blur" ) != 0 )

{

return 0;

}

for ( int i = 1; i < MAX_KERNEL_LENGTH; i = i + 2 )

{

medianBlur ( src, dst, i );

if( display_dst( DELAY_BLUR ) != 0 )

{

return 0;

}

}

if( display_caption( "Bilateral Blur" ) != 0 )

{

return 0;

}

for ( int i = 1; i < MAX_KERNEL_LENGTH; i = i + 2 )

{

bilateralFilter ( src, dst, i, i*2, i/2 );

if( display_dst( DELAY_BLUR ) != 0 )

{

return 0;

}

}

display_caption( "Done!" );

return 0;

}

int display_caption( const char* caption )

{

dst = Mat::zeros( src.size(), src.type() );

putText( dst, caption,

Point( src.cols/4, src.rows/2),

FONT_HERSHEY_COMPLEX, 1, Scalar(255, 255, 255) );

return display_dst(DELAY_CAPTION);

}

int display_dst( int delay )

{

imshow( window_name, dst );

int c = waitKey ( delay );

if( c >= 0 ) { return -1; }

return 0;

}

blur() smoothing

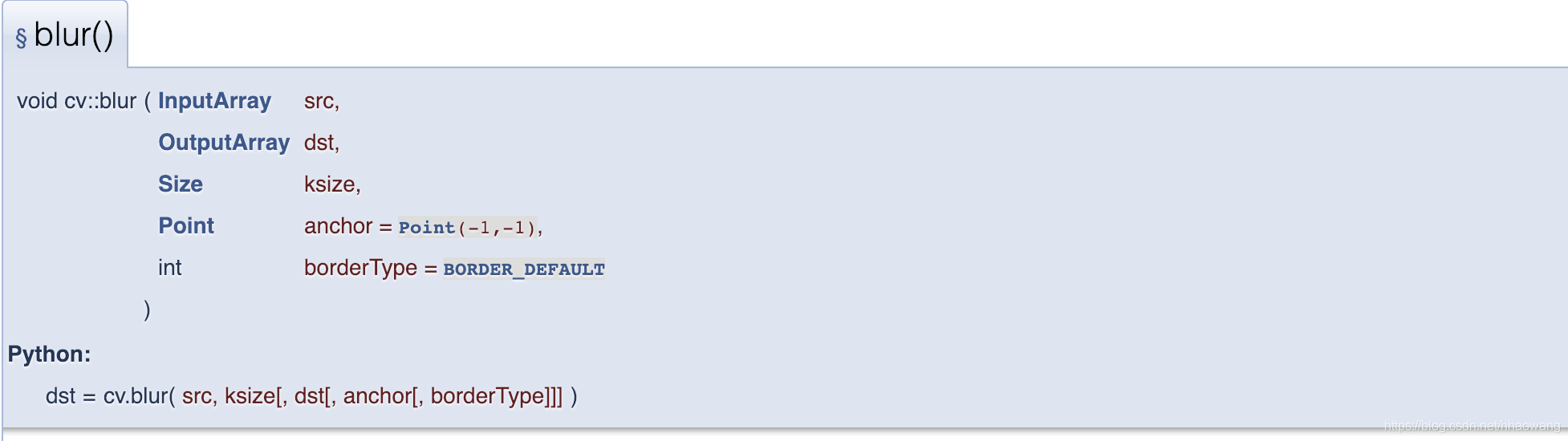

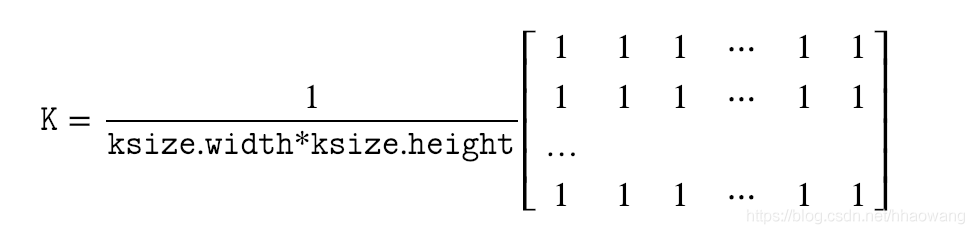

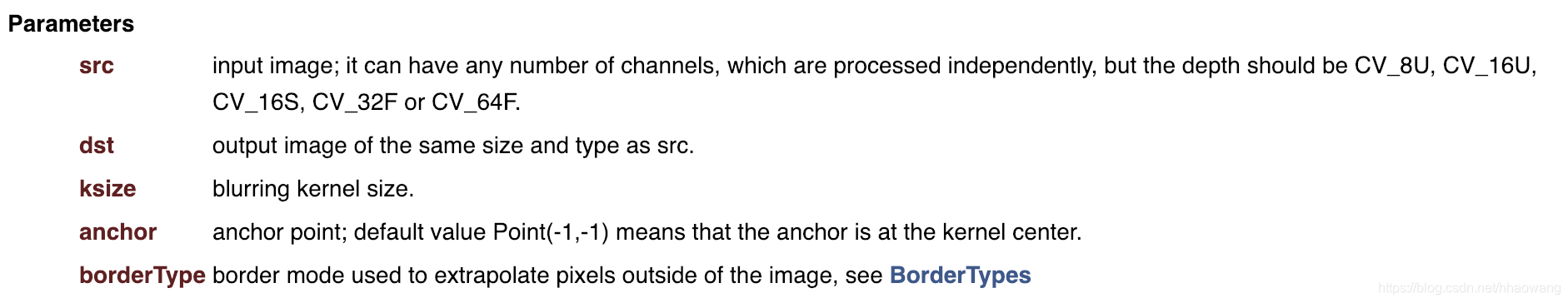

Blurs an image using the normalized box filter.

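The function smooths an image using the kernel:

$$K = \frac{1}{\texttt{ksize.width} \cdot \texttt{ksize.height}} \begin{bmatrix} 1 & 1 & \cdots & 1 \\ \vdots & \vdots & \ddots & \vdots \\ 1 & 1 & \cdots & 1 \end{bmatrix}$$

The call blur(src, dst, ksize, anchor, borderType) is equivalent to boxFilter(src, dst, src.type(), ksize, anchor, true, borderType).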
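
The relationship between blur() and boxFilter() can be sketched on a 1-D signal in plain C++. The helpers boxFilter1D and blur1D are hypothetical names, and clamping indices at the borders is an assumption of this sketch rather than OpenCV's default border mode.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Illustrative 1-D box filter with an optional normalization step
// (hypothetical helper; borders are handled by clamping indices).
std::vector<double> boxFilter1D(const std::vector<double>& src, int ksize, bool normalize)
{
    assert(ksize > 0 && ksize % 2 == 1 && !src.empty());
    const int radius = ksize / 2;
    const int n = static_cast<int>(src.size());
    std::vector<double> dst(src.size());
    for (int i = 0; i < n; ++i) {
        double sum = 0.0;
        for (int k = -radius; k <= radius; ++k) {
            const int j = std::min(std::max(i + k, 0), n - 1);  // replicate the edge sample
            sum += src[j];
        }
        dst[i] = normalize ? sum / ksize : sum;
    }
    return dst;
}

// The normalized special case, mirroring how blur() maps onto boxFilter().
std::vector<double> blur1D(const std::vector<double>& src, int ksize)
{
    return boxFilter1D(src, ksize, true);
}
```

For the input {0, 0, 9, 0, 0} and ksize = 3, the unnormalized filter spreads the spike into window sums of 9, while the normalized version yields the window mean of 3.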

C++ example

/* This program demonstrates usage of the Canny edge detector */

/* include related packages */

#include "opencv2/core/utility.hpp"

#include "opencv2/imgproc.hpp"

#include "opencv2/imgcodecs.hpp"

#include "opencv2/highgui.hpp"

#include <iostream>

using namespace cv; // OpenCV namespace

using namespace std; // standard library namespace

int edgeThresh = 1;

int edgeThreshScharr=1;

cv::Mat image, gray, blurImage, edge1, edge2, cedge;

const char* window_name1 = "Edge map : Canny default (Sobel gradient)";

const char* window_name2 = "Edge map : Canny with custom gradient (Scharr)";

static void onTrackbar(int, void*)

{/* define a trackbar callback, by using onTrackbar function*/

blur(gray, blurImage, Size(3,3));

// Run the edge detector on grayscale

Canny(blurImage, edge1, edgeThresh, edgeThresh*3, 3);

cedge = Scalar::all(0);

image.copyTo(cedge, edge1);

imshow(window_name1, cedge);

Mat dx,dy;

Scharr(blurImage,dx,CV_16S,1,0);

Scharr(blurImage,dy,CV_16S,0,1);

Canny( dx,dy, edge2, edgeThreshScharr, edgeThreshScharr*3 );

cedge = Scalar::all(0);

image.copyTo(cedge, edge2);

imshow(window_name2, cedge);

}//onTrackbar

static void help()

{ /* help and info display */

cout<<"\nThis sample demonstrates Canny edge detection\n"

<<"Call:\n"

<<" ./edge [image_name -- Default is ../data/fruits.jpg]\n"<<endl;

}//help

const char* keys =

{

"{help h||}{@image |../data/fruits.jpg|input image name}"

};

int main( int argc, const char** argv )

{

/* the main function */

help();

CommandLineParser parser(argc, argv, keys);

string filename = parser.get<string>(0);

image = imread(filename, IMREAD_COLOR);

if(image.empty()) // open file check

{

cout<<"Cannot read image file: "

<< filename.c_str()<<endl;

help();

return -1;

}

cedge.create(image.size(), image.type());

cvtColor(image, gray, COLOR_BGR2GRAY);

// Create a window

namedWindow(window_name1, 1);

namedWindow(window_name2, 1);

// create the trackbars

createTrackbar("Canny threshold default", window_name1, &edgeThresh, 100, onTrackbar);

createTrackbar("Canny threshold Scharr", window_name2, &edgeThreshScharr, 400, onTrackbar);

// Show the image

onTrackbar(0, 0);

// Wait for a key stroke; the same function arranges events processing

waitKey(0);

return 0;

}

An example using drawContours to clean up a background segmentation result

#include "opencv2/imgproc.hpp"

#include "opencv2/videoio.hpp"

#include "opencv2/highgui.hpp"

#include "opencv2/video/background_segm.hpp"

#include <stdio.h>

#include <string>

using namespace std;

using namespace cv;

static void help()

{

printf("\n"

"This program demonstrated a simple method of connected components clean up of background subtraction\n"

"When the program starts, it begins learning the background.\n"

"You can toggle background learning on and off by hitting the space bar.\n"

"Call\n"

"./segment_objects [video file, else it reads camera 0]\n\n");

}

static void refineSegments(const Mat& img, Mat& mask, Mat& dst)

{

int niters = 3;

vector<vector<Point> > contours;

vector<Vec4i> hierarchy;

Mat temp;

dilate(mask, temp, Mat(), Point(-1,-1), niters);

erode(temp, temp, Mat(), Point(-1,-1), niters*2);

dilate(temp, temp, Mat(), Point(-1,-1), niters);

findContours( temp, contours, hierarchy, RETR_CCOMP, CHAIN_APPROX_SIMPLE );

dst = Mat::zeros(img.size(), CV_8UC3);

if( contours.size() == 0 )

return;

// iterate through all the top-level contours,

// draw each connected component with its own random color

int idx = 0, largestComp = 0;

double maxArea = 0;

for( ; idx >= 0; idx = hierarchy[idx][0] )

{

const vector<Point>& c = contours[idx];

double area = fabs(contourArea(Mat(c)));

if( area > maxArea )

{

maxArea = area;

largestComp = idx;

}

}

Scalar color( 0, 0, 255 );

drawContours( dst, contours, largestComp, color, FILLED, LINE_8, hierarchy );

}

int main(int argc, char** argv)

{

VideoCapture cap;

bool update_bg_model = true;

CommandLineParser parser(argc, argv, "{help h||}{@input||}");

if (parser.has("help"))

{

help();

return 0;

}

string input = parser.get<std::string>("@input");

if (input.empty())

cap.open(0);

else

cap.open(input);

if( !cap.isOpened() )

{

printf("\nCan not open camera or video file\n");

return -1;

}

Mat tmp_frame, bgmask, out_frame;

cap >> tmp_frame;

if(tmp_frame.empty())

{

printf("can not read data from the video source\n");

return -1;

}

namedWindow("video", 1);

namedWindow("segmented", 1);

Ptr<BackgroundSubtractorMOG2> bgsubtractor=createBackgroundSubtractorMOG2();

bgsubtractor->setVarThreshold(10);

for(;;)

{

cap >> tmp_frame;

if( tmp_frame.empty() )

break;

bgsubtractor->apply(tmp_frame, bgmask, update_bg_model ? -1 : 0);

refineSegments(tmp_frame, bgmask, out_frame);

imshow("video", tmp_frame);

imshow("segmented", out_frame);

char keycode = (char)waitKey(30);

if( keycode == 27 )

break;

if( keycode == ' ' )

{

update_bg_model = !update_bg_model;

printf("Learn background is in state = %d\n",update_bg_model);

}

}

return 0;

}

boxFilter()

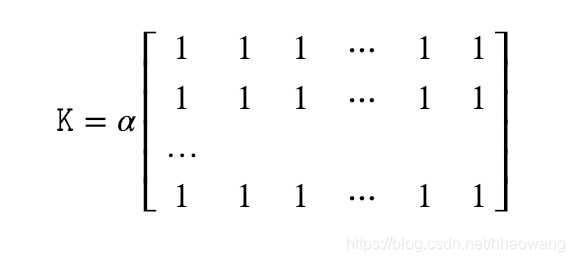

Blurs an image using the box filter.

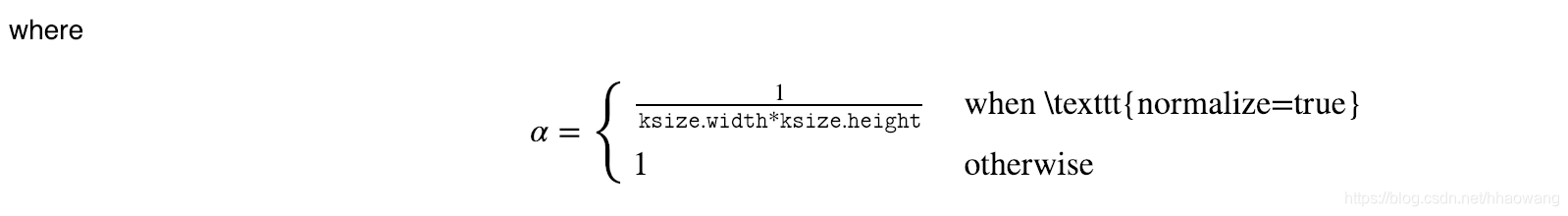

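The function smooths an image using the kernel:

$$K = \alpha \begin{bmatrix} 1 & \cdots & 1 \\ \vdots & \ddots & \vdots \\ 1 & \cdots & 1 \end{bmatrix}, \qquad \alpha = \begin{cases} \dfrac{1}{\texttt{ksize.width} \cdot \texttt{ksize.height}} & \text{when normalize = true} \\ 1 & \text{otherwise} \end{cases}$$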

Unnormalized box filter is useful for computing various integral characteristics over each pixel neighborhood, such as covariance matrices of image derivatives (used in dense optical flow algorithms, and so on). If you need to compute pixel sums over variable-size windows, use integral.
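
The remark about variable-size windows refers to the summed-area table (integral image) idea. A minimal plain C++ sketch follows; the names buildIntegral and windowSum are hypothetical, and the table carries one extra row and column of zeros, which is a layout choice of this sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Image = std::vector<std::vector<long long>>;

// Builds a summed-area table with an extra leading row and column of zeros,
// so that sat[y][x] is the sum of img over rows [0, y) and columns [0, x).
Image buildIntegral(const Image& img)
{
    assert(!img.empty() && !img[0].empty());
    const std::size_t rows = img.size(), cols = img[0].size();
    Image sat(rows + 1, std::vector<long long>(cols + 1, 0));
    for (std::size_t y = 0; y < rows; ++y)
        for (std::size_t x = 0; x < cols; ++x)
            sat[y + 1][x + 1] = img[y][x] + sat[y][x + 1] + sat[y + 1][x] - sat[y][x];
    return sat;
}

// Sum of the window covering rows [y0, y1) and columns [x0, x1), using four lookups.
long long windowSum(const Image& sat, std::size_t y0, std::size_t x0,
                    std::size_t y1, std::size_t x1)
{
    assert(y0 <= y1 && x0 <= x1 && y1 < sat.size() && x1 < sat[0].size());
    return sat[y1][x1] - sat[y0][x1] - sat[y1][x0] + sat[y0][x0];
}
```

Once the table is built, every window sum costs the same four lookups regardless of the window size, which is why this approach suits windows whose size changes per pixel.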


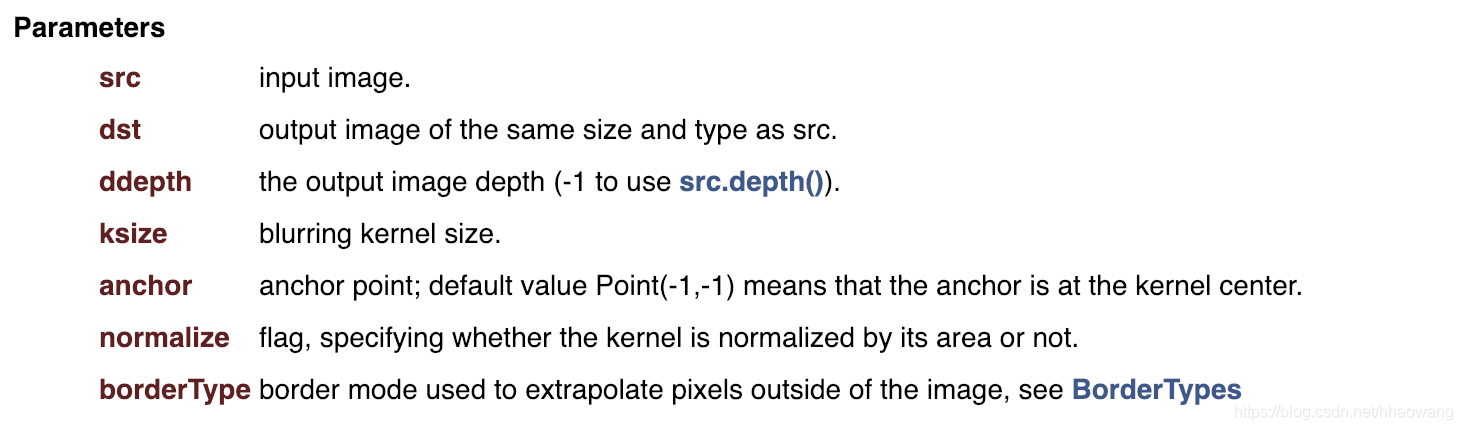

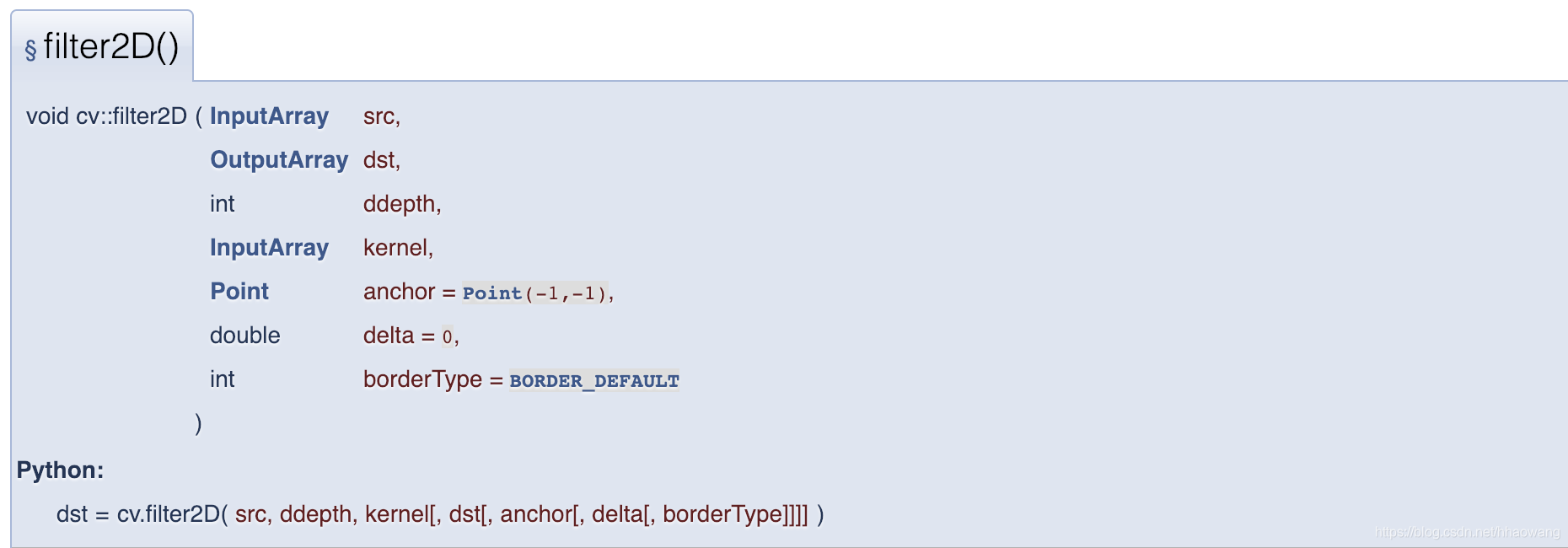

filter2D()

Convolves an image with the kernel.

The function applies an arbitrary linear filter to an image. In-place operation is supported. When the aperture is partially outside the image, the function interpolates outlier pixel values according to the specified border mode.

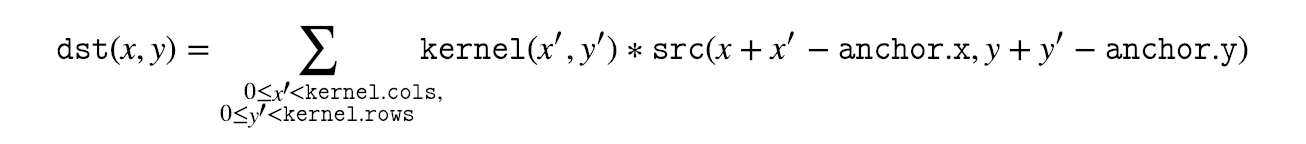

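The function actually computes correlation, not convolution:

$$\texttt{dst}(x,y) = \sum_{\substack{0 \le x' < \texttt{kernel.cols} \\ 0 \le y' < \texttt{kernel.rows}}} \texttt{kernel}(x',y') \cdot \texttt{src}(x + x' - \texttt{anchor.x},\; y + y' - \texttt{anchor.y})$$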

That is, the kernel is not mirrored around the anchor point. If you need a real convolution, flip the kernel using flip and set the new anchor to (kernel.cols - anchor.x - 1, kernel.rows - anchor.y - 1).
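
The difference between correlation and convolution, and the flip-plus-anchor recipe, can be sketched in 1-D with plain C++. The helpers correlate1D and convolve1D are hypothetical, and treating samples outside the signal as zero is an assumption made to keep the example short.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// 1-D correlation: dst[x] = sum over k of kernel[k] * src[x + k - anchor].
// Samples outside src are treated as zero (assumption of this sketch).
std::vector<double> correlate1D(const std::vector<double>& src,
                                const std::vector<double>& kernel, int anchor)
{
    const int n = static_cast<int>(src.size());
    const int m = static_cast<int>(kernel.size());
    assert(anchor >= 0 && anchor < m);
    std::vector<double> dst(src.size(), 0.0);
    for (int x = 0; x < n; ++x)
        for (int k = 0; k < m; ++k) {
            const int j = x + k - anchor;
            if (j >= 0 && j < n) dst[x] += kernel[k] * src[j];
        }
    return dst;
}

// True convolution: flip the kernel and mirror the anchor, then correlate.
std::vector<double> convolve1D(const std::vector<double>& src,
                               std::vector<double> kernel, int anchor)
{
    const int m = static_cast<int>(kernel.size());
    std::reverse(kernel.begin(), kernel.end());
    return correlate1D(src, kernel, m - anchor - 1);
}
```

Feeding a unit impulse through both functions with the asymmetric kernel {1, 2, 3} shows the difference: correlation returns the kernel mirrored around the impulse, while convolution reproduces it in its original order.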

The function uses the DFT-based algorithm in case of sufficiently large kernels (~11 x 11 or larger) and the direct algorithm for small kernels.

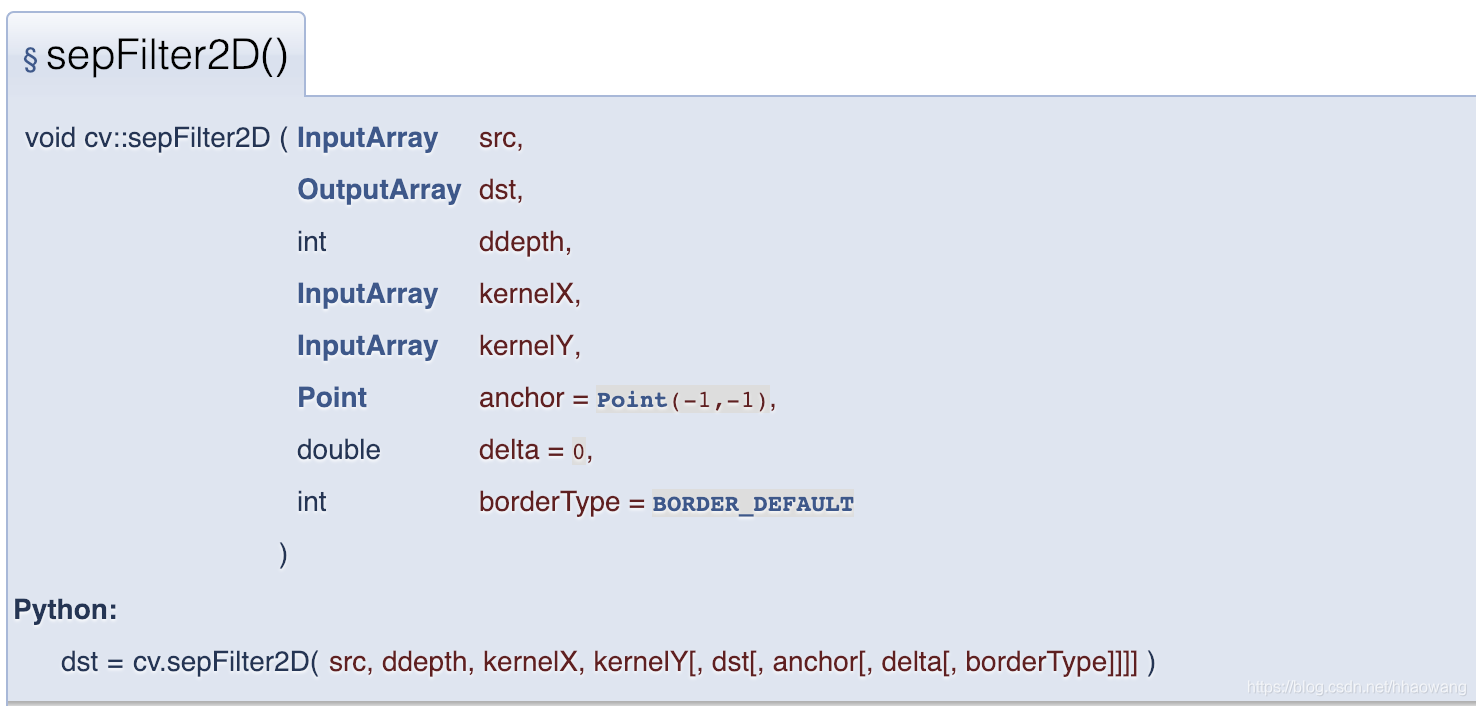

Applies a separable linear filter to an image.

The function applies a separable linear filter to the image. That is, first, every row of src is filtered with the 1D kernel kernelX. Then, every column of the result is filtered with the 1D kernel kernelY. The final result shifted by delta is stored in dst .
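
The row-then-column procedure can be sketched in plain C++ and checked against a direct 2-D filter built from the outer product of the two 1-D kernels. The names sepFilter and fullFilter are hypothetical; centered anchors, zero delta, and zero padding outside the image are assumptions of this sketch.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

using Image = std::vector<std::vector<double>>;

// Filters every row with kx, then every column of that result with ky.
Image sepFilter(const Image& src, const std::vector<double>& kx, const std::vector<double>& ky)
{
    assert(!src.empty() && kx.size() % 2 == 1 && ky.size() % 2 == 1);
    const int rows = static_cast<int>(src.size());
    const int cols = static_cast<int>(src[0].size());
    const int rx = static_cast<int>(kx.size()) / 2;
    const int ry = static_cast<int>(ky.size()) / 2;
    Image tmp(rows, std::vector<double>(cols, 0.0));
    Image dst(rows, std::vector<double>(cols, 0.0));
    for (int y = 0; y < rows; ++y)  // horizontal pass
        for (int x = 0; x < cols; ++x)
            for (int k = -rx; k <= rx; ++k)
                if (x + k >= 0 && x + k < cols) tmp[y][x] += kx[k + rx] * src[y][x + k];
    for (int y = 0; y < rows; ++y)  // vertical pass
        for (int x = 0; x < cols; ++x)
            for (int k = -ry; k <= ry; ++k)
                if (y + k >= 0 && y + k < rows) dst[y][x] += ky[k + ry] * tmp[y + k][x];
    return dst;
}

// Reference: direct 2-D correlation with the kernel ky * kx^T.
Image fullFilter(const Image& src, const std::vector<double>& kx, const std::vector<double>& ky)
{
    assert(!src.empty() && kx.size() % 2 == 1 && ky.size() % 2 == 1);
    const int rows = static_cast<int>(src.size());
    const int cols = static_cast<int>(src[0].size());
    const int rx = static_cast<int>(kx.size()) / 2;
    const int ry = static_cast<int>(ky.size()) / 2;
    Image dst(rows, std::vector<double>(cols, 0.0));
    for (int y = 0; y < rows; ++y)
        for (int x = 0; x < cols; ++x)
            for (int j = -ry; j <= ry; ++j)
                for (int i = -rx; i <= rx; ++i)
                    if (y + j >= 0 && y + j < rows && x + i >= 0 && x + i < cols)
                        dst[y][x] += ky[j + ry] * kx[i + rx] * src[y + j][x + i];
    return dst;
}
```

Both functions return the same image, but the separable version needs kx.size() + ky.size() multiplications per pixel instead of kx.size() * ky.size(), which is the practical reason to prefer it when a kernel factorizes.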

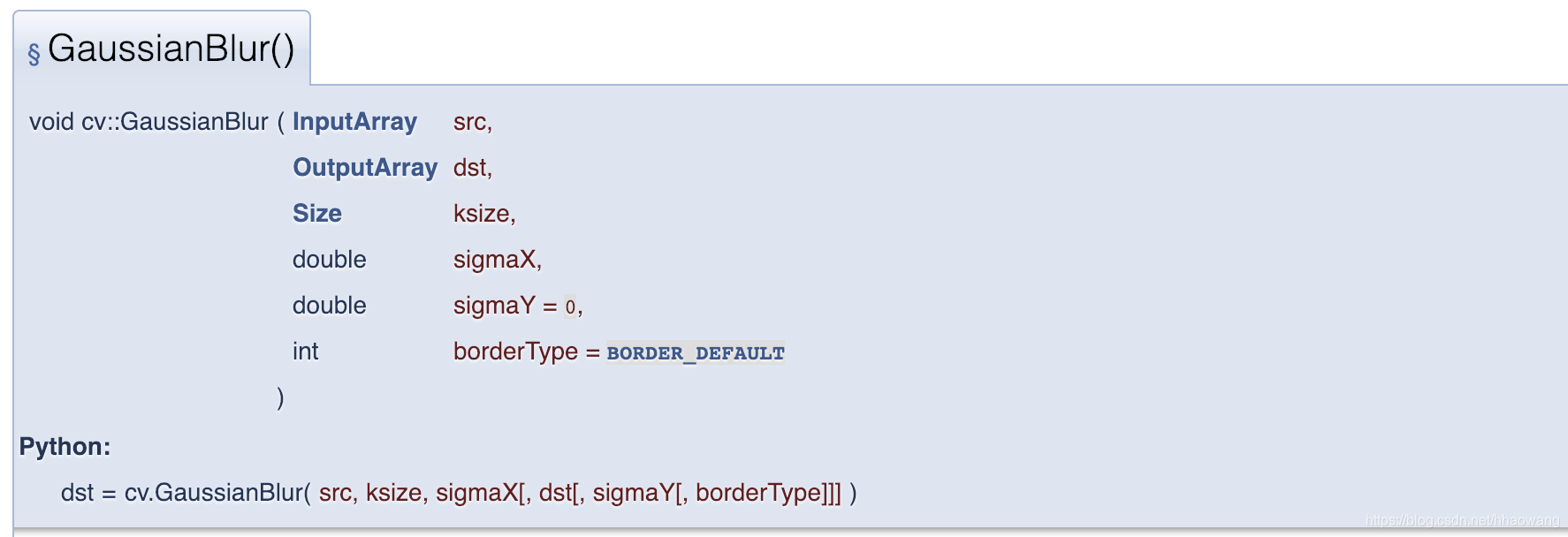

GaussianBlur()

Blurs an image using a Gaussian filter.

The function convolves the source image with the specified Gaussian kernel. In-place filtering is supported.

C++ example: the smoothing demo listed under bilateralFilter() above also calls GaussianBlur().

getDerivKernels()

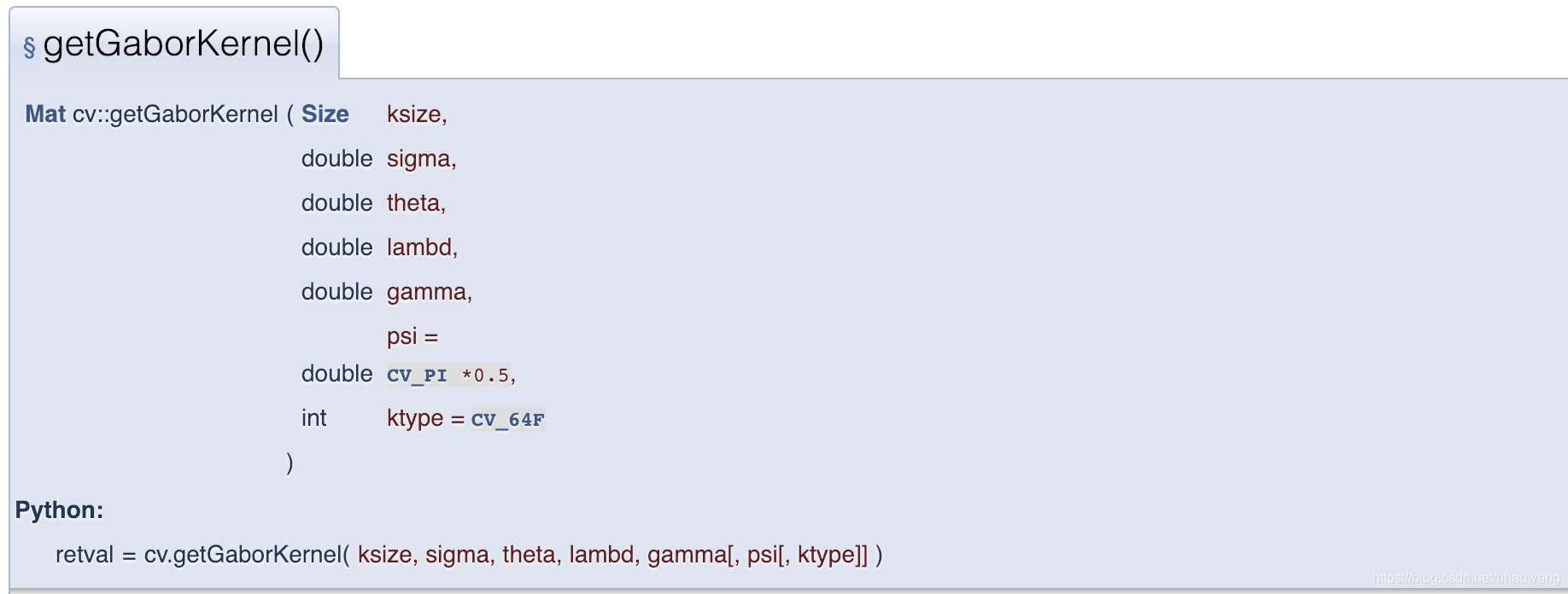

Returns filter coefficients for computing spatial image derivatives.

The function computes and returns the filter coefficients for spatial image derivatives. When ksize=CV_SCHARR, the Scharr 3×3 kernels are generated (see Scharr). Otherwise, Sobel kernels are generated (see Sobel). The filters are normally passed to sepFilter2D or to

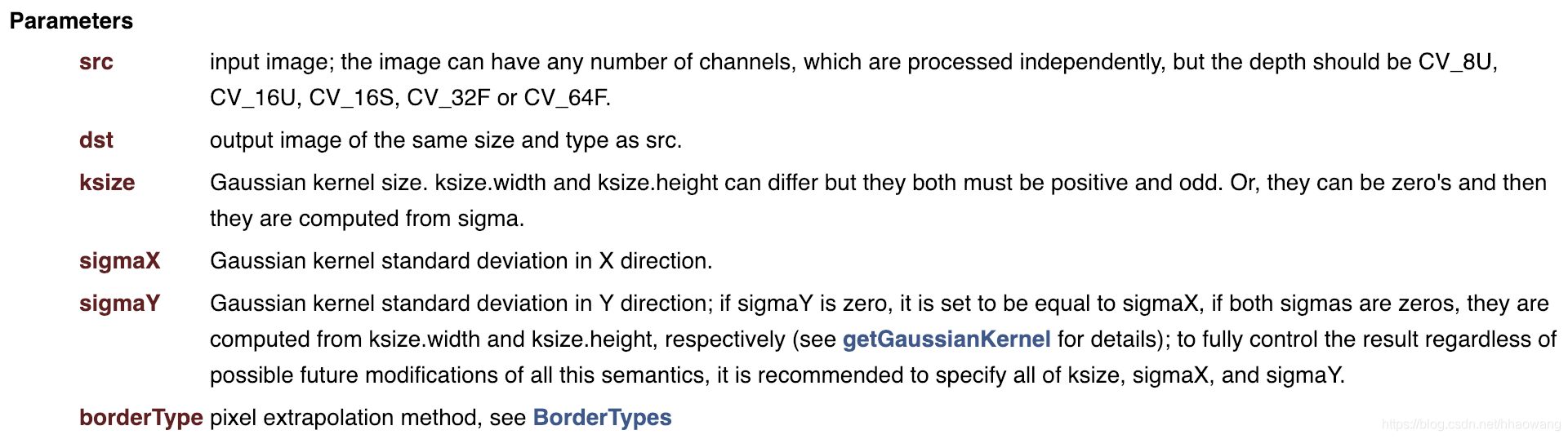

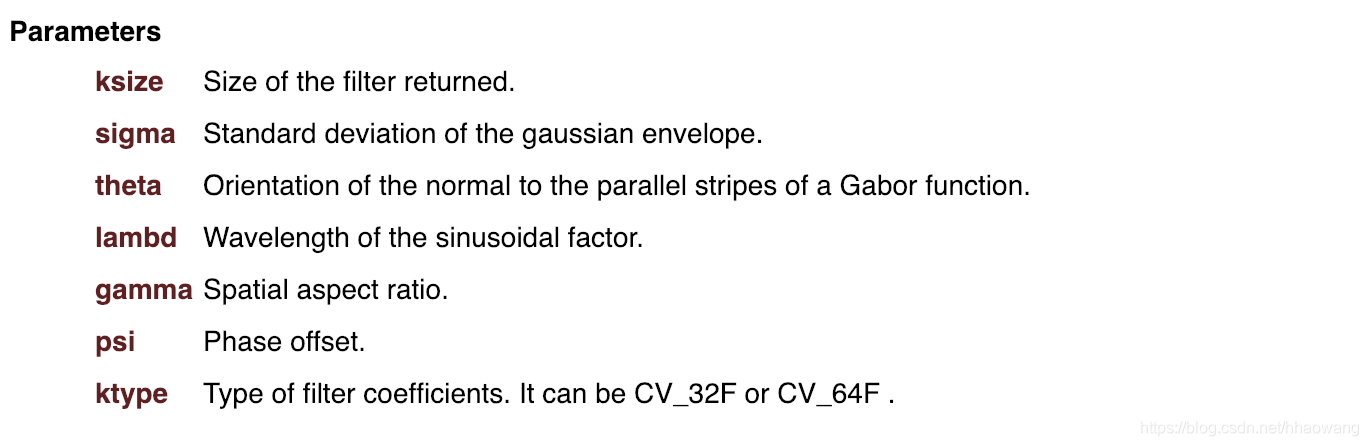

getGaborKernel()

Returns Gabor filter coefficients.

For more details about Gabor filter equations and parameters, see: Gabor Filter.

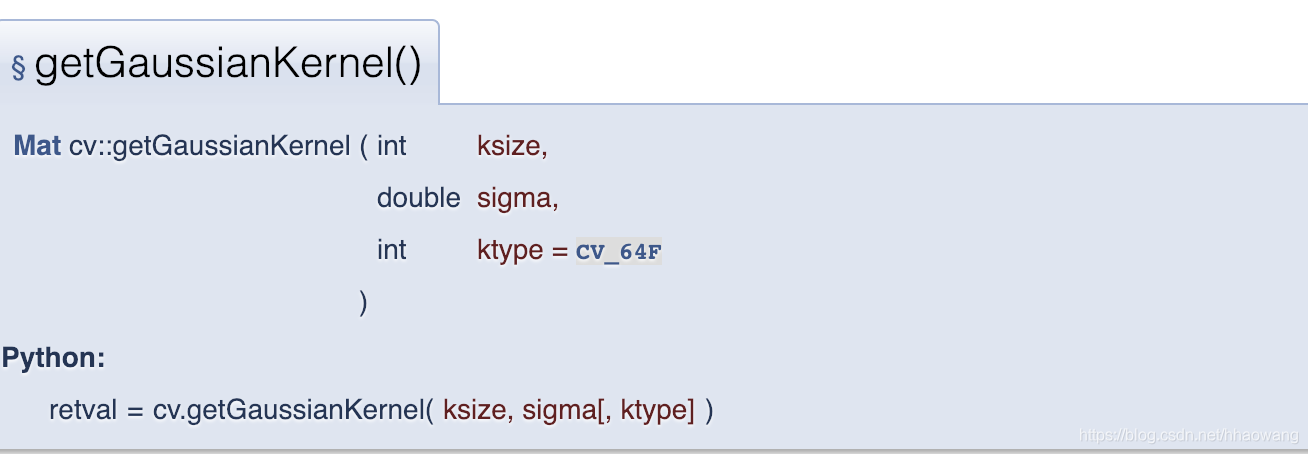

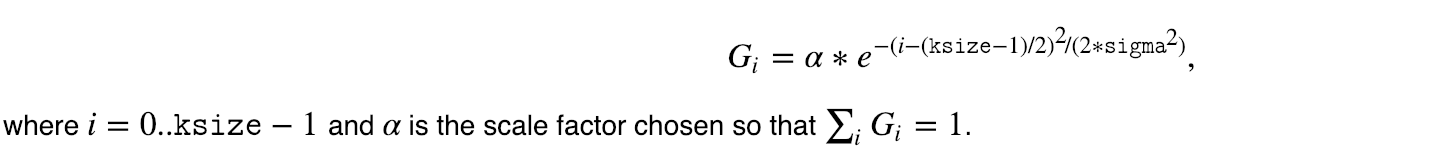

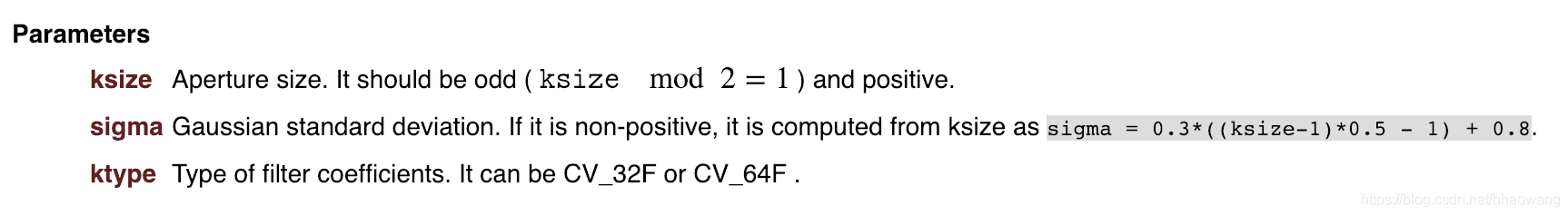

getGaussianKernel()

Returns Gaussian filter coefficients.

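The function computes and returns the ksize × 1 matrix of Gaussian filter coefficients:

$$G_i = \alpha \cdot e^{-(i - (\texttt{ksize} - 1)/2)^2 / (2\sigma^2)},$$

where $i = 0, \ldots, \texttt{ksize} - 1$ and $\alpha$ is the scale factor chosen so that $\sum_i G_i = 1$.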

Two of such generated kernels can be passed to sepFilter2D. Those functions automatically recognize smoothing kernels (a symmetrical kernel with sum of weights equal to 1) and handle them accordingly. You may also use the higher-level GaussianBlur.
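
As a rough illustration of what such a coefficient vector looks like, the following plain C++ sketch computes normalized Gaussian weights. The name gaussianKernel is hypothetical, and the sketch assumes sigma > 0; it does not reproduce the automatic sigma selection OpenCV applies when sigma is non-positive.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Illustrative Gaussian coefficients for an odd ksize and a positive sigma
// (hypothetical helper, not the OpenCV function).
std::vector<double> gaussianKernel(int ksize, double sigma)
{
    assert(ksize > 0 && ksize % 2 == 1 && sigma > 0.0);
    std::vector<double> g(ksize);
    const double center = (ksize - 1) / 2.0;
    double sum = 0.0;
    for (int i = 0; i < ksize; ++i) {
        const double d = i - center;
        g[i] = std::exp(-(d * d) / (2.0 * sigma * sigma));
        sum += g[i];
    }
    for (double& v : g) v /= sum;  // scale so that the weights add up to 1
    return g;
}
```

The result is symmetric, peaks at the center, and sums to 1, which are the properties of a smoothing kernel mentioned above.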

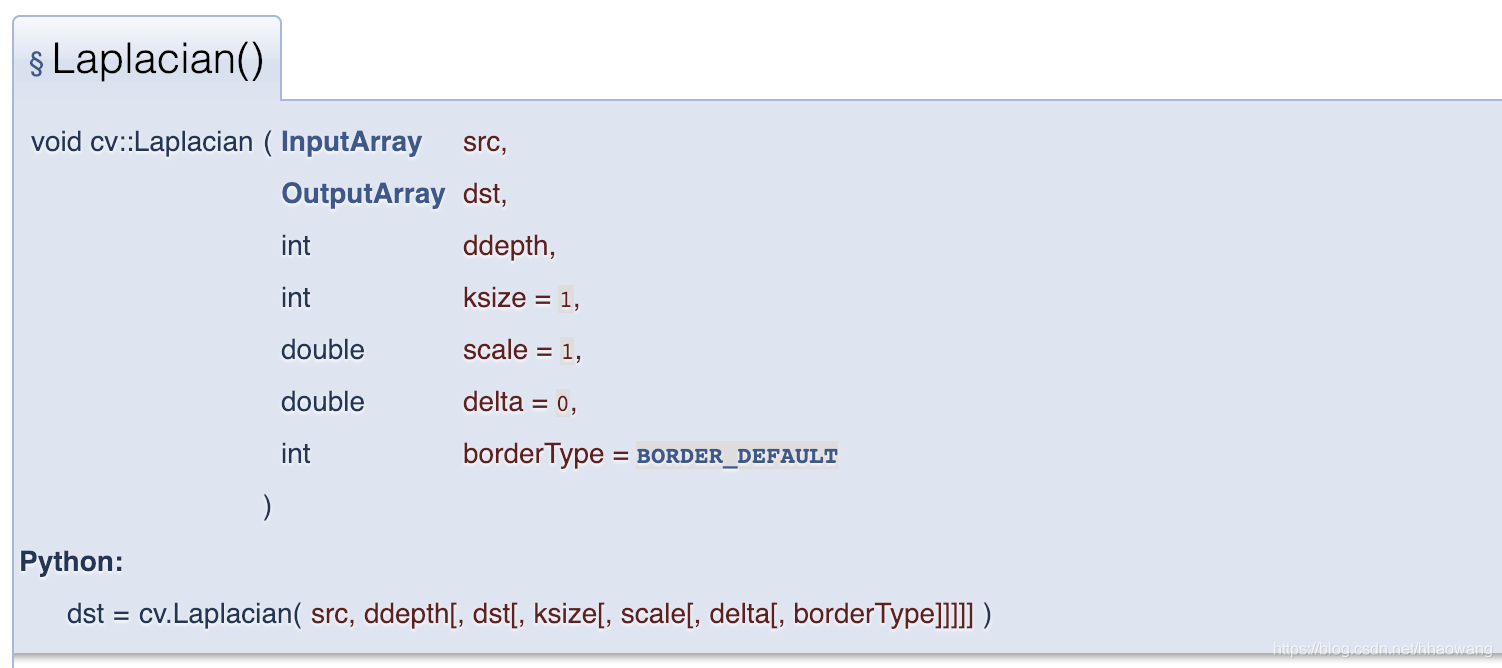

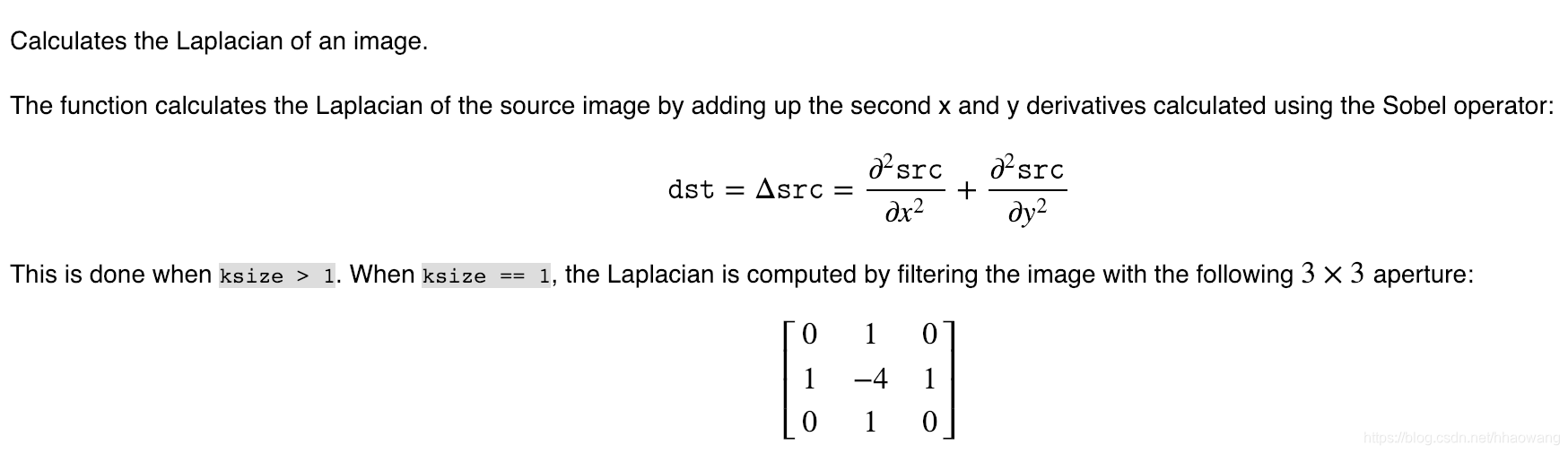

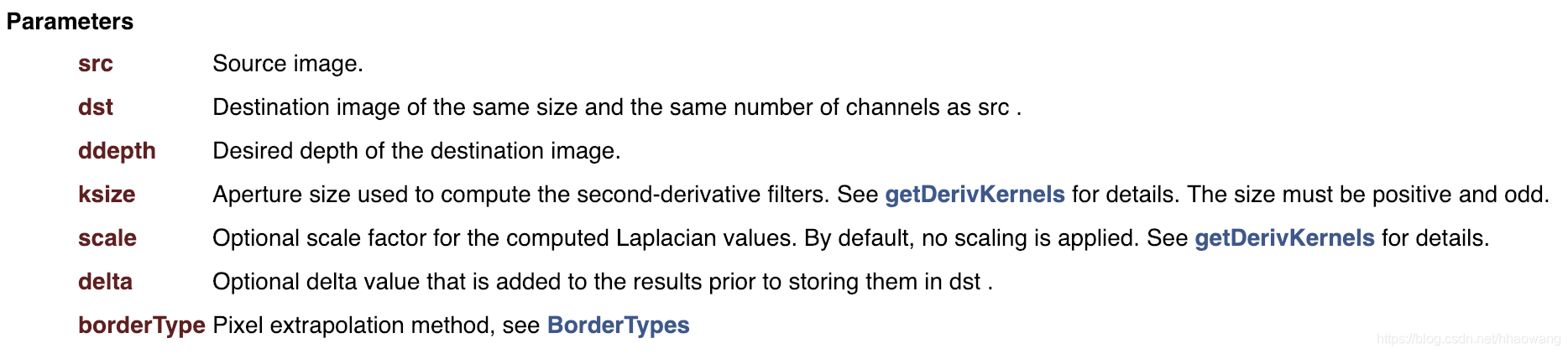

Laplacian()

C++ example:

An example using Laplace transformations for edge detection

#include "opencv2/videoio.hpp"

#include "opencv2/highgui.hpp"

#include "opencv2/imgproc.hpp"

#include <ctype.h>

#include <stdio.h>

#include <iostream>

using namespace cv;

using namespace std;

static void help()

{

cout <<

"\nThis program demonstrates Laplace point/edge detection using OpenCV function Laplacian()\n"

"It captures from the camera of your choice: 0, 1, ... default 0\n"

"Call:\n"

"./laplace -c=<camera #, default 0> -p=<index of the frame to be decoded/captured next>\n" << endl;

}

enum {GAUSSIAN, BLUR, MEDIAN};

int sigma = 3;

int smoothType = GAUSSIAN;

int main( int argc, char** argv )

{

VideoCapture cap;

cv::CommandLineParser parser(argc, argv, "{ c | 0 | }{ p | | }");

help();

if( parser.get<string>("c").size() == 1 && isdigit(parser.get<string>("c")[0]) )

cap.open(parser.get<int>("c"));

else

cap.open(parser.get<string>("c"));

if( cap.isOpened() )

cout << "Video " << parser.get<string>("c") <<

": width=" << cap.get(CAP_PROP_FRAME_WIDTH) <<

", height=" << cap.get(CAP_PROP_FRAME_HEIGHT) <<

", nframes=" << cap.get(CAP_PROP_FRAME_COUNT) << endl;

if( parser.has("p") )

{

int pos = parser.get<int>("p");

if (!parser.check())

{

parser.printErrors();

return -1;

}

cout << "seeking to frame #" << pos << endl;

cap.set(CAP_PROP_POS_FRAMES, pos);

}

if( !cap.isOpened() )

{

cout << "Could not initialize capturing...\n";

return -1;

}

namedWindow( "Laplacian", 0 );

createTrackbar( "Sigma", "Laplacian", &sigma, 15, 0 );

Mat smoothed, laplace, result;

for(;;)

{

Mat frame;

cap >> frame;

if( frame.empty() )

break;

int ksize = (sigma*5)|1;

if(smoothType == GAUSSIAN)

GaussianBlur(frame, smoothed, Size(ksize, ksize), sigma, sigma);

else if(smoothType == BLUR)

blur(frame, smoothed, Size(ksize, ksize));

else

medianBlur(frame, smoothed, ksize);

Laplacian(smoothed, laplace, CV_16S, 5);

convertScaleAbs(laplace, result, (sigma+1)*0.25);

imshow("Laplacian", result);

char c = (char)waitKey(30);

if( c == ' ' )

smoothType = smoothType == GAUSSIAN ? BLUR : smoothType == BLUR ? MEDIAN : GAUSSIAN;

if( c == 'q' || c == 'Q' || c == 27 )

break;

}

return 0;

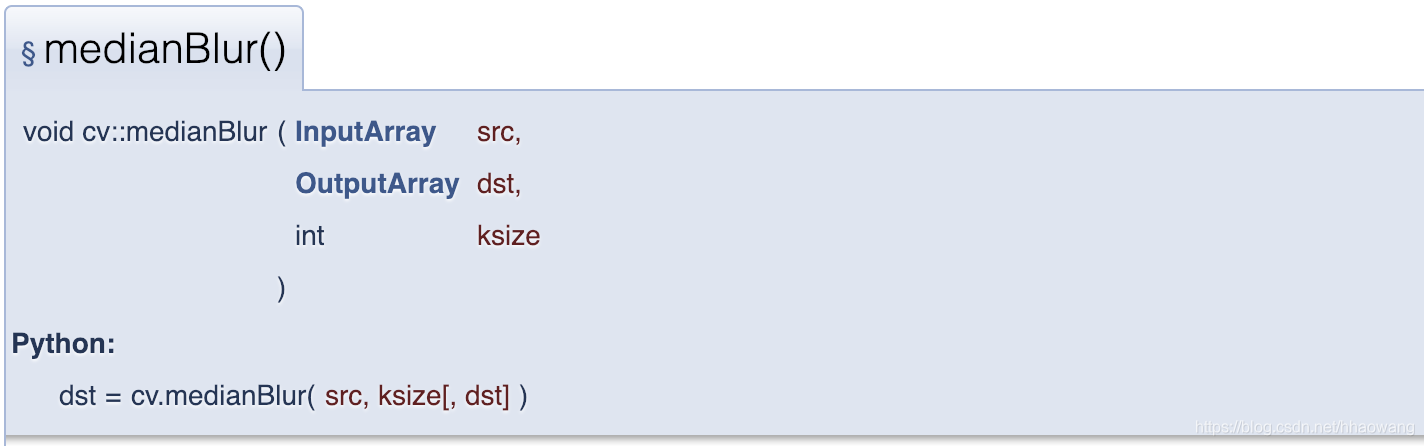

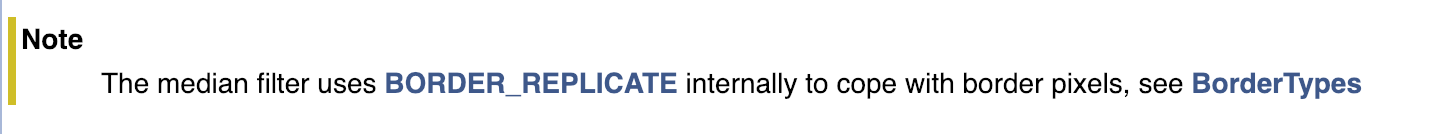

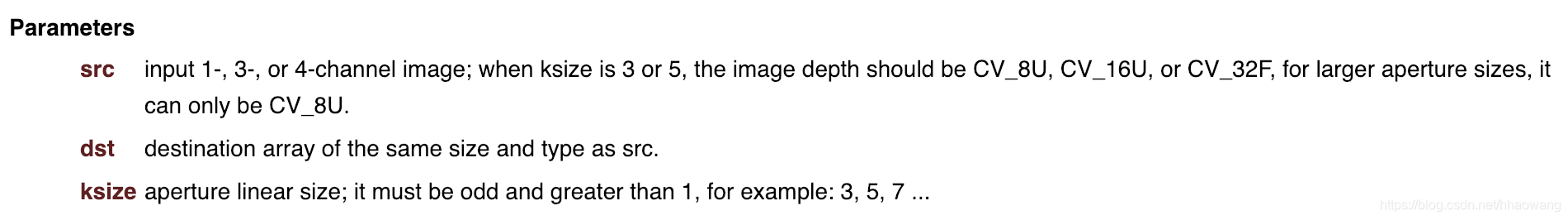

}medianBlur()

Blurs an image using the median filter.

The function smooths an image using the median filter with the ksize × ksize aperture. Each channel of a multi-channel image is processed independently. In-place operation is supported.
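
To show why a median filter is effective against isolated outliers, here is a minimal 1-D sketch in plain C++. The name medianFilter1D is hypothetical, and replicating the edge samples at the borders is an assumption of this sketch.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Illustrative 1-D median filter with an odd window size
// (hypothetical helper; edge samples are replicated at the borders).
std::vector<int> medianFilter1D(const std::vector<int>& src, int ksize)
{
    assert(ksize >= 1 && ksize % 2 == 1 && !src.empty());
    const int radius = ksize / 2;
    const int n = static_cast<int>(src.size());
    std::vector<int> dst(src.size());
    std::vector<int> window(ksize);
    for (int i = 0; i < n; ++i) {
        for (int k = -radius; k <= radius; ++k)
            window[k + radius] = src[std::min(std::max(i + k, 0), n - 1)];
        // Partially sort so that the middle element is the median of the window.
        std::nth_element(window.begin(), window.begin() + radius, window.end());
        dst[i] = window[radius];
    }
    return dst;
}
```

On the signal {10, 10, 255, 10, 10, 0, 10} with ksize = 3, both the bright and the dark outlier disappear completely, whereas an averaging filter would spread them into their neighbors.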

C++ example:

An example using the Hough circle detector

#include "opencv2/imgcodecs.hpp"

#include "opencv2/highgui.hpp"

#include "opencv2/imgproc.hpp"

using namespace cv;

using namespace std;

int main(int argc, char** argv)

{

const char* filename = argc >=2 ? argv[1] : "../data/smarties.png";

// Loads an image

Mat src = imread( filename, IMREAD_COLOR );

// Check if image is loaded fine

if(src.empty()){

printf(" Error opening image\n");

printf(" Program Arguments: [image_name -- default %s] \n", filename);

return -1;

}

Mat gray;

cvtColor(src, gray, COLOR_BGR2GRAY);

medianBlur(gray, gray, 5);

vector<Vec3f> circles;

HoughCircles(gray, circles, HOUGH_GRADIENT, 1,

gray.rows/16, // change this value to detect circles with different distances to each other

100, 30, 1, 30 // change the last two parameters

// (min_radius & max_radius) to detect larger circles

);

for( size_t i = 0; i < circles.size(); i++ )

{

Vec3i c = circles[i];

Point center = Point(c[0], c[1]);

// circle center

circle( src, center, 1, Scalar(0,100,100), 3, LINE_AA);

// circle outline

int radius = c[2];

circle( src, center, radius, Scalar(255,0,255), 3, LINE_AA);

}

imshow("detected circles", src);

waitKey();

return 0;

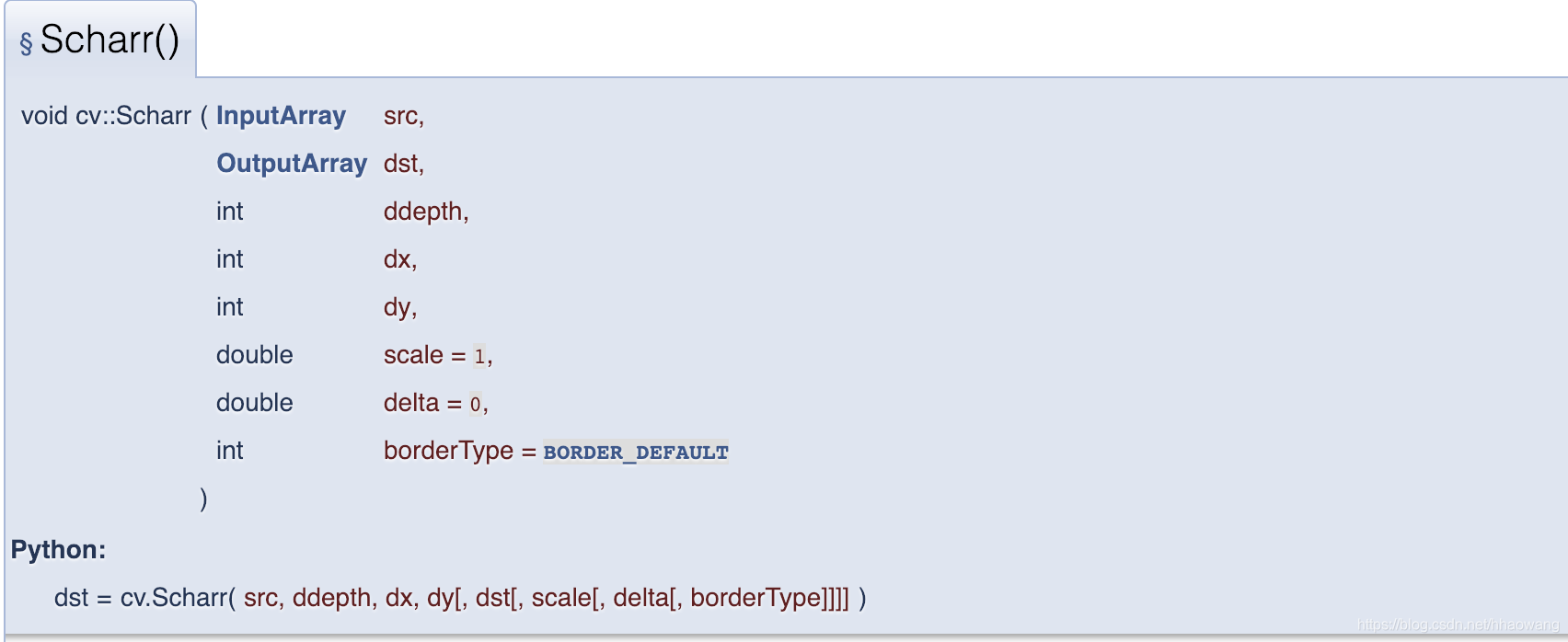

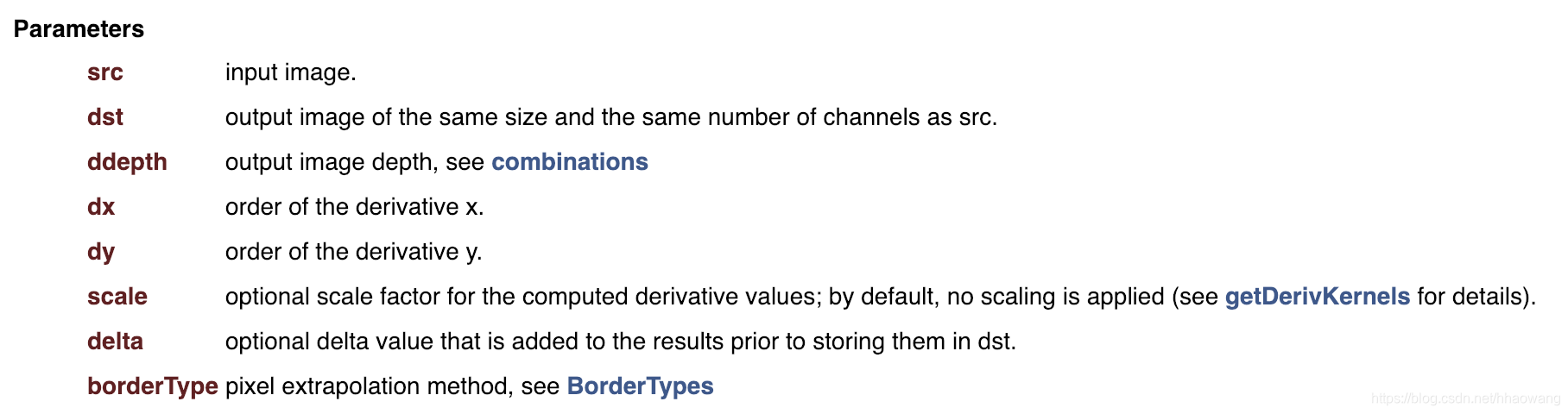

}Scharr()

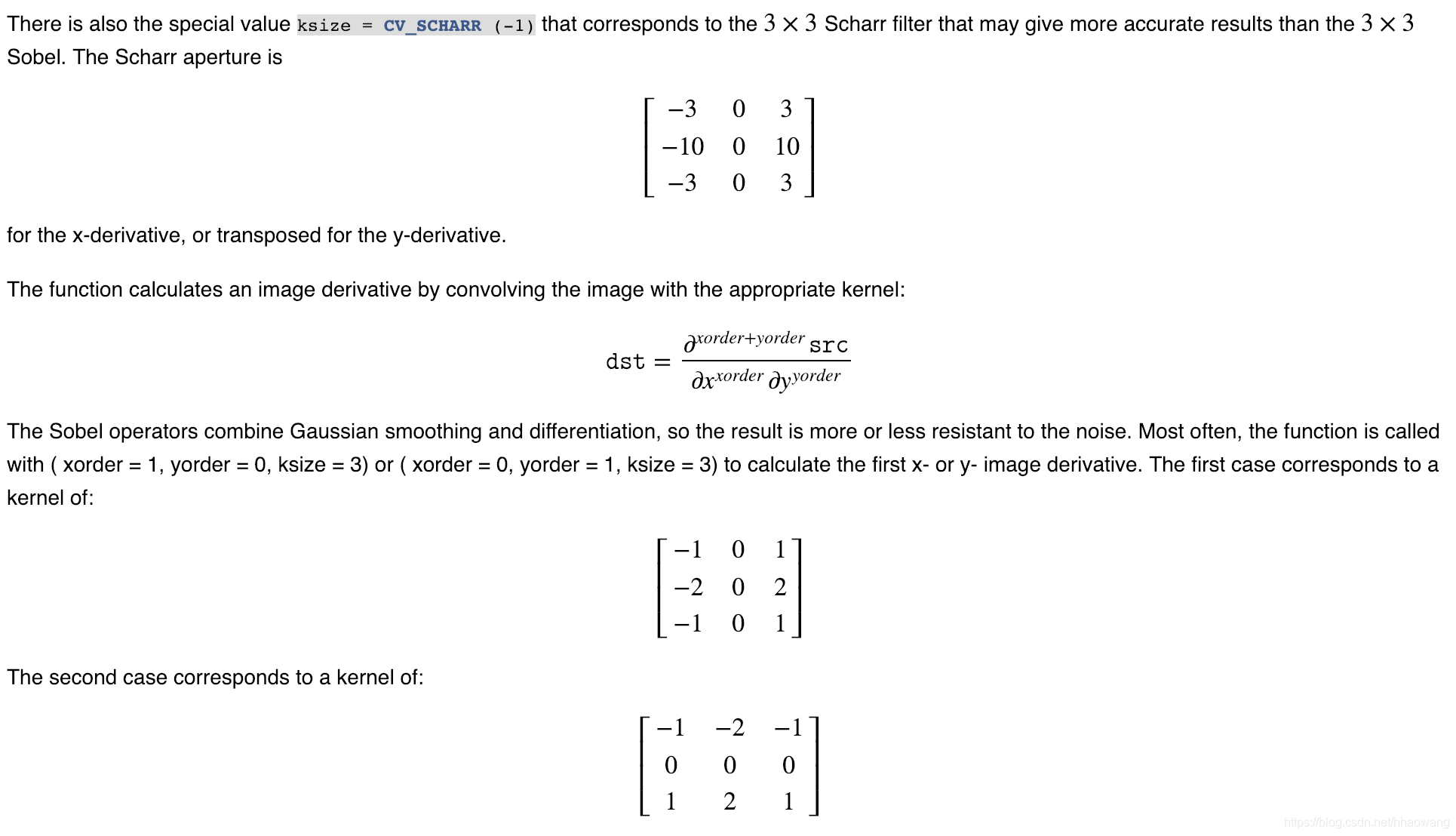

Calculates the first x- or y- image derivative using Scharr operator.

The function computes the first x- or y- spatial image derivative using the Scharr operator. The call

Scharr(src, dst, ddepth, dx, dy, scale, delta, borderType)

is equivalent to

Sobel(src, dst, ddepth, dx, dy, CV_SCHARR, scale, delta, borderType).
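
For reference, the 3×3 Scharr kernels can be applied by hand at a single pixel, as in this plain C++ sketch. The helper applyAt is hypothetical and only handles interior pixels; the kernel coefficients are the standard Scharr weights 3, 10, 3.

```cpp
#include <cassert>
#include <vector>

using Image = std::vector<std::vector<int>>;

// Standard 3x3 Scharr kernels for the x- and y-derivative.
const int kScharrX[3][3] = {{-3, 0, 3}, {-10, 0, 10}, {-3, 0, 3}};
const int kScharrY[3][3] = {{-3, -10, -3}, {0, 0, 0}, {3, 10, 3}};

// Correlates a 3x3 kernel with the neighborhood of an interior pixel (y, x).
int applyAt(const Image& img, const int (&k)[3][3], int y, int x)
{
    assert(y >= 1 && x >= 1);
    assert(y + 1 < static_cast<int>(img.size()) && x + 1 < static_cast<int>(img[0].size()));
    int sum = 0;
    for (int j = -1; j <= 1; ++j)
        for (int i = -1; i <= 1; ++i)
            sum += k[j + 1][i + 1] * img[y + j][x + i];
    return sum;
}
```

On a horizontal ramp whose pixel value equals its column index, the x-kernel responds with 16 × 2 = 32 at the center, and the y-kernel responds with 0 because nothing changes vertically.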

C++ example:

This program demonstrates usage of the Canny edge detector

#include "opencv2/core/utility.hpp"

#include "opencv2/imgproc.hpp"

#include "opencv2/imgcodecs.hpp"

#include "opencv2/highgui.hpp"

#include <stdio.h>

using namespace cv;

using namespace std;

int edgeThresh = 1;

int edgeThreshScharr=1;

Mat image, gray, blurImage, edge1, edge2, cedge;

const char* window_name1 = "Edge map : Canny default (Sobel gradient)";

const char* window_name2 = "Edge map : Canny with custom gradient (Scharr)";

// define a trackbar callback

static void onTrackbar(int, void*)

{

blur(gray, blurImage, Size(3,3));

// Run the edge detector on grayscale

Canny(blurImage, edge1, edgeThresh, edgeThresh*3, 3);

cedge = Scalar::all(0);

image.copyTo(cedge, edge1);

imshow(window_name1, cedge);

Mat dx,dy;

Scharr(blurImage,dx,CV_16S,1,0);

Scharr(blurImage,dy,CV_16S,0,1);

Canny( dx,dy, edge2, edgeThreshScharr, edgeThreshScharr*3 );

cedge = Scalar::all(0);

image.copyTo(cedge, edge2);

imshow(window_name2, cedge);

}

static void help()

{

printf("\nThis sample demonstrates Canny edge detection\n"

"Call:\n"

" ./edge [image_name -- Default is ../data/fruits.jpg]\n\n");

}

const char* keys =

{

"{help h||}{@image |../data/fruits.jpg|input image name}"

};

int main( int argc, const char** argv )

{

help();

CommandLineParser parser(argc, argv, keys);

string filename = parser.get<string>(0);

image = imread(filename, IMREAD_COLOR);

if(image.empty())

{

printf("Cannot read image file: %s\n", filename.c_str());

help();

return -1;

}

cedge.create(image.size(), image.type());

cvtColor(image, gray, COLOR_BGR2GRAY);

// Create a window

namedWindow(window_name1, 1);

namedWindow(window_name2, 1);

// create the trackbars

createTrackbar("Canny threshold default", window_name1, &edgeThresh, 100, onTrackbar);

createTrackbar("Canny threshold Scharr", window_name2, &edgeThreshScharr, 400, onTrackbar);

// Show the image

onTrackbar(0, 0);

// Wait for a key stroke; the same function arranges events processing

waitKey(0);

return 0;

}

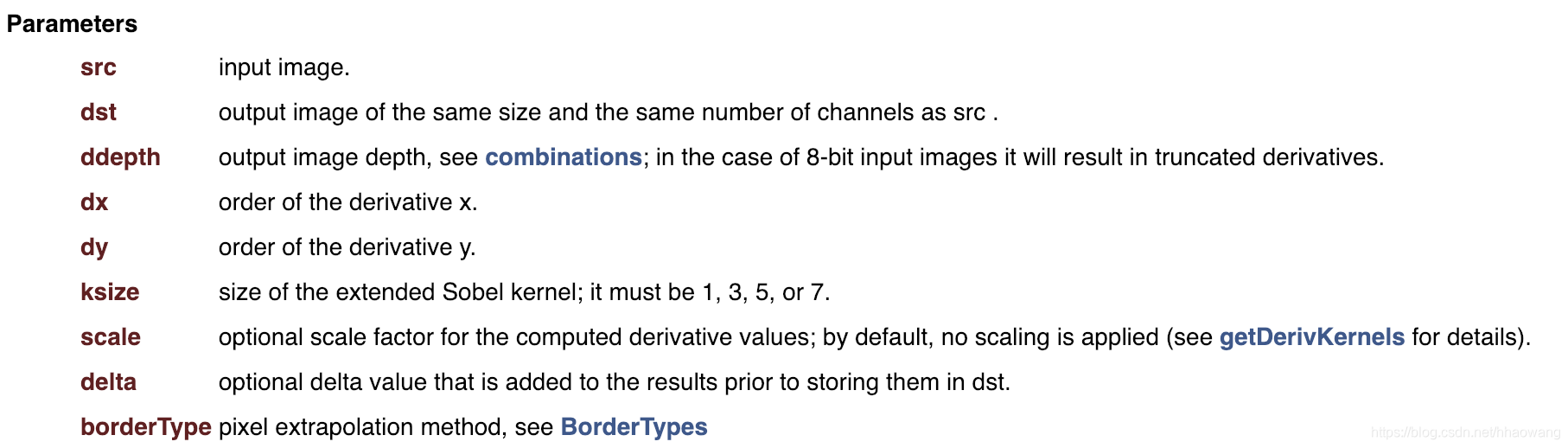

Sobel()

Calculates the first, second, third, or mixed image derivatives using an extended Sobel operator.

In all cases except one, the ksize × ksize separable kernel is used to calculate the derivative. When ksize = 1, the 3×1 or 1×3 kernel is used (that is, no Gaussian smoothing is done). ksize = 1 can only be used for the first or the second x- or y- derivatives.
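
The word "separable" can be illustrated by building the familiar 3×3 Sobel x-kernel as the outer product of a smoothing vector and a difference vector. This plain C++ sketch uses hypothetical helper names (outerProduct, sobelX3, sobelX1) and only covers the first x-derivative.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Kernel = std::vector<std::vector<int>>;

// Outer product column * row^T, giving a column.size() x row.size() kernel.
Kernel outerProduct(const std::vector<int>& column, const std::vector<int>& row)
{
    assert(!column.empty() && !row.empty());
    Kernel k(column.size(), std::vector<int>(row.size()));
    for (std::size_t y = 0; y < column.size(); ++y)
        for (std::size_t x = 0; x < row.size(); ++x)
            k[y][x] = column[y] * row[x];
    return k;
}

// ksize = 3: smooth vertically with [1 2 1], differentiate horizontally with [-1 0 1].
Kernel sobelX3() { return outerProduct({1, 2, 1}, {-1, 0, 1}); }

// ksize = 1: only the three-tap difference remains, so no smoothing is applied.
Kernel sobelX1() { return outerProduct({1}, {-1, 0, 1}); }
```

The ksize = 3 kernel combines differentiation with a small amount of smoothing across the other axis, while the ksize = 1 case drops the smoothing vector entirely, matching the remark that no Gaussian smoothing is done.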

C++ example:

Sample code using Sobel and/or Scharr OpenCV functions to make a simple Edge Detector

#include "opencv2/imgproc.hpp"

#include "opencv2/imgcodecs.hpp"

#include "opencv2/highgui.hpp"

#include <iostream>

using namespace cv;

using namespace std;

int main( int argc, char** argv )

{

cv::CommandLineParser parser(argc, argv,

"{@input |../data/lena.jpg|input image}"

"{ksize k|1|ksize (hit 'K' to increase its value)}"

"{scale s|1|scale (hit 'S' to increase its value)}"

"{delta d|0|delta (hit 'D' to increase its value)}"

"{help h|false|show help message}");

cout << "The sample uses Sobel or Scharr OpenCV functions for edge detection\n\n";

parser.printMessage();

cout << "\nPress 'ESC' to exit program.\nPress 'R' to reset values ( ksize will be -1 equal to Scharr function )";

// First we declare the variables we are going to use

Mat image,src, src_gray;

Mat grad;

const String window_name = "Sobel Demo - Simple Edge Detector";

int ksize = parser.get<int>("ksize");

int scale = parser.get<int>("scale");

int delta = parser.get<int>("delta");

int ddepth = CV_16S;

String imageName = parser.get<String>("@input");

// As usual we load our source image (src)

image = imread( imageName, IMREAD_COLOR ); // Load an image

// Check if image is loaded fine

if( image.empty() )

{

printf("Error opening image: %s\n", imageName.c_str());

return 1;

}

for (;;)

{

// Remove noise by blurring with a Gaussian filter ( kernel size = 3 )

GaussianBlur(image, src, Size(3, 3), 0, 0, BORDER_DEFAULT);

// Convert the image to grayscale

cvtColor(src, src_gray, COLOR_BGR2GRAY);

Mat grad_x, grad_y;

Mat abs_grad_x, abs_grad_y;

Sobel(src_gray, grad_x, ddepth, 1, 0, ksize, scale, delta, BORDER_DEFAULT);

Sobel(src_gray, grad_y, ddepth, 0, 1, ksize, scale, delta, BORDER_DEFAULT);

// converting back to CV_8U

convertScaleAbs(grad_x, abs_grad_x);

convertScaleAbs(grad_y, abs_grad_y);

addWeighted(abs_grad_x, 0.5, abs_grad_y, 0.5, 0, grad);

imshow(window_name, grad);

char key = (char)waitKey(0);

if(key == 27)

{

return 0;

}

if (key == 'k' || key == 'K')

{

ksize = ksize < 30 ? ksize+2 : -1;

}

if (key == 's' || key == 'S')

{

scale++;

}

if (key == 'd' || key == 'D')

{

delta++;

}

if (key == 'r' || key == 'R')

{

scale = 1;

ksize = -1;

delta = 0;

}

}

return 0;

}

函数列表

| void | cv::bilateralFilter (InputArray src, OutputArray dst, int d, double sigmaColor, double sigmaSpace, int borderType=BORDER_DEFAULT) |

| Applies the bilateral filter to an image. | |

| void | cv::blur (InputArray src, OutputArray dst, Size ksize, Point anchor=Point(-1,-1), int borderType=BORDER_DEFAULT) |

| Blurs an image using the normalized box filter. | |

| void | cv::boxFilter (InputArray src, OutputArray dst, int ddepth, Size ksize, Point anchor=Point(-1,-1), bool normalize=true, int borderType=BORDER_DEFAULT) |

| Blurs an image using the box filter. | |

| void | cv::buildPyramid (InputArray src, OutputArrayOfArrays dst, int maxlevel, int borderType=BORDER_DEFAULT) |

| Constructs the Gaussian pyramid for an image. | |

| void | cv::dilate (InputArray src, OutputArray dst, InputArray kernel, Point anchor=Point(-1,-1), int iterations=1, int borderType=BORDER_CONSTANT, const Scalar &borderValue=morphologyDefaultBorderValue()) |

| Dilates an image by using a specific structuring element. | |

| void | cv::erode (InputArray src, OutputArray dst, InputArray kernel, Point anchor=Point(-1,-1), int iterations=1, int borderType=BORDER_CONSTANT, const Scalar &borderValue=morphologyDefaultBorderValue()) |

| Erodes an image by using a specific structuring element. | |

| void | cv::filter2D (InputArray src, OutputArray dst, int ddepth, InputArray kernel, Point anchor=Point(-1,-1), double delta=0, int borderType=BORDER_DEFAULT) |

| Convolves an image with the kernel. | |

| void | cv::GaussianBlur (InputArray src, OutputArray dst, Size ksize, double sigmaX, double sigmaY=0, int borderType=BORDER_DEFAULT) |

| Blurs an image using a Gaussian filter. | |

| void | cv::getDerivKernels (OutputArray kx, OutputArray ky, int dx, int dy, int ksize, bool normalize=false, int ktype=CV_32F) |

| Returns filter coefficients for computing spatial image derivatives. | |

| Mat | cv::getGaborKernel (Size ksize, double sigma, double theta, double lambd, double gamma, double psi=CV_PI *0.5, int ktype=CV_64F) |

| Returns Gabor filter coefficients. | |

| Mat | cv::getGaussianKernel (int ksize, double sigma, int ktype=CV_64F) |

| Returns Gaussian filter coefficients. | |

| Mat | cv::getStructuringElement (int shape, Size ksize, Point anchor=Point(-1,-1)) |

| Returns a structuring element of the specified size and shape for morphological operations. | |

| void | cv::Laplacian (InputArray src, OutputArray dst, int ddepth, int ksize=1, double scale=1, double delta=0, int borderType=BORDER_DEFAULT) |

| Calculates the Laplacian of an image. | |

| void | cv::medianBlur (InputArray src, OutputArray dst, int ksize) |

| Blurs an image using the median filter. | |

| static Scalar | cv::morphologyDefaultBorderValue () |

| returns "magic" border value for erosion and dilation. It is automatically transformed to Scalar::all(-DBL_MAX) for dilation. | |

| void | cv::morphologyEx (InputArray src, OutputArray dst, int op, InputArray kernel, Point anchor=Point(-1,-1), int iterations=1, int borderType=BORDER_CONSTANT, const Scalar &borderValue=morphologyDefaultBorderValue()) |

| Performs advanced morphological transformations. More... | |

| void | cv::pyrDown (InputArray src, OutputArray dst, const Size &dstsize=Size(), int borderType=BORDER_DEFAULT) |

| Blurs an image and downsamples it. | |

| void | cv::pyrMeanShiftFiltering (InputArray src, OutputArray dst, double sp, double sr, int maxLevel=1, TermCriteriatermcrit=TermCriteria(TermCriteria::MAX_ITER+TermCriteria::EPS, 5, 1)) |

| Performs initial step of meanshift segmentation of an image. | |

| void | cv::pyrUp (InputArray src, OutputArray dst, const Size &dstsize=Size(), int borderType=BORDER_DEFAULT) |

| Upsamples an image and then blurs it. More... | |

| void | cv::Scharr (InputArray src, OutputArray dst, int ddepth, int dx, int dy, double scale=1, double delta=0, int borderType=BORDER_DEFAULT) |

| Calculates the first x- or y- image derivative using Scharr operator. More... | |

| void | cv::sepFilter2D (InputArray src, OutputArray dst, int ddepth, InputArray kernelX, InputArray kernelY, Point anchor=Point(-1,-1), double delta=0, int borderType=BORDER_DEFAULT) |

| Applies a separable linear filter to an image. | |

| void | cv::Sobel (InputArray src, OutputArray dst, int ddepth, int dx, int dy, int ksize=3, double scale=1, double delta=0, int borderType=BORDER_DEFAULT) |

| Calculates the first, second, third, or mixed image derivatives using an extended Sobel operator. | |

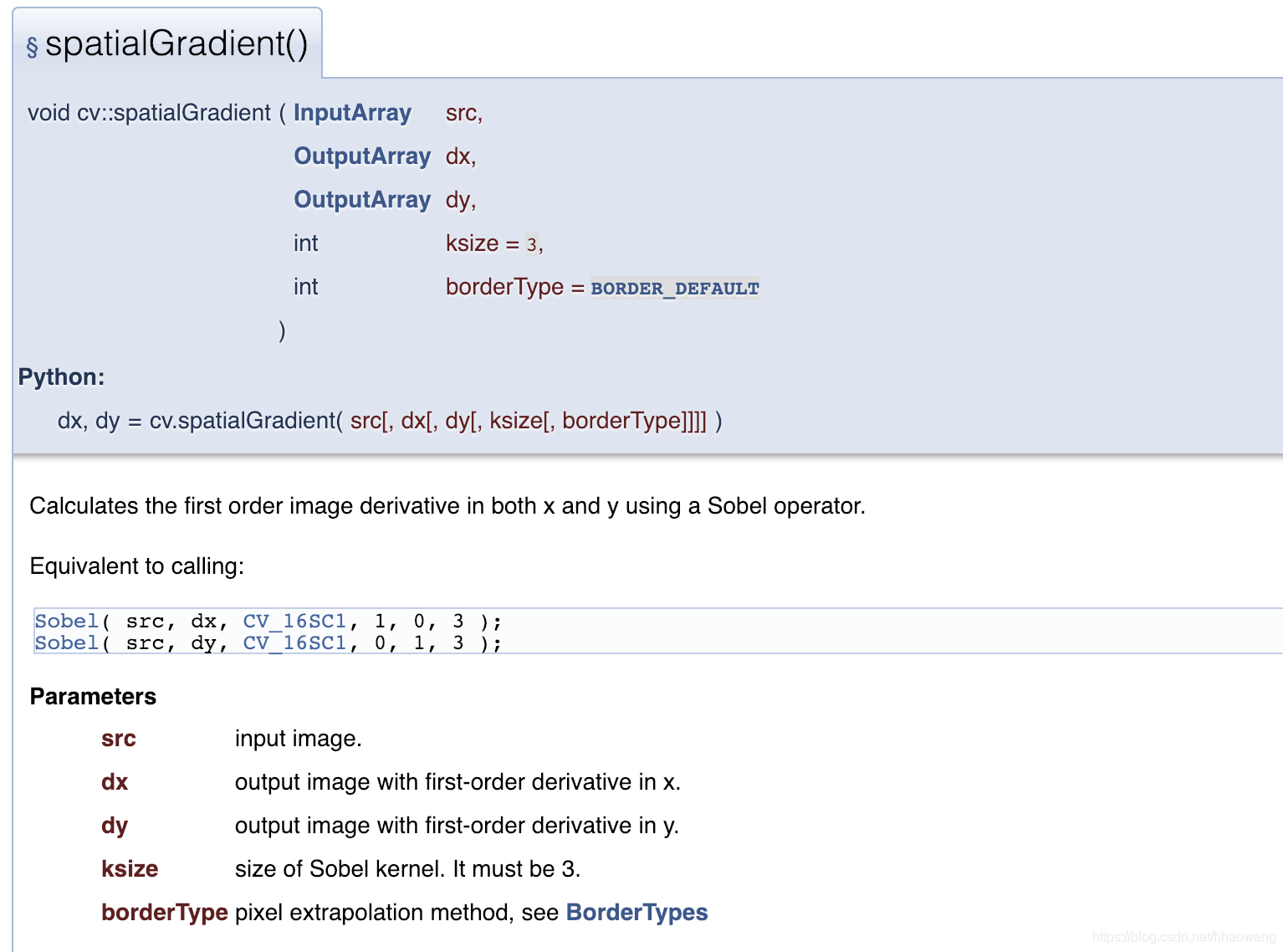

| void | cv::spatialGradient (InputArray src, OutputArray dx, OutputArray dy, int ksize=3, int borderType=BORDER_DEFAULT) |

| Calculates the first order image derivative in both x and y using a Sobel operator. | |

| void | cv::sqrBoxFilter (InputArray _src, OutputArray _dst, int ddepth, Size ksize, Point anchor=Point(-1, -1), bool normalize=true, int borderType=BORDER_DEFAULT) |

| Calculates the normalized sum of squares of the pixel values overlapping the filter. | |

Original link:

https://docs.opencv.org/3.4.3/d4/d86/group__imgproc__filter.html#gae84c92d248183bd92fa713ce51cc3599
