- Result

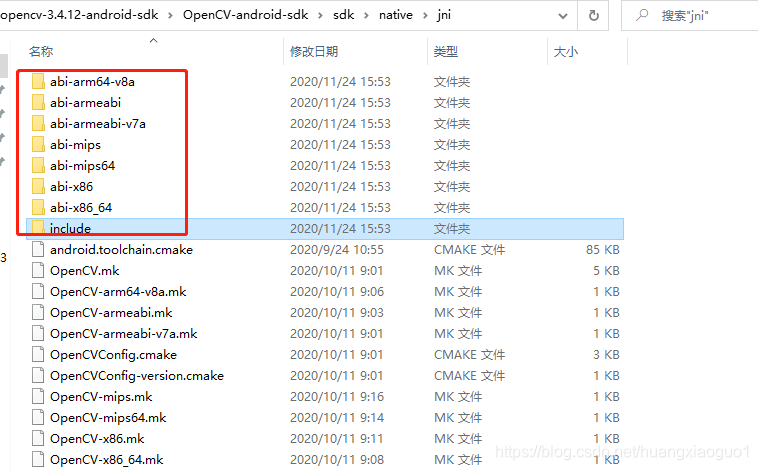

- Download OpenCV

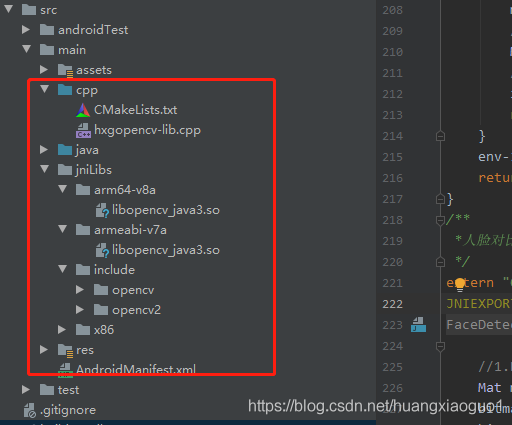

- Import the .so libraries and .h header files

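```groovy
// In the android { } block of the module's build.gradle:
// the directory that holds the per-ABI prebuilt .so files
sourceSets {
    main {
        jniLibs.srcDirs = ['libs']
    }
}
```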

- Modify CMakeLists.txt

```cmake
# For more information about using CMake with Android Studio, read the
# documentation: https://d.android.com/studio/projects/add-native-code.html

# Sets the minimum version of CMake required to build the native library.
cmake_minimum_required(VERSION 3.4.1)

# Include our header files; the path is relative to the directory of this CMakeLists.txt
include_directories(../jniLibs/include)

# Directory containing the OpenCV .so dependency (one subdirectory per ABI)
set(my_lib_path ${CMAKE_SOURCE_DIR}/../jniLibs)

# Declare the prebuilt third-party .so library
add_library(
        opencv_java3
        SHARED
        IMPORTED)

# Absolute path of the third-party library for the ABI being built
set_target_properties(
        opencv_java3
        PROPERTIES IMPORTED_LOCATION
        ${my_lib_path}/${ANDROID_ABI}/libopencv_java3.so)

# Creates and names a library, sets it as either STATIC
# or SHARED, and provides the relative paths to its source code.
# You can define multiple libraries, and CMake builds them for you.
# Gradle automatically packages shared libraries with your APK.

#**************** Face detection ***************************
add_library( # Sets the name of the library.
        hxgopencv-lib
        # Sets the library as a shared library.
        SHARED
        # Provides a relative path to your source file(s).
        hxgopencv-lib.cpp)

# Searches for a specified prebuilt library and stores the path as a
# variable. Because CMake includes system libraries in the search path by
# default, you only need to specify the name of the public NDK library
# you want to add. CMake verifies that the library exists before
# completing its build.
find_library( # Sets the name of the path variable.
        log-lib
        # Specifies the name of the NDK library that
        # you want CMake to locate.
        log)

# Specifies libraries CMake should link to your target library. You
# can link multiple libraries, such as libraries you define in this
# build script, prebuilt third-party libraries, or system libraries.
target_link_libraries( # Specifies the target library.
        # face detection
        hxgopencv-lib
        opencv_java3
        # jnigraphics is required, otherwise linking fails with:
        # undefined reference to `AndroidBitmap_getInfo'
        jnigraphics
        # Links the target library to the log library
        # included in the NDK.
        ${log-lib})
```

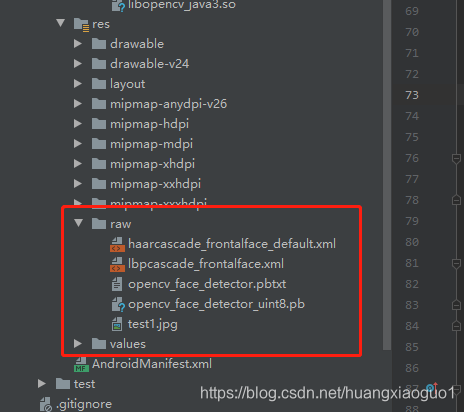

- Load the cascade classifier

```java
/**
 * Copy the face-detection classifier file from res/raw into app-private storage
 */
private void copyCaseCadeFile() {
    File cascadeDir = getDir("cascade", Context.MODE_PRIVATE);
    mCascadeFile = new File(cascadeDir, "lbpcascade_frontalface.xml");
    if (mCascadeFile.exists()) return;
    // load cascade file from application resources;
    // try-with-resources closes both streams even if copying fails
    try (InputStream is = getResources().openRawResource(R.raw.lbpcascade_frontalface);
         FileOutputStream os = new FileOutputStream(mCascadeFile)) {
        byte[] buffer = new byte[4096];
        int bytesRead;
        while ((bytesRead = is.read(buffer)) != -1) {
            os.write(buffer, 0, bytesRead);
        }
    } catch (IOException e) {
        e.printStackTrace();
    }
}

/**
 * Load the face-detection classifier file (implemented in native code)
 *
 * @param filePath absolute path of the cascade XML file
 */
public native void loadCascade(String filePath);
```

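```cpp
#include <jni.h>
#include <string>
#include "opencv2/opencv.hpp"
#include "android/bitmap.h"
#include "android/log.h"

// Namespaces
using namespace cv;
using namespace cv::dnn;

// Bitmap <-> Mat helpers, defined further down in this file
void bitmap2Mat(JNIEnv *env, Mat &mat, jobject bitmap);
void mat2Bitmap(JNIEnv *env, Mat mat, jobject bitmap);

/**
 * Face-detection cascade classifier, loaded once and shared by the functions below
 */
CascadeClassifier cascadeClassifier;

extern "C"
JNIEXPORT void JNICALL
Java_com_hxg_ndkface_FaceDetection_loadCascade(JNIEnv *env, jobject instance, jstring file_path) {
    const char *filePath = env->GetStringUTFChars(file_path, 0);
    // load() returns false if the file is missing or is not a valid cascade
    if (cascadeClassifier.load(filePath)) {
        __android_log_print(ANDROID_LOG_INFO, "TTTTT", "%s", "Classifier file loaded");
    } else {
        __android_log_print(ANDROID_LOG_ERROR, "TTTTT", "Failed to load classifier: %s", filePath);
    }
    env->ReleaseStringUTFChars(file_path, filePath);
}
```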
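Every `GetStringUTFChars` has to be paired with `ReleaseStringUTFChars`, and functions with several `return` statements make it easy to miss one. A common C++ remedy is an RAII scope guard that runs the release when the scope ends. The sketch below is a generic, standard-library-only version; `ScopeExit` and `processWithEarlyReturn` are hypothetical names, not part of JNI or OpenCV, and the counter stands in for the release call.

```cpp
#include <cassert>
#include <functional>
#include <utility>

// Hypothetical helper: runs a cleanup callback when the enclosing scope ends,
// no matter which return statement (or exception) leaves the scope.
class ScopeExit {
public:
    explicit ScopeExit(std::function<void()> fn) : fn_(std::move(fn)) { assert(fn_); }
    ~ScopeExit() {
        if (fn_) fn_();
    }
    ScopeExit(const ScopeExit &) = delete;
    ScopeExit &operator=(const ScopeExit &) = delete;
    // Cancel the cleanup, e.g. when ownership has been handed elsewhere.
    void dismiss() { fn_ = nullptr; }

private:
    std::function<void()> fn_;
};

// Demo with two exits; releaseCount is bumped on both paths.
int processWithEarlyReturn(bool bail, int &releaseCount) {
    ScopeExit guard([&releaseCount] { ++releaseCount; });  // stands in for ReleaseStringUTFChars
    if (bail) {
        return 0;  // early return: the guard still fires
    }
    return 1;
}
```

In a JNI function the guard would be created right after `GetStringUTFChars`, e.g. `ScopeExit release([&] { env->ReleaseStringUTFChars(file_path, filePath); });`, so every later `return` releases the string.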

- Only check whether a face is present

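```cpp
/**
 * Only check whether the bitmap contains a face (true when exactly one face is found)
 */
extern "C"
JNIEXPORT jboolean JNICALL
Java_com_hxg_ndkface_FaceDetection_faceDetection(JNIEnv *env, jobject thiz, jobject bitmap) {
    // OpenCV's key class is Mat and it only processes Mat, while Android uses Bitmap, so
    // 1. convert the Bitmap into a C++ Mat that OpenCV can work with
    Mat mat;
    bitmap2Mat(env, mat, bitmap);
    if (mat.empty() || cascadeClassifier.empty()) {
        // conversion failed (a Java exception is pending) or loadCascade was not called
        return JNI_FALSE;
    }
    // 2. convert to grayscale to speed up detection (Bitmap pixels are RGBA)
    Mat gray_mat;
    __android_log_print(ANDROID_LOG_INFO, "TTTTT", "%s", "Converting to grayscale");
    cvtColor(mat, gray_mat, COLOR_RGBA2GRAY);
    // 3. histogram equalization to compensate for lighting
    __android_log_print(ANDROID_LOG_INFO, "TTTTT", "%s", "Equalizing histogram");
    Mat equalize_mat;
    equalizeHist(gray_mat, equalize_mat);
    // 4. detect faces with the cascade loaded by loadCascade
    std::vector<Rect> faces;
    cascadeClassifier.detectMultiScale(equalize_mat, faces, 1.1, 3, CASCADE_SCALE_IMAGE,
                                       Size(30, 30));
    __android_log_print(ANDROID_LOG_INFO, "TTTTT", "Number of faces: %d", (int) faces.size());
    return faces.size() == 1 ? JNI_TRUE : JNI_FALSE;
}
```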
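The grayscale conversion and `equalizeHist` steps above can be written out on plain byte buffers to show what they do. This is a sketch, not OpenCV's implementation: gray uses the BT.601 weights 0.299, 0.587 and 0.114 on RGBA bytes, and equalization remaps each gray level through the cumulative histogram so the darkest level present becomes 0 and the brightest becomes 255. OpenCV's own rounding may differ slightly; `rgbaToGray` and `equalize` are illustrative names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// RGBA8888 bytes (Android's in-memory layout) -> 8-bit gray with BT.601 luma weights.
std::vector<uint8_t> rgbaToGray(const std::vector<uint8_t> &rgba) {
    assert(rgba.size() % 4 == 0);
    std::vector<uint8_t> gray(rgba.size() / 4);
    for (std::size_t i = 0; i < gray.size(); ++i) {
        double y = 0.299 * rgba[4 * i] + 0.587 * rgba[4 * i + 1] + 0.114 * rgba[4 * i + 2];
        gray[i] = static_cast<uint8_t>(std::lround(y));  // alpha is ignored
    }
    return gray;
}

// Histogram equalization of an 8-bit image via its cumulative histogram.
std::vector<uint8_t> equalize(const std::vector<uint8_t> &src) {
    if (src.empty()) return src;
    long hist[256] = {0};
    for (uint8_t v : src) ++hist[v];
    int first = 0;
    while (hist[first] == 0) ++first;           // darkest level actually present
    const long total = static_cast<long>(src.size());
    if (hist[first] == total) return src;       // constant image: nothing to stretch
    const double scale = 255.0 / (total - hist[first]);
    uint8_t lut[256] = {0};                     // lut[first] stays 0
    long cumulative = 0;
    for (int v = first + 1; v < 256; ++v) {
        cumulative += hist[v];
        lut[v] = static_cast<uint8_t>(std::lround(cumulative * scale));
    }
    std::vector<uint8_t> dst(src.size());
    for (std::size_t i = 0; i < src.size(); ++i) dst[i] = lut[src[i]];
    return dst;
}
```

For example, `equalize({10, 10, 20, 30, 30})` spreads three close gray levels to 0, 85 and 255. This stretching tends to make the patterns the cascade looks for less dependent on overall brightness.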

- Detect a face and save it to a folder

```cpp
/**
 * Detect a face; if exactly one is found, draw a box on the bitmap and save the face region
 */
extern "C"
JNIEXPORT jint JNICALL
Java_com_hxg_ndkface_FaceDetection_faceDetectionSaveInfo(JNIEnv *env, jobject instance,
                                                         jstring name,
                                                         jobject bitmap) {
    // 1. convert the Bitmap into a C++ Mat that OpenCV can work with
    Mat mat;
    bitmap2Mat(env, mat, bitmap);
    if (mat.empty() || cascadeClassifier.empty()) {
        return 0;
    }
    // 2. grayscale to speed up detection (Bitmap pixels are RGBA)
    Mat gray_mat;
    cvtColor(mat, gray_mat, COLOR_RGBA2GRAY);
    // 3. histogram equalization to compensate for lighting
    Mat equalize_mat;
    equalizeHist(gray_mat, equalize_mat);
    // 4. detect faces; faces smaller than 160x160 are ignored
    std::vector<Rect> faces;
    cascadeClassifier.detectMultiScale(equalize_mat, faces, 1.1, 5, CASCADE_SCALE_IMAGE,
                                       Size(160, 160));
    __android_log_print(ANDROID_LOG_INFO, "TTTTT", "Number of faces: %d", (int) faces.size());
    if (faces.size() != 1) {
        return 0;
    }
    Rect faceRect = faces[0];
    // draw an opaque red box around the face (channel order is RGBA)
    rectangle(mat, faceRect, Scalar(255, 0, 0, 255), 3);
    __android_log_print(ANDROID_LOG_INFO, "TTTTT", "%s", "Drew a box around the face");
    // copy the annotated Mat back into the Bitmap
    mat2Bitmap(env, mat, bitmap);
    // save only the face region of the equalized image
    Mat saveMat = Mat(equalize_mat, faceRect);
    const char *filePath = env->GetStringUTFChars(name, 0);
    if (!imwrite(filePath, saveMat)) {
        __android_log_print(ANDROID_LOG_ERROR, "TTTTT", "Could not write %s", filePath);
    }
    env->ReleaseStringUTFChars(name, filePath);  // released before every return
    return 1;
}
```
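`faceDetection` and `faceDetectionSaveInfo` pass different `minSize` values to `detectMultiScale` (30x30 and 160x160), and that value decides which faces can be found at all. A simplified model of the search: start from the cascade's base window, enlarge it by `scaleFactor` at each pyramid level, skip levels smaller than `minSize`, and stop once the window exceeds the image. The function below lists those window sizes. It approximates OpenCV's loop rather than reproducing it; the 24-pixel base window is an assumption about `lbpcascade_frontalface.xml`, and `maxSize` stands in for the image size.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Simplified model of the face sizes a cascade classifier scans.
// baseWindow:  training window of the cascade (assumed 24 px here)
// scaleFactor: growth per pyramid level (the 1.1 passed to detectMultiScale)
// minSize:     smallest face side length to consider
// maxSize:     largest side length, e.g. the image size
std::vector<int> scannedWindowSizes(int baseWindow, double scaleFactor, int minSize, int maxSize) {
    assert(baseWindow > 0 && scaleFactor > 1.0);
    std::vector<int> sizes;
    for (double factor = 1.0;; factor *= scaleFactor) {
        int window = static_cast<int>(std::lround(baseWindow * factor));
        if (window > maxSize) break;     // window no longer fits: stop
        if (window < minSize) continue;  // below minSize: skip this level
        sizes.push_back(window);
    }
    return sizes;
}
```

Under these assumptions, `scannedWindowSizes(24, 1.1, 30, 100)` yields 13 sizes from 32 to 100 pixels, while a 160-pixel minimum on a 100-pixel image yields none, so no face could be reported. A smaller `scaleFactor` adds levels (slower but finer), a larger one removes them.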

- Face comparison

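```cpp
/**
 * Face comparison: correlation of the hue/saturation histograms of two bitmaps
 */
extern "C"
JNIEXPORT jdouble JNICALL
Java_com_hxg_ndkface_FaceDetection_histogramMatch(JNIEnv *env, jobject instance, jobject bitmap1,
                                                  jobject bitmap2) {
    // 1. convert both Bitmaps into Mats that OpenCV can work with
    Mat mat, mat1;
    bitmap2Mat(env, mat, bitmap1);
    bitmap2Mat(env, mat1, bitmap2);
    if (mat.empty() || mat1.empty()) {
        return 0;
    }
    // 2. convert to HSV (the Bitmap pixels are RGBA; the alpha channel is ignored)
    cvtColor(mat, mat, COLOR_RGB2HSV);
    cvtColor(mat1, mat1, COLOR_RGB2HSV);
    // 3. 2-D histogram over hue (channel 0) and saturation (channel 1)
    int channels[] = {0, 1};
    int histsize[] = {180, 256};
    float r1[] = {0, 180};  // 8-bit hue range
    float r2[] = {0, 256};  // saturation range (the upper bound is exclusive)
    const float *ranges[] = {r1, r2};
    Mat hist1, hist2;
    // each call histograms a single image, so nimages is 1
    calcHist(&mat, 1, channels, Mat(), hist1, 2, histsize, ranges, true);
    //https://www.cnblogs.com/bjxqmy/p/12292421.html
    normalize(hist1, hist1, 1, 0, NORM_L1);
    calcHist(&mat1, 1, channels, Mat(), hist2, 2, histsize, ranges, true);
    normalize(hist2, hist2, 1, 0, NORM_L1);
    // 4. correlation: 1 means identical distributions
    double similarity = compareHist(hist1, hist2, HISTCMP_CORREL);
    __android_log_print(ANDROID_LOG_INFO, "TTTTT", "Similarity: %f", similarity);
    return similarity;
}
```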
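`histogramMatch` compares color distributions rather than facial features: each pixel is mapped to HSV, (H, S) pairs are counted in a 180 x 256 histogram, the histogram is L1-normalized, and two histograms are compared with the correlation formula behind `HISTCMP_CORREL`. The sketch below reproduces those steps in standard C++ under stated assumptions: RGBA input bytes, OpenCV's 8-bit HSV convention (hue halved to fit 0..179), and hypothetical function names. Rounding will not match OpenCV exactly.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Hsv { int h, s, v; };

// RGB -> HSV in OpenCV's 8-bit convention: H in [0,180) (degrees / 2), S and V in [0,255].
Hsv rgbToHsv8(int r, int g, int b) {
    int v = std::max({r, g, b});
    int mn = std::min({r, g, b});
    int diff = v - mn;
    int s = v == 0 ? 0 : static_cast<int>(std::lround(255.0 * diff / v));
    double h = 0.0;
    if (diff != 0) {
        if (v == r)      h = 60.0 * (g - b) / diff;
        else if (v == g) h = 120.0 + 60.0 * (b - r) / diff;
        else             h = 240.0 + 60.0 * (r - g) / diff;
        if (h < 0) h += 360.0;
    }
    int h8 = static_cast<int>(std::lround(h / 2.0)) % 180;  // 360 degrees wraps to 0
    return {h8, s, v};
}

constexpr int kHBins = 180, kSBins = 256;

// 2-D H-S histogram of RGBA pixels, L1-normalized so the bins sum to 1.
std::vector<double> hsHistogram(const std::vector<uint8_t> &rgba) {
    assert(!rgba.empty() && rgba.size() % 4 == 0);
    std::vector<double> hist(kHBins * kSBins, 0.0);
    for (std::size_t i = 0; i < rgba.size(); i += 4) {
        Hsv p = rgbToHsv8(rgba[i], rgba[i + 1], rgba[i + 2]);
        hist[p.h * kSBins + p.s] += 1.0;
    }
    double total = static_cast<double>(rgba.size() / 4);
    for (double &bin : hist) bin /= total;
    return hist;
}

// Pearson correlation of two histograms: 1 = same shape, near 0 = unrelated.
double correlation(const std::vector<double> &a, const std::vector<double> &b) {
    assert(a.size() == b.size() && !a.empty());
    double meanA = 0, meanB = 0;
    for (std::size_t i = 0; i < a.size(); ++i) { meanA += a[i]; meanB += b[i]; }
    meanA /= a.size();
    meanB /= b.size();
    double num = 0, varA = 0, varB = 0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        double da = a[i] - meanA, db = b[i] - meanB;
        num += da * db;
        varA += da * da;
        varB += db * db;
    }
    double denom = std::sqrt(varA * varB);
    return denom > 0 ? num / denom : 0.0;  // flat histogram: this sketch returns 0
}
```

A score near 1 means the two images have similar color statistics. Because lighting and skin tone dominate those statistics, the score is a rough similarity cue rather than identity verification.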

- DNN-based face detection with face cropping

```java
private void copyCaseCadeFilePbtxt() {
    File cascadeDir = getDir("cascade", Context.MODE_PRIVATE);
    mCascadeFile = new File(cascadeDir, "opencv_face_detector.pbtxt");
    if (mCascadeFile.exists()) return;
    // load the model description from application resources;
    // try-with-resources closes both streams on every path
    try (InputStream is = getResources().openRawResource(R.raw.opencv_face_detector);
         FileOutputStream os = new FileOutputStream(mCascadeFile)) {
        byte[] buffer = new byte[1024 * 1024];
        int bytesRead;
        while ((bytesRead = is.read(buffer)) != -1) {
            os.write(buffer, 0, bytesRead);
        }
    } catch (IOException e) {
        e.printStackTrace();
    }
}

private void copyCaseCadeFileUint8() {
    File cascadeDir = getDir("cascade", Context.MODE_PRIVATE);
    mCascadeFile = new File(cascadeDir, "opencv_face_detector_uint8.pb");
    if (mCascadeFile.exists()) return;
    // load the frozen model from application resources
    try (InputStream is = getResources().openRawResource(R.raw.opencv_face_detector_uint8);
         FileOutputStream os = new FileOutputStream(mCascadeFile)) {
        byte[] buffer = new byte[1024 * 1024];
        int bytesRead;
        while ((bytesRead = is.read(buffer)) != -1) {
            os.write(buffer, 0, bytesRead);
        }
    } catch (IOException e) {
        e.printStackTrace();
    }
}
```

```cpp
/**
 * DNN-based face detection; crops the detected face and writes it to resultPath
 */
extern "C"
JNIEXPORT jboolean JNICALL
Java_com_hxg_ndkface_FaceDetection_faceDnnDetection(JNIEnv *env, jobject instance,
                                                    jstring model_binary,
                                                    jstring model_desc,
                                                    jstring checkPath,
                                                    jstring resultPath) {
    const char *model_binary_path = env->GetStringUTFChars(model_binary, 0);
    const char *model_desc_path = env->GetStringUTFChars(model_desc, 0);
    const char *check_path = env->GetStringUTFChars(checkPath, 0);
    const char *result_path = env->GetStringUTFChars(resultPath, 0);
    bool found = false;
    // TensorFlow face detector: frozen graph (.pb) plus text graph description (.pbtxt)
    Net net = readNetFromTensorflow(model_binary_path, model_desc_path);
    Mat frame = imread(check_path);  // read the image to examine (BGR)
    if (net.empty()) {
        __android_log_print(ANDROID_LOG_ERROR, "TTTTT", "%s", "could not load net...");
    } else if (frame.empty()) {
        __android_log_print(ANDROID_LOG_ERROR, "TTTTT", "could not read image: %s", check_path);
    } else {
        net.setPreferableBackend(DNN_BACKEND_OPENCV);
        net.setPreferableTarget(DNN_TARGET_CPU);
        // prepare the input: resize to 300x300 and subtract the per-channel mean
        Mat inputBlob = blobFromImage(frame, 1.0,
                                      Size(300, 300), Scalar(104.0, 177.0, 123.0), false, false);
        net.setInput(inputBlob, "data");
        // run face detection
        Mat detection = net.forward("detection_out");
        Mat detectionMat(detection.size[2], detection.size[3], CV_32F, detection.ptr<float>());
        Mat face_area;
        for (int i = 0; i < detectionMat.rows; i++) {
            // confidence, between 0 and 1
            float confidence = detectionMat.at<float>(i, 2);
            if (confidence > 0.7) {
                int xLeftBottom = static_cast<int>(detectionMat.at<float>(i, 3) * frame.cols);
                int yLeftBottom = static_cast<int>(detectionMat.at<float>(i, 4) * frame.rows);
                int xRightTop = static_cast<int>(detectionMat.at<float>(i, 5) * frame.cols);
                int yRightTop = static_cast<int>(detectionMat.at<float>(i, 6) * frame.rows);
                Rect object(xLeftBottom, yLeftBottom,
                            xRightTop - xLeftBottom,
                            yRightTop - yLeftBottom);
                // keep the box inside the image, otherwise frame(object) throws
                object &= Rect(0, 0, frame.cols, frame.rows);
                if (object.area() <= 0) continue;
                // crop the face; clone() so the box drawn next does not end up in the crop
                face_area = frame(object).clone();
                rectangle(frame, object, Scalar(0, 255, 0));  // draw the box
            }
        }
        if (!face_area.empty()) {
            found = imwrite(result_path, face_area);  // write the cropped face
        }
    }
    env->ReleaseStringUTFChars(model_binary, model_binary_path);
    env->ReleaseStringUTFChars(model_desc, model_desc_path);
    env->ReleaseStringUTFChars(checkPath, check_path);
    env->ReleaseStringUTFChars(resultPath, result_path);
    return found ? JNI_TRUE : JNI_FALSE;
}
```
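The loop in `faceDnnDetection` turns each row of `detection_out`, laid out as `[imageId, classId, confidence, x1, y1, x2, y2]` with coordinates normalized to 0..1, into a pixel rectangle. Predicted boxes can extend past the image border, so clamping before taking the ROI matters. The sketch isolates that conversion in standard C++17; `PixelRect` and `detectionToRect` are hypothetical stand-ins for `cv::Rect` and the inline loop body, and the 0.7 threshold is the one used in the JNI function.

```cpp
#include <algorithm>
#include <cassert>
#include <optional>
#include <vector>

struct PixelRect { int x, y, width, height; };

// One detection row: [imageId, classId, confidence, x1, y1, x2, y2], coordinates in 0..1.
// Returns the box in pixels clamped to the frame, or nothing if the confidence is
// not above the threshold or the clamped box is empty.
std::optional<PixelRect> detectionToRect(const std::vector<float> &row,
                                         int frameWidth, int frameHeight,
                                         float minConfidence = 0.7f) {
    assert(row.size() >= 7);
    if (row[2] <= minConfidence) return std::nullopt;
    auto toPx = [](float v, int extent) {
        int p = static_cast<int>(v * extent);
        return std::clamp(p, 0, extent);  // predictions may fall slightly outside 0..1
    };
    int x1 = toPx(row[3], frameWidth), y1 = toPx(row[4], frameHeight);
    int x2 = toPx(row[5], frameWidth), y2 = toPx(row[6], frameHeight);
    if (x2 <= x1 || y2 <= y1) return std::nullopt;
    return PixelRect{x1, y1, x2 - x1, y2 - y1};
}
```

With OpenCV types the same clamp is the rectangle intersection `object &= Rect(0, 0, frame.cols, frame.rows)`.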

- Converting between Bitmap and Mat

```cpp
/**
 * Convert a Bitmap into a C++ Mat that OpenCV can work with
 * @param env
 * @param mat    output, CV_8UC4 in RGBA channel order (released on failure)
 * @param bitmap ARGB_8888 or RGB_565 Bitmap
 */
void bitmap2Mat(JNIEnv *env, Mat &mat, jobject bitmap) {
    // Mat type CV_8UC4 matches Bitmap ARGB_8888 (stored in memory as R, G, B, A bytes);
    // CV_8UC2 matches Bitmap RGB_565
    AndroidBitmapInfo info;
    void *pixels = nullptr;
    bool locked = false;
    try {
        // read the Bitmap info
        CV_Assert(AndroidBitmap_getInfo(env, bitmap, &info) >= 0);
        CV_Assert(info.format == ANDROID_BITMAP_FORMAT_RGBA_8888 ||
                  info.format == ANDROID_BITMAP_FORMAT_RGB_565);
        // lock the Bitmap pixel buffer
        CV_Assert(AndroidBitmap_lockPixels(env, bitmap, &pixels) >= 0);
        locked = true;
        CV_Assert(pixels);
        if (info.format == ANDROID_BITMAP_FORMAT_RGBA_8888) {
            // wrap the pixels as CV_8UC4 (honoring the row stride) and copy them into mat
            Mat temp(info.height, info.width, CV_8UC4, pixels, info.stride);
            temp.copyTo(mat);
        } else {
            // wrap the pixels as CV_8UC2 and expand RGB_565 to 4-channel RGBA
            Mat temp(info.height, info.width, CV_8UC2, pixels, info.stride);
            cvtColor(temp, mat, COLOR_BGR5652RGBA);
        }
        // unlock the Bitmap pixel buffer
        AndroidBitmap_unlockPixels(env, bitmap);
    } catch (const Exception &e) {
        if (locked) AndroidBitmap_unlockPixels(env, bitmap);
        mat.release();  // lets callers check mat.empty()
        jclass je = env->FindClass("java/lang/Exception");
        env->ThrowNew(je, e.what());
    } catch (...) {
        if (locked) AndroidBitmap_unlockPixels(env, bitmap);
        mat.release();
        jclass je = env->FindClass("java/lang/Exception");
        env->ThrowNew(je, "Unknown exception in JNI code {nBitmapToMat}");
    }
}

/**
 * Copy a Mat back into a Bitmap of the same size
 * @param env
 * @param mat    CV_8UC1 (gray), CV_8UC3 (RGB) or CV_8UC4 (RGBA)
 * @param bitmap ARGB_8888 or RGB_565 Bitmap
 */
void mat2Bitmap(JNIEnv *env, Mat mat, jobject bitmap) {
    AndroidBitmapInfo info;
    void *pixels = nullptr;
    bool locked = false;
    try {
        // read the Bitmap info and check that it matches the Mat
        CV_Assert(AndroidBitmap_getInfo(env, bitmap, &info) >= 0);
        CV_Assert(info.format == ANDROID_BITMAP_FORMAT_RGBA_8888 ||
                  info.format == ANDROID_BITMAP_FORMAT_RGB_565);
        CV_Assert(mat.dims == 2 && info.height == (uint32_t) mat.rows &&
                  info.width == (uint32_t) mat.cols);
        CV_Assert(mat.type() == CV_8UC1 || mat.type() == CV_8UC3 || mat.type() == CV_8UC4);
        // lock the Bitmap pixel buffer
        CV_Assert(AndroidBitmap_lockPixels(env, bitmap, &pixels) >= 0);
        locked = true;
        CV_Assert(pixels);
        if (info.format == ANDROID_BITMAP_FORMAT_RGBA_8888) {
            // the Bitmap side is CV_8UC4 (RGBA); writes go straight into the pixel buffer
            Mat temp(info.height, info.width, CV_8UC4, pixels, info.stride);
            if (mat.type() == CV_8UC4) {
                mat.copyTo(temp);
            } else if (mat.type() == CV_8UC1) {  // gray Mat
                cvtColor(mat, temp, COLOR_GRAY2RGBA);
            } else {  // CV_8UC3, assumed RGB
                cvtColor(mat, temp, COLOR_RGB2RGBA);
            }
        } else {
            // the Bitmap side is CV_8UC2 (RGB_565)
            Mat temp(info.height, info.width, CV_8UC2, pixels, info.stride);
            if (mat.type() == CV_8UC4) {
                cvtColor(mat, temp, COLOR_RGBA2BGR565);
            } else if (mat.type() == CV_8UC1) {  // gray Mat
                cvtColor(mat, temp, COLOR_GRAY2BGR565);
            } else {  // CV_8UC3, assumed RGB
                cvtColor(mat, temp, COLOR_RGB2BGR565);
            }
        }
        // unlock the Bitmap pixel buffer
        AndroidBitmap_unlockPixels(env, bitmap);
    } catch (const Exception &e) {
        if (locked) AndroidBitmap_unlockPixels(env, bitmap);
        jclass je = env->FindClass("java/lang/Exception");
        env->ThrowNew(je, e.what());
    } catch (...) {
        if (locked) AndroidBitmap_unlockPixels(env, bitmap);
        jclass je = env->FindClass("java/lang/Exception");
        env->ThrowNew(je, "Unknown exception in JNI code {nMatToBitmap}");
    }
}
```
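For `RGB_565` Bitmaps, `bitmap2Mat` relies on `cvtColor` to unpack 16-bit pixels into RGBA. The sketch below shows that bit layout on its own: red in bits 15 to 11, green in bits 10 to 5, blue in bits 4 to 0. It uses a shift-only expansion, so a full-intensity 5-bit channel becomes 248 rather than 255; bit-replicating variants such as `(r5 << 3) | (r5 >> 2)` are also common. The function names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <vector>

// Expand one RGB_565 pixel to RGBA8888 by shifting each field into the high bits of a byte.
std::array<uint8_t, 4> rgb565ToRgba(uint16_t p) {
    uint8_t r = static_cast<uint8_t>(((p >> 11) & 0x1F) << 3);  // 5 bits of red
    uint8_t g = static_cast<uint8_t>(((p >> 5) & 0x3F) << 2);   // 6 bits of green
    uint8_t b = static_cast<uint8_t>((p & 0x1F) << 3);          // 5 bits of blue
    return {r, g, b, 255};  // RGB_565 has no alpha: fully opaque
}

// Convert a buffer of RGB_565 pixels into interleaved RGBA bytes.
std::vector<uint8_t> rgb565BufferToRgba(const std::vector<uint16_t> &pixels) {
    std::vector<uint8_t> out;
    out.reserve(pixels.size() * 4);
    for (uint16_t p : pixels) {
        auto px = rgb565ToRgba(p);
        out.insert(out.end(), px.begin(), px.end());
    }
    assert(out.size() == pixels.size() * 4);
    return out;
}
```

For example, pure red `0xF800` becomes `{248, 0, 0, 255}` and pure green `0x07E0` becomes `{0, 252, 0, 255}`.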

- Face detection Activity

```java
package com.hxg.ndkface;

import android.Manifest;
import android.annotation.SuppressLint;
import android.content.Context;
import android.graphics.Bitmap;
import android.graphics.BitmapFactory;
import android.hardware.Camera;
import android.os.Bundle;

import androidx.appcompat.app.AppCompatActivity;
import androidx.appcompat.widget.AppCompatImageView;
import androidx.appcompat.widget.AppCompatTextView;
import androidx.arch.core.executor.ArchTaskExecutor;

import com.hxg.ndkface.camera.AutoTexturePreviewView;
import com.hxg.ndkface.manager.CameraPreviewManager;
import com.hxg.ndkface.model.SingleBaseConfig;
import com.hxg.ndkface.utils.CornerUtil;
import com.hxg.ndkface.utils.FileUtils;
import com.tbruyelle.rxpermissions3.RxPermissions;

import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;

public class MainNorFaceActivity extends AppCompatActivity {
    private Bitmap mFaceBitmap;
    private FaceDetection mFaceDetection;
    private File mCascadeFile;
    private AppCompatTextView mTextView;
    private RxPermissions rxPermissions;
    private AutoTexturePreviewView mAutoCameraPreviewView;
    // Larger preview images cost more CPU; 640*480 or 1280*720 are also options
    private static final int PREFER_WIDTH = SingleBaseConfig.getBaseConfig().getRgbAndNirWidth();
    private static final int PERFER_HEIGH = SingleBaseConfig.getBaseConfig().getRgbAndNirHeight();

    @Override
    public void onDetachedFromWindow() {
        super.onDetachedFromWindow();
        CameraPreviewManager.getInstance().stopPreview();
    }

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);
        rxPermissions = new RxPermissions(this);
        mTextView = findViewById(R.id.note);
        mAutoCameraPreviewView = findViewById(R.id.auto_camera_preview_view);
        // reference face that camera frames are compared against
        mFaceBitmap = BitmapFactory.decodeResource(getResources(), R.drawable.face);
        copyCaseCadeFile();
        mFaceDetection = new FaceDetection();
        mFaceDetection.loadCascade(mCascadeFile.getAbsolutePath());
    }

    /**
     * Copy the face-detection classifier file from res/raw into app-private storage
     */
    private void copyCaseCadeFile() {
        File cascadeDir = getDir("cascade", Context.MODE_PRIVATE);
        mCascadeFile = new File(cascadeDir, "lbpcascade_frontalface.xml");
        if (mCascadeFile.exists()) return;
        // load cascade file from application resources
        try (InputStream is = getResources().openRawResource(R.raw.lbpcascade_frontalface);
             FileOutputStream os = new FileOutputStream(mCascadeFile)) {
            byte[] buffer = new byte[4096];
            int bytesRead;
            while ((bytesRead = is.read(buffer)) != -1) {
                os.write(buffer, 0, bytesRead);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    @Override
    public void onAttachedToWindow() {
        super.onAttachedToWindow();
        CornerUtil.clipViewCircle(mAutoCameraPreviewView);
        rxPermissions.request(
                Manifest.permission.WRITE_EXTERNAL_STORAGE,
                Manifest.permission.READ_EXTERNAL_STORAGE,
                Manifest.permission.CAMERA)
                .subscribe(granted -> {
                    // start the camera only if all permissions were granted
                    if (granted) {
                        startTestOpenDebugRegisterFunction();
                    }
                });
    }

    @SuppressLint("RestrictedApi")
    private void startTestOpenDebugRegisterFunction() {
        CameraPreviewManager.getInstance().setCameraFacing(CameraPreviewManager.CAMERA_FACING_FRONT);
        CameraPreviewManager.getInstance().startPreview(this, mAutoCameraPreviewView,
                PREFER_WIDTH, PERFER_HEIGH, (byte[] data, Camera camera, int width, int height) -> {
                    // Detect a face and save its feature info:
                    // String name = FileUtils.createFile(this) + "/test.png";
                    // int type = mFaceDetection.faceDetectionSaveInfo(name, mFaceBitmap);
                    ArchTaskExecutor.getIOThreadExecutor().execute(() -> {
                        Bitmap bitmap = FileUtils.decodeToBitMap(data, camera);
                        if (bitmap == null) return;
                        boolean haveFace = mFaceDetection.faceDetection(bitmap);
                        runOnUiThread(() ->
                                ((AppCompatImageView) findViewById(R.id.tv_img)).setImageBitmap(bitmap));
                        if (haveFace) {
                            // compare the camera frame with the reference face
                            double similarity = mFaceDetection.histogramMatch(mFaceBitmap, bitmap);
                            String str = "Similarity: ";
                            runOnUiThread(() -> mTextView.setText(str + similarity));
                        }
                    });
                });
    }
}
```

- DNN face detection Activity

```java
package com.hxg.ndkface;

import android.Manifest;
import android.annotation.SuppressLint;
import android.content.Context;
import android.graphics.Bitmap;
import android.graphics.BitmapFactory;
import android.os.Bundle;
import android.util.Log;

import androidx.appcompat.app.AppCompatActivity;
import androidx.appcompat.widget.AppCompatImageView;
import androidx.arch.core.executor.ArchTaskExecutor;

import com.hxg.ndkface.utils.FileUtils;
import com.tbruyelle.rxpermissions3.RxPermissions;

import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.InputStream;

public class MainDnnFaceActivity extends AppCompatActivity {
    private FaceDetection mFaceDetection;
    private String mModelBinary;
    private String mModelDesc;
    private String mCheckPath;
    private RxPermissions rxPermissions;
    private File mCascadeFile;
    private AppCompatImageView mIvHeader;
    private AppCompatImageView mFace;
    private AppCompatImageView mFace2;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main_dnn_face);
        mIvHeader = findViewById(R.id.iv_header);
        mFace = findViewById(R.id.iv_header_face);
        mFace2 = findViewById(R.id.iv_header_face2);
        mFaceDetection = new FaceDetection();
        // copy the model (.pb), its description (.pbtxt) and a test image into app-private storage
        copyCaseCadeFileUint8();
        copyCaseCadeFilePbtxt();
        copyCaseCadeFileTest();
        rxPermissions = new RxPermissions(this);
    }

    private void copyCaseCadeFileTest() {
        File cascadeDir = getDir("cascade", Context.MODE_PRIVATE);
        mCascadeFile = new File(cascadeDir, "test1.jpg");
        if (mCascadeFile.exists()) return;
        // copy the test image from application resources
        try (InputStream is = getResources().openRawResource(R.raw.test1);
             FileOutputStream os = new FileOutputStream(mCascadeFile)) {
            byte[] buffer = new byte[1024 * 1024];
            int bytesRead;
            while ((bytesRead = is.read(buffer)) != -1) {
                os.write(buffer, 0, bytesRead);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    private void copyCaseCadeFilePbtxt() {
        File cascadeDir = getDir("cascade", Context.MODE_PRIVATE);
        mCascadeFile = new File(cascadeDir, "opencv_face_detector.pbtxt");
        if (mCascadeFile.exists()) return;
        // copy the model description from application resources
        try (InputStream is = getResources().openRawResource(R.raw.opencv_face_detector);
             FileOutputStream os = new FileOutputStream(mCascadeFile)) {
            byte[] buffer = new byte[1024 * 1024];
            int bytesRead;
            while ((bytesRead = is.read(buffer)) != -1) {
                os.write(buffer, 0, bytesRead);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    private void copyCaseCadeFileUint8() {
        File cascadeDir = getDir("cascade", Context.MODE_PRIVATE);
        mCascadeFile = new File(cascadeDir, "opencv_face_detector_uint8.pb");
        if (mCascadeFile.exists()) return;
        // copy the frozen model from application resources
        try (InputStream is = getResources().openRawResource(R.raw.opencv_face_detector_uint8);
             FileOutputStream os = new FileOutputStream(mCascadeFile)) {
            byte[] buffer = new byte[1024 * 1024];
            int bytesRead;
            while ((bytesRead = is.read(buffer)) != -1) {
                os.write(buffer, 0, bytesRead);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    @Override
    public void onAttachedToWindow() {
        super.onAttachedToWindow();
        File cascadeDir = getDir("cascade", Context.MODE_PRIVATE);
        mModelBinary = cascadeDir + "/opencv_face_detector_uint8.pb";
        mModelDesc = cascadeDir + "/opencv_face_detector.pbtxt";
        mCheckPath = cascadeDir + "/test1.jpg";
        mIvHeader.setImageBitmap(BitmapFactory.decodeFile(mCheckPath));
        rxPermissions.request(
                Manifest.permission.WRITE_EXTERNAL_STORAGE,
                Manifest.permission.READ_EXTERNAL_STORAGE,
                Manifest.permission.CAMERA)
                .subscribe(granted -> {
                    // run the test only if all permissions were granted
                    if (granted) {
                        startTestFunction();
                    }
                });
    }

    @SuppressLint("RestrictedApi")
    private void startTestFunction() {
        ArchTaskExecutor.getIOThreadExecutor().execute(() -> {
            String name = FileUtils.createFile(this) + "/test1.png";
            // DNN face detection; the cropped face is written to `name`
            boolean b = mFaceDetection.faceDnnDetection(mModelBinary, mModelDesc, mCheckPath, name);
            Log.i("TTTTT", "b===>" + b);
            runOnUiThread(() -> mFace.setImageBitmap(BitmapFactory.decodeFile(name)));
            Bitmap bitmap = mFaceDetection.faceDnnDetectionBitmap(mModelBinary, mModelDesc, mCheckPath);
            runOnUiThread(() -> {
                if (bitmap != null) {
                    mFace2.setImageBitmap(bitmap);
                }
            });
        });
    }
}
```
