在中大型项目开发过程中,如何存储大量的数据是我们不可回避的难题,对这个问题的处理直接关系到系统的稳定性和可用性,我曾经负责过一个公司重点的老项目(交接过来的,哈哈~~),由于开发的时候没能正确预估数据的增长量,两三年之后有若干张表的单表数据量已经达到了五千万以上(未分库分表),我们都知道出于性能考虑mysql的单表数据量不要超过一千万,而且这个系统的数据库还有其他问题,比如和其他系统数据共库等等,最终导致系统所在库的表达到了1500多张,若干表的单表数据量也非常大。尽管后来采取了查询限流,强制索引,限定查询条件,归档数据,读写分离等等措施,这个系统的可维护性依然非常低。对于这样大数据量的系统而言必须采取分表和分库结合的策略。

目前市面上比较常用且开源的分库分表中间件有很多,之前有接触过MyCat,一种基于代理模式的数据库中间件。最近了解了下ShardingSphere,它是基于客户端分表分库的数据库中间件,当然也有代理模式的,只是没有广泛采用,详情见官网上的文档:https://shardingsphere.apache.org/document/legacy/3.x/document/cn/overview/。下面记录下ShardingSphere和SpringBoot整合实现分库分表的大致步骤。分两篇博客展开,分别介绍基于sharding-jdbc-core依赖的手工配置的集成方法和基于sharding-jdbc-spring-boot-starter依赖的自动配置的方法,除了集成了SpringBoot还使用了常用的MyBatis+Druid等技术。这一篇介绍下手工配置的方法,这种方法的优点是灵活。

- 首先创建SpringBoot项目,我是用的是2.1.6版本,引入sharding-jdbc-core依赖和druid、MyBatis等依赖,开发过程中还发现了一个好用的工具依赖hutool,此时的pom.xml文件如下:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.1.6.RELEASE</version>

<relativePath/>

</parent>

<groupId>com.hyc</groupId>

<artifactId>shard3-manual</artifactId>

<version>0.0.1-SNAPSHOT</version>

<name>shard3-manual</name>

<description>Spring Boot集成shardingsphere3.x</description>

<properties>

<java.version>1.8</java.version>

</properties>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-actuator</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>org.mybatis.spring.boot</groupId>

<artifactId>mybatis-spring-boot-starter</artifactId>

<version>2.0.1</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-devtools</artifactId>

<scope>runtime</scope>

<optional>true</optional>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-configuration-processor</artifactId>

<optional>true</optional>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<optional>true</optional>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<!-- shardingsphere start -->

<dependency>

<groupId>io.shardingsphere</groupId>

<artifactId>sharding-jdbc-core</artifactId>

<version>3.1.0</version>

</dependency>

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-lang3</artifactId>

<version>3.9</version>

</dependency>

<dependency>

<groupId>cn.hutool</groupId>

<artifactId>hutool-all</artifactId>

<version>4.5.13</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.58</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid</artifactId>

<version>1.1.17</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

</plugin>

</plugins>

</build>

</project>

- 本地建test和test2两个分库,在每个分库下建立一样的分表,用户表,用户地址表,订单表,订单明细表和商品表。设想用户地址表依赖于用户表,订单明细表依赖于订单表,同一个用户的用户表数据和订单数据在一个库中不在一套表中,即后缀一致(最好能在一套表中),以上的表,除了商品表不需要分表以外,其他表都需要分表(商品的数据在我们公司大约几十万条不需要分表,因此作为全局表放在每个库里)以提高查询性能。建表语句如下:

SET NAMES utf8mb4;

-- ----------------------------

-- Table structure for t_order_0

-- ----------------------------

DROP TABLE IF EXISTS `t_order_0`;

CREATE TABLE `t_order_0` (

`order_id` bigint(32) NOT NULL COMMENT '主键',

`order_no` varchar(50) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NOT NULL COMMENT '订单号',

`user_id` bigint(32) NOT NULL COMMENT '用户id',

`order_amount` decimal(20, 2) NOT NULL DEFAULT 0.00 COMMENT '订单总额',

`order_status` int(4) NOT NULL DEFAULT 1 COMMENT '订单状态,1-进行中,2-已完成,3-已取消',

`remark` varchar(100) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci DEFAULT NULL COMMENT '留言',

PRIMARY KEY (`order_id`),

INDEX `idx_order_user_id`(`user_id`),

INDEX `idx_order_order_no`(`order_no`)

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_general_ci COMMENT = '订单表';

-- ----------------------------

-- Table structure for t_order_1

-- ----------------------------

DROP TABLE IF EXISTS `t_order_1`;

CREATE TABLE `t_order_1` (

`order_id` bigint(32) NOT NULL COMMENT '主键',

`order_no` varchar(50) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NOT NULL COMMENT '订单号',

`user_id` bigint(32) NOT NULL COMMENT '用户id',

`order_amount` decimal(20, 2) NOT NULL DEFAULT 0.00 COMMENT '订单总额',

`order_status` int(4) NOT NULL DEFAULT 1 COMMENT '订单状态,1-进行中,2-已完成,3-已取消',

`remark` varchar(100) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci DEFAULT NULL COMMENT '留言',

PRIMARY KEY (`order_id`),

INDEX `idx_order_user_id`(`user_id`),

INDEX `idx_order_order_no`(`order_no`)

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_general_ci COMMENT = '订单表';

-- ----------------------------

-- Table structure for t_order_item_0

-- ----------------------------

DROP TABLE IF EXISTS `t_order_item_0`;

CREATE TABLE `t_order_item_0` (

`order_item_id` bigint(32) NOT NULL COMMENT '主键',

`order_id` bigint(32) NOT NULL COMMENT '订单id',

`product_id` bigint(32) NOT NULL COMMENT '商品id',

`item_price` decimal(20, 2) NOT NULL DEFAULT 0.00 COMMENT '单价',

`total_num` int(4) NOT NULL DEFAULT 1 COMMENT '数量',

`total_price` decimal(20, 2) NOT NULL DEFAULT 0.00 COMMENT '订单总额',

`order_time` datetime(0) NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT '下单时间',

`user_id` bigint(32) NOT NULL COMMENT '用户id',

PRIMARY KEY (`order_item_id`),

INDEX `idx_order_item_order_id`(`order_id`),

INDEX `idx_order_item_user_id`(`user_id`),

INDEX `idx_order_item_product_id`(`product_id`),

INDEX `idx_order_item_order_time`(`order_time`)

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_general_ci COMMENT = '订单明细表';

-- ----------------------------

-- Table structure for t_order_item_1

-- ----------------------------

DROP TABLE IF EXISTS `t_order_item_1`;

CREATE TABLE `t_order_item_1` (

`order_item_id` bigint(32) NOT NULL COMMENT '主键',

`order_id` bigint(32) NOT NULL COMMENT '订单id',

`product_id` bigint(32) NOT NULL COMMENT '商品id',

`item_price` decimal(20, 2) NOT NULL DEFAULT 0.00 COMMENT '单价',

`total_num` int(4) NOT NULL DEFAULT 1 COMMENT '数量',

`total_price` decimal(20, 2) NOT NULL DEFAULT 0.00 COMMENT '订单总额',

`order_time` datetime(0) NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT '下单时间',

`user_id` bigint(32) NOT NULL COMMENT '用户id',

PRIMARY KEY (`order_item_id`),

INDEX `idx_order_item_order_id`(`order_id`),

INDEX `idx_order_item_user_id`(`user_id`),

INDEX `idx_order_item_product_id`(`product_id`),

INDEX `idx_order_item_order_time`(`order_time`)

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_general_ci COMMENT = '订单明细表';

-- ----------------------------

-- Table structure for t_product

-- ----------------------------

DROP TABLE IF EXISTS `t_product`;

CREATE TABLE `t_product` (

`product_id` bigint(32) NOT NULL COMMENT '主键',

`code` varchar(20) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NOT NULL COMMENT '商品编码',

`name` varchar(50) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NOT NULL COMMENT '商品名称',

`desc` varchar(100) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci DEFAULT NULL COMMENT '商品描述',

PRIMARY KEY (`product_id`),

INDEX `idx_user_product_code`(`code`)

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_general_ci COMMENT = '商品表';

-- ----------------------------

-- Table structure for t_user_0

-- ----------------------------

DROP TABLE IF EXISTS `t_user_0`;

CREATE TABLE `t_user_0` (

`user_id` bigint(32) NOT NULL COMMENT '主键',

`id_number` varchar(18) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NOT NULL COMMENT '身份证号码',

`name` varchar(20) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NOT NULL COMMENT '姓名',

`age` int(4) DEFAULT NULL COMMENT '年龄',

`gender` int(2) DEFAULT 1 COMMENT '性别:1-男;2-女',

`birth_date` date DEFAULT NULL COMMENT '出生日期',

PRIMARY KEY (`user_id`)

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_general_ci COMMENT = '用户表';

-- ----------------------------

-- Table structure for t_user_1

-- ----------------------------

DROP TABLE IF EXISTS `t_user_1`;

CREATE TABLE `t_user_1` (

`user_id` bigint(32) NOT NULL COMMENT '主键',

`id_number` varchar(18) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NOT NULL COMMENT '身份证号码',

`name` varchar(20) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NOT NULL COMMENT '姓名',

`age` int(4) DEFAULT NULL COMMENT '年龄',

`gender` int(2) DEFAULT 1 COMMENT '性别:1-男;2-女',

`birth_date` date DEFAULT NULL COMMENT '出生日期',

PRIMARY KEY (`user_id`)

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_general_ci COMMENT = '用户表';

-- ----------------------------

-- Table structure for t_user_address_0

-- ----------------------------

DROP TABLE IF EXISTS `t_user_address_0`;

CREATE TABLE `t_user_address_0` (

`address_id` bigint(32) NOT NULL COMMENT '主键',

`user_id` bigint(32) NOT NULL COMMENT '用户id',

`province` varchar(20) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NOT NULL COMMENT '省',

`city` varchar(20) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NOT NULL COMMENT '市',

`district` varchar(20) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci DEFAULT NULL COMMENT '区',

`detail` varchar(100) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci DEFAULT NULL COMMENT '详细地址',

`sort` int(4) DEFAULT 1 COMMENT '排序',

`gender` int(2) DEFAULT 1 COMMENT '性别:1-男;2-女',

PRIMARY KEY (`address_id`),

INDEX `idx_user_address_user_id`(`user_id`)

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_general_ci COMMENT = '地址表';

-- ----------------------------

-- Table structure for t_user_address_1

-- ----------------------------

DROP TABLE IF EXISTS `t_user_address_1`;

CREATE TABLE `t_user_address_1` (

`address_id` bigint(32) NOT NULL COMMENT '主键',

`user_id` bigint(32) NOT NULL COMMENT '用户id',

`province` varchar(20) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NOT NULL COMMENT '省',

`city` varchar(20) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci NOT NULL COMMENT '市',

`district` varchar(20) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci DEFAULT NULL COMMENT '区',

`detail` varchar(100) CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci DEFAULT NULL COMMENT '详细地址',

`sort` int(4) DEFAULT 1 COMMENT '排序',

`gender` int(2) DEFAULT 1 COMMENT '性别:1-男;2-女',

PRIMARY KEY (`address_id`),

INDEX `idx_user_address_user_id`(`user_id`)

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_general_ci COMMENT = '地址表';- 使用Mybatis-Generator生成相关代码,这里只用用户表来举例,其他的表类似,限于篇幅不再一一列举

package com.hyc.entity;

import lombok.Data;

import java.util.Date;

@Data

public class User {

private Long userId;

private String idNumber;

private String name;

private Integer age;

private Integer gender;

private Date birthDate;

}package com.hyc.dao;

import com.hyc.entity.User;

import com.hyc.vo.ListUserVo;

import org.apache.ibatis.annotations.Param;

import java.util.List;

public interface UserMapper {

int deleteByPrimaryKey(Long userId);

int insert(User record);

int insertSelective(User record);

User selectByPrimaryKey(Long userId);

int updateByPrimaryKeySelective(User record);

int updateByPrimaryKey(User record);

List<User> listByCondition(@Param("condition") ListUserVo query);

int count(@Param("condition") ListUserVo query);

}<?xml version="1.0" encoding="UTF-8" ?>

<!DOCTYPE mapper PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN" "http://mybatis.org/dtd/mybatis-3-mapper.dtd" >

<mapper namespace="com.hyc.dao.UserMapper" >

<resultMap id="BaseResultMap" type="com.hyc.entity.User" >

<id column="user_id" property="userId" jdbcType="BIGINT" />

<result column="id_number" property="idNumber" jdbcType="VARCHAR" />

<result column="name" property="name" jdbcType="VARCHAR" />

<result column="age" property="age" jdbcType="INTEGER" />

<result column="gender" property="gender" jdbcType="INTEGER" />

<result column="birth_date" property="birthDate" jdbcType="DATE" />

</resultMap>

<sql id="Base_Column_List" >

user_id, id_number, name, age, gender, birth_date

</sql>

<sql id="queryListConditon">

<if test="condition.userId != null">

and user_id = #{condition.userId, jdbcType=BIGINT}

</if>

<if test="condition.code != null and condition.code !=''">

and code = #{condition.code, jdbcType=VARCHAR}

</if>

<if test="condition.name != null and condition.name !=''">

and name = #{condition.name, jdbcType=VARCHAR}

</if>

<if test="condition.age != null">

and age = #{condition.age, jdbcType=INTEGER}

</if>

<if test="condition.gender != null">

and gender = #{condition.gender, jdbcType=INTEGER}

</if>

<if test="condition.joinDateStart != null">

and <![CDATA[ join_date >= #{condition.joinDateStart, jdbcType=TIMESTAMP} ]]>

</if>

<if test="condition.joinDateEnd != null">

and <![CDATA[ join_date <= #{condition.joinDateEnd, jdbcType=TIMESTAMP} ]]>

</if>

</sql>

<select id="listByCondition" resultMap="BaseResultMap" parameterType="java.util.Map" >

select

<include refid="Base_Column_List" />

from t_user

<where>

<include refid="queryListConditon"/>

</where>

order by id desc

<if test="condition.start != null and condition.pageSize != null ">

limit #{condition.start},#{condition.pageSize}

</if>

</select>

<select id="count" resultType="java.lang.Integer" parameterType="java.util.Map" >

select

count(1)

from t_user

<where>

<include refid="queryListConditon"/>

</where>

</select>

<select id="selectByPrimaryKey" resultMap="BaseResultMap" parameterType="java.lang.Long" >

select

<include refid="Base_Column_List" />

from t_user

where user_id = #{userId,jdbcType=BIGINT}

</select>

<delete id="deleteByPrimaryKey" parameterType="java.lang.Long" >

delete from t_user

where user_id = #{userId,jdbcType=BIGINT}

</delete>

<insert id="insert" parameterType="com.hyc.entity.User" useGeneratedKeys="true" keyProperty="userId">

insert into t_user (user_id, id_number, name,

age, gender, birth_date

)

values (#{userId,jdbcType=BIGINT}, #{idNumber,jdbcType=VARCHAR}, #{name,jdbcType=VARCHAR},

#{age,jdbcType=INTEGER}, #{gender,jdbcType=INTEGER}, #{birthDate,jdbcType=DATE}

)

</insert>

<insert id="insertSelective" parameterType="com.hyc.entity.User" useGeneratedKeys="true" keyProperty="userId">

insert into t_user

<trim prefix="(" suffix=")" suffixOverrides="," >

<if test="userId != null" >

user_id,

</if>

<if test="idNumber != null" >

id_number,

</if>

<if test="name != null" >

name,

</if>

<if test="age != null" >

age,

</if>

<if test="gender != null" >

gender,

</if>

<if test="birthDate != null" >

birth_date,

</if>

</trim>

<trim prefix="values (" suffix=")" suffixOverrides="," >

<if test="userId != null" >

#{userId,jdbcType=BIGINT},

</if>

<if test="idNumber != null" >

#{idNumber,jdbcType=VARCHAR},

</if>

<if test="name != null" >

#{name,jdbcType=VARCHAR},

</if>

<if test="age != null" >

#{age,jdbcType=INTEGER},

</if>

<if test="gender != null" >

#{gender,jdbcType=INTEGER},

</if>

<if test="birthDate != null" >

#{birthDate,jdbcType=DATE},

</if>

</trim>

</insert>

<update id="updateByPrimaryKeySelective" parameterType="com.hyc.entity.User" >

update t_user

<set >

<if test="idNumber != null" >

id_number = #{idNumber,jdbcType=VARCHAR},

</if>

<if test="name != null" >

name = #{name,jdbcType=VARCHAR},

</if>

<if test="age != null" >

age = #{age,jdbcType=INTEGER},

</if>

<if test="gender != null" >

gender = #{gender,jdbcType=INTEGER},

</if>

<if test="birthDate != null" >

birth_date = #{birthDate,jdbcType=DATE},

</if>

</set>

where user_id = #{userId,jdbcType=BIGINT}

</update>

<update id="updateByPrimaryKey" parameterType="com.hyc.entity.User" >

update t_user

set id_number = #{idNumber,jdbcType=VARCHAR},

name = #{name,jdbcType=VARCHAR},

age = #{age,jdbcType=INTEGER},

gender = #{gender,jdbcType=INTEGER},

birth_date = #{birthDate,jdbcType=DATE}

where user_id = #{userId,jdbcType=BIGINT}

</update>

</mapper>- 写分库和分表的算法,分库分表时必然会根据表里的字段设计分表分库的算法,对于用户表和用户地址表用user_id取模来分库,使用gender来分表。对于订单和订单明细表,使用user_id取模来分库,order_id取模来分表。因此需要用到两个分片算法,根据性别分片和根据id分片。

package com.hyc.dbstrategy;

import com.google.common.collect.Range;

import com.hyc.enums.GenderEnum;

import io.shardingsphere.api.algorithm.sharding.PreciseShardingValue;

import io.shardingsphere.api.algorithm.sharding.RangeShardingValue;

import io.shardingsphere.api.algorithm.sharding.standard.PreciseShardingAlgorithm;

import io.shardingsphere.api.algorithm.sharding.standard.RangeShardingAlgorithm;

import java.util.Collection;

import java.util.LinkedHashSet;

public class GenderShardingAlgorithm implements PreciseShardingAlgorithm<Integer>, RangeShardingAlgorithm<Integer> {

/**

* Sharding.

*

* @param availableTargetNames available data sources or tables's names

* @param shardingValue sharding value

* @return sharding result for data source or table's name

*/

@Override

public String doSharding(Collection<String> availableTargetNames, PreciseShardingValue<Integer> shardingValue) {

String databaseName = availableTargetNames.stream().findFirst().get();

for (String dbName : availableTargetNames) {

if (dbName.endsWith(genderToTableSuffix(shardingValue.getValue()))) {

databaseName = dbName;

}

}

return databaseName;

}

/**

* Sharding.

*

* @param availableTargetNames available data sources or tables's names

* @param shardingValue sharding value

* @return sharding results for data sources or tables's names

*/

@Override

public Collection<String> doSharding(Collection<String> availableTargetNames, RangeShardingValue<Integer> shardingValue) {

Collection<String> dbs = new LinkedHashSet<>(availableTargetNames.size());

Range<Integer> range = (Range<Integer>) shardingValue.getValueRange();

for (Integer i = range.lowerEndpoint(); i <= range.upperEndpoint(); i++) {

for (String dbName : availableTargetNames) {

if (dbName.endsWith(genderToTableSuffix(i))) {

dbs.add(dbName);

}

}

}

return dbs;

}

/**

* 字段与分库的映射关系

*

* @param gender

* @return

*/

private String genderToTableSuffix(Integer gender) {

return gender.equals(GenderEnum.MALE.getCode()) ? "0" : "1";

}

}

package com.hyc.dbstrategy;

import com.google.common.collect.Range;

import io.shardingsphere.api.algorithm.sharding.PreciseShardingValue;

import io.shardingsphere.api.algorithm.sharding.RangeShardingValue;

import io.shardingsphere.api.algorithm.sharding.standard.PreciseShardingAlgorithm;

import io.shardingsphere.api.algorithm.sharding.standard.RangeShardingAlgorithm;

import java.util.Collection;

import java.util.LinkedHashSet;

public class IdShardingAlgorithm implements PreciseShardingAlgorithm<Long>, RangeShardingAlgorithm<Long> {

/**

* Sharding.

*

* @param availableTargetNames available data sources or tables's names

* @param shardingValue sharding value

* @return sharding result for data source or table's name

*/

@Override

public String doSharding(Collection<String> availableTargetNames, PreciseShardingValue<Long> shardingValue) {

String table = availableTargetNames.stream().findFirst().get();

for (String tableName : availableTargetNames) {

if (tableName.endsWith(idToTableSuffix(shardingValue.getValue()))) {

table = tableName;

}

}

return table;

}

/**

* Sharding.

*

* @param availableTargetNames available data sources or tables's names

* @param shardingValue sharding value

* @return sharding results for data sources or tables's names

*/

@Override

public Collection<String> doSharding(Collection<String> availableTargetNames, RangeShardingValue<Long> shardingValue) {

Collection<String> dbs = new LinkedHashSet<>(availableTargetNames.size());

Range<Long> range = (Range<Long>) shardingValue.getValueRange();

for (long i = range.lowerEndpoint(); i <= range.upperEndpoint(); i++) {

for (String dbName : availableTargetNames) {

if (dbName.endsWith(idToTableSuffix(i))) {

dbs.add(dbName);

}

}

}

return dbs;

}

/**

* 字段与分表的映射关系

*

* @param id

* @return 表后缀(201906、201907等)

*/

private String idToTableSuffix(Long id) {

return String.valueOf(id % 2);

}

}

- 编写ID生成算法,在数据分片的场景中使用MySQL主键自增就不太合适了,因此我使用了snowflake算法来生成主键,代码是从网上搜的,ShardingSphere支持我们制定主键生成,只需要实现KeyGenerator接口即可

package com.hyc.keygen;

import io.shardingsphere.core.keygen.KeyGenerator;

import java.util.Random;

public final class SnowflakeShardingKeyGenerator implements KeyGenerator {

/**

* 开始时间截 (2015-01-01)

*/

private final long twepoch = 1420041600000L;

/**

* 机器id所占的位数

*/

private final long workerIdBits = 5L;

/**

* 数据标识id所占的位数

*/

private final long dataCenterIdBits = 5L;

/**

* 支持的最大机器id,结果是31 (这个移位算法可以很快的计算出几位二进制数所能表示的最大十进制数)

*/

private final long maxWorkerId = -1L ^ (-1L << workerIdBits);

/**

* 支持的最大数据标识id,结果是31

*/

private final long maxDataCenterId = -1L ^ (-1L << dataCenterIdBits);

/**

* 序列在id中占的位数

*/

private final long sequenceBits = 12L;

/**

* 机器ID向左移12位

*/

private final long workerIdShift = sequenceBits;

/**

* 数据标识id向左移17位(12+5)

*/

private final long datacenterIdShift = sequenceBits + workerIdBits;

/**

* 时间截向左移22位(5+5+12)

*/

private final long timestampLeftShift = sequenceBits + workerIdBits + dataCenterIdBits;

/**

* 生成序列的掩码,这里为4095 (0b111111111111=0xfff=4095)

*/

private final long sequenceMask = -1L ^ (-1L << sequenceBits);

/**

* 工作机器ID(0~31)

*/

private long workerId;

/**

* 数据中心ID(0~31)

*/

private long dataCenterId;

/**

* 毫秒内序列(0~4095)

*/

private long sequence = 0L;

/**

* 上次生成ID的时间截

*/

private long lastTimestamp = -1L;

private Random random = new Random();

/**

* 构造函数

*

* @param workerId 工作ID (0~31)

* @param dataCenterId 数据中心ID (0~31)

*/

public SnowflakeShardingKeyGenerator(long workerId, long dataCenterId) {

if (workerId > maxWorkerId || workerId < 0) {

throw new IllegalArgumentException(String.format("worker Id can't be greater than %d or less than 0", maxWorkerId));

}

if (dataCenterId > maxDataCenterId || dataCenterId < 0) {

throw new IllegalArgumentException(String.format("datacenter Id can't be greater than %d or less than 0", maxDataCenterId));

}

this.workerId = workerId;

this.dataCenterId = dataCenterId;

}

/**

* 阻塞到下一个毫秒,直到获得新的时间戳

*

* @param lastTimestamp 上次生成ID的时间截

* @return 当前时间戳

*/

private long tilNextMillis(long lastTimestamp) {

long timestamp = timeGen();

while (timestamp <= lastTimestamp) {

timestamp = timeGen();

}

return timestamp;

}

/**

* 返回以毫秒为单位的当前时间

*

* @return 当前时间(毫秒)

*/

private long timeGen() {

return System.currentTimeMillis();

}

/**

* Generate key.

*

* @return generated key

*/

@Override

public Number generateKey() {

long timestamp = timeGen();

//如果当前时间小于上一次ID生成的时间戳,说明系统时钟回退过这个时候应当抛出异常

if (timestamp < lastTimestamp) {

throw new RuntimeException(

String.format("Clock moved backwards. Refusing to generate id for %d milliseconds", lastTimestamp - timestamp));

}

//如果是同一时间生成的,则进行毫秒内序列

if (lastTimestamp == timestamp) {

sequence = (sequence + 1) & sequenceMask;

//毫秒内序列溢出

if (sequence == 0) {

//阻塞到下一个毫秒,获得新的时间戳

timestamp = tilNextMillis(lastTimestamp);

}

}

//时间戳改变,毫秒内序列重置

else {

sequence = 0L;

}

//上次生成ID的时间截

lastTimestamp = timestamp;

long result = ((timestamp - twepoch) << timestampLeftShift)

| (dataCenterId << datacenterIdShift)

| (workerId << workerIdShift)

| sequence;

int randomNum = random.nextInt(10);

//移位并通过或运算拼到一起组成64位的ID

return result + randomNum;

}

public static void main(String[] args) {

SnowflakeShardingKeyGenerator generator = new SnowflakeShardingKeyGenerator(0,0);

for (int i = 0; i < 20; i++) {

System.out.println(generator.generateKey());

}

}

}

- 配置数据库连接、MyBatis集成和编写属性文件等等

#data source0

sharding.ds0.type=com.alibaba.druid.pool.DruidDataSource

sharding.ds0.jdbcUrl=jdbc:mysql://127.0.0.1:3306/test1?serverTimezone=GMT%2B8&useSSL=false

sharding.ds0.username=root

sharding.ds0.password=123456

#data source1

sharding.ds1.type=com.alibaba.druid.pool.DruidDataSource

sharding.ds1.jdbcUrl=jdbc:mysql://127.0.0.1:3306/test2?serverTimezone=GMT%2B8&useSSL=false

sharding.ds1.username=root

sharding.ds1.password=123456

#sql日志

logging.level.com.hyc.dao=debug

#actuator端口

management.server.port=9001

#开放所有页面节点 默认只开启了health、info两个节点

management.endpoints.web.exposure.include=*

#显示健康具体信息 默认不会显示详细信息

management.endpoint.health.show-details=always

snow.work.id=1

snow.datacenter.id=2

mybatis.configuration.map-underscore-to-camel-case=true

mybatis.type-aliases-package=com.hyc.entity

mybatis.mapper-locations=classpath:mappers/*.xml

package com.hyc.props;

import lombok.Data;

import org.springframework.boot.context.properties.ConfigurationProperties;

@Data

@ConfigurationProperties(prefix = "sharding.ds0")

public class FirstDsProp {

private String jdbcUrl;

private String username;

private String password;

private String type;

}

package com.hyc.props;

import lombok.Data;

import org.springframework.boot.context.properties.ConfigurationProperties;

@Data

@ConfigurationProperties(prefix = "sharding.ds1")

public class SecondDsProp {

private String jdbcUrl;

private String username;

private String password;

private String type;

}

- 下面就是最核心的部分了,数据分片相关配置,需要配置的东西很多,包括:各个表的配置规则TableRuleConfiguration、数据源DataSource,这部分可以参考官方文档说明来配置

package com.hyc.config;

import com.alibaba.druid.filter.Filter;

import com.alibaba.druid.filter.stat.StatFilter;

import com.alibaba.druid.pool.DruidDataSource;

import com.alibaba.druid.support.http.StatViewServlet;

import com.google.common.collect.Lists;

import com.hyc.dbstrategy.GenderShardingAlgorithm;

import com.hyc.dbstrategy.IdShardingAlgorithm;

import com.hyc.keygen.SnowflakeShardingKeyGenerator;

import com.hyc.props.FirstDsProp;

import com.hyc.props.SecondDsProp;

import com.hyc.util.DataSourceUtil;

import io.shardingsphere.api.config.rule.ShardingRuleConfiguration;

import io.shardingsphere.api.config.rule.TableRuleConfiguration;

import io.shardingsphere.api.config.strategy.StandardShardingStrategyConfiguration;

import io.shardingsphere.shardingjdbc.api.ShardingDataSourceFactory;

import org.apache.ibatis.session.SqlSessionFactory;

import org.mybatis.spring.SqlSessionFactoryBean;

import org.mybatis.spring.SqlSessionTemplate;

import org.mybatis.spring.annotation.MapperScan;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.beans.factory.annotation.Qualifier;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.boot.context.properties.EnableConfigurationProperties;

import org.springframework.boot.web.servlet.ServletRegistrationBean;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.context.annotation.Primary;

import org.springframework.core.env.Environment;

import org.springframework.core.io.support.PathMatchingResourcePatternResolver;

import org.springframework.jdbc.datasource.DataSourceTransactionManager;

import org.springframework.transaction.annotation.EnableTransactionManagement;

import javax.sql.DataSource;

import java.sql.SQLException;

import java.util.HashMap;

import java.util.Map;

import java.util.Properties;

import java.util.concurrent.ConcurrentHashMap;

@Configuration

@EnableConfigurationProperties({FirstDsProp.class, SecondDsProp.class})

@EnableTransactionManagement(proxyTargetClass = true)

@MapperScan(basePackages = "com.hyc.dao", sqlSessionTemplateRef = "sqlSessionTemplate")

public class DataSourceConfig {

@Value("${snow.work.id:0}")

private Long workId;

@Value("${snow.datacenter.id:0}")

private Long datacenterId;

@Autowired

private Environment env;

/**

* druid数据源1

*

* @param firstDSProp

* @return

*/

@Bean("ds0")

public DataSource ds0(FirstDsProp firstDSProp) {

Map<String, Object> dsMap = new HashMap<>();

dsMap.put("type", firstDSProp.getType());

dsMap.put("url", firstDSProp.getJdbcUrl());

dsMap.put("username", firstDSProp.getUsername());

dsMap.put("password", firstDSProp.getPassword());

DruidDataSource ds = (DruidDataSource) DataSourceUtil.buildDataSource(dsMap);

ds.setProxyFilters(Lists.newArrayList(statFilter()));

// 每个分区最大的连接数

ds.setMaxActive(20);

// 每个分区最小的连接数

ds.setMinIdle(5);

return ds;

}

/**

* druid数据源2

*

* @param secondDsProp

* @return

*/

@Bean("ds1")

public DataSource ds1(SecondDsProp secondDsProp) {

Map<String, Object> dsMap = new HashMap<>();

dsMap.put("type", secondDsProp.getType());

dsMap.put("url", secondDsProp.getJdbcUrl());

dsMap.put("username", secondDsProp.getUsername());

dsMap.put("password", secondDsProp.getPassword());

DruidDataSource ds = (DruidDataSource) DataSourceUtil.buildDataSource(dsMap);

ds.setProxyFilters(Lists.newArrayList(statFilter()));

// 每个分区最大的连接数

ds.setMaxActive(20);

// 每个分区最小的连接数

ds.setMinIdle(5);

return ds;

}

@Bean

public Filter statFilter() {

StatFilter filter = new StatFilter();

filter.setSlowSqlMillis(5000);

filter.setLogSlowSql(true);

filter.setMergeSql(true);

return filter;

}

@Bean

public ServletRegistrationBean statViewServlet() {

//创建servlet注册实体

ServletRegistrationBean servletRegistrationBean = new ServletRegistrationBean(new StatViewServlet(), "/druid/*");

//设置ip白名单

servletRegistrationBean.addInitParameter("allow", "127.0.0.1");

//设置控制台管理用户

servletRegistrationBean.addInitParameter("loginUsername", "admin");

servletRegistrationBean.addInitParameter("loginPassword", "123456");

//是否可以重置数据

servletRegistrationBean.addInitParameter("resetEnable", "false");

return servletRegistrationBean;

}

/**

* shardingjdbc数据源

*

* @return

* @throws SQLException

*/

@Bean("dataSource")

public DataSource dataSource(@Qualifier("ds0") DataSource ds0, @Qualifier("ds1") DataSource ds1) throws SQLException {

// 配置真实数据源

Map<String, DataSource> dataSourceMap = new HashMap<>();

dataSourceMap.put("ds0", ds0);

dataSourceMap.put("ds1", ds1);

// 配置分片规则

ShardingRuleConfiguration shardingRuleConfig = new ShardingRuleConfiguration();

shardingRuleConfig.getTableRuleConfigs().add(userRuleConfig());

shardingRuleConfig.getTableRuleConfigs().add(addressRuleConfig());

shardingRuleConfig.getTableRuleConfigs().add(orderRuleConfig());

shardingRuleConfig.getTableRuleConfigs().add(orderItemRuleConfig());

shardingRuleConfig.setDefaultDatabaseShardingStrategyConfig(new StandardShardingStrategyConfiguration("user_id", new IdShardingAlgorithm(), new IdShardingAlgorithm()));

shardingRuleConfig.getBindingTableGroups().add("t_user, t_user_address");

shardingRuleConfig.getBindingTableGroups().add("t_order, t_order_item");

shardingRuleConfig.getBroadcastTables().add("t_product");

Properties p = new Properties();

p.setProperty("sql.show",Boolean.TRUE.toString());

// 获取数据源对象

DataSource dataSource = ShardingDataSourceFactory.createDataSource(dataSourceMap, shardingRuleConfig, new ConcurrentHashMap(), p);

return dataSource;

}

/**

* 需要手动配置事务管理器

* @param dataSource

* @return

*/

@Bean

public DataSourceTransactionManager transactitonManager(@Qualifier("dataSource") DataSource dataSource){

return new DataSourceTransactionManager(dataSource);

}

@Bean("sqlSessionFactory")

@Primary

public SqlSessionFactory sqlSessionFactory(@Qualifier("dataSource") DataSource dataSource) throws Exception {

SqlSessionFactoryBean bean = new SqlSessionFactoryBean();

bean.setDataSource(dataSource);

bean.setMapperLocations(new PathMatchingResourcePatternResolver().getResources("classpath:mappers/*.xml"));

return bean.getObject();

}

@Bean("sqlSessionTemplate")

@Primary

public SqlSessionTemplate sqlSessionTemplate(@Qualifier("sqlSessionFactory") SqlSessionFactory sqlSessionFactory) throws Exception {

return new SqlSessionTemplate(sqlSessionFactory);

}

private TableRuleConfiguration userRuleConfig() {

TableRuleConfiguration tableRuleConfig = new TableRuleConfiguration();

tableRuleConfig.setLogicTable("t_user");

tableRuleConfig.setActualDataNodes("ds${0..1}.t_user_${0..1}");

tableRuleConfig.setKeyGeneratorColumnName("user_id");

tableRuleConfig.setKeyGenerator(new SnowflakeShardingKeyGenerator(workId, datacenterId));

tableRuleConfig.setDatabaseShardingStrategyConfig(new StandardShardingStrategyConfiguration("user_id", new IdShardingAlgorithm(), new IdShardingAlgorithm()));

tableRuleConfig.setTableShardingStrategyConfig(new StandardShardingStrategyConfiguration("gender", new GenderShardingAlgorithm(), new GenderShardingAlgorithm()));

return tableRuleConfig;

}

private TableRuleConfiguration addressRuleConfig() {

TableRuleConfiguration tableRuleConfig = new TableRuleConfiguration();

tableRuleConfig.setLogicTable("t_user_address");

tableRuleConfig.setActualDataNodes("ds${0..1}.t_user_address_${0..1}");

tableRuleConfig.setKeyGeneratorColumnName("address_id");

tableRuleConfig.setKeyGenerator(new SnowflakeShardingKeyGenerator(workId, datacenterId));

tableRuleConfig.setDatabaseShardingStrategyConfig(new StandardShardingStrategyConfiguration("user_id", new IdShardingAlgorithm(), new IdShardingAlgorithm()));

tableRuleConfig.setTableShardingStrategyConfig(new StandardShardingStrategyConfiguration("gender", new GenderShardingAlgorithm(), new GenderShardingAlgorithm()));

return tableRuleConfig;

}

private TableRuleConfiguration orderRuleConfig() {

TableRuleConfiguration tableRuleConfig = new TableRuleConfiguration();

tableRuleConfig.setLogicTable("t_order");

tableRuleConfig.setActualDataNodes("ds${0..1}.t_order_${0..1}");

tableRuleConfig.setKeyGeneratorColumnName("order_id");

tableRuleConfig.setKeyGenerator(new SnowflakeShardingKeyGenerator(workId, datacenterId));

tableRuleConfig.setDatabaseShardingStrategyConfig(new StandardShardingStrategyConfiguration("user_id", new IdShardingAlgorithm(), new IdShardingAlgorithm()));

tableRuleConfig.setTableShardingStrategyConfig(new StandardShardingStrategyConfiguration("order_id", new IdShardingAlgorithm(), new IdShardingAlgorithm()));

return tableRuleConfig;

}

private TableRuleConfiguration orderItemRuleConfig() {

TableRuleConfiguration tableRuleConfig = new TableRuleConfiguration();

tableRuleConfig.setLogicTable("t_order_item");

tableRuleConfig.setActualDataNodes("ds${0..1}.t_order_item_${0..1}");

tableRuleConfig.setKeyGeneratorColumnName("order_item_id");

tableRuleConfig.setKeyGenerator(new SnowflakeShardingKeyGenerator(workId, datacenterId));

tableRuleConfig.setDatabaseShardingStrategyConfig(new StandardShardingStrategyConfiguration("user_id", new IdShardingAlgorithm(), new IdShardingAlgorithm()));

tableRuleConfig.setTableShardingStrategyConfig(new StandardShardingStrategyConfiguration("order_id", new IdShardingAlgorithm(), new IdShardingAlgorithm()));

return tableRuleConfig;

}

}

package com.hyc.util;

import lombok.extern.slf4j.Slf4j;

import org.springframework.boot.jdbc.DataSourceBuilder;

import javax.sql.DataSource;

import java.util.Map;

@Slf4j

public class DataSourceUtil {

private static final String DATASOURCE_TYPE_DEFAULT = "com.zaxxer.hikari.HikariDataSource";

public static DataSource buildDataSource(Map<String, Object> dataSourceMap) {

Object type = dataSourceMap.get("type");

if (type == null) {

type = DATASOURCE_TYPE_DEFAULT;

}

try {

Class<? extends DataSource> dataSourceType;

dataSourceType = (Class<? extends DataSource>) Class.forName((String) type);

//String driverClassName = dataSourceMap.get("driver").toString();

String url = dataSourceMap.get("url").toString();

String username = dataSourceMap.get("username").toString();

String password = dataSourceMap.get("password").toString();

// 自定义DataSource配置

DataSourceBuilder factory = DataSourceBuilder.create().url(url).username(username).password(password).type(dataSourceType);

return factory.build();

} catch (Exception e) {

log.error("构建数据源" + type + "出错", e);

}

return null;

}

}

- 启动类

package com.hyc;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

import org.springframework.boot.autoconfigure.jdbc.DataSourceAutoConfiguration;

@SpringBootApplication(exclude={DataSourceAutoConfiguration.class})

public class Shard3ManualApplication {

public static void main(String[] args) {

SpringApplication.run(Shard3ManualApplication.class, args);

}

}

- 编写controller和service

package com.hyc.service;

import com.hyc.dao.*;

import com.hyc.entity.*;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.stereotype.Service;

import org.springframework.transaction.annotation.Transactional;

import java.util.HashMap;

import java.util.Map;

@Service

public class BussinessService {

@Autowired

private UserMapper userMapper;

@Autowired

private UserAddressMapper addressMapper;

@Autowired

private OrderMapper orderMapper;

@Autowired

private OrderItemMapper orderItemMapper;

@Autowired

private ProductMapper productMapper;

@Transactional

public void saveAll(User user, UserAddress address, Order order, OrderItem orderItem) {

userMapper.insertSelective(user);

address.setUserId(user.getUserId());

addressMapper.insertSelective(address);

order.setUserId(user.getUserId());

orderMapper.insertSelective(order);

orderItem.setOrderId(order.getOrderId());

orderItem.setUserId(user.getUserId());

orderItemMapper.insertSelective(orderItem);

}

@Transactional

public void saveProduct(Product product) {

productMapper.insertSelective(product);

}

public Map<String, Object> findAll() {

Map<String, Object> result = new HashMap<>();

Long userId = 594099642262884355L;

User user = userMapper.selectByPrimaryKey(userId);

result.put("user", user);

UserAddress address = addressMapper.selectByUserId(userId);

result.put("address", address);

Order order = orderMapper.selectByUserId(userId);

result.put("order", order);

OrderItem orderItem = orderItemMapper.selectByOrderId(order.getOrderId());

result.put("orderItem", orderItem);

return result;

}

}

package com.hyc.controller;

import cn.hutool.core.date.DateUtil;

import com.alibaba.fastjson.JSON;

import com.hyc.entity.*;

import com.hyc.enums.GenderEnum;

import com.hyc.enums.OrderStatusEnum;

import com.hyc.service.BussinessService;

import com.hyc.util.SnowflakeIdGenerator;

import lombok.extern.slf4j.Slf4j;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.beans.propertyeditors.CustomDateEditor;

import org.springframework.web.bind.WebDataBinder;

import org.springframework.web.bind.annotation.GetMapping;

import org.springframework.web.bind.annotation.InitBinder;

import org.springframework.web.bind.annotation.RestController;

import org.springframework.web.context.request.WebRequest;

import java.math.BigDecimal;

import java.text.DateFormat;

import java.text.SimpleDateFormat;

import java.util.Date;

import java.util.HashMap;

import java.util.Map;

@Slf4j

@RestController

public class BussinessController {

@Autowired

private BussinessService bussinessService;

@Autowired

private SnowflakeIdGenerator snowflakeIdGenerator;

@InitBinder

public void initBinder(WebDataBinder binder, WebRequest request) {

//转换日期

DateFormat dateFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

binder.registerCustomEditor(Date.class, new CustomDateEditor(dateFormat, true));

}

@GetMapping("/buss/createProduct")

public String createProduct() {

for (int i = 1; i < 10; i++) {

Product product = new Product();

product.setProductId(snowflakeIdGenerator.nextId());

product.setCode("P00" + i);

product.setName("商品" + i);

product.setDesc("商品描述" + i);

bussinessService.saveProduct(product);

}

return "成功";

}

@GetMapping("/buss/create")

public String create() {

for (int i = 1; i <= 21; i++) {

User user = new User();

user.setName("王大毛" + i);

user.setGender(GenderEnum.MALE.getCode());

user.setAge(20 + i);

user.setBirthDate(DateUtil.parseDate("1989-08-16"));

user.setIdNumber("4101231989691" + i);

UserAddress address = new UserAddress();

address.setCity("某某市");

address.setDetail("某某街道");

address.setDistrict("某某区");

address.setProvince("江苏省");

address.setSort(1);

address.setGender(user.getGender());

Order order = new Order();

order.setOrderAmount(new BigDecimal(100));

order.setOrderNo("ORDER_00" + i);

order.setOrderStatus(OrderStatusEnum.PROCESSING.getCode());

order.setRemark("测试");

OrderItem orderItem = new OrderItem();

orderItem.setItemPrice(new BigDecimal(5));

orderItem.setOrderTime(DateUtil.parse("2019-06-27 17:50:05"));

orderItem.setProductId(593860920283758592L);

orderItem.setTotalNum(20);

orderItem.setTotalPrice(new BigDecimal(100));

bussinessService.saveAll(user, address, order, orderItem);

}

for (int i = 1; i <= 21; i++) {

User user = new User();

user.setName("王大莉" + i);

user.setGender(GenderEnum.FEMALE.getCode());

user.setAge(20 + i);

user.setBirthDate(DateUtil.parseDate("1989-08-16"));

user.setIdNumber("1101231989691" + i);

UserAddress address = new UserAddress();

address.setCity("某某市");

address.setDetail("某某街道");

address.setDistrict("某某区");

address.setProvince("江苏省");

address.setSort(1);

address.setGender(user.getGender());

Order order = new Order();

order.setOrderAmount(new BigDecimal(100));

order.setOrderNo("ORDER_00" + i);

order.setOrderStatus(OrderStatusEnum.PROCESSING.getCode());

order.setRemark("测试");

OrderItem orderItem = new OrderItem();

orderItem.setItemPrice(new BigDecimal(5));

orderItem.setOrderTime(DateUtil.parse("2019-06-27 17:50:05"));

orderItem.setProductId(593860924259958784L);

orderItem.setTotalNum(20);

orderItem.setTotalPrice(new BigDecimal(100));

bussinessService.saveAll(user, address, order, orderItem);

}

return "成功";

}

@GetMapping("/buss/all")

public String findAll(){

Map<String,Object> result = new HashMap<>();

result = bussinessService.findAll();

return JSON.toJSONString(result);

}

}

- 测试

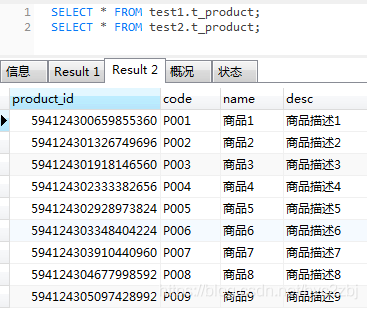

请求http://localhost:8080/buss/createProduct创建商品,可以发现商品表作为广播表在两个库中已经插入了两份一模一样的数据

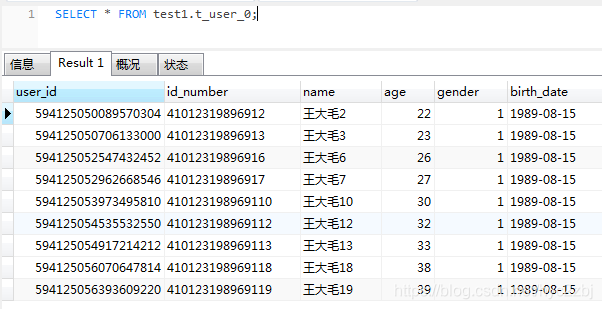

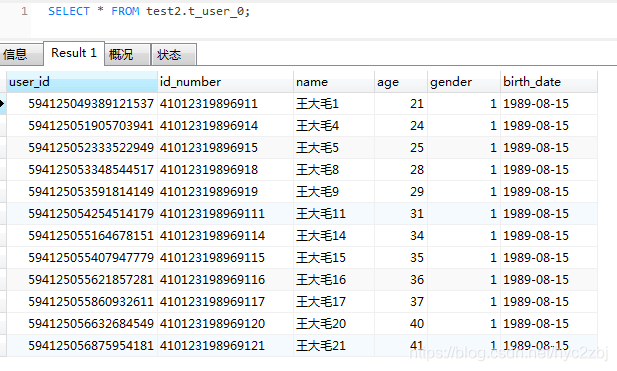

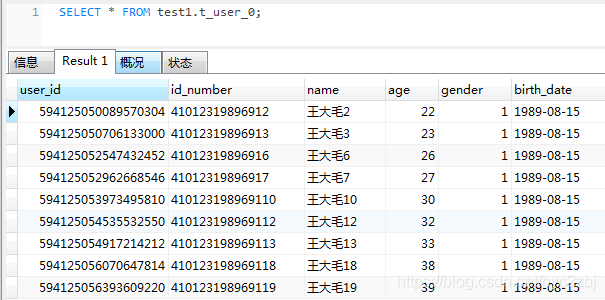

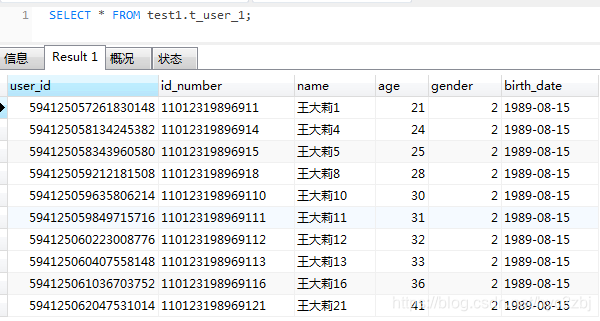

请求http://localhost:8080/buss/create,创建用户、订单等,观察数据库,以用户表举例,用户表按照user_id的奇偶性分表保存在了两个库里

也按照性别的不同存在了不同的分表里面

按照我们的预想分库分表,还可以做查询和事务等相关测试,这里不一一列举了

501

501

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?