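Compared with the other web crawler tools I tried over the past two days, the WebMagic framework is more flexible and easier to use. It supports several ways of parsing a page (XPath, CSS selectors, regular expressions and JsonPath) and is more fault-tolerant.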

Enough talk. The notes are all in the code, because longer write-ups are harder for me to reread later.

1. Add dependencies

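```xml
<dependency>
    <groupId>us.codecraft</groupId>
    <artifactId>webmagic-core</artifactId>
    <version>0.7.3</version>
</dependency>
<!-- JsonFilePipeline, used in the test code below, ships in webmagic-extension -->
<dependency>
    <groupId>us.codecraft</groupId>
    <artifactId>webmagic-extension</artifactId>
    <version>0.7.3</version>
</dependency>
<!-- The OCR methods further down also need tess4j (groupId net.sourceforge.tess4j) -->
```

2. Implement the PageProcessor interface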

```java
import us.codecraft.webmagic.Page;
import us.codecraft.webmagic.Site;
import us.codecraft.webmagic.processor.PageProcessor;
import us.codecraft.webmagic.selector.Html;
import us.codecraft.webmagic.selector.Selectable;

import java.util.ArrayList;
import java.util.List;

public class PCZQProcessor implements PageProcessor {

    /**
     * Crawler settings: retry a failed download up to 3 times, wait 5 s between
     * requests, and send a desktop-browser User-Agent.
     */
    private Site site = Site.me()
            .setRetryTimes(3)
            .setSleepTime(5000)
            .addHeader("User-Agent", "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.122 Safari/537.36");

    // Postings collected from every list page. A plain ArrayList is enough because the
    // spider runs with thread(1); use a synchronized list if you add more threads.
    private List<JobDetails> jobs = new ArrayList<>();

    public List<JobDetails> getJobs() {
        return jobs;
    }

    public void setJobs(List<JobDetails> jobs) {
        this.jobs = jobs;
    }

    @Override
    public Site getSite() {
        return site;
    }

    @Override
    public void process(Page page) {
        Html html = page.getHtml();
        // Every <dl> inside the company-list widget is one job posting
        Selectable selectable = html.xpath("//div[@data-widget='app/ms_v2/wanted/list.js#companyAjaxBid']/dl");
        for (Selectable node : selectable.nodes()) {
            // JobDetails (not shown) has chainable setters that return this
            JobDetails jobDetails = new JobDetails();
            jobDetails.setPost_id(node.xpath("//dt/a/@post_id").get())
                    .setPost_url(node.xpath("//dt/a/@post_url").get())
                    .setCompany_id(node.xpath("//dt/a/@company_id").get())
                    .setPuid(node.xpath("//dt/a/@puid").get())
                    .setTitle(node.xpath("//dt/a/text()").get())
                    .setCompany_name(node.xpath("//div[@class='clearfix']/p/text()").get())
                    .setAddress(node.xpath("//dd[@class='pay']/text()").get())
                    .setTime(node.xpath("//dd[@class='pub-time']/text()").get());
            System.out.println(jobDetails);
            jobs.add(jobDetails);
        }

        // If the list is paginated, collect the link of every page
        List<String> moreurls = html.xpath("//*[@id=\"list-job-id\"]/div[13]").links().all();
        // Queue those pages for crawling (this schedules requests, it does not store results)
        page.addTargetRequests(moreurls);
    }
}
```
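Pagination works because `page.addTargetRequests(...)` only hands URLs to WebMagic's scheduler; the default scheduler behaves roughly like an in-memory queue plus a hash-set duplicate filter, so a page link that appears on every list page is fetched once. The sketch below models that idea with plain Java collections. `CrawlFrontier` is a hypothetical class for illustration, not WebMagic's implementation.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical, simplified model of a crawl queue with duplicate removal.
class CrawlFrontier {
    private final Deque<String> queue = new ArrayDeque<>();
    private final Set<String> seen = new HashSet<>();

    /** Enqueues url unless it is empty or was seen before; returns true if it was added. */
    boolean push(String url) {
        if (url == null || url.isEmpty() || !seen.add(url)) {
            return false;
        }
        queue.addLast(url);
        return true;
    }

    /** Counterpart of addTargetRequests(list): offer every link, duplicates are dropped. */
    void pushAll(List<String> urls) {
        for (String url : urls) {
            push(url);
        }
    }

    /** Next URL to fetch in FIFO order, or null when nothing is left. */
    String poll() {
        return queue.pollFirst();
    }

    public static void main(String[] args) {
        CrawlFrontier frontier = new CrawlFrontier();
        frontier.push("list?page=1");
        List<String> crawled = new ArrayList<>();
        String url;
        while ((url = frontier.poll()) != null) {
            crawled.add(url);
            // Pretend every list page links to pages 1..3
            frontier.pushAll(List.of("list?page=1", "list?page=2", "list?page=3"));
        }
        System.out.println(crawled); // [list?page=1, list?page=2, list?page=3]
    }
}
```

Because seen URLs are never re-queued, the loop ends on its own even though every page links back to page 1.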

3. Test endpoints

PCZQProcessor pczqProcessor = new PCZQProcessor();

JobDetailProcessor jobDetailProcessor = new JobDetailProcessor();

@RequestMapping("magic")

public String magic() throws IOException {

String url = "://beijing.gj.com/qita/";

Spider.create(pczqProcessor)

.addUrl(url)

.addPipeline(new JsonFilePipeline("outputfile/"))

.thread(1)

.run();

return pczqProcessor.getJobs().toString();

}

@RequestMapping("detail")

public String detail()

{

String url = "://beijing.gj.com/qita/3624203469x.htm";

Spider.create(jobDetailProcessor)

.addUrl(url)

.addPipeline(new JsonFilePipeline("outputfile/"))

.thread(1)

.run();

return pczqProcessor.getJobs().toString();

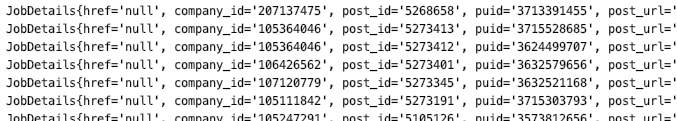

}4.浏览器测试运行http://localhost:9010/magic

The page is parsed successfully.

Another crawling and parsing class (it also contains methods that download an image and recognize its content with OCR):
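`convertImage` in this class delegates to tess4j's `ImageHelper` to turn the phone-number image gray and enlarge it 5x before OCR. To show what those two steps amount to, here is a stdlib-only approximation with `java.awt`; `OcrPreprocess` and its methods are hypothetical names, and the output is not claimed to match `ImageHelper` pixel for pixel.

```java
import java.awt.Graphics2D;
import java.awt.RenderingHints;
import java.awt.image.BufferedImage;

// Hypothetical helper, not part of tess4j: grayscale first, then enlarge.
final class OcrPreprocess {
    private OcrPreprocess() {}

    /** Copies the image onto an 8-bit grayscale canvas; Java2D performs the color conversion. */
    static BufferedImage toGrayscale(BufferedImage src) {
        BufferedImage gray = new BufferedImage(src.getWidth(), src.getHeight(), BufferedImage.TYPE_BYTE_GRAY);
        Graphics2D g = gray.createGraphics();
        try {
            g.drawImage(src, 0, 0, null);
        } finally {
            g.dispose();
        }
        return gray;
    }

    /** Enlarges a grayscale image by an integer factor using bicubic interpolation. */
    static BufferedImage scale(BufferedImage gray, int factor) {
        if (factor < 1) {
            throw new IllegalArgumentException("factor must be >= 1");
        }
        int w = gray.getWidth() * factor;
        int h = gray.getHeight() * factor;
        BufferedImage out = new BufferedImage(w, h, BufferedImage.TYPE_BYTE_GRAY);
        Graphics2D g = out.createGraphics();
        try {
            g.setRenderingHint(RenderingHints.KEY_INTERPOLATION, RenderingHints.VALUE_INTERPOLATION_BICUBIC);
            g.drawImage(gray, 0, 0, w, h, null);
        } finally {
            g.dispose();
        }
        return out;
    }

    /** Same order as convertImage(): grayscale, then upscale (the class uses a factor of 5). */
    static BufferedImage prepare(BufferedImage src, int factor) {
        return scale(toGrayscale(src), factor);
    }

    public static void main(String[] args) {
        BufferedImage sample = new BufferedImage(40, 12, BufferedImage.TYPE_INT_RGB);
        BufferedImage ready = prepare(sample, 5);
        System.out.println(ready.getWidth() + "x" + ready.getHeight()); // 200x60
    }
}
```

Enlarging gives the OCR engine more pixels per digit, which is the reason both versions upscale small phone-number images.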

```java
import com.alibaba.fastjson.JSONObject;
import net.sourceforge.tess4j.Tesseract;
import net.sourceforge.tess4j.util.ImageHelper;
import us.codecraft.webmagic.Page;
import us.codecraft.webmagic.Site;
import us.codecraft.webmagic.processor.PageProcessor;
import us.codecraft.webmagic.selector.Html;

import javax.imageio.ImageIO;
import java.awt.image.BufferedImage;
import java.io.ByteArrayOutputStream;
import java.io.File;
import java.io.FileOutputStream;
import java.io.InputStream;
import java.net.HttpURLConnection;
import java.net.URL;
import java.util.HashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class JobDetailProcessor implements PageProcessor {

    // Same settings as the list crawler, with a longer 8 s pause between requests
    private Site site = Site.me()
            .setRetryTimes(3)
            .setSleepTime(8000)
            .addHeader("User-Agent", "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.122 Safari/537.36");

    // Fields parsed from one detail page
    private Map<String, Object> details = new HashMap<>();

    public Map<String, Object> getDetails() {
        return details;
    }

    public void setDetails(Map<String, Object> details) {
        this.details = details;
    }

    @Override
    public Site getSite() {
        return site;
    }

    @Override
    public void process(Page page) {
        Html html = page.getHtml();
        details.put("title", html.xpath("//*[@id=\"wrapper\"]/div[4]/div[1]/div[1]/h1/text()").get());

        // Keep only the text after the last colon of the "updated" label
        String update = html.xpath("//*[@id=\"wrapper\"]/div[4]/div[1]/div[1]/p/span[1]/text()").get();
        if (update != null) {
            String[] strs = update.split(":");
            details.put("updatetime", strs[strs.length - 1]);
        }

        details.put("type", html.xpath("//*[@id=\"wrapper\"]/div[4]/div[1]/div[3]/ul/li[1]/em/a/text()").get());
        details.put("pay", html.xpath("//*[@id=\"wrapper\"]/div[4]/div[1]/div[3]/ul/li[2]/em/text()").get());
        details.put("edu", html.xpath("//*[@id=\"wrapper\"]/div[4]/div[1]/div[3]/ul/li[3]/em/text()").get());
        details.put("work", html.xpath("//*[@id=\"wrapper\"]/div[4]/div[1]/div[3]/ul/li[4]/em/text()").get());
        details.put("age", html.xpath("//*[@id=\"wrapper\"]/div[4]/div[1]/div[3]/ul/li[5]/em/text()").get());
        details.put("number", html.xpath("//*[@id=\"wrapper\"]/div[4]/div[1]/div[3]/ul/li[6]/em/text()").get());

        // The phone number is rendered as an image: prefix its src with the site's domain
        // (assumes src is a root-relative path)
        String phone = getDomainForUrl(page.getUrl().toString())
                + html.xpath("//*[@id=\"isShowPhoneBotton\"]/img/@src").get();
        details.put("phone", phone);

        details.put("contacts", html.xpath("//*[@id=\"wrapper\"]/div[4]/div[1]/div[7]/dl/dd[2]/text()").get());
        details.put("address", html.xpath("//*[@id=\"wrapper\"]/div[4]/div[1]/div[7]/dl/dd[3]/text()").get());
        details.put("worktime", html.xpath("//*[@id=\"wrapper\"]/div[4]/div[1]/div[3]/ul/li[9]/em/text()").get());

        // Break the job description into pieces on newlines, carriage returns and spaces
        String describe = html.xpath("//*[@id=\"wrapper\"]/div[4]/div[1]/div[7]/div[1]/text()").get();
        if (describe != null) {
            details.put("describe", describe.split("\n|\r| "));
        }

        details.put("payType", html.xpath("//*[@id=\"wrapper\"]/div[4]/div[1]/div[3]/ul/li[9]/em/span/em/text()").get());

        // Serialize with fastjson and hand it to the pipelines under the key "list"
        String jSONObject = JSONObject.toJSONString(details);
        page.putField("list", jSONObject);

        // Optional: download the phone-number image and run OCR on it
        // try {
        //     downImage(phone);
        //     System.out.println(imageUrl(phone));
        // } catch (Exception e) {
        //     e.printStackTrace();
        // }
    }

    /** Downloads destUrl and saves it to a fixed absolute path. */
    public void downImage(String destUrl) throws Exception {
        HttpURLConnection conn = (HttpURLConnection) new URL(destUrl).openConnection();
        conn.setRequestMethod("GET");
        // Give up if the connection is not established within 5 seconds
        conn.setConnectTimeout(5 * 1000);
        File imageFile = new File("/Users/kangxg/Library/ApacheTomcat/webapps/log/pic2020.png");
        // try-with-resources closes both streams even when reading or writing fails
        try (InputStream inStream = conn.getInputStream();
             FileOutputStream outStream = new FileOutputStream(imageFile)) {
            // Read the raw bytes, which works for any file type
            outStream.write(readInputStream(inStream));
        } finally {
            conn.disconnect();
        }
    }

    /**
     * Runs Tesseract OCR on the image saved by downImage() and returns the recognized text.
     * destUrl is not used; the image is read from the fixed local file.
     */
    public String imageUrl(String destUrl) throws Exception {
        Tesseract instance = new Tesseract();
        instance.setTessVariable("user_defined_dpi", "300");
        // If tessdata is not in the working directory, give its path explicitly:
        // instance.setDatapath("tessdata");
        // For languages other than English, set the language and add its language pack to the project:
        // instance.setLanguage("chi_sim");
        File imageFile = new File("/Users/kangxg/Library/ApacheTomcat/webapps/log/pic2020.png");
        BufferedImage image = ImageIO.read(imageFile);
        return instance.doOCR(convertImage(image));
    }

    /** OCR preprocessing: convert to grayscale, then enlarge 5x. */
    public BufferedImage convertImage(BufferedImage image) throws Exception {
        // Optionally crop a region first:
        // image = ImageHelper.getSubImage(image, 0, 0, image.getWidth(), image.getHeight());
        image = ImageHelper.convertImageToGrayscale(image);
        image = ImageHelper.getScaledInstance(image, image.getWidth() * 5, image.getHeight() * 5);
        return image;
    }

    /** Reads the whole stream into a byte array; the caller closes the stream. */
    public byte[] readInputStream(InputStream inStream) throws Exception {
        ByteArrayOutputStream outStream = new ByteArrayOutputStream();
        // 1 KB byte buffer
        byte[] buffer = new byte[1024];
        int len;
        // read() returns the number of bytes read, or -1 at the end of the stream
        while ((len = inStream.read(buffer)) != -1) {
            // Write the len bytes just read, starting at offset 0 of the buffer
            outStream.write(buffer, 0, len);
        }
        return outStream.toByteArray();
    }

    /** Concatenates two strings (currently unused). */
    public static StringBuffer mergedString(String string1, String string2) {
        StringBuffer sb = new StringBuffer(string1);
        return sb.append(string2);
    }

    /** Returns the scheme://host[:port] prefix of url, e.g. http://127.0.0.1:9040 */
    public String getDomainForUrl(String url) {
        // scheme, then a host name or IPv4 address, then an optional :port
        String re = "((http|ftp|https)://)(([a-zA-Z0-9._-]+)|([0-9]{1,3}\\.[0-9]{1,3}\\.[0-9]{1,3}\\.[0-9]{1,3}))(([a-zA-Z]{2,6})|(:[0-9]{1,4})?)";
        Pattern pattern = Pattern.compile(re);
        // Case-insensitive variant:
        // Pattern pattern = Pattern.compile(re, Pattern.CASE_INSENSITIVE);
        Matcher matcher = pattern.matcher(url);
        // The whole url is already a bare domain, e.g. http://127.0.0.1:9040 or http://www.baidu.com
        if (matcher.matches()) {
            return url;
        }
        // Otherwise split on the domain; split2[1] is the path after it, so cut that off
        String[] split2 = url.split(re);
        if (split2.length > 1) {
            return url.substring(0, url.length() - split2[1].length());
        }
        return split2[0];
    }
}
```
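`getDomainForUrl` extracts `scheme://host[:port]` with a hand-written regex, and `process()` then concatenates the image `src` onto it. A possibly simpler route, sketched here under the assumption that page URLs are absolute, is to let `java.net.URI` do both jobs: parse out the origin, and resolve the `src` against the page URL, which also copes with `src` values that are absolute or relative rather than root-relative. `UrlTools` is a hypothetical helper, not part of the original project.

```java
import java.net.URI;
import java.net.URISyntaxException;

// Hypothetical helper: URL handling with java.net.URI instead of a regex.
final class UrlTools {
    private UrlTools() {}

    /** Returns scheme://host[:port] of an absolute URL, e.g. http://127.0.0.1:9040 */
    static String originOf(String url) throws URISyntaxException {
        URI uri = new URI(url);
        if (uri.getScheme() == null || uri.getHost() == null) {
            throw new URISyntaxException(url, "absolute URL with a host expected");
        }
        StringBuilder sb = new StringBuilder(uri.getScheme()).append("://").append(uri.getHost());
        if (uri.getPort() != -1) { // -1 means the URL has no explicit port
            sb.append(':').append(uri.getPort());
        }
        return sb.toString();
    }

    /** Resolves an img src (absolute, root-relative or relative) against the page URL. */
    static String resolve(String pageUrl, String src) throws URISyntaxException {
        return new URI(pageUrl).resolve(src).toString();
    }

    public static void main(String[] args) throws URISyntaxException {
        System.out.println(originOf("http://127.0.0.1:9040/log/pic.png"));          // http://127.0.0.1:9040
        System.out.println(resolve("https://example.com/qita/1.htm", "/tel/p.png")); // https://example.com/tel/p.png
        System.out.println(resolve("https://example.com/qita/1.htm", "p.png"));      // https://example.com/qita/p.png
    }
}
```

With this helper the phone-image line in `process()` could become a single `resolve(page.getUrl().toString(), src)` call.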
