NLP Starry Sky Intelligent Dialogue Robot Series: Understanding Transformer NLP in Depth, SRL (Semantic Role Labeling)

# Golden Quotes from Gavin

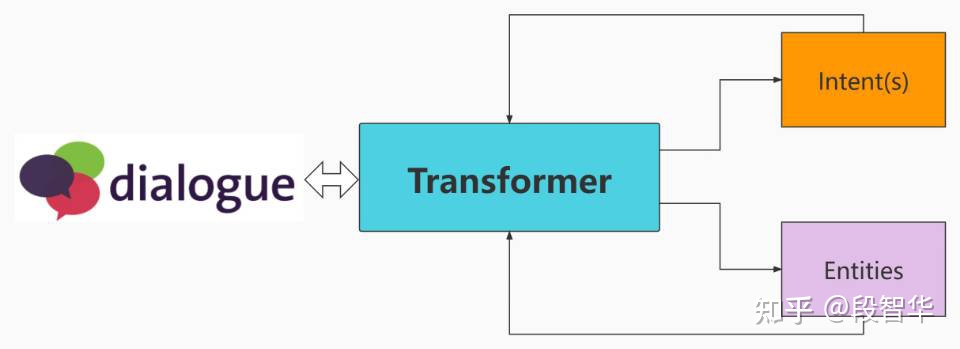

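Gavin: In theory, the Transformer can better handle any data that exists as a "set of units". Computer vision, speech, and natural language processing all involve this kind of data, so in theory the Transformer will dominate these fields for the coming decades.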

Gavin: "A feedforward network with a single layer is sufficient to represent any function, but the layer may be infeasibly large and may fail to learn and generalize correctly." (Ian Goodfellow, Deep Learning Book)

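Gavin: The Transformer is the next-generation engine of artificial intelligence. In essence, it studies structural relationships, and the core of its industrial practice is realizing "everything flows" with the Transformer.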

Gavin: Non-linearity is the magic of the Transformer.

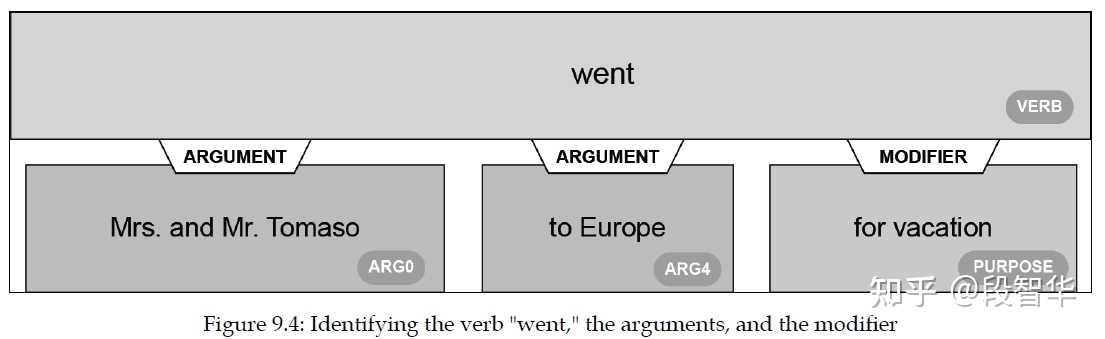

The following sentence seems simple, but it contains several verbs:

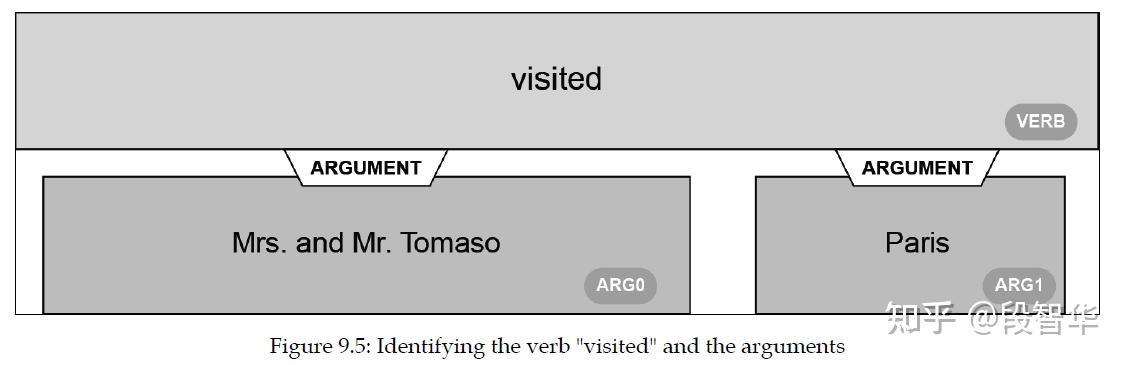

"Mrs. and Mr. Tomaso went to Europe for vacation and visited Paris and first went to visit the Eiffel Tower." Will this confusing sentence make the transformer hesitate? Let's run the second sample in SRL.ipynb:

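```
!echo '{"sentence": "Mrs. and Mr. Tomaso went to Europe for vacation and visited Paris and first went to visit the Eiffel Tower."}' | \
allennlp predict https://storage.googleapis.com/allennlp-public-models/bert-base-srl-2020.03.24.tar.gz -
```

The `echo` pipes one JSON line into `allennlp predict`, whose final `-` argument makes it read the input from standard input. The output is as follows: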

2020-12-20 09:08:12,622 - INFO - transformers.file_utils - PyTorch version 1.5.1 available.

2020-12-20 09:08:12.774532: I tensorflow/stream_executor/platform/default/dso_loader.cc:49] Successfully opened dynamic library libcudart.so.10.1

2020-12-20 09:08:14,547 - INFO - transformers.file_utils - TensorFlow version 2.4.0 available.

2020-12-20 09:08:15,761 - INFO - allennlp.common.file_utils - checking cache for https://storage.googleapis.com/allennlp-public-models/bert-base-srl-2020.03.24.tar.gz at /root/.allennlp/cache/e20d5b792a8d456a1a61da245d1856d4b7778efe69ac3c30759af61940aa0f42.f72523a9682cb1f5ad3ecf834075fe53a1c25a6bcbf4b40c11e13b7f426a4724

2020-12-20 09:08:15,761 - INFO - allennlp.common.file_utils - waiting to acquire lock on /root/.allennlp/cache/e20d5b792a8d456a1a61da245d1856d4b7778efe69ac3c30759af61940aa0f42.f72523a9682cb1f5ad3ecf834075fe53a1c25a6bcbf4b40c11e13b7f426a4724

2020-12-20 09:08:15,762 - INFO - filelock - Lock 139888470380440 acquired on /root/.allennlp/cache/e20d5b792a8d456a1a61da245d1856d4b7778efe69ac3c30759af61940aa0f42.f72523a9682cb1f5ad3ecf834075fe53a1c25a6bcbf4b40c11e13b7f426a4724.lock

2020-12-20 09:08:15,763 - INFO - allennlp.common.file_utils - cache of https://storage.googleapis.com/allennlp-public-models/bert-base-srl-2020.03.24.tar.gz is up-to-date

2020-12-20 09:08:15,763 - INFO - filelock - Lock 139888470380440 released on /root/.allennlp/cache/e20d5b792a8d456a1a61da245d1856d4b7778efe69ac3c30759af61940aa0f42.f72523a9682cb1f5ad3ecf834075fe53a1c25a6bcbf4b40c11e13b7f426a4724.lock

2020-12-20 09:08:15,763 - INFO - allennlp.models.archival - loading archive file https://storage.googleapis.com/allennlp-public-models/bert-base-srl-2020.03.24.tar.gz from cache at /root/.allennlp/cache/e20d5b792a8d456a1a61da245d1856d4b7778efe69ac3c30759af61940aa0f42.f72523a9682cb1f5ad3ecf834075fe53a1c25a6bcbf4b40c11e13b7f426a4724

2020-12-20 09:08:15,763 - INFO - allennlp.models.archival - extracting archive file /root/.allennlp/cache/e20d5b792a8d456a1a61da245d1856d4b7778efe69ac3c30759af61940aa0f42.f72523a9682cb1f5ad3ecf834075fe53a1c25a6bcbf4b40c11e13b7f426a4724 to temp dir /tmp/tmp7jeuj77a

2020-12-20 09:08:19,975 - INFO - allennlp.common.params - type = from_instances

2020-12-20 09:08:19,976 - INFO - allennlp.data.vocabulary - Loading token dictionary from /tmp/tmp7jeuj77a/vocabulary.

2020-12-20 09:08:19,976 - INFO - filelock - Lock 139888468747992 acquired on /tmp/tmp7jeuj77a/vocabulary/.lock

2020-12-20 09:08:20,002 - INFO - filelock - Lock 139888468747992 released on /tmp/tmp7jeuj77a/vocabulary/.lock

2020-12-20 09:08:20,003 - INFO - allennlp.common.params - model.type = srl_bert

2020-12-20 09:08:20,004 - INFO - allennlp.common.params - model.regularizer = None

2020-12-20 09:08:20,004 - INFO - allennlp.common.params - model.bert_model = bert-base-uncased

2020-12-20 09:08:20,004 - INFO - allennlp.common.params - model.embedding_dropout = 0.1

2020-12-20 09:08:20,004 - INFO - allennlp.common.params - model.initializer = <allennlp.nn.initializers.InitializerApplicator object at 0x7f3a52797748>

2020-12-20 09:08:20,004 - INFO - allennlp.common.params - model.label_smoothing = None

2020-12-20 09:08:20,004 - INFO - allennlp.common.params - model.ignore_span_metric = False

2020-12-20 09:08:20,004 - INFO - allennlp.common.params - model.srl_eval_path = /usr/local/lib/python3.6/dist-packages/allennlp_models/structured_prediction/tools/srl-eval.pl

2020-12-20 09:08:20,298 - INFO - transformers.configuration_utils - loading configuration file https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-uncased-config.json from cache at /root/.cache/torch/transformers/4dad0251492946e18ac39290fcfe91b89d370fee250efe9521476438fe8ca185.7156163d5fdc189c3016baca0775ffce230789d7fa2a42ef516483e4ca884517

2020-12-20 09:08:20,298 - INFO - transformers.configuration_utils - Model config BertConfig {

"architectures": [

"BertForMaskedLM"

],

"attention_probs_dropout_prob": 0.1,

"hidden_act": "gelu",

"hidden_dropout_prob": 0.1,

"hidden_size": 768,

"initializer_range": 0.02,

"intermediate_size": 3072,

"layer_norm_eps": 1e-12,

"max_position_embeddings": 512,

"model_type": "bert",

"num_attention_heads": 12,

"num_hidden_layers": 12,

"pad_token_id": 0,

"type_vocab_size": 2,

"vocab_size": 30522

}

2020-12-20 09:08:20,492 - INFO - transformers.modeling_utils - loading weights file https://cdn.huggingface.co/bert-base-uncased-pytorch_model.bin from cache at /root/.cache/torch/transformers/f2ee78bdd635b758cc0a12352586868bef80e47401abe4c4fcc3832421e7338b.36ca03ab34a1a5d5fa7bc3d03d55c4fa650fed07220e2eeebc06ce58d0e9a157

2020-12-20 09:08:23,170 - INFO - allennlp.nn.initializers - Initializing parameters

2020-12-20 09:08:23,171 - INFO - allennlp.nn.initializers - Done initializing parameters; the following parameters are using their default initialization from their code

2020-12-20 09:08:23,171 - INFO - allennlp.nn.initializers - bert_model.embeddings.LayerNorm.bias

2020-12-20 09:08:23,172 - INFO - allennlp.nn.initializers - bert_model.embeddings.LayerNorm.weight

2020-12-20 09:08:23,172 - INFO - allennlp.nn.initializers - bert_model.embeddings.position_embeddings.weight

2020-12-20 09:08:23,172 - INFO - allennlp.nn.initializers - bert_model.embeddings.token_type_embeddings.weight

2020-12-20 09:08:23,172 - INFO - allennlp.nn.initializers - bert_model.embeddings.word_embeddings.weight

2020-12-20 09:08:23,172 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.0.attention.output.LayerNorm.bias

2020-12-20 09:08:23,172 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.0.attention.output.LayerNorm.weight

2020-12-20 09:08:23,172 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.0.attention.output.dense.bias

2020-12-20 09:08:23,172 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.0.attention.output.dense.weight

2020-12-20 09:08:23,172 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.0.attention.self.key.bias

2020-12-20 09:08:23,172 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.0.attention.self.key.weight

2020-12-20 09:08:23,172 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.0.attention.self.query.bias

2020-12-20 09:08:23,172 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.0.attention.self.query.weight

2020-12-20 09:08:23,172 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.0.attention.self.value.bias

2020-12-20 09:08:23,172 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.0.attention.self.value.weight

2020-12-20 09:08:23,172 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.0.intermediate.dense.bias

2020-12-20 09:08:23,172 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.0.intermediate.dense.weight

2020-12-20 09:08:23,172 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.0.output.LayerNorm.bias

2020-12-20 09:08:23,172 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.0.output.LayerNorm.weight

2020-12-20 09:08:23,173 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.0.output.dense.bias

2020-12-20 09:08:23,173 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.0.output.dense.weight

2020-12-20 09:08:23,173 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.1.attention.output.LayerNorm.bias

2020-12-20 09:08:23,173 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.1.attention.output.LayerNorm.weight

2020-12-20 09:08:23,173 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.1.attention.output.dense.bias

2020-12-20 09:08:23,173 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.1.attention.output.dense.weight

2020-12-20 09:08:23,173 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.1.attention.self.key.bias

2020-12-20 09:08:23,173 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.1.attention.self.key.weight

2020-12-20 09:08:23,173 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.1.attention.self.query.bias

2020-12-20 09:08:23,173 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.1.attention.self.query.weight

2020-12-20 09:08:23,173 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.1.attention.self.value.bias

2020-12-20 09:08:23,173 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.1.attention.self.value.weight

2020-12-20 09:08:23,173 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.1.intermediate.dense.bias

2020-12-20 09:08:23,173 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.1.intermediate.dense.weight

2020-12-20 09:08:23,173 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.1.output.LayerNorm.bias

2020-12-20 09:08:23,173 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.1.output.LayerNorm.weight

2020-12-20 09:08:23,173 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.1.output.dense.bias

2020-12-20 09:08:23,173 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.1.output.dense.weight

2020-12-20 09:08:23,173 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.10.attention.output.LayerNorm.bias

2020-12-20 09:08:23,173 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.10.attention.output.LayerNorm.weight

2020-12-20 09:08:23,174 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.10.attention.output.dense.bias

2020-12-20 09:08:23,174 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.10.attention.output.dense.weight

2020-12-20 09:08:23,174 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.10.attention.self.key.bias

2020-12-20 09:08:23,174 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.10.attention.self.key.weight

2020-12-20 09:08:23,174 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.10.attention.self.query.bias

2020-12-20 09:08:23,174 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.10.attention.self.query.weight

2020-12-20 09:08:23,174 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.10.attention.self.value.bias

2020-12-20 09:08:23,174 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.10.attention.self.value.weight

2020-12-20 09:08:23,174 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.10.intermediate.dense.bias

2020-12-20 09:08:23,174 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.10.intermediate.dense.weight

2020-12-20 09:08:23,174 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.10.output.LayerNorm.bias

2020-12-20 09:08:23,174 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.10.output.LayerNorm.weight

2020-12-20 09:08:23,174 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.10.output.dense.bias

2020-12-20 09:08:23,174 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.10.output.dense.weight

2020-12-20 09:08:23,174 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.11.attention.output.LayerNorm.bias

2020-12-20 09:08:23,174 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.11.attention.output.LayerNorm.weight

2020-12-20 09:08:23,174 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.11.attention.output.dense.bias

2020-12-20 09:08:23,174 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.11.attention.output.dense.weight

2020-12-20 09:08:23,174 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.11.attention.self.key.bias

2020-12-20 09:08:23,175 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.11.attention.self.key.weight

2020-12-20 09:08:23,175 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.11.attention.self.query.bias

2020-12-20 09:08:23,175 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.11.attention.self.query.weight

2020-12-20 09:08:23,175 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.11.attention.self.value.bias

2020-12-20 09:08:23,175 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.11.attention.self.value.weight

2020-12-20 09:08:23,175 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.11.intermediate.dense.bias

2020-12-20 09:08:23,175 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.11.intermediate.dense.weight

2020-12-20 09:08:23,175 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.11.output.LayerNorm.bias

2020-12-20 09:08:23,175 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.11.output.LayerNorm.weight

2020-12-20 09:08:23,175 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.11.output.dense.bias

2020-12-20 09:08:23,175 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.11.output.dense.weight

2020-12-20 09:08:23,175 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.2.attention.output.LayerNorm.bias

2020-12-20 09:08:23,175 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.2.attention.output.LayerNorm.weight

2020-12-20 09:08:23,175 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.2.attention.output.dense.bias

2020-12-20 09:08:23,175 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.2.attention.output.dense.weight

2020-12-20 09:08:23,175 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.2.attention.self.key.bias

2020-12-20 09:08:23,175 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.2.attention.self.key.weight

2020-12-20 09:08:23,175 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.2.attention.self.query.bias

2020-12-20 09:08:23,175 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.2.attention.self.query.weight

2020-12-20 09:08:23,176 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.2.attention.self.value.bias

2020-12-20 09:08:23,176 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.2.attention.self.value.weight

2020-12-20 09:08:23,176 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.2.intermediate.dense.bias

2020-12-20 09:08:23,176 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.2.intermediate.dense.weight

2020-12-20 09:08:23,176 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.2.output.LayerNorm.bias

2020-12-20 09:08:23,176 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.2.output.LayerNorm.weight

2020-12-20 09:08:23,176 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.2.output.dense.bias

2020-12-20 09:08:23,176 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.2.output.dense.weight

2020-12-20 09:08:23,176 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.3.attention.output.LayerNorm.bias

2020-12-20 09:08:23,176 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.3.attention.output.LayerNorm.weight

2020-12-20 09:08:23,176 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.3.attention.output.dense.bias

2020-12-20 09:08:23,176 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.3.attention.output.dense.weight

2020-12-20 09:08:23,176 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.3.attention.self.key.bias

2020-12-20 09:08:23,176 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.3.attention.self.key.weight

2020-12-20 09:08:23,176 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.3.attention.self.query.bias

2020-12-20 09:08:23,176 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.3.attention.self.query.weight

2020-12-20 09:08:23,176 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.3.attention.self.value.bias

2020-12-20 09:08:23,176 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.3.attention.self.value.weight

2020-12-20 09:08:23,177 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.3.intermediate.dense.bias

2020-12-20 09:08:23,177 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.3.intermediate.dense.weight

2020-12-20 09:08:23,177 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.3.output.LayerNorm.bias

2020-12-20 09:08:23,177 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.3.output.LayerNorm.weight

2020-12-20 09:08:23,177 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.3.output.dense.bias

2020-12-20 09:08:23,177 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.3.output.dense.weight

2020-12-20 09:08:23,177 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.4.attention.output.LayerNorm.bias

2020-12-20 09:08:23,177 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.4.attention.output.LayerNorm.weight

2020-12-20 09:08:23,177 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.4.attention.output.dense.bias

2020-12-20 09:08:23,177 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.4.attention.output.dense.weight

2020-12-20 09:08:23,177 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.4.attention.self.key.bias

2020-12-20 09:08:23,177 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.4.attention.self.key.weight

2020-12-20 09:08:23,177 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.4.attention.self.query.bias

2020-12-20 09:08:23,177 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.4.attention.self.query.weight

2020-12-20 09:08:23,177 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.4.attention.self.value.bias

2020-12-20 09:08:23,177 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.4.attention.self.value.weight

2020-12-20 09:08:23,177 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.4.intermediate.dense.bias

2020-12-20 09:08:23,177 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.4.intermediate.dense.weight

2020-12-20 09:08:23,177 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.4.output.LayerNorm.bias

2020-12-20 09:08:23,178 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.4.output.LayerNorm.weight

2020-12-20 09:08:23,178 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.4.output.dense.bias

2020-12-20 09:08:23,178 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.4.output.dense.weight

2020-12-20 09:08:23,178 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.5.attention.output.LayerNorm.bias

2020-12-20 09:08:23,178 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.5.attention.output.LayerNorm.weight

2020-12-20 09:08:23,178 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.5.attention.output.dense.bias

2020-12-20 09:08:23,178 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.5.attention.output.dense.weight

2020-12-20 09:08:23,178 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.5.attention.self.key.bias

2020-12-20 09:08:23,178 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.5.attention.self.key.weight

2020-12-20 09:08:23,178 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.5.attention.self.query.bias

2020-12-20 09:08:23,178 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.5.attention.self.query.weight

2020-12-20 09:08:23,178 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.5.attention.self.value.bias

2020-12-20 09:08:23,178 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.5.attention.self.value.weight

2020-12-20 09:08:23,178 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.5.intermediate.dense.bias

2020-12-20 09:08:23,178 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.5.intermediate.dense.weight

2020-12-20 09:08:23,178 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.5.output.LayerNorm.bias

2020-12-20 09:08:23,178 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.5.output.LayerNorm.weight

2020-12-20 09:08:23,178 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.5.output.dense.bias

2020-12-20 09:08:23,178 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.5.output.dense.weight

2020-12-20 09:08:23,178 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.6.attention.output.LayerNorm.bias

2020-12-20 09:08:23,179 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.6.attention.output.LayerNorm.weight

2020-12-20 09:08:23,179 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.6.attention.output.dense.bias

2020-12-20 09:08:23,179 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.6.attention.output.dense.weight

2020-12-20 09:08:23,179 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.6.attention.self.key.bias

2020-12-20 09:08:23,179 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.6.attention.self.key.weight

2020-12-20 09:08:23,179 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.6.attention.self.query.bias

2020-12-20 09:08:23,179 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.6.attention.self.query.weight

2020-12-20 09:08:23,179 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.6.attention.self.value.bias

2020-12-20 09:08:23,179 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.6.attention.self.value.weight

2020-12-20 09:08:23,179 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.6.intermediate.dense.bias

2020-12-20 09:08:23,179 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.6.intermediate.dense.weight

2020-12-20 09:08:23,179 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.6.output.LayerNorm.bias

2020-12-20 09:08:23,179 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.6.output.LayerNorm.weight

2020-12-20 09:08:23,179 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.6.output.dense.bias

2020-12-20 09:08:23,179 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.6.output.dense.weight

2020-12-20 09:08:23,180 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.7.attention.output.LayerNorm.bias

2020-12-20 09:08:23,180 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.7.attention.output.LayerNorm.weight

2020-12-20 09:08:23,180 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.7.attention.output.dense.bias

2020-12-20 09:08:23,180 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.7.attention.output.dense.weight

2020-12-20 09:08:23,180 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.7.attention.self.key.bias

2020-12-20 09:08:23,180 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.7.attention.self.key.weight

2020-12-20 09:08:23,180 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.7.attention.self.query.bias

2020-12-20 09:08:23,180 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.7.attention.self.query.weight

2020-12-20 09:08:23,180 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.7.attention.self.value.bias

2020-12-20 09:08:23,208 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.7.attention.self.value.weight

2020-12-20 09:08:23,209 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.7.intermediate.dense.bias

2020-12-20 09:08:23,209 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.7.intermediate.dense.weight

2020-12-20 09:08:23,209 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.7.output.LayerNorm.bias

2020-12-20 09:08:23,209 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.7.output.LayerNorm.weight

2020-12-20 09:08:23,209 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.7.output.dense.bias

2020-12-20 09:08:23,209 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.7.output.dense.weight

2020-12-20 09:08:23,209 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.8.attention.output.LayerNorm.bias

2020-12-20 09:08:23,209 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.8.attention.output.LayerNorm.weight

2020-12-20 09:08:23,209 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.8.attention.output.dense.bias

2020-12-20 09:08:23,210 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.8.attention.output.dense.weight

2020-12-20 09:08:23,210 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.8.attention.self.key.bias

2020-12-20 09:08:23,210 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.8.attention.self.key.weight

2020-12-20 09:08:23,210 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.8.attention.self.query.bias

2020-12-20 09:08:23,210 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.8.attention.self.query.weight

2020-12-20 09:08:23,210 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.8.attention.self.value.bias

2020-12-20 09:08:23,210 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.8.attention.self.value.weight

2020-12-20 09:08:23,210 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.8.intermediate.dense.bias

2020-12-20 09:08:23,210 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.8.intermediate.dense.weight

2020-12-20 09:08:23,210 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.8.output.LayerNorm.bias

2020-12-20 09:08:23,210 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.8.output.LayerNorm.weight

2020-12-20 09:08:23,210 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.8.output.dense.bias

2020-12-20 09:08:23,210 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.8.output.dense.weight

2020-12-20 09:08:23,210 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.9.attention.output.LayerNorm.bias

2020-12-20 09:08:23,210 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.9.attention.output.LayerNorm.weight

2020-12-20 09:08:23,211 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.9.attention.output.dense.bias

2020-12-20 09:08:23,211 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.9.attention.output.dense.weight

2020-12-20 09:08:23,211 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.9.attention.self.key.bias

2020-12-20 09:08:23,211 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.9.attention.self.key.weight

2020-12-20 09:08:23,211 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.9.attention.self.query.bias

2020-12-20 09:08:23,211 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.9.attention.self.query.weight

2020-12-20 09:08:23,211 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.9.attention.self.value.bias

2020-12-20 09:08:23,211 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.9.attention.self.value.weight

2020-12-20 09:08:23,211 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.9.intermediate.dense.bias

2020-12-20 09:08:23,211 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.9.intermediate.dense.weight

2020-12-20 09:08:23,211 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.9.output.LayerNorm.bias

2020-12-20 09:08:23,211 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.9.output.LayerNorm.weight

2020-12-20 09:08:23,211 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.9.output.dense.bias

2020-12-20 09:08:23,211 - INFO - allennlp.nn.initializers - bert_model.encoder.layer.9.output.dense.weight

2020-12-20 09:08:23,211 - INFO - allennlp.nn.initializers - bert_model.pooler.dense.bias

2020-12-20 09:08:23,211 - INFO - allennlp.nn.initializers - bert_model.pooler.dense.weight

2020-12-20 09:08:23,211 - INFO - allennlp.nn.initializers - tag_projection_layer.bias

2020-12-20 09:08:23,212 - INFO - allennlp.nn.initializers - tag_projection_layer.weight

2020-12-20 09:08:23,664 - INFO - allennlp.common.params - dataset_reader.type = srl

2020-12-20 09:08:23,664 - INFO - allennlp.common.params - dataset_reader.lazy = False

2020-12-20 09:08:23,665 - INFO - allennlp.common.params - dataset_reader.cache_directory = None

2020-12-20 09:08:23,665 - INFO - allennlp.common.params - dataset_reader.max_instances = None

2020-12-20 09:08:23,665 - INFO - allennlp.common.params - dataset_reader.manual_distributed_sharding = False

2020-12-20 09:08:23,665 - INFO - allennlp.common.params - dataset_reader.manual_multi_process_sharding = False

2020-12-20 09:08:23,665 - INFO - allennlp.common.params - dataset_reader.token_indexers = None

2020-12-20 09:08:23,665 - INFO - allennlp.common.params - dataset_reader.domain_identifier = None

2020-12-20 09:08:23,665 - INFO - allennlp.common.params - dataset_reader.bert_model_name = bert-base-uncased

2020-12-20 09:08:23,987 - INFO - transformers.tokenization_utils - loading file https://s3.amazonaws.com/models.huggingface.co/bert/bert-base-uncased-vocab.txt from cache at /root/.cache/torch/transformers/26bc1ad6c0ac742e9b52263248f6d0f00068293b33709fae12320c0e35ccfbbb.542ce4285a40d23a559526243235df47c5f75c197f04f37d1a0c124c32c9a084

input 0: {"sentence": "Mrs. and Mr. Tomaso went to Europe for vacation and visited Paris and first went to visit the Eiffel Tower."}

prediction: {"verbs": [{"verb": "went", "description": "[ARG0: Mrs. and Mr. Tomaso] [V: went] [ARG4: to Europe] [ARGM-PRP: for vacation] and visited Paris and first went to visit the Eiffel Tower .", "tags": ["B-ARG0", "I-ARG0", "I-ARG0", "I-ARG0", "B-V", "B-ARG4", "I-ARG4", "B-ARGM-PRP", "I-ARGM-PRP", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O"]}, {"verb": "visited", "description": "[ARG0: Mrs. and Mr. Tomaso] went to Europe for vacation and [V: visited] [ARG1: Paris] and first went to visit the Eiffel Tower .", "tags": ["B-ARG0", "I-ARG0", "I-ARG0", "I-ARG0", "O", "O", "O", "O", "O", "O", "B-V", "B-ARG1", "O", "O", "O", "O", "O", "O", "O", "O", "O"]}, {"verb": "went", "description": "[ARG0: Mrs. and Mr. Tomaso] went to Europe for vacation and visited Paris and [ARGM-TMP: first] [V: went] [ARGM-PRP: to visit the Eiffel Tower] .", "tags": ["B-ARG0", "I-ARG0", "I-ARG0", "I-ARG0", "O", "O", "O", "O", "O", "O", "O", "O", "O", "B-ARGM-TMP", "B-V", "B-ARGM-PRP", "I-ARGM-PRP", "I-ARGM-PRP", "I-ARGM-PRP", "I-ARGM-PRP", "O"]}, {"verb": "visit", "description": "[ARG0: Mrs. and Mr. Tomaso] went to Europe for vacation and visited Paris and first went to [V: visit] [ARG1: the Eiffel Tower] .", "tags": ["B-ARG0", "I-ARG0", "I-ARG0", "I-ARG0", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "B-V", "B-ARG1", "I-ARG1", "I-ARG1", "O"]}], "words": ["Mrs.", "and", "Mr.", "Tomaso", "went", "to", "Europe", "for", "vacation", "and", "visited", "Paris", "and", "first", "went", "to", "visit", "the", "Eiffel", "Tower", "."]}

2020-12-20 09:08:25,342 - INFO - allennlp.models.archival - removing temporary unarchived model dir at /tmp/tmp7jeuj77a

This excerpt of the output shows that the transformer correctly identified the verbs in the sentence:

```
prediction: {
  "verbs": [{
    "verb": "went",
    "description": "[ARG0: Mrs. and Mr. Tomaso] [V: went] [ARG4: to Europe] [ARGM-PRP: for vacation] and visited Paris and first went to visit the Eiffel Tower .",
    "tags": ["B-ARG0", "I-ARG0", "I-ARG0", "I-ARG0", "B-V", "B-ARG4", "I-ARG4", "B-ARGM-PRP", "I-ARGM-PRP", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O"]
  }
```

Running the example on AllenNLP shows that an argument, "to Europe" (ARG4), was identified as the destination of the trip.
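Each `tags` list in the output uses the BIO scheme: `B-X` begins an argument with role X, `I-X` continues it, and `O` marks words outside any argument. As a minimal sketch (plain Python; the helper name `bio_to_spans` is our own, not part of AllenNLP), the tags of the first "went" frame can be grouped back into labeled spans, which recovers ARG4 = "to Europe":

```python
from typing import List, Tuple


def bio_to_spans(words: List[str], tags: List[str]) -> List[Tuple[str, str]]:
    """Group a BIO tag sequence into (role, text) spans; words tagged "O" are skipped."""
    spans: List[Tuple[str, List[str]]] = []
    inside = False  # True while the most recent span can still be extended
    for word, tag in zip(words, tags):
        if tag == "O":
            inside = False
            continue
        role = tag[2:]  # strip the "B-" / "I-" prefix
        # An "I-" tag continues the open span only if the role matches;
        # a "B-" tag (or an ill-formed "I-") starts a new span.
        if tag.startswith("I-") and inside and spans[-1][0] == role:
            spans[-1][1].append(word)
        else:
            spans.append((role, [word]))
            inside = True
    return [(role, " ".join(toks)) for role, toks in spans]


WORDS = ["Mrs.", "and", "Mr.", "Tomaso", "went", "to", "Europe", "for", "vacation",
         "and", "visited", "Paris", "and", "first", "went", "to", "visit", "the",
         "Eiffel", "Tower", "."]
# Tags of the first "went" frame, copied from the prediction above
WENT_1_TAGS = (["B-ARG0", "I-ARG0", "I-ARG0", "I-ARG0", "B-V", "B-ARG4", "I-ARG4",
                "B-ARGM-PRP", "I-ARGM-PRP"] + ["O"] * 12)

for role, text in bio_to_spans(WORDS, WENT_1_TAGS):
    print(f"{role:9} {text}")
```

Running it prints the four spans of this frame: ARG0 "Mrs. and Mr. Tomaso", V "went", ARG4 "to Europe", and ARGM-PRP "for vacation". Treating a stray `I-` tag as the start of a new span is a common lenient choice; a stricter decoder could reject it instead.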

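We can interpret the arguments of the verb "went". The transformer found that the verb's modifier, "for vacation" (ARGM-PRP), expresses the purpose of the trip. This is impressive given that Shi and Lin (2019) only built a simple BERT model to obtain this high-quality analysis. We can also note that "went" was correctly associated with "Europe". The transformer also correctly identified the verb "visited" as being related to "Paris" (ARG1).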
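The bracketed `description` strings in the output can be reproduced from the `words` and `tags` fields alone. A minimal sketch (our own helper `render_description`, not AllenNLP code) rebuilds the description of the "visited" frame from its tags; it makes visible that "Paris" is the only ARG1 of "visited", while "the Eiffel Tower" stays outside that frame:

```python
from typing import List, Optional


def render_description(words: List[str], tags: List[str]) -> str:
    """Rebuild an AllenNLP-style bracketed description from words and BIO tags."""
    segments: List[list] = []  # each item: [role or None, list of words]
    for word, tag in zip(words, tags):
        role: Optional[str] = None if tag == "O" else tag[2:]
        # A "B-" tag or a change of role starts a new segment; runs of "O" merge.
        if not segments or tag.startswith("B-") or segments[-1][0] != role:
            segments.append([role, [word]])
        else:
            segments[-1][1].append(word)
    parts = []
    for role, toks in segments:
        text = " ".join(toks)
        parts.append(f"[{role}: {text}]" if role else text)
    return " ".join(parts)


WORDS = ["Mrs.", "and", "Mr.", "Tomaso", "went", "to", "Europe", "for", "vacation",
         "and", "visited", "Paris", "and", "first", "went", "to", "visit", "the",
         "Eiffel", "Tower", "."]
# Tags of the "visited" frame, copied from the prediction above
VISITED_TAGS = (["B-ARG0", "I-ARG0", "I-ARG0", "I-ARG0"] + ["O"] * 6
                + ["B-V", "B-ARG1"] + ["O"] * 9)

print(render_description(WORDS, VISITED_TAGS))
```

Runs of `O` words are merged into plain text, so the result matches the `description` field of the "visited" frame word for word.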

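The transformer could have associated the verb "visited" directly with "Eiffel Tower", but it held its ground and made the right decision. The last task we asked of the transformer was to identify the context of the second use of the verb "went". Again, it did not fall into the trap of merging all the arguments of "went", a verb that appears twice in the sentence; it split the sequence correctly once more and produced an excellent result.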
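Why can the model keep the two occurrences of "went" apart? As far as we understand the AllenNLP SRL predictor, it labels the sentence once per predicate: it finds the verbs with a POS tagger and, for each one, builds the same word sequence plus a binary verb indicator that is 1 only at that predicate (the `srl_bert` model passes this indicator to BERT alongside the tokens). The sketch below is a simplified illustration of that idea, not AllenNLP's code; the helper `make_srl_inputs` and the hard-coded verb positions are our own assumptions:

```python
from typing import Dict, List


def make_srl_inputs(words: List[str], verb_positions: List[int]) -> List[Dict]:
    """Return one input per predicate: the words plus a 0/1 indicator for that verb."""
    inputs = []
    for pos in verb_positions:
        indicator = [0] * len(words)
        indicator[pos] = 1  # only this occurrence of the verb is marked
        inputs.append({"verb": words[pos], "position": pos, "verb_indicator": indicator})
    return inputs


WORDS = ["Mrs.", "and", "Mr.", "Tomaso", "went", "to", "Europe", "for", "vacation",
         "and", "visited", "Paris", "and", "first", "went", "to", "visit", "the",
         "Eiffel", "Tower", "."]
# Hypothetical: positions of went, visited, went, visit
# (a real pipeline would find them with a POS tagger)
VERB_POSITIONS = [4, 10, 14, 16]

for item in make_srl_inputs(WORDS, VERB_POSITIONS):
    print(item["verb"], item["position"], item["verb_indicator"])
```

The two "went" inputs differ only in where the 1 sits (position 4 versus 14), which matches the output above: the two "went" frames have their `B-V` tags at exactly those positions.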

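The transformer even found that "first" is a temporal modifier (ARGM-TMP) of the second "went". The formatted text output of the AllenNLP online interface summarizes the excellent results obtained for this example: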

```
input 0: {
  "sentence": "Mrs. and Mr. Tomaso went to Europe for vacation and visited Paris and first went to visit the Eiffel Tower."
}
prediction: {
  "verbs": [{
    "verb": "went",
    "description": "[ARG0: Mrs. and Mr. Tomaso] [V: went] [ARG4: to Europe] [ARGM-PRP: for vacation] and visited Paris and first went to visit the Eiffel Tower .",
    "tags": ["B-ARG0", "I-ARG0", "I-ARG0", "I-ARG0", "B-V", "B-ARG4", "I-ARG4", "B-ARGM-PRP", "I-ARGM-PRP", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O"]
  }, {
    "verb": "visited",
    "description": "[ARG0: Mrs. and Mr. Tomaso] went to Europe for vacation and [V: visited] [ARG1: Paris] and first went to visit the Eiffel Tower .",
    "tags": ["B-ARG0", "I-ARG0", "I-ARG0", "I-ARG0", "O", "O", "O", "O", "O", "O", "B-V", "B-ARG1", "O", "O", "O", "O", "O", "O", "O", "O", "O"]
  }, {
    "verb": "went",
    "description": "[ARG0: Mrs. and Mr. Tomaso] went to Europe for vacation and visited Paris and [ARGM-TMP: first] [V: went] [ARGM-PRP: to visit the Eiffel Tower] .",
    "tags": ["B-ARG0", "I-ARG0", "I-ARG0", "I-ARG0", "O", "O", "O", "O", "O", "O", "O", "O", "O", "B-ARGM-TMP", "B-V", "B-ARGM-PRP", "I-ARGM-PRP", "I-ARGM-PRP", "I-ARGM-PRP", "I-ARGM-PRP", "O"]
  }, {
    "verb": "visit",
    "description": "[ARG0: Mrs. and Mr. Tomaso] went to Europe for vacation and visited Paris and first went to [V: visit] [ARG1: the Eiffel Tower] .",
    "tags": ["B-ARG0", "I-ARG0", "I-ARG0", "I-ARG0", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "O", "B-V", "B-ARG1", "I-ARG1", "I-ARG1", "O"]
  }],
  "words": ["Mrs.", "and", "Mr.", "Tomaso", "went", "to", "Europe", "for", "vacation", "and", "visited", "Paris", "and", "first", "went", "to", "visit", "the", "Eiffel", "Tower", "."]
}
```
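Because the prediction is plain JSON-like data (a `verbs` list of frames plus the `words`), it is easy to post-process. As an illustrative sketch (the dictionary below rebuilds the four frames' tags from the output above; `span_roles` is our own helper), counting the roles that open a span in each frame shows that all four frames share the same ARG0, "Mrs. and Mr. Tomaso", and that two frames carry a purpose modifier (ARGM-PRP):

```python
from collections import Counter
from typing import Dict, List


def span_roles(tags: List[str]) -> List[str]:
    """Roles that open a span ("B-" tags) in one predicate frame."""
    return [tag[2:] for tag in tags if tag.startswith("B-")]


# "Mrs. and Mr. Tomaso" is tagged ARG0 in every frame of the output above
ARG0 = ["B-ARG0", "I-ARG0", "I-ARG0", "I-ARG0"]
prediction: Dict = {
    "verbs": [
        {"verb": "went", "tags": ARG0 + ["B-V", "B-ARG4", "I-ARG4", "B-ARGM-PRP", "I-ARGM-PRP"] + ["O"] * 12},
        {"verb": "visited", "tags": ARG0 + ["O"] * 6 + ["B-V", "B-ARG1"] + ["O"] * 9},
        {"verb": "went", "tags": ARG0 + ["O"] * 9 + ["B-ARGM-TMP", "B-V", "B-ARGM-PRP"] + ["I-ARGM-PRP"] * 4 + ["O"]},
        {"verb": "visit", "tags": ARG0 + ["O"] * 12 + ["B-V", "B-ARG1", "I-ARG1", "I-ARG1", "O"]},
    ]
}

# One line per predicate frame: the verb and the roles found for it
for frame in prediction["verbs"]:
    print(f'{frame["verb"]:8} {", ".join(span_roles(frame["tags"]))}')

# How often each role occurs across all frames
role_counts = Counter(role for frame in prediction["verbs"] for role in span_roles(frame["tags"]))
print(role_counts)
```

The same loop works on a real prediction loaded with `json.loads`, since the output above has exactly this `verbs` / `tags` layout.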

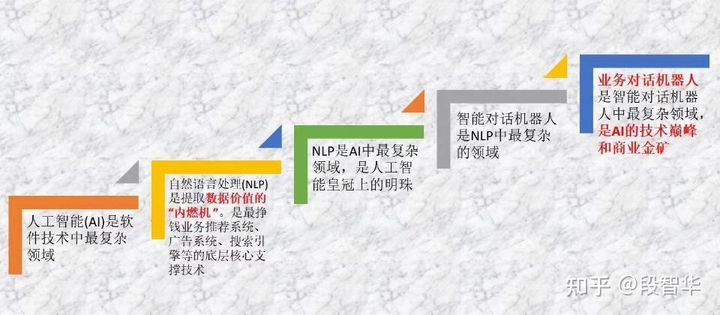

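Transformer-Based NLP Intelligent Dialogue Robot Hands-On Course

One Architecture, One Course, One World

This course uses the Transformer architecture as its foundation, distills the most practically useful content in NLP, and is organized around the full-lifecycle knowledge needed to hand-build an industrial-grade intelligent business dialogue robot. After finishing it, you will not only command all the core stages of NLP (NLU, NLI, NLG, and more) from the algorithm, source code, and hands-on perspectives, but also have the knowledge system, tools, methods, and reference source code to independently develop industry-leading intelligent business dialogue robots, becoming one of the top 1% of NLP practitioners in the industry.

Course features:

101 chapters of practical NLP content built around the Transformer

5,137 fine-grained NLP knowledge points centered on Transformers

Nearly 1,200 code examples, large and small, covering all course content

10,000+ lines of hand-written code implementing an industrial-grade intelligent business dialogue robot

AI-related mathematics learned through concrete architecture scenarios and project cases

Full-lifecycle coverage of NLP competitions, including complete competition code

Add Gavin on WeChat directly for inquiries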
