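avilib is a small wrapper module that packages image data into a video file format. Some low-end cameras only support V4L2_PIX_FMT_MJPEG, which outputs JPEG-formatted data, but the frames produced this way are not standard JPEG images. As a result, simply saving all of the MJPEG frames into one file does not give you a playable file. According to some material online, the frames lack a Huffman table, and you have to insert one manually. On some embedded devices, the frames output in MJPEG mode are decoded into RGB with a JPEG decoding library and then written straight into the LCD frame buffer (I have not tested this approach; it is only what I have read online).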
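To check whether a particular camera's frames really lack a Huffman table, you can walk the JPEG marker segments of one captured frame and look for a DHT marker (0xFF 0xC4) before the start-of-scan marker (0xFF 0xDA). Frames without DHT are normally meant to be decoded with the standard tables from Annex K of the JPEG specification (ITU-T T.81). The following is a minimal sketch: `jpeg_has_dht` is a hypothetical helper, not part of avilib or V4L2, and it only detects the missing table; it does not insert the standard tables.

```c
#include <stddef.h>

/* Hypothetical helper: scan JPEG marker segments from SOI up to SOS.
 * Returns 1 if a DHT (0xFFC4) segment is present, 0 if SOS/EOI is reached
 * without one, and -1 if the data does not look like a valid JPEG header. */
int jpeg_has_dht(const unsigned char *buf, size_t len)
{
    size_t pos = 2;

    if (len < 4 || buf[0] != 0xFF || buf[1] != 0xD8)
        return -1;                          /* must start with SOI */
    while (pos + 4 <= len) {
        if (buf[pos] != 0xFF)
            return -1;                      /* expected a marker here */
        unsigned char marker = buf[pos + 1];
        if (marker == 0xFF) {               /* fill byte before a marker */
            pos++;
            continue;
        }
        if (marker == 0xC4)
            return 1;                       /* DHT found */
        if (marker == 0xDA || marker == 0xD9)
            return 0;                       /* SOS or EOI reached, no DHT */
        /* segment length is big-endian and includes its own 2 bytes */
        size_t seglen = ((size_t)buf[pos + 2] << 8) | buf[pos + 3];
        if (seglen < 2)
            return -1;
        pos += 2 + seglen;                  /* skip marker and segment */
    }
    return -1;                              /* ran out of data */
}
```

Passing one buffer returned by VIDIOC_DQBUF (with its `bytesused` as the length) tells you whether that camera needs a Huffman table inserted before the frames can be used as standalone JPEG files.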

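I mainly develop for embedded devices and have only recently started working with image encoding, so I have not yet used any of the large image-conversion libraries. The library used here was found online: avilib is a single source file, and the compiled object is only a few dozen KB. It can wrap the data that V4L2 outputs in MJPEG mode directly into an AVI video. It provides the following interfaces: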

```c
avi_t *AVI_open_output_file(char *filename);
void AVI_set_video(avi_t *AVI, int width, int height, double fps, char *compressor);
void AVI_set_audio(avi_t *AVI, int channels, long rate, int bits, int format, long mp3rate);
int AVI_write_frame(avi_t *AVI, char *data, long bytes, int keyframe);
int AVI_dup_frame(avi_t *AVI);
int AVI_write_audio(avi_t *AVI, char *data, long bytes);
int AVI_append_audio(avi_t *AVI, char *data, long bytes);
long AVI_bytes_remain(avi_t *AVI);
int AVI_close(avi_t *AVI);
long AVI_bytes_written(avi_t *AVI);
avi_t *AVI_open_input_file(char *filename, int getIndex);
avi_t *AVI_open_fd(int fd, int getIndex);
int avi_parse_input_file(avi_t *AVI, int getIndex);
long AVI_audio_mp3rate(avi_t *AVI);
long AVI_video_frames(avi_t *AVI);
int AVI_video_width(avi_t *AVI);
int AVI_video_height(avi_t *AVI);
double AVI_frame_rate(avi_t *AVI);
char* AVI_video_compressor(avi_t *AVI);
int AVI_audio_channels(avi_t *AVI);
int AVI_audio_bits(avi_t *AVI);
int AVI_audio_format(avi_t *AVI);
long AVI_audio_rate(avi_t *AVI);
long AVI_audio_bytes(avi_t *AVI);
long AVI_audio_chunks(avi_t *AVI);
long AVI_max_video_chunk(avi_t *AVI);
long AVI_frame_size(avi_t *AVI, long frame);
long AVI_audio_size(avi_t *AVI, long frame);
int AVI_seek_start(avi_t *AVI);
int AVI_set_video_position(avi_t *AVI, long frame);
long AVI_get_video_position(avi_t *AVI, long frame);
long AVI_read_frame(avi_t *AVI, char *vidbuf, int *keyframe);
int AVI_set_audio_position(avi_t *AVI, long byte);
int AVI_set_audio_bitrate(avi_t *AVI, long bitrate);
long AVI_read_audio(avi_t *AVI, char *audbuf, long bytes);
long AVI_audio_codech_offset(avi_t *AVI);
long AVI_audio_codecf_offset(avi_t *AVI);
long AVI_video_codech_offset(avi_t *AVI);
long AVI_video_codecf_offset(avi_t *AVI);
int AVI_read_data(avi_t *AVI, char *vidbuf, long max_vidbuf,
                  char *audbuf, long max_audbuf,
                  long *len);
void AVI_print_error(char *str);
char *AVI_strerror();
char *AVI_syserror();
int AVI_scan(char *name);
int AVI_dump(char *name, int mode);
char *AVI_codec2str(short cc);
int AVI_file_check(char *import_file);
void AVI_info(avi_t *avifile);
uint64_t AVI_max_size();
int avi_update_header(avi_t *AVI);
int AVI_set_audio_track(avi_t *AVI, int track);
int AVI_get_audio_track(avi_t *AVI);
int AVI_audio_tracks(avi_t *AVI);
```
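As a rough picture of what the write side does: an AVI file is a RIFF container, and each compressed video frame is stored as a chunk with the ID `00dc` (stream 00, compressed video), a 32-bit little-endian size, the frame bytes, and one pad byte when the size is odd. The sketch below builds one such chunk in memory. `riff_video_chunk` is a hypothetical helper for illustration, not avilib's code; it leaves out the file header and the `idx1` index that avilib also maintains.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical helper: write one RIFF video chunk ("00dc") into `out`.
 * `out` must hold at least 8 + size + 1 bytes.
 * Returns the number of bytes written: header + data + optional pad byte. */
size_t riff_video_chunk(unsigned char *out, const unsigned char *frame, uint32_t size)
{
    size_t n = 0;

    memcpy(out, "00dc", 4);                          /* chunk ID */
    n += 4;
    out[n++] = (unsigned char)(size & 0xFF);         /* chunk size, little-endian, */
    out[n++] = (unsigned char)((size >> 8) & 0xFF);  /* excluding the pad byte */
    out[n++] = (unsigned char)((size >> 16) & 0xFF);
    out[n++] = (unsigned char)((size >> 24) & 0xFF);
    memcpy(out + n, frame, size);                    /* the JPEG frame itself */
    n += size;
    if (size & 1)                                    /* RIFF chunks are word-aligned */
        out[n++] = 0;
    return n;
}
```

Because each chunk carries a complete JPEG image, a player with an MJPEG decoder can decode every frame on its own, which is why no re-encoding is needed to turn V4L2 output into AVI.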

Below is a simple test program:

```c
/*=============================================================================
# FileName: main.c
# Desc: capture JPEG frames from a USB camera through the V4L2
#       interface and pack them into an AVI file with avilib.
# Author: Licaibiao
# Version:
# LastChange: 2016-12-10
# History:
=============================================================================*/
#include <unistd.h>
#include <sys/types.h>
#include <sys/stat.h>
#include <fcntl.h>
#include <stdio.h>
#include <sys/ioctl.h>
#include <stdlib.h>
#include <linux/types.h>
#include <linux/videodev2.h>
#include <string.h>
#include <sys/mman.h>
#include <sys/select.h>
#include <errno.h>
#include <assert.h>
#include <sys/time.h>
#include "avilib.h"

#define FILE_VIDEO "/dev/video0"
#define JPG "./out/image%d.jpg"

typedef struct {
    void   *start;   /* user-space address of the mapped buffer */
    size_t  length;  /* size of the mapped buffer */
} BUFTYPE;

BUFTYPE *usr_buf;
static unsigned int n_buffer = 0;
avi_t *out_fd = NULL;
struct timeval time_mark;   /* renamed from "time" to avoid clashing with time(2) */

/* seconds elapsed since *start; if update > 0, reset *start to now */
float get_main_time(struct timeval *start, int update)
{
    float dt;
    struct timeval now;

    gettimeofday(&now, NULL);
    dt = (float)(now.tv_sec - start->tv_sec);
    dt += (float)(now.tv_usec - start->tv_usec) * 1e-6;
    if (update > 0) {
        start->tv_sec = now.tv_sec;
        start->tv_usec = now.tv_usec;
    }
    return dt;
}

/* set up the capture buffers (memory-mapped I/O) */
int init_mmap(int fd)
{
    unsigned int i;
    struct v4l2_requestbuffers reqbufs;   /* request frame buffers from the driver */

    memset(&reqbufs, 0, sizeof(reqbufs));
    reqbufs.count = 6;                    /* number of buffers requested */
    reqbufs.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    reqbufs.memory = V4L2_MEMORY_MMAP;
    if (-1 == ioctl(fd, VIDIOC_REQBUFS, &reqbufs)) {
        perror("Fail to ioctl 'VIDIOC_REQBUFS'");
        exit(EXIT_FAILURE);
    }
    n_buffer = reqbufs.count;             /* the driver may grant fewer */
    printf("n_buffer = %u\n", n_buffer);

    /* one BUFTYPE per buffer: sizeof(BUFTYPE), not sizeof(usr_buf), which is a pointer */
    usr_buf = calloc(reqbufs.count, sizeof(BUFTYPE));
    if (usr_buf == NULL) {
        printf("Out of memory\n");
        exit(EXIT_FAILURE);
    }

    /* map each kernel buffer into the process address space */
    for (i = 0; i < reqbufs.count; ++i) {
        struct v4l2_buffer buf;           /* describes one frame buffer */

        memset(&buf, 0, sizeof(buf));
        buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
        buf.memory = V4L2_MEMORY_MMAP;
        buf.index = i;
        /* query the size and offset of this buffer */
        if (-1 == ioctl(fd, VIDIOC_QUERYBUF, &buf)) {
            perror("Fail to ioctl : VIDIOC_QUERYBUF");
            exit(EXIT_FAILURE);
        }
        usr_buf[i].length = buf.length;
        /* MAP_SHARED so the process sees the data the driver writes */
        usr_buf[i].start = mmap(NULL, buf.length, PROT_READ | PROT_WRITE,
                                MAP_SHARED, fd, buf.m.offset);
        if (MAP_FAILED == usr_buf[i].start) {
            perror("Fail to mmap");
            exit(EXIT_FAILURE);
        }
    }
    return 0;
}

int open_camera(void)
{
    int fd;

    /* open the video device in blocking mode; O_RDWR is required for the
     * PROT_READ | PROT_WRITE, MAP_SHARED mapping in init_mmap() */
    fd = open(FILE_VIDEO, O_RDWR);
    if (fd < 0) {
        fprintf(stderr, "%s open err \n", FILE_VIDEO);
        exit(EXIT_FAILURE);
    }
    return fd;
}

int init_camera(int fd)
{
    struct v4l2_capability cap;     /* device capabilities, e.g. video capture */
    struct v4l2_format tv_fmt;      /* frame format */
    struct v4l2_fmtdesc fmtdesc;    /* description of one supported format */

    memset(&fmtdesc, 0, sizeof(fmtdesc));
    fmtdesc.index = 0;              /* index of the format to query */
    fmtdesc.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

    /* query the driver's capabilities */
    if (ioctl(fd, VIDIOC_QUERYCAP, &cap) < 0) {
        fprintf(stderr, "fail to ioctl VIDIOC_QUERYCAP \n");
        exit(EXIT_FAILURE);
    }
    /* is it a video capture device? (a V4L2_CAP_* flag, not a buffer type) */
    if (!(cap.capabilities & V4L2_CAP_VIDEO_CAPTURE)) {
        fprintf(stderr, "The Current device is not a video capture device \n");
        exit(EXIT_FAILURE);
    }
    /* does it support streaming I/O? */
    if (!(cap.capabilities & V4L2_CAP_STREAMING)) {
        printf("The Current device does not support streaming i/o\n");
        exit(EXIT_FAILURE);
    }
    printf("\ncamera driver name is : %s\n", cap.driver);
    printf("camera device name is : %s\n", cap.card);
    printf("camera bus information: %s\n", cap.bus_info);

    /* list every format the device supports */
    while (ioctl(fd, VIDIOC_ENUM_FMT, &fmtdesc) != -1) {
        printf("\nsupport device %u.%s\n\n", fmtdesc.index + 1, fmtdesc.description);
        fmtdesc.index++;
    }

    /* set the capture format; cameras use V4L2_BUF_TYPE_VIDEO_CAPTURE */
    memset(&tv_fmt, 0, sizeof(tv_fmt));
    tv_fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    tv_fmt.fmt.pix.width = 320;
    tv_fmt.fmt.pix.height = 240;
    /* this camera (gspca_zc3xx) reports JPEG; many webcams use V4L2_PIX_FMT_MJPEG */
    tv_fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_JPEG;
    tv_fmt.fmt.pix.field = V4L2_FIELD_NONE;
    if (ioctl(fd, VIDIOC_S_FMT, &tv_fmt) < 0) {
        fprintf(stderr, "VIDIOC_S_FMT set err\n");
        close(fd);
        exit(EXIT_FAILURE);
    }
    /* the driver may adjust width/height; they must match AVI_set_video() */
    init_mmap(fd);
    return 0;
}

int start_capture(int fd)
{
    unsigned int i;
    enum v4l2_buf_type type;

    /* queue all buffers so the driver can fill them */
    for (i = 0; i < n_buffer; i++) {
        struct v4l2_buffer buf;

        memset(&buf, 0, sizeof(buf));
        buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
        buf.memory = V4L2_MEMORY_MMAP;
        buf.index = i;
        if (-1 == ioctl(fd, VIDIOC_QBUF, &buf)) {
            perror("Fail to ioctl 'VIDIOC_QBUF'");
            exit(EXIT_FAILURE);
        }
    }
    type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    if (-1 == ioctl(fd, VIDIOC_STREAMON, &type)) {
        printf("i=%u.\n", i);
        perror("VIDIOC_STREAMON");
        close(fd);
        exit(EXIT_FAILURE);
    }
    return 0;
}

/* append one JPEG frame to the AVI file */
int process_image(void *addr, size_t length)
{
#if 0
    /* alternative: save every frame as a separate JPEG file */
    FILE *fp;
    static int num = 0;
    char image_name[32];

    snprintf(image_name, sizeof(image_name), JPG, num++);
    if ((fp = fopen(image_name, "wb")) == NULL) {
        perror("Fail to fopen");
        exit(EXIT_FAILURE);
    }
    fwrite(addr, length, 1, fp);
    usleep(500);
    fclose(fp);
    return 0;
#endif
    /* every MJPEG frame decodes on its own, so mark each one as a keyframe */
    if (AVI_write_frame(out_fd, (char *)addr, (long)length, 1) < 0) {
        AVI_print_error("Fail to write frame");   /* avilib reports its own errors */
        exit(EXIT_FAILURE);
    }
    return 0;
}

int read_frame(int fd)
{
    struct v4l2_buffer buf;

    memset(&buf, 0, sizeof(buf));
    buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    buf.memory = V4L2_MEMORY_MMAP;
    /* dequeue a filled buffer */
    if (-1 == ioctl(fd, VIDIOC_DQBUF, &buf)) {
        perror("Fail to ioctl 'VIDIOC_DQBUF'");
        exit(EXIT_FAILURE);
    }
    assert(buf.index < n_buffer);
    /* write only the bytes the driver filled (bytesused), not the whole buffer */
    process_image(usr_buf[buf.index].start, buf.bytesused);
    /* give the buffer back to the driver */
    if (-1 == ioctl(fd, VIDIOC_QBUF, &buf)) {
        perror("Fail to ioctl 'VIDIOC_QBUF'");
        exit(EXIT_FAILURE);
    }
    return 1;
}

/* capture 400 frames */
int mainloop(int fd)
{
    int count = 400;

    while (count-- > 0) {
        for (;;) {
            fd_set fds;
            struct timeval tv;
            int r;
            float ret = 0;

            ret = get_main_time(&time_mark, 1);
            FD_ZERO(&fds);
            FD_SET(fd, &fds);
            tv.tv_sec = 2;                /* timeout */
            tv.tv_usec = 0;
            /* wait until a frame is ready */
            r = select(fd + 1, &fds, NULL, NULL, &tv);
            ret = get_main_time(&time_mark, 1);   /* time spent waiting in select() */
            //printf("select wait time = %f\n", ret);
            (void)ret;
            if (-1 == r) {
                if (EINTR == errno)
                    continue;
                perror("Fail to select");
                exit(EXIT_FAILURE);
            }
            if (0 == r) {
                fprintf(stderr, "select Timeout\n");
                exit(EXIT_FAILURE);
            }
            if (read_frame(fd))
                break;
        }
    }
    return 0;
}

void stop_capture(int fd)
{
    enum v4l2_buf_type type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

    if (-1 == ioctl(fd, VIDIOC_STREAMOFF, &type)) {
        perror("Fail to ioctl 'VIDIOC_STREAMOFF'");
        exit(EXIT_FAILURE);
    }
}

void close_camera_device(int fd)
{
    unsigned int i;

    for (i = 0; i < n_buffer; i++) {
        if (-1 == munmap(usr_buf[i].start, usr_buf[i].length)) {
            perror("Fail to munmap");
            exit(EXIT_FAILURE);
        }
    }
    free(usr_buf);
    if (-1 == close(fd)) {
        perror("Fail to close fd");
        exit(EXIT_FAILURE);
    }
}

void init_avi(void)
{
    char *filename = "avi_test.avi";

    out_fd = AVI_open_output_file(filename);
    if (out_fd != NULL) {
        /* 320x240, 25 fps, FOURCC "MJPG"; must match the capture format */
        AVI_set_video(out_fd, 320, 240, 25, "MJPG");
    } else {
        AVI_print_error("Fail to open AVI");
        exit(EXIT_FAILURE);
    }
}

void close_avi(void)
{
    AVI_close(out_fd);   /* writes the index and finalizes the header */
}

int main(void)
{
    int fd;

    fd = open_camera();
    init_avi();
    init_camera(fd);
    start_capture(fd);
    mainloop(fd);
    stop_capture(fd);
    close_avi();
    close_camera_device(fd);
    return 0;
}
```
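A note on frame size: for a V4L2 buffer, `length` is the capacity of the mapped buffer, while `bytesused` is the number of bytes the driver actually filled. Writing the full `length` stores padding after the JPEG end-of-image marker (0xFF 0xD9) in every AVI chunk. If a driver's `bytesused` turns out to be unreliable, one fallback is to trim each frame at its last EOI marker. This is a minimal sketch; `jpeg_trim_to_eoi` is a hypothetical helper, not part of V4L2 or avilib.

```c
#include <stddef.h>

/* Hypothetical helper: return the length of the JPEG data in buf, up to and
 * including the last 0xFF 0xD9 (EOI) marker, or 0 if no EOI is found. */
size_t jpeg_trim_to_eoi(const unsigned char *buf, size_t len)
{
    size_t i;

    if (len < 2)
        return 0;
    /* scan backwards so trailing padding is skipped first */
    for (i = len - 1; i > 0; i--) {
        if (buf[i - 1] == 0xFF && buf[i] == 0xD9)
            return i + 1;
    }
    return 0;
}
```

Inside `read_frame()` it could be applied as `jpeg_trim_to_eoi(usr_buf[buf.index].start, usr_buf[buf.index].length)` and the result passed to `process_image()` as the frame length. Scanning from the end is safe for the main image because entropy-coded JPEG data stuffs every 0xFF byte with 0x00, so 0xFF 0xD9 cannot appear inside the scan data.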

Build and run output:

root@ubuntu:/home/share/test/v4l2_MJPEG_AVI# make clean

rm -f *.o a.out test core *~ *.avi

root@ubuntu:/home/share/test/v4l2_MJPEG_AVI# make

gcc -c -o main.o main.c

gcc -c -o avilib.o avilib.c

gcc -o test main.o avilib.o

root@ubuntu:/home/share/test/v4l2_MJPEG_AVI# ./test

camera driver name is : gspca_zc3xx

camera device name is : PC Camera

camera bus information: usb-0000:02:00.0-2.1

support device 1.JPEG

n_buffer = 6

root@ubuntu:/home/share/test/v4l2_MJPEG_AVI# ls

avilib.c avilib.h avilib.o avi_test.avi main.c main.o Makefile test

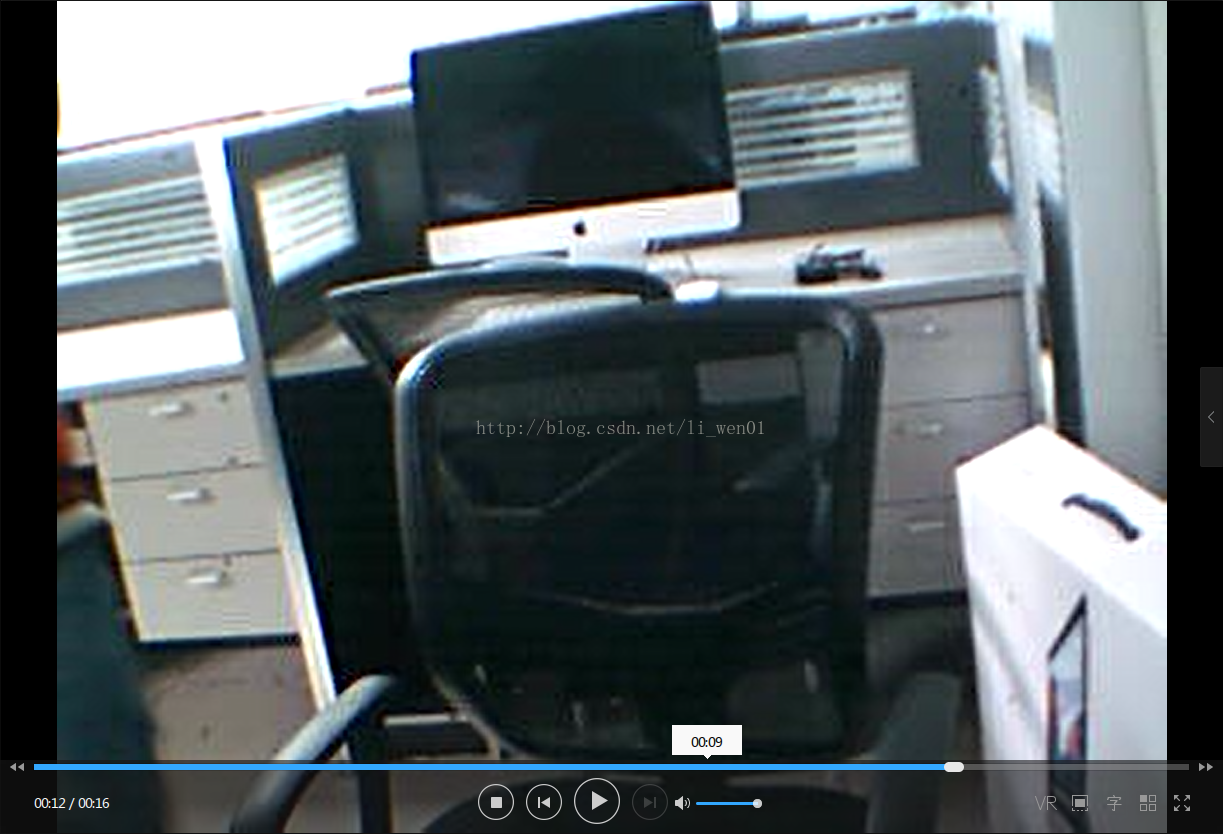

root@ubuntu:/home/share/test/v4l2_MJPEG_AVI#生成的avi_est.avi 文件,在迅雷播放器上播放如下:

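Note that, possibly because of a problem with my USB driver, reading each JPEG frame takes too long, so the recorded video plays back as if fast-forwarded. The AVI header declares 25 fps (the value passed to AVI_set_video), so when frames actually arrive more slowly than that, the player shows them faster than real time. Also, requesting too few buffers when setting up V4L2 memory mapping can slow down data capture as well.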
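One way to avoid the fast-forward effect is to measure the real capture rate and store that in the header instead of the fixed 25 fps. The sketch below computes frames per second from two `gettimeofday()` timestamps; `measured_fps` is a hypothetical helper. Calling `AVI_set_video()` a second time with the measured value just before `AVI_close()` is an assumption: it works only if your copy of avilib rewrites the header from the stored frame rate when the file is closed, as the transcode-derived avilib does, so check avilib.c first.

```c
#include <sys/time.h>

/* Hypothetical helper: average frames per second between two timestamps.
 * Returns 0.0 if no time has elapsed. */
double measured_fps(long frames, const struct timeval *start, const struct timeval *end)
{
    double elapsed = (double)(end->tv_sec - start->tv_sec)
                   + (double)(end->tv_usec - start->tv_usec) * 1e-6;

    if (elapsed <= 0.0)
        return 0.0;
    return (double)frames / elapsed;
}
```

Usage, under the assumption above: take `gettimeofday(&t0, NULL)` before `mainloop()` and `gettimeofday(&t1, NULL)` after it, then call `AVI_set_video(out_fd, 320, 240, measured_fps(400, &t0, &t1), "MJPG")` before `close_avi()`.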

The complete project code can be downloaded here: avilib: wrapping JPEG data into an AVI video

This blog will no longer be updated.

New articles and the attached project files have moved to

WeChat official account: liwen01

liwen01 2022.08.30
