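Background:

live555 is a well-known open-source streaming media framework that shows up in many real-world projects. In an Android player it can serve as the stream-pulling (client) side of the streaming layer; for RTSP and multicast playback in particular, live555 is quite stable.

This time I ported it to the Android SDK and wrote small RTSP and multicast stream-pulling programs, purely for fun and to get familiar with live555.

The RTSP program is essentially live555's own test code, testRTSPClient.cpp, with only minor changes so that it can fetch the RTSP stream of a TV channel, so I will leave live555's internal RTSP flow for a later post.

This post covers porting live555 to Android and a simple multicast stream-pulling program.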

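Porting live555 to Android

There are plenty of guides on CSDN for porting live555 to Android, and it is not difficult. Here is a brief description of how I did it.

First, download the live555 source from the official site: http://www.live555.com/liveMedia/public/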

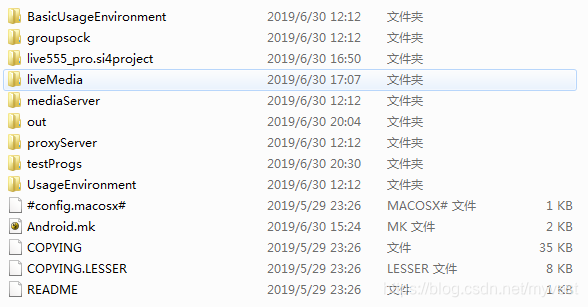

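For Android, the directories that are not needed can be deleted. I kept only the ones the build uses; the Android.mk below references liveMedia, groupsock, UsageEnvironment and BasicUsageEnvironment.

Then write an Android.mk file, shown below.

It pulls in every .cpp and .c file under liveMedia, plus the files needed from the other directories.

LOCAL_CPPFLAGS and LOCAL_LDFLAGS are set mainly for STL support.

Building with this file produces liblive555.so in the out directory under the live555 source tree.

A few minor errors may show up during compilation, and they vary somewhat between live555 versions. They are mostly NDK compatibility issues that are easy to fix after a quick search online.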

LOCAL_PATH := $(call my-dir)

include $(CLEAR_VARS)

LOCAL_MODULE := liblive555
LOCAL_MODULE_PATH := $(LOCAL_PATH)/out

LOCAL_C_INCLUDES := \
    $(LOCAL_PATH) \
    $(LOCAL_PATH)/BasicUsageEnvironment/include \
    $(LOCAL_PATH)/BasicUsageEnvironment \
    $(LOCAL_PATH)/UsageEnvironment/include \
    $(LOCAL_PATH)/UsageEnvironment \
    $(LOCAL_PATH)/groupsock/include \
    $(LOCAL_PATH)/groupsock \
    $(LOCAL_PATH)/liveMedia/include \
    $(LOCAL_PATH)/liveMedia

LOCAL_MODULE_TAGS := optional

prebuilt_stdcxx_PATH := prebuilts/ndk/current/sources/cxx-stl/gnu-libstdc++

SRC_LIST := $(wildcard $(LOCAL_PATH)/liveMedia/*.cpp)
SRC_LIST += $(wildcard $(LOCAL_PATH)/liveMedia/*.c)
LOCAL_SRC_FILES := $(SRC_LIST:$(LOCAL_PATH)/%=%)

LOCAL_SRC_FILES += \
    groupsock/GroupsockHelper.cpp \
    groupsock/GroupEId.cpp \
    groupsock/inet.c \
    groupsock/Groupsock.cpp \
    groupsock/NetInterface.cpp \
    groupsock/NetAddress.cpp \
    groupsock/IOHandlers.cpp \
    UsageEnvironment/UsageEnvironment.cpp \
    UsageEnvironment/HashTable.cpp \
    UsageEnvironment/strDup.cpp \
    BasicUsageEnvironment/BasicUsageEnvironment0.cpp \
    BasicUsageEnvironment/BasicUsageEnvironment.cpp \
    BasicUsageEnvironment/BasicTaskScheduler0.cpp \
    BasicUsageEnvironment/BasicTaskScheduler.cpp \
    BasicUsageEnvironment/DelayQueue.cpp \
    BasicUsageEnvironment/BasicHashTable.cpp

LOCAL_LDLIBS := -lm -llog
LOCAL_CPPFLAGS := -fexceptions -DXLOCALE_NOT_USED=1 -DNULL=0 -DNO_SSTREAM=1 -UIP_ADD_SOURCE_MEMBERSHIP
LOCAL_LDFLAGS := -L$(prebuilt_stdcxx_PATH)/libs/$(TARGET_CPU_ABI) -lgnustl_static -lsupc++

include $(BUILD_SHARED_LIBRARY)

Multicast stream-pulling program

The code is based on the live555 test program testMPEG2TransportReceiver.cpp.

1.

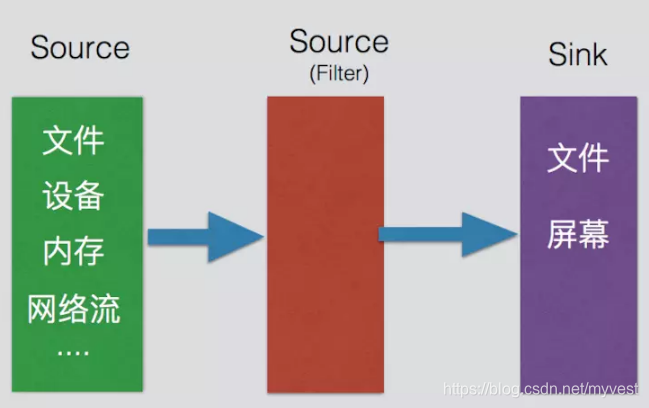

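Source and Sink are two very important concepts in live555.

A Source is the sending end, the start of a stream. Think of it as the producer: it reads data from a file or from the network.

A Sink is the receiving end, the end of a stream. Think of it as the consumer.

Sources can be chained in several stages, with each stage processing the output of the one before it. Such an intermediate stage is called a filter (and is itself a source). Put simply, the data flows source -> filter(s) -> sink.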
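To make the source / filter / sink relationship concrete, here is a minimal sketch in plain C++. None of these types are live555 classes; ToySource, ListSource, UpperFilter, CollectSink and runToyPipeline are hypothetical names made up for illustration. The sketch is also synchronous, whereas live555 delivers frames asynchronously through callbacks.

```cpp
#include <cstddef>
#include <cstring>
#include <string>
#include <vector>

// Hypothetical stand-ins for the roles described above; not live555 classes.
struct ToySource {
    virtual ~ToySource() {}
    // Copies up to maxSize bytes of the next frame into 'to'.
    // Returns the number of bytes delivered, or 0 when the stream has ended.
    virtual std::size_t getNextFrame(unsigned char* to, std::size_t maxSize) = 0;
};

// Start of the chain: produces frames from an in-memory list.
struct ListSource : ToySource {
    std::vector<std::string> frames;
    std::size_t next;
    explicit ListSource(const std::vector<std::string>& f) : frames(f), next(0) {}
    std::size_t getNextFrame(unsigned char* to, std::size_t maxSize) {
        if (next >= frames.size()) return 0;
        const std::string& f = frames[next++];
        std::size_t n = f.size() < maxSize ? f.size() : maxSize;
        std::memcpy(to, f.data(), n);
        return n;
    }
};

// A filter is itself a source: it pulls from an upstream source and
// transforms the data (here it upper-cases ASCII letters).
struct UpperFilter : ToySource {
    ToySource& input;
    explicit UpperFilter(ToySource& in) : input(in) {}
    std::size_t getNextFrame(unsigned char* to, std::size_t maxSize) {
        std::size_t n = input.getNextFrame(to, maxSize);
        for (std::size_t i = 0; i < n; ++i)
            if (to[i] >= 'a' && to[i] <= 'z')
                to[i] = static_cast<unsigned char>(to[i] - 'a' + 'A');
        return n;
    }
};

// End of the chain: keeps pulling frames until the source runs dry.
struct CollectSink {
    std::string collected;
    void consume(ToySource& source) {
        unsigned char buffer[64];
        std::size_t n;
        while ((n = source.getNextFrame(buffer, sizeof buffer)) > 0)
            collected.append(reinterpret_cast<char*>(buffer), n);
    }
};

// Wires source -> filter -> sink and returns what the sink received.
std::string runToyPipeline(const std::vector<std::string>& frames) {
    ListSource source(frames);
    UpperFilter filter(source);
    CollectSink sink;
    sink.consume(filter);
    return sink.collected;
}
```

The sink only ever talks to the last stage of the chain, so a filter can be added or removed without changing the sink.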

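2.

The Source differs depending on whether the multicast stream is RTP-encapsulated or sent as raw TS over UDP.

For RTP encapsulation, create a SimpleRTPSource.

For raw UDP, create a BasicUDPSource.

In both cases an MPEG2TransportStreamFramer filter is also created to handle the TS data received from the source. The filter is optional (testMPEG2TransportReceiver.cpp does not use it), but routing the stream through MPEG2TransportStreamFramer lets you parse and process the TS along the way.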
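The two cases need different sources because the datagrams look different on the wire: an RTP datagram carries at least a 12-byte RTP header before the first TS packet, while a raw UDP datagram starts directly with the TS sync byte 0x47. The sketch below shows that difference in plain C++. tsPayloadOffset is a hypothetical helper, not part of live555, and it ignores RTP padding. Payload type 33 is the static RTP payload type for MPEG-2 TS, the same value the receiver passes to SimpleRTPSource.

```cpp
#include <cstddef>

const unsigned char kTsSync = 0x47;        // first byte of every TS packet
const std::size_t kRtpFixedHeader = 12;    // fixed part of the RTP header
const unsigned kRtpPayloadTypeMp2t = 33;   // static payload type for MP2T

// Hypothetical helper: returns the offset of the first TS packet in a
// datagram (0 for raw UDP, 12 or more for RTP), or -1 if the datagram
// matches neither layout.
long tsPayloadOffset(const unsigned char* data, std::size_t size) {
    if (size == 0) return -1;
    if (data[0] == kTsSync) return 0;                  // raw TS over UDP

    if (size < kRtpFixedHeader) return -1;
    if ((data[0] >> 6) != 2) return -1;                // RTP version must be 2
    if ((data[1] & 0x7Fu) != kRtpPayloadTypeMp2t) return -1;

    // Skip the fixed header plus any CSRC entries (4 bytes each).
    std::size_t offset = kRtpFixedHeader + 4u * (data[0] & 0x0Fu);

    if (data[0] & 0x10u) {                             // header extension present
        if (size < offset + 4) return -1;
        std::size_t extWords =
            (static_cast<std::size_t>(data[offset + 2]) << 8) | data[offset + 3];
        offset += 4 + 4 * extWords;
    }

    if (offset >= size || data[offset] != kTsSync) return -1;
    return static_cast<long>(offset);
}
```

A raw TS byte 0x47 has its top two bits set to 01, so it can never be mistaken for an RTP version-2 header, which is why checking the sync byte first is safe here.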

The code for main() is as follows:

int main(int argc, char** argv) {
    // env and sessionState are declared outside main() (not shown here).
    if (argc < 2) {
        return 1;
    }

    char ipStr[64] = "";
    unsigned short portNum = 0;
    Boolean isRTP = (strstr(argv[1], "rtp://") != 0) ? True : False;

    // %63[^:] bounds the copy so that a long host string cannot overflow ipStr.
    if (isRTP) {
        sscanf(argv[1], "rtp://%63[^:]:%hu", ipStr, &portNum);
    } else {
        sscanf(argv[1], "udp://%63[^:]:%hu", ipStr, &portNum);
    }
    if (portNum == 0 || ipStr[0] == 0) {
        return 1;
    }

    TaskScheduler* scheduler = BasicTaskScheduler::createNew();
    env = BasicUsageEnvironment::createNew(*scheduler);

    struct in_addr sessionAddress;
    sessionAddress.s_addr = our_inet_addr(ipStr);
    const unsigned char ttl = 1; // low, in case routers don't admin scope

    // RTP & UDP socket
    const Port port(portNum);
    Groupsock inputsock(*env, sessionAddress, port, ttl);

    // RTCP socket
    const Port rtcpPort(portNum + 1);
    Groupsock rtcpGroupsock(*env, sessionAddress, rtcpPort, ttl);

#if 1
    if (isRTP) {
        // RTP
        // Create the data source: a "MPEG-2 TransportStream RTP source" (which uses a 'simple' RTP payload format):
        sessionState.udpSource = NULL;
        sessionState.rtpSource = SimpleRTPSource::createNew(*env, &inputsock, 33, 90000, "video/MP2T", 0, False /*no 'M' bit*/);
        sessionState.readSource = MPEG2TransportStreamFramer::createNew(*env, sessionState.rtpSource);
        if (sessionState.readSource == NULL) {
            *env << "create source error...\n";
            return 1;
        }

        // Create (and start) a 'RTCP instance' for the RTP source:
        const unsigned estimatedSessionBandwidth = 5000; // in kbps; for RTCP b/w share
        const unsigned maxCNAMElen = 100;
        unsigned char CNAME[maxCNAMElen + 1];
        gethostname((char*)CNAME, maxCNAMElen);
        CNAME[maxCNAMElen] = '\0'; // just in case
        sessionState.rtcpInstance
            = RTCPInstance::createNew(*env, &rtcpGroupsock,
                                      estimatedSessionBandwidth, CNAME,
                                      NULL /* we're a client */, sessionState.rtpSource);
        // Note: This starts RTCP running automatically
    } else {
        // UDP
        // Create the data source: a "MPEG-2 TransportStream udp source"
        sessionState.rtpSource = NULL;
        sessionState.udpSource = BasicUDPSource::createNew(*env, &inputsock);
        sessionState.readSource = MPEG2TransportStreamFramer::createNew(*env, sessionState.udpSource);
        if (sessionState.readSource == NULL) {
            *env << "create source error...\n";
            return 1;
        }
    }

    // ...... (rest omitted)

}
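The URL handling at the top of main() can also be pulled into a small helper that is easy to test on its own. The following is only a sketch under my own assumptions: StreamUrl, parseStreamUrl and isIpv4Multicast are hypothetical names, not live555 APIs. Unlike the strstr check in main(), it requires the scheme to be at the very start of the string, and it adds a check that the address really lies in the IPv4 multicast range 224.0.0.0 to 239.255.255.255.

```cpp
#include <cstdio>
#include <cstring>

// Hypothetical result type for the parsed command-line URL.
struct StreamUrl {
    bool isRtp;            // true for rtp://, false for udp://
    char ip[64];           // dotted-quad address as text
    unsigned short port;   // UDP port
};

// Parses "rtp://a.b.c.d:port" or "udp://a.b.c.d:port".
// Returns false on any other scheme, a missing port, or a port outside 1..65535.
bool parseStreamUrl(const char* url, StreamUrl& out) {
    out.isRtp = false;
    out.ip[0] = '\0';
    out.port = 0;

    const char* rest;
    if (std::strncmp(url, "rtp://", 6) == 0) {
        out.isRtp = true;
        rest = url + 6;
    } else if (std::strncmp(url, "udp://", 6) == 0) {
        rest = url + 6;
    } else {
        return false;
    }

    unsigned port = 0;
    // %63[^:] leaves room for the terminating NUL in the 64-byte buffer.
    if (std::sscanf(rest, "%63[^:]:%u", out.ip, &port) != 2) return false;
    if (port == 0 || port > 65535) return false;
    out.port = static_cast<unsigned short>(port);
    return true;
}

// Returns true if 'ip' is a dotted-quad IPv4 address whose first octet
// is in 224..239, i.e. a multicast group address.
bool isIpv4Multicast(const char* ip) {
    unsigned a, b, c, d;
    if (std::sscanf(ip, "%u.%u.%u.%u", &a, &b, &c, &d) != 4) return false;
    if (a > 255 || b > 255 || c > 255 || d > 255) return false;
    return a >= 224 && a <= 239;
}
```

With this in place, main() could reject a unicast address early instead of creating a Groupsock that never receives anything.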

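3.

The Sink is implemented as follows:

igmpSink inherits from MediaSink. Each time a frame arrives, afterGettingFrame is called; it then calls continuePlaying to request the next frame, which forms a loop. afterGettingFrame is therefore where the stream data gets dumped to the file mDumpFile.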

// igmpSink //

class igmpSink: public MediaSink {
public:
    // "bufferSize" should be at least as large as the largest expected input frame.
    static igmpSink* createNew(UsageEnvironment& env, char const* fileName,
                               unsigned bufferSize = 20000);

protected:
    igmpSink(UsageEnvironment& env, FILE* fid, unsigned bufferSize);
    virtual ~igmpSink();

protected: // redefined virtual functions:
    virtual Boolean continuePlaying();

protected:
    static void afterGettingFrame(void* clientData, unsigned frameSize,
                                  unsigned numTruncatedBytes,
                                  struct timeval presentationTime,
                                  unsigned durationInMicroseconds);
    virtual void afterGettingFrame(unsigned frameSize,
                                   unsigned numTruncatedBytes,
                                   struct timeval presentationTime);

    FILE* mDumpFile;
    unsigned char* fBuffer;
    unsigned fBufferSize;
};

// igmpSink //

igmpSink::igmpSink(UsageEnvironment& env, FILE* fid, unsigned bufferSize)
    : MediaSink(env), mDumpFile(fid), fBufferSize(bufferSize) {
    fBuffer = new unsigned char[bufferSize];
}

igmpSink::~igmpSink() {
    delete[] fBuffer;
    if (mDumpFile != NULL) fclose(mDumpFile);
}

igmpSink* igmpSink::createNew(UsageEnvironment& env, char const* fileName, unsigned bufferSize) {
    FILE* fid;
    fid = fopen(fileName, "wb");
    if (fid == NULL) return NULL;
    return new igmpSink(env, fid, bufferSize);
}

Boolean igmpSink::continuePlaying() {
    if (fSource == NULL) return False;
    fSource->getNextFrame(fBuffer, fBufferSize,
                          afterGettingFrame, this,
                          onSourceClosure, this);
    return True;
}

void igmpSink::afterGettingFrame(void* clientData, unsigned frameSize,
                                 unsigned numTruncatedBytes,
                                 struct timeval presentationTime,
                                 unsigned /*durationInMicroseconds*/) {
    igmpSink* sink = (igmpSink*)clientData;
    sink->afterGettingFrame(frameSize, numTruncatedBytes, presentationTime);
}

void igmpSink::afterGettingFrame(unsigned frameSize,
                                 unsigned numTruncatedBytes,
                                 struct timeval presentationTime) {
    if (numTruncatedBytes > 0) {
        envir() << "igmpSink::afterGettingFrame(): The input frame data was too large for our buffer size ("
                << fBufferSize << "). "
                << numTruncatedBytes << " bytes of trailing data was dropped! Correct this by increasing the \"bufferSize\" parameter in the \"createNew()\" call to at least "
                << fBufferSize + numTruncatedBytes << "\n";
    }

    //envir() << "afterGettingFrame, Write to file\n";
    if (mDumpFile != NULL && fBuffer != NULL) {
        fwrite(fBuffer, 1, frameSize, mDumpFile);
    }

    if (mDumpFile == NULL || fflush(mDumpFile) == EOF) {
        // The output file has closed. Handle this the same way as if the input source had closed:
        if (fSource != NULL) fSource->stopGettingFrames();
        onSourceClosure();
        return;
    }

    // Then try getting the next frame:
    continuePlaying();
}

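4.

Finally, just connect the source to the sink and start playing.

The data flow is: udpSource / rtpSource -> readSource (MPEG2TransportStreamFramer) -> igmpSink.

env->taskScheduler().doEventLoop() then loops indefinitely inside live555 by repeatedly calling SingleStep. (I will go through live555's internals when analyzing the RTSP flow in a later post.) The multicast stream is dumped to the specified file.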

sessionState.sink = igmpSink::createNew(*env, "/storage/external_storage/sda1/testIGMP.ts", 5120);

// Finally, start receiving the multicast stream:
*env << "Beginning receiving multicast stream...\n";
sessionState.sink->startPlaying(*sessionState.readSource, afterPlaying, NULL);

env->taskScheduler().doEventLoop(); // does not return
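The "loop that never returns" is easier to picture with a toy model. The sketch below is plain C++ and does not use live555; ToyScheduler, ToyReceiver, onFrame and receiveFrames are hypothetical names. It mimics the pattern described above: a callback handles one frame and then asks for the next, while the event loop keeps stepping until a watch variable is set. As far as I know, live555's doEventLoop() also accepts an optional watch-variable argument, although its exact type differs between releases, so treat that detail as an assumption.

```cpp
#include <deque>
#include <utility>

// Hypothetical toy scheduler; it is not live555's BasicTaskScheduler.
class ToyScheduler {
public:
    typedef void (*Task)(void* clientData);

    void schedule(Task task, void* clientData) {
        fPending.push_back(std::make_pair(task, clientData));
    }

    // Runs at most one pending task. (A real scheduler would block here
    // waiting for socket activity instead of returning immediately.)
    void singleStep() {
        if (fPending.empty()) return;
        std::pair<Task, void*> next = fPending.front();
        fPending.pop_front();
        next.first(next.second);
    }

    // Keeps stepping until *watchVariable becomes non-zero.
    // With a null pointer the loop never returns.
    void doEventLoop(volatile char* watchVariable) {
        while (watchVariable == 0 || *watchVariable == 0) singleStep();
    }

private:
    std::deque<std::pair<Task, void*> > fPending;
};

struct ToyReceiver {
    ToyScheduler* scheduler;
    int framesWanted;
    int framesSeen;
    volatile char done;
};

// Plays the role of afterGettingFrame: handle one frame, then either
// ask for the next one or tell the loop to stop.
void onFrame(void* clientData) {
    ToyReceiver* r = static_cast<ToyReceiver*>(clientData);
    ++r->framesSeen;
    if (r->framesSeen < r->framesWanted) {
        r->scheduler->schedule(onFrame, r);
    } else {
        r->done = 1;
    }
}

// Receives 'count' toy frames and returns how many were handled.
int receiveFrames(int count) {
    if (count <= 0) return 0;
    ToyScheduler scheduler;
    ToyReceiver receiver = { &scheduler, count, 0, 0 };
    scheduler.schedule(onFrame, &receiver);
    scheduler.doEventLoop(&receiver.done);
    return receiver.framesSeen;
}
```

Passing a watch variable is what would let a test program like this one stop cleanly after a fixed amount of data instead of running until it is killed.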

5.

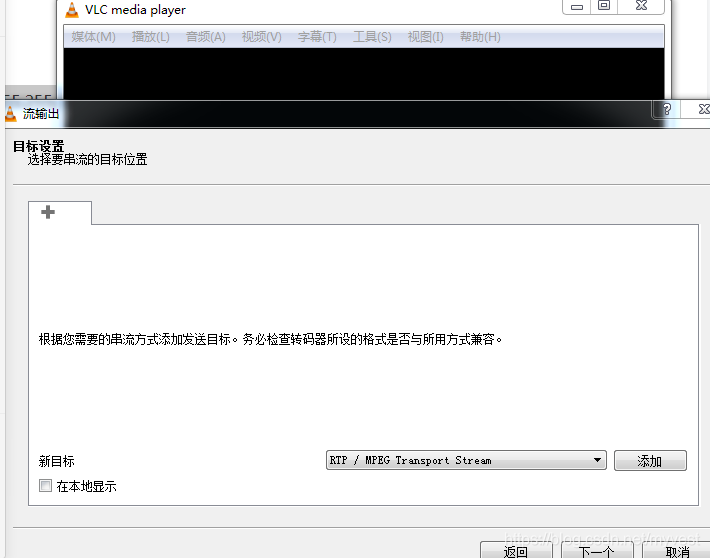

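For testing, you can push a stream with VLC, over either RTP or UDP. The stream is dumped to the path we specified; pull the file off the device and play it to check that it matches what was sent.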
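Besides playing the file, a quick structural check can catch a broken dump. A TS file is a sequence of 188-byte packets, each starting with the sync byte 0x47, and since the data passes through MPEG2TransportStreamFramer I would expect the dump to be aligned on packet boundaries. The sketch below is a hypothetical checker of my own (countTsPackets and countTsPacketsInFile are not live555 functions). It reads the whole file into memory, which is fine for a short test capture but not for a long recording.

```cpp
#include <cstddef>
#include <cstdio>
#include <vector>

const std::size_t kTsPacketSize = 188;
const unsigned char kTsSyncByte = 0x47;

// Returns the number of TS packets in the buffer, or -1 if the size is
// not a multiple of 188 or any packet does not start with the sync byte.
long countTsPackets(const unsigned char* data, std::size_t size) {
    if (size % kTsPacketSize != 0) return -1;
    for (std::size_t off = 0; off < size; off += kTsPacketSize) {
        if (data[off] != kTsSyncByte) return -1;
    }
    return static_cast<long>(size / kTsPacketSize);
}

// Loads a whole file and checks it with countTsPackets().
// Returns -1 if the file cannot be opened, 0 for an empty file.
long countTsPacketsInFile(const char* path) {
    std::FILE* f = std::fopen(path, "rb");
    if (f == NULL) return -1;

    std::vector<unsigned char> bytes;
    unsigned char chunk[4096];
    std::size_t n;
    while ((n = std::fread(chunk, 1, sizeof chunk, f)) > 0) {
        bytes.insert(bytes.end(), chunk, chunk + n);
    }
    std::fclose(f);

    return bytes.empty() ? 0 : countTsPackets(&bytes[0], bytes.size());
}
```

After copying the dump to a PC, calling countTsPacketsInFile("testIGMP.ts") should return a positive packet count; a result of -1 suggests the capture lost alignment or was cut off mid-packet.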
