目录

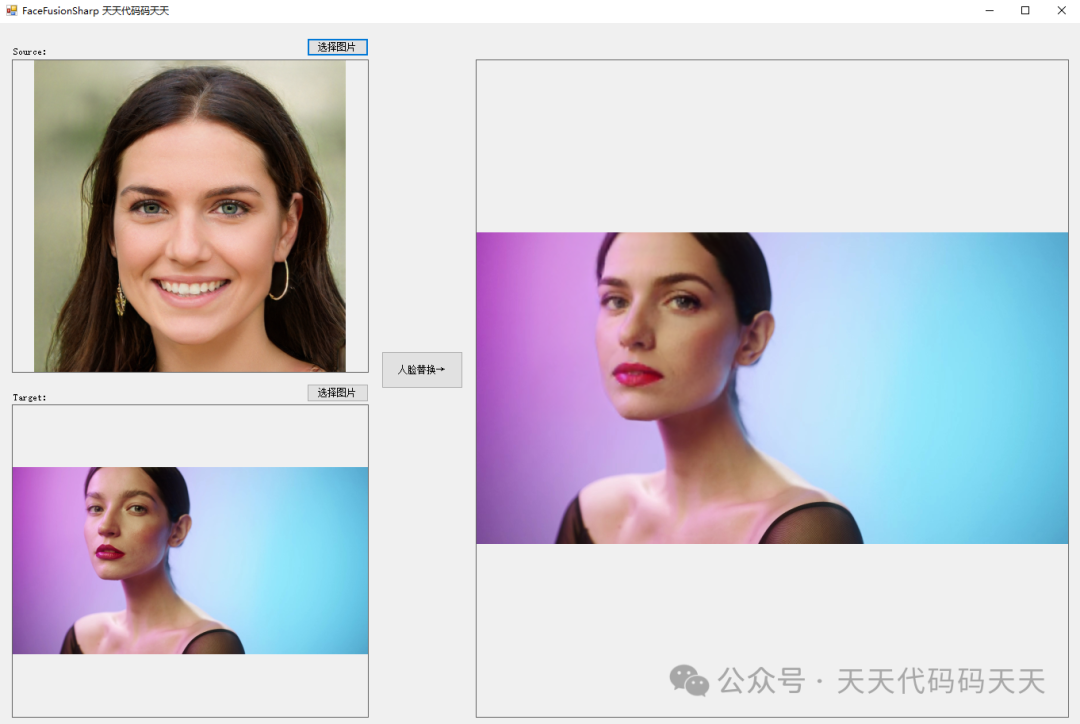

先看效果

人脸替换

Overview

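The C# version of Facefusion consists of the following five steps:

1. Face detection with yoloface_8n.onnx

2. Face landmark extraction with 2dfan4.onnx

3. Face embedding (feature vector) extraction with arcface_w600k_r50.onnx

4. Face swapping with inswapper_128.onnx

5. Face enhancement with gfpgan_1.4.onnx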

This article shows how to implement step 4 of the C# Facefusion, face swapping, with inswapper_128.onnx.

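At this point the face swap is essentially complete, but the generated image is rather blurry: the model outputs only a 128×128 face crop, which has to be enlarged again when it is pasted back into the full-size target image. Sharpening it is the job of step 5, face enhancement.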
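A quick back-of-the-envelope check of that enlargement, as a sketch: it reuses the target_landmark_5 values hard-coded in the calling code below and the normed_template points from SwapFace, and takes the ratio of the two eye distances as a proxy for the scale of the affine transform (an approximation; the real transform is fitted on all five points). The helper name eye_distance is hypothetical.

```python
import math

# target_landmark_5 as hard-coded in the calling code (Form4): eyes, nose, mouth corners
target_landmark_5 = [(485.602539, 247.84906), (704.237549, 247.422546), (527.5082, 360.211731),
                     (485.430084, 495.7987), (647.741638, 505.131042)]
# normed_template used by SwapFace: the same five points inside the 128x128 crop
normed_template = [(46.29459968, 51.69629952), (81.53180032, 51.50140032), (64.02519936, 71.73660032),
                   (49.54930048, 92.36550016), (78.72989952, 92.20409984)]


def eye_distance(points):
    """Distance between the first two landmarks (the two eyes)."""
    (x0, y0), (x1, y1) = points[0], points[1]
    return math.hypot(x1 - x0, y1 - y0)


# Rough enlargement applied to the 128x128 swapped crop when it is pasted back
upscale = eye_distance(target_landmark_5) / eye_distance(normed_template)
print(f"each crop pixel covers about {upscale:.1f} target pixels per axis")
```

For this demo image the factor comes out at roughly 6, so every pixel produced by inswapper_128 is stretched over about 6×6 target pixels.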

For comparison, we also walk through the C++ and Python implementations.

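Previous posts in the series:

C# Facefusion: Let Your Face Blend into the World! - 01 Face Detection

C# Facefusion: Let Your Face Blend into the World! - 02 Face Landmarks

C# Facefusion: Let Your Face Blend into the World! - 03 Face Embeddings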

Model information

Inputs

-------------------------

name:target

tensor:Float[1, 3, 128, 128]

name:source

tensor:Float[1, 512]

---------------------------------------------------------------

Outputs

-------------------------

name:output

tensor:Float[1, 3, 128, 128]

---------------------------------------------------------------
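The `target` input is the aligned 128×128 face crop in NCHW layout, and `source` is the projected 512-d embedding. A minimal NumPy sketch of how a BGR crop becomes the `target` tensor, mirroring the Python preprocessing shown later in this post (BGR to RGB, divide by 255, subtract INSWAPPER_128_MODEL_MEAN, divide by INSWAPPER_128_MODEL_STD, HWC to CHW, add a batch axis). The function name crop_to_target_tensor is hypothetical.

```python
import numpy as np

# Normalisation constants used by all three versions in this post (mean 0, std 1)
INSWAPPER_128_MODEL_MEAN = np.array([0.0, 0.0, 0.0], dtype=np.float32)
INSWAPPER_128_MODEL_STD = np.array([1.0, 1.0, 1.0], dtype=np.float32)


def crop_to_target_tensor(crop_bgr: np.ndarray) -> np.ndarray:
    """Turn a 128x128x3 uint8 BGR crop into the [1, 3, 128, 128] float32 'target' input."""
    rgb = crop_bgr[:, :, ::-1].astype(np.float32) / 255.0           # BGR -> RGB, scale to [0, 1]
    rgb = (rgb - INSWAPPER_128_MODEL_MEAN) / INSWAPPER_128_MODEL_STD
    chw = np.transpose(rgb, (2, 0, 1))                               # HWC -> CHW
    return np.ascontiguousarray(chw[np.newaxis, ...], dtype=np.float32)


# A pure-blue crop (blue is channel 0 in OpenCV's BGR order)
crop = np.zeros((128, 128, 3), dtype=np.uint8)
crop[..., 0] = 255
tensor = crop_to_target_tensor(crop)
print(tensor.shape, tensor[0, 2, 0, 0], tensor[0, 0, 0, 0])  # blue ends up in channel 2 (RGB order)
```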

Code

Calling code (Form4)

using Newtonsoft.Json;

using OpenCvSharp;

using OpenCvSharp.Extensions;

using System;

using System.Collections.Generic;

using System.Drawing;

using System.Windows.Forms;

namespace FaceFusionSharp

{

public partial class Form4 : Form

{

public Form4()

{

InitializeComponent();

}

string fileFilter = "*.*|*.bmp;*.jpg;*.jpeg;*.tiff;*.png";

string startupPath = "";

string source_path = "";

string target_path = "";

SwapFace swap_face;

private void button2_Click(object sender, EventArgs e)

{

OpenFileDialog ofd = new OpenFileDialog();

ofd.Filter = fileFilter;

if (ofd.ShowDialog() != DialogResult.OK) return;

pictureBox1.Image = null;

source_path = ofd.FileName;

pictureBox1.Image = new Bitmap(source_path);

}

private void button3_Click(object sender, EventArgs e)

{

OpenFileDialog ofd = new OpenFileDialog();

ofd.Filter = fileFilter;

if (ofd.ShowDialog() != DialogResult.OK) return;

pictureBox2.Image = null;

target_path = ofd.FileName;

pictureBox2.Image = new Bitmap(target_path);

}

private void button1_Click(object sender, EventArgs e)

{

if (pictureBox1.Image == null || pictureBox2.Image == null)

{

return;

}

pictureBox3.Image = null;

button1.Enabled = false;

Application.DoEvents();

Mat source_img = Cv2.ImRead(source_path);

Mat target_img = Cv2.ImRead(target_path);

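// Source face embedding (512 floats), hard-coded for this demo; in the full pipeline it comes from step 3 (arcface_w600k_r50.onnx)

List<float> source_face_embedding = new List<float>();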

string source_face_embeddingStr = "[1.27829754,-0.843142569,-0.06048897,1.217865,0.05804708,-0.922453,-0.3946921,0.354699373,-0.463791549,-0.2642475,0.105297916,-0.7907695,-0.356749445,0.3069641,-0.8946595,0.170947254,1.44868386,0.759071946,-0.269189358,-0.5085244,-0.9652322,-1.04884982,0.9766977,1.07973742,0.0248709321,-0.4092621,-0.6058987,-0.848997355,-1.03252912,-0.9534966,-0.9342567,0.9198751,0.4637577,-0.12646234,-0.959137,0.215259671,0.202829659,0.386633456,-1.06374037,0.9076231,0.4178339,-0.307011932,0.175406933,-0.8017055,0.0265568867,-0.0304557681,0.381101757,-0.613952756,-0.446841478,-0.02897077,-1.83899212,-0.303131342,-0.4501938,-0.156551331,0.463839,2.24176764,-1.44412839,0.07924119,-0.478609055,-0.07747641,-0.227516085,-0.6149595,0.247599155,0.7158034,-0.989331543,0.336038023,-0.260178417,-0.736905932,0.6045121,-0.5151367,0.0177601129,0.2451405,-0.4607039,-0.9134231,-0.9179117,0.0190813988,-0.0810294747,-0.7007484,0.05699861,0.794708,0.189010963,-0.6672335,-0.0455337241,0.103580572,0.5497576,0.995198667,-0.957392335,0.7217704,-0.359451652,0.4541813,-0.230636075,-0.547900736,-0.5641564,1.813883,-0.7031114,0.00149327889,-1.0309937,-0.103514418,0.4285911,0.0026740334,-0.661017954,-0.6178541,0.0246957541,-0.350938439,-0.852270365,0.388598084,-0.8937982,0.472674131,0.522144735,0.799444556,0.2309232,-1.08995068,-1.442019,-1.91144061,-0.7164683,-0.6465371,0.760996938,-0.420196772,-1.26484954,-0.09949406,-0.151404992,-0.61891,-0.743678153,0.00494776666,0.20202066,-1.08374822,0.0426106676,-0.955584645,0.09357526,-0.766932249,-0.507255733,0.04017231,0.289033234,0.4830236,-0.262155324,-0.7767182,0.179391116,0.320753068,0.6572064,0.7744924,-0.880204558,-0.9739305,0.475606382,-0.0342647,0.505723536,-0.4457739,-0.6569923,-0.9067787,0.4584064,0.107994281,-0.414298415,-0.524813831,-1.03696835,-0.298128754,0.94455415,0.578622341,0.4745661,-0.5985398,0.419998139,-0.0477161035,-0.805623531,1.22836232,-0.996593058,-0.449891567,0.252311468,-1.76361179,0.204254434," +

"-1.17584419,0.0494393073,0.2145797,-1.75655448,0.548028231,-0.3806628,0.8410565,-1.35499954,0.140949339,0.591043949,0.0298345536,0.12179298,-0.7399838,-0.06857535,0.23784174,-0.690012932,-0.147698313,-0.911997,0.680546463,0.1976757,0.9299851,-0.362830281,0.318164885,-0.0501053035,-0.575328946,0.129042387,1.08131313,-0.8875152,0.559377253,1.11513853,1.12112761,-0.123456843,1.202241,-0.6952012,-0.557888448,0.540348053,-0.521905243,-0.138044775,-0.550300062,-2.14852977,-1.39409924,0.104200155,0.839064062,0.281964779,-0.202217847,-0.480831623,1.08107018,0.7986622,-0.2772641,-1.57516074,-0.5475309,0.25043875,1.18010235,0.6972798,0.1838305,-0.151265711,0.5103554,-0.883137345,-1.34374917,0.8238913,0.373506874,-0.506602466,1.12764454,-0.00945023447,-0.0426563546,-0.671316266,0.252278179,-0.7500384,-0.858895063,-0.7738279,0.489211917,0.7337883,-0.5536902,-0.710563064,0.533735633,-0.267439723,-0.08325979,-0.9056747,1.1245147,1.34881878,0.4010091,-0.150992,-0.413697422,0.876372457,0.864017546,0.7379206,-0.6320749,-0.419689536,0.815245,-0.118938759,0.683474243,-0.6155008,-0.6915616,-0.6239222,-0.583537,0.110704079,-0.302822769,0.3435551,-1.17488611,-1.01326025,0.32583034,0.381028563,0.6072552,-0.3146818,0.371741,0.187356383,0.1772259,-1.85920739,-0.504295051,-0.8785569,0.13697955,-1.11337721,-0.01934576,-0.4575694,-1.15144432,1.89849365,-0.1349,-0.6015017,1.42154992,-0.716133237,-0.153033137,0.76939106,-0.07523422,-0.6604878,-1.48084462,0.2875409,-1.12858534,-0.5869999,-0.614957333,-1.463373,-0.6721835,-0.8257968,-0.8025705,-0.05431364,0.692136168,1.29751766,0.488991469,1.05194938,0.270692348,-0.9085438,-0.802716434,0.309471458,0.448509455,0.6789823,-0.5252856,-0.435200185,0.225147322,-0.07077629,1.345535,0.387805045,0.5236529,-0.764065266,0.0691546053,0.250542849,0.1982695,0.149731383,0.845968544,-0.566032946,0.654774547,0.07547854,0.8683217,1.290068,-0.152055,-0.803692758,-0.152090073,0.558371961,0.157687336,0.839655459,1.01181054,-0.5604553,-1.40365577,-0.0167575851,0.933371544," +

"0.078309074,-0.399255246,1.34938979,-0.119476132,0.432984,-0.300964683,0.226254016,0.012853846,0.02476523,-1.31901956,-0.127706885,-0.6488211,-0.7127493,0.749162853,-0.893739045,-0.175434247,-0.335470438,1.18117,0.492022336,1.23091626,0.406947345,-0.3563189,0.8080479,-0.426982045,-0.739384949,-0.551647067,0.1390677,0.20869185,-0.0231712535,-0.214353234,-0.174618453,0.0277073532,-0.241463527,0.9559633,0.262964159,-0.851067245,-0.03425724,0.08168835,0.3511026,-0.466765344,-0.134850383,0.08376661,1.48223615,-1.61568224,1.56967258,-0.391382277,-1.56669474,-1.37852716,0.124903291,-0.3481225,-1.23350728,-0.6862239,0.103708193,1.10754442,0.057642363,-0.321929336,-0.2979336,1.83333886,-0.904876,-0.3975336,-1.07201684,0.458736777,-0.4938286,-0.763312,-1.83132732,-0.748038769,0.475634664,0.297061145,-0.2685745,-0.0666656047,0.4759698,-1.03472865,-0.406694651,-0.4281593,-0.9864616,-0.300786138,-0.12080624,0.631304443,-0.153151155,1.42306745,-0.3394043,-0.5216301,0.9424391,0.407645643,-0.240343288,1.197725,0.62536,-0.756885648,0.510467649,0.4989131,0.0761876553,0.10052751,0.105433822,-0.167532444,0.8946594,-0.521723866,-0.580115259,-1.10355973,-0.418604881,0.163044125,0.402529866,0.385285437,0.50639534,1.8232342,0.343647063,0.8509874,-0.7942822,0.6470037,0.00863461,0.4432856,0.7659954,-0.9592937,0.5787302,-0.496584,-1.267057,-0.8610047,0.0339910947,-0.145451844,-1.28451169,-0.193874747,0.5775311,-0.537475049,0.197097167,-1.57822132,0.257652581,0.163942844,1.132039,0.108674683,-0.185894847,0.50037,0.07695928,-0.420834035,-0.3034144,0.162115663,-0.45547688,-0.295086831,-0.0236618519,0.6726147,0.764866352,0.35937,-0.330009639,0.151511714,-0.533296764,-1.08290327,0.230814755,0.06941691,-0.354930282,0.5848398,-1.68328464,-0.476737082,-1.61717749,1.00269365,-1.809915,0.6276051,-0.848550439,0.288911045,-0.4656973,0.5820218,0.851961,0.01968059,0.03812991,0.33123517,-0.349143356,0.16208598,-1.4402169,-0.6137045,-0.3490757,0.2402328,-1.21176457,0.119369812,-0.896918654,0.1288858]";

source_face_embedding = JsonConvert.DeserializeObject<List<float>>(source_face_embeddingStr);

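// Five target-face landmarks (eyes, nose tip, mouth corners), hard-coded for this demo; in the full pipeline they come from the earlier detection/landmark steps

List<Point2f> target_landmark_5 = new List<Point2f>();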

string target_landmark_5Str = "[{\"X\":485.602539,\"Y\":247.84906},{\"X\":704.237549,\"Y\":247.422546},{\"X\":527.5082,\"Y\":360.211731},{\"X\":485.430084,\"Y\":495.7987},{\"X\":647.741638,\"Y\":505.131042}]";

target_landmark_5 = JsonConvert.DeserializeObject<List<Point2f>>(target_landmark_5Str);

Mat swapimg = swap_face.process(target_img, source_face_embedding, target_landmark_5);

pictureBox3.Image = swapimg.ToBitmap();

button1.Enabled = true;

}

private void Form1_Load(object sender, EventArgs e)

{

swap_face = new SwapFace("model/inswapper_128.onnx");

target_path = "images/target.jpg";

source_path = "images/source.jpg";

pictureBox1.Image = new Bitmap(source_path);

pictureBox2.Image = new Bitmap(target_path);

}

}

}
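The hard-coded landmarks are mapped onto normed_template by Common.warp_face_by_face_landmark_5, a helper from the earlier posts that is not shown here. The numbers in normed_template are a 5-point template already scaled to the 128×128 crop (the C++ comment below notes it replaces `TEMPLATES.get(template) * crop_size` from the Python program). As an illustration only, here is a plain least-squares fit of a similarity transform (rotation, uniform scale, translation). OpenCV's `cv2.estimateAffinePartial2D` fits the same kind of 2×3 matrix and adds RANSAC; the helper, if it uses that function, may give slightly different numbers. estimate_similarity and apply_affine are hypothetical names.

```python
import numpy as np

# normed_template from SwapFace: five reference points inside the 128x128 crop
normed_template = np.array([[46.29459968, 51.69629952], [81.53180032, 51.50140032],
                            [64.02519936, 71.73660032], [49.54930048, 92.36550016],
                            [78.72989952, 92.20409984]])
# target_landmark_5 hard-coded in Form4 (pixel coordinates in the target image)
target_landmark_5 = np.array([[485.602539, 247.84906], [704.237549, 247.422546],
                              [527.5082, 360.211731], [485.430084, 495.7987],
                              [647.741638, 505.131042]])


def estimate_similarity(src: np.ndarray, dst: np.ndarray) -> np.ndarray:
    """Least-squares 2x3 matrix [[a, -b, tx], [b, a, ty]] mapping src points onto dst points."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, -y, 1.0, 0.0])   # u = a*x - b*y + tx
        rhs.append(u)
        rows.append([y, x, 0.0, 1.0])    # v = b*x + a*y + ty
        rhs.append(v)
    a, b, tx, ty = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)[0]
    return np.array([[a, -b, tx], [b, a, ty]])


def apply_affine(m: np.ndarray, pts: np.ndarray) -> np.ndarray:
    """Apply a 2x3 affine matrix to an (N, 2) array of points."""
    return pts @ m[:, :2].T + m[:, 2]


# Matrix that takes the target image into the 128x128 crop (the role of affine_matrix in SwapFace)
affine_matrix = estimate_similarity(target_landmark_5, normed_template)
aligned = apply_affine(affine_matrix, target_landmark_5)   # landmarks in crop coordinates
print(np.round(aligned, 1))
```

The residual between `aligned` and normed_template reflects head pose: five points cannot be matched exactly by a similarity transform, which is why the fit is a least-squares (or RANSAC) estimate rather than an exact solve.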

SwapFace.cs

using Microsoft.ML.OnnxRuntime;

using Microsoft.ML.OnnxRuntime.Tensors;

using OpenCvSharp;

using System;

using System.Collections.Generic;

using System.IO;

using System.Linq;

namespace FaceFusionSharp

{

internal class SwapFace

{

float[] input_image;

float[] input_embedding;

int input_height;

int input_width;

const int len_feature = 512;

float[] model_matrix;

List<Point2f> normed_template;

float FACE_MASK_BLUR = 0.3f;

int[] FACE_MASK_PADDING = new int[4] { 0, 0, 0, 0 };

float[] INSWAPPER_128_MODEL_MEAN = new float[3] { 0.0f, 0.0f, 0.0f };

float[] INSWAPPER_128_MODEL_STD = new float[3] { 1.0f, 1.0f, 1.0f };

SessionOptions options;

InferenceSession onnx_session;

public SwapFace(string modelpath)

{

input_height = 128;

input_width = 128;

options = new SessionOptions();

options.LogSeverityLevel = OrtLoggingLevel.ORT_LOGGING_LEVEL_INFO;

options.AppendExecutionProvider_CPU(0);// run on the CPU

// Create the inference session from the local model file

onnx_session = new InferenceSession(modelpath, options);// modelpath is the path to the ONNX model file

normed_template=new List<Point2f>();

normed_template.Add(new Point2f(46.29459968f, 51.69629952f));

normed_template.Add(new Point2f(81.53180032f, 51.50140032f));

normed_template.Add(new Point2f(64.02519936f, 71.73660032f));

normed_template.Add(new Point2f(49.54930048f, 92.36550016f));

normed_template.Add(new Point2f(78.72989952f, 92.20409984f));

// Read model_matrix.bin: 512 x 512 float32 values, row-major (indexed as j * 512 + i below)

model_matrix = ReadFloatDataFromBinaryFile("model/model_matrix.bin");

}

float[] ReadFloatDataFromBinaryFile(string filePath)

{

// Open a file stream and a binary reader

using (FileStream fileStream = new FileStream(filePath, FileMode.Open, FileAccess.Read))

using (BinaryReader binaryReader = new BinaryReader(fileStream))

{

// Read the whole file into a float array (BinaryReader.ReadSingle reads little-endian float32)

float[] floatData = new float[fileStream.Length / sizeof(float)];

for (int i = 0; i < floatData.Length; i++)

{

floatData[i] = binaryReader.ReadSingle();

}

return floatData;

}

}

Mat preprocess(Mat srcimg, List<Point2f> face_landmark_5, List<float> source_face_embedding, ref Mat affine_matrix, ref Mat box_mask)

{

Mat crop_img = new Mat();

affine_matrix = Common.warp_face_by_face_landmark_5(srcimg, crop_img, face_landmark_5, normed_template, new Size(128, 128));

int[] crop_size = new int[2] { crop_img.Cols, crop_img.Rows };

box_mask = Common.create_static_box_mask(crop_size, FACE_MASK_BLUR, FACE_MASK_PADDING);

Mat[] bgrChannels = Cv2.Split(crop_img);

for (int c = 0; c < 3; c++)

{

bgrChannels[c].ConvertTo(bgrChannels[c], MatType.CV_32FC1, 1 / (255.0 * INSWAPPER_128_MODEL_STD[c]), -INSWAPPER_128_MODEL_MEAN[c] / INSWAPPER_128_MODEL_STD[c]);

}

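// Channels are merged back in BGR order. The C++ and Python versions below feed the model RGB;
// if Common.ExtractMat only converts HWC to CHW without swapping channels, consider merging
// new[] { bgrChannels[2], bgrChannels[1], bgrChannels[0] } here instead.

Cv2.Merge(bgrChannels, crop_img);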

foreach (Mat channel in bgrChannels)

{

channel.Dispose();

}

input_image = Common.ExtractMat(crop_img);

crop_img.Dispose();

float linalg_norm = 0;

for (int i = 0; i < len_feature; i++)

{

linalg_norm = (float)(linalg_norm + Math.Pow(source_face_embedding[i], 2));

}

linalg_norm = (float)Math.Sqrt(linalg_norm);

input_embedding = new float[len_feature];

for (int i = 0; i < len_feature; i++)

{

float sum = 0;

for (int j = 0; j < len_feature; j++)

{

sum += (source_face_embedding[j] * model_matrix[j * len_feature + i]);

}

input_embedding[i] = sum / linalg_norm;

}

return box_mask;

}

internal Mat process(Mat target_img, List<float> source_face_embedding, List<Point2f> target_landmark_5)

{

Mat affine_matrix = new Mat();

Mat box_mask = new Mat();

preprocess(target_img, target_landmark_5, source_face_embedding, ref affine_matrix,ref box_mask);

Tensor<float> input_tensor = new DenseTensor<float>(input_image, new[] { 1, 3, input_height, input_width });

Tensor<float> input_embedding_shape = new DenseTensor<float>(input_embedding, new[] { 1, len_feature });

List<NamedOnnxValue> input_container = new List<NamedOnnxValue>

{

NamedOnnxValue.CreateFromTensor("target", input_tensor),

NamedOnnxValue.CreateFromTensor("source", input_embedding_shape)

};

var ort_outputs = onnx_session.Run(input_container).ToArray();

float[] pdata = ort_outputs[0].AsTensor<float>().ToArray();

int out_h = 128;

int out_w = 128;

int channel_step = out_h * out_w;

for (int i = 0; i < pdata.Length ; i++)

{

pdata[i] = pdata[i] * 255.0f;

if (pdata[i] < 0)

{

pdata[i] = 0;

}

if (pdata[i] > 255) {

pdata[i] = 255;

}

}

float[] temp_r = new float[channel_step];

float[] temp_g = new float[channel_step];

float[] temp_b = new float[channel_step];

Array.Copy(pdata, temp_r, channel_step);

Array.Copy(pdata, channel_step, temp_g, 0, channel_step);

Array.Copy(pdata, channel_step * 2, temp_b, 0, channel_step);

Mat rmat = new Mat(128, 128, MatType.CV_32FC1, temp_r);

Mat gmat = new Mat(128, 128, MatType.CV_32FC1, temp_g);

Mat bmat = new Mat(128, 128, MatType.CV_32FC1, temp_b);

Mat result=new Mat();

Cv2.Merge(new Mat[] { bmat, gmat, rmat }, result);

float[] box_mask_data;

box_mask.GetArray<float>(out box_mask_data);

int cols=box_mask.Cols;

int rows = box_mask.Rows;

MatType matType = box_mask.Type();

for (int i = 0; i < box_mask_data.Length; i++)

{

if (box_mask_data[i]<0)

{

box_mask_data[i] = 0;

}

if (box_mask_data[i] > 1)

{

box_mask_data[i] = 1;

}

}

box_mask=new Mat(rows, cols, matType, box_mask_data);

Mat dstimg = Common.paste_back(target_img, result, box_mask, affine_matrix);

return dstimg;

}

}

}
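SwapFace.preprocess projects the source embedding through model_matrix: input_embedding[i] = Σ_j e[j]·M[j, i] / ‖e‖, which is what `np.dot(source_embedding, self.model_matrix) / np.linalg.norm(source_embedding)` computes in the Python version. The sketch below checks that the double loop and the matrix product agree, and shows the raw layout ReadFloatDataFromBinaryFile (and the C++ `fread`) expects: 512 × 512 little-endian float32 values, row-major, with no header. The post does not say how model_matrix.bin was produced; extract_emap is a hypothetical helper that assumes the matrix is stored as the last initializer of inswapper_128.onnx (treat that as an assumption and verify it against your model), and it needs the `onnx` package.

```python
import os
import tempfile

import numpy as np

LEN_FEATURE = 512


def write_model_matrix_bin(matrix: np.ndarray, path: str) -> None:
    """Dump a matrix as raw little-endian float32, row-major, with no header."""
    np.ascontiguousarray(matrix, dtype="<f4").tofile(path)


def read_model_matrix_bin(path: str, n: int = LEN_FEATURE) -> np.ndarray:
    """Read an n x n float32 matrix written by write_model_matrix_bin."""
    data = np.fromfile(path, dtype="<f4")
    if data.size != n * n:
        raise ValueError(f"expected {n * n} floats, got {data.size}")
    return data.reshape(n, n)


def project_embedding(embedding, model_matrix: np.ndarray) -> np.ndarray:
    """Loop form used by SwapFace.preprocess: out[i] = sum_j e[j] * M[j, i] / ||e||."""
    e = np.asarray(embedding, dtype=np.float32)
    norm = float(np.sqrt(np.sum(e * e)))
    out = np.empty_like(e)
    for i in range(e.shape[0]):
        out[i] = sum(float(e[j]) * float(model_matrix[j, i]) for j in range(e.shape[0])) / norm
    return out


def extract_emap(onnx_path: str) -> np.ndarray:
    """Hypothetical: assumes the projection matrix is the model's last initializer."""
    import onnx                      # only needed for this helper
    from onnx import numpy_helper
    model = onnx.load(onnx_path)
    return numpy_helper.to_array(model.graph.initializer[-1])


# Round trip with a small random matrix standing in for the real 512x512 one
rng = np.random.default_rng(0)
demo_matrix = rng.standard_normal((8, 8)).astype(np.float32)
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "model_matrix.bin")
    write_model_matrix_bin(demo_matrix, path)
    loaded = read_model_matrix_bin(path, n=8)
demo_embedding = rng.standard_normal(8).astype(np.float32)
vectorised = np.dot(demo_embedding, loaded) / np.linalg.norm(demo_embedding)  # the Python version's formula
```

To turn the Python version's model_matrix.npy into the file the C# and C++ code read, something like `write_model_matrix_bin(np.load('model_matrix.npy'), 'model_matrix.bin')` should do.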

C++ code

Here is the C++ implementation as well, for comparison.

Header file (faceswap.h)

# ifndef FACESWAP

# define FACESWAP

#include <fstream>

#include <sstream>

#include "opencv2/opencv.hpp"

//#include <cuda_provider_factory.h> /// uncomment to enable CUDA acceleration

#include <onnxruntime_cxx_api.h>

#include"utils.h"

class SwapFace

{

public:

SwapFace(std::string modelpath);

cv::Mat process(cv::Mat target_img, const std::vector<float> source_face_embedding, const std::vector<cv::Point2f> target_landmark_5);

~SwapFace(); // destructor, releases memory

private:

void preprocess(cv::Mat target_img, const std::vector<cv::Point2f> face_landmark_5, const std::vector<float> source_face_embedding, cv::Mat& affine_matrix, cv::Mat& box_mask);

std::vector<float> input_image;

std::vector<float> input_embedding;

int input_height;

int input_width;

const int len_feature = 512;

float* model_matrix;

std::vector<cv::Point2f> normed_template;

const float FACE_MASK_BLUR = 0.3;

const int FACE_MASK_PADDING[4] = {0, 0, 0, 0};

const float INSWAPPER_128_MODEL_MEAN[3] = {0.0, 0.0, 0.0};

const float INSWAPPER_128_MODEL_STD[3] = {1.0, 1.0, 1.0};

Ort::Env env = Ort::Env(ORT_LOGGING_LEVEL_ERROR, "Face Swap");

Ort::Session *ort_session = nullptr;

Ort::SessionOptions sessionOptions = Ort::SessionOptions();

std::vector<char*> input_names;

std::vector<char*> output_names;

std::vector<std::vector<int64_t>> input_node_dims; // >=1 outputs

std::vector<std::vector<int64_t>> output_node_dims; // >=1 outputs

Ort::MemoryInfo memory_info_handler = Ort::MemoryInfo::CreateCpu(OrtDeviceAllocator, OrtMemTypeCPU);

};

#endif
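The header above declares FACE_MASK_BLUR = 0.3 and FACE_MASK_PADDING = {0, 0, 0, 0}, which both SwapFace classes pass to create_static_box_mask. That helper lives in the utility files (Common in C#, utils.h / utils.py) and is not shown in this post. Below is a hypothetical NumPy version, assuming FaceFusion-style logic: zero a border strip of width max(blur_area, padding%) on each side, then soften it with a Gaussian blur whose sigma is 0.25 × blur_amount. The separable blur with mirrored borders only approximates `cv2.GaussianBlur`; all names and constants here are assumptions, not the author's helper.

```python
import math

import numpy as np


def gaussian_kernel_1d(sigma: float) -> np.ndarray:
    """Normalised 1-D Gaussian kernel truncated at 3 sigma."""
    radius = max(1, int(math.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    kernel = np.exp(-(x * x) / (2.0 * sigma * sigma))
    return kernel / kernel.sum()


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur with mirrored borders (an approximation of cv2.GaussianBlur)."""
    kernel = gaussian_kernel_1d(sigma)
    radius = len(kernel) // 2
    padded = np.pad(img, radius, mode="reflect")
    # Blur each row, then each column; 'valid' trims the padding back off
    rows = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)


def create_static_box_mask(crop_size, face_mask_blur, face_mask_padding):
    """Hypothetical box mask: 1 inside, 0 on a border strip, edges softened by a blur."""
    width, height = crop_size
    blur_amount = int(width * 0.5 * face_mask_blur)
    blur_area = max(blur_amount // 2, 1)
    top, right, bottom, left = face_mask_padding          # padding in percent of the crop size
    mask = np.ones((height, width), dtype=np.float64)
    mask[:max(blur_area, int(height * top / 100)), :] = 0
    mask[-max(blur_area, int(height * bottom / 100)):, :] = 0
    mask[:, :max(blur_area, int(width * left / 100))] = 0
    mask[:, -max(blur_area, int(width * right / 100)):] = 0
    if blur_amount > 0:
        mask = gaussian_blur(mask, blur_amount * 0.25)
    return mask.astype(np.float32)


box_mask = create_static_box_mask((128, 128), 0.3, (0, 0, 0, 0))
print(box_mask.shape, float(box_mask.min()), float(box_mask.max()))
```

Because the blurred mask is a weighted average of 0s and 1s it already stays within [0, 1]; in this reading, the clamping of box_mask in both `process` methods is a safety net rather than a necessary step.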

Source file

#include"faceswap.h"

using namespace cv;

using namespace std;

using namespace Ort;

SwapFace::SwapFace(string model_path)

{

/// OrtStatus* status = OrtSessionOptionsAppendExecutionProvider_CUDA(sessionOptions, 0); /// uncomment to enable CUDA acceleration

sessionOptions.SetGraphOptimizationLevel(ORT_ENABLE_BASIC);

/// std::wstring widestr = std::wstring(model_path.begin(), model_path.end()); /// Windows version

/// ort_session = new Session(env, widestr.c_str(), sessionOptions); /// Windows version

ort_session = new Session(env, model_path.c_str(), sessionOptions); /// Linux version

size_t numInputNodes = ort_session->GetInputCount();

size_t numOutputNodes = ort_session->GetOutputCount();

AllocatorWithDefaultOptions allocator;

for (size_t i = 0; i < numInputNodes; i++)

{

/// older onnxruntime: input_names.push_back(ort_session->GetInputName(i, allocator));

/// newer onnxruntime: GetInputNameAllocated returns a smart pointer that frees the name when it goes out of scope,

/// so release ownership to keep the char* valid for Run() (the few bytes live as long as the session)

AllocatedStringPtr input_name_Ptr = ort_session->GetInputNameAllocated(i, allocator);

input_names.push_back(input_name_Ptr.release());

Ort::TypeInfo input_type_info = ort_session->GetInputTypeInfo(i);

auto input_tensor_info = input_type_info.GetTensorTypeAndShapeInfo();

auto input_dims = input_tensor_info.GetShape();

input_node_dims.push_back(input_dims);

}

for (size_t i = 0; i < numOutputNodes; i++)

{

/// older onnxruntime: output_names.push_back(ort_session->GetOutputName(i, allocator));

AllocatedStringPtr output_name_Ptr = ort_session->GetOutputNameAllocated(i, allocator); /// newer onnxruntime

output_names.push_back(output_name_Ptr.release());

Ort::TypeInfo output_type_info = ort_session->GetOutputTypeInfo(i);

auto output_tensor_info = output_type_info.GetTensorTypeAndShapeInfo();

auto output_dims = output_tensor_info.GetShape();

output_node_dims.push_back(output_dims);

}

this->input_height = input_node_dims[0][2];

this->input_width = input_node_dims[0][3];

const int length = this->len_feature*this->len_feature;

this->model_matrix = new float[length];

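cout<<"start read model_matrix.bin"<<endl;

FILE* fp = fopen("model_matrix.bin", "rb");

if (fp == nullptr) CV_Error(cv::Error::StsError, "cannot open model_matrix.bin");

size_t num_read = fread(this->model_matrix, sizeof(float), length, fp); // load the 512 x 512 matrix

fclose(fp); // close the file

if (num_read != static_cast<size_t>(length)) CV_Error(cv::Error::StsError, "model_matrix.bin is truncated");

cout<<"read model_matrix.bin finish"<<endl;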

/// normed_template is defined directly here, rather than computed as in the Python program (normed_template = TEMPLATES.get(template) * crop_size)

this->normed_template.emplace_back(Point2f(46.29459968, 51.69629952));

this->normed_template.emplace_back(Point2f(81.53180032, 51.50140032));

this->normed_template.emplace_back(Point2f(64.02519936, 71.73660032));

this->normed_template.emplace_back(Point2f(49.54930048, 92.36550016));

this->normed_template.emplace_back(Point2f(78.72989952, 92.20409984));

}

SwapFace::~SwapFace()

{

delete[] this->model_matrix;

this->model_matrix = nullptr;

delete this->ort_session; // allocated with new in the constructor

this->ort_session = nullptr;

this->normed_template.clear();

}

void SwapFace::preprocess(Mat srcimg, const vector<Point2f> face_landmark_5, const vector<float> source_face_embedding, Mat& affine_matrix, Mat& box_mask)

{

Mat crop_img;

affine_matrix = warp_face_by_face_landmark_5(srcimg, crop_img, face_landmark_5, this->normed_template, Size(128, 128));

const int crop_size[2] = {crop_img.cols, crop_img.rows};

box_mask = create_static_box_mask(crop_size, this->FACE_MASK_BLUR, this->FACE_MASK_PADDING);

vector<cv::Mat> bgrChannels(3);

split(crop_img, bgrChannels);

for (int c = 0; c < 3; c++)

{

bgrChannels[c].convertTo(bgrChannels[c], CV_32FC1, 1 / (255.0*this->INSWAPPER_128_MODEL_STD[c]), -this->INSWAPPER_128_MODEL_MEAN[c]/this->INSWAPPER_128_MODEL_STD[c]);

}

const int image_area = this->input_height * this->input_width;

this->input_image.resize(3 * image_area);

size_t single_chn_size = image_area * sizeof(float);

memcpy(this->input_image.data(), (float *)bgrChannels[2].data, single_chn_size); /// RGB order: R (channel 2 of BGR) goes first

memcpy(this->input_image.data() + image_area, (float *)bgrChannels[1].data, single_chn_size);

memcpy(this->input_image.data() + image_area * 2, (float *)bgrChannels[0].data, single_chn_size);

float linalg_norm = 0;

for(int i=0;i<this->len_feature;i++)

{

linalg_norm += powf(source_face_embedding[i], 2);

}

linalg_norm = sqrt(linalg_norm);

this->input_embedding.resize(this->len_feature);

for(int i=0;i<this->len_feature;i++)

{

float sum=0;

for(int j=0;j<this->len_feature;j++)

{

sum += (source_face_embedding[j]*this->model_matrix[j*this->len_feature+i]);

}

this->input_embedding[i] = sum/linalg_norm;

}

}

Mat SwapFace::process(Mat target_img, const vector<float> source_face_embedding, const vector<Point2f> target_landmark_5)

{

Mat affine_matrix;

Mat box_mask;

this->preprocess(target_img, target_landmark_5, source_face_embedding, affine_matrix, box_mask);

std::vector<Ort::Value> inputs_tensor;

std::vector<int64_t> input_img_shape = {1, 3, this->input_height, this->input_width};

inputs_tensor.emplace_back(Value::CreateTensor<float>(memory_info_handler, this->input_image.data(), this->input_image.size(), input_img_shape.data(), input_img_shape.size()));

std::vector<int64_t> input_embedding_shape = {1, this->len_feature};

inputs_tensor.emplace_back(Value::CreateTensor<float>(memory_info_handler, this->input_embedding.data(), this->input_embedding.size(), input_embedding_shape.data(), input_embedding_shape.size()));

Ort::RunOptions runOptions;

vector<Value> ort_outputs = this->ort_session->Run(runOptions, this->input_names.data(), inputs_tensor.data(), inputs_tensor.size(), this->output_names.data(), output_names.size());

float* pdata = ort_outputs[0].GetTensorMutableData<float>();

std::vector<int64_t> outs_shape = ort_outputs[0].GetTensorTypeAndShapeInfo().GetShape();

const int out_h = outs_shape[2];

const int out_w = outs_shape[3];

const int channel_step = out_h * out_w;

Mat rmat(out_h, out_w, CV_32FC1, pdata);

Mat gmat(out_h, out_w, CV_32FC1, pdata + channel_step);

Mat bmat(out_h, out_w, CV_32FC1, pdata + 2 * channel_step);

rmat *= 255.f;

gmat *= 255.f;

bmat *= 255.f;

rmat.setTo(0, rmat < 0);

rmat.setTo(255, rmat > 255);

gmat.setTo(0, gmat < 0);

gmat.setTo(255, gmat > 255);

bmat.setTo(0, bmat < 0);

bmat.setTo(255, bmat > 255);

vector<Mat> channel_mats(3);

channel_mats[0] = bmat;

channel_mats[1] = gmat;

channel_mats[2] = rmat;

Mat result;

merge(channel_mats, result);

box_mask.setTo(0, box_mask < 0);

box_mask.setTo(1, box_mask > 1);

Mat dstimg = paste_back(target_img, result, box_mask, affine_matrix);

return dstimg;

}
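The C++ and C# versions scale the `output` tensor by 255 and clamp it to [0, 255] without rounding; the Python version below rounds but does not clamp at this point. A small NumPy sketch that does both and then flips RGB back to OpenCV's BGR order; output_to_bgr is a hypothetical name.

```python
import numpy as np


def output_to_bgr(output: np.ndarray) -> np.ndarray:
    """Turn the [1, 3, H, W] RGB 'output' tensor (roughly 0..1) into an H x W x 3 BGR uint8 image."""
    hwc = np.transpose(output[0], (1, 2, 0)) * 255.0   # drop batch axis, CHW -> HWC, rescale
    hwc = np.clip(np.round(hwc), 0, 255)                # round (Python version) and clamp (C#/C++ versions)
    return hwc[:, :, ::-1].astype(np.uint8)             # RGB -> BGR for OpenCV


demo = np.zeros((1, 3, 2, 2), dtype=np.float32)
demo[0, 0] = 1.2    # R overshoots 1.0 and is clamped to 255
demo[0, 1] = 0.4    # G becomes 102
demo[0, 2] = -0.1   # B undershoots 0 and is clamped to 0
img = output_to_bgr(demo)
print(img[0, 0])
```

Clamping before the cast matters: without it, values outside [0, 255] would wrap around when converted to uint8.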

Python code

import numpy as np
import onnxruntime
from utils import warp_face_by_face_landmark_5, create_static_box_mask, paste_back

FACE_MASK_BLUR = 0.3
FACE_MASK_PADDING = (0, 0, 0, 0)
INSWAPPER_128_MODEL_MEAN = [0.0, 0.0, 0.0]
INSWAPPER_128_MODEL_STD = [1.0, 1.0, 1.0]


class swap_face:
    def __init__(self, modelpath):
        # Initialize model
        session_option = onnxruntime.SessionOptions()
        session_option.log_severity_level = 3
        # self.session = onnxruntime.InferenceSession(modelpath, providers=['CUDAExecutionProvider', 'CPUExecutionProvider'])
        self.session = onnxruntime.InferenceSession(modelpath, sess_options=session_option)  ### opencv-dnn fails to load this onnx model
        model_inputs = self.session.get_inputs()
        self.input_names = [model_inputs[i].name for i in range(len(model_inputs))]
        self.input_shape = model_inputs[0].shape
        self.input_height = int(self.input_shape[2])
        self.input_width = int(self.input_shape[3])
        self.model_matrix = np.load('model_matrix.npy')

    def process(self, target_img, source_face_embedding, target_landmark_5):
        ### preprocess
        crop_img, affine_matrix = warp_face_by_face_landmark_5(target_img, target_landmark_5, 'arcface_128_v2', (128, 128))
        crop_mask_list = []
        box_mask = create_static_box_mask((crop_img.shape[1], crop_img.shape[0]), FACE_MASK_BLUR, FACE_MASK_PADDING)
        crop_mask_list.append(box_mask)

        crop_img = crop_img[:, :, ::-1].astype(np.float32) / 255.0
        crop_img = (crop_img - INSWAPPER_128_MODEL_MEAN) / INSWAPPER_128_MODEL_STD
        crop_img = np.expand_dims(crop_img.transpose(2, 0, 1), axis=0).astype(np.float32)

        source_embedding = source_face_embedding.reshape((1, -1))
        # cast so onnxruntime always receives float32, even if the embedding arrives as float64
        source_embedding = (np.dot(source_embedding, self.model_matrix) / np.linalg.norm(source_embedding)).astype(np.float32)

        ### Perform inference on the image
        result = self.session.run(None, {'target': crop_img, 'source': source_embedding})[0][0]

        ### normalize_crop_frame
        result = result.transpose(1, 2, 0)
        result = (result * 255.0).round()
        result = result[:, :, ::-1]

        # Here print(np.array_equal(np.minimum.reduce(crop_mask_list), crop_mask_list[0])) prints True: the list holds
        # only box_mask, so the reduction is redundant in this script; it only matters when several masks are combined
        crop_mask = np.minimum.reduce(crop_mask_list).clip(0, 1)
        dstimg = paste_back(target_img, result, crop_mask, affine_matrix)
        return dstimg
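paste_back (from utils, not shown) puts the swapped crop back into the full target image. A rough sketch of what such a helper typically does, assuming it inverts affine_matrix, warps both the crop and the mask back into target coordinates, and alpha-blends: output = mask·face + (1 − mask)·target. OpenCV's `cv2.invertAffineTransform` and `cv2.warpAffine` (with bilinear interpolation) would normally do the warping; warp_nearest below is a hypothetical nearest-neighbour stand-in so the block needs only NumPy, and paste_back_sketch is likewise not the author's function.

```python
import numpy as np


def invert_affine(m: np.ndarray) -> np.ndarray:
    """Invert a 2x3 affine matrix (what cv2.invertAffineTransform computes)."""
    a_inv = np.linalg.inv(m[:, :2])
    return np.hstack([a_inv, (-a_inv @ m[:, 2])[:, None]])


def warp_nearest(src: np.ndarray, m: np.ndarray, out_w: int, out_h: int) -> np.ndarray:
    """Nearest-neighbour stand-in for cv2.warpAffine(src, m, (out_w, out_h)); outside pixels become 0."""
    inv = invert_affine(m)                               # destination pixel -> source pixel
    ys, xs = np.mgrid[0:out_h, 0:out_w]
    sx = np.rint(inv[0, 0] * xs + inv[0, 1] * ys + inv[0, 2]).astype(int)
    sy = np.rint(inv[1, 0] * xs + inv[1, 1] * ys + inv[1, 2]).astype(int)
    valid = (sx >= 0) & (sx < src.shape[1]) & (sy >= 0) & (sy < src.shape[0])
    out = np.zeros((out_h, out_w) + src.shape[2:], dtype=src.dtype)
    out[valid] = src[sy[valid], sx[valid]]
    return out


def paste_back_sketch(target, crop, crop_mask, affine_matrix):
    """Warp the swapped crop and its mask back into the target frame and alpha-blend them."""
    h, w = target.shape[:2]
    inverse = invert_affine(affine_matrix)               # crop -> target coordinates
    face = warp_nearest(crop.astype(np.float32), inverse, w, h)
    mask = np.clip(warp_nearest(crop_mask.astype(np.float32), inverse, w, h), 0, 1)[..., None]
    blended = mask * face + (1.0 - mask) * target.astype(np.float32)
    return np.clip(np.round(blended), 0, 255).astype(np.uint8)


# Toy example: a 4x4 "swapped" crop that belongs at (3, 3) in a 10x10 target
target = np.full((10, 10, 3), 50, dtype=np.uint8)
crop = np.full((4, 4, 3), 200, dtype=np.uint8)
crop_mask = np.ones((4, 4), dtype=np.float32)
affine_matrix = np.array([[1.0, 0.0, -3.0], [0.0, 1.0, -3.0]])   # target -> crop, as returned by the warp step
result = paste_back_sketch(target, crop, crop_mask, affine_matrix)
```

With the soft box mask the transition between the pasted face and the original image is gradual, which hides the crop's border; nearest-neighbour sampling is only for brevity and would look blockier than OpenCV's bilinear warp.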

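Miscellaneous

The demo program for the "C# Facefusion: Let Your Face Blend into the World!" series has been shared in QQ group 758616458; you can download it from the group files.

Model download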

https://docs.facefusion.io/introduction/license#models
