Update (additional information):

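The tests below were run on a PC and on an Android O phone. Before 9.0, Android did not really implement the PTHREAD_EXPLICIT_SCHED setting (Bionic only added pthread_attr_setinheritsched() in API level 28, i.e. Android 9.0/P). As a result, my conclusions about whether thread priorities take effect are not reliable. What I do know is that on versions before Android P, setting thread priorities in native code does not have the expected effect. I don't currently have a rooted Android P device, so I can't verify whether Android P fully supports setting thread priorities.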

Results first:

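When concurrency is low, the CPU is not the bottleneck, and each task thread takes less than ten seconds, setting thread priorities brings very little benefit. It is small enough to ignore.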

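Recently a colleague criticized my decision to leave background threads at the default priority. My earlier experiments didn't leave enough data to back up my position, so this time I'm keeping a more detailed record. The scenario: there is one resident thread plus several (fewer than ten) task threads that are started concurrently on demand. Each task thread currently finishes in 140~200 ms. My colleague believed that switching to SCHED_RR or SCHED_FIFO could cut the completion time by 50 ms! That struck me as wildly optimistic. However, I couldn't find anything online about how much these priorities actually help, so I had no choice but to write code to check. The code is as follows: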

```c
#include <errno.h>
#include <pthread.h>
#include <sched.h>
#include <semaphore.h>
#include <stdio.h>
#include <stdlib.h>
#include <time.h>
#include <unistd.h>

/* Milliseconds from a monotonic clock, unaffected by wall-clock adjustments. */
unsigned long long cfp_get_uptime(void)
{
    struct timespec cur;

    clock_gettime(CLOCK_MONOTONIC, &cur);
    return (unsigned long long)cur.tv_sec * 1000ULL +
           (unsigned long long)cur.tv_nsec / 1000000ULL;
}

#define handle_error_en(en, msg) \
    do { errno = en; perror(msg); exit(EXIT_FAILURE); } while (0)

#define handle_error(msg) \
    do { perror(msg); exit(EXIT_FAILURE); } while (0)

#define LOOP_NUM 100000000
#define SLEEP_FREQ 1000 // usleep(1) once every SLEEP_FREQ iterations
#define MAX_THREAD_NUM 30

sem_t start_sem;

typedef struct {
    pthread_t tid;
    size_t id;
    char name[32];
} ThreadInfo;

/* Make the policy/priority stored in attr take effect. glibc defaults to
 * PTHREAD_INHERIT_SCHED and then ignores them; Bionic only provides
 * pthread_attr_setinheritsched() from API level 28 (Android P). */
static void set_explicit_sched(pthread_attr_t *attr)
{
#if !defined(__ANDROID__) || __ANDROID_API__ >= 28
    int s = pthread_attr_setinheritsched(attr, PTHREAD_EXPLICIT_SCHED);
    if (s != 0)
        handle_error_en(s, "pthread_attr_setinheritsched");
#else
    (void)attr;
#endif
}

/* Initialize attr for one thread of the given policy. NOTE: the policy constant
 * itself is used as the priority; on Linux SCHED_OTHER == 0, SCHED_FIFO == 1 and
 * SCHED_RR == 2, so FIFO threads get real-time priority 1 and RR threads 2. */
static void init_attr(pthread_attr_t *attr, int policy)
{
    struct sched_param param;
    int s;

    param.sched_priority = policy;
    s = pthread_attr_init(attr);
    if (s != 0)
        handle_error_en(s, "pthread_attr_init");
    set_explicit_sched(attr);
    s = pthread_attr_setschedpolicy(attr, policy);
    if (s != 0)
        handle_error_en(s, "pthread_attr_setschedpolicy");
    s = pthread_attr_setschedparam(attr, &param);
    if (s != 0)
        handle_error_en(s, "pthread_attr_setschedparam");
}

void *loop_thread(void *arg)
{
    ThreadInfo *myInfo = (ThreadInfo *)arg;
    unsigned long long t0;

    pthread_detach(pthread_self());
    t0 = cfp_get_uptime();
    usleep(0);
    for (int i = 0; i < LOOP_NUM; ++i) {
        if ((i % SLEEP_FREQ) == 0)
            usleep(1);
    }
    printf("[%s] [%llu ms] done\n", myInfo->name, cfp_get_uptime() - t0);
    return NULL;
}

/* Prepare MAX_THREAD_NUM threads of one policy, wait for start_sem, start them. */
static void *thread_factory(int policy, const char *prefix)
{
    ThreadInfo tInfo[MAX_THREAD_NUM];
    pthread_attr_t attrs[MAX_THREAD_NUM];
    int s = 0;
    int i = 0;

    for (i = 0; i < MAX_THREAD_NUM; ++i) {
        init_attr(&attrs[i], policy);
        tInfo[i].id = (size_t)i;
        snprintf(tInfo[i].name, sizeof(tInfo[i].name), "%sThread[%d]", prefix, i);
    }
    printf("%sThreadFactory Data prepared!\n", prefix);

    sem_wait(&start_sem);

    for (i = 0; i < MAX_THREAD_NUM; ++i) {
        s = pthread_create(&tInfo[i].tid, &attrs[i], &loop_thread, &tInfo[i]);
        if (s != 0)
            handle_error_en(s, "pthread_create");
        usleep(0);
    }
    for (i = 0; i < MAX_THREAD_NUM; ++i) {
        s = pthread_attr_destroy(&attrs[i]);
        if (s != 0)
            handle_error_en(s, "pthread_attr_destroy");
    }
    /* The workers point into tInfo[] on this stack, so keep the frame alive. */
    for (i = 0; i < 1000; ++i)
        sleep(1000);
    return NULL;
}

void *OTHERThreadFactory(void *p) { (void)p; return thread_factory(SCHED_OTHER, "OTHER"); }
void *RRThreadFactory(void *p)    { (void)p; return thread_factory(SCHED_RR, "RR"); }
void *FIFOThreadFactory(void *p)  { (void)p; return thread_factory(SCHED_FIFO, "FIFO"); }

/* Not installed by either main(). First signal releases start_sem, later ones exit. */
void sigHandler(int signo)
{
    static int count = 0;

    (void)signo;
    if (!count++)
        sem_post(&start_sem);
    else
        _exit(0);
}

int main(void)
{
    ThreadInfo tOtherInfo[MAX_THREAD_NUM];
    ThreadInfo tFIFOInfo[MAX_THREAD_NUM];
    ThreadInfo tRRInfo[MAX_THREAD_NUM];
    pthread_attr_t otherAttrs[MAX_THREAD_NUM];
    pthread_attr_t fifoAttrs[MAX_THREAD_NUM];
    pthread_attr_t rrAttrs[MAX_THREAD_NUM];
    int s = 0;
    int i = 0;

    // prepare attributes and names for 30 threads of each policy
    for (i = 0; i < MAX_THREAD_NUM; ++i) {
        init_attr(&otherAttrs[i], SCHED_OTHER);
        init_attr(&fifoAttrs[i], SCHED_FIFO);
        init_attr(&rrAttrs[i], SCHED_RR);
        tOtherInfo[i].id = tFIFOInfo[i].id = tRRInfo[i].id = (size_t)i;
        snprintf(tOtherInfo[i].name, sizeof(tOtherInfo[i].name), "OTHERThread[%d]", i);
        snprintf(tFIFOInfo[i].name, sizeof(tFIFOInfo[i].name), "FIFOThread[%d]", i);
        snprintf(tRRInfo[i].name, sizeof(tRRInfo[i].name), "RRThread[%d]", i);
    }
    printf("Threads' Data prepared!\n");

    for (i = 0; i < MAX_THREAD_NUM; ++i) {
        // s = pthread_create(&tOtherInfo[i].tid, &otherAttrs[i], &loop_thread, &tOtherInfo[i]);
        // if (s != 0)
        //     handle_error_en(s, "pthread_create tOtherInfo");
        // s = pthread_create(&tFIFOInfo[i].tid, &fifoAttrs[i], &loop_thread, &tFIFOInfo[i]);
        // if (s != 0)
        //     handle_error_en(s, "pthread_create tFIFOInfo");
        s = pthread_create(&tRRInfo[i].tid, &rrAttrs[i], &loop_thread, &tRRInfo[i]);
        if (s != 0)
            handle_error_en(s, "pthread_create tRRInfo");
        usleep(0);
    }

    for (i = 0; i < MAX_THREAD_NUM; ++i) {
        s = pthread_attr_destroy(&otherAttrs[i]);
        if (s != 0)
            handle_error_en(s, "pthread_attr_destroy otherAttrs");
        s = pthread_attr_destroy(&fifoAttrs[i]);
        if (s != 0)
            handle_error_en(s, "pthread_attr_destroy fifoAttrs");
        s = pthread_attr_destroy(&rrAttrs[i]);
        if (s != 0)
            handle_error_en(s, "pthread_attr_destroy rrAttrs");
    }

    /* The workers point into the ThreadInfo arrays on this stack; never return. */
    while (1)
        sleep(1000);
    return 0;
}

#if 0
int main(void)
{
    sem_init(&start_sem, 0, 0);
    pthread_t tid[3];
    pthread_create(&tid[0], NULL, &OTHERThreadFactory, NULL);
    pthread_create(&tid[1], NULL, &RRThreadFactory, NULL);
    pthread_create(&tid[2], NULL, &FIFOThreadFactory, NULL);
    getchar();
    int sVal = 0;
    sem_getvalue(&start_sem, &sVal);
    printf("Before sem_post, sVal = %d\n", sVal);
    // release the three factories one after another
    sem_post(&start_sem);
    usleep(1);
    sem_getvalue(&start_sem, &sVal);
    printf("After sem_post, sVal = %d\n", sVal);
    sem_post(&start_sem);
    usleep(1);
    sem_getvalue(&start_sem, &sVal);
    printf("After sem_post, sVal = %d\n", sVal);
    sem_post(&start_sem);
    usleep(1);
    sem_getvalue(&start_sem, &sVal);
    printf("After sem_post, sVal = %d\n", sVal);
    pthread_join(tid[0], NULL);
    pthread_join(tid[1], NULL);
    pthread_join(tid[2], NULL);
    while (1)
        sleep(100);
    return 0;
}
#endif
```

I ran three kinds of tests:

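The first test uses the commented-out main function at the end. It first creates three Factory threads with the default priority. It then posts the semaphore start_sem so that the three Factory threads start creating SCHED_OTHER, SCHED_RR and SCHED_FIFO threads respectively, and waits for them to finish. Results: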

```
[RRThread[3]][3] done
[RRThread[1]][1] done
[RRThread[29]][29] done
[RRThread[18]][18] done
[RRThread[6]][6] done
[RRThread[10]][10] done
[RRThread[4]][4] done
[RRThread[0]][0] done
[RRThread[23]][23] done
[RRThread[16]][16] done
[RRThread[8]][8] done
[RRThread[2]][2] done
[RRThread[15]][15] done
[RRThread[22]][22] done
[RRThread[14]][14] done
[RRThread[24]][24] done
[RRThread[27]][27] done
[RRThread[13]][13] done
[RRThread[26]][26] done
[RRThread[19]][19] done
[RRThread[28]][28] done
[RRThread[9]][9] done
[RRThread[11]][11] done
[RRThread[7]][7] done
[RRThread[12]][12] done
[RRThread[25]][25] done
[RRThread[17]][17] done
[RRThread[20]][20] done
[RRThread[5]][5] done
[RRThread[21]][21] done
[FIFOThread[28]][28] done
[FIFOThread[22]][22] done
[FIFOThread[21]][21] done
[FIFOThread[23]][23] done
[FIFOThread[5]][5] done
[FIFOThread[25]][25] done
[FIFOThread[18]][18] done
[FIFOThread[1]][1] done
[FIFOThread[2]][2] done
[FIFOThread[11]][11] done
[FIFOThread[12]][12] done
[FIFOThread[6]][6] done
[FIFOThread[14]][14] done
[FIFOThread[24]][24] done
[FIFOThread[19]][19] done
[FIFOThread[0]][0] done
[FIFOThread[7]][7] done
[FIFOThread[4]][4] done
[FIFOThread[10]][10] done
[FIFOThread[8]][8] done
[FIFOThread[13]][13] done
[FIFOThread[3]][3] done
[FIFOThread[27]][27] done
[FIFOThread[29]][29] done
[FIFOThread[15]][15] done
[FIFOThread[26]][26] done
[FIFOThread[17]][17] done
[FIFOThread[20]][20] done
[FIFOThread[16]][16] done
[FIFOThread[9]][9] done
[OTHERThread[25]][25] done
[OTHERThread[18]][18] done
[OTHERThread[9]][9] done
[OTHERThread[26]][26] done
[OTHERThread[23]][23] done
[OTHERThread[16]][16] done
[OTHERThread[14]][14] done
[OTHERThread[10]][10] done
[OTHERThread[29]][29] done
[OTHERThread[0]][0] done
[OTHERThread[19]][19] done
[OTHERThread[4]][4] done
[OTHERThread[20]][20] done
[OTHERThread[5]][5] done
[OTHERThread[24]][24] done
[OTHERThread[17]][17] done
[OTHERThread[28]][28] done
[OTHERThread[1]][1] done
[OTHERThread[12]][12] done
[OTHERThread[13]][13] done
[OTHERThread[2]][2] done
[OTHERThread[15]][15] done
[OTHERThread[11]][11] done
[OTHERThread[7]][7] done
[OTHERThread[27]][27] done
[OTHERThread[21]][21] done
[OTHERThread[3]][3] done
[OTHERThread[6]][6] done
[OTHERThread[22]][22] done
[OTHERThread[8]][8] done
```

Note: this version did not yet record each thread's elapsed time...

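As you can see, the priorities do take effect: RR > FIFO > OTHER. The code writes the policy constant into sched_priority, and on Linux SCHED_FIFO is 1 and SCHED_RR is 2. The RR threads therefore actually ran at real-time priority 2 and the FIFO threads at priority 1, so the RR-before-FIFO order most likely reflects that priority difference rather than the policies themselves. The test machine was quite slow; I waited more than ten minutes for the results. There is still a problem, though. The sem_post calls happen one after another, and pthread_create takes time too, so perhaps the RR factory simply got to run first?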

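That led to the second version of the test: the current main function with its commented-out pthread_create calls enabled. Threads are now created in OTHER, FIFO, RR order, one of each per iteration, and each thread does its own timing. That rules out the concern about the previous version. The results?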

```
[RRThread[0]] [660885 ms] done
[RRThread[4]] [661478 ms] done
[RRThread[15]] [661418 ms] done
[RRThread[6]] [661583 ms] done
[RRThread[22]] [661448 ms] done
[RRThread[5]] [661686 ms] done
[RRThread[3]] [661711 ms] done
[RRThread[1]] [661756 ms] done
[RRThread[28]] [661391 ms] done
[RRThread[10]] [661776 ms] done
[RRThread[11]] [661790 ms] done
[RRThread[8]] [661816 ms] done
[RRThread[9]] [661860 ms] done
[RRThread[29]] [661564 ms] done
[RRThread[16]] [661843 ms] done
[RRThread[17]] [661843 ms] done
[RRThread[18]] [661870 ms] done
[RRThread[23]] [661863 ms] done
[RRThread[20]] [661962 ms] done
[RRThread[2]] [662197 ms] done
[RRThread[27]] [661900 ms] done
[RRThread[26]] [661945 ms] done
[RRThread[25]] [661986 ms] done
[RRThread[13]] [662229 ms] done
[RRThread[21]] [662143 ms] done
[RRThread[7]] [662376 ms] done
[RRThread[14]] [662348 ms] done
[RRThread[19]] [662425 ms] done
[RRThread[24]] [662402 ms] done
[RRThread[12]] [662606 ms] done
[FIFOThread[12]] [701678 ms] done
[FIFOThread[0]] [701965 ms] done
[FIFOThread[8]] [702015 ms] done
[FIFOThread[1]] [702100 ms] done
[FIFOThread[3]] [702108 ms] done
[FIFOThread[18]] [701981 ms] done
[FIFOThread[24]] [701894 ms] done
[FIFOThread[19]] [702065 ms] done
[FIFOThread[5]] [702247 ms] done
[FIFOThread[20]] [702136 ms] done
[FIFOThread[9]] [702316 ms] done
[FIFOThread[2]] [702376 ms] done
[FIFOThread[21]] [702159 ms] done
[FIFOThread[27]] [702050 ms] done
[FIFOThread[22]] [702158 ms] done
[FIFOThread[29]] [701997 ms] done
[FIFOThread[23]] [702241 ms] done
[FIFOThread[28]] [702124 ms] done
[FIFOThread[11]] [702443 ms] done
[FIFOThread[14]] [702409 ms] done
[FIFOThread[6]] [702504 ms] done
[FIFOThread[26]] [702240 ms] done
[FIFOThread[13]] [702464 ms] done
[FIFOThread[15]] [702434 ms] done
[FIFOThread[16]] [702437 ms] done
[FIFOThread[4]] [702564 ms] done
[FIFOThread[17]] [702428 ms] done
[FIFOThread[10]] [702544 ms] done
[FIFOThread[7]] [702623 ms] done
[FIFOThread[25]] [702408 ms] done
[OTHERThread[2]] [779770 ms] done
[OTHERThread[10]] [780088 ms] done
[OTHERThread[23]] [781879 ms] done
[OTHERThread[6]] [782559 ms] done
[OTHERThread[3]] [782734 ms] done
[OTHERThread[8]] [783248 ms] done
[OTHERThread[19]] [783905 ms] done
[OTHERThread[29]] [784210 ms] done
[OTHERThread[1]] [784734 ms] done
[OTHERThread[5]] [785046 ms] done
[OTHERThread[17]] [785276 ms] done
[OTHERThread[16]] [785409 ms] done
[OTHERThread[12]] [785502 ms] done
[OTHERThread[4]] [786089 ms] done
[OTHERThread[24]] [786325 ms] done
[OTHERThread[11]] [787313 ms] done
[OTHERThread[14]] [787917 ms] done
[OTHERThread[20]] [788784 ms] done
[OTHERThread[25]] [789240 ms] done
[OTHERThread[21]] [789440 ms] done
[OTHERThread[22]] [789454 ms] done
[OTHERThread[13]] [790964 ms] done
[OTHERThread[9]] [792509 ms] done
[OTHERThread[28]] [792540 ms] done
[OTHERThread[18]] [793866 ms] done
[OTHERThread[26]] [794501 ms] done
[OTHERThread[15]] [796167 ms] done
[OTHERThread[27]] [796447 ms] done
[OTHERThread[0]] [797998 ms] done
[OTHERThread[7]] [800962 ms] done
```

Note: the code that produced this log used a LOOP_NUM with one more zero than the current code, i.e. one billion iterations.

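Same result. The elapsed times keep climbing, which is unavoidable when many threads compete for the CPU. Speaking of competition, here is what the main attributes in pthread_attr_t mean: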

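__detachstate: whether the new thread is detached from the other threads in the process. If it is set to PTHREAD_CREATE_DETACHED, no thread can wait for the new thread with pthread_join(), and the thread releases its own resources when it exits. The default is PTHREAD_CREATE_JOINABLE. You can also set this attribute with pthread_detach() after the thread has been created and is running. Once a thread is in the PTHREAD_CREATE_DETACHED state (whether set at creation or at run time), it cannot be returned to PTHREAD_CREATE_JOINABLE.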

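__schedpolicy: the new thread's scheduling policy. The main options are SCHED_OTHER (normal, non-real-time), SCHED_RR (real-time, round-robin) and SCHED_FIFO (real-time, first-in first-out). The default is SCHED_OTHER. The two real-time policies require privileges: root, or on Linux the CAP_SYS_NICE capability or a nonzero RLIMIT_RTPRIO limit. The policy can be changed at run time with pthread_setschedparam().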

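__schedparam: a struct sched_param, which currently contains only one integer field, sched_priority, holding the thread's priority. This parameter only matters for the real-time policies (SCHED_RR or SCHED_FIFO). It can be changed at run time with pthread_setschedparam() and defaults to 0. On Linux, the valid range for the real-time policies is 1 to 99 (query it with sched_get_priority_min()/sched_get_priority_max()); for SCHED_OTHER, it must be 0.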

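__inheritsched: one of two values, PTHREAD_EXPLICIT_SCHED or PTHREAD_INHERIT_SCHED. The former means the new thread uses the explicitly specified scheduling policy and parameters (the values in attr). The latter means it inherits them from the creating thread. In current glibc the default is PTHREAD_INHERIT_SCHED, so the policy and priority stored in attr are ignored unless PTHREAD_EXPLICIT_SCHED is set explicitly.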

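__scope: the scope within which threads compete for the CPU, i.e. the range over which thread priority applies. POSIX defines two values. PTHREAD_SCOPE_SYSTEM means the thread competes for CPU time with all threads in the system. PTHREAD_SCOPE_PROCESS means it competes only with threads in the same process. LinuxThreads implemented only PTHREAD_SCOPE_SYSTEM, and the same is true of today's glibc (NPTL).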

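The last attribute, __scope, shows that on Linux a thread competes for CPU time with every thread in the system. Still, that result doesn't prove that priority gives our threads no boost. So I ran a third test. Using the current code, I built two executables, one that creates only OTHER threads and one that creates only RR threads, and ran each of them separately. Results: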

```
[OTHERThread[15]] [20865 ms] done
[OTHERThread[24]] [20959 ms] done
[OTHERThread[22]] [20989 ms] done
[OTHERThread[3]] [21010 ms] done
[OTHERThread[19]] [21039 ms] done
[OTHERThread[0]] [21067 ms] done
[OTHERThread[9]] [21078 ms] done
[OTHERThread[6]] [21119 ms] done
[OTHERThread[26]] [21214 ms] done
[OTHERThread[8]] [21628 ms] done
[OTHERThread[28]] [21857 ms] done
[OTHERThread[18]] [21979 ms] done
[OTHERThread[2]] [22230 ms] done
[OTHERThread[27]] [22547 ms] done
[OTHERThread[14]] [22820 ms] done
[OTHERThread[4]] [22831 ms] done
[OTHERThread[7]] [22894 ms] done
[OTHERThread[20]] [22909 ms] done
[OTHERThread[21]] [22907 ms] done
[OTHERThread[25]] [22925 ms] done
[OTHERThread[11]] [22987 ms] done
[OTHERThread[23]] [22972 ms] done
[OTHERThread[29]] [22973 ms] done
[OTHERThread[5]] [23051 ms] done
[OTHERThread[1]] [23083 ms] done
[OTHERThread[16]] [23098 ms] done
[OTHERThread[12]] [23129 ms] done
[OTHERThread[13]] [23228 ms] done
[OTHERThread[10]] [23240 ms] done
[OTHERThread[17]] [23308 ms] done
[RRThread[0]] [22389 ms] done
[RRThread[8]] [22406 ms] done
[RRThread[7]] [22424 ms] done
[RRThread[2]] [22434 ms] done
[RRThread[5]] [22437 ms] done
[RRThread[4]] [22449 ms] done
[RRThread[1]] [22459 ms] done
[RRThread[6]] [22463 ms] done
[RRThread[3]] [22473 ms] done
[RRThread[9]] [22456 ms] done
[RRThread[11]] [22457 ms] done
[RRThread[12]] [22462 ms] done
[RRThread[21]] [22442 ms] done
[RRThread[17]] [22461 ms] done
[RRThread[13]] [22472 ms] done
[RRThread[10]] [22479 ms] done
[RRThread[16]] [22486 ms] done
[RRThread[23]] [22470 ms] done
[RRThread[25]] [22464 ms] done
[RRThread[15]] [22496 ms] done
[RRThread[20]] [22485 ms] done
[RRThread[18]] [22491 ms] done
[RRThread[27]] [22449 ms] done
[RRThread[14]] [22500 ms] done
[RRThread[19]] [22490 ms] done
[RRThread[24]] [22477 ms] done
[RRThread[22]] [22484 ms] done
[RRThread[28]] [22449 ms] done
[RRThread[26]] [22471 ms] done
[RRThread[29]] [22450 ms] done
```

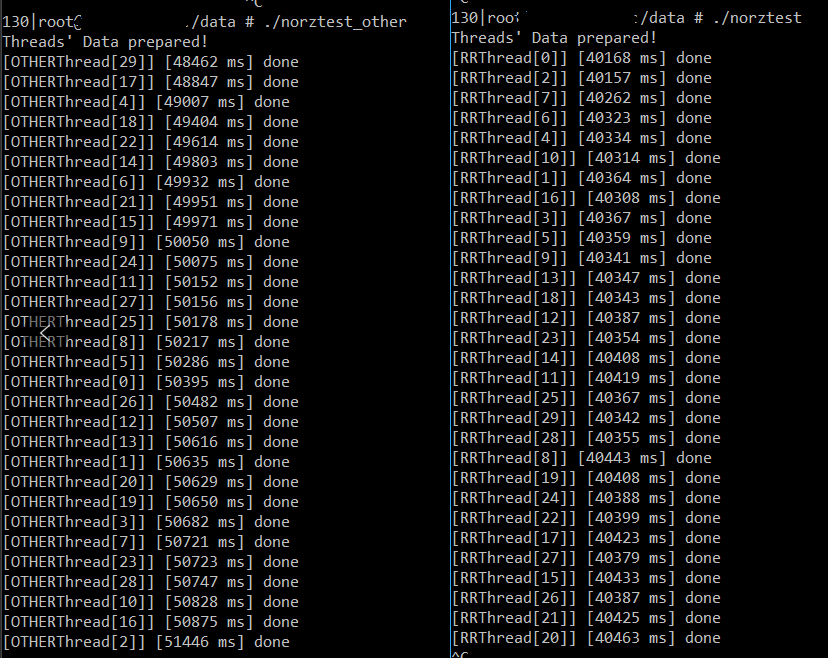

可以看到 OTHER 与 RR 平均时间基本一致,最快的速度还比RR快一些,可以看出,将普通优先级线程改为高优先级,并不会提升时间开销。而后,我又进行了一个测试,我让两个可执行程序同时进行,结果如下图:

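When RR and OTHER threads run at the same time, they compete whether or not they are in the same process, and RR has the higher priority. From this we can conclude the following. As long as the system doesn't have many time-consuming high-priority RR/FIFO threads running, their impact on normal-priority threads is limited. Likewise, converting a normal-priority thread to high priority doesn't improve its run time much.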

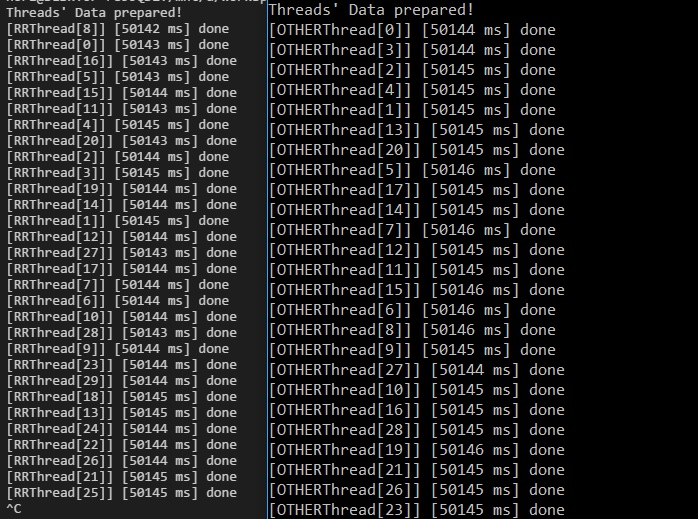

最后贴一个我在我本地电脑上同时执行的测试结果:

On a powerful CPU (i7-6700HQ), there is essentially no difference between RR and OTHER. RR priority gains about 2 ms (2 ms on an i7, think how many operations that is!).

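Still, I couldn't hold out against my colleague's "persuasion", so I added the priority settings to the code anyway. The result was just what I expected... no visible difference at all.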

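One hundred million iterations produced an 8000 ms gap, which works out to an improvement of only 8 ms per hundred thousand iterations...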
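The per-hundred-thousand-iteration figure, worked out explicitly:

$$
\frac{8000\ \text{ms}}{10^{8}\ \text{iterations}} \times 10^{5}\ \text{iterations} = 8000 \times 10^{-3}\ \text{ms} = 8\ \text{ms}
$$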
