一、总体思路

- 下载包括摄像头(目标检测)和雷达(导航)功能的小车模型;

- 使用现有的算法和下载的轨道模型进行自动导航循迹;

- 下载模型库并自行搭建轨道模型对小车进行自动导航循迹;

- 对指定的物体进行检测(分别对一个物体与多个物体进行检测)。

二、内容设计

- Ubuntu安装Gazebo并与ROS连接:

- 安装Gazebo 9。

- 安装ROS Melodic。

- 配置Gazebo与ROS的连接。

- 进行自动导航循迹:

- 使用预训练模型进行自动导航循迹。

- 自行搭建模型并进行训练以实现自动导航循迹。

- 加载YOLO进行目标检测:

- 安装darknet_ros功能包。

- 加载预训练的YOLO模型进行目标检测。

三、实验环境

1、环境搭建

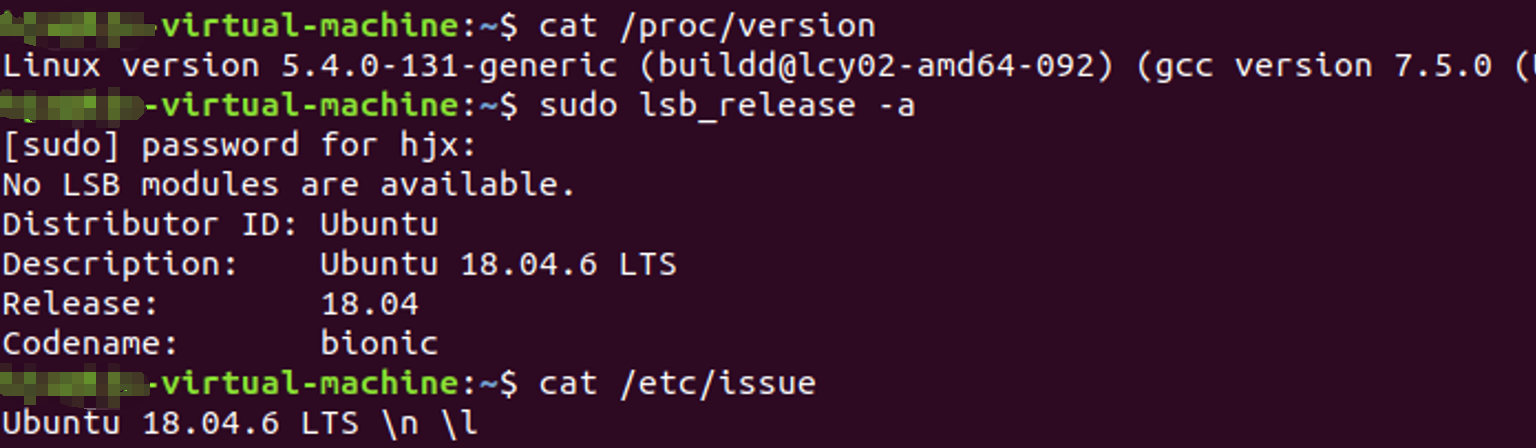

- 主机操作系统版本:Windows 10 Home,

- 虚拟机版本:VMware Workstation 16 Pro——16.2.4

- Ubuntu版本:Ubuntu 18.04.6 LTS

- 内存配置:4GB

- linux内核版本:Linux version 5.4.0-131-generic (buildd@lcy02-amd64-092)

- ROS版本:ROS Melodic、

- gcc编译器版本:gcc version 7.5.0

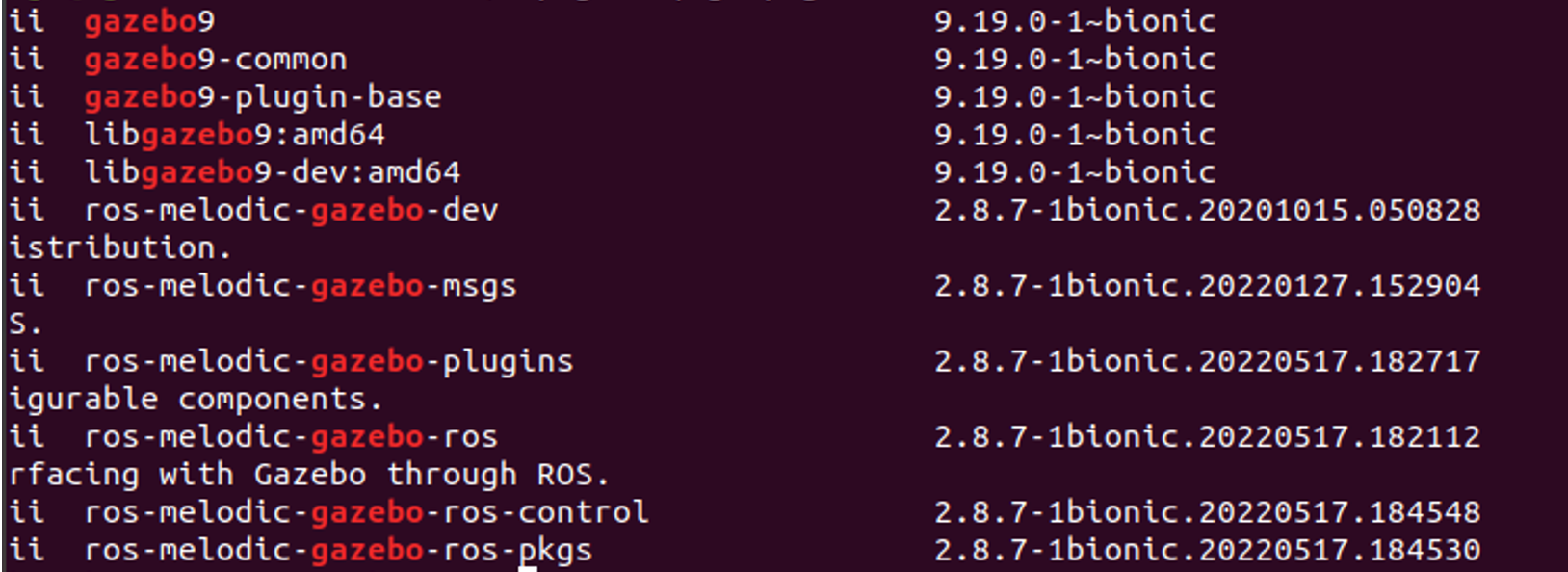

- Gazebo版本:Gazebo 9.19.0

dpkg -l | grep gazebo

2、仿真环境配置

①racecar功能包来源:GitHub - xmy0916/racecar: 智能车仿真软件

②darknet_ros功能包来源: GitHub - leggedrobotics/darknet_ros: YOLO ROS: Real-Time Object Detection for ROS

③权重文件:百度网盘百度网盘 请输入提取码 提取码: xqdm

场景搭建所需模块文件库来源:osrf / gazebo_models / Downloads — Bitbucket

四、核心算法和功能实现原理

1、安装Gazebo(Ubuntu18.04需下载Gazebo9版本)

①把gazebo的源添加到apt的source list,让电脑可以从package.osrfoundation.org接收软件

sudo sh -c 'echo "deb http://packages.osrfoundation.org/gazebo/ubuntu-stable `lsb_release -cs` main" > /etc/apt/sources.list.d/gazebo-stable.list'

②设定密钥

wget https://packages.osrfoundation.org/gazebo.key -O - | sudo apt-key add –

③先执行apt更新,然后安装Gazebo9

sudo apt-get update

sudo apt-get install gazebo9

sudo apt-get install libgazebo9-dev

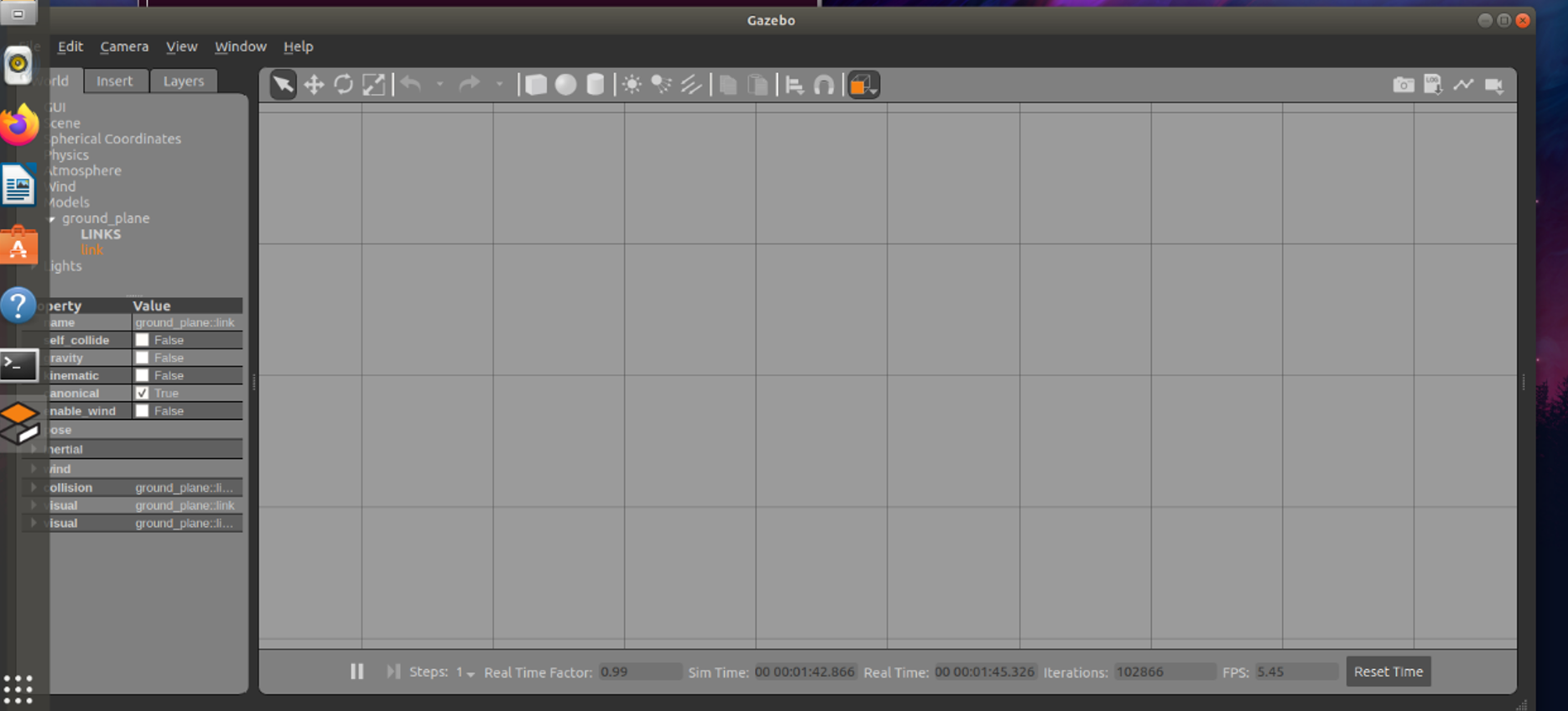

④打开Gazebo窗口

gazebo

2、将Gazebo与ROS连接

① melodic版本gazebo_ros_pkgs元功能包

sudo apt install ros-melodic-gazebo-ros-pkgs ros-melodic-gazebo-msgs ros-melodic-gazebo-plugins ros-melodic-gazebo-ros-control

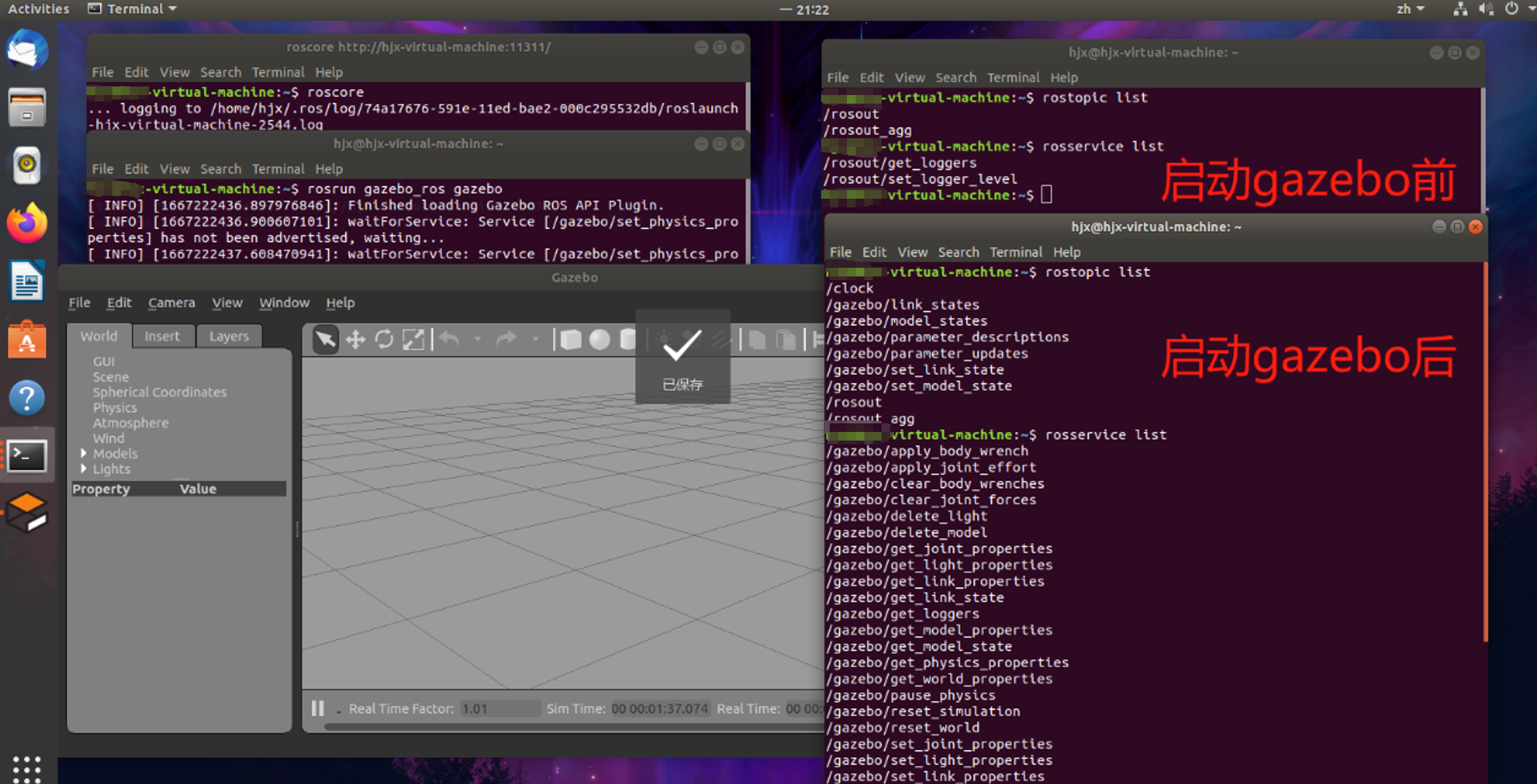

②验证是否连接成功:运行Gazebo并查看当前系统所有的话题列表以及service服务

roscore

rosrun gazebo_ros gazebo

rostopic list

rosservice list

3、创建工作空间与功能包

①创建工作空间

mkdir -p ~/racecar_ws/src

cd ~/racecar_ws/src

catkin_init_workspace

②下载racecar和darknet_ros功能包

git clone https://github.com/xmy0916/racecar.git

git clone https://github.com/leggedrobotics/darknet_ros.git

③安装需要的控件

sudo apt-get install ros-melodic-driver-base

sudo apt-get install ros-melodic-ackermann-msgs

sudo apt-get install ros-melodic-global-planner

sudo apt-get install ros-melodic-teb-local-planner

④编译功能包

cd ~/racecar_ws

catkin_make

⑤设置环境变量

echo "source ~/racecar_ws/devel/setup.bash" >> ~/.bashrc

source ~/.bashrc

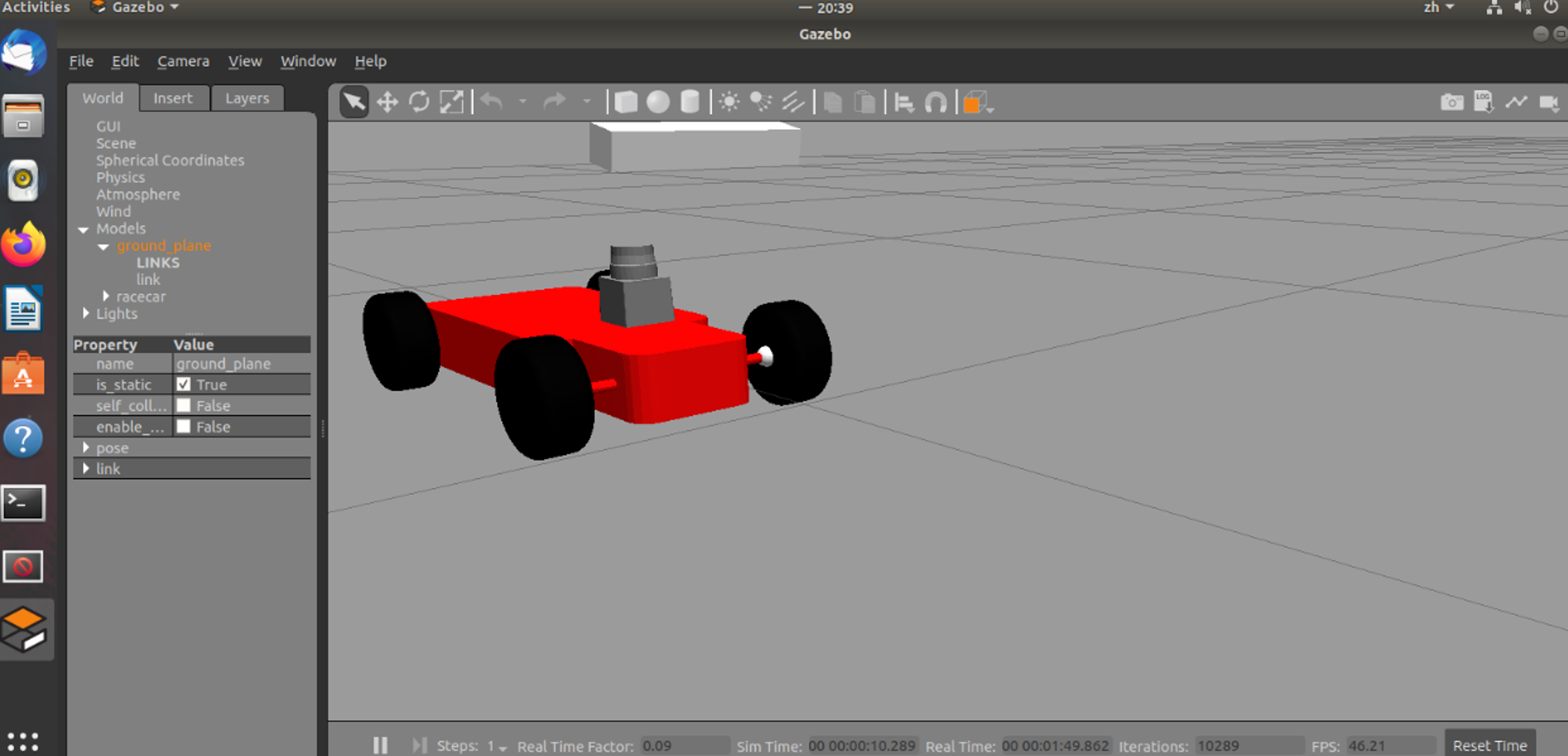

⑥启动Gazebo,运行②中下载的小车模型

roslaunch racecar_gazebo racecar.launch

4、基于Gazebo搭建仿真机器人环境

①下载模型文件库

git clone https://bitbucket.org/osrf/gazebo_models/downloads/

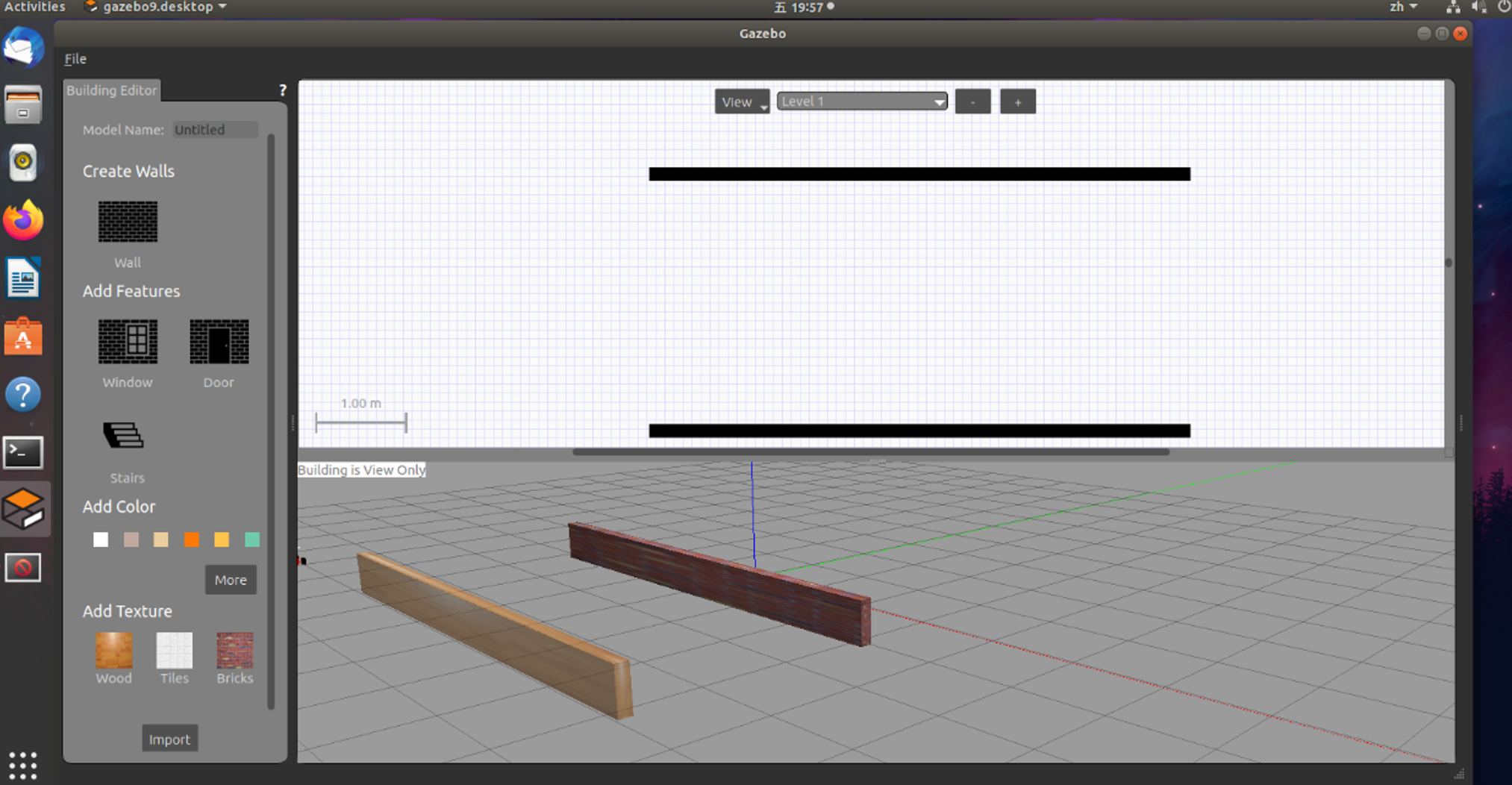

②打开gazebo,点击Edit→Build Editor,自己创建模型并增加障碍物以便后期进行目标检测

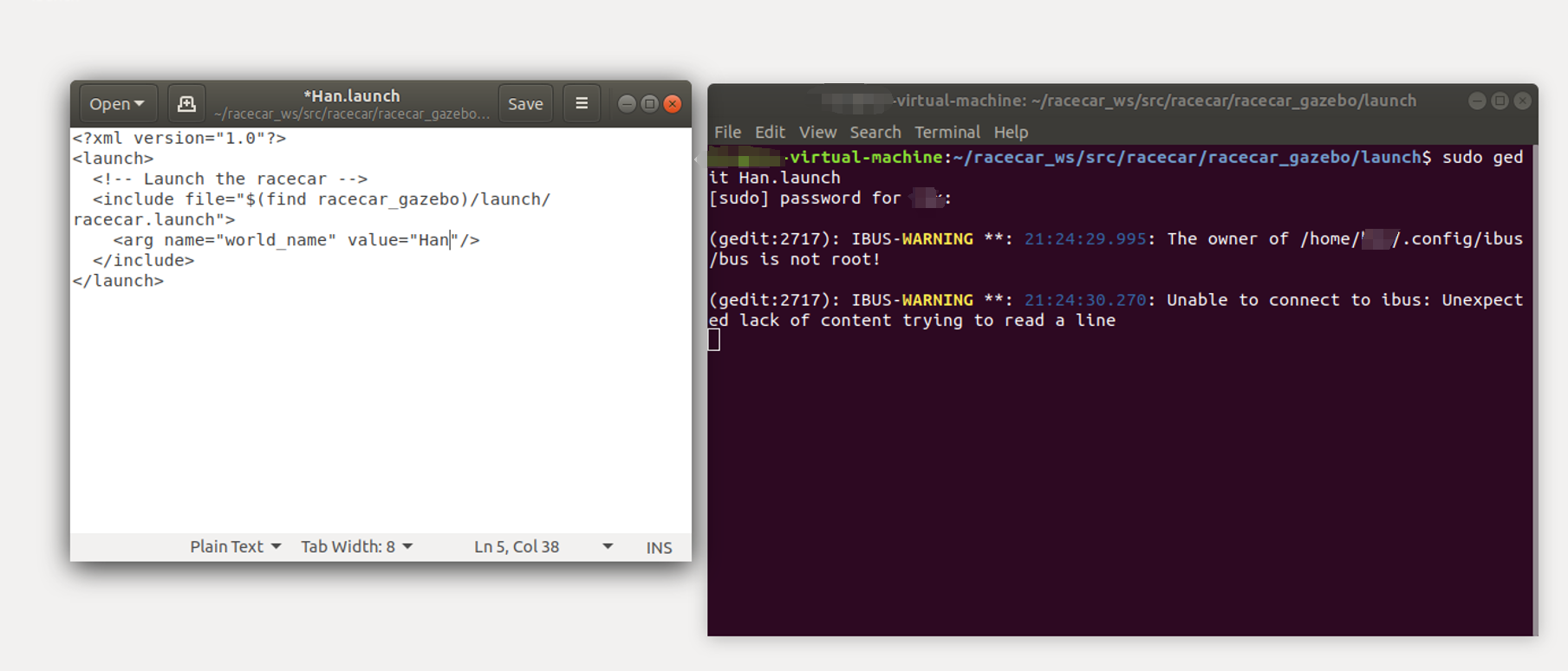

③保存环境到racecar功能包中的worlds目录下(命名为Han.world)

④创建Han.launch文件,配置赛道参数

5、自动导航循迹算法

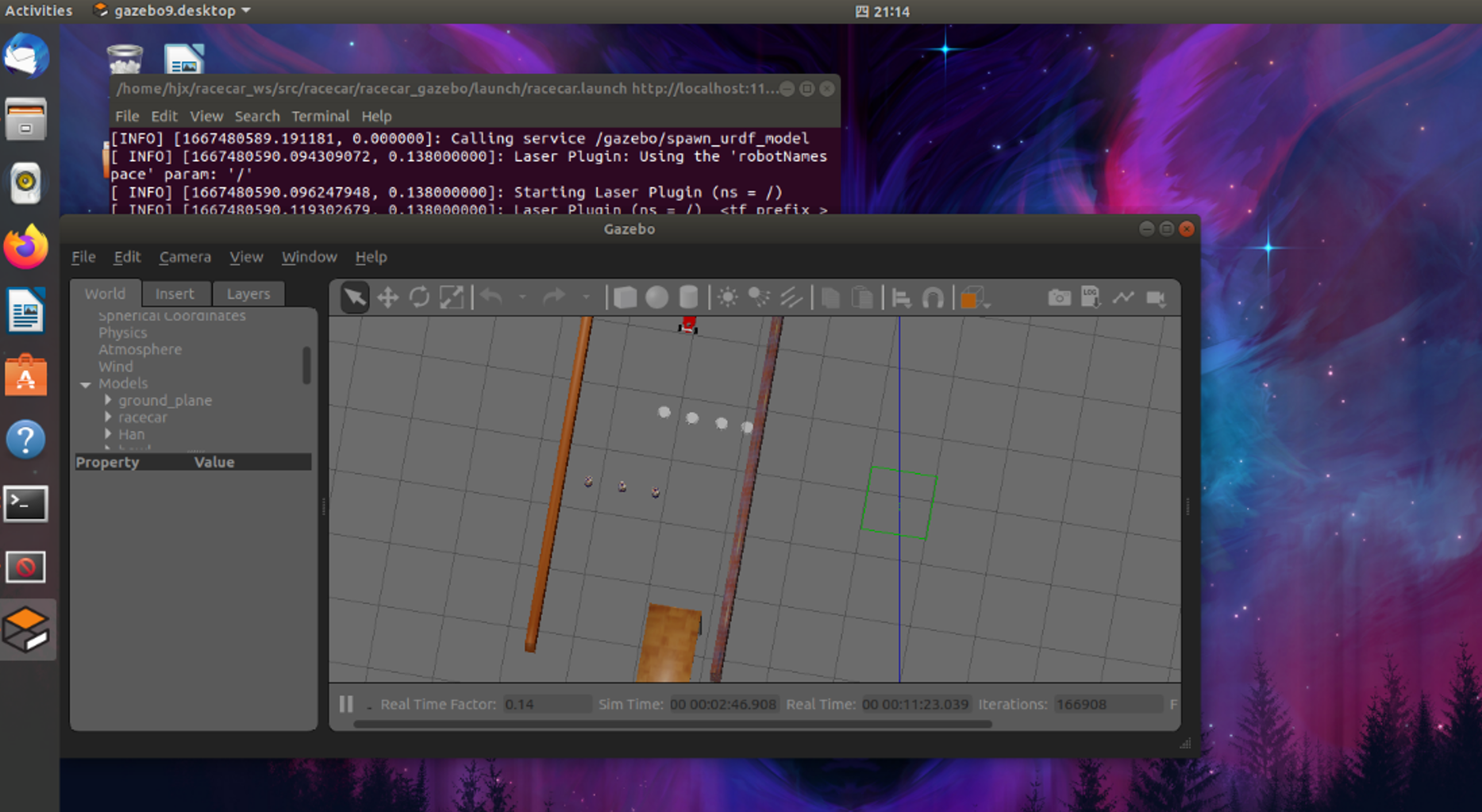

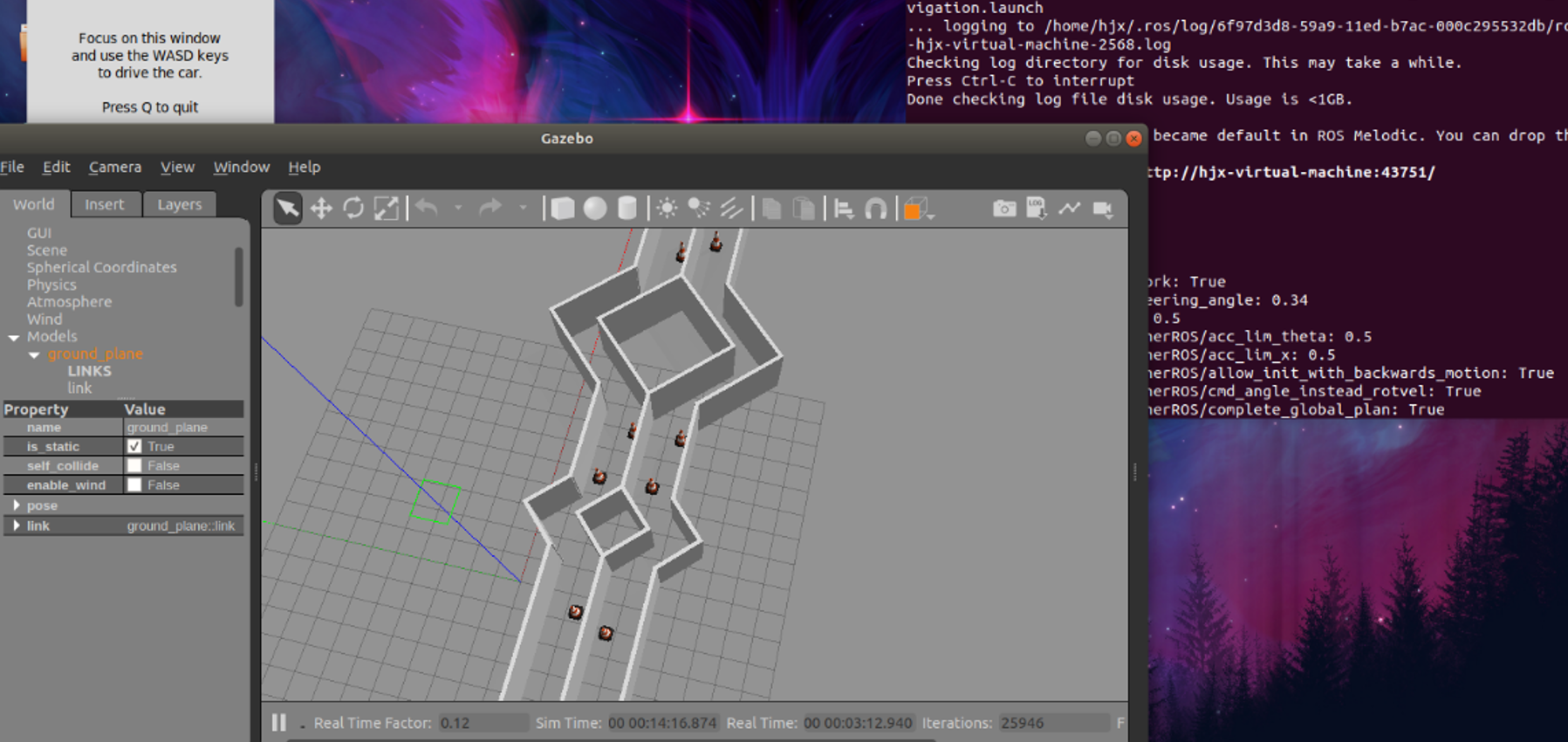

①运行已有环境

roslaunch racecar_gazebo racecar_runway_navigation.launch

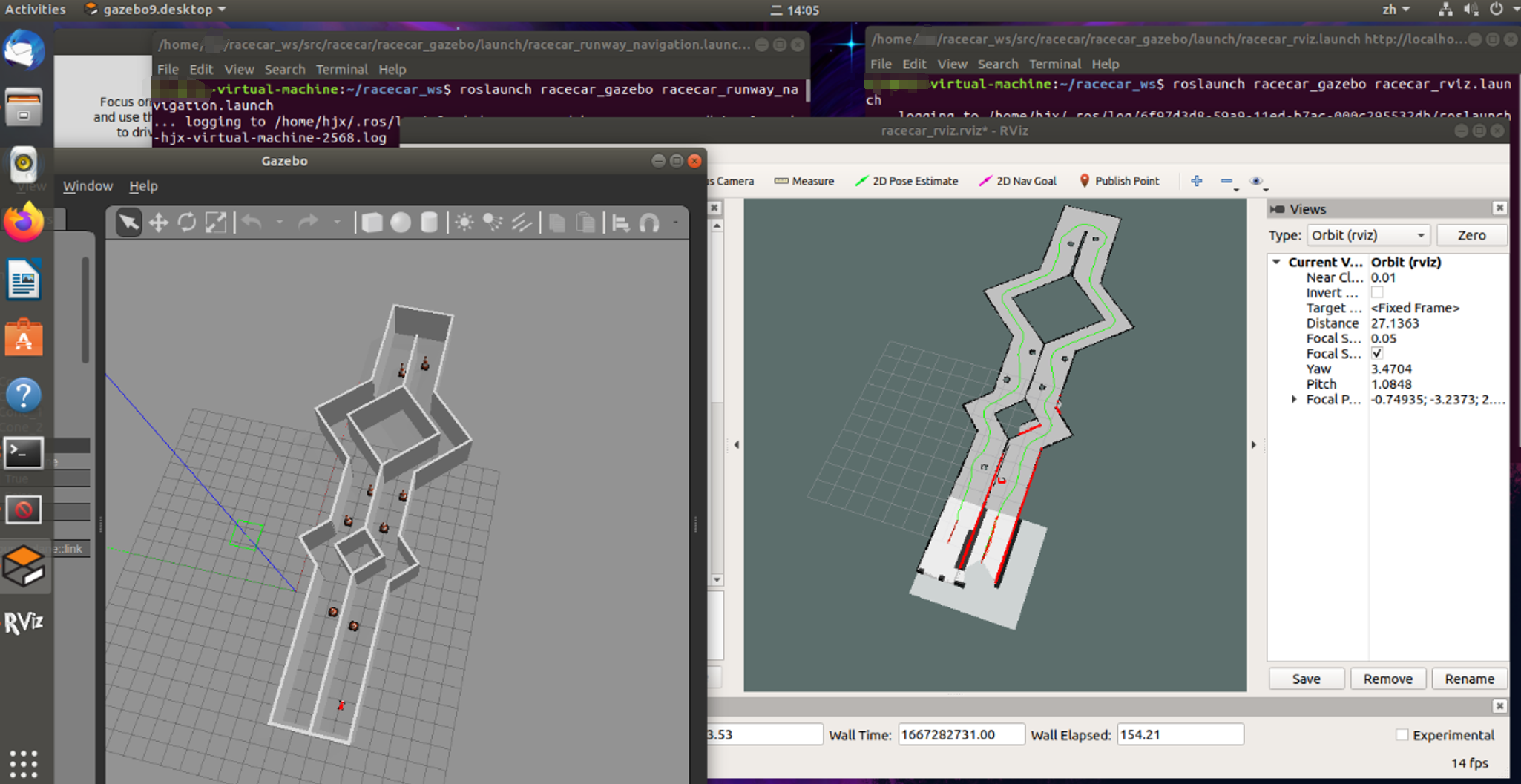

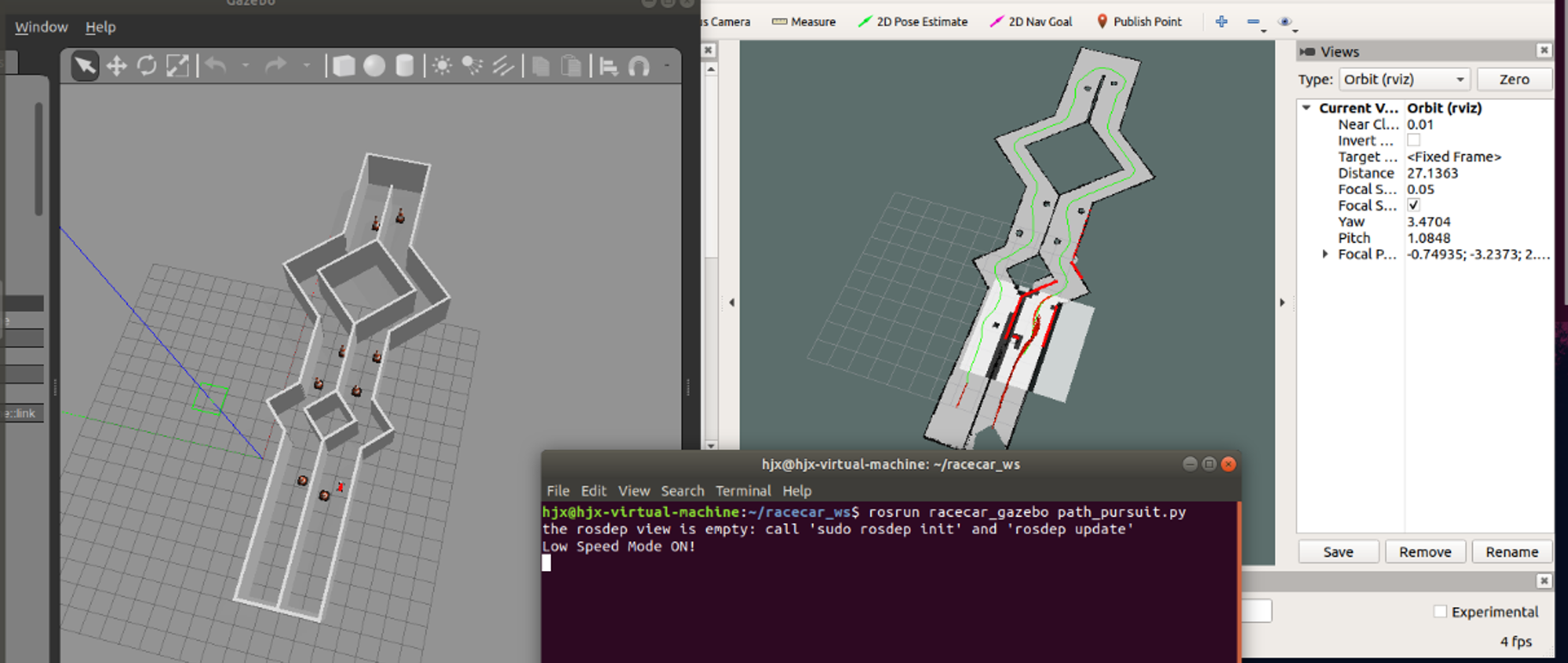

②启动rviz并设计小车的行车轨迹

roslaunch racecar_gazebo racecar_rviz.launch

③启动path_pursuit.py脚本

rosrun racecar_gazebo path_pursuit.py

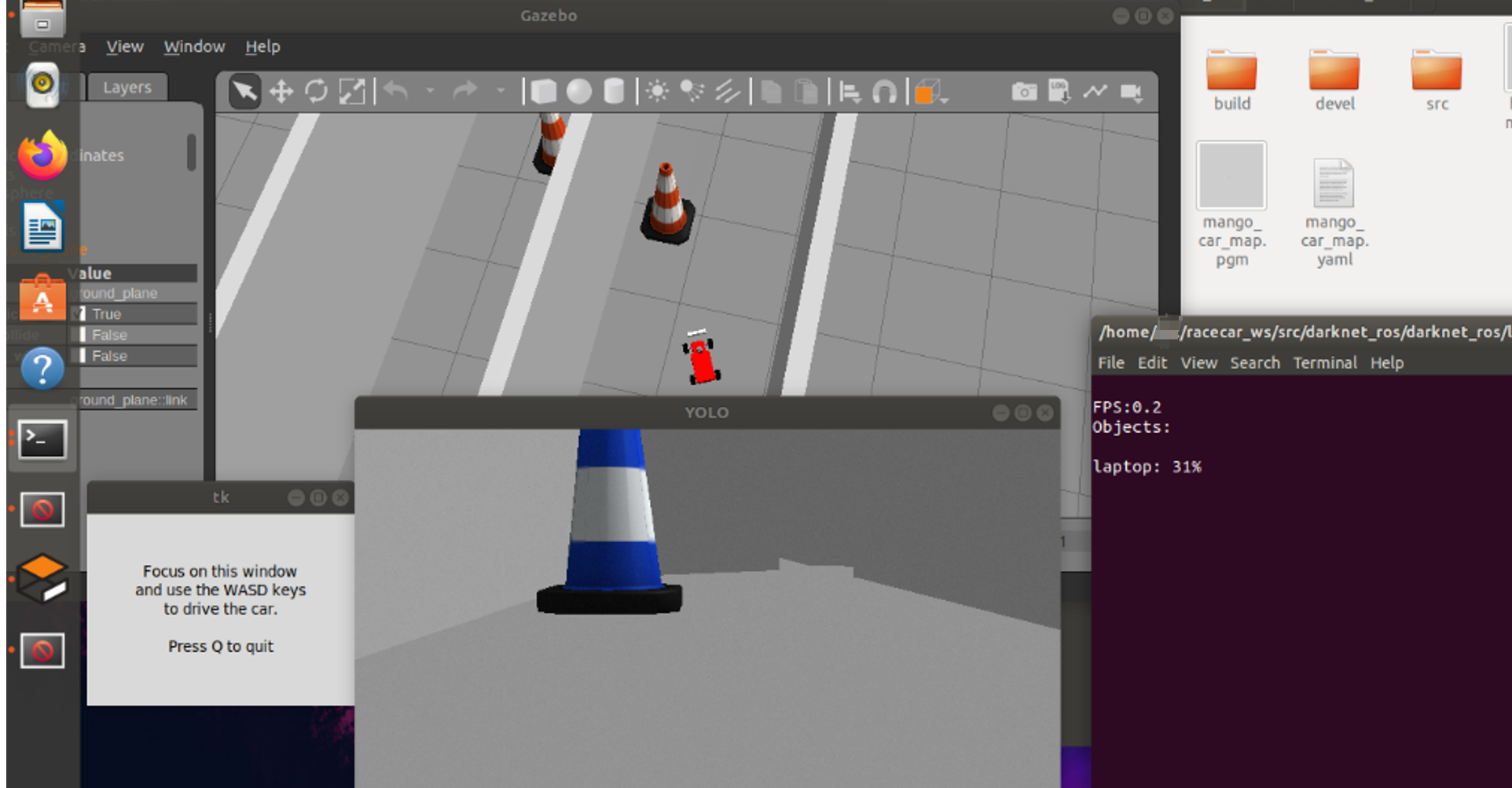

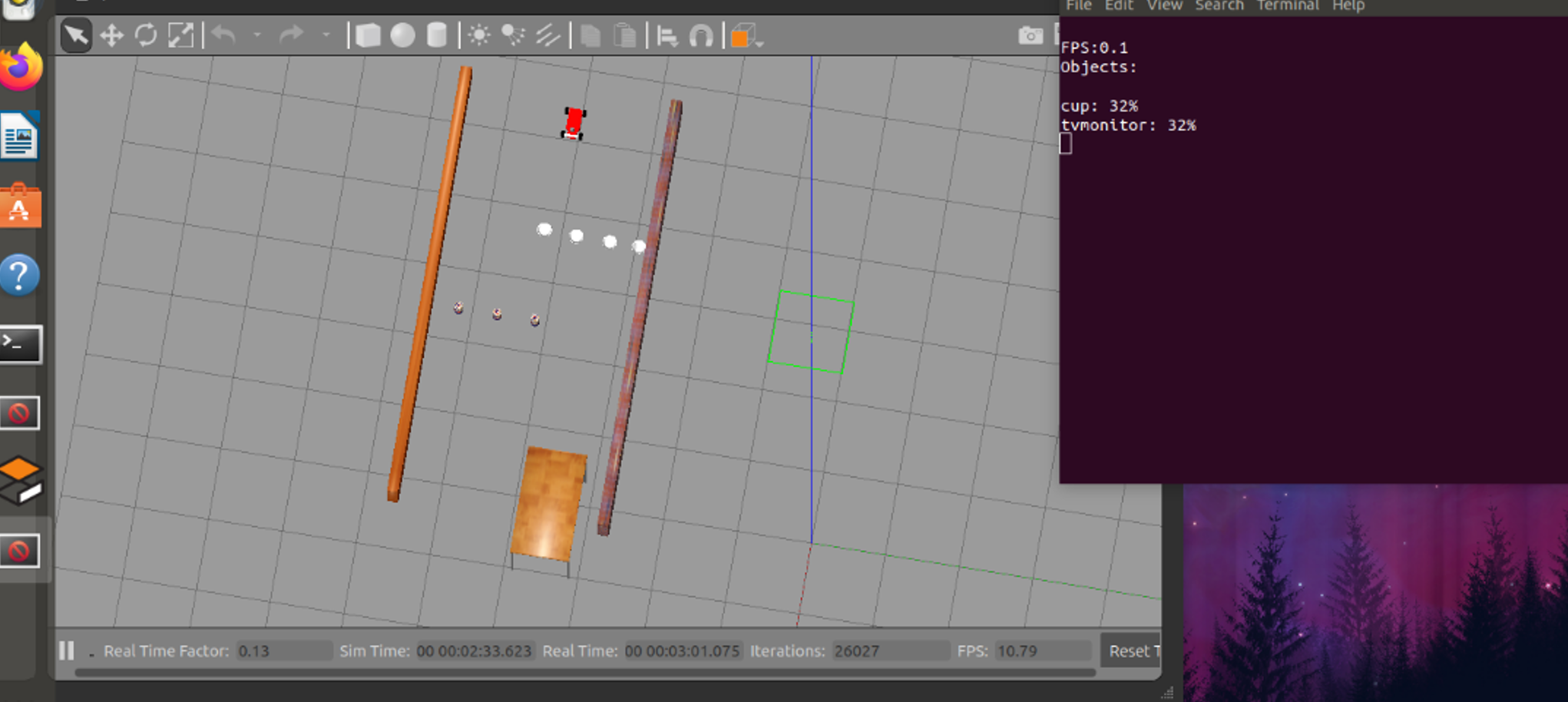

6、目标检测算法识别的结果图

①一个物体

②多个物体

五、实验中遇到的问题及解决方案

① 蓝屏重启问题:

安装VMware 15.5.0版本刚开启虚拟机便蓝屏重启,经查询为版本不匹配,最终安装了16.2.4版本。

② Intel VT-x处于禁用状态:

经咨询联想客服知:依次点击开始→设置→更新和安全→恢复→高级启动→立即重新启动→疑难解答→高级选项 UEFI固件设置→重启,进入 BIOS界面,打开Intel(R) Virtualizaton Technology,F10保存退出。

③ 开启虚拟机创建账号时卡死:

该问题无解,进行每步操作时需要慢慢等待,不能操之过急,否则只能从头再来……

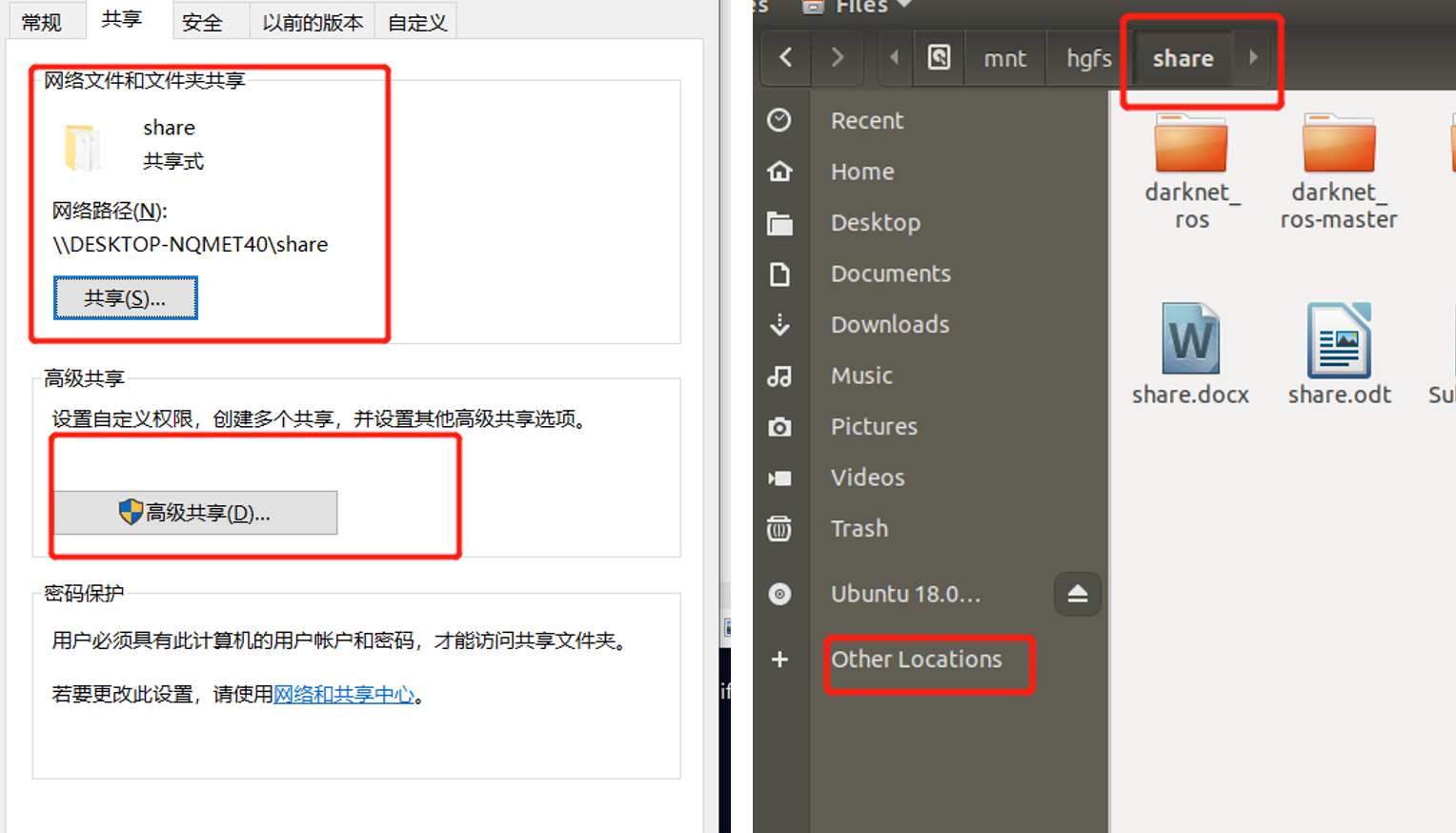

④ 安装好VMware Tools后主机和虚拟机依旧无法互传

该问题亦无解,通过csdn查询到多种修改方法,操作后均无效,最后只能通过共享文件夹的方式实现文件互传:如图所示,在主机创建share文件夹,开启共享,可在虚拟机中如下位置找到share文件夹,从而实现文件互传。

⑤ ROS系统换源:**

国内源pass,中科大源pass,阿里源pass……千挑万选后清华源终于成功了!

⑥ Linux系统连接校园网:

打开终端,输入以下指令(两次密码留同一个)

sudo passwd root

下载校园网Linux64位客户端,解压后在DrClient目录下打开终端,依次运行如下指令(需要先右键→属性(Properties)→权限(Perssions)→执行(Execute)→勾选允许执行)

./privillege.sh

./ DrClientLinux

⑦ 与主机共享同一网络:

依次点击VMware界面上的,虚拟机→设置→网络适配器,将网络连接下桥接模式改为NAT模式

⑧ 虚拟机下载中文输入法

依次点击右上角→用户名→Account settings→Region & Language→Input Sources,下载中文拼音输入法,下载成功后可通过win+空格键进行输入法切换。

六、代码

path_pursuit.py

#!/usr/bin/env python

import rospy

from nav_msgs.msg import Path, Odometry

from ackermann_msgs.msg import AckermannDriveStamped

from geometry_msgs.msg import PoseStamped, PoseArray

import math

import numpy as np

from numpy import linalg as LA

from tf.transformations import euler_from_quaternion, quaternion_from_euler

import csv

import os

class following_path:

def __init__(self):

self.current_pose = rospy.Subscriber('/pf/pose/odom', Odometry, self.callback_read_current_position, queue_size=1)

self.Pose = []

self.path_pose = rospy.Subscriber('/move_base/TebLocalPlannerROS/global_plan', Path, self.callback_read_path, queue_size=1)

self.path_info = []

self.Goal = []

self.navigation_input = rospy.Publisher('/vesc/low_level/ackermann_cmd_mux/input/navigation', AckermannDriveStamped, queue_size=1)

self.reach_goal = False

self.MAX_VELOCITY = 0.5

self.MIN_VELOCITY = 0

self.max_angle = 1

self.steering_velocity = 1

self.jerk = 0.0

self.acceleration = 0.0

self.LOOKAHEAD_DISTANCE = 0.4

self.Low_Speed_Mode = False

def callback_read_path(self, data):

# Organize the pose message and only ask for (x,y) and orientation

# Read the Real time pose message and load them into path_info

self.path_info = []

path_array = data.poses

for path_pose in path_array:

path_x = path_pose.pose.position.x

path_y = path_pose.pose.position.y

path_qx = path_pose.pose.orientation.x

path_qy = path_pose.pose.orientation.y

path_qz = path_pose.pose.orientation.z

path_qw = path_pose.pose.orientation.w

path_quaternion = (path_qx, path_qy, path_qz, path_qw)

path_euler = euler_from_quaternion(path_quaternion)

path_yaw = path_euler[2]

self.path_info.append([float(path_x), float(path_y), float(path_yaw)])

self.Goal = list(self.path_info[-1]) # Set the last pose of the global path as goal location

def callback_read_current_position(self, data):

if self.reach_goal: # Stop updating the information.

self.path_info = []

self.Pose = []

ackermann_control = AckermannDriveStamped()

ackermann_control.drive.speed = 0.0

ackermann_control.drive.steering_angle = 0.0

ackermann_control.drive.steering_angle_velocity = 0.0

if not len(self.path_info) == 0:

# Read the path information to path_point list

path_points_x = [float(point[0]) for point in self.path_info]

path_points_y = [float(point[1]) for point in self.path_info]

path_points_w = [float(point[2]) for point in self.path_info]

# Read the current pose of the car from particle filter

x = data.pose.pose.position.x

y = data.pose.pose.position.y

qx = data.pose.pose.orientation.x

qy = data.pose.pose.orientation.y

qz = data.pose.pose.orientation.z

qw = data.pose.pose.orientation.w

# Convert the quaternion angle to eular angle

quaternion = (qx,qy,qz,qw)

euler = euler_from_quaternion(quaternion)

yaw = euler[2]

self.Pose = [float(x), float(y), float(yaw)]

if self.dist(self.Goal, self.Pose) < 1.0:

self.Low_Speed_Mode = True

if self.dist(self.Goal, self.Pose) < 0.3:

self.reach_goal = True

print('Goal Reached!')

else:

print('Low Speed Mode ON!')

else:

self.Low_Speed_Mode = False

# 2. Find the path point closest to the vehichle tat is >= 1 lookahead distance from vehicle's current location.

dist_array = np.zeros(len(path_points_x))

for i in range(len(path_points_x)):

dist_array[i] = self.dist((path_points_x[i], path_points_y[i]), (x,y))

goal = np.argmin(dist_array) # Assume the closet point as the goal point at first

goal_array = np.where((dist_array < (self.LOOKAHEAD_DISTANCE + 0.3)) & (dist_array > (self.LOOKAHEAD_DISTANCE - 0.3)))[0]

for id in goal_array:

v1 = [path_points_x[id] - x, path_points_y[id] - y]

v2 = [math.cos(yaw), math.sin(yaw)]

diff_angle = self.find_angle(v1,v2)

if abs(diff_angle) < np.pi/4: # Check if the one that is the cloest to the lookahead direction

goal = id

break

L = dist_array[goal]

# 3. Transform the goal point to vehicle coordinates.

glob_x = path_points_x[goal] - x

glob_y = path_points_y[goal] - y

goal_x_veh_coord = glob_x*np.cos(yaw) + glob_y*np.sin(yaw)

goal_y_veh_coord = glob_y*np.cos(yaw) - glob_x*np.sin(yaw)

# 4. Calculate the curvature = 1/r = 2x/l^2

# The curvature is transformed into steering wheel angle by the vehicle on board controller.

# Hint: You may need to flip to negative because for the VESC a right steering angle has a negative value.

diff_angle = path_points_w[goal] - yaw # Find the turning angle

r = L/(2*math.sin(diff_angle)) # Calculate the turning radius

angle = 2 * math.atan(0.4/r) # Find the wheel turning radius

angle = np.clip(angle, -self.max_angle, self.max_angle) # 0.4189 radians = 24 degrees because car can only turn 24 degrees max

angle = (0 if abs(angle) < 0.1 else angle)

VELOCITY = self.speed_control(angle, self.MIN_VELOCITY, self.MAX_VELOCITY)

# Write the Velocity and angle data into the ackermann message

ackermann_control = AckermannDriveStamped()

ackermann_control.drive.speed = VELOCITY

ackermann_control.drive.steering_angle = angle

ackermann_control.drive.steering_angle_velocity = self.steering_velocity

else:

ackermann_control = AckermannDriveStamped()

ackermann_control.drive.speed = 0.0

ackermann_control.drive.steering_angle = 0.0

ackermann_control.drive.steering_angle_velocity = 0.0

self.navigation_input.publish(ackermann_control)

# Computes the Euclidean distance between two 2D points p1 and p2

def dist(self, p1, p2):

try:

return np.sqrt((p1[0] - p2[0]) ** 2 + (p1[1] - p2[1]) ** 2)

except:

return 0.5

# Compute the angle between car direction and goal direction

def find_angle(self, v1, v2):

cos_ang = np.dot(v1, v2)

sin_ang = LA.norm(np.cross(v1, v2))

return np.arctan2(sin_ang, cos_ang)

# Control the speed of the car within the speed limit

def speed_control(self, angle, MIN_VELOCITY, MAX_VELOCITY):

# Assume the speed change linearly with respect to yaw angle

if self.Low_Speed_Mode:

Velocity = 0.5

else:

k = (MIN_VELOCITY - MAX_VELOCITY)/self.max_angle + 0.5

Velocity = k * abs(angle) + MAX_VELOCITY

return Velocity

if __name__ == "__main__":

rospy.init_node("pursuit_path")

following_path()

rospy.spin()

1361

1361

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?