I. Basic environment configuration

1. Check the operating system version

# This installation method requires CentOS 7.5 or later

[root@k8s-master /]# cat /etc/redhat-release

CentOS Linux release 7.9.2009 (Core)
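The version requirement can also be checked in a script. The following is a minimal sketch, assuming the release string has the form shown above; the function names `release_version` and `version_ge` are illustrative helpers, not part of any existing tool.

```shell
# Extract the numeric version from a line such as "CentOS Linux release 7.9.2009 (Core)".
release_version() {
    sed -n 's/.*release \([0-9][0-9.]*\).*/\1/p'
}

# Succeeds when version $1 is greater than or equal to version $2 (relies on GNU `sort -V`).
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Example with the release string from this guide; on a real host, use:
#   ver=$(release_version < /etc/redhat-release)
ver=$(echo "CentOS Linux release 7.9.2009 (Core)" | release_version)
if version_ge "$ver" "7.5"; then
    echo "OK: $ver"
else
    echo "Too old: $ver"
fi
```

Running the check on every node before continuing avoids finding out about an unsupported release halfway through the installation.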

2. Hostname resolution

To make it easy for the cluster nodes to reach each other by name, configure hostname resolution.

[root@k8s-master /]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.174.128 k8s-master

192.168.174.129 k8s-node1

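192.168.174.130 k8s-node2

3. Time synchronization

Kubernetes requires the clocks of all cluster nodes to be consistent; here the chronyd service is used to synchronize time from the network.

# Start the chronyd service

[root@k8s-master /]# systemctl start chronyd

# Enable the service at boot

[root@k8s-master /]# systemctl enable chronyd

# Check the time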

[root@k8s-master /]# date

2021年 09月 17日 星期五 15:55:26 CST
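Comparing the output of `date` by eye only gives a rough check. As a sketch, the "Leap status" field printed by `chronyc tracking` can be tested instead; the helper name `chrony_synced` is an assumption made for this example, and the sample input line below is for illustration only.

```shell
# Reads `chronyc tracking` output on stdin; succeeds when the "Leap status" field is "Normal".
chrony_synced() {
    grep -Eq '^Leap status[[:space:]]*:[[:space:]]*Normal'
}

# On a real node:
#   chronyc tracking | chrony_synced && echo "clock is synchronized"
# Illustration with a sample input line:
if printf 'Leap status     : Normal\n' | chrony_synced; then
    echo "clock is synchronized"
fi
```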

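4. Disable the iptables and firewalld services

Kubernetes generates a large number of iptables rules at runtime. To keep them from being mixed up with the system's own rules, turn off the system firewall services.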

[root@k8s-master /]# systemctl stop firewalld

[root@k8s-master /]# systemctl disable firewalld

[root@k8s-master /]# systemctl stop iptables

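[root@k8s-master /]# systemctl disable iptables

5. Disable SELinux

SELinux is a Linux security service; if it is left enabled, it can cause a variety of problems in the cluster.

# Edit the /etc/selinux/config file

# The change takes effect only after the system is rebooted

SELINUX=disabled

6. Disable the swap partition

# Edit the partition configuration file /etc/fstab and comment out the swap line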
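Commenting out the swap entry can also be scripted. The sketch below assumes GNU `sed` and an fstab whose swap entry has `swap` as a whitespace-separated field; the function name `disable_swap_entries` is illustrative. The demonstration runs against a temporary file so that the real `/etc/fstab` is not touched.

```shell
# Comment out every active swap entry in the fstab-style file given as $1.
# A backup copy with the suffix .bak is kept next to the file.
disable_swap_entries() {
    sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/# /' "$1"
}

# Demonstration on a temporary file.
tmp=$(mktemp)
printf '%s\n' \
    '/dev/mapper/centos-root / xfs defaults 0 0' \
    '/dev/mapper/centos-swap swap swap defaults 0 0' > "$tmp"
disable_swap_entries "$tmp"
cat "$tmp"
rm -f "$tmp" "$tmp.bak"
```

Editing the file only affects the next boot; `swapoff -a` turns swap off in the running system.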

[root@k8s-node2 /]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Mon May 24 05:53:45 2021

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos-root / xfs defaults 0 0

UUID=34218ca8-2e15-49b3-86d7-2493ae5c58ae /boot xfs defaults 0 0

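# /dev/mapper/centos-swap swap swap defaults 0 0

7. Modify Linux kernel parameters

# Modify (add) Linux kernel parameters to enable bridge filtering and IP forwarding

# Edit /etc/sysctl.d/kubernetes.conf

[root@k8s-master /]# vim /etc/sysctl.d/kubernetes.conf

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

# Load the bridge filtering module (the net.bridge.* parameters exist only after it is loaded)

[root@k8s-master /]# modprobe br_netfilter

# Check that the bridge filtering module is loaded

[root@k8s-master /]# lsmod | grep br_netfilter

br_netfilter 22256 0

bridge 151336 1 br_netfilter

# Reload the configuration (plain sysctl -p only reads /etc/sysctl.conf, so pass the file explicitly)

[root@k8s-master /]# sysctl -p /etc/sysctl.d/kubernetes.conf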
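Before relying on the new kernel parameters, it can help to confirm that the configuration file really contains all three of them. This is a minimal sketch; the function name `check_k8s_sysctl_conf` is made up for this example, and the demonstration uses a temporary file rather than the real one.

```shell
# Succeeds if the sysctl file $1 sets all three parameters from this step to 1.
check_k8s_sysctl_conf() {
    for key in net.bridge.bridge-nf-call-iptables \
               net.bridge.bridge-nf-call-ip6tables \
               net.ipv4.ip_forward; do
        # Escape the dots so that they match literally in the regular expression.
        pattern=$(printf '%s' "$key" | sed 's/\./\\./g')
        if ! grep -Eq "^[[:space:]]*${pattern}[[:space:]]*=[[:space:]]*1[[:space:]]*\$" "$1"; then
            echo "missing or not set to 1: $key" >&2
            return 1
        fi
    done
}

# Demonstration on a temporary file; on a real node pass /etc/sysctl.d/kubernetes.conf.
tmp=$(mktemp)
printf '%s\n' \
    'net.bridge.bridge-nf-call-iptables=1' \
    'net.bridge.bridge-nf-call-ip6tables=1' \
    'net.ipv4.ip_forward=1' > "$tmp"
check_k8s_sysctl_conf "$tmp" && echo "sysctl file OK"
rm -f "$tmp"
```

The values actually in effect can be read back with `sysctl net.ipv4.ip_forward` and the corresponding `net.bridge.*` keys.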

8. Configure IPVS

Kubernetes is configured to use IPVS as its service proxy mode.

# 1. Install ipset and ipvsadm

yum install ipset ipvsadm -y

# 2. Write the modules that need to be loaded into a script file

cat <<EOF > /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

# 3. Make the script executable

chmod +x /etc/sysconfig/modules/ipvs.modules

# 4. Run the script

/bin/bash /etc/sysconfig/modules/ipvs.modules

# 5. Check that the modules were loaded

lsmod | grep -e ip_vs -e nf_conntrack_ipv4

9. Reboot the server

reboot

10. Install Docker

# Switch to a mirror repository

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

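1. List the Docker versions available in the current repository

yum list docker-ce --showduplicates

2. Install a specific version of docker-ce

# --setopt=obsoletes=0 must be specified; otherwise yum automatically installs a newer version

yum install --setopt=obsoletes=0 docker-ce-19.03.15-3.el7 -y

3. Add a configuration file

# By default Docker uses cgroupfs as its cgroup driver, whereas Kubernetes recommends using systemd instead of cgroupfs

mkdir -p /etc/docker

cat <<EOF > /etc/docker/daemon.json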

{

"registry-mirrors": [

"https://kfwkfulq.mirror.aliyuncs.com",

"https://2lqq34jg.mirror.aliyuncs.com",

"https://pee6w651.mirror.aliyuncs.com",

"https://registry.docker-cn.com",

"http://hub-mirror.c.163.com"],

"exec-opts":["native.cgroupdriver=systemd"]

}

EOF

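4. Start Docker

systemctl restart docker

11. Install the Kubernetes components

# The Kubernetes package repositories are hosted overseas and are slow, so switch to a domestic mirror

# Edit /etc/yum.repos.d/kubernetes.repo and add the following configuration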

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

# Install kubeadm, kubelet, and kubectl (pinned versions)

yum install --setopt=obsoletes=0 kubeadm-1.17.4 kubelet-1.17.4 kubectl-1.17.4 -y

# Alternatively, install the latest versions; note that the rest of this guide uses v1.17.4 images

# yum install -y kubelet kubeadm kubectl

# Check the kubelet version

kubelet --version

# Check the kubeadm version

kubeadm version

# Reload the systemd unit files

systemctl daemon-reload

# Start kubelet

systemctl start kubelet

# Check the kubelet status

systemctl status kubelet

# If it failed to start, ignore the error for now; kubeadm init will bring it up later

# Enable kubelet at boot

systemctl enable kubelet

# Check whether kubelet is enabled at boot (enabled: on, disabled: off)

systemctl is-enabled kubelet

# View the logs

journalctl -xefu kubelet

# Configure the kubelet cgroup driver

# Edit /etc/sysconfig/kubelet and add the following configuration

KUBELET_CGROUP_ARGS="--cgroup-driver=systemd"

KUBE_PROXY_MODE="ipvs"

# Enable kubelet at boot

systemctl enable kubelet

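II. Cluster initialization

1. Prepare the images

# List the images (and versions) required by the Kubernetes components

kubeadm config images list

# Pull the required images from a domestic mirror registry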

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.17.4

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.17.4

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.17.4

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.17.4

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.3-0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.6.5

# Retag the images

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.17.4 k8s.gcr.io/kube-apiserver:v1.17.4

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.17.4 k8s.gcr.io/kube-controller-manager:v1.17.4

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.17.4 k8s.gcr.io/kube-scheduler:v1.17.4

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.17.4 k8s.gcr.io/kube-proxy:v1.17.4

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1 k8s.gcr.io/pause:3.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.3-0 k8s.gcr.io/etcd:3.4.3-0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.6.5 k8s.gcr.io/coredns:1.6.5

# Remove the original images

docker image rm registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.17.4

docker image rm registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.17.4

docker image rm registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.17.4

docker image rm registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.17.4

docker image rm registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1

docker image rm registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.3-0

docker image rm registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.6.5
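The pull, tag, and remove commands above follow the same pattern for every image, so they can be generated in a loop. The following is a sketch using the image list from this guide; the variable names and the `image_commands` helper are illustrative. It only prints the commands (a dry run) and does not call Docker itself.

```shell
MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers
TARGET=k8s.gcr.io
IMAGES="kube-apiserver:v1.17.4 kube-controller-manager:v1.17.4 kube-scheduler:v1.17.4 kube-proxy:v1.17.4 pause:3.1 etcd:3.4.3-0 coredns:1.6.5"

# Print the pull, tag, and remove commands for one image given as "name:tag".
image_commands() {
    echo "docker pull $MIRROR/$1"
    echo "docker tag $MIRROR/$1 $TARGET/$1"
    echo "docker image rm $MIRROR/$1"
}

# Dry run: print all commands for review.
for image in $IMAGES; do
    image_commands "$image"
done
```

Once the printed commands look right, the output of the loop can be piped to `bash` to execute them. If `kubeadm config images list` reports different versions, only the `IMAGES` list needs to change.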

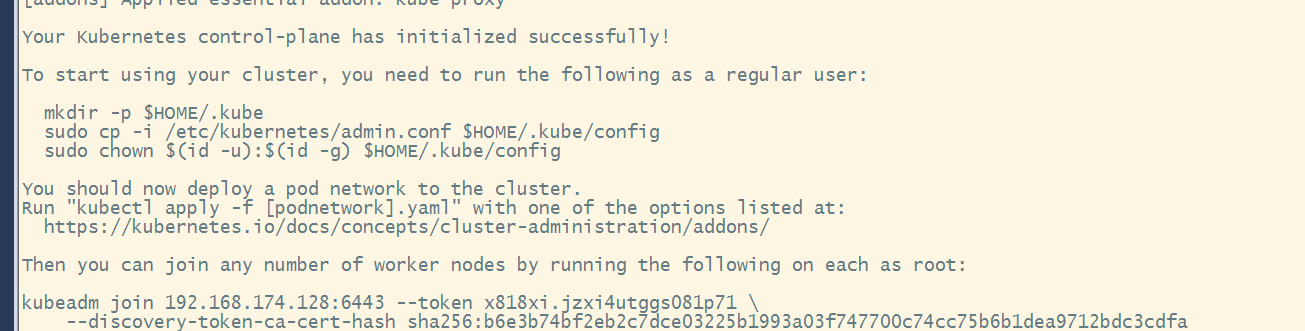

2. Initialize the cluster (master node)

# Run the initialization command to create the cluster

kubeadm init --kubernetes-version v1.17.4 --pod-network-cidr 10.244.0.0/16 --ignore-preflight-errors=NumCPU

# Create the required files

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

# List the nodes

kubectl get node

Fixing initialization errors:

# Delete the following files and directories

/var/lib/kubelet/kubeadm-flags.env

/var/lib/kubelet/config.yaml

/etc/kubernetes

# Run the following commands

swapoff -a

kubeadm reset

systemctl daemon-reload

systemctl restart kubelet

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

# Run the initialization command again

3. Join the worker nodes to the cluster (worker nodes)

kubeadm join 192.168.174.128:6443 --token x818xi.jzxi4utggs081p71 \

--discovery-token-ca-cert-hash sha256:b6e3b74bf2eb2c7dce03225b1993a03f747700c74cc75b6b1dea9712bdc3cdfa
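The token and hash in the join command are specific to each cluster and are printed at the end of `kubeadm init`. Bootstrap tokens expire (by default after 24 hours), so a copied command may stop working. The sketch below checks that a token has the expected shape before it is used; `is_bootstrap_token` is a hypothetical helper name, and it validates only the format, not whether the token is still valid.

```shell
# Succeeds if $1 has the kubeadm bootstrap-token shape:
# 6 characters, a dot, 16 characters (lowercase letters and digits only).
is_bootstrap_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

is_bootstrap_token "x818xi.jzxi4utggs081p71" && echo "token format looks valid"

# If the token has expired, a fresh join command can be printed on the master node with:
#   kubeadm token create --print-join-command
```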

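4. Install the network plugin (master node)

# Create a directory

mkdir flannel && cd flannel

# Download the manifest

curl -O https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# kube-flannel.yml references an image that has to be pulled; here it is pulled in advance

docker pull quay.io/coreos/flannel:v0.14.0-rc1

# Deploy the flannel network plugin

kubectl apply -f kube-flannel.yml

# After a short while, check the cluster nodes; they should now be in the Ready state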

kubectl get nodes

[root@k8s-master flannel]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 21m v1.17.4

k8s-node1 Ready <none> 4m37s v1.17.4

k8s-node2 Ready <none> 17m v1.17.4
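Instead of re-running `kubectl get nodes` by hand, the output can be filtered for nodes that are not ready yet. This is a sketch that assumes the default column layout shown above (STATUS in the second column); the function name `not_ready_nodes` is illustrative, and the sample node names in the demonstration are made up.

```shell
# Reads `kubectl get nodes` output on stdin and prints the names of all nodes
# whose STATUS column is not exactly "Ready" (the header line is skipped).
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# On the master node:
#   kubectl get nodes | not_ready_nodes
# Illustration with sample input:
printf '%s\n' \
    'NAME STATUS ROLES AGE VERSION' \
    'k8s-master Ready master 21m v1.17.4' \
    'k8s-node1 NotReady <none> 10s v1.17.4' | not_ready_nodes
```

An empty result means every node reports Ready. Note that a cordoned node shows a status such as `Ready,SchedulingDisabled` and is therefore listed by this filter as well.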

Done. However, raw.githubusercontent.com is blocked, so the contents of kube-flannel.yml are included here.

cat <<EOF > kube-flannel.yml
---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
    - configMap
    - secret
    - emptyDir
    - hostPath
  allowedHostPaths:
    - pathPrefix: "/etc/cni/net.d"
    - pathPrefix: "/etc/kube-flannel"
    - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
    - min: 0
      max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'

---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups: ['extensions']
    resources: ['podsecuritypolicies']
    verbs: ['use']
    resourceNames: ['psp.flannel.unprivileged']
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
  - kind: ServiceAccount
    name: flannel
    namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - amd64
      hostNetwork: true
      tolerations:
        - operator: Exists
          effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
        - name: install-cni
          image: quay.io/coreos/flannel:v0.11.0-amd64
          command:
            - cp
          args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
          volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      containers:
        - name: kube-flannel
          image: quay.io/coreos/flannel:v0.11.0-amd64
          command:
            - /opt/bin/flanneld
          args:
            - --ip-masq
            - --kube-subnet-mgr
          resources:
            requests:
              cpu: "100m"
              memory: "50Mi"
            limits:
              cpu: "100m"
              memory: "50Mi"
          securityContext:
            privileged: false
            capabilities:
              add: ["NET_ADMIN"]
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - arm64
      hostNetwork: true
      tolerations:
        - operator: Exists
          effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
        - name: install-cni
          image: quay.io/coreos/flannel:v0.11.0-arm64
          command:
            - cp
          args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
          volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      containers:
        - name: kube-flannel
          image: quay.io/coreos/flannel:v0.11.0-arm64
          command:
            - /opt/bin/flanneld
          args:
            - --ip-masq
            - --kube-subnet-mgr
          resources:
            requests:
              cpu: "100m"
              memory: "50Mi"
            limits:
              cpu: "100m"
              memory: "50Mi"
          securityContext:
            privileged: false
            capabilities:
              add: ["NET_ADMIN"]
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - arm
      hostNetwork: true
      tolerations:
        - operator: Exists
          effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
        - name: install-cni
          image: quay.io/coreos/flannel:v0.11.0-arm
          command:
            - cp
          args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
          volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      containers:
        - name: kube-flannel
          image: quay.io/coreos/flannel:v0.11.0-arm
          command:
            - /opt/bin/flanneld
          args:
            - --ip-masq
            - --kube-subnet-mgr
          resources:
            requests:
              cpu: "100m"
              memory: "50Mi"
            limits:
              cpu: "100m"
              memory: "50Mi"
          securityContext:
            privileged: false
            capabilities:
              add: ["NET_ADMIN"]
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-ppc64le
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - ppc64le
      hostNetwork: true
      tolerations:
        - operator: Exists
          effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
        - name: install-cni
          image: quay.io/coreos/flannel:v0.11.0-ppc64le
          command:
            - cp
          args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
          volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      containers:
        - name: kube-flannel
          image: quay.io/coreos/flannel:v0.11.0-ppc64le
          command:
            - /opt/bin/flanneld
          args:
            - --ip-masq
            - --kube-subnet-mgr
          resources:
            requests:
              cpu: "100m"
              memory: "50Mi"
            limits:
              cpu: "100m"
              memory: "50Mi"
          securityContext:
            privileged: false
            capabilities:
              add: ["NET_ADMIN"]
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: beta.kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: beta.kubernetes.io/arch
                    operator: In
                    values:
                      - s390x
      hostNetwork: true
      tolerations:
        - operator: Exists
          effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
        - name: install-cni
          image: quay.io/coreos/flannel:v0.11.0-s390x
          command:
            - cp
          args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
          volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      containers:
        - name: kube-flannel
          image: quay.io/coreos/flannel:v0.11.0-s390x
          command:
            - /opt/bin/flanneld
          args:
            - --ip-masq
            - --kube-subnet-mgr
          resources:
            requests:
              cpu: "100m"
              memory: "50Mi"
            limits:
              cpu: "100m"
              memory: "50Mi"
          securityContext:
            privileged: false
            capabilities:
              add: ["NET_ADMIN"]
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
EOF

5. Verify the cluster (deploy an nginx service)

# Deploy nginx

kubectl create deployment nginx --image=nginx:1.14-alpine

# Expose the port

kubectl expose deployment nginx --port=80 --type=NodePort

# Check the pod and service status

kubectl get pod

kubectl get svc

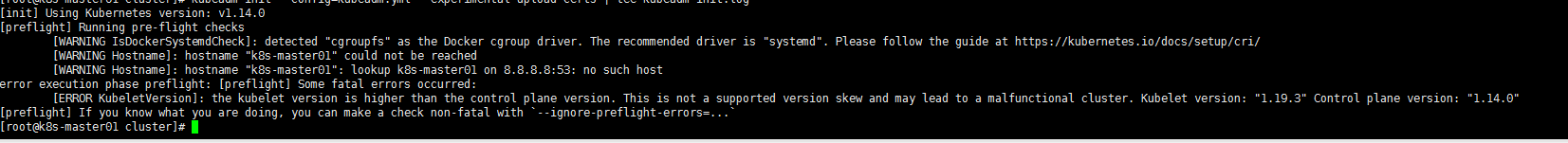
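To test the service from outside the cluster, the port assigned to the NodePort service is needed. This is a sketch that pulls it out of the `kubectl get svc` output, assuming the default column layout in which the fifth column is PORT(S) in the form `80:31234/TCP`; the helper name `service_nodeport` and the port `31234` in the sample input are illustrative only.

```shell
# Reads `kubectl get svc` output on stdin and prints the NodePort of the service named $1.
service_nodeport() {
    awk -v name="$1" '$1 == name { split($5, p, /[:\/]/); print p[2] }'
}

# On the master node:
#   port=$(kubectl get svc | service_nodeport nginx)
#   curl "http://192.168.174.128:$port"
# Illustration with sample input:
printf '%s\n' \
    'NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE' \
    'nginx NodePort 10.96.1.2 <none> 80:31234/TCP 5s' | service_nodeport nginx
```

Parsing table output is fragile; `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'` returns the same value without depending on the column layout.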

6. Fixing the Kubernetes version mismatch error

[ERROR KubeletVersion]: the kubelet version is higher than the control plane version. This is not a supported version skew and may lead to a malfunctional cluster

Cause:

The kubelet and kubeadm versions do not match.

Check the kubelet and kubeadm versions:

[root@k8s-master01 cluster]# kubelet --version

Kubernetes v1.19.3

[root@k8s-master01 cluster]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"14", GitVersion:"v1.14.0", GitCommit:"641856db18352033a0d96dbc99153fa3b27298e5", GitTreeState:"clean", BuildDate:"2019-03-25T15:51:21Z", GoVersion:"go1.12.1", Compiler:"gc", Platform:"linux/amd64"}

[root@k8s-master01 cluster]#

Solution:

Reinstall kubelet at the version that matches kubeadm.

yum -y remove kubelet

yum -y install kubelet-1.14.0 kubeadm-1.14.0 kubectl-1.14.0 --disableexcludes=kubernetes

Start the service:

systemctl enable kubelet && systemctl restart kubelet
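The mismatch can be detected before running `kubeadm init` by comparing the two version strings. The sketch below uses the outputs shown above as sample input; `major_minor` is a hypothetical helper. It flags any difference in the major.minor part, which is stricter than the actual rule (the error only states that a kubelet newer than the control plane is unsupported).

```shell
# Print the "major.minor" part of the first version string of the form v1.19.3 found on stdin.
major_minor() {
    grep -oE 'v[0-9]+\.[0-9]+\.[0-9]+' | head -n 1 | sed -E 's/^v([0-9]+\.[0-9]+)\..*/\1/'
}

# On a real node:
#   kubelet_mm=$(kubelet --version | major_minor)
#   kubeadm_mm=$(kubeadm version -o short | major_minor)
# Illustration with the outputs from this section:
kubelet_mm=$(echo 'Kubernetes v1.19.3' | major_minor)
kubeadm_mm=$(echo 'GitVersion:"v1.14.0"' | major_minor)
if [ "$kubelet_mm" != "$kubeadm_mm" ]; then
    echo "version mismatch: kubelet $kubelet_mm, kubeadm $kubeadm_mm"
fi
```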

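This article describes in detail how to build a Kubernetes (K8s) cluster, including basic environment configuration, cluster initialization, network plugin installation, and service verification.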
