Monitoring a k8s Cluster with Prometheus

I. Environment Preparation

1. Environment information

| Node name | IP address |
|---|---|
| k8s-master1 | 192.168.227.131 |
| k8s-node1 | 192.168.227.132 |
| k8s-node2 | 192.168.227.133 |

2. Hardware environment

| Item | Description |
|---|---|
| Office PC | Windows 10 |
| Virtual machine | VMware® Workstation 15 Pro 15.5.1 build-15018445 |
| Operating system | CentOS Linux 7 (Core) |
| Linux kernel | CentOS Linux (5.4.123-1.el7.elrepo.x86_64) 7 (Core) |
| CPU | At least 2 cores (this k8s version requires at least 2 CPUs, otherwise kubeadm init fails) |
| Memory | 2 GB or more |

II. Installing the Cloud-Native k8s Cluster

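- For installing the k8s cluster, see my other blog post. It is a step-by-step, copy-and-paste procedure: once the hardware is ready, the cluster can be up in about half an hour.

Link: kubernetes(k8s)在centos7上快速安装指导 (Quick installation guide for kubernetes (k8s) on CentOS 7).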

III. Deploying Prometheus

1. Prepare GlusterFS shared storage

Note: Prometheus needs shared storage to persist the monitoring data it collects.

I use GlusterFS here.

For installing and using GlusterFS on CentOS 7, see: https://www.cnblogs.com/lingfenglian/p/11731849.html

1) Create the volume directories

- On each of the three GlusterFS nodes, create the directory that backs the volume

mkdir -p /data/k8s/volprome01

2) Create the volume

gluster volume create volprome01 replica 3 k8s-master1:/data/k8s/volprome01 k8s-node1:/data/k8s/volprome01 k8s-node2:/data/k8s/volprome01 force

3) Start the volume

gluster volume start volprome01

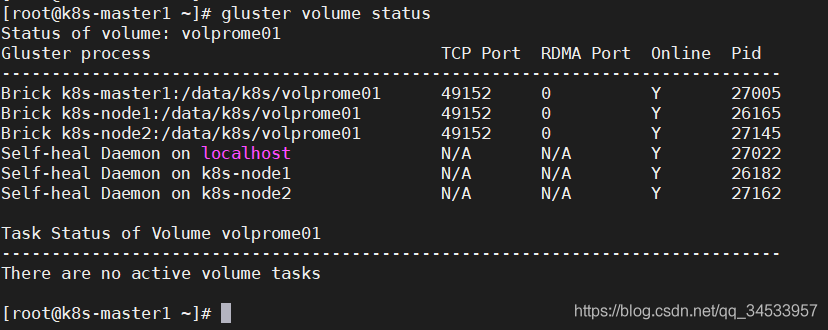

4) Check the volume status

gluster volume status

2. Create the namespace for Prometheus

kubectl create ns prome-system

Note: all Prometheus-related resources are installed in the prome-system namespace.

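3. Install node-exporter

node-exporter is deployed as a DaemonSet so that it runs on every server in the k8s cluster; it serves the host-level metrics of the node it runs on.

The YAML for deploying node-exporter is below; adjust the namespace to your environment.

File name: node-exporter-deploy.yaml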

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: prome-system
  labels:
    name: node-exporter
spec:
  selector:
    matchLabels:
      name: node-exporter
  template:
    metadata:
      labels:
        name: node-exporter
    spec:
      hostPID: true
      hostIPC: true
      hostNetwork: true
      containers:
      - name: node-exporter
        image: prom/node-exporter:v0.16.0
        ports:
        - containerPort: 9100
        resources:
          requests:
            cpu: 0.15
        securityContext:
          privileged: true
        args:
        - --path.procfs
        - /host/proc
        - --path.sysfs
        - /host/sys
        - --collector.filesystem.ignored-mount-points
        # args are passed without a shell, so the regex must not carry extra double quotes
        - '^/(sys|proc|dev|host|etc)($|/)'
        volumeMounts:
        - name: dev
          mountPath: /host/dev
        - name: proc
          mountPath: /host/proc
        - name: sys
          mountPath: /host/sys
        - name: rootfs
          mountPath: /rootfs
      tolerations:
      - key: "node-role.kubernetes.io/master"
        operator: "Exists"
        effect: "NoSchedule"
      volumes:
      - name: proc
        hostPath:
          path: /proc
      - name: dev
        hostPath:
          path: /dev
      - name: sys
        hostPath:
          path: /sys
      - name: rootfs
        hostPath:
          path: /

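4. Install kube-state-metrics

kube-state-metrics is an exporter that collects k8s resource data for Prometheus. It covers most built-in k8s resources, such as pods, deployments and services. It also exposes metrics about itself, mainly the number of resources collected and the number of errors that occurred during collection.

Install kube-state-metrics by following the official documentation.

Link: Kubernetes Deployment.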

[root@k8s-master1 kube-state-metricsinstall]# git clone https://github.com/kubernetes/kube-state-metrics.git
[root@k8s-master1 kube-state-metricsinstall]# cd kube-state-metrics/examples/standard/
[root@k8s-master1 standard]# kubectl create -f .
clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created
clusterrole.rbac.authorization.k8s.io/kube-state-metrics created
deployment.apps/kube-state-metrics created
serviceaccount/kube-state-metrics created
service/kube-state-metrics created

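5. Install Prometheus

1) Create the GlusterFS Endpoints

File name: glusterfs-endpoints-prome.yaml

Note: the storage size is set in the PV/PVC in the next step; adjust it to your needs. I set a short Prometheus retention time, so the storage I allocate is small as well.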

apiVersion: v1
kind: Endpoints
metadata:
  name: glusterfs-cluster
  namespace: prome-system
subsets:
- addresses:
  - ip: 192.168.227.131
  - ip: 192.168.227.132
  - ip: 192.168.227.133
  ports:
  - port: 49153
    protocol: TCP

2) Create the PV/PVC

File name: prometheus-volume.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: prometheus
spec:
  capacity:
    storage: 2Gi
  accessModes:
  - ReadWriteMany
  glusterfs:
    endpoints: glusterfs-cluster
    path: volprome01
    readOnly: false
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: prometheus
  namespace: prome-system
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 2Gi
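To pick a PV size that fits the retention time, the Prometheus storage documentation gives a rule of thumb: needed disk space is roughly retention time (seconds) x ingested samples per second x bytes per sample, with about 1 to 2 bytes per sample. The sketch below only applies that formula; the ingestion rate of 10,000 samples/s is a hypothetical value (read your real rate from Prometheus itself), and the result ignores WAL and head-block overhead, so treat it as a lower bound.

```python
# Rough TSDB disk-size estimate using the rule of thumb from the Prometheus storage docs:
#   needed_disk_space = retention_time_seconds * ingested_samples_per_second * bytes_per_sample
# The ingestion rate in __main__ is a hypothetical example, not a measurement.

UNITS = {"s": 1, "m": 60, "h": 3600, "d": 86400, "w": 604800, "y": 31536000}


def parse_retention(retention: str) -> int:
    """Convert a simple retention string such as '2h' or '15d' into seconds."""
    value, unit = retention[:-1], retention[-1]
    if unit not in UNITS or not value.isdigit():
        raise ValueError(f"unsupported retention format: {retention!r}")
    return int(value) * UNITS[unit]


def estimate_disk_bytes(retention: str, samples_per_second: float,
                        bytes_per_sample: float = 2.0) -> float:
    """Estimate block storage; the docs quote roughly 1-2 bytes per sample."""
    return parse_retention(retention) * samples_per_second * bytes_per_sample


if __name__ == "__main__":
    # 2h matches --storage.tsdb.retention in this post's Deployment.
    needed = estimate_disk_bytes("2h", 10_000)
    print(f"~{needed / 1024 ** 3:.2f} GiB")
```

With a 2h retention even a fairly busy cluster stays far below the 2Gi requested here, which is why the PV can be this small.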

3) Create the RBAC permissions

File name: prometheus-rbac.yaml

Note: Prometheus needs the corresponding RBAC permissions before it can read k8s resources and their metrics.

apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: prome-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
- apiGroups:
  - ""
  resources:
  - nodes
  - services
  - endpoints
  - pods
  - nodes/proxy
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - configmaps
  - nodes/metrics
  verbs:
  - get
- nonResourceURLs:
  - /metrics
  verbs:
  - get
---
# rbac.authorization.k8s.io/v1beta1 was removed in k8s 1.22; v1 works on all current versions
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: prome-system

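4) Create the Prometheus ConfigMap

File name: prometheus-configmap.yaml

Note: the prometheus.yml configuration file is stored in a k8s ConfigMap, which the Deployment then mounts into the Prometheus pod.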

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: prome-system
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      scrape_timeout: 15s
    scrape_configs:
    - job_name: 'prometheus'
      static_configs:
      - targets: ['localhost:9090']
    - job_name: 'kubernetes-nodes'
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - source_labels: [__address__]
        regex: '(.*):10250'
        replacement: '${1}:9100'
        target_label: __address__
        action: replace
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
    - job_name: 'kubernetes-kubelet'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
    - job_name: 'container-pod-cadvisor'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
    - job_name: 'kubernetes-apiservers'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https
    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name

5) Create the Prometheus Deployment

File name: prometheus-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus
  namespace: prome-system
  labels:
    app: prometheus
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      serviceAccountName: prometheus
      containers:
      - image: prom/prometheus:v2.4.3
        imagePullPolicy: IfNotPresent
        name: prometheus
        command:
        - "/bin/prometheus"
        args:
        - "--config.file=/etc/prometheus/prometheus.yml"
        - "--storage.tsdb.path=/prometheus"
        - "--storage.tsdb.retention=2h"
        - "--web.enable-admin-api"  # enables the admin HTTP API, which includes operations such as deleting time series
        - "--web.enable-lifecycle"  # enables hot reload: an HTTP POST to localhost:9090/-/reload applies a new configuration immediately
        ports:
        - containerPort: 9090
          protocol: TCP
          name: http
        volumeMounts:
        - mountPath: "/prometheus"
          subPath: prometheus
          name: data
        - mountPath: "/etc/prometheus"
          name: config-volume
        resources:
          requests:
            cpu: 100m
            memory: 512Mi
          limits:
            cpu: 100m
            memory: 512Mi
      securityContext:
        runAsUser: 0
      volumes:
      - name: data
        persistentVolumeClaim:
          claimName: prometheus
      - configMap:
          name: prometheus-config
        name: config-volume
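Because the Deployment enables --web.enable-lifecycle, a changed prometheus.yml can be applied without restarting the pod. The /-/reload endpoint only accepts POST (or PUT), so opening it in a browser does not work. The sketch below sends that POST with the standard library; the base URL is this post's NodePort address and is only an example.

```python
# Sketch: trigger a Prometheus configuration reload through the lifecycle API.
# Requires --web.enable-lifecycle; /-/reload accepts POST or PUT, not GET.
# The URL in __main__ is this post's NodePort address, used as an example.
import urllib.request


def build_reload_request(base_url: str) -> urllib.request.Request:
    """Build a POST request to <base_url>/-/reload."""
    return urllib.request.Request(base_url.rstrip("/") + "/-/reload",
                                  data=b"", method="POST")


def reload_prometheus(base_url: str, timeout: float = 10.0) -> int:
    """Send the reload request and return the HTTP status code."""
    with urllib.request.urlopen(build_reload_request(base_url),
                                timeout=timeout) as resp:
        return resp.status


if __name__ == "__main__":
    print(reload_prometheus("http://192.168.227.131:30001"))
```

After `kubectl apply -f prometheus-configmap.yaml`, the kubelet updates the mounted file only after a short sync delay, so wait briefly before triggering the reload; otherwise Prometheus may reload the old file.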

6) Create the Service for accessing Prometheus

File name: prometheus-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: prome-system
  labels:
    app: prometheus
spec:
  selector:
    app: prometheus
  type: NodePort
  ports:
  - nodePort: 30001
    name: web
    port: 9090
    targetPort: http

7) Deploy Prometheus

- Run the following commands to deploy Prometheus

Note: put the six files above in the same directory; I used a directory named prometheus-install.

cd /root/prometheus-install

kubectl create -f .

8) Check the deployment result

- Check the status of the prometheus and node-exporter pods

[root@k8s-master1 prometheus-install]# kubectl get pods -n prome-system
NAME                          READY   STATUS    RESTARTS   AGE
node-exporter-765w8           1/1     Running   0          127m
node-exporter-kq9k6           1/1     Running   0          127m
node-exporter-tpg4t           1/1     Running   0          127m
prometheus-8446c7bbc7-qtbv2   1/1     Running   0          122m
[root@k8s-master1 prometheus-install]#

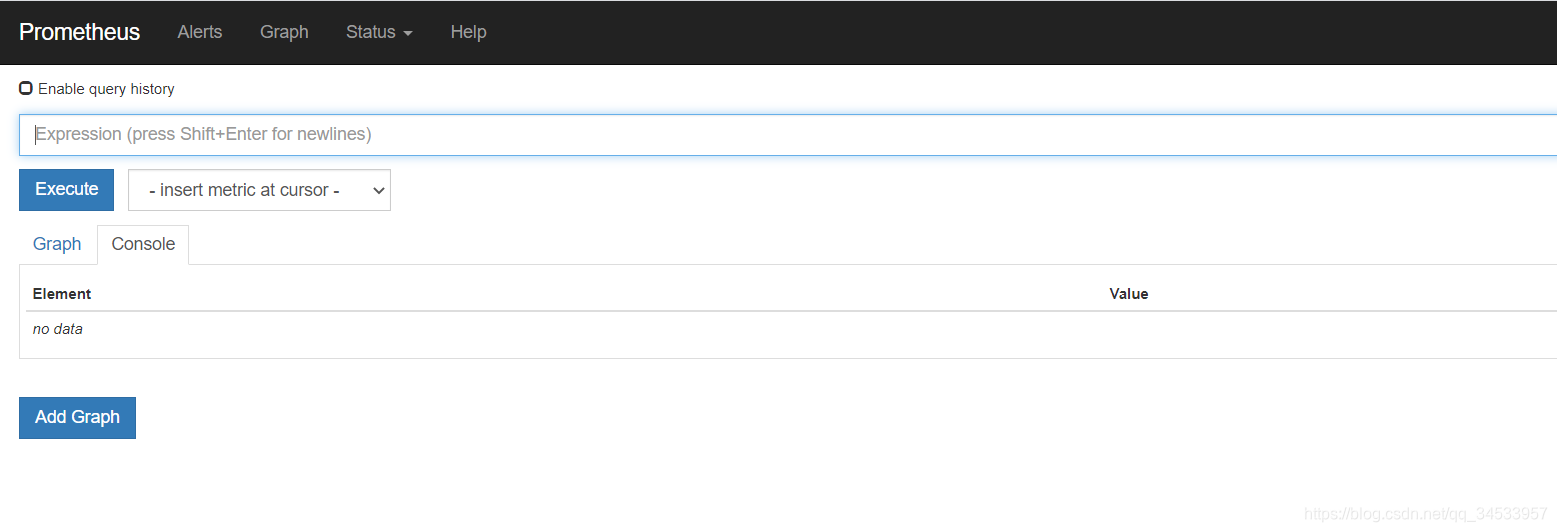

- Open the Prometheus UI

http://192.168.227.131:30001

Note: the IP can be that of any k8s node; the port is the nodePort set in prometheus-svc.yaml.

If the Prometheus UI loads, Prometheus has been deployed successfully.
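Besides the UI, the Prometheus HTTP API endpoint /api/v1/targets shows whether each job actually found and scraped its targets. The sketch below fetches that endpoint and counts healthy targets per job. It assumes the NodePort address from this post and relies only on the `labels` and `health` fields of each active target; the summarizing function is illustrative, not part of Prometheus.

```python
# Sketch: summarize scrape-target health per job via the Prometheus HTTP API.
# The URL in __main__ is this post's NodePort address, used as an example.
import json
import urllib.request
from collections import defaultdict


def fetch_targets(base_url: str, timeout: float = 10.0) -> dict:
    """GET <base_url>/api/v1/targets and return the decoded JSON payload."""
    url = base_url.rstrip("/") + "/api/v1/targets"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def summarize_targets(payload: dict) -> dict:
    """Map job name -> (targets up, total targets) for all active targets."""
    counts = defaultdict(lambda: [0, 0])
    for target in payload["data"]["activeTargets"]:
        job = target["labels"].get("job", "<unknown>")
        counts[job][1] += 1
        if target["health"] == "up":
            counts[job][0] += 1
    return {job: tuple(c) for job, c in counts.items()}


if __name__ == "__main__":
    summary = summarize_targets(fetch_targets("http://192.168.227.131:30001"))
    for job, (up, total) in sorted(summary.items()):
        print(f"{job}: {up}/{total} up")
```

A job that reports 0 targets usually points to a service-discovery or RBAC problem. Targets that are present but down usually point to a port, TLS, or firewall problem; in that case the `lastError` field of each target explains the failure.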

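IV. How Prometheus Scrape Jobs Map to k8s Cluster Resources

In Kubernetes, Prometheus integrates with the Kubernetes API and mainly supports five service-discovery roles: node, service, pod, endpoints and ingress.

1) Monitoring the k8s cluster nodes

Prometheus collects node metrics through node-exporter. As the name suggests, node_exporter collects the runtime metrics of a server node and supports almost all common monitoring points, such as conntrack, cpu, diskstats, filesystem, loadavg, meminfo and netstat. The job uses the node role of kubernetes_sd_configs. For this role, the discovered __address__ points at the kubelet port 10250, so the relabel rule rewrites the port to 9100, where node-exporter listens on the host network.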

- job_name: 'kubernetes-nodes'
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  - source_labels: [__address__]
    regex: '(.*):10250'
    replacement: '${1}:9100'
    target_label: __address__
    action: replace
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
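The replace rule above can be simulated in a few lines. Prometheus anchors relabel regexes at both ends, which `re.fullmatch` mimics, and `${1}` in the replacement refers to the first capture group. This is a simplified model of the replace action for a single source label, not Prometheus's own code. The node IPs are from this post's environment table.

```python
# Sketch: simulate a Prometheus 'replace' relabel rule on one source label.
# Prometheus regexes are fully anchored; re.fullmatch mimics that here.
import re


def relabel_replace(value: str, regex: str, replacement: str) -> str:
    """Return the rewritten value, or the original value if the regex does not match."""
    match = re.fullmatch(regex, value)
    if match is None:
        return value  # no match: the target label is left unchanged
    # Translate Prometheus-style ${1} into Python's \g<1> group reference.
    template = re.sub(r"\$\{(\d+)\}", r"\\g<\1>", replacement)
    return match.expand(template)


if __name__ == "__main__":
    for addr in ["192.168.227.131:10250", "192.168.227.132:10250"]:
        print(addr, "->", relabel_replace(addr, r"(.*):10250", "${1}:9100"))
```

The kube-proxy job later in this post uses exactly the same rule with `${1}:10249`, so only the target port differs.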

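2) Container monitoring

Prometheus collects container metrics through cAdvisor. cAdvisor is already built into the kubelet, so it does not need to be installed separately. Its data path is /api/v1/nodes//proxy/metrics. Every node runs a kubelet and therefore exposes cAdvisor metrics, so this job also uses the node role of kubernetes_sd_configs. The relabel rules set __address__ to the API server (kubernetes.default.svc:443) and build __metrics_path__ from the node name, so each node's cAdvisor metrics are fetched through the API server proxy. (In the ConfigMap above, this job is named container-pod-cadvisor.)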

- job_name: 'kubernetes-cadvisor'
  kubernetes_sd_configs:
  - role: node
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - target_label: __address__
    replacement: kubernetes.default.svc:443
  - source_labels: [__meta_kubernetes_node_name]
    regex: (.+)
    target_label: __metrics_path__
    replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
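After relabeling, Prometheus scrapes `<scheme>://<__address__><__metrics_path__>`. The sketch below reproduces the URL this job ends up with for each node, which shows that all cAdvisor scrapes go to the API server rather than to the nodes directly. The node names are this post's hostnames; the helper function is illustrative only.

```python
# Sketch: the scrape URL produced by the cAdvisor job's relabel rules for one node.
# Prometheus builds <scheme>://<__address__><__metrics_path__> after relabeling.


def cadvisor_scrape_url(node_name: str,
                        apiserver: str = "kubernetes.default.svc:443",
                        scheme: str = "https") -> str:
    """Return the API-server proxy URL for one node's cAdvisor metrics."""
    metrics_path = f"/api/v1/nodes/{node_name}/proxy/metrics/cadvisor"
    return f"{scheme}://{apiserver}{metrics_path}"


if __name__ == "__main__":
    for node in ["k8s-master1", "k8s-node1", "k8s-node2"]:
        print(cadvisor_scrape_url(node))
```

The same path can be checked by hand from the master with `kubectl get --raw /api/v1/nodes/k8s-node1/proxy/metrics/cadvisor`. If that works for your user but the Prometheus target is down, compare the ServiceAccount's RBAC rules (nodes/proxy).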

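3) Collecting pod metrics through service endpoints discovery

This job uses the endpoints role of kubernetes_sd_configs.

kube-state-metrics is the exporter that collects k8s resource data for Prometheus.

Note: the kube-state-metrics deployment creates a Service named kube-state-metrics. If that Service carries the annotation prometheus.io/scrape: "true", the job_name: 'kubernetes-service-endpoints' job below discovers it automatically and scrapes the metrics kube-state-metrics provides. Check that the Service in the version you cloned actually has this annotation. If it does not, add it, for example:

kubectl -n kube-system annotate svc kube-state-metrics prometheus.io/scrape=true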

- job_name: 'kubernetes-service-endpoints'
  kubernetes_sd_configs:
  - role: endpoints
  relabel_configs:
  - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
    action: keep
    regex: true
  - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
    action: replace
    target_label: __scheme__
    regex: (https?)
  - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
    action: replace
    target_label: __address__
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
  - action: labelmap
    regex: __meta_kubernetes_service_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_service_name]
    action: replace
    target_label: kubernetes_name
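The relabel chain above is easier to follow when applied to one discovered endpoint. The sketch below walks through the rules in order: keep, scheme, path, port swap (source labels joined with `;`), labelmap, and the namespace and name copies. It is a simplified model, not Prometheus's implementation. The endpoint metadata in the example is hypothetical, shaped like a kube-state-metrics endpoint with prometheus.io/scrape and prometheus.io/port annotations.

```python
# Sketch: apply the kubernetes-service-endpoints relabel rules to one target.
# Simplified model; regexes are fully anchored, as in Prometheus.
import re
from typing import Optional

PREFIX = "__meta_kubernetes_service_annotation_prometheus_io_"


def relabel_endpoint(meta: dict) -> Optional[dict]:
    """Return the relabeled labels, or None if the keep rule drops the target."""
    labels = dict(meta)
    # keep: prometheus.io/scrape must be exactly "true".
    if not re.fullmatch(r"true", labels.get(PREFIX + "scrape", "")):
        return None
    # replace: prometheus.io/scheme (http or https) -> __scheme__.
    scheme = labels.get(PREFIX + "scheme", "")
    if re.fullmatch(r"https?", scheme):
        labels["__scheme__"] = scheme
    # replace: prometheus.io/path -> __metrics_path__.
    path = labels.get(PREFIX + "path", "")
    if re.fullmatch(r".+", path):
        labels["__metrics_path__"] = path
    # replace: join source labels with ';', keep the host, take the port from prometheus.io/port.
    joined = labels["__address__"] + ";" + labels.get(PREFIX + "port", "")
    m = re.fullmatch(r"([^:]+)(?::\d+)?;(\d+)", joined)
    if m:
        labels["__address__"] = f"{m.group(1)}:{m.group(2)}"
    # labelmap: __meta_kubernetes_service_label_<name> -> <name>.
    for key, value in list(labels.items()):
        lm = re.fullmatch(r"__meta_kubernetes_service_label_(.+)", key)
        if lm:
            labels[lm.group(1)] = value
    # replace: copy namespace and service name (empty values are not kept).
    for src, dst in [("__meta_kubernetes_namespace", "kubernetes_namespace"),
                     ("__meta_kubernetes_service_name", "kubernetes_name")]:
        if labels.get(src):
            labels[dst] = labels[src]
    return labels


if __name__ == "__main__":
    example = {  # hypothetical discovered endpoint
        "__address__": "10.244.1.5:8081",
        "__meta_kubernetes_namespace": "kube-system",
        "__meta_kubernetes_service_name": "kube-state-metrics",
        "__meta_kubernetes_service_label_app": "kube-state-metrics",
        PREFIX + "scrape": "true",
        PREFIX + "port": "8080",
    }
    out = relabel_endpoint(example)
    print(out["__address__"], out["kubernetes_namespace"], out["kubernetes_name"])
```

Without a prometheus.io/port annotation, the joined string ends in `;` and the port regex does not match, so every port of the Service stays a separate target.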

4) Monitoring k8s components

The kube-apiserver job below uses the endpoints role of kubernetes_sd_configs.

- kube-apiserver monitoring

- job_name: kubernetes-apiservers
  scrape_interval: 30s
  scrape_timeout: 20s
  metrics_path: /metrics
  scheme: https
  kubernetes_sd_configs:
  - role: endpoints
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
    insecure_skip_verify: false
  relabel_configs:
  - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
    separator: ;
    regex: default;kubernetes;https
    replacement: $1
    action: keep
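The keep rule works by joining the three source label values with the separator `;` and discarding every endpoint whose joined string does not fully match the regex. Since the endpoints role discovers every Endpoints object in the cluster, this is what narrows the job down to the `kubernetes` Service in `default`. The sketch below models that filter; the discovered endpoints in the example (including port 6443) are hypothetical data.

```python
# Sketch: model of a Prometheus 'keep' rule over several source labels.
# Values are joined with the separator; the anchored regex must match the whole string.
import re
from typing import Iterable, List

SOURCE = ["__meta_kubernetes_namespace", "__meta_kubernetes_service_name",
          "__meta_kubernetes_endpoint_port_name"]


def keep(targets: Iterable[dict], source_labels: List[str], regex: str,
         separator: str = ";") -> List[dict]:
    """Return only the targets whose joined source label values fully match regex."""
    kept = []
    for labels in targets:
        value = separator.join(labels.get(name, "") for name in source_labels)
        if re.fullmatch(regex, value):
            kept.append(labels)
    return kept


if __name__ == "__main__":
    discovered = [  # hypothetical discovery results
        {"__address__": "192.168.227.131:6443", "__meta_kubernetes_namespace": "default",
         "__meta_kubernetes_service_name": "kubernetes",
         "__meta_kubernetes_endpoint_port_name": "https"},
        {"__address__": "10.244.0.2:53", "__meta_kubernetes_namespace": "kube-system",
         "__meta_kubernetes_service_name": "kube-dns",
         "__meta_kubernetes_endpoint_port_name": "dns"},
    ]
    for target in keep(discovered, SOURCE, "default;kubernetes;https"):
        print(target["__address__"])
```

The etcd job uses the same mechanism with the regex `kube-system;etcd;https`, which is why the etcd Service must name its port `https`.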

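- etcd monitoring

This job also uses the endpoints role of kubernetes_sd_configs.

etcd requires HTTPS with client certificates, so the etcd certificates must be stored in a Secret and mounted into the Prometheus pod. Prometheus then presents them when it scrapes etcd.

In a k8s cluster installed with kubeadm, the etcd certificates are in /etc/kubernetes/pki/etcd by default.

kubectl -n prome-system create secret generic etcd-certs --from-file=/etc/kubernetes/pki/etcd/healthcheck-client.crt --from-file=/etc/kubernetes/pki/etcd/healthcheck-client.key --from-file=/etc/kubernetes/pki/etcd/ca.crt

Mount the Secret into the Prometheus pod: edit the Deployment and add etcd-certs to the container's volumeMounts and to the pod's volumes.

kubectl edit deploy -n prome-system prometheus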

        volumeMounts:
        - mountPath: "/prometheus"
          subPath: prometheus
          name: data
        - mountPath: "/etc/prometheus"
          name: config-volume
        - mountPath: /etc/kubernetes/pki/etcd
          name: etcd-certs
          readOnly: true

      volumes:
      - name: data
        persistentVolumeClaim:
          claimName: prometheus
      - configMap:
          name: prometheus-config
        name: config-volume
      - name: etcd-certs
        secret:
          defaultMode: 420
          secretName: etcd-certs

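Next, add a Service for etcd in kube-system (etcd-svc.yaml). It selects the kubeadm etcd static pods by their component: etcd label and gives Prometheus an Endpoints object to discover: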

apiVersion: v1
kind: Service
metadata:
  name: etcd
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 2379
    protocol: TCP
    targetPort: 2379
  type: ClusterIP
  selector:
    component: etcd

The Prometheus scrape job is as follows:

- job_name: 'etcd'
  kubernetes_sd_configs:
  - role: endpoints
  scheme: https
  tls_config:
    cert_file: /etc/kubernetes/pki/etcd/healthcheck-client.crt
    key_file: /etc/kubernetes/pki/etcd/healthcheck-client.key
    insecure_skip_verify: true
  relabel_configs:
  - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
    action: keep
    regex: kube-system;etcd;https
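If the etcd target stays down, it helps to test the client certificates outside Prometheus first. The sketch below fetches etcd's /metrics over mutual TLS with the same healthcheck-client certificate and reads the `etcd_server_has_leader` gauge. The assumptions are that it runs on the master with kubeadm's default certificate paths and that etcd answers on https://192.168.227.131:2379. Unlike the job above, which sets insecure_skip_verify, this sketch verifies the server certificate against ca.crt.

```python
# Sketch: scrape etcd /metrics with a client certificate (mutual TLS) and
# check etcd_server_has_leader. Paths are kubeadm defaults; the URL is an assumption.
import ssl
import urllib.request

PKI = "/etc/kubernetes/pki/etcd"


def fetch_etcd_metrics(url: str, ca: str, cert: str, key: str,
                       timeout: float = 10.0) -> str:
    """GET an etcd metrics URL using mutual TLS and return the response body."""
    ctx = ssl.create_default_context(cafile=ca)
    ctx.load_cert_chain(certfile=cert, keyfile=key)
    with urllib.request.urlopen(url, context=ctx, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def has_leader(metrics_text: str) -> bool:
    """Return True if the etcd_server_has_leader gauge reports 1."""
    for line in metrics_text.splitlines():
        if line.startswith("etcd_server_has_leader "):
            return float(line.split()[1]) == 1.0
    return False


if __name__ == "__main__":
    text = fetch_etcd_metrics("https://192.168.227.131:2379/metrics",
                              ca=f"{PKI}/ca.crt",
                              cert=f"{PKI}/healthcheck-client.crt",
                              key=f"{PKI}/healthcheck-client.key")
    print("has leader:", has_leader(text))
```

A TLS handshake error here means the Secret mounted into Prometheus will fail the same way, so fix the certificates before looking at the scrape job.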

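- kube-proxy monitoring

kube-proxy runs on every node of the k8s cluster, so this job uses the node role of kubernetes_sd_configs.

After installation, the default kube-proxy metrics address is:

metricsBindAddress: 127.0.0.1:10249

Change it to 0.0.0.0:10249 so that Prometheus can reach it from outside the node:

kubectl edit cm -n kube-system kube-proxy

The running kube-proxy pods only pick up the new setting after they are recreated, for example:

kubectl -n kube-system delete pod -l k8s-app=kube-proxy

The Prometheus scrape job is as follows: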

- job_name: 'kube-proxy'
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  - source_labels: [__address__]
    regex: '(.*):10250'
    replacement: '${1}:10249'
    target_label: __address__
    action: replace
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
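Once kube-proxy listens on 0.0.0.0:10249, each node should serve metrics at the address this job builds. The sketch below probes those URLs from another machine. It assumes the node IPs from this post's environment table and plain HTTP on port 10249. A node that still reports unreachable is usually running a kube-proxy pod that was not recreated after the ConfigMap change.

```python
# Sketch: probe http://<node-ip>:10249/metrics on each node to confirm that
# kube-proxy metrics are reachable from outside the node.
# Node IPs are from this post's environment table.
import urllib.request


def kube_proxy_metrics_url(node_ip: str, port: int = 10249) -> str:
    """Return the metrics URL the kube-proxy job would scrape for one node."""
    return f"http://{node_ip}:{port}/metrics"


def is_reachable(url: str, timeout: float = 3.0) -> bool:
    """Return True if the URL answers HTTP 200 within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:  # URLError, connection refused and timeouts all derive from OSError
        return False


if __name__ == "__main__":
    for ip in ["192.168.227.131", "192.168.227.132", "192.168.227.133"]:
        url = kube_proxy_metrics_url(ip)
        print(url, "OK" if is_reachable(url) else "unreachable")
```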
