RocketMQ Storage Files

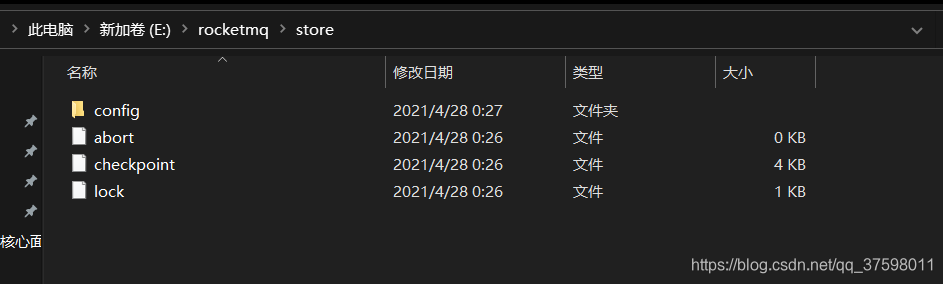

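RocketMQ stores its data under ${ROCKET_HOME}/store.

The main files and directories under the storage path are:

1) commitlog: the message storage directory.

2) config: configuration data kept while the Broker runs, mainly the following files.

- consumerFilter.json: message filter information for topics.

- consumerOffset.json: consumption progress in clustering consumption mode.

- delayOffset.json: pull progress of the delayed message queues.

- subscriptionGroup.json: configuration of consumer groups.

- topics.json: topic configuration attributes.

3) consumequeue: the consume queue storage directory.

4) index: the message index file storage directory.

5) abort: if the abort file exists, the Broker was not shut down normally. The file is created at startup by default and deleted before a normal exit.

6) checkpoint: the checkpoint file. It stores the timestamp of the last commitlog flush, the last consumequeue flush, and the last index file flush.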

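CommitLog files: the files in this directory store the messages themselves. Messages vary in length.

ConsumeQueue files: to support the lookups that message consumption requires, RocketMQ provides consume queue files (consumequeue). They can be viewed as an "index" over the CommitLog for consumption. The first-level directory under consumequeue is the topic, and the second-level directory is the topic's message queue.

Index files: RocketMQ builds a hash index over messages. Like a HashMap, the design has two basic parts: hash slots, and a linked list structure for entries whose hashes collide.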
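To make the "hash slots plus collision chain" idea concrete, here is a minimal in-memory sketch. It is not RocketMQ's IndexFile: the class name, the array-based layout, and the slot count are assumptions chosen for illustration. Each slot holds the position of the most recent entry, and each entry points back to the previous entry that landed in the same slot.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy hash index: a fixed slot table plus a backward-linked chain of entries per slot. */
class HashSlotIndexSketch {
    private final int[] slots;        // slot -> position of the newest entry in that slot (0 = empty)
    private final int[] keyHashes;    // entry fields, positions are 1-based
    private final long[] phyOffsets;
    private final int[] prevIndexes;  // position of the previous entry in the same slot (0 = none)
    private int entryCount = 0;

    HashSlotIndexSketch(int slotNum, int maxEntries) {
        slots = new int[slotNum];
        keyHashes = new int[maxEntries + 1];
        phyOffsets = new long[maxEntries + 1];
        prevIndexes = new int[maxEntries + 1];
    }

    private static int hash(String key) {
        return key.hashCode() & 0x7fffffff; // keep the hash non-negative
    }

    /** Appends an entry; returns false when the index is full. */
    boolean put(String key, long phyOffset) {
        if (entryCount >= keyHashes.length - 1) {
            return false;
        }
        int h = hash(key);
        int slot = h % slots.length;
        int pos = ++entryCount;
        keyHashes[pos] = h;
        phyOffsets[pos] = phyOffset;
        prevIndexes[pos] = slots[slot]; // link to the older entry in this slot
        slots[slot] = pos;              // the slot now points at the newest entry
        return true;
    }

    /** Walks the chain for the key's slot, newest entry first. */
    List<Long> lookup(String key) {
        int h = hash(key);
        List<Long> result = new ArrayList<>();
        for (int pos = slots[h % slots.length]; pos != 0; pos = prevIndexes[pos]) {
            if (keyHashes[pos] == h) {
                result.add(phyOffsets[pos]);
            }
        }
        return result;
    }
}
```

Only the hash is stored, not the key, so two different keys with the same hash are indistinguishable here; a caller would have to confirm the key against the message it reads back.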

checkpoint file: records the flush time points of the CommitLog, ConsumeQueue, and Index files. The file has a fixed length of 4 KB.

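Updating the consume queue and index files in near real time

The consume queue files and the message attribute index files are both built from the CommitLog. Once a producer's message has been stored in the CommitLog, the ConsumeQueue and IndexFile must be updated promptly; otherwise the message cannot be consumed in time, and looking up messages by attribute also lags noticeably. RocketMQ runs a dedicated thread, ReputMessageService, that forwards CommitLog update events in near real time, and the corresponding handlers update the ConsumeQueue and IndexFile from the forwarded messages.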
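The forwarding idea can be sketched as a log that fans each new record out to a list of handlers. This is a simplified, single-threaded model written for illustration; ReputSketch, Record, and Dispatcher are hypothetical names, not RocketMQ classes.

```java
import java.util.ArrayList;
import java.util.List;

/** Simplified model of "append to a log, then fan each record out to index builders". */
class ReputSketch {
    /** Hypothetical stand-in for a dispatch request: just an offset and a size. */
    static final class Record {
        final long offset;
        final int size;
        Record(long offset, int size) { this.offset = offset; this.size = size; }
    }

    /** Hypothetical counterpart of a commit log dispatcher. */
    interface Dispatcher { void dispatch(Record record); }

    private final List<Record> log = new ArrayList<>();
    private final List<Dispatcher> dispatchers = new ArrayList<>();
    private long maxOffset = 0;        // end of the appended data
    private long reputFromOffset = 0;  // everything before this offset has been dispatched
    private int nextIndex = 0;

    void addDispatcher(Dispatcher dispatcher) { dispatchers.add(dispatcher); }

    /** Appends a record of the given size at the current end of the log. */
    void append(int size) {
        log.add(new Record(maxOffset, size));
        maxOffset += size;
    }

    /** Forwards every record not yet dispatched; returns how many were forwarded. */
    int doReput() {
        int forwarded = 0;
        while (nextIndex < log.size()) {
            Record record = log.get(nextIndex++);
            for (Dispatcher dispatcher : dispatchers) {
                dispatcher.dispatch(record);
            }
            reputFromOffset = record.offset + record.size;
            forwarded++;
        }
        return forwarded;
    }

    /** Bytes appended but not yet dispatched. */
    long behindBytes() { return maxOffset - reputFromOffset; }
}
```

Registering one dispatcher for the consume queue and one for the index keeps the log writer unaware of how many derived structures exist, which is the property the paragraph above relies on.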

DefaultMessageStore#start

public void start() throws Exception {

lock = lockFile.getChannel().tryLock(0, 1, false);

if (lock == null || lock.isShared() || !lock.isValid()) {

throw new RuntimeException("Lock failed,MQ already started");

}

lockFile.getChannel().write(ByteBuffer.wrap("lock".getBytes()));

lockFile.getChannel().force(true);

{

/**

* 1. Make sure the fast-forward messages to be truncated during the recovering according to the max physical offset of the commitlog;

* 2. DLedger committedPos may be missing, so the maxPhysicalPosInLogicQueue maybe bigger that maxOffset returned by DLedgerCommitLog, just let it go;

* 3. Calculate the reput offset according to the consume queue;

* 4. Make sure the fall-behind messages to be dispatched before starting the commitlog, especially when the broker role are automatically changed.

*/

long maxPhysicalPosInLogicQueue = commitLog.getMinOffset();

for (ConcurrentMap<Integer, ConsumeQueue> maps : this.consumeQueueTable.values()) {

for (ConsumeQueue logic : maps.values()) {

if (logic.getMaxPhysicOffset() > maxPhysicalPosInLogicQueue) {

maxPhysicalPosInLogicQueue = logic.getMaxPhysicOffset();

}

}

}

if (maxPhysicalPosInLogicQueue < 0) {

maxPhysicalPosInLogicQueue = 0;

}

if (maxPhysicalPosInLogicQueue < this.commitLog.getMinOffset()) {

maxPhysicalPosInLogicQueue = this.commitLog.getMinOffset();

/**

* This happens in following conditions:

* 1. If someone removes all the consumequeue files or the disk get damaged.

* 2. Launch a new broker, and copy the commitlog from other brokers.

*

* All the conditions has the same in common that the maxPhysicalPosInLogicQueue should be 0.

* If the maxPhysicalPosInLogicQueue is gt 0, there maybe something wrong.

*/

log.warn("[TooSmallCqOffset] maxPhysicalPosInLogicQueue={} clMinOffset={}", maxPhysicalPosInLogicQueue, this.commitLog.getMinOffset());

}

log.info("[SetReputOffset] maxPhysicalPosInLogicQueue={} clMinOffset={} clMaxOffset={} clConfirmedOffset={}",

maxPhysicalPosInLogicQueue, this.commitLog.getMinOffset(), this.commitLog.getMaxOffset(), this.commitLog.getConfirmOffset());

this.reputMessageService.setReputFromOffset(maxPhysicalPosInLogicQueue);

this.reputMessageService.start();

/**

* 1. Finish dispatching the messages fall behind, then to start other services.

* 2. DLedger committedPos may be missing, so here just require dispatchBehindBytes <= 0

*/

while (true) {

if (dispatchBehindBytes() <= 0) {

break;

}

Thread.sleep(1000);

log.info("Try to finish doing reput the messages fall behind during the starting, reputOffset={} maxOffset={} behind={}", this.reputMessageService.getReputFromOffset(), this.getMaxPhyOffset(), this.dispatchBehindBytes());

}

this.recoverTopicQueueTable();

}

if (!messageStoreConfig.isEnableDLegerCommitLog()) {

this.haService.start();

this.handleScheduleMessageService(messageStoreConfig.getBrokerRole());

}

this.flushConsumeQueueService.start();

this.commitLog.start();

this.storeStatsService.start();

this.createTempFile();

this.addScheduleTask();

this.shutdown = false;

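}

When the Broker starts, it launches the ReputMessageService thread and initializes a key parameter, reputFromOffset: the physical offset from which ReputMessageService starts forwarding messages to the ConsumeQueue and IndexFile. In the start method shown above, reputFromOffset is set to the largest physical offset recorded in any ConsumeQueue, and never less than the CommitLog's minimum offset, so messages that were stored but not yet dispatched are forwarded again. Earlier RocketMQ versions chose the value from a flag instead: the CommitLog's confirm offset if duplicate forwarding was allowed, otherwise the CommitLog's maximum in-memory offset.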
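The offset calculation in the start method above can be distilled into a small pure function. This is a sketch for illustration only; ReputOffsetSketch and its parameter names are hypothetical, and the list stands in for the values returned by each queue's getMaxPhysicOffset().

```java
import java.util.List;

class ReputOffsetSketch {
    /**
     * Picks the offset to start re-dispatching from: the largest physical offset
     * already recorded by any consume queue, but never below the commit log's
     * minimum offset and never negative.
     */
    static long initialReputOffset(List<Long> queueMaxPhysicOffsets, long commitLogMinOffset) {
        long max = commitLogMinOffset;
        for (long offset : queueMaxPhysicOffsets) {
            if (offset > max) {
                max = offset;
            }
        }
        return Math.max(max, 0L);
    }
}
```

With queues at 100, 250, and 180 and a commit log starting at 0, dispatching resumes at 250; with no consume queues at all, it resumes at the commit log's minimum offset.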

@Override

public void run() {

DefaultMessageStore.log.info(this.getServiceName() + " service started");

while (!this.isStopped()) {

try {

Thread.sleep(1);

this.doReput();

} catch (Exception e) {

DefaultMessageStore.log.warn(this.getServiceName() + " service has exception. ", e);

}

}

DefaultMessageStore.log.info(this.getServiceName() + " service end");

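}

The ReputMessageService thread sleeps for 1 millisecond between rounds and then tries again to push messages to the consume queue and index files. The core forwarding logic is implemented in the doReput method.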

private void doReput() {

if (this.reputFromOffset < DefaultMessageStore.this.commitLog.getMinOffset()) {

log.warn("The reputFromOffset={} is smaller than minPyOffset={}, this usually indicate that the dispatch behind too much and the commitlog has expired.",

this.reputFromOffset, DefaultMessageStore.this.commitLog.getMinOffset());

this.reputFromOffset = DefaultMessageStore.this.commitLog.getMinOffset();

}

for (boolean doNext = true; this.isCommitLogAvailable() && doNext; ) {

if (DefaultMessageStore.this.getMessageStoreConfig().isDuplicationEnable()

&& this.reputFromOffset >= DefaultMessageStore.this.getConfirmOffset()) {

break;

}

SelectMappedBufferResult result = DefaultMessageStore.this.commitLog.getData(reputFromOffset);

if (result != null) {

try {

this.reputFromOffset = result.getStartOffset();

for (int readSize = 0; readSize < result.getSize() && doNext; ) {

DispatchRequest dispatchRequest =

DefaultMessageStore.this.commitLog.checkMessageAndReturnSize(result.getByteBuffer(), false, false);

int size = dispatchRequest.getBufferSize() == -1 ? dispatchRequest.getMsgSize() : dispatchRequest.getBufferSize();

if (dispatchRequest.isSuccess()) {

if (size > 0) {

DefaultMessageStore.this.doDispatch(dispatchRequest);

if (BrokerRole.SLAVE != DefaultMessageStore.this.getMessageStoreConfig().getBrokerRole()

&& DefaultMessageStore.this.brokerConfig.isLongPollingEnable()) {

DefaultMessageStore.this.messageArrivingListener.arriving(dispatchRequest.getTopic(),

dispatchRequest.getQueueId(), dispatchRequest.getConsumeQueueOffset() + 1,

dispatchRequest.getTagsCode(), dispatchRequest.getStoreTimestamp(),

dispatchRequest.getBitMap(), dispatchRequest.getPropertiesMap());

}

this.reputFromOffset += size;

readSize += size;

if (DefaultMessageStore.this.getMessageStoreConfig().getBrokerRole() == BrokerRole.SLAVE) {

DefaultMessageStore.this.storeStatsService

.getSinglePutMessageTopicTimesTotal(dispatchRequest.getTopic()).incrementAndGet();

DefaultMessageStore.this.storeStatsService

.getSinglePutMessageTopicSizeTotal(dispatchRequest.getTopic())

.addAndGet(dispatchRequest.getMsgSize());

}

} else if (size == 0) {

this.reputFromOffset = DefaultMessageStore.this.commitLog.rollNextFile(this.reputFromOffset);

readSize = result.getSize();

}

} else if (!dispatchRequest.isSuccess()) {

if (size > 0) {

log.error("[BUG]read total count not equals msg total size. reputFromOffset={}", reputFromOffset);

this.reputFromOffset += size;

} else {

doNext = false;

// If user open the dledger pattern or the broker is master node,

// it will not ignore the exception and fix the reputFromOffset variable

if (DefaultMessageStore.this.getMessageStoreConfig().isEnableDLegerCommitLog() ||

DefaultMessageStore.this.brokerConfig.getBrokerId() == MixAll.MASTER_ID) {

log.error("[BUG]dispatch message to consume queue error, COMMITLOG OFFSET: {}",

this.reputFromOffset);

this.reputFromOffset += result.getSize() - readSize;

}

}

}

}

} finally {

result.release();

}

} else {

doNext = false;

}

}

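}

1. Return all valid data (from the commitlog files) starting at the reputFromOffset offset.

SelectMappedBufferResult result = DefaultMessageStore.this.commitLog.getData(reputFromOffset);

2. Read messages in a loop from the ByteBuffer returned in result, one message at a time, creating a DispatchRequest object for each. If the message length is greater than 0, doDispatch is called, which in turn invokes CommitLogDispatcherBuildConsumeQueue (builds the consume queue) and CommitLogDispatcherBuildIndex (builds the index file).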

DispatchRequest dispatchRequest =

DefaultMessageStore.this.commitLog.checkMessageAndReturnSize(result.getByteBuffer(), false, false);

int size = dispatchRequest.getBufferSize() == -1 ? dispatchRequest.getMsgSize() : dispatchRequest.getBufferSize();

if (dispatchRequest.isSuccess()) {

if (size > 0) {

DefaultMessageStore.this.doDispatch(dispatchRequest);

if (BrokerRole.SLAVE != DefaultMessageStore.this.getMessageStoreConfig().getBrokerRole()

&& DefaultMessageStore.this.brokerConfig.isLongPollingEnable()) {

DefaultMessageStore.this.messageArrivingListener.arriving(dispatchRequest.getTopic(),

dispatchRequest.getQueueId(), dispatchRequest.getConsumeQueueOffset() + 1,

dispatchRequest.getTagsCode(), dispatchRequest.getStoreTimestamp(),

dispatchRequest.getBitMap(), dispatchRequest.getPropertiesMap());

}

this.reputFromOffset += size;

readSize += size;

if (DefaultMessageStore.this.getMessageStoreConfig().getBrokerRole() == BrokerRole.SLAVE) {

DefaultMessageStore.this.storeStatsService

.getSinglePutMessageTopicTimesTotal(dispatchRequest.getTopic()).incrementAndGet();

DefaultMessageStore.this.storeStatsService

.getSinglePutMessageTopicSizeTotal(dispatchRequest.getTopic())

.addAndGet(dispatchRequest.getMsgSize());

}

} else if (size == 0) {

this.reputFromOffset = DefaultMessageStore.this.commitLog.rollNextFile(this.reputFromOffset);

readSize = result.getSize();

}

}

Updating the ConsumeQueue from messages

The forwarding task for the consume queue is implemented by CommitLogDispatcherBuildConsumeQueue, which ultimately calls the putMessagePositionInfo method.

public void putMessagePositionInfo(DispatchRequest dispatchRequest) {

ConsumeQueue cq = this.findConsumeQueue(dispatchRequest.getTopic(), dispatchRequest.getQueueId());

cq.putMessagePositionInfoWrapper(dispatchRequest);

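}

1. Use the message topic and queue ID to find the corresponding ConsumeQueue. The logic is straightforward: each topic has its own consume queue directory, each message queue under the topic has its own subdirectory, and the last ConsumeQueue file in that subdirectory is the one to write to.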

public void putMessagePositionInfoWrapper(DispatchRequest request) {

final int maxRetries = 30;

boolean canWrite = this.defaultMessageStore.getRunningFlags().isCQWriteable();

for (int i = 0; i < maxRetries && canWrite; i++) {

long tagsCode = request.getTagsCode();

if (isExtWriteEnable()) {

ConsumeQueueExt.CqExtUnit cqExtUnit = new ConsumeQueueExt.CqExtUnit();

cqExtUnit.setFilterBitMap(request.getBitMap());

cqExtUnit.setMsgStoreTime(request.getStoreTimestamp());

cqExtUnit.setTagsCode(request.getTagsCode());

long extAddr = this.consumeQueueExt.put(cqExtUnit);

if (isExtAddr(extAddr)) {

tagsCode = extAddr;

} else {

log.warn("Save consume queue extend fail, So just save tagsCode! {}, topic:{}, queueId:{}, offset:{}", cqExtUnit,

topic, queueId, request.getCommitLogOffset());

}

}

boolean result = this.putMessagePositionInfo(request.getCommitLogOffset(),

request.getMsgSize(), tagsCode, request.getConsumeQueueOffset());

if (result) {

if (this.defaultMessageStore.getMessageStoreConfig().getBrokerRole() == BrokerRole.SLAVE ||

this.defaultMessageStore.getMessageStoreConfig().isEnableDLegerCommitLog()) {

this.defaultMessageStore.getStoreCheckpoint().setPhysicMsgTimestamp(request.getStoreTimestamp());

}

this.defaultMessageStore.getStoreCheckpoint().setLogicsMsgTimestamp(request.getStoreTimestamp());

return;

} else {

// XXX: warn and notify me

log.warn("[BUG]put commit log position info to " + topic + ":" + queueId + " " + request.getCommitLogOffset()

+ " failed, retry " + i + " times");

try {

Thread.sleep(1000);

} catch (InterruptedException e) {

log.warn("", e);

}

}

}

// XXX: warn and notify me

log.error("[BUG]consume queue can not write, {} {}", this.topic, this.queueId);

this.defaultMessageStore.getRunningFlags().makeLogicsQueueError();

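}

2. Write the message offset, message length, and tag hashcode into a ByteBuffer in that order, compute the physical address within the ConsumeQueue from consumeQueueOffset, and append the content to the ConsumeQueue's memory-mapped file. This step only appends and does not flush; the ConsumeQueue is always flushed asynchronously.

Updating the Index file from messages

The forwarding task for the hash index file is implemented by CommitLogDispatcherBuildIndex.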
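The entry layout described in step 2 can be sketched with a plain ByteBuffer. The text does not state the field widths; the sketch assumes an 8-byte offset, a 4-byte length, and an 8-byte tag hashcode (20 bytes per entry), which is my understanding of the ConsumeQueue unit size and should be checked against the RocketMQ version in use. The class and method names are hypothetical.

```java
import java.nio.ByteBuffer;

class ConsumeQueueEntrySketch {
    /** Assumed fixed entry size: 8 (commit log offset) + 4 (message size) + 8 (tag hashcode). */
    static final int UNIT_SIZE = 20;

    /** Encodes one entry in the order offset, size, tag hashcode, ready for reading. */
    static ByteBuffer encode(long commitLogOffset, int msgSize, long tagsCode) {
        ByteBuffer buffer = ByteBuffer.allocate(UNIT_SIZE);
        buffer.putLong(commitLogOffset);
        buffer.putInt(msgSize);
        buffer.putLong(tagsCode);
        buffer.flip();
        return buffer;
    }

    /** Byte position inside the logical queue where entry number consumeQueueOffset belongs. */
    static long expectedLogicOffset(long consumeQueueOffset) {
        return consumeQueueOffset * UNIT_SIZE;
    }
}
```

Because every entry has the same size, the n-th entry can be located by multiplication alone, which is what makes the consume queue cheap to seek into.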

class CommitLogDispatcherBuildIndex implements CommitLogDispatcher {

@Override

public void dispatch(DispatchRequest request) {

if (DefaultMessageStore.this.messageStoreConfig.isMessageIndexEnable()) {

DefaultMessageStore.this.indexService.buildIndex(request);

}

}

}

If messageIndexEnable is set to true, IndexService#buildIndex is called to build the hash index; otherwise this forwarding task is skipped.

IndexFile indexFile = retryGetAndCreateIndexFile();

if (indexFile != null) {

long endPhyOffset = indexFile.getEndPhyOffset();

DispatchRequest msg = req;

String topic = msg.getTopic();

String keys = msg.getKeys();

if (msg.getCommitLogOffset() < endPhyOffset) {

return;

}

……

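}

Get or create the IndexFile and read the largest physical offset it has already indexed. If the message's physical offset is smaller than that offset, the message is a duplicate and index building is skipped.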

if (req.getUniqKey() != null) {

indexFile = putKey(indexFile, msg, buildKey(topic, req.getUniqKey()));

if (indexFile == null) {

log.error("putKey error commitlog {} uniqkey {}", req.getCommitLogOffset(), req.getUniqKey());

return;

}

}

If the message's unique key is not null, it is added to the hash index to speed up lookups by unique key.

if (keys != null && keys.length() > 0) {

String[] keyset = keys.split(MessageConst.KEY_SEPARATOR);

for (int i = 0; i < keyset.length; i++) {

String key = keyset[i];

if (key.length() > 0) {

indexFile = putKey(indexFile, msg, buildKey(topic, key));

if (indexFile == null) {

log.error("putKey error commitlog {} uniqkey {}", req.getCommitLogOffset(), req.getUniqKey());

return;

}

}

}

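}

Build the index keys. RocketMQ supports multiple index keys for one message; the keys are separated by spaces.

Recovering the consume queue and index files

File recovery at store startup mainly sets the flushedPosition and committedWhere pointers, loads the maximum offset of each consume queue into memory, and deletes all files beyond flushedPosition. If the Broker is restarting after an abnormal shutdown, RocketMQ re-forwards all messages in the last valid file to the consume queue and index files during recovery. This ensures no messages are lost but can introduce duplicates. RocketMQ's overall design guarantees that messages are not lost, not that they are never consumed more than once, so consumers must make message processing idempotent.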
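The key handling in the excerpts above can be sketched as a standalone function. It assumes that buildKey joins topic and key with "#" and that the key separator is a single space; both match my reading of the RocketMQ source but are not shown in the excerpts, and the class itself is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

class IndexKeySketch {
    static final String KEY_SEPARATOR = " "; // assumed value of MessageConst.KEY_SEPARATOR

    /** Assumed key format: topic and key joined by '#'. */
    static String buildKey(String topic, String key) {
        return topic + "#" + key;
    }

    /** Collects every index key a message would get: the unique key first, then each user key. */
    static List<String> indexKeys(String topic, String uniqKey, String keys) {
        List<String> result = new ArrayList<>();
        if (uniqKey != null) {
            result.add(buildKey(topic, uniqKey));
        }
        if (keys != null && keys.length() > 0) {
            for (String key : keys.split(KEY_SEPARATOR)) {
                if (key.length() > 0) { // consecutive separators produce empty strings; skip them
                    result.add(buildKey(topic, key));
                }
            }
        }
        return result;
    }
}
```

Prefixing the topic keeps identical business keys in different topics from sharing an index entry.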
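One common way to make consumption idempotent is to remember which message keys have already been processed and skip repeats. The sketch below is an in-memory illustration with hypothetical names; a real consumer would normally keep the processed keys in durable storage, for example behind a unique constraint in a database, so that the check survives restarts.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Consumer;

class IdempotentConsumerSketch {
    // Keys of messages that have already been handled (in memory only in this sketch).
    private final Set<String> processed = ConcurrentHashMap.newKeySet();

    /**
     * Runs the handler at most once per message key.
     * Returns true if the handler ran, false if the message was a duplicate.
     */
    boolean consume(String messageKey, String body, Consumer<String> handler) {
        if (!processed.add(messageKey)) {
            return false; // already seen: redelivery after recovery, retry, and so on
        }
        try {
            handler.accept(body);
            return true;
        } catch (RuntimeException e) {
            processed.remove(messageKey); // allow a later redelivery to retry
            throw e;
        }
    }
}
```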

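File flush mechanism

RocketMQ's storage reads and writes are built on the JDK NIO memory-mapping mechanism (MappedByteBuffer). When a message is stored, it is first appended to memory and then written to disk at a time determined by the configured flush policy. With synchronous flush, MappedByteBuffer's force() method is called synchronously after the message is appended to memory. With asynchronous flush, the Broker returns to the sender as soon as the message is appended to memory, and a separate thread performs the flush at a configured frequency. Index files are not flushed on a timer; instead, each update to an index file flushes the previous changes to disk.

Broker synchronous flush

Synchronous flush means that as soon as a message is appended to the memory-mapped file's memory, the data is flushed from memory to the disk file. It is implemented by CommitLog's handleDiskFlush method.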

public void handleDiskFlush(AppendMessageResult result, PutMessageResult putMessageResult, MessageExt messageExt) {

// Synchronization flush

if (FlushDiskType.SYNC_FLUSH == this.defaultMessageStore.getMessageStoreConfig().getFlushDiskType()) {

final GroupCommitService service = (GroupCommitService) this.flushCommitLogService;

if (messageExt.isWaitStoreMsgOK()) {

GroupCommitRequest request = new GroupCommitRequest(result.getWroteOffset() + result.getWroteBytes());

service.putRequest(request);

CompletableFuture<PutMessageStatus> flushOkFuture = request.future();

PutMessageStatus flushStatus = null;

try {

flushStatus = flushOkFuture.get(this.defaultMessageStore.getMessageStoreConfig().getSyncFlushTimeout(),

TimeUnit.MILLISECONDS);

} catch (InterruptedException | ExecutionException | TimeoutException e) {

//flushOK=false;

}

if (flushStatus != PutMessageStatus.PUT_OK) {

log.error("do groupcommit, wait for flush failed, topic: " + messageExt.getTopic() + " tags: " + messageExt.getTags()

+ " client address: " + messageExt.getBornHostString());

putMessageResult.setPutMessageStatus(PutMessageStatus.FLUSH_DISK_TIMEOUT);

}

} else {

service.wakeup();

}

}

// Asynchronous flush

else {

if (!this.defaultMessageStore.getMessageStoreConfig().isTransientStorePoolEnable()) {

flushCommitLogService.wakeup();

} else {

commitLogService.wakeup();

}

}

}

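Put simply, with synchronous flush, once the Broker has appended the producer's message to the memory-mapped file (in memory), it must write that content to disk right away. Calling force() on the memory-mapped buffer (MappedByteBuffer) writes the in-memory data to disk.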
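The append-then-force step can be tried in isolation with the JDK alone. The following is a minimal sketch, not RocketMQ code: the class name, the temporary file, and the 4096-byte mapping size are arbitrary choices for the example.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

class MappedFlushSketch {
    /** Maps the file, appends the payload in memory, then forces it to disk. Returns bytes written. */
    static int appendAndFlush(Path file, int mappedSize, byte[] payload) throws IOException {
        try (FileChannel channel = FileChannel.open(file,
                StandardOpenOption.CREATE, StandardOpenOption.READ, StandardOpenOption.WRITE)) {
            MappedByteBuffer mapped = channel.map(FileChannel.MapMode.READ_WRITE, 0, mappedSize);
            mapped.put(payload); // the write lands in the mapped memory region
            mapped.force();      // ask the OS to write the mapped region to the storage device
            return mapped.position();
        }
    }

    /** Writes "hello" to a temporary file through a mapping and reads it back from disk. */
    static String demo() {
        try {
            Path file = Files.createTempFile("commitlog-sketch", ".bin");
            file.toFile().deleteOnExit();
            byte[] payload = "hello".getBytes(StandardCharsets.UTF_8);
            int written = appendAndFlush(file, 4096, payload);
            byte[] onDisk = Files.readAllBytes(file);
            return new String(onDisk, 0, written, StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Mapping a region larger than the file extends the file to that size, which is why the read-back takes only the bytes that were actually written.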

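Broker asynchronous flush

The asynchronous flush implementation differs slightly depending on whether transientStorePoolEnable is turned on. If transientStorePoolEnable is true, RocketMQ allocates a separate off-heap memory region the same size as the target physical file (commitlog). That region is locked in memory so it cannot be swapped out. Messages are first appended to the off-heap memory, then committed to the memory mapped to the physical file, and finally flushed to disk. If transientStorePoolEnable is false, messages are appended directly to the memory mapped to the physical file and then flushed to disk.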

CommitLog#handleDiskFlush

// Asynchronous flush

else {

if (!this.defaultMessageStore.getMessageStoreConfig().isTransientStorePoolEnable()) {

flushCommitLogService.wakeup();

} else {

commitLogService.wakeup();

}

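}

The flow when transientStorePoolEnable is true:

- First, the message is appended directly to a ByteBuffer (an off-heap DirectByteBuffer), and wrotePosition moves forward as messages are appended.

- The CommitRealTimeService thread, every 200 ms by default, commits the newly appended data in the ByteBuffer (wrotePosition minus committedPosition) to the MappedByteBuffer.

- The MappedByteBuffer appends the committed content in memory, its wrotePosition pointer moves forward, and the call returns.

- When the commit returns successfully, committedPosition moves forward by the length just committed. Meanwhile wrotePosition can keep advancing.

- The FlushRealTimeService thread, every 500 ms by default, flushes the newly appended content in the MappedByteBuffer (wrotePosition minus the last flushed position, flushedPosition) to disk by calling MappedByteBuffer#force().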
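The three pointers in the steps above can be modeled with two plain buffers. This is a simplified sketch under stated assumptions: a direct ByteBuffer stands in for the off-heap write buffer, a heap ByteBuffer stands in for the MappedByteBuffer, flush() only moves the pointer where real code would call force(), and all names are hypothetical.

```java
import java.nio.ByteBuffer;

class TransientStoreSketch {
    private final ByteBuffer writeBuffer;  // stands in for the off-heap buffer
    private final ByteBuffer mappedBuffer; // stands in for the MappedByteBuffer
    private int wrotePosition;
    private int committedPosition;
    private int flushedPosition;

    TransientStoreSketch(int size) {
        writeBuffer = ByteBuffer.allocateDirect(size);
        mappedBuffer = ByteBuffer.allocate(size);
    }

    /** Step 1: append to the write buffer and advance wrotePosition. */
    void append(byte[] data) {
        writeBuffer.position(wrotePosition);
        writeBuffer.put(data);
        wrotePosition += data.length;
    }

    /** Steps 2 to 4: copy [committedPosition, wrotePosition) into the mapped buffer. */
    int commit() {
        int length = wrotePosition - committedPosition;
        if (length > 0) {
            ByteBuffer slice = writeBuffer.duplicate();
            slice.position(committedPosition);
            slice.limit(wrotePosition);
            mappedBuffer.position(committedPosition);
            mappedBuffer.put(slice);
            committedPosition = wrotePosition;
        }
        return length;
    }

    /** Step 5: everything committed counts as flushed (real code would call force() here). */
    int flush() {
        int length = committedPosition - flushedPosition;
        flushedPosition = committedPosition;
        return length;
    }

    int getWrotePosition() { return wrotePosition; }
    int getCommittedPosition() { return committedPosition; }
    int getFlushedPosition() { return flushedPosition; }
}
```

At any moment flushedPosition <= committedPosition <= wrotePosition holds; the gaps between the pointers are the data that would be lost if the process or the machine failed at that instant.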

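Expired file deletion

RocketMQ decides that a file is expired as follows: if a file that is not the current write file has not been updated within a certain interval, it is considered expired and may be deleted. RocketMQ does not check whether all messages in the file have been consumed. The default retention time for each file is 72 hours; it can be changed by setting fileReservedTime, in hours, in the Broker configuration file.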

DefaultMessageStore#addScheduleTask

private void addScheduleTask() {

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

DefaultMessageStore.this.cleanFilesPeriodically();

}

}, 1000 * 60, this.messageStoreConfig.getCleanResourceInterval(), TimeUnit.MILLISECONDS);

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

DefaultMessageStore.this.checkSelf();

}

}, 1, 10, TimeUnit.MINUTES);

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

if (DefaultMessageStore.this.getMessageStoreConfig().isDebugLockEnable()) {

try {

if (DefaultMessageStore.this.commitLog.getBeginTimeInLock() != 0) {

long lockTime = System.currentTimeMillis() - DefaultMessageStore.this.commitLog.getBeginTimeInLock();

if (lockTime > 1000 && lockTime < 10000000) {

String stack = UtilAll.jstack();

final String fileName = System.getProperty("user.home") + File.separator + "debug/lock/stack-"

+ DefaultMessageStore.this.commitLog.getBeginTimeInLock() + "-" + lockTime;

MixAll.string2FileNotSafe(stack, fileName);

}

}

} catch (Exception e) {

}

}

}

}, 1, 1, TimeUnit.SECONDS);

// this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

// @Override

// public void run() {

// DefaultMessageStore.this.cleanExpiredConsumerQueue();

// }

// }, 1, 1, TimeUnit.HOURS);

this.diskCheckScheduledExecutorService.scheduleAtFixedRate(new Runnable() {

public void run() {

DefaultMessageStore.this.cleanCommitLogService.isSpaceFull();

}

}, 1000L, 10000L, TimeUnit.MILLISECONDS);

}

RocketMQ schedules cleanFilesPeriodically every 10 s to check whether expired files need to be removed. The interval is configurable through cleanResourceInterval, which defaults to 10 s.

private void cleanFilesPeriodically() {

this.cleanCommitLogService.run();

this.cleanConsumeQueueService.run();

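}

This cleans up the message storage files (CommitLog files) and the consume queue files (ConsumeQueue files) in turn. The consume queue files share the same expired-file deletion mechanism as the CommitLog files.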

private void deleteExpiredFiles() {

int deleteCount = 0;

long fileReservedTime = DefaultMessageStore.this.getMessageStoreConfig().getFileReservedTime();

int deletePhysicFilesInterval = DefaultMessageStore.this.getMessageStoreConfig().getDeleteCommitLogFilesInterval();

int destroyMapedFileIntervalForcibly = DefaultMessageStore.this.getMessageStoreConfig().getDestroyMapedFileIntervalForcibly();

boolean timeup = this.isTimeToDelete();

boolean spacefull = this.isSpaceToDelete();

boolean manualDelete = this.manualDeleteFileSeveralTimes > 0;

if (timeup || spacefull || manualDelete) {

if (manualDelete)

this.manualDeleteFileSeveralTimes--;

boolean cleanAtOnce = DefaultMessageStore.this.getMessageStoreConfig().isCleanFileForciblyEnable() && this.cleanImmediately;

log.info("begin to delete before {} hours file. timeup: {} spacefull: {} manualDeleteFileSeveralTimes: {} cleanAtOnce: {}",

fileReservedTime,

timeup,

spacefull,

manualDeleteFileSeveralTimes,

cleanAtOnce);

fileReservedTime *= 60 * 60 * 1000;

deleteCount = DefaultMessageStore.this.commitLog.deleteExpiredFile(fileReservedTime, deletePhysicFilesInterval,

destroyMapedFileIntervalForcibly, cleanAtOnce);

if (deleteCount > 0) {

} else if (spacefull) {

log.warn("disk space will be full soon, but delete file failed.");

}

}

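}

- fileReservedTime: the file retention time, measured from the file's last update to now. A file older than this is considered expired and may be deleted.

- deletePhysicFilesInterval: the interval between physical file deletions. One cleanup pass may need to delete more than one file, and this value sets the pause between two deletions.

- destroyMapedFileIntervalForcibly: when expired files are being cleaned up, a file that is still in use by other threads (reference count greater than 0, for example while messages are being read) blocks the deletion, and the timestamp of this first attempt is recorded. destroyMapedFileIntervalForcibly is the maximum time the file may survive after that first refusal. Within that time deletion can still be refused, and each refusal reduces the reference count by 1000. Once the interval has passed, the file is deleted forcibly.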

if (timeup || spacefull || manualDelete) {

……

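}

RocketMQ proceeds with file deletion when any one of the following three conditions holds.

- A configured deletion time has arrived. RocketMQ uses deleteWhen to set a fixed time of day at which expired files are deleted; the default is 4 a.m.

- Disk space is running low. If disk space is insufficient, the check returns true, meaning expired-file deletion should be triggered.

- Reserved for manual triggering. Expired-file deletion can be triggered manually by calling the excuteDeleteFilesManualy method; RocketMQ does not yet wrap this in a command.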
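The first condition can be sketched as a comparison between the configured hour and the current hour. The sketch assumes deleteWhen holds one or more hours of the day separated by ";", which is my understanding of the setting rather than something stated above; the class and method names are hypothetical.

```java
class DeleteWhenSketch {
    /**
     * Returns true when currentHour (0 to 23) matches one of the hours in deleteWhen.
     * deleteWhen is assumed to look like "04" or "04;16".
     */
    static boolean isTimeToDelete(String deleteWhen, int currentHour) {
        for (String hour : deleteWhen.split(";")) {
            if (Integer.parseInt(hour.trim()) == currentHour) {
                return true;
            }
        }
        return false;
    }
}
```

With the default of "04", the condition holds for the whole hour starting at 4 a.m., so every periodic check that falls inside that hour passes it.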

How the disk-space check (isSpaceToDelete) works:

deleteCount = DefaultMessageStore.this.commitLog.deleteExpiredFile(fileReservedTime, deletePhysicFilesInterval,

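destroyMapedFileIntervalForcibly, cleanAtOnce);

If the usage of the current disk partition is above diskSpaceWarningLevelRatio, the disk is marked as not writable and expired-file deletion should start immediately. If the usage is above diskSpaceCleanForciblyRatio, immediate cleanup of expired files is recommended. If the usage is below diskSpaceCleanForciblyRatio, the disk becomes writable again. Finally, if the usage is below diskMaxUsedSpaceRatio, the method returns false, meaning disk usage is normal; otherwise it returns true and expired files must be cleaned up.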
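The threshold logic in the paragraph above can be written as one decision function. This is a sketch of the described rules, not the RocketMQ method: the enum, the class name, and the threshold values used in the usage note are illustrative assumptions.

```java
class DiskSpaceSketch {
    enum Action { NONE, CLEAN, CLEAN_IMMEDIATELY, CLEAN_IMMEDIATELY_AND_BLOCK_WRITES }

    /**
     * Maps a disk usage ratio (0.0 to 1.0) to the action described in the text.
     * Expects warningLevel >= cleanForcibly >= maxUsed.
     */
    static Action decide(double usedRatio, double warningLevel, double cleanForcibly, double maxUsed) {
        if (usedRatio > warningLevel) {
            return Action.CLEAN_IMMEDIATELY_AND_BLOCK_WRITES; // disk marked not writable
        }
        if (usedRatio > cleanForcibly) {
            return Action.CLEAN_IMMEDIATELY; // disk stays writable, cleanup is urgent
        }
        if (usedRatio < maxUsed) {
            return Action.NONE; // usage is normal
        }
        return Action.CLEAN; // above the normal ceiling: run expired-file cleanup
    }
}
```

For example, with assumed thresholds of 0.90, 0.85, and 0.75, a partition at 87% usage yields CLEAN_IMMEDIATELY while one at 50% yields NONE.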

public int deleteExpiredFileByTime(final long expiredTime,

final int deleteFilesInterval,

final long intervalForcibly,

final boolean cleanImmediately) {

Object[] mfs = this.copyMappedFiles(0);

if (null == mfs)

return 0;

int mfsLength = mfs.length - 1;

int deleteCount = 0;

List<MappedFile> files = new ArrayList<MappedFile>();

if (null != mfs) {

for (int i = 0; i < mfsLength; i++) {

MappedFile mappedFile = (MappedFile) mfs[i];

long liveMaxTimestamp = mappedFile.getLastModifiedTimestamp() + expiredTime;

if (System.currentTimeMillis() >= liveMaxTimestamp || cleanImmediately) {

if (mappedFile.destroy(intervalForcibly)) {

files.add(mappedFile);

deleteCount++;

if (files.size() >= DELETE_FILES_BATCH_MAX) {

break;

}

if (deleteFilesInterval > 0 && (i + 1) < mfsLength) {

try {

Thread.sleep(deleteFilesInterval);

} catch (InterruptedException e) {

}

}

} else {

break;

}

} else {

//avoid deleting files in the middle

break;

}

}

}

deleteExpiredFile(files);

return deleteCount;

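}

This method destroys and deletes the files. It walks the files from the oldest up to, but not including, the last one (the file currently being written), and computes each file's maximum live time (the file's last update time plus the retention time, 72 hours by default). If the current time has passed that point, or the file must be deleted forcibly (disk usage is above the configured threshold), MappedFile#destroy is called to release the resources held by the MappedFile and delete the file from disk. On success the file is added to a list, and deleteExpiredFile(files) then removes those entries from the queue's list of mapped files. The loop stops at the first file that is not expired or cannot be destroyed, so files in the middle are never deleted.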
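The selection rule in deleteExpiredFileByTime can be isolated from the file handling. The sketch below takes only the last-modified timestamps, oldest first, and returns the indexes that would be chosen; it leaves out the batch limit, the pause between deletions, and the case where destroy fails. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

class ExpiredFileSketch {
    /**
     * Selects files to delete, scanning from the oldest and never touching the last
     * (active) file. Stops at the first file that is not yet expired.
     */
    static List<Integer> selectExpired(long[] lastModified, long now,
                                       long reservedMillis, boolean cleanImmediately) {
        List<Integer> expired = new ArrayList<>();
        for (int i = 0; i < lastModified.length - 1; i++) {
            long liveMaxTimestamp = lastModified[i] + reservedMillis;
            if (now >= liveMaxTimestamp || cleanImmediately) {
                expired.add(i);
            } else {
                break; // avoid deleting files in the middle
            }
        }
        return expired;
    }
}
```

For instance, with a 72-hour retention and files last modified at hours 10, 20, 50, and 99, a check at hour 100 selects only the first two files.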

Reference: 《RocketMQ技术内幕:RocketMQ架构设计与实现原理》 (RocketMQ Internals: Architecture Design and Implementation Principles)
