Using the results of the first 14 athletes as training samples, predict the high-jump result of the 15th athlete. The data are as follows:

| No. | High jump (m) | 30 m flying sprint (s) | Standing triple jump (m) | Run-up vertical reach (m) | High jump with 4 to 6 step run-up (m) | Weighted deep squat, barbell (kg) | Barbell half-squat coefficient | 100 m (s) | Snatch (kg) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 2.24 | 3.2 | 9.6 | 3.45 | 2.15 | 140 | 2.8 | 11.0 | 50 |
| 2 | 2.33 | 3.2 | 10.3 | 3.75 | 2.2 | 120 | 3.4 | 10.9 | 70 |
| 3 | 2.24 | 3.0 | 9.0 | 3.5 | 2.2 | 140 | 3.5 | 11.4 | 50 |
| 4 | 2.32 | 3.2 | 10.3 | 3.65 | 2.2 | 150 | 2.8 | 10.8 | 80 |
| 5 | 2.2 | 3.2 | 10.1 | 3.5 | 2 | 80 | 1.5 | 11.3 | 50 |
| 6 | 2.27 | 3.4 | 10.0 | 3.4 | 2.15 | 130 | 3.2 | 11.5 | 60 |
| 7 | 2.2 | 3.2 | 9.6 | 3.55 | 2.1 | 130 | 3.5 | 11.8 | 65 |
| 8 | 2.26 | 3.0 | 9.0 | 3.5 | 2.1 | 100 | 1.8 | 11.3 | 40 |
| 9 | 2.2 | 3.2 | 9.6 | 3.55 | 2.1 | 130 | 3.5 | 11.8 | 65 |
| 10 | 2.24 | 3.2 | 9.2 | 3.5 | 2.1 | 140 | 2.5 | 11.0 | 50 |
| 11 | 2.24 | 3.2 | 9.5 | 3.4 | 2.15 | 115 | 2.8 | 11.9 | 50 |
| 12 | 2.2 | 3.9 | 9.0 | 3.1 | 2.0 | 80 | 2.2 | 13.0 | 50 |
| 13 | 2.2 | 3.1 | 9.5 | 3.6 | 2.1 | 90 | 2.7 | 11.1 | 70 |
| 14 | 2.35 | 3.2 | 9.7 | 3.45 | 2.15 | 130 | 4.6 | 10.85 | 70 |
| 15 | to be predicted | 3.0 | 9.3 | 3.3 | 2.05 | 100 | 2.8 | 11.2 | 50 |
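
The feature columns in the table cover very different ranges (barbell loads in the tens or hundreds, jump heights between 2 and 4), and a sigmoid unit saturates quickly on large inputs. The post itself only rescales the target column, so the following is a minimal sketch of one common option, per-column min-max scaling, and is not part of the original program. The helper name `min_max_scale` is hypothetical, and only two table rows are used to keep the example short.

```python
import numpy as np

def min_max_scale(train, query):
    """Scale each column of `train` to [0, 1]; apply the same mapping to `query`."""
    col_min = train.min(axis=0)
    col_max = train.max(axis=0)
    # Use a span of 1 for constant columns to avoid dividing by zero.
    span = np.where(col_max > col_min, col_max - col_min, 1.0)
    return (train - col_min) / span, (query - col_min) / span

# Feature columns of athletes 1 and 12 from the table (high jump excluded).
train = np.array([[3.2, 9.6, 3.45, 2.15, 140, 2.8, 11.0, 50],
                  [3.9, 9.0, 3.10, 2.00, 80, 2.2, 13.0, 50]])
# Feature columns of athlete 15, whose high jump is to be predicted.
query = np.array([3.0, 9.3, 3.3, 2.05, 100, 2.8, 11.2, 50])

scaled_train, scaled_query = min_max_scale(train, query)
print(scaled_train)
print(scaled_query)
```

Values in `scaled_query` can fall outside [0, 1] when athlete 15 lies outside the range seen in the training rows; that is expected with this kind of scaling.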

The program code is as follows:

    import numpy as np
    import scipy.special as sp


    class net:
        """BP network with two sigmoid hidden layers and one sigmoid output unit."""

        def __init__(self, sample_data, output_data, hidden_num1, hidden_num2,
                     error=0.001, rate=0.8):
            sample_num, input_num = np.shape(sample_data)
            self.sample_data = sample_data  # one row per athlete, one column per event
            self.sample_num = sample_num
            self.input_num = input_num
            self.hidden_num1 = hidden_num1
            self.hidden_num2 = hidden_num2
            self.rate = rate    # learning rate
            self.error = error  # stop once the output delta is smaller than this
            # Min-max normalize the targets to [0, 1], the range of the sigmoid output
            self.max_data = np.max(output_data)
            self.min_data = np.min(output_data)
            self.output_data = (output_data - self.min_data) / (self.max_data - self.min_data)
            # Initialize weights and biases uniformly in [-1, 1)
            self.w1 = np.random.rand(hidden_num1, input_num) * 2 - 1
            self.w2 = np.random.rand(hidden_num2, hidden_num1) * 2 - 1
            self.w3 = np.random.rand(1, hidden_num2) * 2 - 1
            self.b1 = np.random.rand(hidden_num1, 1) * 2 - 1
            self.b2 = np.random.rand(hidden_num2, 1) * 2 - 1
            self.b3 = np.random.rand(1, 1) * 2 - 1
            self.train()

        def forward(self, input_data):
            """Return the activations of both hidden layers and of the output unit."""
            output_hidden1 = sp.expit(np.dot(self.w1, input_data) + self.b1)
            output_hidden2 = sp.expit(np.dot(self.w2, output_hidden1) + self.b2)
            final = sp.expit(np.dot(self.w3, output_hidden2) + self.b3)
            return output_hidden1, output_hidden2, final

        def train(self):
            # Take the samples one at a time and keep updating on the current
            # sample until its output delta falls below the threshold.
            for i in range(self.sample_num):
                input_data = self.sample_data[i].reshape(self.input_num, 1)
                label = self.output_data[i].reshape(1, 1)
                output_hidden1, output_hidden2, final = self.forward(input_data)
                E3 = final * (1 - final) * (label - final)
                while abs(E3[0, 0]) >= self.error:
                    # Backpropagate the output delta through the hidden layers
                    E2 = output_hidden2 * (1 - output_hidden2) * np.dot(self.w3.T, E3)
                    E1 = output_hidden1 * (1 - output_hidden1) * np.dot(self.w2.T, E2)
                    # Update weights and biases
                    self.w1 += self.rate * E1 * input_data.T
                    self.w2 += self.rate * E2 * output_hidden1.T
                    self.w3 += self.rate * E3 * output_hidden2.T
                    self.b1 += self.rate * E1
                    self.b2 += self.rate * E2
                    self.b3 += self.rate * E3
                    # Recompute the forward pass with the updated parameters
                    output_hidden1, output_hidden2, final = self.forward(input_data)
                    E3 = final * (1 - final) * (label - final)
            print('finish train!')

        def privite(self, privite_data):
            """Predict for one athlete and map the output back to the original scale."""
            privite_data = privite_data.reshape(self.input_num, 1)
            _, _, final = self.forward(privite_data)
            return final * (self.max_data - self.min_data) + self.min_data


    sample_data = np.array([[3.2, 9.6, 3.45, 2.15, 140, 2.8, 11, 50],
                            [3.2, 10.3, 3.75, 2.2, 120, 3.4, 10.9, 70],
                            [3, 9, 3.5, 2.2, 140, 3.5, 11.4, 50],
                            [3.2, 10.3, 3.65, 2.2, 150, 2.8, 10.8, 80],
                            [3.2, 10.1, 3.5, 2, 80, 1.5, 11.3, 50],
                            [3.4, 10, 3.4, 2.15, 130, 3.2, 11.5, 60],
                            [3.2, 9.6, 3.55, 2.1, 130, 3.5, 11.8, 65],
                            [3, 9, 3.5, 2.1, 100, 1.8, 11.3, 40],
                            [3.2, 9.6, 3.55, 2.1, 130, 3.5, 11.8, 65],
                            [3.2, 9.2, 3.5, 2.1, 140, 2.5, 11, 50],
                            [3.2, 9.5, 3.4, 2.15, 115, 2.8, 11.9, 50],
                            [3.9, 9, 3.1, 2, 80, 2.2, 13, 50],
                            [3.1, 9.5, 3.6, 2.1, 90, 2.7, 11.1, 70],
                            [3.2, 9.7, 3.45, 2.15, 130, 4.6, 10.85, 70]])
    output_data = np.array([[2.24], [2.33], [2.24], [2.32], [2.2], [2.27], [2.2],
                            [2.26], [2.2], [2.24], [2.24], [2.2], [2.2], [2.35]])
    hidden_num1 = 2
    hidden_num2 = 1
    a = net(sample_data, output_data, hidden_num1, hidden_num2)
    privite_data = np.array([3.0, 9.3, 3.3, 2.05, 100, 2.8, 11.2, 50])
    y = a.privite(privite_data)
    print("Predicted high-jump result:", y[0][0])
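
The updates in the training loop can be written more compactly as equations. The notation here is an assumption introduced for illustration and does not appear in the original post: $x$ is one input column vector, $t$ its normalized target, $a_1$ and $a_2$ the hidden-layer outputs, $\hat{y}$ the network output (`final` in the code), $\delta_1, \delta_2, \delta_3$ the quantities the code calls `E1`, `E2`, `E3`, $\eta$ the learning rate `rate`, and $\odot$ the element-wise product.

$$
\begin{aligned}
a_1 &= \sigma(W_1 x + b_1), \qquad a_2 = \sigma(W_2 a_1 + b_2), \qquad \hat{y} = \sigma(W_3 a_2 + b_3), \\
\delta_3 &= \hat{y}\,(1 - \hat{y})\,(t - \hat{y}), \\
\delta_2 &= a_2 \odot (1 - a_2) \odot \left(W_3^{\top} \delta_3\right), \\
\delta_1 &= a_1 \odot (1 - a_1) \odot \left(W_2^{\top} \delta_2\right), \\
W_k &\leftarrow W_k + \eta\, \delta_k\, a_{k-1}^{\top}, \qquad b_k \leftarrow b_k + \eta\, \delta_k, \qquad k = 1, 2, 3, \quad a_0 = x .
\end{aligned}
$$

Read this way, each pass of the `while` loop appears to be one gradient-descent step on the squared error $\tfrac{1}{2}(t - \hat{y})^2$ for the current sample, and the loop stops once $|\delta_3|$ drops below `error`.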

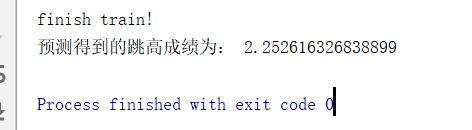

运行结果如下:
