一、问题描述

需求简述:有一张日度表,需要按照需求方要求去实现,其他逻辑暂时不讨论,其中会涉及一个月度聚合某字段,并去重复,且按照dt asc 排序

环境:spark sql 2.4.0

二、探索流程&解决

2.1 数据

SELECT 3517977374903924421 AS product_id,

'03年线下批发经验,严选品质,赠送运费险' AS recommend_remark,

"2022-02-03" AS dt

UNION ALL

SELECT 3517977374903924421 AS product_id,

'下批发经验,严选品质,赠送运费险09年' AS recommend_remark,

"2022-02-09" AS dt

UNION ALL

SELECT 3517977374903924421 AS product_id,

'线下批发经验,严选品质,赠送运费险23年' AS recommend_remark,

"2022-02-23" AS dt

UNION ALL

SELECT 3517977374903924421 AS product_id,

'严选品质,赠送运费险,13年线下批发经验,' AS recommend_remark,

"2022-02-13" AS dt

UNION ALL

SELECT 3517977374903924421 AS product_id,

'赠送运费险04' AS recommend_remark,

"2022-02-04" AS dt

2.2 初始sql

最初想用collect_set 直接处理然后子查询中排下序解决,这里用的pyspark sql 实现,没调用api 的方式,所以直接展示sql 了。

1)最初的sql

-- 造数据,假设表为all_data

WITH all_data AS

( SELECT 3517977374903924421 AS product_id,

'03年线下批发经验,严选品质,赠送运费险' AS recommend_remark,

"2022-02-03" AS dt

UNION ALL

SELECT 3517977374903924421 AS product_id,

'下批发经验,严选品质,赠送运费险09年' AS recommend_remark,

"2022-02-09" AS dt

UNION ALL

SELECT 3517977374903924421 AS product_id,

'线下批发经验,严选品质,赠送运费险23年' AS recommend_remark,

"2022-02-23" AS dt

UNION ALL

SELECT 3517977374903924421 AS product_id,

'严选品质,赠送运费险,13年线下批发经验,' AS recommend_remark,

"2022-02-13" AS dt

UNION ALL

SELECT 3517977374903924421 AS product_id,

'赠送运费险04' AS recommend_remark,

"2022-02-04" AS dt),

datas AS

( SELECT product_id,

recommend_remark,

dt

FROM all_data

WHERE dt>='2022-02-01'

AND dt<'2022-03-01'

AND product_id = 3517977374903924421

ORDER BY dt ASC)

SELECT product_id,

collect_set(recommend_remark) recommend_remark_list

FROM datas

GROUP BY product_id

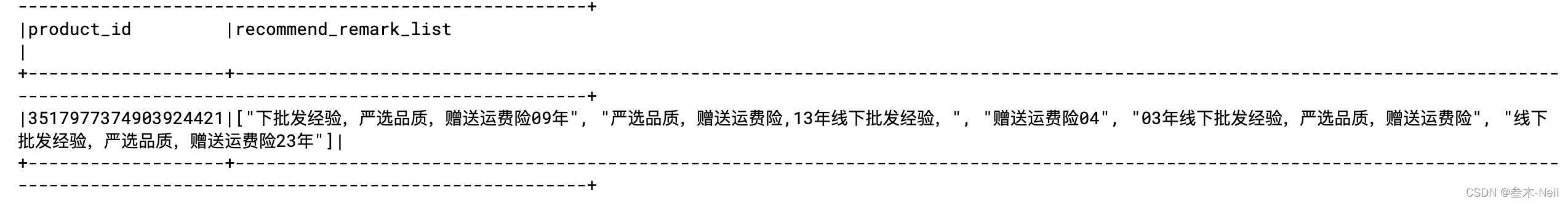

2)运行截图

3)问题

子查询中加了一个 dt asc 排序,但collect_set 后依旧内部无序…

4)解决

看了下hive 函数中有一个 sort_array 方法。发现其可以排序但是是根据内容自然序的方式。原本想自己重写一个带排序的自定义collect_set 方法,所以去看了下collect_set 实现,发现底层用了LinkHashSet实现,也就是说要想有序必须是输入元素顺序有序。那也就是说不能使用全局order 排序。改换为 distribute by product_id sort by dt asc ,发现依旧没有解决问题,倒是这么操作后collect_list 是有序的了,但是在hive on spark 1.6版本测试是成功的!,后为了快速解决直接写了一个udf 去对collect_list后的数据进行去重复的操作,这里是用python 实现。

from pyspark.sql.types import ArrayType,StringType

import json

def get_array_set(col_list):

new_list = []

if col_list:

try:

for x in col_list:

if x not in new_list:

new_list.append(x)

except:

print("数据json异常:" + str_json)

return []

return new_list

get_array_set = spark.udf.register('get_array_set', get_array_set, ArrayType(StringType()))

然后sql 改为

WITH all_data AS

(SELECT 3517977374903924421 AS product_id,

'03年线下批发经验,严选品质,赠送运费险' AS recommend_remark,

"2022-02-03" AS dt

UNION ALL

SELECT 3517977374903924421 AS product_id,

'下批发经验,严选品质,赠送运费险09年' AS recommend_remark,

"2022-02-09" AS dt

UNION ALL

SELECT 3517977374903924421 AS product_id,

'线下批发经验,严选品质,赠送运费险23年' AS recommend_remark,

"2022-02-23" AS dt

UNION ALL

SELECT 3517977374903924421 AS product_id,

'严选品质,赠送运费险,13年线下批发经验,' AS recommend_remark,

"2022-02-13" AS dt

UNION ALL

SELECT 3517977374903924421 AS product_id,

'赠送运费险04' AS recommend_remark,

"2022-02-04" AS dt

UNION ALL

SELECT 3517977374903924421 AS product_id,

'赠送运费险03' AS recommend_remark,

"2022-02-03" AS dt

UNION ALL

SELECT 3517977374903924421 AS product_id,

'赠送运费险03' AS recommend_remark,

"2022-02-01" AS dt),

datas AS

( SELECT

product_id,

concat('"',recommend_remark,'"') as recommend_remark1,

dt

FROM all_data

WHERE dt>='2022-02-01'

AND dt<'2022-03-01'

distribute by product_id

sort by dt asc)

SELECT product_id,

get_array_set(collect_list(recommend_remark1)) recommend_remark_list

FROM datas

GROUP BY product_id

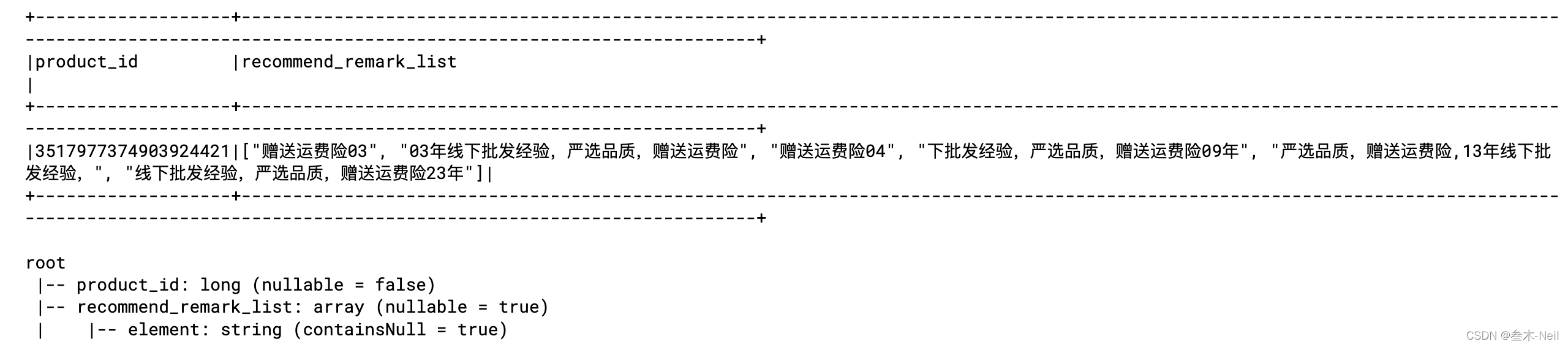

5)最终运行结果

5119

5119

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?