Hello Charm

sudo snap install charm --classic

mkdir -p ~/charms/{layers,interfaces}

export CHARM_LAYERS_DIR=~/charms/layers

export CHARM_INTERFACES_DIR=~/charms/interfaces

cd ~/charms/layers/

charm create layer-example

charm proof layer-example

sudo bash -c 'cat > layer-example/layer.yaml' << EOF

includes: ['layer:basic']

EOF

sudo bash -c 'cat > layer-example/metadata.yaml' << EOF
name: layer-example
summary: a very basic example charm
maintainer: hua <hua@t440p.lan>
description: |
  This is my first charm
tags:
  # https://jujucharms.com/docs/stable/authors-charm-metadata
  - misc
  - tutorials
EOF

sudo bash -c 'cat > layer-example/reactive/layer_example.py' << EOF
from charms.reactive import when, when_not, set_flag


@when_not('layer-example.installed')
def install_layer_example():
    set_flag('layer-example.installed')
EOF

charm build layer-example

ls /tmp/charm-builds/layer-example

sudo bash -c 'cat > layer-example/layer.yaml' << EOF
includes:
  - 'layer:basic'
  - 'layer:apt'
options:
  apt:
    packages:
      - hello
EOF

sudo bash -c 'cat > layer-example/reactive/layer_example.py' << EOF
from charms.reactive import set_flag, when, when_not
from charmhelpers.core.hookenv import application_version_set, status_set
from charmhelpers.fetch import get_upstream_version
import subprocess as sp


@when_not('example.installed')
def install_example():
    set_flag('example.installed')


@when('apt.installed.hello')
def set_message_hello():
    # Set the upstream version of hello for juju status.
    application_version_set(get_upstream_version('hello'))

    # Run hello and get the message
    message = sp.check_output('hello', stderr=sp.STDOUT)

    # Set the active status with the message
    status_set('active', message)

    # Signal that we know the version of hello
    set_flag('hello.version.set')
EOF

charm build layer-example

sudo snap install juju --classic

juju bootstrap --debug --config bootstrap-series=bionic --config agent-stream=devel localhost lxd-controller

juju add-model test

juju deploy /tmp/charm-builds/layer-example

juju debug-log

ls /var/lib/juju/agents/unit-layer-example-0/charm/reactive/layer_example.py
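
The handlers in layer_example.py only run when their flag conditions hold. The sketch below is a toy model of that gating so the behaviour can be tried without Juju. It is not the charms.reactive implementation: FLAGS, HANDLERS, _register and dispatch are hypothetical names invented for illustration, and real dispatch has more rules than this.

```python
# Toy model of flag-gated handlers. This is NOT the charms.reactive
# implementation; FLAGS, HANDLERS, _register and dispatch are hypothetical.
FLAGS = set()
HANDLERS = []


def _register(func, condition):
    """Attach a condition to func and remember func as a handler."""
    if not hasattr(func, "_conditions"):
        func._conditions = []
        HANDLERS.append(func)
    func._conditions.append(condition)
    return func


def when(*flags):
    """Eligible only when every listed flag is set."""
    return lambda func: _register(func, lambda: all(f in FLAGS for f in flags))


def when_not(*flags):
    """Eligible only when none of the listed flags is set."""
    return lambda func: _register(func, lambda: not any(f in FLAGS for f in flags))


def dispatch():
    """Run each eligible handler at most once, until nothing new can run."""
    ran = []
    progress = True
    while progress:
        progress = False
        for handler in HANDLERS:
            if handler.__name__ in ran:
                continue
            if all(cond() for cond in handler._conditions):
                handler()
                ran.append(handler.__name__)
                progress = True
    return ran


@when_not('example.installed')
def install_example():
    FLAGS.add('example.installed')


@when('apt.installed.hello')
def set_message_hello():
    FLAGS.add('hello.version.set')
```

Calling dispatch() once runs only install_example. After adding 'apt.installed.hello' to FLAGS (which the apt layer would do in a real charm), the next dispatch() runs set_message_hello.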

Add a mysql interface

sudo bash -c 'cat > layer-example/layer.yaml' << EOF
includes:
  - 'layer:basic'
  - 'layer:apt'
  - 'interface:mysql'
options:
  apt:
    packages:
      - hello
EOF

sudo bash -c 'cat > layer-example/metadata.yaml' << EOF
name: layer-example
summary: a very basic example charm
maintainer: hua <hua@t440p.lan>
description: |
  This is my first charm
tags:
  # https://jujucharms.com/docs/stable/authors-charm-metadata
  - misc
  - tutorials
requires:
  database:
    interface: mysql
EOF

mkdir layer-example/templates

sudo bash -c 'cat > layer-example/templates/text-file.tmpl' << EOF

DB_HOST = '{{ my_database.host() }}'

DB_NAME = '{{ my_database.database() }}'

DB_USER = '{{ my_database.user() }}'

DB_PASSWORD = '{{ my_database.password() }}'

EOF

sudo bash -c 'cat > layer-example/reactive/layer_example.py' << EOF
from charms.reactive import set_flag, when, when_not
from charmhelpers.core.hookenv import application_version_set, status_set
from charmhelpers.fetch import get_upstream_version
import subprocess as sp
from charmhelpers.core.templating import render


@when_not('example.installed')
def install_example():
    set_flag('example.installed')


@when('apt.installed.hello')
def set_message_hello():
    # Set the upstream version of hello for juju status.
    application_version_set(get_upstream_version('hello'))

    # Run hello and get the message
    message = sp.check_output('hello', stderr=sp.STDOUT)

    # Set the maintenance status with a message
    status_set('maintenance', message)

    # Signal that we know the version of hello
    set_flag('hello.version.set')


@when('database.available')
def write_text_file(mysql):
    render(source='text-file.tmpl',
           target='/root/text-file.txt',
           owner='root',
           perms=0o775,
           context={
               'my_database': mysql,
           })
    status_set('active', 'Ready: File rendered.')


@when_not('database.connected')
def missing_mysql():
    status_set('blocked', 'Please add relation to MySQL')


@when('database.connected')
@when_not('database.available')
def waiting_mysql(mysql):
    status_set('waiting', 'Waiting for MySQL')
EOF

charm proof layer-example

charm build layer-example

sudo snap install juju --classic

juju bootstrap --debug --config bootstrap-series=bionic --config agent-stream=devel localhost lxd-controller

juju add-model test

juju deploy /tmp/charm-builds/layer-example

juju deploy cs:mysql

juju add-relation layer-example mysql

$ juju ssh layer-example/0 sudo cat /root/text-file.txt

DB_HOST = '10.63.242.39'

DB_NAME = 'layer-example'

DB_USER = 'eiMogh5Ahc1aido'

DB_PASSWORD = 'bieng5Quah5aehe'

Connection to 10.63.242.196 closed.
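
To check the rendered file from a script rather than by eye, the KEY = 'value' lines can be parsed back into a dict. This is a minimal sketch that assumes the file keeps exactly the format produced by text-file.tmpl; parse_settings is a hypothetical helper, not part of charmhelpers.

```python
import re

# Matches lines such as: DB_HOST = '10.63.242.39'
_SETTING = re.compile(r"^\s*(\w+)\s*=\s*'(.*)'\s*$")


def parse_settings(text):
    """Return the KEY = 'value' pairs in text as a dict, skipping other lines."""
    settings = {}
    for line in text.splitlines():
        match = _SETTING.match(line)
        if match:
            settings[match.group(1)] = match.group(2)
    return settings
```

Lines that do not match the pattern, such as the ssh "Connection to ... closed." message, are ignored.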

Charm Store

sudo bash -c 'cat >> layer-example/layer.yaml' << EOF

repo: git@github.com:zhhuabj/layer-example.git

EOF

sudo bash -c 'cat >> layer-example/metadata.yaml' << EOF

series:

- xenial

- bionic

EOF

charm proof layer-example

charm build layer-example

charm login #https://discourse.juju.is/

cd /tmp/charm-builds/layer-example

charm push .

juju deploy cs:~zhhuabj/example-0

OSM charm

sudo snap install charm --classic

mkdir -p /bak/work/charms/layers

export JUJU_REPOSITORY=/bak/work/charms

export LAYER_PATH=$JUJU_REPOSITORY/layers

cd $LAYER_PATH

charm create simple

cd simple

sudo bash -c 'cat >layer.yaml' <<EOF
includes: ['layer:basic', 'layer:vnfproxy']
options:
  basic:
    use_venv: false
EOF

sudo bash -c 'cat >metadata.yaml' <<EOF

name: simple

summary: a simple vnf proxy charm

maintainer: hua <hua@localhost>

subordinate: false

series: ['xenial']

EOF

sudo bash -c 'cat >actions.yaml' <<EOF
touch:
  description: "Touch a file on the VNF."
  params:
    filename:
      description: "The name of the file to touch."
      type: string
      default: ""
  required:
    - filename
EOF

mkdir -p actions

sudo bash -c 'cat >actions/touch' <<EOF
#!/usr/bin/env python3
import sys
sys.path.append('lib')

from charms.reactive import main, set_flag
from charmhelpers.core.hookenv import action_fail, action_name

set_flag('actions.{}'.format(action_name()))

try:
    main()
except Exception as e:
    action_fail(repr(e))
EOF

sudo chmod +x actions/touch

# The delimiter is quoted ('EOF') so the shell does not expand the backticks in the Python comments
sudo bash -c 'cat >reactive/simple.py' <<'EOF'
from charmhelpers.core.hookenv import (
    action_get,
    action_fail,
    action_set,
    status_set,
)
from charms.reactive import (
    clear_flag,
    set_flag,
    when,
    when_not,
)
import charms.sshproxy


# Set the charm's state to active so the LCM knows it's ready to work.
@when_not('simple.installed')
def install_simple_proxy_charm():
    set_flag('simple.installed')
    status_set('active', 'Ready!')


# Define what to do when the `touch` primitive is invoked.
@when('actions.touch')
def touch():
    err = ''
    try:
        filename = action_get('filename')
        cmd = ['touch {}'.format(filename)]
        result, err = charms.sshproxy._run(cmd)
    except:
        action_fail('command failed:' + err)
    else:
        action_set({'output': result})
    finally:
        clear_flag('actions.touch')
EOF

charm build
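
The touch handler relies on try/except/else/finally so that the actions.touch flag is cleared whether the command succeeds or fails. The sketch below mirrors that control flow with a stub in place of charms.sshproxy._run, so it can be run locally. run_touch and the events list are hypothetical names used only for illustration.

```python
def run_touch(run, filename):
    """Mirror the handler's control flow and record what it would report.

    `run` stands in for charms.sshproxy._run and must return (stdout, stderr).
    """
    events = []
    try:
        result, _err = run(['touch {}'.format(filename)])
    except Exception as exc:
        # Failure path: the real handler calls action_fail() here.
        events.append(('fail', 'command failed: {}'.format(exc)))
    else:
        # Success path: the real handler calls action_set() here.
        events.append(('set', {'output': result}))
    finally:
        # Runs on both paths, like clear_flag('actions.touch').
        events.append(('clear', 'actions.touch'))
    return events
```

In both the success and the failure case the last recorded event is the flag being cleared, which is what stops the action from being re-dispatched.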

vnfd file

vnfd:vnfd-catalog:
    vnfd:
    -   id: hackfest3charmed-vnf
        name: hackfest3charmed-vnf
        vnf-configuration:
            juju:
                charm: simple
            initial-config-primitive:
            -   seq: '1'
                name: config
                parameter:
                -   name: ssh-hostname
                    value: <rw_mgmt_ip>
                -   name: ssh-username
                    value: ubuntu
                -   name: ssh-password
                    value: osm4u
            -   seq: '2'
                name: touch
                parameter:
                -   name: filename
                    value: '/home/ubuntu/first-touch'
            config-primitive:
            -   name: touch
                parameter:
                -   name: filename
                    data-type: STRING
                    default-value: '/home/ubuntu/touched'

Debug it

juju run --unit gnocchi/2 -- 'charms.reactive -p get_flags'

juju run --unit gnocchi/2 -- 'charms.reactive -p clear_flag run-default-upgrade-charm ; charms.reactive -p clear_flag ceph.create_pool.req.sent; hooks/update-status'

juju run --unit gnocchi/2 -- 'charms.reactive -p get_flags'

juju run --unit gnocchi/2 -- 'hooks/shared-db-relation-changed'

juju status

juju upgrade-charm gnocchi --revision 30

https://paste.ubuntu.com/p/qPm5dtFtz2/

juju run --unit vault/0 -- 'for flag in shared-db.connected shared-db.available shared-db.available.access_network shared-db.available.ssl; do charms.reactive -p clear_flag $flag; done'

#https://bugs.launchpad.net/juju/+bug/1891395/comments/4

juju remove-relation vault:secrets kubernetes-master:vault-kv --force

juju resolved vault/2 --no-retry #make sure there no error state

juju status --relation vault-kv #make sure all relations have been removed

juju run --unit vault/0 -- 'relation-ids shared-db'

juju add-relation vault:secrets kubernetes-master:vault-kv

juju run --unit graylog/0 -- charm-env python3.6 -c "import charms.leadership; from charmhelpers.core import host, hookenv, unitdata; print(unitdata.kv().get('nagios_token')); print(charms.leadership.leader_get('nagios_token'))"

[1] https://review.opendev.org/#/c/686584/3/src/reactive/gnocchi_handlers.py

[2] https://review.opendev.org/#/c/700125/1/src/reactive/gnocchi_handlers.py

[3] https://review.opendev.org/#/c/716545/4/src/reactive/gnocchi_handlers.py

Quickly testing juju hook-related code:

juju ssh octavia/0 -- sudo -s

cat << EOF | sudo tee /tmp/test.py
import charmhelpers.core.hookenv as hookenv

running_units = []
for u in hookenv.iter_units_for_relation_name('cluster'):
    running_units.append(u.unit)
print(running_units)
EOF

juju run --unit octavia/0 -- charm-env python3.6 /tmp/test.py

However, running /var/lib/juju/agents/unit-octavia-0/.venv/bin/python3.6 directly only works for functions such as log that do not need the charm-env environment:

/var/lib/juju/agents/unit-octavia-0/.venv/bin/python3.6 -c "import charmhelpers.core.hookenv as hookenv; hookenv.log('test');"

juju run --unit octavia/0 -- charm-env python3.6 -c "import charmhelpers.core.hookenv as hookenv; hookenv.log('test');"

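Features such as the db can also be debugged with rpdb. One caveat: if it sometimes does not take effect, a stale rpdb process is still running in the background. Kill it and rerun "juju run --unit octavia/0 -- charm-env python3.6 /tmp/test.py":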

cat << EOF | sudo tee /tmp/test.py

import charms.reactive as reactive

import charm.openstack.api_crud as api_crud

identity_service = reactive.endpoint_from_flag('identity-service.available')

# /var/lib/juju/agents/unit-octavia-0/.venv/bin/pip3.6 install rpdb

# nc 127.0.0.1 4444

import rpdb;rpdb.set_trace()

neutron_ip_list, neutron_ip_unit_map = api_crud.get_port_ips(identity_service)

print(neutron_ip_list)

EOF

#charm-env /var/lib/juju/agents/unit-octavia-0/.venv/bin/python3.6 /tmp/test.py

juju run --unit octavia/0 --timeout 35m0s -- charm-env python3.6 /tmp/test.py

More complex code can be debugged the same way: paste the method from hook.py into /tmp/test.py:

import json  # needed by json.dumps() below
import uuid

import charms.reactive as reactive
import charms.leadership as leadership
import charms_openstack.bus
import charms_openstack.charm as charm
import charms_openstack.ip as os_ip
import charmhelpers.core as ch_core
from charmhelpers.contrib.charmsupport import nrpe
import charm.openstack.api_crud as api_crud
import charm.openstack.octavia as octavia


def update_controller_ip_port_list():
    """Load state from Neutron and update ``controller-ip-port-list``."""
    identity_service = reactive.endpoint_from_flag(
        'identity-service.available')
    leader_ip_list = leadership.leader_get('controller-ip-port-list') or []
    import rpdb;rpdb.set_trace()
    try:
        neutron_ip_list, neutron_ip_unit_map = api_crud.get_port_ips(
            identity_service)
        neutron_ip_list = sorted(neutron_ip_list)
    except api_crud.APIUnavailable as e:
        ch_core.hookenv.log('Neutron API not available yet, deferring '
                            'port discovery. ("{}")'
                            .format(e),
                            level=ch_core.hookenv.DEBUG)
        return
    if neutron_ip_list != sorted(leader_ip_list):
        # delete hm port which may be caused by 'juju remove-unit <octavia>'
        missing_units = []
        running_units = []
        db_units = neutron_ip_unit_map.keys()
        for u in ch_core.hookenv.iter_units_for_relation_name('cluster'):
            running_units.append(u.unit.replace('/', '-'))
        for unit_name in db_units:
            if unit_name not in running_units:
                missing_units.append(unit_name)
        for unit_name in missing_units:
            neutron_ip_list.remove(neutron_ip_unit_map.get(unit_name))
            try:
                ch_core.hookenv.log('deleting hm port for missing '
                                    'unit {}'.format(unit_name))
                api_crud.delete_hm_port(identity_service, unit_name)
            except api_crud.APIUnavailable as e:
                ch_core.hookenv.log('Neutron API not available yet, deferring '
                                    'port discovery. ("{}")'
                                    .format(e),
                                    level=ch_core.hookenv.DEBUG)
                return
        leadership.leader_set(
            {'controller-ip-port-list': json.dumps(neutron_ip_list)})


# /var/lib/juju/agents/unit-octavia-0/.venv/bin/pip3.6 install rpdb
# nc 127.0.0.1 4444
update_controller_ip_port_list()
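
The part of this handler that is easy to get wrong is working out which units Neutron still knows about but which have left the cluster relation. That logic can be exercised without a deployment. This is a minimal sketch with plain lists and dicts standing in for the charm's data; find_missing_units and prune_ip_list are hypothetical helpers, and the IP addresses in the usage note are made up.

```python
def find_missing_units(neutron_ip_unit_map, running_units):
    """Return unit names present in the Neutron map but not running.

    Juju unit names use '/', while the map keys use '-', so normalise first.
    """
    running = {unit.replace('/', '-') for unit in running_units}
    return [name for name in neutron_ip_unit_map if name not in running]


def prune_ip_list(neutron_ip_list, neutron_ip_unit_map, missing_units):
    """Return neutron_ip_list without the IPs that belong to missing units."""
    stale = {neutron_ip_unit_map[name] for name in missing_units}
    return [ip for ip in neutron_ip_list if ip not in stale]
```

For example, with a map of {'octavia-0': 'fc00::10', 'octavia-1': 'fc00::11'} and only 'octavia/0' running, 'octavia-1' is reported as missing and its IP is dropped from the list.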

sru

The fix needs to be backported to the stable branch, e.g. https://review.opendev.org/#/c/758038

Once the stable backport is merged, it becomes available in the 20.08 charm cs:octavia-28 (when?)

another example

1, merge the code into master(openstack-charmers-next) - https://review.opendev.org/#/c/755276/

https://jaas.ai/u/openstack-charmers-next

https://jaas.ai/u/openstack-charmers-next/vault/118

https://jaas.ai/vault

hua@t440p:/bak/work/charms/vault$ git log |grep a38bf7 -A 2 |grep -E 'a38bf7|Date'

Merge: ae2d79f a38bf7c

Date: Wed Nov 4 12:36:34 2020 +0000

commit a38bf7cbd265ab46357ea7f9e99f55d126da8fb2

Date: Wed Sep 30 17:45:43 2020 +0530

2, Backport patch submitted to stable branch (eg: branch/20.10) according to https://docs.openstack.org/charm-guide/latest/release-schedule.html

3, stable backport is merged and available in 20.10 charm cs:vault-42 - https://jaas.ai/vault

4, the patch is ready to be applied. When would be a suitable time to update the Octavia charm in your environment?

But when does the charm get its revision number? Is it the moment the code is merged into the git repository, or does it take a few days? I think someone needs to trigger it -

https://docs.openstack.org/charm-guide/latest/release-policy.html

Update 20220906 -

charmstore - https://charmhub.io/containers-kubernetes-worker

charmhub - https://charmhub.io/kubernetes-worker

charmstore - https://charmhub.io/containers-kubernetes-master

charmhub - https://charmhub.io/kubernetes-control-plane

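Releases have now moved to the charmhub mechanism. Previously a branch such as stable/21.10 was released every 3 months, and sometimes you could ping somebody to publish manually. Now the branch is stable/yoga and, like upstream, it is released every 6 months. Only the latest code has an edge channel (latest/edge, the master branch); other versions have only stable. Once a patch lands in a charm stable branch, it is published to charmhub automatically through the charmhub migration mechanism.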

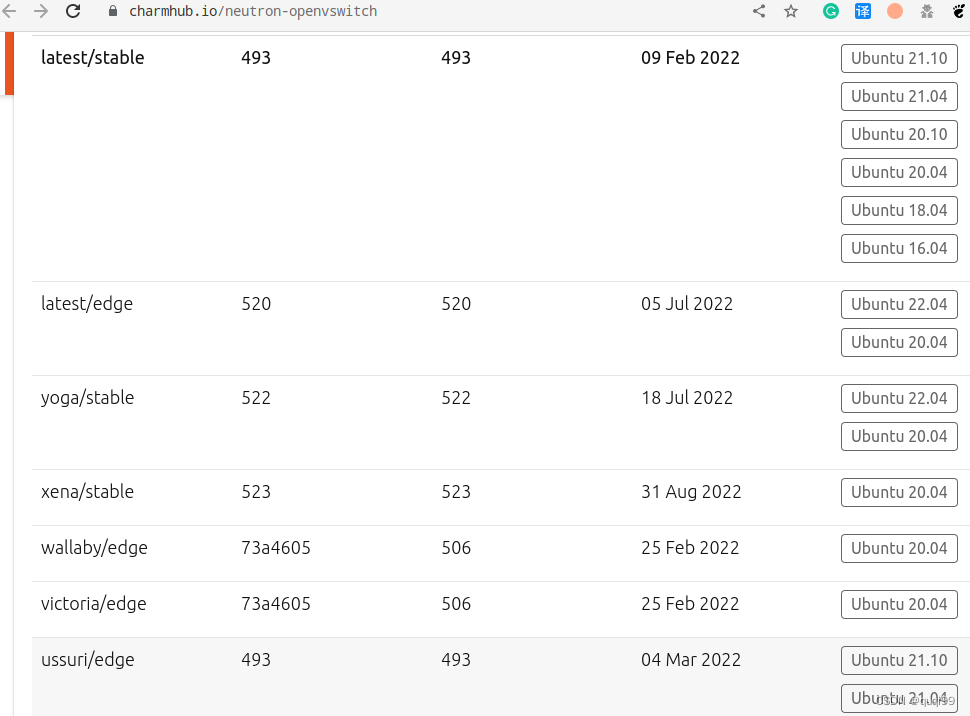

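However, the charmhub migration is not fully complete yet. As the figure shows, only Xena and later releases (xena, yoga) are done; wallaby, victoria and ussuri, which precede xena, are not (once the charmhub migration is complete, a page such as https://charmhub.io/neutron-openvswitch shows only stable, with no edge). So:

- For xena and yoga, which have completed the charmhub migration, a fix that lands in the charm stable branch should reach charmhub automatically

- For W, V and U, which have not yet completed the charmhub migration, we have to ask when the fix will reach the stable channel of W, V and U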

With charmhub, for some unknown reason downloading the code with charm pull fails:

$ charm pull ch:neutron-openvswitch --channel ussuri/edge

ERROR cannot get archive: Invalid channel: ussuri/edge

It now seems that charm pull

In the end the following was used as a workaround:

juju download neutron-openvswitch --channel ussuri/edge --filepath neutron-openvswitch.charm

unzip -q neutron-openvswitch.charm -d neutron-openvswitch/

cd neutron-openvswitch/ && zip -rq ../neutron-openvswitch.charm . && cd ..
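
A .charm file is a zip archive, so the unzip/zip round trip above can also be scripted. This is a minimal sketch using only the Python standard library; unpack_charm and repack_charm are hypothetical helper names, and the paths are whatever you pass in.

```python
import os
import zipfile


def unpack_charm(archive_path, dest_dir):
    """Extract a .charm (zip) archive into dest_dir."""
    with zipfile.ZipFile(archive_path) as zf:
        zf.extractall(dest_dir)


def repack_charm(src_dir, archive_path):
    """Zip the contents of src_dir so that paths are relative to src_dir."""
    with zipfile.ZipFile(archive_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(src_dir):
            for name in files:
                full = os.path.join(root, name)
                zf.write(full, os.path.relpath(full, src_dir))
```

One caveat: zipfile.extractall does not restore Unix permission bits, so executable hooks lose their mode on extraction. For a charm that will be redeployed, the unzip/zip commands above are the safer choice.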

Update 20201110 - nagios/nrpe/ntp charm flow

/etc/nagios/nrpe.d/check_ntpmon.cfg is created by the nrpe subordinate charm in render_nrpe_check_config.

# nrpe subordinate charm has a nrpe-external-master relation

juju add-relation nrpe:nrpe-external-master kubernetes-worker:nrpe-external-master

juju add-relation nrpe:monitors nagios:monitors

# subordinate charm ntp deployed to the same principal (kubernetes-worker) also has a nrpe-external-master relation

juju add-relation kubernetes-worker ntp

juju add-relation nrpe:nrpe-external-master ntp:nrpe-external-master

ntp and nrpe are both subordinate charms of kubernetes-worker, so after running the "juju add-relation nrpe:nrpe-external-master ntp:nrpe-external-master" above, the nrpe subordinate charm also collects the check_ntpmon monitor of the ntp subordinate charm and writes it to /etc/nagios/nrpe.d/check_ntpmon.cfg through render_nrpe_check_config

$ juju run --unit nrpe/1 "relation-ids nrpe-external-master"

nrpe-external-master:18

nrpe-external-master:24

$ juju run --unit nrpe/1 "relation-list -r nrpe-external-master:18"

kubernetes-worker/0

$ juju run --unit nrpe/1 "relation-list -r nrpe-external-master:24"

ntp/1

$ juju run --unit nrpe/1 "relation-get -r nrpe-external-master:18 - kubernetes-worker/0"

egress-subnets: 10.5.2.192/32
ingress-address: 10.5.2.192
monitors: |
  monitors:
    remote:
      nrpe:
        node:
          command: check_node
        snap.kube-proxy.daemon:
          command: check_snap.kube-proxy.daemon
        snap.kubelet.daemon:
          command: check_snap.kubelet.daemon
primary: "True"
private-address: 10.5.2.192

$ juju run --unit nrpe/1 "relation-get -r nrpe-external-master:24 - ntp/1"

egress-subnets: 10.5.2.192/32
ingress-address: 10.5.2.192
monitors: |
  monitors:
    remote:
      nrpe:
        ntpmon:
          command: check_ntpmon
primary: "False"
private-address: 10.5.2.192

class MonitorsRelation(helpers.RelationContext):
    ...

    def get_monitors(self):
        all_monitors = Monitors()
        monitors = [
            self.get_principal_monitors(),
            self.get_subordinate_monitors(),
            self.get_user_defined_monitors(),
        ]
        for mon in monitors:
            all_monitors.add_monitors(mon)
        return all_monitors


def render_nrpe_check_config(checkctxt):
    """Write nrpe check definition."""
    # Only render if we actually have cmd parameters
    if checkctxt["cmd_params"]:
        render(
            "nrpe_command.tmpl",
            "/etc/nagios/nrpe.d/{}.cfg".format(checkctxt["cmd_name"]),
            checkctxt,
        )

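This races with the following upgrade path, which is what causes the problem.

ntp includes layer-ntpmon, so /opt/ntpmon-ntp-charm/check_ntpmon.cfg exists first

After the ntp upgrade, update_nrpe_config is called. It first calls charmhelpers' copy_nrpe_checks to write /opt/ntpmon-ntp-charm/check_ntpmon.cfg to /usr/local/lib/nagios/plugins/check_ntpmon.py

Then update_nrpe_config goes on to call NRPE#add_check in charmhelpers, then NRPE#write, and finally Check#write, which tries to write /etc/nagios/nrpe.d/check_ntpmon.cfg, finds that the path does not exist, and raises an error.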

1, check_ntpmon.cfg is from layer-ntpmon (ntp charm includes it) and is copied to /opt/ntpmon-ntp-charm/check_ntpmon.cfg [3]

hua@t440p:/bak/work/charms/ntp$ grep -r 'options' layer.yaml -A 2

options:
  ntpmon:
    install-dir: /opt/ntpmon-ntp-charm

# https://git.launchpad.net/ntpmon/tree/reactive/ntpmon.py#n72
@when_not('ntpmon.installed')
def install_ntpmon():
    install_dir = layer.options.get('ntpmon', 'install-dir')
    if install_dir:
        host.mkdir(os.path.dirname(install_dir))
        host.rsync('src/', '{}/'.format(install_dir))

2, ntp's upgrade-charm will set nrpe-external-master.available so then update_nrpe_config is called

258 @when_all('nrpe-external-master.available', 'ntpmon.installed')
259 @when_not('ntp.nrpe.configured')
260 def update_nrpe_config():
261     options = layer.options('ntpmon')
262     if options is None or 'install-dir' not in options:
263         return
265     nrpe.copy_nrpe_checks(nrpe_files_dir=options['install-dir'])

3, charmhelper's copy_nrpe_checks will copy /opt/ntpmon-ntp-charm/check_ntpmon.cfg to /usr/local/lib/nagios/plugins/check_ntpmon.py

def copy_nrpe_checks(nrpe_files_dir=None):
    ...
    NAGIOS_PLUGINS = '/usr/local/lib/nagios/plugins'
    for fname in glob.glob(os.path.join(nrpe_files_dir, "check_*")):
        if os.path.isfile(fname):
            shutil.copy2(fname, os.path.join(NAGIOS_PLUGINS, os.path.basename(fname)))

4, then update_nrpe_config continues to run charmhelper NRPE's add_check,

258 @when_all('nrpe-external-master.available', 'ntpmon.installed')
259 @when_not('ntp.nrpe.configured')
260 def update_nrpe_config():
...
279     check_cmd = os.path.join(options['install-dir'], 'check_ntpmon.py') + ' --check ' + ' '.join(nagios_ntpmon_checks)
280     unitdata.kv().set('check_cmd', check_cmd)
281     nrpe_setup.add_check(
282         check_cmd=check_cmd,
283         description='Check NTPmon {}'.format(current_unit),
284         shortname="ntpmon",
285     )
286     nrpe_setup.write()

here check_cmd should be:

#juju config ntp nagios_ntpmon_checks

/opt/ntpmon-ntp-charm/check_ntpmon.py --check offset peers reach sync proc vars

We also saw the following content in the sosreport, so this part is fine.

# check ntpmon

# The following header was added automatically by juju

# Modifying it will affect nagios monitoring and alerting

# servicegroups: juju

command[check_ntpmon]=/opt/ntpmon-ntp-charm/check_ntpmon.py --check offset peers reach sync proc vars

5, then update_nrpe_config continues to run charmhelper NRPE's write,

class NRPE(object):
    ...
    nrpe_confdir = '/etc/nagios/nrpe.d'

    def write(self):
        ...
        # check that the charm can write to the conf dir. If not, then nagios
        # probably isn't installed, and we can defer.
        if not self.does_nrpe_conf_dir_exist():
            return
        for nrpecheck in self.checks:
            nrpecheck.write(self.nagios_context, self.hostname, self.nagios_servicegroups)

6, charmhelpers NRPE's write invokes Check's write.

class Check(object):
    ...

    def _get_check_filename(self):
        return os.path.join(NRPE.nrpe_confdir, '{}.cfg'.format(self.command))

    def write(self, nagios_context, hostname, nagios_servicegroups):
        nrpe_check_file = self._get_check_filename()
        with open(nrpe_check_file, 'w') as nrpe_check_config:
            ...
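
Step 6 ends in open(nrpe_check_file, 'w'), and open() in write mode creates the file but not its parent directory. The sketch below shows that failure mode and a directory guard in the spirit of the does_nrpe_conf_dir_exist() check in step 5. It is illustrative only and is not the charmhelpers code: write_check_unguarded and write_check are hypothetical names, and conf_dir is whatever directory you pass in.

```python
import os


def write_check_unguarded(conf_dir, command, body):
    """Write <conf_dir>/<command>.cfg; raises FileNotFoundError if conf_dir is absent."""
    path = os.path.join(conf_dir, '{}.cfg'.format(command))
    with open(path, 'w') as fh:
        fh.write(body)
    return path


def write_check(conf_dir, command, body):
    """Same as above, but defer (return None) when conf_dir does not exist yet."""
    if not os.path.isdir(conf_dir):
        return None
    return write_check_unguarded(conf_dir, command, body)
```

With a missing directory, the unguarded version raises the same FileNotFoundError class seen in the unit log, while the guarded version returns None and leaves the write for a later hook run.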

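With the mechanism understood, the problem can be reproduced with the following commands (running "juju upgrade-charm ntp --revision 41" directly sometimes does not trigger it, for reasons unknown)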

juju remove-relation nrpe:nrpe-external-master ntp:nrpe-external-master

juju run --unit ntp/2 -- 'charms.reactive -p clear_flag ntp.installed ; charms.reactive -p set_flag nrpe-external-master.available; hooks/update-status'

==> /var/log/juju/unit-ntp-2.log <==

2020-11-10 12:21:05 INFO juju-log Invoking reactive handler: reactive/ntp.py:112:install

2020-11-10 12:21:06 INFO juju-log Installing ['chrony'] with options: ['--option=Dpkg::Options::=--force-confold']

2020-11-10 12:21:06 INFO juju-log Installing ['facter', 'ntpdate', 'python3-psutil', 'virt-what'] with options: ['--option=Dpkg::Options::=--force-confold']

2020-11-10 12:21:09 INFO juju-log Invoking reactive handler: reactive/ntp.py:179:config_changed

2020-11-10 12:21:09 INFO juju-log Invoking reactive handler: reactive/ntp.py:258:update_nrpe_config

2020-11-10 12:21:09 ERROR juju-log Hook error:

Traceback (most recent call last):

File "/var/lib/juju/agents/unit-ntp-2/.venv/lib/python3.6/site-packages/charms/reactive/__init__.py", line 74, in main

bus.dispatch(restricted=restricted_mode)

File "/var/lib/juju/agents/unit-ntp-2/.venv/lib/python3.6/site-packages/charms/reactive/bus.py", line 390, in dispatch

_invoke(other_handlers)

File "/var/lib/juju/agents/unit-ntp-2/.venv/lib/python3.6/site-packages/charms/reactive/bus.py", line 359, in _invoke

handler.invoke()

File "/var/lib/juju/agents/unit-ntp-2/.venv/lib/python3.6/site-packages/charms/reactive/bus.py", line 181, in invoke

self._action(*args)

File "/var/lib/juju/agents/unit-ntp-2/charm/reactive/ntp.py", line 286, in update_nrpe_config

nrpe_setup.write()

File "/var/lib/juju/agents/unit-ntp-2/.venv/lib/python3.6/site-packages/charmhelpers/contrib/charmsupport/nrpe.py", line 315, in write

self.nagios_servicegroups)

File "/var/lib/juju/agents/unit-ntp-2/.venv/lib/python3.6/site-packages/charmhelpers/contrib/charmsupport/nrpe.py", line 196, in write

with open(nrpe_check_file, 'w') as nrpe_check_config:

FileNotFoundError: [Errno 2] No such file or directory: '/etc/nagios/nrpe.d/check_ntpmon.cfg'

Only ntp/2 on nagios/0 is in the error state, while the other ntp units are active. This is clearly caused by the missing relation (juju add-relation nrpe:nrpe-external-master ntp:nrpe-external-master).

nagios/0* active idle 2 10.5.2.4 80/tcp ready

ntp/2* error idle 10.5.2.4 123/udp chrony: Ready

So to recover, first add the relation back with "juju add-relation nrpe:nrpe-external-master ntp:nrpe-external-master". Afterwards nrpe-external-master:24 becomes nrpe-external-master:25

$ juju run --unit nrpe/1 "relation-get -r nrpe-external-master:25 - ntp/1"

egress-subnets: 10.5.2.192/32
ingress-address: 10.5.2.192
monitors: |
  monitors:
    remote:
      nrpe:
        ntpmon:
          command: check_ntpmon
primary: "False"
private-address: 10.5.2.192

Finally, run the following commands to clear the error state.

juju run --unit ntp/2 -- 'charms.reactive -p clear_flag nrpe-external-master.available; hooks/update-status'

juju resolved ntp/2

Environment setup:

juju add-model k8s stsstack/stsstack

juju deploy kubernetes-core

juju deploy nagios

juju deploy nrpe

juju deploy cs:ntp-39

juju expose nagios

juju add-relation nagios:monitors nrpe:monitors

juju add-relation nrpe:nrpe-external-master kubernetes-master:nrpe-external-master

juju add-relation nrpe:nrpe-external-master kubernetes-worker:nrpe-external-master

juju add-relation nrpe:nrpe-external-master etcd:nrpe-external-master

juju add-relation nrpe:general-info easyrsa:juju-info

juju add-relation easyrsa:juju-info ntp:juju-info

juju add-relation kubernetes-worker:juju-info ntp:juju-info

juju add-relation nagios:juju-info ntp:juju-info

juju add-relation nrpe:nrpe-external-master ntp:nrpe-external-master

juju upgrade-charm ntp --revision 41

juju run --unit ntp/2 -- 'charms.reactive -p clear_flag ntp.installed ; charms.reactive -p set_flag nrpe-external-master.available; hooks/update-status'

juju add-model nagios stsstack/stsstack

juju deploy ubuntu

juju deploy nrpe

juju deploy nagios

juju deploy cs:ntp-39

juju add-relation ubuntu nrpe

juju add-relation nrpe:monitors nagios:monitors

juju add-relation ubuntu ntp

juju add-relation nagios:juju-info ntp:juju-info

juju add-relation nrpe:nrpe-external-master ntp:nrpe-external-master

juju upgrade-charm ntp --revision 41

Update 20201114 - how to get the cert/key from vault

export VAULT_ADDR="http://10.5.0.27:8200"

vault status

curl -s -H "X-Vault-Token:$VAULT_TOKEN" http://localhost:8200/v1/$pki_backend/certs/pem

$ juju run --unit nova-cloud-controller/0 "relation-list -r $(juju run --unit nova-cloud-controller/0 "relation-ids certificates")"

vault/0

juju run --unit nova-cloud-controller/0 "relation-get -r $(juju run --unit nova-cloud-controller/0 "relation-ids certificates") - vault/0" |grep -E 'cert|key|ca'

juju run --unit vault/0 -- charm-env python3.6 -c "import charms.leadership; from charmhelpers.core import host, hookenv, unitdata; print(unitdata.kv().get('charm.vault.global-client-cert'));"

# charm-nova-cloud-controller/blob/master/charmhelpers/contrib/openstack/cert_utils.py#install_certs is used to install cert/key

vim vault/src/reactive/vault_handlers.py#publish_global_client_cert

def publish_global_client_cert():
    ...
    bundle = vault_pki.generate_certificate('client', 'global-client', [], ttl, max_ttl)
    unitdata.kv().set('charm.vault.global-client-cert', bundle)
    ...
    tls.set_client_cert(bundle['certificate'], bundle['private_key'])

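Update 20201114 - error during a k8s upgrade

During the upgrade k8s ends up with two client_tokens. The logic below leaves some masters with the old token and some with the new one, so the workers also end up with a mix of old and new tokens, and some of them fail while others work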

juju run --unit kubernetes-master/N 'relation-get -r $(relation-ids kube-control) - kubernetes-master/N' for /0 and /4

juju run --unit kubernetes-master/0 'relation-get -r $(relation-ids kube-control) - kubernetes-master/0' |grep client_token

juju run --unit kubernetes-master/1 'relation-get -r $(relation-ids kube-control) - kubernetes-master/1' |grep client_token

This is the complete code path, for further diagnosis:

1, kubernetes-master leader will invoke create_tokens_and_sign_auth_requests to generate client_token and then call 'kube_control.sign_auth_request', while non-leader won't do it.

if kubelet_token and proxy_token and client_token:
    kube_control.sign_auth_request(request[0], username,
                                   kubelet_token, proxy_token,
                                   client_token)

https://github.com/freyes/charm-kubernetes-master/blob/master/reactive/kubernetes_master.py#L1273

2, interface-kube-control's sign_auth_request will update 'creds' for all relations like kubernetes-worker

https://github.com/juju-solutions/interface-kube-control/blob/master/provides.py#L95

3, interface-kube-control will set the flag kube-control.auth.available after 'creds' is set

https://github.com/juju-solutions/interface-kube-control/blob/master/requires.py#L37

4, kubernetes-worker will run start_worker if kube-control.auth.available is set

https://github.com/charmed-kubernetes/charm-kubernetes-worker/blob/master/reactive/kubernetes_worker.py#L592

and check if creds is changed and run create_config

creds = db.get('credentials')

data_changed('kube-control.creds', creds)

create_config(servers[get_unit_number() % len(servers)], creds)

https://github.com/charmed-kubernetes/charm-kubernetes-worker/blob/master/reactive/kubernetes_worker.py#L621

5, kubernetes_worker will call kube_control.get_auth_credentials when kube-control.connected is set

@when('kube-control.connected')
def catch_change_in_creds(kube_control):
    """Request a service restart in case credential updates were detected."""
    nodeuser = 'system:node:{}'.format(get_node_name().lower())
    creds = kube_control.get_auth_credentials(nodeuser)

https://github.com/charmed-kubernetes/charm-kubernetes-worker/blob/master/reactive/kubernetes_worker.py#L1205

6, get_auth_credentials gets creds from all units, leader and non-leader alike

def get_auth_credentials(self, user):
    rx = {}
    for unit in self.all_joined_units:
        rx.update(unit.received.get('creds', {}))

https://github.com/juju-solutions/interface-kube-control/blob/master/requires.py#L57-L58
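
The rx.update() loop in step 6 is a last-writer-wins merge, which is how a stale token from a non-leader can end up on a worker. The sketch below reproduces just that merge with plain dicts. The data shape (a 'creds' mapping keyed by user name with a client_token field), the token values and the merge_creds helper are assumptions made for illustration, not the interface's real payload.

```python
def merge_creds(units):
    """Merge each unit's 'creds' mapping; later units overwrite earlier ones."""
    rx = {}
    for unit in units:
        rx.update(unit.get('creds', {}))
    return rx


# Hypothetical relation data for one worker user on two master units.
USER = 'system:node:worker-0'
leader = {'creds': {USER: {'client_token': 'new-token'}}}
stale_follower = {'creds': {USER: {'client_token': 'old-token'}}}
```

merge_creds([leader, stale_follower]) yields the old token and merge_creds([stale_follower, leader]) yields the new one, so the result depends purely on unit iteration order.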

Should we run:

juju run --unit kubernetes-master/0 "relation-set --format=json -r kube-control:<ID> creds='admin::gQL9NVtbxY1GCAyIg2xc36JwZ1sHOXm9'"

juju run --unit kubernetes-master/5 "relation-set --format=json -r kube-control:<ID> creds='admin::gQL9NVtbxY1GCAyIg2xc36JwZ1sHOXm9'"

Or clear it instead?

juju run --unit kubernetes-master/0 "relation-set --format=json -r kube-control:<ID> creds=''"

juju run --unit kubernetes-master/5 "relation-set --format=json -r kube-control:<ID> creds=''"

workaround can be, (see - https://bugs.launchpad.net/charm-kubernetes-master/+bug/1905058 )

juju run -u kubernetes-master/leader 'for i in `kubectl --kubeconfig /root/.kube/config get -n kube-system secrets --field-selector type=juju.is/token-auth | grep -o .*-token-auth`; do echo user: $i; kubectl --kubeconfig /root/.kube/config get -n kube-system secrets/$i --template=dG9rZW46IA=={{.data.password}} | base64 -d; echo; echo; done'

final fix - https://bugs.launchpad.net/charm-kubernetes-master/+bug/1905058

The upgrade procedure is as follows:

# Upgrade containerd charm

$ juju upgrade-charm containerd --revision 88

# Upgrade etcd charm

If you want you can make an additional backup of etcd:

$ juju run-action etcd/0 snapshot --wait

$ juju scp etcd/0:/home/ubuntu/etcd-snapshots/<filename>.tar.gz .

Perform the upgrade

$ juju upgrade-charm etcd --revision 531

# Upgrade easyrsa charm

$ juju upgrade-charm easyrsa --revision 325

# Upgrade canal charm

$ juju upgrade-charm canal --revision 736

# Upgrade kubernetes-master charm

$ juju upgrade-charm kubernetes-master --revision 865

# Upgrade kubernetes-worker charm

$ juju upgrade-charm kubernetes-worker --revision 692

# Make a backup

# Set kubernetes-master to the 1.18 channel

juju config kubernetes-master channel=1.18/stable

# Upgrade kubernetes-master units, one at a time

juju run-action kubernetes-master/0 upgrade

juju run-action kubernetes-master/3 upgrade

juju run-action kubernetes-master/4 upgrade

# Set kubernetes-worker to the 1.18 channel

juju config kubernetes-worker channel=1.18/stable

# Upgrade kubernetes-worker units, one at a time

juju run-action kubernetes-worker/0 upgrade

juju run-action kubernetes-worker/1 upgrade

juju run-action kubernetes-worker/2 upgrade

juju run-action kubernetes-worker/3 upgrade

juju config etcd channel="3.4/stable"

juju upgrade-charm openstack-dashboard --revision 308

juju upgrade-charm keystone --revision 319

Once we have upgraded to 1.18, if we still have time, I would recommend that we upgrade some additional charms to take advantage of some bug fixes.

# Make a backup

# Upgrade containerd charm

$ juju upgrade-charm containerd --revision 94

# Upgrade etcd charm

$ juju upgrade-charm etcd --revision 540

# Upgrade easyrsa charm

$ juju upgrade-charm easyrsa --revision 333

# Upgrade canal charm

$ juju upgrade-charm canal --revision 741

# Upgrade kubernetes-master charm

$ juju upgrade-charm kubernetes-master --revision 891

# Upgrade kubernetes-worker charm

$ juju upgrade-charm kubernetes-worker --revision 704

Update 20220722 - see: https://bugs.launchpad.net/vault-charm/+bug/1940549

https://review.opendev.org/c/openstack/charm-vault/+/842144

Since vault/0 is the leader, we should run it on vault/1 and vault/2; according to the existing data the relation ID should be certificates:26.

1, on vault/1

juju run -u vault/1 "relation-set -r certificates:26

kubernetes-master_0.server.key='' kubernetes-master_0.server.cert='' kubernetes-master_0.processed_client_requests=''

kubernetes-master_1.server.key='' kubernetes-master_1.server.cert='' kubernetes-master_1.processed_client_requests=''

kubernetes-master_2.server.key='' kubernetes-master_2.server.cert='' kubernetes-master_2.processed_client_requests=''

"

then verify if the stale data has been cleared.

juju run --unit kubernetes-master/0 "relation-get -r $(juju run --unit kubernetes-master/0 "relation-ids certificates") - vault/1"

juju run --unit kubernetes-master/1 "relation-get -r $(juju run --unit kubernetes-master/1 "relation-ids certificates") - vault/1"

juju run --unit kubernetes-master/2 "relation-get -r $(juju run --unit kubernetes-master/2 "relation-ids certificates") - vault/1"

2, on vault/2

juju run -u vault/2 "relation-set -r certificates:26

kubernetes-master_0.server.key='' kubernetes-master_0.server.cert='' kubernetes-master_0.processed_client_requests=''

kubernetes-master_1.server.key='' kubernetes-master_1.server.cert='' kubernetes-master_1.processed_client_requests=''

kubernetes-master_2.server.key='' kubernetes-master_2.server.cert='' kubernetes-master_2.processed_client_requests=''

"

then verify if the stale data has been cleared.

juju run --unit kubernetes-master/0 "relation-get -r $(juju run --unit kubernetes-master/0 "relation-ids certificates") - vault/2"

juju run --unit kubernetes-master/1 "relation-get -r $(juju run --unit kubernetes-master/1 "relation-ids certificates") - vault/2"

juju run --unit kubernetes-master/2 "relation-get -r $(juju run --unit kubernetes-master/2 "relation-ids certificates") - vault/2"
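The manual relation-set commands above follow one pattern per remote unit, so the command string can be generated. The following is a minimal sketch, assuming the unit names and relation ID are already known; the function name build_relation_set_command is hypothetical, and the sketch only builds the string without running juju.

```python
def build_relation_set_command(vault_unit, relation_id, remote_units):
    """Return a `juju run` command that blanks the stale certificate keys."""
    parts = []
    for unit in remote_units:
        # kubernetes-master/0 -> kubernetes-master_0, as in the manual commands
        node = unit.replace("/", "_")
        parts.append(
            f"{node}.server.key='' {node}.server.cert='' "
            f"{node}.processed_client_requests=''"
        )
    settings = " ".join(parts)
    return f'juju run -u {vault_unit} "relation-set -r {relation_id} {settings}"'


if __name__ == "__main__":
    print(build_relation_set_command(
        "vault/1",
        "certificates:26",
        ["kubernetes-master/0", "kubernetes-master/1", "kubernetes-master/2"],
    ))
```

Printing the command first and pasting it by hand keeps a human in the loop before relation data is changed.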

Or use a script:

for vault in `juju status vault | grep "8200" | grep -v '*' | awk '{print $1}'`; do echo -e "

Slave Vault : $vault Commands

"; for i in `juju run --unit $vault -- relation-ids certificates` ;do CMD="juju run -u $vault \"relation-set -r $i "; for rel in `juju run --unit $vault -- relation-list -r $i` ; do node=`echo $rel | tr '/' '_ ' `; CMD="$CMD $node.server.key='' $node.server.cert='' $node.processed_client_requests='' " ;done ; CMD="$CMD \" " ;echo -e "## $CMD

" ; done ;done

appendix - relation script

#!/bin/bash

# eg: ./relations.sh mongodb/9

# Usage: ./get_all_relations_info.sh <app_or_app/unit>

# Example: ./get_all_relations_info.sh mysql # This will default to "mysql/0"

# Example: ./get_all_relations_info.sh keystone/1

APP=`echo ${1} | awk -F\/+ '{print $1}'`

UNIT=`echo ${1} | awk -F\/+ '{print $2}'`

[ -z "$UNIT" ] && UNIT=0

for r in `juju show-application ${APP} | grep endpoint-bindings -A999 | tail -n +3 | awk -F\: '{print $1}' | sort`

do

for i in `juju run --unit ${APP}/${UNIT} "relation-ids ${r}" | awk -F\: '{print $2}' | sort`

do

echo "==========================================="

echo "RELATION INFO FOR ${APP}/${UNIT} - ${r}:${i}"

echo ""

juju run --unit ${APP}/${UNIT} "relation-get -r ${r}:${i} - ${APP}/${UNIT}" | sort

echo "==========================================="

done

done

exit 0
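The argument handling at the top of the script (an application name with an optional unit number that defaults to 0) can be sketched in Python. This is only an illustration of that parsing step, not part of the original script; the function name split_target is hypothetical.

```python
def split_target(target):
    """Split 'app' or 'app/unit' into (app, unit), defaulting the unit to '0'."""
    app, _, unit = target.partition("/")
    return app, unit or "0"


if __name__ == "__main__":
    for arg in ("mysql", "keystone/1"):
        app, unit = split_target(arg)
        print(f"{arg} -> {app}/{unit}")
```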

20210324 - Analysis of a piece of juju source code

Units have no leader - https://bugs.launchpad.net/juju/+bug/1810331

1, SetUpgradeSeriesStatus will set 'upgrade series' status in the remote state.

SetUpgradeSeriesStatus sets the upgrade series status of the unit in the remote state.

SetSeriesStatusUpgrade is intended to give the uniter a chance to

upgrade the status of a running series upgrade before or after

upgrade series hook code completes and, for display purposes, to

supply a reason as to why it is making the change.

pre-series-upgrade | post-series-upgrade | commit

-> juju/worker/uniter/operation/runhook.go(runHook->callbacks)

-> juju/worker/uniter/op_callbacks.go#SetUpgradeSeriesStatus

-> juju/worker/uniter/agent.go#setUpgradeSeriesStatus(u.unit.xxx)

-> juju/api/uniter/unit.go#SetUpgradeSeriesStatus(u.state.xxx)

-> upgradeseries.go#SetUpgradeSeriesUnitStatus

-> juju/api/base/caller.go#FacadeCall

2, leader.go#RemoteStateChanged will be called when the remote state changed during execution of the operation.

RemoteStateChanged returns a channel which is signalled

whenever the remote state is changed.

juju/worker/uniter/uniter.go#loop(case <-watcher.RemoteStateChanged())

juju/worker/uniter/remotestate/interface.go#RemoteStateChanged()(<-chan struct{})

-> ./juju/worker/uniter/operation/leader.go#RemoteStateChanged

3, Two units can monitor the remote change of 'no upgrade series in progress'

$ grep -r 'remotestate' var/log/juju/unit-mongodb-* |grep upgrade

var/log/juju/unit-mongodb-20.log:2021-03-15 08:41:58 DEBUG juju.worker.uniter.remotestate watcher.go:534 got upgrade series change

var/log/juju/unit-mongodb-20.log:2021-03-15 08:41:58 DEBUG juju.worker.uniter.remotestate watcher.go:669 no upgrade series in progress, reinitializing local upgrade series state

20210427 - A problem - cannot quickly debug a reactive hook

I added a cluster-relation-departed hook in octavia#src/reactive/octavia_handlers.py

@reactive.hook('cluster-relation-departed')

def cluster_departed():

ch_core.hookenv.log('xxxx', level=hookenv.DEBUG)

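Then I tried to debug it with "juju run --unit octavia/leader hooks/cluster-relation-departed", but no log appeared; the hook handler did not seem to be entered at all.

I then rebuilt the charm as follows instead of patching the code in place as before, but the problem remained: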

export JUJU_REPOSITORY=/home/ubuntu/charms_rewrite

mkdir -p /home/ubuntu/charms_rewrite && cd $JUJU_REPOSITORY

mkdir -p interfaces; mkdir -p layers/builds;

export CHARM_INTERFACES_DIR=$JUJU_REPOSITORY/interfaces

export CHARM_LAYERS_DIR=$JUJU_REPOSITORY/layers

export CHARM_BUILD_DIR=$JUJU_REPOSITORY/layers/builds

cd $CHARM_LAYERS_DIR

git clone https://github.com/openstack/charm-octavia.git octavia

cd octavia && patch -p1 < diff

cd ..

charm build octavia/src/

cd $CHARM_BUILD_DIR

juju deploy ./octavia

# juju upgrade-charm octavia --debug --show-log --verbose --path /home/ubuntu/charms_rewrite/layers/builds/octavia

Now running "juju remove-unit octavia/5" produces the following log on octavia/1 (the leader):

2021-04-27 10:20:32 WARNING cluster-relation-departed Traceback (most recent call last):

2021-04-27 10:20:32 WARNING cluster-relation-departed File "/var/lib/juju/agents/unit-octavia-1/charm/hooks/cluster-relation-departed", line 22, in <module>

2021-04-27 10:20:32 WARNING cluster-relation-departed main()

2021-04-27 10:20:32 WARNING cluster-relation-departed File "/var/lib/juju/agents/unit-octavia-1/.venv/lib/python3.6/site-packages/charms/reactive/__init__.py", line 74, in main

2021-04-27 10:20:32 WARNING cluster-relation-departed bus.dispatch(restricted=restricted_mode)

2021-04-27 10:20:32 WARNING cluster-relation-departed File "/var/lib/juju/agents/unit-octavia-1/.venv/lib/python3.6/site-packages/charms/reactive/bus.py", line 379, in dispatch

2021-04-27 10:20:32 WARNING cluster-relation-departed _invoke(hook_handlers)

2021-04-27 10:20:32 WARNING cluster-relation-departed File "/var/lib/juju/agents/unit-octavia-1/.venv/lib/python3.6/site-packages/charms/reactive/bus.py", line 359, in _invoke

2021-04-27 10:20:32 WARNING cluster-relation-departed handler.invoke()

2021-04-27 10:20:32 WARNING cluster-relation-departed File "/var/lib/juju/agents/unit-octavia-1/.venv/lib/python3.6/site-packages/charms/reactive/bus.py", line 181, in invoke

2021-04-27 10:20:32 WARNING cluster-relation-departed self._action(*args)

2021-04-27 10:20:32 WARNING cluster-relation-departed TypeError: cluster_departed() takes 0 positional arguments but 1 was given

2021-04-27 10:20:32 ERROR juju.worker.uniter.operation runhook.go:136 hook "cluster-relation-departed" (via explicit, bespoke hook script) failed: exit status 1

2021-04-27 10:20:32 INFO juju.worker.uniter resolver.go:143 awaiting error resolution for "relation-departed" hook
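The last traceback frames show the dispatcher calling the handler as self._action(*args) with one positional argument, which a zero-argument function rejects. The following self-contained sketch reproduces that failure without charms.reactive; invoke and both handler names are stand-ins for illustration, and the placeholder object is only an assumption about what is passed in.

```python
def invoke(action, args):
    """Call a handler the way the traceback shows: action(*args)."""
    return action(*args)


def cluster_departed_no_args():
    return "handled"


def cluster_departed(*args):
    # Accept (and ignore) whatever the dispatcher passes in.
    return "handled"


if __name__ == "__main__":
    relation = object()  # placeholder for the argument supplied by the dispatcher
    try:
        invoke(cluster_departed_no_args, [relation])
    except TypeError as exc:
        print("fails:", exc)
    print("works:", invoke(cluster_departed, [relation]))
```

Giving the handler a parameter (or *args) is enough to get past this particular TypeError; whether the handler then does what is wanted is a separate question.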

20210428 - How to add a hook to a reactive charm

Originally I wanted to add a cluster-relation-departed hook in $octavia/src/reactive/octavia_handlers.py

@reactive.hook("cluster-relation-departed")

def cluster_departed():

ch_core.hookenv.log('xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx', level=hookenv.DEBUG)

But none of the following commands made the log above appear.

juju run --application octavia is-leader

juju run --unit octavia/leader hooks/cluster-relation-departed

juju remove-unit octavia/xx #also try it

Switching to the traditional hooks.hook did not work either.

hooks = ch_core.hookenv.Hooks()

@hooks.hook("cluster-relation-departed")

def cluster_departed():

ch_core.hookenv.log('xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx', level=hookenv.DEBUG)

Changing it to the following did not work either.

@reactive.when('endpoint.cluster.departed')

def cluster_departed():

ch_core.hookenv.log('xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx', level=hookenv.DEBUG)

From 'juju status octavia --relations' we can see that the octavia charm relies on the openstack-ha interface to provide octavia:cluster

$ juju status octavia --relations

...

Relation provider Requirer Interface Type Message

octavia:cluster octavia:cluster openstack-ha peer

So after hooks/cluster-relation-departed runs ('juju run --unit octavia/leader hooks/cluster-relation-departed'), what should actually run is the following method in https://github.com/openstack/charm-interface-openstack-ha/blob/master/peers.py:

@hook('{peers:openstack-ha}-relation-{broken,departed}')

def departed_or_broken(self):

conv = self.conversation()

conv.remove_state('{relation_name}.connected')

if not self.data_complete(conv):

conv.remove_state('{relation_name}.available')

So it should be like this:

@reactive.when_not('cluster.available')

def cluster_departed():

ch_core.hookenv.log('xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx', level=hookenv.DEBUG)

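However, this condition may hold both on remove-unit and when a unit is first created; the "if ch_core.hookenv.local_unit() == departing_unit" check in the code below ensures that nothing happens at initial creation.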

@reactive.when_not('cluster.connected')

def cluster_departed():

    departing_unit = ch_core.hookenv.departing_unit()

    local_unit = ch_core.hookenv.local_unit()

    ch_core.hookenv.log('departing_unit:{} local_unit:{}'.format(

        departing_unit, local_unit), level=ch_core.hookenv.DEBUG)

    if ch_core.hookenv.local_unit() == departing_unit:

        units = departing_unit

        db = ch_core.unitdata.kv()

        existing_units = db.get(DEPARTED_UNITS_KEY, '')

        if existing_units:

            units = units + ',' + existing_units

        db.set(DEPARTED_UNITS_KEY, units)

        ch_core.hookenv.log('setting KV departed-units: {}'.format(

            units), level=ch_core.hookenv.DEBUG)

        reactive.set_flag('unit.deleted')
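The bookkeeping inside the handler (prepend the departing unit to a comma-separated list, but only when the local unit is the one departing) can be checked in isolation. Below is a minimal sketch that uses a plain dict in place of unitdata.kv(); the key value 'departed-units' is an assumption taken from the log message, and record_departed_unit is a hypothetical helper name.

```python
DEPARTED_UNITS_KEY = "departed-units"  # assumed value, taken from the log text


def record_departed_unit(db, departing_unit, local_unit):
    """Prepend departing_unit to the stored list when it is the local unit.

    `db` is a plain dict standing in for the unit-local key/value store.
    Returns the new list string, or None when nothing was recorded.
    """
    if local_unit != departing_unit:
        return None
    units = departing_unit
    existing_units = db.get(DEPARTED_UNITS_KEY, "")
    if existing_units:
        units = units + "," + existing_units
    db[DEPARTED_UNITS_KEY] = units
    return units


if __name__ == "__main__":
    store = {}
    print(record_departed_unit(store, "octavia/9", "octavia/9"))
    print(record_departed_unit(store, "octavia/9", "octavia/1"))
    print(store)
```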

Both of the following commands produce the output.

juju run --unit octavia/9 -- 'charms.reactive -p clear_flag cluster.available; hooks/update-status'

juju remove-unit octavia/9

2021-04-28 08:52:02 INFO juju-log ha:37: departing_unit: octavia/9

2021-04-28 08:52:23 INFO juju-log neutron-api:44: departing_unit: None

juju run --unit octavia/leader -- charm-env python3.6 -c "import charms.leadership; from charmhelpers.core import host, hookenv, unitdata; print(unitdata.kv().get('departing_unit')); "

Another reason the log was not printed is the log level; changing it to INFO fixes that. Alternatively use:

juju model-config logging-config='unit=DEBUG'

Also, later testing showed that this worked only intermittently, so @reactive.when_not('cluster.available') needs to be changed to @reactive.when_not('cluster.connected')

@reactive.when_not('cluster.connected')

def cluster_departed():

ch_core.hookenv.log('xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx', level=hookenv.DEBUG)

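'juju remove-unit' takes a long time to finish, and once it has finished the unit can no longer be reached via ssh; however, you can go to the controller ( juju ssh -m controller 0 ) and search for that unit's logs there.

Why can upgrade-charm below be defined with @reactive.hook, while cluster-relation-departed can only be added via @reactive.when_not('cluster.connected')? Perhaps it is because no interface handles the upgrade-charm hook; at least that explanation lets me feel peace and love.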

hua@t440p:/bak/work/charms/octavia$ grep -r '@reactive.hook' src/reactive/octavia_handlers.py -A2

@reactive.hook('upgrade-charm')

def upgrade_charm():

reactive.remove_state('octavia.nrpe.configured')

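Also, removing a unit with 'juju remove-unit octavia/6' is very slow.

Even so, there is still a problem. For example: the leader unit is octavia/1 and the departing unit is octavia/9. The question is which unit should perform the two operations, deleting the hm port and updating the IP list; both need the departing unit name as a parameter, and that parameter can only be obtained on octavia/9.

- Data is passed between units through the relation_set() mechanism (for the reactive version of relation_set see the next subsection), not through unitdata.kv (which is local and cannot be shared across units)

- Events cannot be passed between units: after running cluster_departed, octavia/9 has no way to send an event telling update_controller_ip_port_list on octavia/1 to carry on.

So, for octavia/1 use @reactive.when_not('cluster.connected')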

@reactive.when_not('cluster.connected')

def cluster_departed():

departing_unit = ch_core.hookenv.departing_unit()

local_unit = ch_core.hookenv.local_unit()

ch_core.hookenv.log('departing_unit:{} local_unit:{}'.format(

departing_unit, local_unit), level=ch_core.hookenv.DEBUG)

if ch_core.hookenv.local_unit() == departing_unit:

...

For octavia/7 it should likewise be triggered by adding @reactive.when_not('cluster.connected') (because octavia/7 should be running -relation-changed hook and/or -broken and -departed )

But the order in which cluster_departed and update_controller_ip_port_list run cannot be guaranteed.

@reactive.when_not('is-update-status-hook')

@reactive.when('leadership.is_leader')

@reactive.when('identity-service.available')

@reactive.when('neutron-api.available')

# Neutron API calls will consistently fail as long as AMQP is unavailable

@reactive.when('amqp.available')

@reactive.when_not('cluster.connected')

def update_controller_ip_port_list():

To find out whether these hooks actually run, let's do an experiment; this time octavia/leader=octavia/3 and the departing unit is octavia/4:

juju debug-hooks

juju debug-hooks octavia/leader cluster-relation-joined cluster-relation-changed cluster-relation-broken cluster-relation-departed

juju debug-hooks octavia/4 cluster-relation-joined cluster-relation-changed cluster-relation-broken cluster-relation-departed

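Once a hook is triggered you land in a tmux window, where you can debug with the commands below; then type exit to leave so that the debug tmux for the next hook can start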

root@juju-1dd8c4-octavia-12:/var/lib/juju/agents/unit-octavia-3/charm/hooks# set |grep JUJU

...

JUJU_CHARM_DIR=/var/lib/juju/agents/unit-octavia-3/charm

JUJU_DEBUG=/tmp/juju-debug-hooks-058791821

JUJU_DEPARTING_UNIT=octavia/4

JUJU_DISPATCH_PATH=hooks/cluster-relation-departed

JUJU_HOOK_NAME=cluster-relation-departed

JUJU_RELATION=cluster

JUJU_RELATION_ID=cluster:8

JUJU_REMOTE_APP=octavia

JUJU_REMOTE_UNIT=octavia/4

JUJU_UNIT_NAME=octavia/3

root@juju-1dd8c4-octavia-13:/var/lib/juju/agents/unit-octavia-4/charm/hooks# set |grep JUJU

...

JUJU_CHARM_DIR=/var/lib/juju/agents/unit-octavia-4/charm

JUJU_DEBUG=/tmp/juju-debug-hooks-642610779

JUJU_DEPARTING_UNIT=octavia/4

JUJU_DISPATCH_PATH=hooks/cluster-relation-departed

JUJU_HOOK_NAME=cluster-relation-departed

JUJU_RELATION=cluster

JUJU_RELATION_ID=cluster:8

JUJU_REMOTE_APP=octavia

JUJU_REMOTE_UNIT=octavia/0

JUJU_UNIT_NAME=octavia/4

In fact JUJU_DEPARTING_UNIT=octavia/4 is also available on octavia/leader
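The two environment dumps differ only in which unit is local, so a handler can tell "I am leaving" from "a peer is leaving" by comparing JUJU_UNIT_NAME with JUJU_DEPARTING_UNIT. The following is a minimal sketch of that comparison using only the variables shown in the dumps; the helper name departing_info is hypothetical.

```python
import os


def departing_info(environ=None):
    """Summarise who is departing from the hook environment variables."""
    env = os.environ if environ is None else environ
    local_unit = env.get("JUJU_UNIT_NAME")
    departing_unit = env.get("JUJU_DEPARTING_UNIT")
    return {
        "local_unit": local_unit,
        "departing_unit": departing_unit,
        # True only on the unit that is itself being removed
        "is_departing": departing_unit is not None and local_unit == departing_unit,
    }


if __name__ == "__main__":
    leader_env = {"JUJU_UNIT_NAME": "octavia/3", "JUJU_DEPARTING_UNIT": "octavia/4"}
    leaving_env = {"JUJU_UNIT_NAME": "octavia/4", "JUJU_DEPARTING_UNIT": "octavia/4"}
    print(departing_info(leader_env))
    print(departing_info(leaving_env))
```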

The reactive version of relation_set

For example, $octavia/src/reactive/octavia_handlers.py contains a charm.use_defaults call:

charm.use_defaults(

...

'cluster.available',

)

It calls ./charms_openstack/charm/defaults.py#use_defaults in charms.openstack (https://github.com/openstack/charms.openstack.git)

It is also equivalent to (https://bugs.launchpad.net/charm-gnocchi/+bug/1858132):

@reactive.when('cluster.available')

def update_peers(cluster):

"""Inform peers about this unit"""

with charm.provide_charm_instance() as charm_instance:

charm_instance.update_peers(cluster)

The charm_instance obtained here leads to update_peers in charms.openstack, ./charms_openstack/charm/classes.py#update_peers

def update_peers(self, cluster):

...

cluster.set_address(os_ip.ADDRESS_MAP[addr_type]['binding'], laddr)

Then charm-interface-openstack-ha#peers.py publishes this unit's key/value to all the other units.

def set_address(self, address_type, address):

'''Advertise the address of this unit of a particular type

:param address_type: str Type of address being advertised, e.g.

internal/public/admin etc

:param address: str IP of this unit in 'address_type' network

@returns None'''

for conv in self.conversations():

conv.set_remote(

key='{}-address'.format(address_type),

value=address)

After a unit is removed, the remote data corresponding to that unit is deleted as well.
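What set_address does can be seen with a stand-in conversation object: it writes one '<address_type>-address' key per conversation. This is a minimal sketch, not the interface code itself; RecordingConversation is a hypothetical fake that only records what set_remote receives.

```python
class RecordingConversation:
    """Hypothetical stand-in that records the keys published to a peer."""

    def __init__(self, scope):
        self.scope = scope
        self.remote = {}

    def set_remote(self, key, value):
        self.remote[key] = value


def set_address(conversations, address_type, address):
    """Advertise `address` under '<address_type>-address' on every conversation."""
    for conv in conversations:
        conv.set_remote(key="{}-address".format(address_type), value=address)


if __name__ == "__main__":
    convs = [RecordingConversation("octavia/1"), RecordingConversation("octavia/2")]
    set_address(convs, "internal", "10.0.0.5")
    for conv in convs:
        print(conv.scope, conv.remote)
```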

For the newer way of handling this see: https://bugs.launchpad.net/charm-mysql-innodb-cluster/+bug/1874479

Another example, on how a non-reactive charm sets relation data.

For instance, heat (heat/0) uses relation_set with the relation_id (amqp) to set data for every remote unit (eg: rabbitmq-server/0).

+ relation_set(relation_id=relation_id, ttlname='heat_expiry',

+ ttlreg='heat-engine-listener|engine_worker', ttl=config('ttl'))

In rabbitmq-server/0 the relation data of the remote_unit (heat/0) can be read using the relation_id (amqp).

current = relation_get(rid=relation_id, unit=remote_unit)

ttl = current['ttl']

20240430 - Another way to get the running units

Modelled on https://github.com/juju-solutions/interface-etcd/blob/master/peers.py, add the following method to /var/lib/juju/agents/unit-octavia-3/charm/hooks/relations/openstack-ha/peers.py ( https://github.com/openstack/charm-interface-openstack-ha/blob/master/peers.py ):

def get_peers(self):

    '''Return a list of names for the peers participating in this

    conversation scope. '''

    peers = []

    # Iterate over all the conversations of this type.

    for conversation in self.conversations():

        peers.append(conversation.scope)

    return peers

The running units can then be obtained as follows.

juju run --unit octavia/3 --timeout 35m0s -- charm-env python3.6 /tmp/test.py

# cat /tmp/test.py

import charms.reactive as reactive

import charm.openstack.api_crud as api_crud

import rpdb;rpdb.set_trace()

cluster_peer = reactive.endpoint_from_flag('cluster.connected')

peers = cluster_peer.get_peers()

print(peers)

# /var/lib/juju/agents/unit-octavia-0/.venv/bin/pip3.6 install rpdb

# nc 127.0.0.1 4444
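The get_peers method added above can be exercised without a deployed unit by faking the conversations it iterates over. This is a minimal sketch, assuming only that each conversation exposes a scope attribute as in the method body; FakeConversation and FakePeers are hypothetical test doubles, not classes from charms.reactive.

```python
class FakeConversation:
    """Hypothetical stand-in exposing only the `scope` attribute."""

    def __init__(self, scope):
        self.scope = scope


class FakePeers:
    """Hypothetical peer endpoint holding a fixed set of conversations."""

    def __init__(self, scopes):
        self._conversations = [FakeConversation(scope) for scope in scopes]

    def conversations(self):
        return self._conversations

    def get_peers(self):
        # Same logic as the method added to peers.py: collect every scope.
        return [conversation.scope for conversation in self.conversations()]


if __name__ == "__main__":
    print(FakePeers(["octavia/0", "octavia/4"]).get_peers())
```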

The API here actually comes in two parts (https://charmsreactive.readthedocs.io/en/latest/charms.reactive.relations.html):

- the new charms.reactive.endpoints - see the section "20201114 update - error during the k8s upgrade" above.

- the old charms.reactive.relations.RelationBase

20210520 update - where common_name is set

vault and ovn-central are connected through the certificates relation.

juju run --unit vault/0 "relation-ids certificates"

juju run --unit vault/0 "relation-list -r certificates:44"

$ juju run --unit vault/0 "relation-get -r certificates:44 - ovn-central/0"

cert_requests: '{"juju-60494b-0-lxd-5": {"sans": ["10.230.59.34"]}}'

certificate_name: 73b1ab84-a6bd-4271-8047-c2bcc1e084e6

common_name: juju-60494b-0-lxd-5.segmaas.1ss

egress-subnets: 10.230.59.34/32

ingress-address: 10.230.59.34

private-address: 10.230.59.34

sans: '["10.230.59.34"]'

unit_name: ovn-central_0
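The relation data above carries the request in two shapes: the older single-request keys (common_name plus a JSON-encoded sans list) and the JSON-encoded cert_requests map. The following is a minimal sketch of decoding both into one dict using only the standard library; decode_requests is a hypothetical helper, not a function from the interface layer.

```python
import json

# Values copied from the relation-get output above.
relation_data = {
    "cert_requests": '{"juju-60494b-0-lxd-5": {"sans": ["10.230.59.34"]}}',
    "common_name": "juju-60494b-0-lxd-5.segmaas.1ss",
    "sans": '["10.230.59.34"]',
}


def decode_requests(data):
    """Map each requested common name to its list of SANs."""
    requests = {}
    legacy_cn = data.get("common_name")
    if legacy_cn:
        # Older single server cert request: one CN with a JSON list of SANs.
        requests[legacy_cn] = json.loads(data.get("sans") or "[]")
    for cn, req in json.loads(data.get("cert_requests") or "{}").items():
        requests[cn] = req.get("sans", [])
    return requests


if __name__ == "__main__":
    for cn, sans in decode_requests(relation_data).items():
        print(cn, sans)
```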

vault uses interface-tls-certificates as the provider, and ovn-central uses interface-tls-certificates as the requirer

all_joined_units comes from /var/lib/juju/agents/unit-ovn-central-0/.venv/lib/python3.6/site-packages/charms/reactive/endpoints.py and should refer to the ovn-central units above, so according to [1] every ovn-central unit on the certificates relation requests a server cert based on its common_name

@property

def all_requests(self):

requests = []

for unit in self.all_joined_units:

# handle older single server cert request

if unit.received_raw['common_name']:

requests.append(CertificateRequest(

unit,

'server',

unit.received_raw['certificate_name'],

unit.received_raw['common_name'],

unit.received['sans'],

))

vault's reissue_certificates action should be able to trigger the all_requests above

def reissue_certificates(*args):

set_flag('certificates.reissue.requested')

set_flag('certificates.reissue.global.requested')

Where is common_name set? It seems to be here

vim /var/lib/juju/agents/unit-ovn-central-0/charm/reactive/layer_openstack.py

@reactive.when('certificates.available',

'charms.openstack.do-default-certificates.available')

def default_request_certificates():

"""When the certificates interface is available, this default handler

requests TLS certificates.

"""

tls = reactive.endpoint_from_flag('certificates.available')

with charm.provide_charm_instance() as instance:

for cn, req in instance.get_certificate_requests().items():

tls.add_request_server_cert(cn, req['sans'])

vim /var/lib/juju/agents/unit-ovn-central-0/.venv/lib/python3.6/site-packages/charms_openstack/charm/classes.py

def get_certificate_requests(self, bindings=None):

"""Return a dict of certificate requests

:param bindings: List of binding string names for cert requests

:type bindings: List[str]

"""

return cert_utils.get_certificate_request(

json_encode=False, bindings=bindings).get('cert_requests', {})

vim /var/lib/juju/agents/unit-ovn-central-0/.venv/lib/python3.6/site-packages/charmhelpers/contrib/openstack/cert_utils.py

def add_hostname_cn(self):

"""Add a request for the hostname of the machine"""

ip = local_address(unit_get_fallback='private-address')

addresses = [ip]

# If a vip is being used without os-hostname config or

# network spaces then we need to ensure the local units

# cert has the approriate vip in the SAN list

vip = get_vip_in_network(resolve_network_cidr(ip))

if vip:

addresses.append(vip)

self.hostname_entry = {

'cn': get_hostname(ip),

'addresses': addresses}

[1] https://github.com/juju-solutions/interface-tls-certificates/blob/master/provides.py#L157-L164

20210813 - upgrade controller

juju create-backup -m foundations-maas:controller --filename controller-backup.tar.gz

juju upgrade-controller --agent-version 2.9.10 --dry-run

juju upgrade-controller --agent-version 2.9.10

20220601 - charmcraft + charmhub

After the migration from the charm store to charmhub (eg: https://review.opendev.org/c/openstack/charm-neutron-openvswitch/+/842516 ; other patches should be rebased on 842516, and if 842516 has not yet been merged on lp, rebase onto 842516 directly in the lp UI, eg: https://review.opendev.org/c/openstack/charm-neutron-openvswitch/+/838226), reactive charms that used to be built with 'charm build' now have to be built with 'charmcraft build' inside an lxd container.

# https://github.com/canonical/charmcraft

sudo snap install charmcraft --classic

sudo snap install lxd

sudo adduser $USER lxd

newgrp lxd

lxd init --auto

mkdir my-new-charm; cd my-new-charm

charmcraft init

$ tree .

├── actions.yaml

├── charmcraft.yaml

├── config.yaml

├── CONTRIBUTING.md

├── LICENSE

├── metadata.yaml

├── README.md

├── requirements-dev.txt

├── requirements.txt

├── run_tests

├── src

│ └── charm.py

└── tests

├── __init__.py

└── test_charm.py

charmcraft build

unzip -l test-charm.charm

charmcraft login

charmcraft upload

charmcraft release

git clone https://github.com/openstack/charm-neutron-openvswitch.git neutron-openvswitch

cd neutron-openvswitch

# use charmhub

git checkout -b xena origin/stable/xena

20220725更新

The k8s charm did not work; it turned out that after the move from the charm store to charmhub, kubernetes-master was renamed to kubernetes-control-plane

juju refresh --switch ch:kubernetes-control-plane kubernetes-master

juju refresh --switch ch:kubernetes-worker kubernetes-worker

20230309 - upgrade vault charm from 1.5 to 1.8

#need to upgrade controller and model because charmhub is supported since juju 2.9.21

juju upgrade-controller

juju upgrade-model

#stop vault to avoid an error caused by snap refresh while vault is running

juju run -u vault/0 -- sudo systemctl stop vault

juju config vault channel=1.8/stable

# charm upgrade

juju upgrade-charm --switch ch:vault --channel 1.8/stable vault

juju run -u vault/0 -- sudo systemctl start vault

# unseal vault

export VAULT_ADDR=""

export VAULT_TOKEN=

vault operator unseal UNSEAL_KEY_1

vault operator unseal UNSEAL_KEY_2

vault operator unseal UNSEAL_KEY_3

20230612 - charm-helpers

#https://docs.openstack.org/charm-guide/rocky/making-a-change.html

sudo apt install git bzr tox libapt-pkg-dev python3-dev build-essential -y

git clone https://github.com/juju/charm-helpers/

cd charm-helpers

git checkout -b wsgisocketrotation

cat tox.ini

tox -r -epy310

tox -epy310

source .tox/py310/bin/activate

python -m subunit.run tests.contrib.openstack.test_os_contexts.ContextTests.test_wsgi_worker_config_context_user_and_group |subunit2pyunit

git commit -m 'xxxx'

#charm-helpers is not on opendev, it's on github so use pr

#fork https://github.com/juju/charm-helpers

git remote add zhhuabj git@github.com:zhhuabj/charm-helpers.git

git remote set-url --push origin no_push

git remote -v

git branch -a

#rebase

#git fetch origin

#git checkout master

#git rebase origin/master

#git checkout wsgisocketrotation

#git rebase origin/master wsgisocketrotation

#use 'git merge' instead of 'git rebase' to preserve the history, and do it only when there is a conflict

git checkout master

git fetch origin

git merge origin/master

git push origin master

git checkout wsgisocketrotation

git fetch origin

git merge origin/master

git commit --amend

git push zhhuabj wsgisocketrotation

#submit a PR in https://github.com/zhhuabj/charm-helpers/tree/wsgisocketrotation

https://github.com/juju/charm-helpers/pull/801

git clone https://github.com/openstack/charm-nova-cloud-controller.git

cd charm-nova-cloud-controller

git checkout -b wsgisocketrotation

make sync

vim charm-helpers-hooks.yaml

#repo: https://github.com/juju/charm-helpers

repo: file:///bak/work/charms/charm-helpers

#NOTE: also need to switch branch to origin/stable/ussuri for charm-helpers if switching ncc to origin/stable/ussuri

python ../charm-helpers/tools/charm_helpers_sync/charm_helpers_sync.py -c ./charm-helpers-hooks.yaml

tox

#test the charm nova-cloud-controller

git clone https://github.com/openstack/charm-nova-cloud-controller.git && cd charm-nova-cloud-controller

#to avoid error: series "focal" not supported by charm

git checkout -b ussuri origin/stable/ussuri

patch -p1 < ../charm-helpers/0001-Allow-users-to-disable-wsgi-socket-rotation.patch

patch -p1 < ./own.diff

juju upgrade-charm nova-cloud-controller --path $PWD

20230628 - debug juju

To solve the problem encountered in this blog post - https://blog.csdn.net/quqi99/article/details/129286737

cd /bak/golang/src/github.com/juju/juju

git checkout -b 2.9.43 upstream/increment-to-2.9.43

vim diff

diff --git a/container/lxd/image.go b/container/lxd/image.go

index ae3c5dea5a..bd1fbbb0d3 100644

--- a/container/lxd/image.go

+++ b/container/lxd/image.go

@@ -90,6 +90,10 @@ func (s *Server) FindImage(

if res, _, err := source.GetImageAliasType(string(virtType), alias); err == nil && res != nil && res.Target != "" {

target = res.Target

break

+ } else if err != nil {

+ logger.Debugf("GetImageAliasType returned an error for %s: remote.Host: %s, error: %s", alias, remote.Host, err.Error())

+ } else if res != nil {

+ logger.Debugf("No target returned for alias %s: remote.Host: %s res: %v", alias, remote.Host, res)

}

}

if target != "" {

patch -p1 < diff

sudo snap install go --classic

sudo apt install make bzip2 -y

make go-build

#copy jujud to machine 0

cp /bak/golang/src/github.com/juju/juju/_build/linux_amd64/bin/jujud ~/ && multipass transfer ~/jujud test:. #on minipc

juju scp -m m _build/linux_amd64/bin/jujud 0:~/ #on multipass VM

juju scp -m m ~/jujud 0:~/

juju ssh -m m 0

sudo systemctl stop jujud-machine-0.service

#modify /etc/systemd/system/jujud-machine-0-exec-start.sh to change /var/lib/juju/tools/machine-0/jujud to /home/ubuntu/jujud

sudo systemctl start jujud-machine-0.service

#trigger it

juju add-machine --series jammy lxd:0

juju model-config -m m logging-config="<root>=debug" && sudo tail -f /var/log/juju/machine-0.log

2023-06-28 10:37:23 DEBUG juju.container.lxd image.go:99 Found image remotely - "lp2008993.com:443" "ubuntu-22.04-server-cloudimg-amd64-lxd.tar.xz" "dcfe2a671f1d491de76c58dd31186bfa5aa5fcb5fe82a559f0fc551add052771"

2023-06-28 10:37:23 DEBUG juju.container.lxd image.go:132 Copying image from remote server

2023-06-28 10:37:24 WARNING juju.worker.lxdprovisioner provisioner_task.go:1400 machine 0/lxd/10 failed to start: acquiring LXD image: Failed remote image download: Get "https://lp2008993.com:443/server/releases/jammy/release-20230616/ubuntu-22.04-server-cloudimg-amd64-lxd.tar.xz": x509: certificate signed by unknown authority

2023-06-28 10:37:24 WARNING juju.worker.lxdprovisioner provisioner_task.go:1438 failed to start machine 0/lxd/10 (acquiring LXD image: Failed remote image download: Get "https://lp2008993.com:443/server/releases/jammy/release-20230616/ubuntu-22.04-server-cloudimg-amd64-lxd.tar.xz": x509: certificate signed by unknown authority), retrying in 10s (9 more attempts)

20230704 - Use dlv to debug juju

Debugging juju with the 'dlv connect' approach below always reports this error: 'setting up container dependencies on host machine: failed to acquire initialization lock: unable to open /tmp/juju-machine-lock: permission denied'

sudo snap install juju --classic

juju bootstrap localhost lxd

juju add-model m

juju model-config -m m logging-config="<root>=DEBUG"

juju model-config container-image-metadata-url=https://node1.lan/lxdkvm

juju model-config image-metadata-url=https://node1.lan/lxdkvm

juju model-config ssl-hostname-verification=false

cat << EOF |tee test.yaml

cloudinit-userdata: |

postruncmd:

- bash -c 'iptables -A OUTPUT -d 185.125.190.37 -j DROP'

- bash -c 'iptables -A OUTPUT -d 185.125.190.40 -j DROP'

EOF

juju model-config ./test.yaml

juju add-machine --series jammy --constraints "mem=8G"

juju ssh -m controller 0 -- sudo iptables -A OUTPUT -d 185.125.190.37 -j DROP

juju ssh -m controller 0 -- sudo iptables -A OUTPUT -d 185.125.190.40 -j DROP

juju scp -m m ~/ca/ca.crt 0:~/

juju ssh -m m 0 -- sudo cp /home/ubuntu/ca.crt /usr/local/share/ca-certificates/ca.crt

juju ssh -m m 0 -- sudo update-ca-certificates --fresh

juju ssh -m m 0 -- curl https://node1.lan/lxdkvm/streams/v1/index.json |tail -n3

juju ssh -m m 0 -- sudo systemctl restart jujud-machine-0.service

date; juju add-machine --series jammy lxd:0

sudo snap install go --classic

go install github.com/go-delve/delve/cmd/dlv@latest

sudo mkdir -p /home/jenkins/workspace/build-juju/build/src/github.com/juju

sudo chown -R ubuntu /home/jenkins/workspace/build-juju/build/src/github.com/juju

scp -i ~/.local/share/juju/ssh/juju_id_rsa -r /bak/golang/src/github.com/juju/juju ubuntu@10.230.16.13:/home/jenkins/workspace/build-juju/build/src/github.com/juju/

cd /home/jenkins/workspace/build-juju/build/src/github.com/juju/juju

git checkout 2.9.43

export GOPATH=/home/ubuntu/go

sudo ~/go/bin/dlv attach $(pidof jujud)

(dlv) b FindImage

Breakpoint 1 set at 0x2223940 for github.com/juju/juju/container/lxd.(*Server).FindImage() ./container/lxd/image.go:38

(dlv) c

juju add-machine --series jammy lxd:0

juju debug-log -m m --replay | grep "Found image"

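Later I found that when jujud is started by systemd this lock is owned by root, whereas with jujud started through dlv as above the lock is owned by ubuntu (even when started as root it ends up owned by ubuntu; if it is already owned by ubuntu, run rm -rf /tmp/juju-machine-lock first). Once the lock is owned by ubuntu, the permission denied error appears and the breakpoint is never reached.

Since jujud can only be started by systemd, use the 'dlv attach' below in place of the 'dlv connect' above,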

#on machine 0

sudo systemctl stop jujud-machine-0.service

sudo rm -rf /tmp/juju-machine-lock #avoid permission denied

sudo ~/go/bin/dlv --headless -l 0.0.0.0:1234 exec /var/lib/juju/tools/machine-0/jujud -- machine --data-dir /var/lib/juju --machine-id 0 --debug

#on minipc

sudo mkdir -p /home/jenkins/workspace/build-juju/build

sudo chown -R hua /home/jenkins/workspace/build-juju/build

ln -sn /bak/golang/src /home/jenkins/workspace/build-juju/build/src

cd /home/jenkins/workspace/build-juju/build/src/github.com/juju/juju/

git checkout -b 2.9.43 juju-2.9.43

sudo /bak/golang/bin/dlv connect 10.230.16.13:1234

ls -al /tmp/juju-machine-lock

#trigger it

juju add-machine --series jammy lxd:0

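Note: rebuilding jujud with 'go build -gcflags "all=-N -l" -o jujud ./cmd/jujud' is not required; copying the juju source in is enough (the source version must match the juju controller version). With the juju source copied in but jujud not rebuilt, only some dependencies outside juju lack symbol tables, which does not affect debugging

If jujud is not rebuilt, the source has to live under /bak/golang/src /home/jenkins/workspace/build-juju/build/src (the jujud shipped with juju was probably built automatically under jenkins)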

20230720 - Query the latest version in charmhub

For example, with the openstack antelope release you can use ovn 23.03 - https://docs.openstack.org/charm-guide/latest/project/charm-delivery.html

The command 'juju info --series jammy ovn-central' shows that 23.03/edge can be used; in our testbed you can modify common/ch_channel_map/jammy-antelope

20231012 - charm-helpers

charm-helpers code has to land in master first and then be backported to branches such as 2023.01/antelope and zed (note: openstack changed its naming scheme starting with 2023.01, see: https://governance.openstack.org/tc/reference/release-naming.html). You can also check this page to see which releases need a backport (https://ubuntu.com/openstack/docs/supported-versions).

Then do a sync in each project (eg cinder) for master, 2023.01/antelope, zed and so on

cd ../cinder

git checkout master && git pull

git checkout -b ussuri_rotation origin/stable/ussuri

../charm-helpers/tools/charm_helpers_sync/charm_helpers_sync.py -c charm-helpers-hooks.yaml

Example - https://bugs.launchpad.net/charm-nova-cloud-controller/+bug/2021550

20231012 - prometheus-openstack-exporter

Take the prometheus-openstack-exporter charm as an example

If I lack permission on its PR, even a rebase will not trigger CI; someone else has to trigger it (https://code.launchpad.net/~zhhuabj/charm-prometheus-openstack-exporter/+git/charm-prometheus-openstack-exporter/+merge/448328)

In addition, prometheus-openstack-exporter has no maintenance branches, so once code lands in master there is no backport to 23.04: 23.04 is a tag rather than a branch, and a PR cannot be made against a tag. The fix can therefore only be used from 23.10 (usually released in late October or early November) - see: https://bugs.launchpad.net/charm-prometheus-openstack-exporter/+bug/2029445

However, 'juju info --series bionic prometheus-openstack-exporter' shows that bionic is at revision=28, which is tag 23.04, so it cannot be backported to 23.04.

Also, 23.10 dropped support for bionic (https://git.launchpad.net/charm-prometheus-openstack-exporter/commit/?id=19d016f7c125d65a244e19ee80eb1cd7347937f7), because juju 3.1 only supports >=py37. It might be technically achievable (pip<xxx; python_version <= '3.6'), but that is a separate issue (ubuntu pro only covers openstack, not juju/maas)

20231114 - mongodb

# Get the model uuid

juju show-model anbox-subcluster-2 | grep model-uuid

# ssh into the juju controller unit

juju ssh -m controller 0

# get credentials for the db

conf=/var/lib/juju/agents/machine-*/agent.conf

user=$(sudo awk '/tag/ {print $2}' $conf)

password=$(sudo awk '/statepassword/ {print $2}' $conf)

# access mongo. you might need to use `juju-db.mongo` instead of just `mongo` as

# the command

juju-db.mongo 127.0.0.1:37017/juju --authenticationDatabase admin --ssl --sslAllowInvalidCertificates --username "$user" --password "$password"
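The two awk one-liners above pull the second field from the lines containing "tag" and "statepassword" in agent.conf. As a rough illustration of the same extraction, here is a minimal Python sketch; the function name read_agent_credentials and the sample file content are made up for this example, and it assumes both keys appear as top-level "key: value" lines.

```python
import re


def read_agent_credentials(conf_text):
    """Return (tag, statepassword) parsed from the text of an agent.conf file."""
    creds = {}
    for line in conf_text.splitlines():
        # Match only lines that START with the key, unlike awk's /tag/,
        # which matches any line containing the substring.
        match = re.match(r"^(tag|statepassword):\s*(\S+)", line)
        if match:
            creds[match.group(1)] = match.group(2)
    return creds["tag"], creds["statepassword"]


# Hypothetical sample content, not taken from a real controller.
sample = """\
tag: machine-0
datadir: /var/lib/juju
statepassword: s3cret
"""

user, password = read_agent_credentials(sample)
print(user, password)
```

Anchoring the match at the start of the line is a deliberate difference from the awk version: it avoids picking up another line that merely contains the same substring.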

# in a HA environment, you will need to execute the commands on the master DB

juju-db.mongo --sslAllowInvalidHostnames --sslAllowInvalidCertificates localhost:37017/admin --ssl -u "$(ls /var/lib/juju/agents/ | grep machine)" \

-p "$(sudo grep statepassword /var/lib/juju/agents/$(ls /var/lib/juju/agents/ | grep machine)/agent.conf|awk '{print $2}')" \

--authenticationDatabase admin --eval "rs.status()"|grep -P '(name|stateStr)'
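The grep on name and stateStr above is only there to spot which replica-set member is the primary. If the rs.status() result is available as a parsed document, the same lookup might be sketched in Python as below; find_primary and the sample addresses are hypothetical and only the members, name, and stateStr fields are used.

```python
def find_primary(status):
    """Return the name of the member whose stateStr is PRIMARY, or None."""
    for member in status.get("members", []):
        if member.get("stateStr") == "PRIMARY":
            return member.get("name")
    return None


# Hypothetical rs.status() excerpt for a three-node HA controller.
sample_status = {
    "members": [
        {"name": "10.0.0.1:37017", "stateStr": "SECONDARY"},
        {"name": "10.0.0.2:37017", "stateStr": "PRIMARY"},
        {"name": "10.0.0.3:37017", "stateStr": "SECONDARY"},
    ]
}

print(find_primary(sample_status))
```

The write commands that follow (such as deleteMany) would then be run against the member this returns.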

# verify the machines and the ip addresses

db.ip.addresses.find({"model-uuid": "<UUID from before>", "value": "192.168.100.1"})

db.ip.addresses.deleteMany({"model-uuid": "<UUID from before>", "value": "192.168.100.1"})

20231117 - compile the old charm (k8s 1.22 + python3.2)

After doing some hacks, I successfully compiled a local 1.22 charm using the following steps.

sudo snap install charm --classic

mkdir -p /home/ubuntu/charms

mkdir -p ~/charms/{layers,interfaces}

export JUJU_REPOSITORY=/home/ubuntu/charms

export CHARM_INTERFACES_DIR=$JUJU_REPOSITORY/interfaces

export CHARM_LAYERS_DIR=$JUJU_REPOSITORY/layers

export CHARM_BUILD_DIR=$JUJU_REPOSITORY/layers/builds

cd $CHARM_LAYERS_DIR

git clone https://github.com/charmed-kubernetes/charm-kubernetes-worker.git kubernetes-worker

cd kubernetes-worker && git checkout -b 1.22+ck2 1.22+ck2

sudo apt install python3-virtualenv tox -y

cd ../.. && charm build --debug layers/kubernetes-worker/

#cd ${JUJU_REPOSITORY}/layers/builds/kubernetes-worker && tox -e func

cd /home/ubuntu/charms/layers/builds/kubernetes-worker

zip -rq ../kubernetes-worker.charm .

First we will hit the following error.

python_version < "3.8"\' don\'t match your environment\nIgnoring Jinja2: markers \'python_version >= "3.0" and python_version <= "3.4"\'

So it seems we need to build with a Python between 3.0 and 3.4, but ppa:deadsnakes/ppa doesn't provide these versions either.
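The marker in the message above is a plain version range check. A small Python sketch of how such a range can be evaluated is shown below; in_marker_range and version_tuple are illustrative helpers, not pip's own code, and the default bounds are simply the ones quoted in the message.

```python
def version_tuple(text):
    """Turn '3.10' into (3, 10) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def in_marker_range(python_version, low="3.0", high="3.4"):
    """Check python_version >= low and python_version <= high."""
    version = version_tuple(python_version)
    return version_tuple(low) <= version <= version_tuple(high)


for candidate in ("3.2", "3.5", "3.8", "3.10"):
    print(candidate, in_marker_range(candidate))
```

Comparing tuples rather than raw strings matters here: as strings, "3.10" sorts before "3.4", which would wrongly place Python 3.10 inside the range.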

Then I tried to compile it on xenial

juju add-machine --series xenial -n

but xenial is using python3.5, so I hit this error.

DEPRECATION: Python 3.5 reached the end of its life on September 13th, 2020. Please upgrade your Python as Python 3.5 is no longer maintained. pip 21.0 will drop support for Python 3.5 in January 2021. pip 21.0 will remove support for this functionality.

Finally, I compiled python3.2 from source. After that, I successfully built the local 1.22 charm.

sudo apt-get install build-essential zlib1g-dev libncurses5-dev libgdbm-dev libnss3-dev libssl-dev libreadline-dev libffi-dev wget -y

wget https://www.python.org/ftp/python/3.2.6/Python-3.2.6.tgz

tar -xf Python-3.2.6.tgz

cd Python-3.2.6/

./configure --enable-optimizations

make -j$(nproc)

sudo make altinstall

python3.2 --version

alias python=python3.2

alias python3=python3.2

sudo apt install build-essential -y

sudo apt install python3-pip python3-dev python3-nose python3-mock -y

cd $CHARM_LAYERS_DIR/..

charm build --debug ./layers/kubernetes-worker/

cd /home/ubuntu/charms/layers/builds/kubernetes-worker

zip -rq ../kubernetes-worker.charm .

charm upgrade

see ‘k8s upgrade’ - https://blog.csdn.net/quqi99/article/details/134456281

mongodb

$ cat ./connectMongo.sh

#!/bin/bash

machine="${1:-0}"

model="${2:-controller}"

juju=$(command -v juju)

read -d '' -r cmds <<'EOF'

conf=/var/lib/juju/agents/machine-*/agent.conf

user=$(sudo awk '/tag/ {print $2}' $conf)

password=$(sudo awk '/statepassword/ {print $2}' $conf)

client=$(command -v mongo)

"$client" 127.0.0.1:37017/juju --authenticationDatabase admin --ssl --sslAllowInvalidCertificates --username "$user" --password "$password"

EOF

"$juju" ssh -m "$model" "$machine" "$cmds"

db.applications.find({"name":"prometheus-openstack-exporter"}).pretty()

db.applications.updateOne({"_id" : "aa0d67ac-2fde-465e-8c7b-55c302a45cf9:prometheus-openstack-exporter"},{ $set: {"charm-origin.platform.series":"focal"}})

db.applications.updateOne({"_id" : "aa0d67ac-2fde-465e-8c7b-55c302a45cf9:prometheus-openstack-exporter"},{ $set: {"series":"focal"}})
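In the two updates above, the _id looks like the model UUID and the application name joined by a colon. Assuming that pattern holds, a small Python sketch can generate the statement for another model or application; build_series_update and to_shell are made-up helper names, and merging both fields into one $set is a variation on the two separate calls above.

```python
import json


def build_series_update(model_uuid, application, series):
    """Build the filter and update documents for a series change."""
    # Assumption: _id is "<model-uuid>:<application-name>", as in the example above.
    filter_doc = {"_id": f"{model_uuid}:{application}"}
    update_doc = {
        "$set": {
            "series": series,
            "charm-origin.platform.series": series,
        }
    }
    return filter_doc, update_doc


def to_shell(filter_doc, update_doc):
    """Render the documents as a statement to paste into the mongo shell."""
    return "db.applications.updateOne({}, {})".format(
        json.dumps(filter_doc), json.dumps(update_doc)
    )


flt, upd = build_series_update(
    "aa0d67ac-2fde-465e-8c7b-55c302a45cf9", "prometheus-openstack-exporter", "focal"
)
print(to_shell(flt, upd))
```

Running the find() query first and comparing its _id with the generated one is a cheap check before applying any update.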

Reference

[1] https://osm.etsi.org/wikipub/index.php/Creating_your_VNF_Charm

[2] https://cloud.garr.it/charms/create-and-deploy-charms/

[3] https://discourse.juju.is/t/tutorial-charm-development-beginner-part-1/377

[4] https://discourse.juju.is/t/tutorial-charm-development-beginner-part-3/379
