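Author: Zhang Hua  Published: 2014-03-07

Copyright notice: this article may be freely reposted, provided the repost links back to the original source and includes the author information and this copyright notice.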

(http://blog.csdn.net/quqi99 )

Legacy Routing and Distributed Router in Neutron

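First, a quick review of how the l3-agent works. The l3-agent node creates the internal gateways, external gateways, routes and so on for all subnets. When the l3-agent periodically syncs a router, the subnets attached to that router are scheduled onto the corresponding l3-agent node, where their gateways are created (the relevant ports are found through the port's device_owner attribute, and each port carries its subnet). Neutron supports running multiple l3-agents on multiple nodes, and l3-agent scheduling works per router. So if we create many routers and attach each subnet to its own router, the gateway of each subnet can be scheduled to a different l3-agent, which spreads the traffic of different subnets across different l3-agent nodes.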
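The per-router scheduling described above can be pictured with a small sketch. It is only an illustration of "one router, one agent" placement using a least-loaded policy; the function name, router names and agent names are hypothetical, and this is not Neutron's scheduler code.

```python
def schedule_routers(routers, agents):
    """Place each router on the agent that currently hosts the fewest routers."""
    hosted = {agent: [] for agent in agents}
    for router in routers:
        # min() returns the first agent on ties, so placement is deterministic
        target = min(agents, key=lambda agent: len(hosted[agent]))
        hosted[target].append(router)
    return hosted


# Hypothetical names: four routers (one per subnet) spread over two l3-agents.
placement = schedule_routers(
    ["router-1", "router-2", "router-3", "router-4"],
    ["l3-agent-a", "l3-agent-b"],
)
print(placement)
```

With one subnet per router, the subnet gateways end up split evenly between the two agents.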

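However, the scheme above provides no HA. A VRRP HA blueprint therefore appeared, using VRRP + Keepalived + Conntrackd to remove the l3-agent single point of failure. VRRP uses multicast advertisements as its heartbeat: when the backup node stops receiving the heartbeats that the master sends periodically, it considers the master dead and takes over its work (the gateway IP is removed on the old master node and reconfigured on the new master node). See my other post: http://blog.csdn.net/quqi99/article/details/18799877

However, neither of the two approaches above keeps east-west traffic between subnets of the same tenant from detouring through the l3-agent, so legacy routing in Neutron is a performance bottleneck and scales out poorly.

Hence DVR. Its key design points are:

- East-west traffic no longer goes through the l3-agent.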

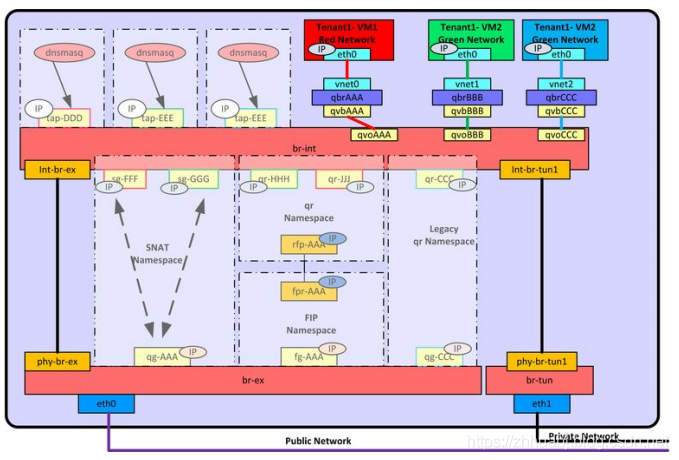

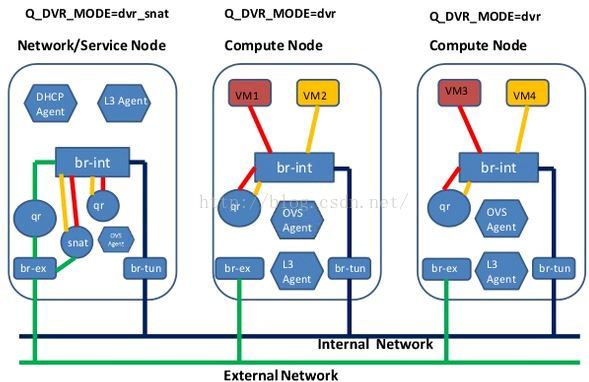

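- When the compute node has no FIP, both SNAT and DNAT go through the centralized l3-agent node. (In this case the compute node has the qrouter-xxx ns, whose qr interface is the subnet gateway, e.g. 192.168.21.1; policy routing there steers SNAT traffic to the sg interface of snat-xxx on the network node. The network node has both qrouter-xxx and snat-xxx: the qg interface is the external interface used for SNAT, the sg interface holds an IP on the subnet that differs from the gateway, e.g. 192.168.21.3, and the SNAT/DNAT rules live in the snat-xxx ns.)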

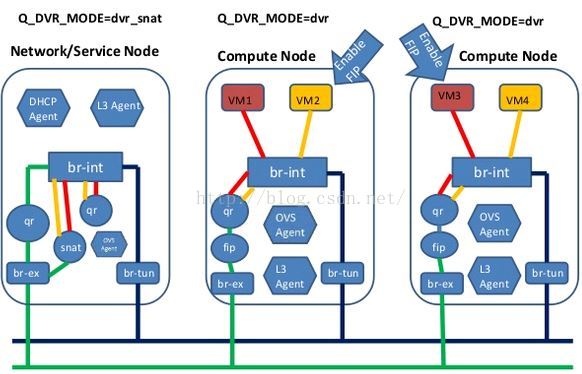

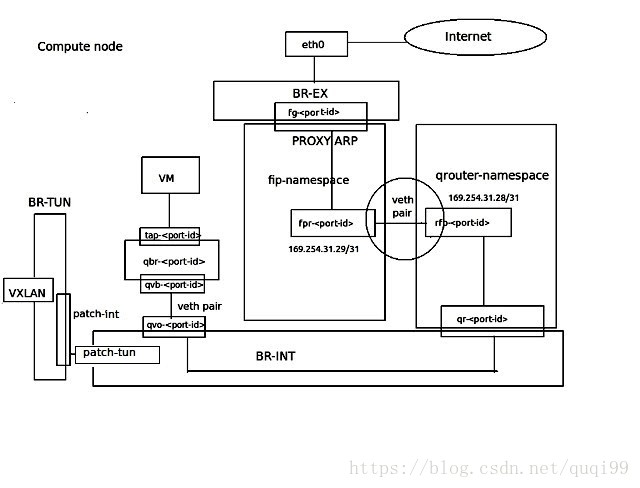

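- When the compute node has a FIP (and the VM has one too; a VM without a FIP falls under the previous case), both SNAT and DNAT go through the compute node. (Compared with the no-FIP case, the compute node gains a fip-xxx ns. fip-xxx and qrouter-xxx are connected through the peer devices fpr and rfp, and fip-xxx also has an fg interface with an external IP, plugged into br-int and ultimately connected to br-ex.) For SNAT, the SNAT rules are set in qrouter-xxx. For DNAT, fip-xxx first acts as an ARP proxy and answers that the FIP's MAC is the MAC of the fg interface, so external traffic reaches the fg interface; the routes in fip-xxx then steer traffic for the FIP to rfp in qrouter-xxx, where a DNAT rule forwards it to the VM. Because DNAT rules are used, it does not matter whether the FIP is actually configured on the rfp interface; in Ocata I saw that it was not.

- Impact of DVR on VPNaaS. The l3-agent has three modes: legacy, dvr and dvr_snat. dvr is used only on compute nodes, while legacy and dvr_snat are used only on network nodes. In legacy mode there is only the qrouter-xxx ns (SNAT and so on are set up there); only dvr_snat mode has the snat-xxx ns (SNAT is set up there). By its nature VPNaaS can only run centralized, so for a DVR router the VPNaaS service should be set up only in the centralized snat-xxx ns, and VPN traffic should be steered from the compute node to the network node. See: https://review.openstack.org/#/c/143203/

- Impact of DVR on FWaaS. In the legacy era Neutron had only qrouter-xxx, so both east-west and north-south firewalling lived in qrouter-xxx. With DVR, the compute node gains fip-xxx (no firewall rules are installed in this ns) and the network node gains snat-xxx for SNAT, so we make sure that qrouter-xxx on the compute node also has north-south firewalling and that DVR east-west traffic is not broken. See: https://review.openstack.org/#/c/113359/ and https://specs.openstack.org/openstack/neutron-specs/specs/juno/neutron-dvr-fwaas.html

- Since Newton, DVR can also be combined with VRRP.

- Impact of DVR on LBaaS. The LBaaS VIP port has no binding:host attribute (update 2019-03-22: before Ocata the VIP port in a DVR environment had binding:host, and it was gone by Queens; however, in Queens I found that a VM cannot ping the qg-xxx interface in snat-xxx on the network node but can ping the sg-xxx interface, even though the SNAT rules are all there, and I do not yet know why). Such unbound ports should also be centralized. Unbound ports include allowed_address_pair ports and VIP ports - https://bugs.launchpad.net/neutron/+bug/1583694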

https://www.openstack.org/assets/presentation-media/Neutron-Port-Binding-and-Impact-of-unbound-ports-on-DVR-Routers-with-FloatingIP.pdf

An unbound port (such as an allowed_address_pair port or a VIP port) can be associated with many VMs, so it has no binding:host attribute. Without binding:host no agent handles it, because the MQ only sends messages to the agent on the bound host. This causes many problems under DVR: the port cannot simply inherit a VM's port binding, because with several VMs it is unclear which one to inherit from; and once a FIP is associated with such an unbound port (every port of the LB backend VMs can carry an allowed_address_pair with the same FIP), GARP problems make the FIP stop working.

The fix is to centralize such unbound ports: only the centralized network node handles them (allowed_address_pair ports and VIP ports), so the snat-xxx namespace on the network node must also handle DNAT and the like for unbound ports. The code is:

server/db side - https://review.openstack.org/#/c/466434/ - when the FIP port has no binding:host, the internal DVR_SNAT_BOUND marker is set, and the dvr agent on the compute node then skips ports marked DVR_SNAT_BOUND (it is enough for the server side not to return such ports, so most of the code is on the server/db side)

agent side - https://review.openstack.org/#/c/437986/ - the l3-agent adds ports marked DVR_SNAT_BOUND in snat-xxx and sets up their SNAT/DNAT

This was followed by a bug - Unbound ports floating ip not working with address scopes in DVR HA - https://bugs.launchpad.net/neutron/+bug/1753434

These changes also brought a new mode for compute nodes called dvr_no_external (https://review.openstack.org/#/c/493321/) - this mode enables only East/West DVR routing functionality for a L3 agent that runs on a compute host, the North/South functionality such as DNAT and SNAT will be provided by the centralized network node that is running in 'dvr_snat' mode. This mode should be used when there is no external network connectivity on the compute host. - There is one more bug - https://bugs.launchpad.net/neutron/+bug/1869887

- However, the problem still occurs on Stein - https://bugs.launchpad.net/neutron/+bug/1794991/comments/59

Code:

server/db side: the DVR VIP is only an attribute of the router (const.FLOATINGIP_KEY), not an ordinary port with a binding host, so this unbound port is handled centrally:

_process_router_update(l3-agent) -> L3PluginApi.get_routers -> sync_routers(rpc) -> list_active_sync_routers_on_active_l3_agent -> _get_active_l3_agent_routers_sync_data(l3_dvrscheduler_db.py) -> get_ha_sync_data_for_host(l3_dvr_db.py) -> _get_dvr_sync_data -> _process_floating_ips_dvr(router[const.FLOATINGIP_KEY] = router_floatingips)

agent side: the l3-agent handles only ports tagged DVR_SNAT_BOUND (that is, unbound ports)

_process_router_update -> _process_router_if_compatible -> _process_added_router(ri.process) -> process_external -> configure_fip_addresses -> process_floating_ip_addresses -> add_floating_ip -> floating_ip_added_dist(only call add_centralized_floatingip when having DVR_SNAT_BOUND) -> add_centralized_floatingip -> process_floating_ip_nat_rules_for_centralized_floatingip(floating_ips = self.get_floating_ips())

The above covers the VIP; an unbound port of the allowed_addr_pair kind is centralized in the same way

_dvr_handle_unbound_allowed_addr_pair_add|_notify_l3_agent_new_port|_notify_l3_agent_port_update -> update_arp_entry_for_dvr_service_port (generate arp table for every pairs of this port)
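The centralization decision for unbound ports can be summarized in a few lines. This is a minimal sketch, not the Neutron code: the function name and the dictionary layout are hypothetical, and it assumes the port's host is exposed under the key binding:host_id (what this post calls binding:host).

```python
def floating_ip_placement(port, snat_host):
    """Decide which node should handle a floating IP attached to this port.

    A port bound to a host is served by that compute node; an unbound port
    (VIP or allowed_address_pair port) falls back to the centralized node
    that runs in dvr_snat mode.
    """
    host = port.get("binding:host_id")
    if host:
        return {"host": host, "centralized": False}
    return {"host": snat_host, "centralized": True}


# Hypothetical ports: one bound VM port and one unbound VIP port.
vm_port = {"id": "vm-port", "binding:host_id": "compute-1"}
vip_port = {"id": "vip-port", "binding:host_id": ""}

print(floating_ip_placement(vm_port, "network-1"))
print(floating_ip_placement(vip_port, "network-1"))
```

The second call is the case that the DVR_SNAT_BOUND marker covers: the FIP lands in snat-xxx on the network node instead of on a compute node.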

Quick test environment setup

juju bootstrap

bzr branch lp:openstack-charm-testing && cd openstack-charm-testing/

juju-deployer -c ./next.yaml -d xenial-mitaka

juju status |grep message

juju set neutron-api l2-population=True enable-dvr=True

./configure

source ./novarc

nova boot --flavor 2 --image trusty --nic net-id=$(neutron net-list |grep ' private ' |awk '{print $2}') --poll i1

nova secgroup-add-rule default icmp -1 -1 0.0.0.0/0

nova secgroup-add-rule default tcp 22 22 0.0.0.0/0

nova floating-ip-create

nova floating-ip-associate i1 $(nova floating-ip-list |grep 'ext_net' |awk '{print $2}')

neutron net-create private_2 --provider:network_type gre --provider:segmentation_id 1012

neutron subnet-create --gateway 192.168.22.1 private_2 192.168.22.0/24 --enable_dhcp=True --name private_subnet_2

ROUTER_ID=$(neutron router-list |grep ' provider-router ' |awk '{print $2}')

SUBNET_ID=$(neutron subnet-list |grep '192.168.22.0/24' |awk '{print $2}')

neutron router-interface-add $ROUTER_ID $SUBNET_ID

nova hypervisor-list

nova boot --flavor 2 --image trusty --nic net-id=$(neutron net-list |grep ' private_2 ' |awk '{print $2}') --hint force_hosts=juju-zhhuabj-machine-16 i2

Related configuration

1, neutron.conf on the node hosting neutron-api

router_distributed = True

2, ml2_conf.ini on the node hosting neutron-api

mechanism_drivers =openvswitch,l2population

3, l3_agent.ini on the node hosting nova-compute

agent_mode = dvr

4, l3_agent.ini on the node hosting l3-agent

agent_mode = dvr_snat

5, openvswitch_agent.ini on all nodes

[agent]

l2_population = True

enable_distributed_routing = True
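A quick way to sanity-check the agent_mode settings listed above is to parse the ini text and compare it with the node role. The sketch below is only an illustration: the role names, the helper name and the expected-mode table are assumptions taken from this section, and the fallback to legacy assumes agent_mode is unset.

```python
import configparser

# Expected l3-agent mode per node role, as listed in this section.
EXPECTED_AGENT_MODE = {"compute": "dvr", "network": "dvr_snat"}


def check_l3_agent_mode(ini_text, role):
    """Return (configured_mode, matches_role) for an l3_agent.ini snippet."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    # Treat an unset agent_mode as legacy
    mode = parser.get("DEFAULT", "agent_mode", fallback="legacy")
    return mode, mode == EXPECTED_AGENT_MODE[role]


compute_ini = "[DEFAULT]\nagent_mode = dvr\n"
network_ini = "[DEFAULT]\n"

print(check_l3_agent_mode(compute_ini, "compute"))
print(check_l3_agent_mode(network_ini, "network"))
```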

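Policy-based routing

Suppose a router has several interfaces; one of them is IF1 (with IP IP1, and P1 as the gateway reached through it), and the subnet attached to it is P1_NET.

a, Create routing table T1: echo 200 T1 >> /etc/iproute2/rt_tables

b, Set the default route in the main table: ip route add default via $P1

c, Set the route in the main table: ip route add $P1_NET dev $IF1 src $IP1 (the src argument is required; it works together with step d)

d, Set the routing rule: ip rule add from $IP1 table T1 (works together with step c)

e, Set the route in table T1: ip route add $P1_NET dev $IF1 src $IP1 table T1 && ip route flush cache

f, Load balancing: ip route add default scope global nexthop via $P1 dev $IF1 weight 1 \
nexthop via $P2 dev $IF2 weight 1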
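Steps a to e can be generated from four values. The sketch below only builds the command strings listed above and does not run them; the function name and the sample addresses are hypothetical.

```python
def policy_routing_commands(ifname, ip, subnet, gateway, table_name="T1", table_id=200):
    """Build the iproute2 commands of steps a-e for one interface."""
    return [
        f"echo {table_id} {table_name} >> /etc/iproute2/rt_tables",            # a
        f"ip route add default via {gateway}",                                  # b
        f"ip route add {subnet} dev {ifname} src {ip}",                         # c
        f"ip rule add from {ip} table {table_name}",                            # d
        f"ip route add {subnet} dev {ifname} src {ip} table {table_name}",      # e
        "ip route flush cache",
    ]


# Hypothetical values for IF1, IP1, P1_NET and P1.
commands = policy_routing_commands("eth1", "10.0.1.2", "10.0.1.0/24", "10.0.1.1")
for command in commands:
    print(command)
```

The rule from step d is what makes packets sourced from IP1 consult table T1 instead of the main table, which is the same mechanism DVR uses inside the qrouter namespace.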

1, Centralized SNAT traffic (taken when the compute node has no FIP, and also when the compute node has a FIP but the VM has none)

DVR deployment without FIP - SNAT goes through the centralized l3-agent:

- The qr namespace (R ns) on the compute node uses policy routing with table=3232240897 to steer SNAT traffic from the compute node to the centralized network node (e.g. qr1=192.168.21.1, sg1=192.168.21.3, VM=192.168.21.5)

ip netns exec qr ip route add 192.168.21.0/24 dev qr1 src 192.168.21.1 table main #ingress

ip netns exec qr ip rule add from 192.168.21.1/24 table 3232240897 #egress

ip netns exec qr ip route add default via 192.168.21.3 dev qr1 table 3232240897

- The SNAT namespace on the network node uses the following SNAT rules

-A neutron-l3-agent-snat -o qg -j SNAT --to-source <FIP_on_qg>

-A neutron-l3-agent-snat -m mark ! --mark 0x2/0xffff -m conntrack --ctstate DNAT -j SNAT --to-source <FIP_on_qg>

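The complete path is as follows:

The compute node has only the qrouter-xxx namespace (the fip-xxx namespace exists only when the compute node has a FIP)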

$ sudo ip netns exec qrouter-9a3594b0-52d1-4f29-a799-2576682e275b ip addr show

19: qr-48e90ad1-5f: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1458 qdisc noqueue state UNKNOWN group default qlen 1

link/ether fa:16:3e:f8:fe:97 brd ff:ff:ff:ff:ff:ff

inet 192.168.21.1/24 brd 192.168.21.255 scope global qr-48e90ad1-5f

valid_lft forever preferred_lft forever

$ sudo ip netns exec qrouter-9a3594b0-52d1-4f29-a799-2576682e275b ip rule list

0: from all lookup local

32766: from all lookup main

32767: from all lookup default

3232240897: from 192.168.21.1/24 lookup 3232240897

$ sudo ip netns exec qrouter-9a3594b0-52d1-4f29-a799-2576682e275b ip route list table main

192.168.21.0/24 dev qr-48e90ad1-5f proto kernel scope link src 192.168.21.1

$ sudo ip netns exec qrouter-9a3594b0-52d1-4f29-a799-2576682e275b ip route list table 3232240897

default via 192.168.21.3 dev qr-48e90ad1-5f
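The table number in the output above is not arbitrary: 3232240897 is the 32-bit integer form of the gateway address 192.168.21.1, and the 218103809 used with gateway 13.0.0.1 elsewhere in this post follows the same pattern. The sketch below just reproduces that arithmetic; it is an observation drawn from the numbers in this post, and the function name is hypothetical.

```python
import ipaddress


def snat_table_id(gateway_ip):
    """Convert an IPv4 gateway address to the integer used as routing table id."""
    return int(ipaddress.IPv4Address(gateway_ip))


print(snat_table_id("192.168.21.1"))  # table seen in 'ip rule list' above
print(snat_table_id("13.0.0.1"))      # table used in the 13.0.0.0/24 example
```

Because every subnet gateway has a distinct address, each internal subnet of the router gets its own policy-routing table.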

On the network node, the IP on the sg-xxx interface is an address in the same subnet as the gateway, dedicated to SNAT

$ sudo ip netns list

qrouter-9a3594b0-52d1-4f29-a799-2576682e275b (id: 2)

snat-9a3594b0-52d1-4f29-a799-2576682e275b (id: 1)

qdhcp-7a8aad5b-393e-4428-b098-066d002b2253 (id: 0)

$ sudo ip netns exec snat-9a3594b0-52d1-4f29-a799-2576682e275b ip addr show

2: qg-b825f548-32@if10: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1458 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:c4:fd:ad brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.5.150.0/16 brd 10.5.255.255 scope global qg-b825f548-32

valid_lft forever preferred_lft forever

3: sg-95540cb3-85@if12: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1458 qdisc noqueue state UP group default qlen 1000

link/ether fa:16:3e:62:d3:26 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.21.3/24 brd 192.168.21.255 scope global sg-95540cb3-85

valid_lft forever preferred_lft forever

# When the VM has no FIP

$ sudo ip netns exec snat-9a3594b0-52d1-4f29-a799-2576682e275b iptables-save |grep SNAT

-A neutron-l3-agent-snat -o qg-b825f548-32 -j SNAT --to-source 10.5.150.0

-A neutron-l3-agent-snat -m mark ! --mark 0x2/0xffff -m conntrack --ctstate DNAT -j SNAT --to-source 10.5.150.0

2, When the compute node has a FIP and the VM also has a FIP, SNAT goes through the compute node

DVR deployment with FIP - SNAT goes from the qr ns to the FIP ns via the peer devices rfp and fpr

- The qr namespace on the compute node uses policy routing with table=16 to steer SNAT traffic from the qr ns to the FIP ns on the same compute node (e.g. rfp/qr=169.254.109.46/31, fpr/fip=169.254.106.115/31, VM=192.168.21.5)

ip netns exec qr ip route add 192.168.21.0/24 dev qr src 192.168.21.1 table main #ingress

ip netns exec qr ip rule add from 192.168.21.5 table 16 #egress

ip netns exec qr ip route add default via 169.254.106.115 dev rfp table 16

- The qr namespace on the compute node also uses the following SNAT rule

-A neutron-l3-agent-float-snat -s 192.168.21.5/32 -j SNAT --to-source <FIP_on_rfp>

The actual setup is as follows. As with DNAT below, since the compute node already has a FIP, SNAT also leaves from the compute node, so SNAT traffic has to be steered from the qrouter-xxx namespace to the fip-xxx namespace through the peer devices rfp-xxx and fpr-xxx, using routing table 16.

$ sudo ip netns list

qrouter-9a3594b0-52d1-4f29-a799-2576682e275b

fip-57282976-a9b2-4c3f-9772-6710507c5c4e

$ sudo ip netns exec qrouter-9a3594b0-52d1-4f29-a799-2576682e275b ip addr show

4: rfp-9a3594b0-5@if5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1458 qdisc noqueue state UP group default qlen 1000

link/ether 3a:63:a4:11:4d:c4 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 169.254.109.46/31 scope global rfp-9a3594b0-5

valid_lft forever preferred_lft forever

inet 10.5.150.1/32 brd 10.5.150.1 scope global rfp-9a3594b0-5

valid_lft forever preferred_lft forever

19: qr-48e90ad1-5f: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1458 qdisc noqueue state UNKNOWN group default qlen 1

link/ether fa:16:3e:f8:fe:97 brd ff:ff:ff:ff:ff:ff

inet 192.168.21.1/24 brd 192.168.21.255 scope global qr-48e90ad1-5f

valid_lft forever preferred_lft forever

$ sudo ip netns exec qrouter-9a3594b0-52d1-4f29-a799-2576682e275b ip rule list

0: from all lookup local

32766: from all lookup main

32767: from all lookup default

57481: from 192.168.21.5 lookup 16

3232240897: from 192.168.21.1/24 lookup 3232240897

$ sudo ip netns exec qrouter-9a3594b0-52d1-4f29-a799-2576682e275b ip route list table main

169.254.109.46/31 dev rfp-9a3594b0-5 proto kernel scope link src 169.254.109.46

192.168.21.0/24 dev qr-48e90ad1-5f proto kernel scope link src 192.168.21.1

$ sudo ip netns exec qrouter-9a3594b0-52d1-4f29-a799-2576682e275b ip route list table 16

default via 169.254.106.115 dev rfp-9a3594b0-5

$ sudo ip netns exec qrouter-9a3594b0-52d1-4f29-a799-2576682e275b iptables-save |grep SNAT

-A neutron-l3-agent-float-snat -s 192.168.21.5/32 -j SNAT --to-source 10.5.150.1

$ sudo ip netns exec fip-57282976-a9b2-4c3f-9772-6710507c5c4e ip addr show

5: fpr-9a3594b0-5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1458 qdisc noqueue state UP group default qlen 1000

link/ether fa:e7:f9:fd:5a:40 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 169.254.106.115/31 scope global fpr-9a3594b0-5

valid_lft forever preferred_lft forever

17: fg-d35ad06f-ae: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1458 qdisc noqueue state UNKNOWN group default qlen 1

link/ether fa:16:3e:b5:2d:2c brd ff:ff:ff:ff:ff:ff

inet 10.5.150.2/16 brd 10.5.255.255 scope global fg-d35ad06f-ae

valid_lft forever preferred_lft forever

$ sudo ip netns exec qrouter-9a3594b0-52d1-4f29-a799-2576682e275b route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

169.254.106.114 0.0.0.0 255.255.255.254 U 0 0 0 rfp-9a3594b0-5

192.168.21.0 0.0.0.0 255.255.255.0 U 0 0 0 qr-48e90ad1-5f

$ sudo ip netns exec fip-57282976-a9b2-4c3f-9772-6710507c5c4e route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 10.5.0.1 0.0.0.0 UG 0 0 0 fg-d35ad06f-ae

10.5.0.0 0.0.0.0 255.255.0.0 U 0 0 0 fg-d35ad06f-ae

10.5.150.1 169.254.106.114 255.255.255.255 UGH 0 0 0 fpr-9a3594b0-5

169.254.106.114 0.0.0.0 255.255.255.254 U 0 0 0 fpr-9a3594b0-5

3, DNAT traffic on the compute node (one compute node needs two external IPs)

The namespaces on the network node are qrouter (holds the subnet gateway) and snat (has the qg- interface, plus the sg- interface used for SNAT, whose IP differs from the subnet gateway).

The namespaces on the compute node are qrouter (holds the subnet gateway; rfp: 169.254.31.29/24, and the FIP sits on the rfp interface) and fip (fg: the external gateway interface; fpr: 169.254.31.28). The qrouter and fip namespaces are linked through the peer devices fpr and rfp, and traffic is steered from fg in fip through the peer devices to the FIP in qrouter.

DVR deployment with FIP - DNAT first relies on ARP proxy, then goes from the FIP ns to the qr ns via the peer devices rfp and fpr

- The FIP ns needs to proxy ARP for FIP_on_rfp on fg (fg will finally connect to br-ex) because rfp is in another ns

ip netns exec fip sysctl net.ipv4.conf.fg-d35ad06f-ae.proxy_arp=1

- The floating traffic is guided from the FIP ns to the qr ns via the peer devices (rfp and fpr)

- The qr namespace on the compute node has the following DNAT rules

ip netns exec qr iptables -t nat -A neutron-l3-agent-PREROUTING -d <FIP_on_rfp> -j DNAT --to-destination 192.168.21.5

ip netns exec qr iptables -t nat -A neutron-l3-agent-POSTROUTING ! -i rfp ! -o rfp -m conntrack ! --ctstate DNAT -j ACCEPT

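Continuing, assign a floating IP (192.168.122.219) to the VM 13.0.0.6

a, Besides its gateway 13.0.0.1, the qrouter namespace on the compute node also holds the floating IP (192.168.122.219) and rfp-db5090df-8=169.254.31.28/31

It has the DNAT rule (-A neutron-l3-agent-PREROUTING -d 192.168.122.219/32 -j DNAT --to-destination 13.0.0.6)

-A neutron-l3-agent-POSTROUTING ! -i rfp-db5090df-8 ! -o rfp-db5090df-8 -m conntrack ! --ctstate DNAT -j ACCEPT

c, Besides the qrouter namespace, the compute node gains a fip namespace. It consumes one more external IP (192.168.122.220), and fpr-db5090df-8=169.254.31.29/31

d, SNAT traffic is handled as before by routing table 218103809 (all commands below are run inside the namespace; I dropped the prefix for clarity)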

ip route add 13.0.0.0/24 dev rfp-db5090df-8 src 13.0.0.1

ip rule add from 13.0.0.1 table 218103809

ip route add 13.0.0.0/24 dev rfp-db5090df-8 src 13.0.0.1 table 218103809

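e, Inside the qrouter namespace a new routing table 16 handles the FIP traffic, steering traffic leaving the VM 13.0.0.6 through rfp-db5090df-8 into the fip namespace

ip rule add from 13.0.0.6 table 16

ip route add default via 169.254.31.29 dev rfp-db5090df-8 table 16

f, The fip namespace handles DNAT traffic: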

ip route add 169.254.31.28/31 dev fpr-db5090df-8

ip route add 192.168.122.219 dev fpr-db5090df-8

ip route add 192.168.122.0/24 dev fg-c56eb4c0-b0
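The per-FIP NAT rules in the qrouter namespace follow a fixed pattern: one DNAT rule for inbound traffic and one SNAT rule for outbound traffic. The sketch below only formats the rule text in the form shown in this post; the function name is hypothetical and nothing is applied to iptables.

```python
def floating_ip_nat_rules(floating_ip, fixed_ip):
    """Return the DNAT and SNAT rule lines for one floating IP association."""
    return [
        # Inbound: traffic addressed to the FIP is rewritten to the VM address
        f"-A neutron-l3-agent-PREROUTING -d {floating_ip}/32 "
        f"-j DNAT --to-destination {fixed_ip}",
        # Outbound: traffic from the VM leaves with the FIP as its source
        f"-A neutron-l3-agent-float-snat -s {fixed_ip}/32 "
        f"-j SNAT --to-source {floating_ip}",
    ]


rules = floating_ip_nat_rules("192.168.122.219", "13.0.0.6")
for rule in rules:
    print(rule)
```

Since both directions are handled by NAT rules, the FIP itself does not have to be configured on any interface in the qrouter namespace.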

4, East-west traffic (different subnets under the same tenant)

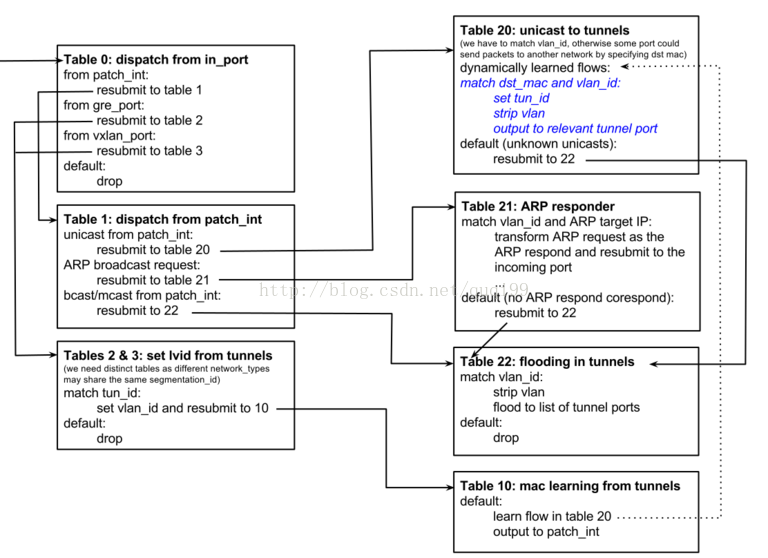

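Let us first review the l2-pop flow tables in the figure (from: https://wiki.openstack.org/wiki/Ovs-flow-logic ; the implementing code is: https://review.openstack.org/#/c/41239/2/neutron/plugins/openvswitch/agent/ovs_neutron_agent.py ). patch_int, gre_port and vxlan_port are three ports on br-tun. The flow tables are designed to: 1) learn addresses, that is, the MACs of VMs and of remote tunnels; 2) suppress ingress broadcast; 3) suppress all unknown broadcast except egress broadcast over tunnels such as gre/vxlan.

- Ingress traffic - traffic entering br-tun from gre_port or vxlan_port first goes to table 2&3, where the tunnel id is removed and the lvid added, then to table 10 to learn the remote tunnel address, and finally to patch-int so that it passes from br-tun to br-int

- Egress traffic - traffic entering br-tun from patch-int first goes to table 1; unicast goes to table 20 for the learned VM MACs, broadcast or multicast goes to table 22, and ARP broadcast goes to table 21

As the east-west flow diagram below shows (different subnets of the same tenant), the crux of the design is that the gateway of one subnet necessarily exists on several compute nodes. So all gateways of the same subnet should use the same IP and MAC address on every compute node, or alternatively a unique DVR MAC address can be generated for each compute node. The flow tables therefore have to rewrite to and from the DVR MAC when traffic leaves and enters br-tun.

Specifically, traffic entering and leaving a compute node should have the gateway MAC replaced by the DVR MAC; these flows are added on top of the figure above, see: https://wiki.openstack.org/wiki/Neutron/DVR_L2_Agent

The summarized flow tables are: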

For egress traffic:

table=1, priority=4, dl_vlan=vlan1, dl_type=arp, arp_tpa=gw1 actions:drop #ARP traffic from all subnets of one compute node to their gateway on other compute nodes

table=1, priority=4, dl_vlan=vlan1, dl_dst=gw1-mac actions:drop #traffic from all subnets of one compute node to their gateway on other compute nodes

table=1, priority=1, dl_vlan=vlan1, dl_src=gw1-mac, actions:mod dl_src=dvr-cn1-mac,resubmit(,2) #all traffic leaving the compute node uses the DVR MAC

For ingress traffic, an extra table 9 is inserted between table 2&3 (which now jump to table 9 first) and table 10:

table=9, priority=1, dl_src=dvr-cn1-mac actions=output-> patch-int

table=9, priority=0, actions=resubmit(,10)
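The summarized table 1 and table 9 logic can be modelled as two small functions. This is a simplified sketch of the matching order only (no VLANs, priorities or real OpenFlow); the function names are hypothetical, and the MAC addresses are borrowed from the flow dump in this post purely as sample data.

```python
GW_IP = "192.168.21.1"
GW_MAC = "fa:16:3e:f8:fe:97"    # gateway MAC, identical on every compute node
DVR_MAC = "fa:16:3f:6b:1f:bb"   # unique DVR MAC of this compute node


def table1_egress(pkt, gw_ip, gw_mac, dvr_mac):
    """Summarized br-tun table 1: returns (action, packet)."""
    if pkt.get("arp_tpa") == gw_ip:
        return "drop", pkt                 # ARP for the gateway stays local
    if pkt.get("dl_dst") == gw_mac:
        return "drop", pkt                 # frames addressed to the gateway stay local
    if pkt.get("dl_src") == gw_mac:
        pkt = dict(pkt, dl_src=dvr_mac)    # routed traffic leaves with the DVR MAC
    return "resubmit(,2)", pkt


def table9_ingress(pkt, dvr_macs):
    """Summarized br-tun table 9: frames from a known DVR MAC skip MAC learning."""
    if pkt.get("dl_src") in dvr_macs:
        return "output:patch-int"
    return "resubmit(,10)"


routed = {"dl_src": GW_MAC, "dl_dst": "fa:16:3e:55:1d:42"}
action, sent = table1_egress(routed, GW_IP, GW_MAC, DVR_MAC)
print(action, sent["dl_src"])
print(table9_ingress(sent, {DVR_MAC}))
```

The rewrite matters because the same gateway MAC exists on every compute node; without it the learning flows in table 10 would see that MAC arriving from many tunnels.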

The actual flow tables are:

$ sudo ovs-ofctl dump-flows br-int

NXST_FLOW reply (xid=0x4):

cookie=0xb9f4f1698b73b733, duration=4086.225s, table=0, n_packets=0, n_bytes=0, idle_age=4086, priority=10,icmp6,in_port=8,icmp_type=136 actions=resubmit(,24)

cookie=0xb9f4f1698b73b733, duration=4085.852s, table=0, n_packets=5, n_bytes=210, idle_age=3963, priority=10,arp,in_port=8 actions=resubmit(,24)

cookie=0xb9f4f1698b73b733, duration=91031.974s, table=0, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=4,in_port=1,dl_src=fa:16:3f:02:1a:a7 actions=resubmit(,2)

cookie=0xb9f4f1698b73b733, duration=91031.667s, table=0, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=2,in_port=2,dl_src=fa:16:3f:02:1a:a7 actions=resubmit(,1)

cookie=0xb9f4f1698b73b733, duration=91031.178s, table=0, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=4,in_port=1,dl_src=fa:16:3f:be:36:1f actions=resubmit(,2)

cookie=0xb9f4f1698b73b733, duration=91030.686s, table=0, n_packets=87, n_bytes=6438, idle_age=475, hard_age=65534, priority=2,in_port=2,dl_src=fa:16:3f:be:36:1f actions=resubmit(,1)

cookie=0xb9f4f1698b73b733, duration=91030.203s, table=0, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=4,in_port=1,dl_src=fa:16:3f:ef:44:e9 actions=resubmit(,2)

cookie=0xb9f4f1698b73b733, duration=91029.710s, table=0, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=2,in_port=2,dl_src=fa:16:3f:ef:44:e9 actions=resubmit(,1)

cookie=0xb9f4f1698b73b733, duration=91033.507s, table=0, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=2,in_port=1 actions=drop

cookie=0xb9f4f1698b73b733, duration=91039.210s, table=0, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=0 actions=NORMAL

cookie=0xb9f4f1698b73b733, duration=91033.676s, table=0, n_packets=535, n_bytes=51701, idle_age=3963, hard_age=65534, priority=1 actions=NORMAL

cookie=0xb9f4f1698b73b733, duration=4090.101s, table=1, n_packets=2, n_bytes=196, idle_age=3965, priority=4,dl_vlan=4,dl_dst=fa:16:3e:26:85:02 actions=strip_vlan,mod_dl_src:fa:16:3e:6d:f9:9c,output:8

cookie=0xb9f4f1698b73b733, duration=91033.992s, table=1, n_packets=84, n_bytes=6144, idle_age=475, hard_age=65534, priority=1 actions=drop

cookie=0xb9f4f1698b73b733, duration=91033.835s, table=2, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=1 actions=drop

cookie=0xb9f4f1698b73b733, duration=91034.151s, table=23, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=0 actions=drop

cookie=0xb9f4f1698b73b733, duration=4086.430s, table=24, n_packets=0, n_bytes=0, idle_age=4086, priority=2,icmp6,in_port=8,icmp_type=136,nd_target=fe80::f816:3eff:fe26:8502 actions=NORMAL

cookie=0xb9f4f1698b73b733, duration=4086.043s, table=24, n_packets=5, n_bytes=210, idle_age=3963, priority=2,arp,in_port=8,arp_spa=192.168.22.5 actions=NORMAL

cookie=0xb9f4f1698b73b733, duration=91038.874s, table=24, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=0 actions=drop

$ sudo ovs-ofctl dump-flows br-tun

NXST_FLOW reply (xid=0x4):

cookie=0x8502c5292d4bca36, duration=91092.422s, table=0, n_packets=165, n_bytes=14534, idle_age=4114, hard_age=65534, priority=1,in_port=1 actions=resubmit(,1)

cookie=0x8502c5292d4bca36, duration=4158.903s, table=0, n_packets=86, n_bytes=6340, idle_age=534, priority=1,in_port=4 actions=resubmit(,3)

cookie=0x8502c5292d4bca36, duration=4156.707s, table=0, n_packets=104, n_bytes=9459, idle_age=4114, priority=1,in_port=5 actions=resubmit(,3)

cookie=0x8502c5292d4bca36, duration=91093.596s, table=0, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=0 actions=drop

cookie=0x8502c5292d4bca36, duration=4165.402s, table=1, n_packets=0, n_bytes=0, idle_age=4165, priority=3,arp,dl_vlan=3,arp_tpa=192.168.21.1 actions=drop

cookie=0x8502c5292d4bca36, duration=4154.134s, table=1, n_packets=1, n_bytes=42, idle_age=4123, priority=3,arp,dl_vlan=4,arp_tpa=192.168.22.1 actions=drop

cookie=0x8502c5292d4bca36, duration=4165.079s, table=1, n_packets=0, n_bytes=0, idle_age=4165, priority=2,dl_vlan=3,dl_dst=fa:16:3e:f8:fe:97 actions=drop

cookie=0x8502c5292d4bca36, duration=4153.942s, table=1, n_packets=0, n_bytes=0, idle_age=4153, priority=2,dl_vlan=4,dl_dst=fa:16:3e:6d:f9:9c actions=drop

cookie=0x8502c5292d4bca36, duration=4164.583s, table=1, n_packets=0, n_bytes=0, idle_age=4164, priority=1,dl_vlan=3,dl_src=fa:16:3e:f8:fe:97 actions=mod_dl_src:fa:16:3f:6b:1f:bb,resubmit(,2)

cookie=0x8502c5292d4bca36, duration=4153.760s, table=1, n_packets=0, n_bytes=0, idle_age=4153, priority=1,dl_vlan=4,dl_src=fa:16:3e:6d:f9:9c actions=mod_dl_src:fa:16:3f:6b:1f:bb,resubmit(,2)

cookie=0x8502c5292d4bca36, duration=91092.087s, table=1, n_packets=161, n_bytes=14198, idle_age=4114, hard_age=65534, priority=0 actions=resubmit(,2)

cookie=0x8502c5292d4bca36, duration=91093.596s, table=2, n_packets=104, n_bytes=8952, idle_age=4114, hard_age=65534, priority=0,dl_dst=00:00:00:00:00:00/01:00:00:00:00:00 actions=resubmit(,20)

cookie=0x8502c5292d4bca36, duration=91093.596s, table=2, n_packets=60, n_bytes=5540, idle_age=4115, hard_age=65534, priority=0,dl_dst=01:00:00:00:00:00/01:00:00:00:00:00 actions=resubmit(,22)

cookie=0x8502c5292d4bca36, duration=4166.465s, table=3, n_packets=81, n_bytes=6018, idle_age=534, priority=1,tun_id=0x5 actions=mod_vlan_vid:3,resubmit(,9)

cookie=0x8502c5292d4bca36, duration=4155.086s, table=3, n_packets=109, n_bytes=9781, idle_age=4024, priority=1,tun_id=0x3f4 actions=mod_vlan_vid:4,resubmit(,9)

cookie=0x8502c5292d4bca36, duration=91093.595s, table=3, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=0 actions=drop

cookie=0x8502c5292d4bca36, duration=91093.594s, table=4, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=0 actions=drop

cookie=0x8502c5292d4bca36, duration=91093.593s, table=6, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=0 actions=drop

cookie=0x8502c5292d4bca36, duration=91090.524s, table=9, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=1,dl_src=fa:16:3f:02:1a:a7 actions=output:1

cookie=0x8502c5292d4bca36, duration=91089.541s, table=9, n_packets=87, n_bytes=6438, idle_age=534, hard_age=65534, priority=1,dl_src=fa:16:3f:be:36:1f actions=output:1

cookie=0x8502c5292d4bca36, duration=91088.584s, table=9, n_packets=0, n_bytes=0, idle_age=65534, hard_age=65534, priority=1,dl_src=fa:16:3f:ef:44:e9 actions=output:1

cookie=0x8502c5292d4bca36, duration=91092.260s, table=9, n_packets=104, n_bytes=9459, idle_age=4114, hard_age=65534, priority=0 actions=resubmit(,10)

cookie=0x8502c5292d4bca36, duration=91093.593s, table=10, n_packets=104, n_bytes=9459, idle_age=4114, hard_age=65534, priority=1 actions=learn(table=20,hard_timeout=300,priority=1,cookie=0x8502c5292d4bca36,NXM_OF_VLAN_TCI[0..11],NXM_OF_ETH_DST[]=NXM_OF_ETH_SRC[],load:0->NXM_OF_VLAN_TCI[],load:NXM_NX_TUN_ID[]->NXM_NX_TUN_ID[],output:NXM_OF_IN_PORT[]),output:1

cookie=0x8502c5292d4bca36, duration=4158.170s, table=20, n_packets=0, n_bytes=0, idle_age=4158, priority=2,dl_vlan=3,dl_dst=fa:16:3e:4a:60:12 actions=strip_vlan,set_tunnel:0x5,output:4

cookie=0x8502c5292d4bca36, duration=4155.621s, table=20, n_packets=0, n_bytes=0, idle_age=4155, priority=2,dl_vlan=3,dl_dst=fa:16:3e:f9:88:90 actions=strip_vlan,set_tunnel:0x5,output:5

cookie=0x8502c5292d4bca36, duration=4155.219s, table=20, n_packets=0, n_bytes=0, idle_age=4155, priority=2,dl_vlan=3,dl_dst=fa:16:3e:62:d3:26 actions=strip_vlan,set_tunnel:0x5,output:5

cookie=0x8502c5292d4bca36, duration=4150.520s, table=20, n_packets=101, n_bytes=8658, idle_age=4114, priority=2,dl_vlan=4,dl_dst=fa:16:3e:55:1d:42 actions=strip_vlan,set_tunnel:0x3f4,output:5

cookie=0x8502c5292d4bca36, duration=4150.330s, table=20, n_packets=0, n_bytes=0, idle_age=4150, priority=2,dl_vlan=4,dl_dst=fa:16:3e:3b:59:ef actions=strip_vlan,set_tunnel:0x3f4,output:5

cookie=0x8502c5292d4bca36, duration=91093.592s, table=20, n_packets=2, n_bytes=196, idle_age=4567, hard_age=65534, priority=0 actions=resubmit(,22)

cookie=0x8502c5292d4bca36, duration=4158.469s, table=22, n_packets=0, n_bytes=0, idle_age=4158, hard_age=4156, dl_vlan=3 actions=strip_vlan,set_tunnel:0x5,output:4,output:5

cookie=0x8502c5292d4bca36, duration=4155.574s, table=22, n_packets=13, n_bytes=1554, idle_age=4115, hard_age=4150, dl_vlan=4 actions=strip_vlan,set_tunnel:0x3f4,output:4,output:5

cookie=0x8502c5292d4bca36, duration=91093.416s, table=22, n_packets=47, n_bytes=3986, idle_age=4148, hard_age=65534, priority=0 actions=drop

Feature history

- DVR and VRRP are only supported since Juno

- DVR only supports the use of the vxlan overlay network for Juno

- l2-population must be disabled with VRRP before Newton

- l2-population must be enabled with DVR for all releases

- VRRP must be disabled with DVR before Newton

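- DVR supports augmentation using VRRP since Newton, that is, VRRP HA can be added to the centralized SNAT l3-agent - https://review.openstack.org/#/c/143169/

- Impact of DVR on VPNaaS. The l3-agent has three modes: legacy, dvr and dvr_snat. dvr is used only on compute nodes, while legacy and dvr_snat are used only on network nodes. In legacy mode there is only the qrouter-xxx ns (SNAT and so on are set up there); only dvr_snat mode has the snat-xxx ns (SNAT is set up there). By its nature VPNaaS can only run centralized (neutron router-create vpn-router --distributed=False --ha=True), so for a DVR router the VPNaaS service should be set up only in the centralized snat-xxx ns, and VPN traffic should be steered from the compute node to the network node. See: https://review.openstack.org/#/c/143203/ .

- Impact of DVR on FWaaS. In the legacy era Neutron had only qrouter-xxx, so both east-west and north-south firewalling lived in qrouter-xxx. With DVR, the compute node gains fip-xxx (no firewall rules are installed in this ns) and the network node gains snat-xxx for SNAT, so we make sure that qrouter-xxx on the compute node also has north-south firewalling and that DVR east-west traffic is not broken. See: https://review.openstack.org/#/c/113359/ and https://specs.openstack.org/openstack/neutron-specs/specs/juno/neutron-dvr-fwaas.html

- DVR has no impact on LBaaS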
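The release constraints in this list can be written down as a small checker. This is a sketch that encodes only the rules stated above; the function name and messages are hypothetical, and the release list is simply the OpenStack release order from Icehouse to Queens.

```python
RELEASES = ["icehouse", "juno", "kilo", "liberty", "mitaka",
            "newton", "ocata", "pike", "queens"]


def config_problems(release, dvr=False, vrrp=False, l2pop=False):
    """List which rules from the feature history a configuration violates."""
    index = RELEASES.index(release)
    juno, newton = RELEASES.index("juno"), RELEASES.index("newton")
    problems = []
    if (dvr or vrrp) and index < juno:
        problems.append("DVR and VRRP are only supported since Juno")
    if dvr and not l2pop:
        problems.append("l2-population must be enabled with DVR")
    if vrrp and l2pop and index < newton:
        problems.append("l2-population must be disabled with VRRP before Newton")
    if dvr and vrrp and index < newton:
        problems.append("VRRP must be disabled with DVR before Newton")
    return problems


print(config_problems("mitaka", dvr=True, vrrp=True, l2pop=True))
print(config_problems("newton", dvr=True, vrrp=True, l2pop=True))
```

The first call reports two conflicts, while the same settings on Newton pass, which matches the note that DVR and VRRP can be combined from Newton on.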

Juju DVR

juju config neutron-api enable-dvr=True

juju config neutron-api neutron-external-network=ext_net

juju config neutron-gateway bridge-mappings=physnet1:br-ex

juju config neutron-openvswitch bridge-mappings=physnet1:br-ex #dvr need this

juju config neutron-openvswitch ext-port='ens7' #dvr need this

juju config neutron-gateway ext-port='ens7'

#juju config neutron-openvswitch data-port='br-ex:ens7'

#juju config neutron-gateway data-port='br-ex:ens7'

juju config neutron-openvswitch enable-local-dhcp-and-metadata=True #enable dhcp-agent in every nova-compute

juju config neutron-api dhcp-agents-per-network=3

juju config neutron-openvswitch dns-servers=8.8.8.8

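Note: the juju code below shows that ext_port is only needed with DVR. For the bridge_mappings of data-port, the neutron l2-agent is needed to plug the external NIC into br-ex; on a DVR compute node the l2-agent is presumably not used for this, so juju itself uses ext_port to plug the external NIC into the br-ex bridge (using br-ex this way means there is no l2-agent, so some ports cannot be managed and show status DOWN even when they work; switching to a provider network fixes it, see - https://bugzilla.redhat.com/show_bug.cgi?id=1054857#c3).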

def configure_ovs():
    status_set('maintenance', 'Configuring ovs')
    if not service_running('openvswitch-switch'):
        full_restart()
    datapath_type = determine_datapath_type()
    add_bridge(INT_BRIDGE, datapath_type)
    add_bridge(EXT_BRIDGE, datapath_type)
    ext_port_ctx = None
    # ext-port is only consulted when DVR is enabled
    if use_dvr():
        ext_port_ctx = ExternalPortContext()()
    if ext_port_ctx and ext_port_ctx['ext_port']:
        add_bridge_port(EXT_BRIDGE, ext_port_ctx['ext_port'])

class ExternalPortContext(NeutronPortContext):
    def __call__(self):
        ctxt = {}
        ports = config('ext-port')
        if ports:
            ports = [p.strip() for p in ports.split()]
            ports = self.resolve_ports(ports)
            if ports:
                # only the first resolved port is used
                ctxt = {"ext_port": ports[0]}
                napi_settings = NeutronAPIContext()()
                mtu = napi_settings.get('network_device_mtu')
                if mtu:
                    ctxt['ext_port_mtu'] = mtu
        return ctxt

1, br-int and br-ex are connected through fg- and phy-br-ex; fg- sits on br-int, and br-ex holds the external NIC ens7

Bridge br-ex

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port "ens7"

Interface "ens7"

Port br-ex

Interface br-ex

type: internal

Port phy-br-ex

Interface phy-br-ex

type: patch

options: {peer=int-br-ex}

Bridge br-int

Port "fg-8e1bdebc-b0"

tag: 2

Interface "fg-8e1bdebc-b0"

type: internal

What if there is no spare external NIC ens7, only a single NIC that has been turned into a linux bridge? Then this works:

https://bugs.launchpad.net/charms/+source/neutron-openvswitch/+bug/1635067

on each compute node

# create veth pair between br-bond0 and veth-tenant

ip l add name veth-br-bond0 type veth peer name veth-tenant

# set mtu if needed on veth interfaces and turn up

#ip l set dev veth-br-bond0 mtu 9000

#ip l set dev veth-tenant mtu 9000

ip l set dev veth-br-bond0 up

ip l set dev veth-tenant up

# add br-bond0 as master for veth-br-bond0

ip l set veth-br-bond0 master br-bond0

juju set neutron-openvswitch data-port="br-ex:veth-tenant"

2, fip-xxx has ARP proxy enabled

root@juju-23f84c-queens-dvr-7:~# ip netns exec fip-01471212-b65f-4735-9c7e-45bf9ec5eee8 sysctl net.ipv4.conf.fg-8e1bdebc-b0.proxy_arp

net.ipv4.conf.fg-8e1bdebc-b0.proxy_arp = 1

3, qrouter-xxx holds the NAT rules. The FIP does not need to be configured on any interface; it only has to appear in the SNAT/DNAT rules.

root@juju-23f84c-queens-dvr-7:~# ip netns exec qrouter-909c6b55-9bc6-476f-9d28-c32d031c41d7 iptables-save |grep NAT

-A neutron-l3-agent-POSTROUTING ! -i rfp-909c6b55-9 ! -o rfp-909c6b55-9 -m conntrack ! --ctstate DNAT -j ACCEPT

-A neutron-l3-agent-PREROUTING -d 10.5.150.9/32 -i rfp-909c6b55-9 -j DNAT --to-destination 192.168.21.10

-A neutron-l3-agent-float-snat -s 192.168.21.10/32 -j SNAT --to-source 10.5.150.9

-A neutron-postrouting-bottom -m comment --comment "Perform source NAT on outgoing traffic." -j neutron-l3-agent-snat

root@juju-23f84c-queens-dvr-7:~# ip netns exec qrouter-909c6b55-9bc6-476f-9d28-c32d031c41d7 ip addr show |grep 10.5.150.9
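Since the FIP lives only in the NAT rules, the FIP-to-fixed-IP mapping can be recovered from the `iptables-save` text alone. A minimal sketch follows, assuming the rule shapes shown above; the function name `floating_ip_pairs` and the two regular expressions are illustrative and are not part of neutron.

```python
import re

# The two FIP-related rules from the qrouter namespace output above.
RULES = """\
-A neutron-l3-agent-PREROUTING -d 10.5.150.9/32 -i rfp-909c6b55-9 -j DNAT --to-destination 192.168.21.10
-A neutron-l3-agent-float-snat -s 192.168.21.10/32 -j SNAT --to-source 10.5.150.9
"""

DNAT_RE = re.compile(r"-d (\S+?)/32 .*-j DNAT --to-destination (\S+)")
SNAT_RE = re.compile(r"-s (\S+?)/32 .*-j SNAT --to-source (\S+)")


def floating_ip_pairs(iptables_save_text):
    """Return {floating_ip: fixed_ip} for FIPs that have both a DNAT and an SNAT rule."""
    dnat, snat = {}, {}
    for line in iptables_save_text.splitlines():
        match = DNAT_RE.search(line)
        if match:
            dnat[match.group(1)] = match.group(2)  # FIP -> fixed IP
        match = SNAT_RE.search(line)
        if match:
            snat[match.group(2)] = match.group(1)  # FIP -> fixed IP
    # Keep only FIPs whose inbound and outbound rules agree.
    return {fip: fixed for fip, fixed in dnat.items() if snat.get(fip) == fixed}


if __name__ == "__main__":
    print(floating_ip_pairs(RULES))
```

A FIP that shows up in only one direction would be dropped from the result, which is a cheap way to spot a half-installed rule pair.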

Appendix - how to set up a DVR test env

# set up the basic non-dvr openstack

./generate-bundle.sh --series xenial --release ocata

juju add-model xenial-ocata

juju deploy ./b/openstack.yaml

juju add-unit nova-compute

./configure

# enable dvr mode

source ~/novarc

nova interface-attach $(nova list |grep $(juju ssh nova-compute/0 -- hostname) |awk '{print $2}') --net-id=$(neutron net-show zhhuabj_admin_net -c id -f value)

nova interface-attach $(nova list |grep $(juju ssh nova-compute/1 -- hostname) |awk '{print $2}') --net-id=$(neutron net-show zhhuabj_admin_net -c id -f value)

juju ssh nova-compute/0 -- sudo ovs-vsctl add-port br-data ens7

juju ssh nova-compute/0 -- sudo ifconfig ens7 up

juju ssh nova-compute/1 -- sudo ovs-vsctl add-port br-data ens7

juju ssh nova-compute/1 -- sudo ifconfig ens7 up

juju ssh nova-compute/0 -- ip addr show ens7

juju ssh nova-compute/1 -- ip addr show ens7

#juju config neutron-openvswitch bridge-mappings=physnet1:br-data

juju config neutron-openvswitch data-port=br-data:ens7

juju config neutron-api overlay-network-type='gre vxlan'

# wait until the blocked units settle and the status becomes healthy

juju config neutron-api enable-dvr=True

# transform the existing non-dvr router into dvr router

source ~/stsstack-bundles/openstack/novarc

ROUTER_ID=$(neutron router-show provider-router -c id -f value)

neutron router-update $ROUTER_ID --admin_state_up=false

neutron router-update $ROUTER_ID --ha=false --distributed=true

neutron router-update $ROUTER_ID --admin_state_up=true

# remove router from l3-agent, and let it reschedule to dvr compute-node

l3_agent_id=$(neutron agent-list |grep 'L3 agent' |grep $(juju ssh neutron-gateway/0 -- hostname) |awk -F '|' '{print $2}')

neutron l3-agent-router-remove $l3_agent_id $ROUTER_ID

# create two test VMs, and must create a FIP for the VIP

openstack port create --network private --fixed-ip subnet=private_subnet,ip-address=192.168.21.122 vip-port

vip=$(openstack port show vip-port -c fixed_ips -f value |awk -F "'" '{print $2}')

neutron port-create private --name vm1-port --allowed-address-pair ip_address=$vip

neutron port-create private --name vm2-port --allowed-address-pair ip_address=$vip

#openstack port set xx --allowed-address ip-address=$vip

ip_vm1=$(openstack port show vm1-port -c fixed_ips -f value |awk -F "'" '{print $2}')

ip_vm2=$(openstack port show vm2-port -c fixed_ips -f value |awk -F "'" '{print $2}')

ext_net=$(openstack network show ext_net -f value -c id)

fip_vm1=$(openstack floating ip create $ext_net -f value -c floating_ip_address)

fip_vm2=$(openstack floating ip create $ext_net -f value -c floating_ip_address)

openstack floating ip set $fip_vm1 --fixed-ip-address $ip_vm1 --port vm1-port

openstack floating ip set $fip_vm2 --fixed-ip-address $ip_vm2 --port vm2-port

# must create a FIP for the VIP

fip_vip=$(openstack floating ip create $ext_net -f value -c floating_ip_address)

openstack floating ip set $fip_vip --fixed-ip-address $vip --port vip-port

openstack floating ip list

neutron security-group-create sg_vrrp

neutron security-group-rule-create --protocol 112 sg_vrrp

neutron security-group-rule-create --protocol tcp --port-range-min 22 --port-range-max 22 sg_vrrp

neutron security-group-rule-create --protocol icmp sg_vrrp

neutron port-update --security-group sg_vrrp vm1-port

neutron port-update --security-group sg_vrrp vm2-port

nova keypair-add --pub-key ~/.ssh/id_rsa.pub mykey

openstack flavor create --ram 2048 --disk 20 --vcpu 1 --id 122 myflavor

cat << EOF > user-data

#cloud-config

user: ubuntu

password: password

chpasswd: { expire: False }

EOF

openstack server create --wait --image xenial --flavor myflavor --key-name mykey --port $(openstack port show vm1-port -f value -c id) --user-data ./user-data --config-drive true vm1

openstack server create --wait --image xenial --flavor myflavor --key-name mykey --port $(openstack port show vm2-port -f value -c id) --user-data ./user-data --config-drive true vm2

# install keepalived inside two test VMs

sudo apt install -y keepalived

sudo bash -c 'cat > /etc/keepalived/keepalived.conf' << EOF

vrrp_instance VIP_1 {

state MASTER

interface ens2

virtual_router_id 51

priority 150

advert_int 1

authentication {

auth_type PASS

auth_pass supersecretpassword

}

virtual_ipaddress {

192.168.21.122

}

}

EOF

sudo systemctl restart keepalived
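The configuration above only shows the MASTER side. The sketch below renders the same file for both test VMs from one template. Giving the second VM `state BACKUP` and priority 100 is an assumption, not something the original setup states, and `render_keepalived` is a hypothetical helper name.

```python
from string import Template

# Same structure as the keepalived.conf above, with the per-VM values parameterised.
KEEPALIVED_TEMPLATE = Template("""\
vrrp_instance VIP_1 {
    state $state
    interface $interface
    virtual_router_id 51
    priority $priority
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass $auth_pass
    }
    virtual_ipaddress {
        $vip
    }
}
""")


def render_keepalived(state, priority, vip="192.168.21.122",
                      interface="ens2", auth_pass="supersecretpassword"):
    """Render keepalived.conf for one VM; state must be MASTER or BACKUP."""
    if state not in ("MASTER", "BACKUP"):
        raise ValueError("state must be MASTER or BACKUP")
    return KEEPALIVED_TEMPLATE.substitute(
        state=state, priority=priority, vip=vip,
        interface=interface, auth_pass=auth_pass)


if __name__ == "__main__":
    print(render_keepalived("MASTER", 150))  # vm1, values taken from the text
    print(render_keepalived("BACKUP", 100))  # vm2, assumed values
```

Both VMs must share `virtual_router_id` and the authentication settings, which is why only `state` and `priority` are required arguments here.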

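# On Ocata, the unbound VIP port inherits binding:host_id from the VM. It should be empty, so that the DNAT rule is installed in snat-xxx on the network node; because of a bug it is not empty, and DNAT is done in qrouter-xxx on the compute node instead. As a result, after a VRRP failover the DNAT rule does not move from one compute node to the other, and the FIP for the unbound VIP stops working. See: https://paste.ubuntu.com/p/KyP26vTycW/

# These are the NAT rules on Queens - https://paste.ubuntu.com/p/h7JT5Y5Y2x/ . With SNAT, however, the VM cannot ping the sg port in snat-xxx on the network node; the reason is not yet clear.

Appendix - With DVR, compute nodes also run in dvr-snat mode and create snat-xxx, which forms a VRRP master/backup group with snat-xxx on the network node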

# https://bugs.launchpad.net/ubuntu/cosmic/+source/neutron/+bug/1606741

./generate-bundle.sh --series xenial --release queens

juju add-model dvr-lp1825966

juju deploy ./b/openstack.yaml

# only flat/vlan networks need this extra NIC

#source ~/novarc && nova interface-attach $(nova list |grep $(juju ssh nova-compute/1 -- hostname) |awk '{print $2}') --net-id=$(neutron net-show zhhuabj_admin_net -c id -f value)

./bin/add-data-ports.sh

#juju config neutron-openvswitch bridge-mappings="physnet1:br-data"

#juju config neutron-api flat-network-providers="physnet1"

juju config neutron-openvswitch data-port="br-data:ens7"

juju config neutron-api flat-network-providers="physnet1"

# vlan - the external switch must be configured in trunk mode to allow vlans 1000 to 2000

#juju config neutron-api vlan-ranges="physnet1:1000:2000"

#neutron net-create net1 --provider:network_type vlan --provider:physical_network physnet1 --provider:segmentation_id 1001

#neutron net-create net1 --provider:network_type vlan --provider:physical_network physnet1 --provider:segmentation_id 1002

git clone https://github.com/openstack/charm-neutron-openvswitch.git neutron-openvswitch

cd neutron-openvswitch/

patch -p1 < 0001-Enable-keepalived-VRRP-health-check.patch

juju upgrade-charm neutron-openvswitch --path $PWD

juju config neutron-api enable-dvr=True

juju config neutron-openvswitch use-dvr-snat=True

juju config neutron-api enable-l3ha=true

juju config neutron-openvswitch enable-local-dhcp-and-metadata=True

./configure

./tools/sec_groups.sh

source ~/stsstack-bundles/openstack/novarc

# non-flat tenant router

neutron router-show provider-router

#neutron router-update --admin-state-up False provider-router

#neutron router-update provider-router --distributed True --ha=True

#neutron router-update --admin-state-up True provider-router

# the flat network ext_net can be used directly instead of a tenant network, with enable-local-dhcp-and-metadata=True covering the metadata service

# but ext_net has no DHCP, so the VM cannot get an IP

openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

nova boot --key-name mykey --image xenial --flavor m1.small --nic net-id=$(neutron net-list |grep ' ext_net ' |awk '{print $2}') i1

nova boot --key-name mykey --image xenial --flavor m1.small --nic net-id=$(neutron net-list |grep ' private ' |awk '{print $2}') --nic net-id=$(neutron net-list |grep ' ext_net ' |awk '{print $2}') i1

# in nova-compute/1 unit. compute node will have snat-xxx namespace due to use-dvr-snat=True

wget https://gist.githubusercontent.com/dosaboy/cf8422f16605a76affa69a8db47f0897/raw/8e045160440ecf0f9dc580c8927b2bff9e9139f6/check_router_vrrp_transitions.sh

chmod +x check_router_vrrp_transitions.sh

# https://paste.ubuntu.com/p/PYPptZRhrn/

date; neutron l3-agent-list-hosting-router $(neutron router-show provider-router -c id -f value); juju ssh nova-compute/1 -- bash /home/ubuntu/check_router_vrrp_transitions.sh; juju ssh nova-compute/1 -- bash /home/ubuntu/check_router_vrrp_transitions.sh; sleep 40; date; neutron l3-agent-list-hosting-router $(neutron router-show provider-router -c id -f value); juju ssh nova-compute/1 -- bash /home/ubuntu/check_router_vrrp_transitions.sh; juju ssh nova-compute/1 -- bash /home/ubuntu/check_router_vrrp_transitions.sh;

20200818 update

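Restarting the l3-agent after adding and then deleting a FIP may leave the VM unable to reach the external network: the rule in qrouter-xxx that was associated with this FIP (lookup table 16) is not removed, so traffic still goes through rfp-/fpr- to fip-xxx instead of taking the centralized SNAT path - https://bugs.launchpad.net/neutron/+bug/1891673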
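A quick way to look for this symptom is to compare the `ip rule list` output of qrouter-xxx with the fixed IPs that currently have a FIP. The sketch below is illustrative only: `stale_fip_rules` is a hypothetical helper, the sample text is an `ip rule list` capture from this kind of environment, and the assumption that FIP rules always point at table 16 comes from the description above.

```python
import re

# Illustrative `ip rule list` capture from a qrouter namespace.
SAMPLE_RULES = """\
0: from all lookup local
32766: from all lookup main
32767: from all lookup default
36419: from 192.168.21.210 lookup 16
3232240897: from 192.168.21.1/24 lookup 3232240897
"""

RULE_RE = re.compile(r"^(\d+):\s+from\s+(\S+)\s+lookup\s+(\S+)$")


def stale_fip_rules(ip_rule_output, active_fixed_ips, fip_table="16"):
    """Return source IPs that still have a rule for fip_table but no active FIP."""
    stale = []
    for line in ip_rule_output.splitlines():
        match = RULE_RE.match(line.strip())
        if not match:
            continue
        _priority, source, table = match.groups()
        if table == fip_table and source not in active_fixed_ips:
            stale.append(source)
    return stale


if __name__ == "__main__":
    # No FIP is associated any more, yet the rule for 192.168.21.210 is still there.
    print(stale_fip_rules(SAMPLE_RULES, active_fixed_ips=set()))
```

Any address this reports is a candidate for the leftover rule described in the bug; whether removing it by hand is safe depends on the deployment.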

Create a dvr+vrrp environment as follows:

./generate-bundle.sh -s focal --dvr-snat-l3ha --name dvr --num-compute 3

./generate-bundle.sh --name dvr:stsstack --replay --run

#juju config neutron-api enable-dvr=True

#juju config neutron-openvswitch use-dvr-snat=True

#juju config neutron-api enable-l3ha=true

#juju config neutron-openvswitch enable-local-dhcp-and-metadata=True

#./bin/add-data-ports.sh

#juju config neutron-openvswitch data-port="br-data:ens7"

./configure #will run add-data-ports.sh as well

source novarc

./tools/sec_groups.sh

./tools/instance_launch.sh 1 bionic

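1, For a DVR router, if the VM has no FIP, traffic enters qr-xxx in qrouter-xxx (qr-xxx has an IP on all three nodes) and is steered by the rule for table 3232240897 to sg-xxx in snat-xxx (sg-xxx and qg-xxx only have an IP on the master node; the 3232240897 rule exists on all three nodes).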

# ip netns exec qrouter-919bb6a3-4618-4aa2-aed7-263fda53781f ip rule list

0: from all lookup local

32766: from all lookup main

32767: from all lookup default

3232240897: from 192.168.21.1/24 lookup 3232240897

# ip netns exec qrouter-919bb6a3-4618-4aa2-aed7-263fda53781f ip route list

169.254.88.134/31 dev rfp-919bb6a3-4 proto kernel scope link src 169.254.88.134

192.168.21.0/24 dev qr-710394f3-2a proto kernel scope link src 192.168.21.1

# ip netns exec qrouter-919bb6a3-4618-4aa2-aed7-263fda53781f ip route list table 3232240897

default via 192.168.21.177 dev qr-710394f3-2a proto static

# ip netns exec snat-919bb6a3-4618-4aa2-aed7-263fda53781f ip addr show sg-a27af7ea-db |grep global

inet 192.168.21.177/24 scope global sg-a27af7ea-db

# ip netns exec snat-919bb6a3-4618-4aa2-aed7-263fda53781f ip addr show qg-9d60afab-6c |grep global

inet 10.5.153.169/16 scope global qg-9d60afab-6c
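The table number 3232240897 is not arbitrary: it equals the 32-bit integer value of the subnet gateway address 192.168.21.1, which appears to be how the per-subnet SNAT table is indexed. The arithmetic can be checked with the standard `ipaddress` module; `table_id_for` is a hypothetical helper name.

```python
import ipaddress


def table_id_for(ip_cidr):
    """Integer value of the address part of an IPv4 CIDR such as 192.168.21.1/24."""
    return int(ipaddress.ip_interface(ip_cidr).ip)


if __name__ == "__main__":
    # 192*2**24 + 168*2**16 + 21*2**8 + 1
    print(table_id_for("192.168.21.1/24"))  # 3232240897
```

This makes it easy to predict which table to inspect for any other subnet attached to the same router.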

The iptables rules are as follows:

# sudo ip netns exec snat-919bb6a3-4618-4aa2-aed7-263fda53781f iptables -t nat -S |grep NAT

-A neutron-l3-agent-POSTROUTING ! -o qg-9d60afab-6c -m conntrack ! --ctstate DNAT -j ACCEPT

-A neutron-l3-agent-snat -o qg-9d60afab-6c -j SNAT --to-source 10.5.153.169 --random-fully

-A neutron-l3-agent-snat -m mark ! --mark 0x2/0xffff -m conntrack --ctstate DNAT -j SNAT --to-source 10.5.153.169 --random-fully

-A neutron-postrouting-bottom -m comment --comment "Perform source NAT on outgoing traffic." -j neutron-l3-agent-snat

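2, After a FIP is added to the VM (192.168.21.210 -> 10.5.151.17), the following changes intercept traffic coming from the VM and steer it to the fip-xxx ns

# only the master node gets the extra rule that looks up table 16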

# ip netns exec qrouter-919bb6a3-4618-4aa2-aed7-263fda53781f ip rule list

0: from all lookup local

32766: from all lookup main

32767: from all lookup default

36419: from 192.168.21.210 lookup 16

3232240897: from 192.168.21.1/24 lookup 3232240897

# all three nodes have table 16

# ip netns exec qrouter-919bb6a3-4618-4aa2-aed7-263fda53781f ip route list table 16

default via 169.254.88.135 dev rfp-919bb6a3-4 proto static
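The effect of the rule order can be reproduced with a small policy-routing simulation. This is a simplified sketch, not how the kernel is implemented: the `local` and `default` tables are assumed to contain nothing relevant, the rules and routes are copied from the outputs in this section, and `next_hop` is a hypothetical helper.

```python
import ipaddress

# (priority, source selector, table) copied from `ip rule list`.
RULES = [
    (0, "all", "local"),
    (32766, "all", "main"),
    (32767, "all", "default"),
    (36419, "192.168.21.210", "16"),
    (3232240897, "192.168.21.1/24", "3232240897"),
]

# table -> [(prefix, gateway, device)] copied from `ip route list [table N]`.
TABLES = {
    "main": [("169.254.88.134/31", None, "rfp-919bb6a3-4"),
             ("192.168.21.0/24", None, "qr-710394f3-2a")],
    "16": [("default", "169.254.88.135", "rfp-919bb6a3-4")],
    "3232240897": [("default", "192.168.21.177", "qr-710394f3-2a")],
}


def next_hop(src, dst):
    """Return (table, gateway, device) of the first rule whose table has a matching route."""
    src_ip, dst_ip = ipaddress.ip_address(src), ipaddress.ip_address(dst)
    for _priority, selector, table in sorted(RULES):
        if selector != "all" and src_ip not in ipaddress.ip_network(selector, strict=False):
            continue
        best = None
        for prefix, gateway, device in TABLES.get(table, []):
            net = ipaddress.ip_network("0.0.0.0/0" if prefix == "default" else prefix,
                                       strict=False)
            # Longest-prefix match inside one table.
            if dst_ip in net and (best is None or net.prefixlen > best[0].prefixlen):
                best = (net, gateway, device)
        if best:
            return table, best[1], best[2]
    return None


if __name__ == "__main__":
    print(next_hop("192.168.21.210", "8.8.8.8"))        # VM with a FIP
    print(next_hop("192.168.21.50", "8.8.8.8"))         # VM without a FIP
    print(next_hop("192.168.21.210", "192.168.21.50"))  # same-subnet traffic
```

Because `main` has no default route, external traffic falls through to the later rules: the VM with a FIP matches rule 36419 and leaves via rfp- towards fip-xxx, while every other VM ends up in table 3232240897 and goes to the sg- address in snat-xxx.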

The iptables rules are as follows:

# sudo ip netns exec qrouter-919bb6a3-4618-4aa2-aed7-263fda53781f iptables -t nat -S |grep 192.168

-A neutron-l3-agent-PREROUTING -d 10.5.152.142/32 -i rfp-919bb6a3-4 -j DNAT --to-destination 192.168.21.210

-A neutron-l3-agent-float-snat -s 192.168.21.210/32 -j SNAT --to-source 10.5.152.142 --random-fully

Meanwhile fip-xxx also has a rule that routes this traffic out through fg-xxx

# ip netns exec fip-3501e489-bc96-4792-bd81-6d9f9451fd18 ip route list table 2852018311

default via 10.5.0.1 dev fg-16675357-43 proto static
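The numbers on the fip-xxx side fit together in the same way. rfp- in qrouter-xxx holds 169.254.88.134/31, the other address of that /31 is the next hop of table 16, and the integer form of that peer address is exactly the table number 2852018311 seen in fip-xxx. That this peer is the fpr- address is inferred from the outputs rather than shown in them. A short check with the `ipaddress` module:

```python
import ipaddress

# rfp- address in qrouter-xxx, taken from `ip route list` above.
rfp = ipaddress.ip_interface("169.254.88.134/31")

# A /31 holds exactly two addresses; the peer is the one that is not rfp's own.
peer = next(addr for addr in rfp.network if addr != rfp.ip)

if __name__ == "__main__":
    print(peer)                                # next hop of table 16
    print(int(peer))                           # table number used in fip-xxx
    print(ipaddress.ip_address(2852018311))    # and back again
```

So when debugging a different router, the table to inspect in fip-xxx can be derived from the rfp- address of that router.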

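3, After the FIP is deleted (openstack floating ip delete 10.5.151.17), the rule for table 16 above disappears, but table 16 itself should remain

Note: qr-xxx exists on all three nodes, but only the qr-xxx on the node hosting the VM can ping the VM; likewise, only the snat-xxx on the master can ping the VM

References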

https://wiki.openstack.org/wiki/Neutron/DVR/HowTo

https://blueprints.launchpad.net/neutron/+spec/neutron-ovs-dvr

https://wiki.openstack.org/wiki/Neutron/DVR_L2_Agent

http://www.cnblogs.com/sammyliu/p/4713562.html

https://kimizhang.wordpress.com/2014/11/25/building-redundant-and-distributed-l3-network-in-juno/

https://docs.openstack.org/newton/networking-guide/deploy-ovs-ha-dvr.html#deploy-ovs-ha-dvr
