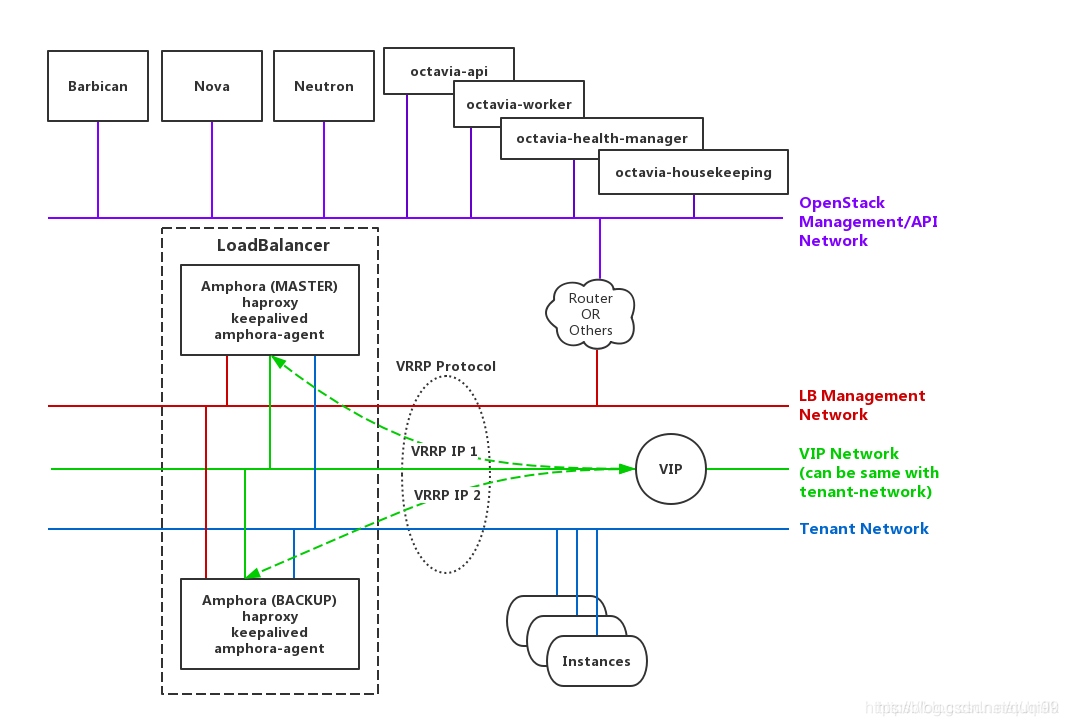

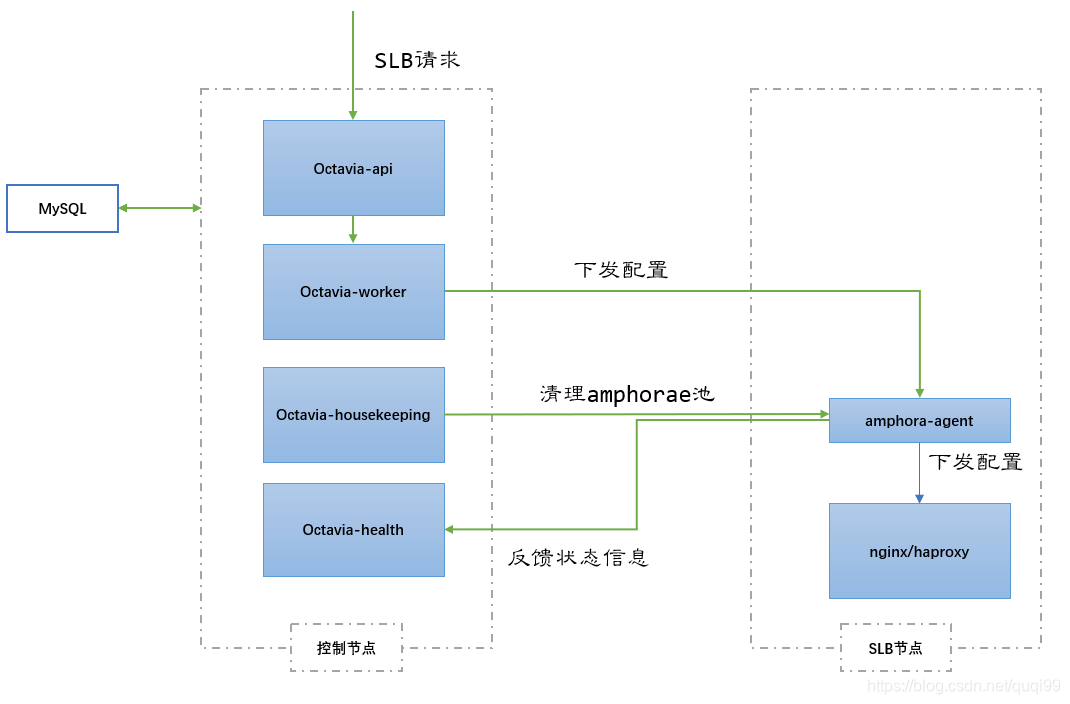

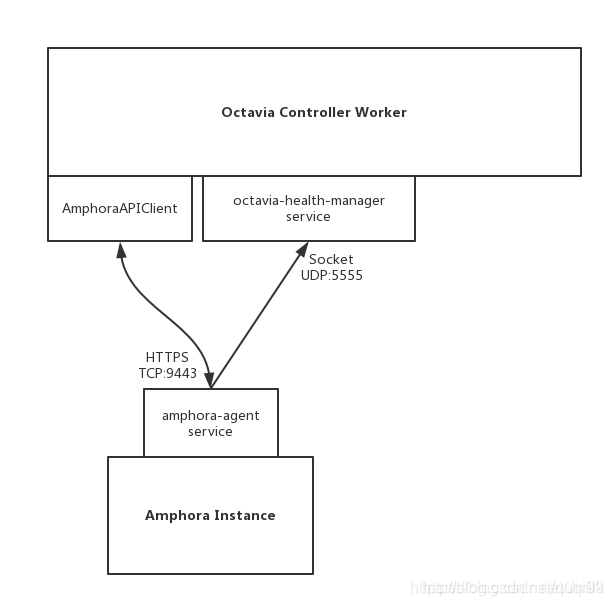

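A few diagrams to get an intuitive feel for Octavia

The problem

When HTTPS health monitors are implemented with Neutron LBaaS v2, the generated configuration looks like this (for the steps, see the appendix: Neutron LBaaS v2)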

backend 52112201-05ce-4f4d-b5a8-9e67de2a895a

mode tcp

balance leastconn

timeout check 10s

option httpchk GET /

http-check expect rstatus 200

option ssl-hello-chk

server 37a1f5a8-ec7e-4208-9c96-27d2783a594f 192.168.21.13:443 weight 1 check inter 5s fall 2

server 8e722b4b-08b8-4089-bba5-8fa5dd26a87f 192.168.21.8:443 weight 1 check inter 5s fall 2

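This configuration has a problem: everything works with a self-signed certificate, but the health check fails with a certificate issued by a certificate authority.

1, For SSL checks, the stricter option is check-ssl. HAProxy has no certificate of its own, so it cannot take part in strict certificate-based authentication; the parameters "check check-ssl verify none" are therefore needed, where "verify none" tells HAProxy not to verify the server certificate. LBaaS v2 is too old to support this (it dates from before HAProxy 1.7, which presumably lacked support), whereas Octavia does support SSL checks. (https://github.com/openstack/octavia/blob/master/octavia/common/jinja/haproxy/templates/macros.j2)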

{% if pool.health_monitor.type == constants.HEALTH_MONITOR_HTTPS %}

{% set monitor_ssl_opt = " check-ssl verify none" %}

The following configuration works:

backend 79024d4d-4de4-492c-a3e2-21730b096a37

mode tcp

balance roundrobin

timeout check 10s

option httpchk GET /

http-check expect rstatus 200

fullconn 1000000

option allbackups

timeout connect 5000

timeout server 50000

server 317e4dea-5a62-4df0-a2f1-ea7bad4a9c5d 192.168.21.7:443 weight 1 check check-ssl verify none inter 5s fall 3 rise 4
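
The way the macro adds the SSL options to the server line can be sketched in a few lines of Python. This is only an illustration: `server_line` and its parameters are hypothetical names, not Octavia code, and it assumes that only monitors of type HTTPS receive " check-ssl verify none", as in the template excerpt above.

```python
def server_line(member_id, address, port, monitor_type,
                weight=1, inter="5s", fall=3, rise=4):
    """Build an HAProxy `server` line (hypothetical helper, not Octavia code)."""
    # Assumption: only HTTPS monitors get the native SSL check options,
    # mirroring the monitor_ssl_opt variable in the Jinja macro.
    ssl_opt = " check-ssl verify none" if monitor_type == "HTTPS" else ""
    return (f"server {member_id} {address}:{port} weight {weight} "
            f"check{ssl_opt} inter {inter} fall {fall} rise {rise}")


print(server_line("317e4dea-5a62-4df0-a2f1-ea7bad4a9c5d",
                  "192.168.21.7", 443, "HTTPS"))
```

With the member shown above, the sketch reproduces the same server line as the working configuration.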

However, the Octavia client seems to have a problem; the configuration it generates is:

option httpchk None None

http-check expect rstatus None

server 317e4dea-5a62-4df0-a2f1-ea7bad4a9c5d 192.168.21.7:443 weight 1 check check-ssl verify none inter 5s fall 3 rise 4

ubuntu@zhhuabj-bastion:~/ca3$ openstack loadbalancer healthmonitor create --delay 5 --max-retries 4 --timeout 10 --type HTTPS --name https-monitor --http-method GET --url-path / --expected-codes 200 pool1

http_method is not a valid option for health monitors of type HTTPS (HTTP 400) (Request-ID: req-2d81bafa-1240-4f73-8e2e-cb0dd7691fdb)

2, If the SSL backend enforces strict client authentication, switch to a TLS-HELLO check. The Octavia cookbook (https://docs.openstack.org/octavia/latest/user/guides/basic-cookbook.html) already explains this:

HTTPS health monitors operate exactly like HTTP health monitors, but with ssl back-end servers. Unfortunately, this causes problems if the servers are performing client certificate validation, as HAProxy won’t have a valid cert. In this case, using TLS-HELLO type monitoring is an alternative.

TLS-HELLO health monitors simply ensure the back-end server responds to SSLv3 client hello messages. It will not check any other health metrics, like status code or body contents.

https://review.openstack.org/#/c/475944/
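
The cookbook guidance quoted above boils down to a small decision rule. The sketch below is a hedged illustration only; `pick_monitor_type` and its two boolean inputs are hypothetical and do not correspond to any Octavia API.

```python
def pick_monitor_type(backend_uses_tls, backend_requires_client_cert):
    """Choose a health monitor type following the cookbook text (sketch)."""
    if not backend_uses_tls:
        return "HTTP"
    # HAProxy has no valid client certificate, so an HTTPS monitor would
    # fail against a backend that validates client certificates.
    if backend_requires_client_cert:
        return "TLS-HELLO"
    return "HTTPS"


print(pick_monitor_type(backend_uses_tls=True,
                        backend_requires_client_cert=True))
```

Keep in mind that TLS-HELLO only confirms that the backend answers a client hello; it says nothing about status codes or body contents.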

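In fact the customer was not using client authentication, so that is not the cause; SNI is the more likely culprit. The backend relies on SNI, so HAProxy would need to pass a hostname, but it cannot, hence the error. octavia-worker, however, should be able to pass SNI to the backend.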

ubuntu@zhhuabj-bastion:~/ca3$ curl --cacert ca.crt https://10.5.150.5

curl: (51) SSL: certificate subject name (www.server1.com) does not match target host name '10.5.150.5'

ubuntu@zhhuabj-bastion:~/ca3$ curl --resolve www.server1.com:443:10.5.150.5 -k https://www.server1.com

test1

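What is SNI (Server Name Indication)? An SSL server may hold a different certificate for each hostname it serves and enable SNI to choose between them, so an SSL client should also send the hostname when it connects. (Note: SNIProxy is a reverse proxy for HTTPS and HTTP that behaves much like a transparent proxy. It forwards traffic at the TCP layer without decrypting it, so it can reverse-proxy HTTPS sites without any certificate configured on the proxy server.)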
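
To make the idea concrete, the following sketch uses only the Python standard library to build a TLS ClientHello in memory, with and without a server name, and checks whether the hostname appears in the bytes that would go on the wire. `client_hello_bytes` is a hypothetical helper; no network connection is made and certificate verification is switched off purely for the demonstration.

```python
import ssl


def client_hello_bytes(server_hostname=None):
    """Return the first TLS flight (ClientHello) produced for a hostname."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    # Demo only: disable verification so the no-SNI case is allowed.
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    incoming, outgoing = ssl.MemoryBIO(), ssl.MemoryBIO()
    tls = ctx.wrap_bio(incoming, outgoing, server_hostname=server_hostname)
    try:
        tls.do_handshake()
    except ssl.SSLWantReadError:
        # Expected: the ClientHello is written, then the client waits
        # for a server reply that never arrives in this sketch.
        pass
    return outgoing.read()


with_sni = client_hello_bytes("www.server1.com")
without_sni = client_hello_bytes()
print(b"www.server1.com" in with_sni, b"www.server1.com" in without_sni)
```

The hostname travels in the ClientHello itself, which is how a server can pick a certificate before any HTTP request is seen. The first byte of the record is 0x16, the handshake record type.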

'curl -k' is not a good way to test SNI; it is better to test with 'openssl s_client':

#Ideal Test to connect with SSLv3/No SNI

openssl s_client -ssl3 -connect 103.245.215.4:443

#openssl s_client -connect 192.168.254.214:9443 | openssl x509 -noout -text | grep DNS

#We can also send SNI using -servername:

openssl s_client -ssl3 -servername CERT_HOSTNAME -connect 103.245.215.4:443

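This document (https://cbonte.github.io/haproxy-dconv/1.7/configuration.html#option%20ssl-hello-chk ) states that ssl-hello-chk only checks SSLv3 and does not check HTTP. In fact, the HTTPS health check here does not check HTTP either; it only adds an SSL negotiation. A check-ssl check appears to do more.

The LBaaS v2 template currently supports only "httpchk" and "ssl-hello-chk", which gives an SSL check but no HTTP check. So the problem most likely lies in the SSLv3 hello (sent without SNI). Let's run an SNI experiment to verify.

First, the charm should pass the CA to Octavia; Octavia should then create SNI certificates from that CA and pass them to the backend. The relevant code in octavia-worker is: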

LOG.debug("request url %s", path)
_request = getattr(self.session, method.lower())
_url = self._base_url(amp.lb_network_ip) + path
LOG.debug("request url %s", _url)
reqargs = {
    'verify': CONF.haproxy_amphora.server_ca,
    'url': _url,
    'timeout': (req_conn_timeout, req_read_timeout), }
reqargs.update(kwargs)
headers = reqargs.setdefault('headers', {})
headers['User-Agent'] = OCTAVIA_API_CLIENT
self.ssl_adapter.uuid = amp.id
exception = None
# Keep retrying

def get_create_amphora_flow(self):
    """Creates a flow to create an amphora.

    :returns: The flow for creating the amphora
    """
    create_amphora_flow = linear_flow.Flow(constants.CREATE_AMPHORA_FLOW)
    create_amphora_flow.add(database_tasks.CreateAmphoraInDB(
        provides=constants.AMPHORA_ID))
    create_amphora_flow.add(lifecycle_tasks.AmphoraIDToErrorOnRevertTask(
        requires=constants.AMPHORA_ID))
    if self.REST_AMPHORA_DRIVER:
        # The server PEM generated here is consumed by the two tasks below.
        create_amphora_flow.add(cert_task.GenerateServerPEMTask(
            provides=constants.SERVER_PEM))
        create_amphora_flow.add(
            database_tasks.UpdateAmphoraDBCertExpiration(
                requires=(constants.AMPHORA_ID, constants.SERVER_PEM)))
        create_amphora_flow.add(compute_tasks.CertComputeCreate(
            requires=(constants.AMPHORA_ID, constants.SERVER_PEM,
                      constants.BUILD_TYPE_PRIORITY, constants.FLAVOR),
            provides=constants.COMPUTE_ID))

1, Two certificates (creation steps omitted). The hostname of lb_tls_secret_1 is www.server1.com and the hostname of lb_tls_secret_2 is www.server2.com.

secret1_id=$(openstack secret list |grep lb_tls_secret_1 |awk '{print $2}')

secret2_id=$(openstack secret list |grep lb_tls_secret_2 |awk '{print $2}')

2, When creating the listener, pass ( --sni-container-refs $secret1_id $secret2_id ) to add SNI entries for both domains.

IP=192.168.21.7

secret1_id=$(openstack secret list |grep lb_tls_secret_1 |awk '{print $2}')

secret2_id=$(openstack secret list |grep lb_tls_secret_2 |awk '{print $2}')

subnetid=$(openstack subnet show private_subnet -f value -c id); echo $subnetid

lb_id=$(openstack loadbalancer show lb3 -f value -c id)

#lb_id=$(openstack loadbalancer create --name test_tls_termination --vip-subnet-id $subnetid -f value -c id); echo $lb_id

listener_id=$(openstack loadbalancer listener create $lb_id --name https_listener --protocol-port 443 --protocol TERMINATED_HTTPS --default-tls-container=$secret1_id --sni-container-refs $secret1_id $secret2_id -f value -c id); echo $listener_id

pool_id=$(openstack loadbalancer pool create --protocol HTTP --listener $listener_id --lb-algorithm ROUND_ROBIN -f value -c id); echo $pool_id

openstack loadbalancer member create --address ${IP} --subnet-id $subnetid --protocol-port 80 $pool_id

public_network=$(openstack network show ext_net -f value -c id)

fip=$(openstack floating ip create $public_network -f value -c floating_ip_address)

vip=$(openstack loadbalancer show $lb_id -c vip_address -f value)

vip_port=$(openstack port list --fixed-ip ip-address=$vip -c ID -f value)

openstack floating ip set $fip --fixed-ip-address $vip --port $vip_port

3, Test: when the client sends www.server1.com or www.server2.com, the server responds normally, but when it sends www.server3.com the request fails with: does not match target host name 'www.server3.com'

ubuntu@zhhuabj-bastion:~/ca3$ curl --resolve www.server1.com:443:10.5.150.5 --cacert ca.crt https://www.server1.com

Hello World!

ubuntu@zhhuabj-bastion:~/ca3$ curl --resolve www.server2.com:443:10.5.150.5 --cacert ca.crt https://www.server2.com

Hello World!

ubuntu@zhhuabj-bastion:~/ca3$ curl --resolve www.server3.com:443:10.5.150.5 --cacert ca.crt https://www.server3.com

curl: (51) SSL: certificate subject name (www.server1.com) does not match target host name 'www.server3.com'
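
The three curl results are what one would expect from a listener that selects a certificate by SNI name and falls back to the default certificate for unknown names. A minimal sketch of that behaviour follows; `select_certificate` and `hostname_matches` are hypothetical helpers, and the match is a plain case-insensitive comparison with no wildcard or subjectAltName handling.

```python
def select_certificate(sni_name, sni_certs, default_cert):
    """Return the certificate subject served for a given SNI name."""
    # Unknown names fall back to the listener's default certificate.
    return sni_certs.get(sni_name, default_cert)


def hostname_matches(cert_subject, requested_host):
    """Simplified client-side check: exact, case-insensitive comparison."""
    return cert_subject.lower() == requested_host.lower()


# Subjects as configured in the experiment above.
sni_certs = {"www.server1.com": "www.server1.com",
             "www.server2.com": "www.server2.com"}
default_cert = "www.server1.com"

for host in ("www.server1.com", "www.server2.com", "www.server3.com"):
    subject = select_certificate(host, sni_certs, default_cert)
    print(host, subject, hostname_matches(subject, host))
```

For www.server3.com the fallback certificate has the subject www.server1.com, so the client-side name check fails, which is consistent with the curl error above.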

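Why does this happen?

The SSL check in LBaaS v2 adds the following to the HAProxy configuration. When ssl-hello-chk is present, httpchk is effectively overridden (HAProxy ignores it). The more advanced check-ssl reportedly arrived with HAProxy 1.7 (xenial ships HAProxy 1.6, which is said not to support it), which is probably why early LBaaS used ssl-hello-chk for SSL checks.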

mode tcp

option httpchk GET /

http-check expect rstatus 303

option ssl-hello-chk
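
A short sketch of the override described above: it scans the backend lines and reports which check option ends up in effect, assuming (as the text states) that a later check option replaces an earlier one. `effective_check` and the `CHECK_OPTIONS` tuple are hypothetical names introduced for illustration.

```python
# HAProxy "option" keywords that select a health check type.
CHECK_OPTIONS = ("httpchk", "ssl-hello-chk", "smtpchk", "mysql-check",
                 "pgsql-check", "redis-check", "ldap-check", "tcp-check")


def effective_check(config_lines):
    """Return the check option left in effect, or None if there is none."""
    effective = None
    for line in config_lines:
        words = line.split()
        if len(words) >= 2 and words[0] == "option" and words[1] in CHECK_OPTIONS:
            # Assumption: the most recently declared check type wins.
            effective = words[1]
    return effective


lbaas_backend = ["mode tcp",
                 "option httpchk GET /",
                 "http-check expect rstatus 303",
                 "option ssl-hello-chk"]
print(effective_check(lbaas_backend))
```

Under that assumption the LBaaS v2 backend only ever performs the forged SSLv3 hello check, and the HTTP status expectation is never evaluated.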

For ssl-hello-chk, HAProxy (http://cbonte.github.io/haproxy-dconv/1.6/configuration.html#4-option%20ssl-hello-chk) forges an SSLv3 packet:

When some SSL-based protocols are relayed in TCP mode through HAProxy, it is

possible to test that the server correctly talks SSL instead of just testing

that it accepts the TCP connection. When "option ssl-hello-chk" is set, pure

SSLv3 client hello messages are sent once the connection is established to

the server, and the response is analyzed to find an SSL server hello message.

The server is considered valid only when the response contains this server

hello message.

All servers tested till there correctly reply to SSLv3 client hello messages,

and most servers tested do not even log the requests containing only hello

messages, which is appreciable.

Note that this check works even when SSL support was not built into haproxy

because it forges the SSL message. When SSL support is available, it is best

to use native SSL health checks instead of this one.

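This is the relevant HAProxy source. It does not use an SSL library: it first sends a hard-coded SSLv3 hello message, then looks for 0x15 (SSL3_RT_ALERT) or 0x16 (SSL3_RT_HANDSHAKE) at the start of the response. If neither is found, it returns HCHK_STATUS_L6RSP (Layer6 invalid response) - https://github.com/haproxy/haproxy/blob/master/src/checks.c#L915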

case PR_O2_SSL3_CHK:
    if (!done && b_data(&check->bi) < 5)
        goto wait_more_data;

    /* Check for SSLv3 alert or handshake */
    if ((b_data(&check->bi) >= 5) && (*b_head(&check->bi) == 0x15 || *b_head(&check->bi) == 0x16))
        set_server_check_status(check, HCHK_STATUS_L6OK, NULL);
    else
        set_server_check_status(check, HCHK_STATUS_L6RSP, NULL);
    break;
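
The same decision can be restated in Python for readers who want to experiment with sample responses. This is a transliteration of the C excerpt above, not HAProxy code; `classify_ssl_hello_response` and the string results are hypothetical stand-ins for the HCHK_STATUS_* values.

```python
def classify_ssl_hello_response(data, done):
    """Mimic the ssl-hello-chk decision on the first response bytes."""
    if not done and len(data) < 5:
        return "WAIT"      # corresponds to goto wait_more_data
    # 0x15 = TLS alert record, 0x16 = TLS handshake record
    if len(data) >= 5 and data[0] in (0x15, 0x16):
        return "L6OK"
    return "L6RSP"         # reported as "Layer6 invalid response"


print(classify_ssl_hello_response(b"\x16\x03\x00\x00\x04", done=False))
print(classify_ssl_hello_response(b"HTTP/1.1 400 Bad Request", done=True))
print(classify_ssl_hello_response(b"", done=True))
```

Note that a connection closed without any data (the last call) is classified as an invalid Layer 6 response as well.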

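The 'Layer6 invalid response' error is exactly what appears in the customer's logs:

Oct 25 04:50:34 neut002 haproxy[54990]: Server aaa0a533-073b-4b0f-8b81-777b6a8f3900/f2dc685f-58f7-4201-8060-3409d2d73a0d is DOWN, reason: Layer6 invalid response, check duration: 4ms. 0 active and 0 backup servers left. 0 sessions active, 0 requeued, 0 remaining in queue.

So the backend SSL server should return 0x15 or 0x16. We do not know what SSL backend the customer actually runs behind HAProxy; assume it is apache2. In a test environment where apache2 uses its default TLS 1.2 while HAProxy still sends the old SSLv3 hello, an SSLv3 connection attempt ends in the following SSL negotiation errors (the "null ssl method passed" text suggests the local OpenSSL build itself has SSLv3 disabled):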

ubuntu@zhhuabj-bastion:~/ca3$ openssl s_client -ssl3 -connect www.server1.com:443

140306875094680:error:140A90C4:SSL routines:SSL_CTX_new:null ssl method passed:ssl_lib.c:1878:

ubuntu@zhhuabj-bastion:~/ca3$ openssl s_client -ssl3 -servername www.server1.com -connect www.server1.com:443

139626113296024:error:140A90C4:SSL routines:SSL_CTX_new:null ssl method passed:ssl_lib.c:1878:

ubuntu@zhhuabj-bastion:~/ca3$ openssl s_client -ssl3 -servername www.server2.com -connect www.server1.com:443

140564176807576:error:140A90C4:SSL routines:SSL_CTX_new:null ssl method passed:ssl_lib.c:1878:

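Why does the SSL backend not return 0x15 or 0x16? In theory there are several possible reasons:

a, SSLv3 is now deprecated and mainstream HTTP servers have disabled it. When apache2 receives the SSLv3 hello from HAProxy, its SSL implementation may respond with something other than 0x15/0x16.

b, The SSLv3 hello from HAProxy carries no SNI, so an SNI-enabled backend (such as apache2) fails the SSL negotiation and therefore does not return 0x15/0x16.

c, Some other reason.

Confirming the actual cause requires a packet capture on the backend (tcpdump -eni ens3 -w ssl-test.pcap -s 0 port 443 or port 8443).

Update: the cause has been found.

octavia/0 creates the amphora service VM, and /usr/lib/python3/dist-packages/octavia/amphorae/drivers/haproxy/rest_api_driver.py on octavia/0 uses the Python requests module to connect to port 9443 on the service VM. That code behaves like the following command, which does not work: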

curl --cacert /etc/octavia/certs/issuing_ca.pem 192.168.254.31:9443

The following all work, where 26835e25-7c4f-4776-940f-209eb9a9e826 is the SNI name (the load balancer ID).

curl -k 192.168.254.31:9443

openssl s_client -connect 192.168.254.31:9443 -key /etc/octavia/certs/issuing_ca_key.pem

curl --cacert /etc/octavia/certs/issuing_ca.pem https://26835e25-7c4f-4776-940f-209eb9a9e826:9443 --resolve 26835e25-7c4f-4776-940f-209eb9a9e826:9443:192.168.254.31

#ipv6

#tlsv13 alert certificate required, it shows SSL client verification is required

curl -6 -k https://[fc00:ea96:ae23:2d4f:f816:3eff:fe76:d87a]:9443/

#no alternative certificate subject name matches target host name, it shows it's about sni

curl -6 --cacert /etc/octavia/certs/issuing_ca.pem https://[fc00:ea96:ae23:2d4f:f816:3eff:fe76:d87a]:9443/

curl -6 --cacert /etc/octavia/certs/issuing_ca.pem --resolve backend1.domain:9443:[fc00:ea96:ae23:2d4f:f816:3eff:fe76:d87a] https://backend1.domain:9443 -v

Test on octavia/0:

openssl s_client -connect 192.168.254.31:9443 -key /etc/octavia/certs/issuing_ca_key.pem

The final cause: CN=$DOMAIN1 had not been specified when the certificate was created:

SUBJECT="/C=CN/ST=BJ/L=BJ/O=STS/OU=Joshua/CN=$DOMAIN1"

openssl req -new -nodes -subj $SUBJECT -key $DOMAIN1.key -out $DOMAIN1.csr

As we know, assert_hostname in the Python requests stack sets the hostname that the backend certificate is checked against, which is how the SNI-style name is handled here, see - https://medium.com/@yzlin/python-requests-ssl-ip-binding-6df25a9a8f6a

The following code (https://github.com/openstack/octavia/blob/master/octavia/amphorae/drivers/haproxy/rest_api_driver.py#L542) assigns self.uuid (set by self.ssl_adapter.uuid = amp.id) to conn.assert_hostname.

class CustomHostNameCheckingAdapter(requests.adapters.HTTPAdapter):

    def cert_verify(self, conn, url, verify, cert):
        conn.assert_hostname = self.uuid
        return super(CustomHostNameCheckingAdapter,
                     self).cert_verify(conn, url, verify, cert)

See the design document: https://docs.openstack.org/octavia/ocata/specs/version0.5/tls-data-security.html

Installing Octavia

./generate-bundle.sh --name octavia --create-model --run --octavia -r stein --dvr-snat --num-compute 2 #can also use br-data:ens7 here

juju config neutron-openvswitch data-port="br-data:ens7"

./bin/add-data-ports.sh #it will add another NIC ens7 for every nova-compute nodes

Or:

# https://blog.ubuntu.com/2019/01/28/taking-octavia-for-a-ride-with-kubernetes-on-openstack

sudo snap install --classic charm

charm pull cs:openstack-base

cd openstack-base/

curl https://raw.githubusercontent.com/openstack-charmers/openstack-bundles/master/stable/overlays/loadbalancer-octavia.yaml -o loadbalancer-octavia.yaml

juju deploy ./bundle.yaml --overlay loadbalancer-octavia.yaml

Or:

sudo bash -c 'cat > overlays/octavia.yaml' <<EOF
debug: &debug True
openstack_origin: &openstack_origin cloud:bionic-rocky
applications:
  octavia:
    #series: bionic
    charm: cs:octavia
    num_units: 1
    constraints: mem=2G
    options:
      debug: *debug
      openstack-origin: *openstack_origin
  octavia-dashboard:
    charm: cs:octavia-dashboard
relations:
- - mysql:shared-db
  - octavia:shared-db
- - keystone:identity-service
  - octavia:identity-service
- - rabbitmq-server:amqp
  - octavia:amqp
- - neutron-api:neutron-load-balancer
  - octavia:neutron-api
- - neutron-openvswitch:neutron-plugin
  - octavia:neutron-openvswitch
- - openstack-dashboard:dashboard-plugin
  - octavia-dashboard:dashboard
EOF

sudo bash -c 'cat > overlays/octavia.yaml' <<EOF
debug: &debug True
verbose: &verbose True
openstack_origin: &openstack_origin
applications:
  barbican:
    charm: cs:~openstack-charmers-next/barbican
    num_units: 1
    constraints: mem=1G
    options:
      debug: *debug
      openstack-origin: *openstack_origin
relations:
- [ barbican, rabbitmq-server ]
- [ barbican, mysql ]
- [ barbican, keystone ]
EOF

./generate-bundle.sh --series bionic --release rocky --barbican

juju deploy ./b/openstack.yaml --overlay ./b/o/barbican.yaml --overlay overlays/octavia.yaml

Or:

juju add-model bionic-barbican-octavia

./generate-bundle.sh --series bionic --barbican

#./generate-bundle.sh --series bionic --release rocky --barbican

juju deploy ./b/openstack.yaml --overlay ./b/o/barbican.yaml

#https://github.com/openstack-charmers/openstack-bundles/blob/master/stable/overlays/loadbalancer-octavia.yaml

#NOTE: need to comment out the to:lxd related lines in loadbalancer-octavia.yaml, and change the nova-compute unit count to 3

juju deploy ./b/openstack.yaml --overlay ./overlays/loadbalancer-octavia.yaml

# Or we can:

# 2018-12-25 03:30:39 DEBUG update-status fatal error: runtime: out of memory

juju deploy octavia --config openstack-origin=cloud:bionic:queens --constraints mem=4G

juju deploy octavia-dashboard

juju add-relation octavia-dashboard openstack-dashboard

juju add-relation octavia rabbitmq-server

juju add-relation octavia mysql

juju add-relation octavia keystone

juju add-relation octavia neutron-openvswitch

juju add-relation octavia neutron-api

# Initialize and unseal vault

# https://docs.openstack.org/project-deploy-guide/charm-deployment-guide/latest/app-vault.html

# https://lingxiankong.github.io/2018-07-16-barbican-introduction.html

# /snap/vault/1315/bin/vault server -config /var/snap/vault/common/vault.hcl

sudo snap install vault

export VAULT_ADDR="http://$(juju run --unit vault/0 unit-get private-address):8200"

ubuntu@zhhuabj-bastion:~$ vault operator init -key-shares=5 -key-threshold=3

Unseal Key 1: UB7XDri5FRcMLirKBIysdUb2PN7Ia5EVMP0Z9wD9Hyll

Unseal Key 2: mD8Gnr3hdB2LjjNB4ugxvvsvb8+EQQ/0AXm2p+c2qYFT

Unseal Key 3: vymYLAdou3qky24IEKDufYsZXAIPLWtErAKy/RkfgghS

Unseal Key 4: xOwDbqgNLLipsZbp+FAmVhBc3ZxA8CI3DchRc4AClRyQ

Unseal Key 5: nRlZ8WX6CS9nOw2ct5U9o0Za5jlUAtjN/6XLxjf62CnR

Initial Root Token: s.VJKGhNvIFCTgHVbQ6WvL0OLe

vault operator unseal UB7XDri5FRcMLirKBIysdUb2PN7Ia5EVMP0Z9wD9Hyll

vault operator unseal mD8Gnr3hdB2LjjNB4ugxvvsvb8+EQQ/0AXm2p+c2qYFT

vault operator unseal vymYLAdou3qky24IEKDufYsZXAIPLWtErAKy/RkfgghS

export VAULT_TOKEN=s.VJKGhNvIFCTgHVbQ6WvL0OLe

vault token create -ttl=10m

$ vault token create -ttl=10m

Key Value

--- -----

token s.7ToXh9HqE6FiiJZybFhevL9v

token_accessor 6dPkFpsPmx4D7g8yNJXvEpKN

token_duration 10m

token_renewable true

token_policies ["root"]

identity_policies []

policies ["root"]

# Authorize vault charm to use a root token to be able to create secrets storage back-ends and roles to allow other app to access vault

juju run-action vault/0 authorize-charm token=s.7ToXh9HqE6FiiJZybFhevL9v

# upload Amphora image

source ~/stsstack-bundles/openstack/novarc

http_proxy=http://squid.internal:3128 wget http://tarballs.openstack.org/octavia/test-images/test-only-amphora-x64-haproxy-ubuntu-xenial.qcow2

#openstack image create --tag octavia-amphora --disk-format=qcow2 --container-format=bare --private amphora-haproxy-xenial --file ./test-only-amphora-x64-haproxy-ubuntu-xenial.qcow2

glance image-create --tag octavia-amphora --disk-format qcow2 --name amphora-haproxy-xenial --file ./test-only-amphora-x64-haproxy-ubuntu-xenial.qcow2 --visibility public --container-format bare --progress

cd stsstack-bundles/openstack/

./configure

./tools/sec_groups.sh

./tools/instance_launch.sh 2 xenial

neutron floatingip-create ext_net

neutron floatingip-associate $(neutron floatingip-list |grep 10.5.150.4 |awk '{print $2}') $(neutron port-list |grep '192.168.21.3' |awk '{print $2}')

or

fix_ip=192.168.21.3

public_network=$(openstack network show ext_net -f value -c id)

fip=$(openstack floating ip create $public_network -f value -c floating_ip_address)

openstack floating ip set $fip --fixed-ip-address $fix_ip --port $(openstack port list --fixed-ip ip-address=$fix_ip -c id -f value)

cd ~/ca #https://docs.openstack.org/project-deploy-guide/charm-deployment-guide/latest/app-octavia.html

juju config octavia \

lb-mgmt-issuing-cacert="$(base64 controller_ca.pem)" \

lb-mgmt-issuing-ca-private-key="$(base64 controller_ca_key.pem)" \

lb-mgmt-issuing-ca-key-passphrase=foobar \

lb-mgmt-controller-cacert="$(base64 controller_ca.pem)" \

lb-mgmt-controller-cert="$(base64 controller_cert_bundle.pem)"

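Note: I also hit a problem here. The command above did not update the certificates inside the service VM; after logging in to the service VM over SSH, the certificates under /etc/octavia/certs/ turned out to be different. With the wrong certificates, amphora-agent in the service VM cannot start on port 9443. The service VM gets its certificates written by cloud-init, so at which step does the error occur?

Configure resources:

# search the charm code for 'configure_resources'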

juju config octavia create-mgmt-network

juju run-action --wait octavia/0 configure-resources

# some ways to debug:

openstack security group rule create $(openstack security group show lb-mgmt-sec-grp -f value -c id) --protocol udp --dst-port 546 --ethertype IPv6

openstack security group rule create $(openstack security group show lb-mgmt-sec-grp -f value -c id) --protocol icmp --ethertype IPv6

neutron security-group-rule-create --protocol icmpv6 --direction egress --ethertype IPv6 lb-mgmt-sec-grp

neutron security-group-rule-create --protocol icmpv6 --direction ingress --ethertype IPv6 lb-mgmt-sec-grp

neutron port-show octavia-health-manager-octavia-0-listen-port -f value -c status

neutron port-update --admin-state-up True octavia-health-manager-octavia-0-listen-port

AGENT=$(neutron l3-agent-list-hosting-router lb-mgmt -f value -c id)

neutron l3-agent-router-remove $AGENT lb-mgmt

neutron l3-agent-router-add $AGENT lb-mgmt

The configure-resources command above (juju run-action --wait octavia/0 configure-resources) automatically configures the IPv6 management network and creates a port named octavia-health-manager-octavia-0-listen-port whose binding:host is the octavia/0 node.

ubuntu@zhhuabj-bastion:~$ neutron router-list |grep mgmt

| 0a839377-6b19-419b-9868-616def4d749f | lb-mgmt | null | False | False |

ubuntu@zhhuabj-bastion:~$ neutron net-list |grep mgmt

| ae580dc8-31d6-4ec3-9d44-4a9c7b9e80b6 | lb-mgmt-net | ea9c7d5c-d224-4dd3-b40c-3acae9690657 fc00:4a9c:7b9e:80b6::/64 |

ubuntu@zhhuabj-bastion:~$ neutron subnet-list |grep mgmt

| ea9c7d5c-d224-4dd3-b40c-3acae9690657 | lb-mgmt-subnetv6 | fc00:4a9c:7b9e:80b6::/64 | {"start": "fc00:4a9c:7b9e:80b6::2", "end": "fc00:4a9c:7b9e:80b6:ffff:ffff:ffff:ffff"} |

ubuntu@zhhuabj-bastion:~$ neutron port-list |grep fc00

| 5cb6e3f3-ebe5-4284-9c05-ea272e8e599b | | fa:16:3e:9e:82:6a | {"subnet_id": "ea9c7d5c-d224-4dd3-b40c-3acae9690657", "ip_address": "fc00:4a9c:7b9e:80b6::1"} |

| 983c56d2-46dd-416c-abc8-5096d76f75e2 | octavia-health-manager-octavia-0-listen-port | fa:16:3e:99:8c:ab | {"subnet_id": "ea9c7d5c-d224-4dd3-b40c-3acae9690657", "ip_address": "fc00:4a9c:7b9e:80b6:f816:3eff:fe99:8cab"} |

| af38a60d-a370-4ddb-80ac-517fda175535 | | fa:16:3e:5f:cd:ae | {"subnet_id": "ea9c7d5c-d224-4dd3-b40c-3acae9690657", "ip_address": "fc00:4a9c:7b9e:80b6:f816:3eff:fe5f:cdae"} |

| b65f90d1-2e1f-4994-a0e9-2bb13ead4cab | | fa:16:3e:10:34:84 | {"subnet_id": "ea9c7d5c-d224-4dd3-b40c-3acae9690657", "ip_address": "fc00:4a9c:7b9e:80b6:f816:3eff:fe10:3484"} |

It also creates an interface named o-hm0 on octavia/0, whose IP address is the same as that of the octavia-health-manager-octavia-0-listen-port port.

ubuntu@zhhuabj-bastion:~$ juju ssh octavia/0 -- ip addr show o-hm0 |grep global

Connection to 10.5.0.110 closed.

inet6 fc00:4a9c:7b9e:80b6:f816:3eff:fe99:8cab/64 scope global dynamic mngtmpaddr noprefixroute
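
The address on o-hm0 has the shape of a SLAAC (EUI-64) address built from the subnet prefix and the port's MAC address. The sketch below recomputes it with the standard library so the port listing and the interface output can be cross-checked; `slaac_address` is a hypothetical helper, and it assumes the subnet hands out EUI-64 style addresses, which the values shown here are consistent with.

```python
import ipaddress


def slaac_address(prefix, mac):
    """Derive the EUI-64 based IPv6 address for a MAC inside a /64 prefix."""
    octets = [int(part, 16) for part in mac.split(":")]
    octets[0] ^= 0x02                       # flip the universal/local bit
    eui64 = octets[:3] + [0xFF, 0xFE] + octets[3:]
    interface_id = int.from_bytes(bytes(eui64), "big")
    network = ipaddress.IPv6Network(prefix)
    return ipaddress.IPv6Address(int(network.network_address) | interface_id)


# Prefix and MAC taken from the subnet and port listings above.
print(slaac_address("fc00:4a9c:7b9e:80b6::/64", "fa:16:3e:99:8c:ab"))
```

If the computed address differs from what `ip addr show o-hm0` reports, the interface is probably not using the MAC of the Neutron port.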

ubuntu@zhhuabj-bastion:~$ juju ssh octavia/0 -- sudo ovs-vsctl show

490bbb36-1c7d-412d-8b44-31e6f796306a

Manager "ptcp:6640:127.0.0.1"

is_connected: true

Bridge br-tun

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port "gre-0a05006b"

Interface "gre-0a05006b"

type: gre

options: {df_default="true", in_key=flow, local_ip="10.5.0.110", out_key=flow, remote_ip="10.5.0.107"}

Port br-tun

Interface br-tun

type: internal

Port patch-int

Interface patch-int

type: patch

options: {peer=patch-tun}

Port "gre-0a050016"

Interface "gre-0a050016"

type: gre

options: {df_default="true", in_key=flow, local_ip="10.5.0.110", out_key=flow, remote_ip="10.5.0.22"}

Bridge br-data

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port phy-br-data

Interface phy-br-data

type: patch

options: {peer=int-br-data}

Port br-data

Interface br-data

type: internal

Bridge br-int

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port patch-tun

Interface patch-tun

type: patch

options: {peer=patch-int}

Port "o-hm0"

tag: 1

Interface "o-hm0"

type: internal

Port br-int

Interface br-int

type: internal

Port int-br-data

Interface int-br-data

type: patch

options: {peer=phy-br-data}

Bridge br-ex

Port br-ex

Interface br-ex

type: internal

ovs_version: "2.10.0"

ubuntu@zhhuabj-bastion:~$ juju ssh neutron-gateway/0 -- sudo ovs-vsctl show

ec3e2cb6-5261-4c22-8afd-5bacb0e8ce85

Manager "ptcp:6640:127.0.0.1"

is_connected: true

Bridge br-ex

Port br-ex

Interface br-ex

type: internal

Bridge br-int

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port "tap62c03d3b-b1"

tag: 2

Interface "tap62c03d3b-b1"

Port "tapb65f90d1-2e"

tag: 3

Interface "tapb65f90d1-2e"

Port int-br-data

Interface int-br-data

type: patch

options: {peer=phy-br-data}

Port patch-tun

Interface patch-tun

type: patch

options: {peer=patch-int}

Port "tap6f1478be-b1"

tag: 1

Interface "tap6f1478be-b1"

Port "tap01efd82b-53"

tag: 2

Interface "tap01efd82b-53"

Port "tap5cb6e3f3-eb"

tag: 3

Interface "tap5cb6e3f3-eb"

Port br-int

Interface br-int

type: internal

Bridge br-data

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port "ens7"

Interface "ens7"

Port br-data

Interface br-data

type: internal

Port phy-br-data

Interface phy-br-data

type: patch

options: {peer=int-br-data}

Bridge br-tun

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port patch-int

Interface patch-int

type: patch

options: {peer=patch-tun}

Port "gre-0a05007a"

Interface "gre-0a05007a"

type: gre

options: {df_default="true", in_key=flow, local_ip="10.5.0.22", out_key=flow, remote_ip="10.5.0.122"}

Port "gre-0a05006b"

Interface "gre-0a05006b"

type: gre

options: {df_default="true", in_key=flow, local_ip="10.5.0.22", out_key=flow, remote_ip="10.5.0.107"}

Port br-tun

Interface br-tun

type: internal

Port "gre-0a050079"

Interface "gre-0a050079"

type: gre

options: {df_default="true", in_key=flow, local_ip="10.5.0.22", out_key=flow, remote_ip="10.5.0.121"}

Port "gre-0a05006e"

Interface "gre-0a05006e"

type: gre

options: {df_default="true", in_key=flow, local_ip="10.5.0.22", out_key=flow, remote_ip="10.5.0.110"}

ovs_version: "2.10.0"

ubuntu@zhhuabj-bastion:~$ juju ssh neutron-gateway/0 -- cat /var/lib/neutron/ra/0a839377-6b19-419b-9868-616def4d749f.radvd.conf

interface qr-5cb6e3f3-eb

{

AdvSendAdvert on;

MinRtrAdvInterval 30;

MaxRtrAdvInterval 100;

AdvLinkMTU 1458;

prefix fc00:4a9c:7b9e:80b6::/64

{

AdvOnLink on;

AdvAutonomous on;

};

};

ubuntu@zhhuabj-bastion:~$ openstack security group rule list lb-health-mgr-sec-grp

+--------------------------------------+-------------+----------+------------+-----------------------+

| ID | IP Protocol | IP Range | Port Range | Remote Security Group |

+--------------------------------------+-------------+----------+------------+-----------------------+

| 09a92cb2-9942-44d4-8a96-9449a6758967 | None | None | | None |

| 20daa06c-9de6-4c91-8a1e-59645f23953a | udp | None | 5555:5555 | None |

| 8f7b9966-c255-4727-a172-60f22f0710f9 | None | None | | None |

| 90f86b27-12f8-4a9a-9924-37b31d26cbd8 | icmpv6 | None | | None |

+--------------------------------------+-------------+----------+------------+-----------------------+

ubuntu@zhhuabj-bastion:~$ openstack security group rule list lb-mgmt-sec-grp

+--------------------------------------+-------------+----------+------------+-----------------------+

| ID | IP Protocol | IP Range | Port Range | Remote Security Group |

+--------------------------------------+-------------+----------+------------+-----------------------+

| 54f79f92-a6c5-411d-a309-a02b39cc384b | icmpv6 | None | | None |

| 574f595e-3d96-460e-a3f2-329818186492 | None | None | | None |

| 5ecb0f58-f5dd-4d52-bdfa-04fd56968bd8 | tcp | None | 22:22 | None |

| 7ead3a3a-bc45-4434-b7a2-e2a6c0dc3ce9 | None | None | | None |

| cf82d108-e0f8-4916-95d4-0c816b6eb156 | tcp | None | 9443:9443 | None |

+--------------------------------------+-------------+----------+------------+-----------------------+

ubuntu@zhhuabj-bastion:~$ source ~/novarc

ubuntu@zhhuabj-bastion:~$ openstack security group rule list default

+--------------------------------------+-------------+-----------+------------+--------------------------------------+

| ID | IP Protocol | IP Range | Port Range | Remote Security Group |

+--------------------------------------+-------------+-----------+------------+--------------------------------------+

| 15b56abd-c2af-4c0a-8585-af68a8f09e3c | icmpv6 | None | | None |

| 2ad77fa3-32c7-4a20-a572-417bea782eff | icmp | 0.0.0.0/0 | | None |

| 2c2aec15-e4ad-4069-abd2-0191fe80f9bb | None | None | | None |

| 3b775807-3c61-45a3-9677-aaf9631db677 | udp | 0.0.0.0/0 | 3389:3389 | None |

| 3e9a6e7f-b9a2-47c9-97ca-042b22fbf308 | icmpv6 | None | | None |

| 42a3c09e-91c8-471d-b4a8-c1fe87dab066 | None | None | | None |

| 47f9cec2-4bc0-4d71-9a02-3a27d46b59f8 | icmp | None | | None |

| 94297175-9439-4df2-8c93-c5576e52e138 | udp | None | 546:546 | None |

| 9c6ac9d2-3b9e-4bab-a55a-04a1679b66be | None | None | | c48a1bf5-7b7e-4337-afdf-8057ae8025af |

| b6e95f76-1b64-4135-8b62-b058ec989f7e | None | None | | c48a1bf5-7b7e-4337-afdf-8057ae8025af |

| de5132a5-72e2-4f03-8b6a-dcbc2b7811c3 | tcp | 0.0.0.0/0 | 3389:3389 | None |

| e72bea9f-84ce-4e3a-8597-c86d40b9b5ef | tcp | 0.0.0.0/0 | 22:22 | None |

| ecf1415c-c6e9-4cf6-872c-4dac1353c014 | tcp | 0.0.0.0/0 | | None |

+--------------------------------------+-------------+-----------+------------+--------------------------------------+

The underlying OpenStack environment (OpenStack over OpenStack) needs the following (see: https://blog.csdn.net/quqi99/article/details/78437988 ):

openstack security group rule create $secgroup --protocol udp --dst-port 546 --ethertype IPv6

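The most common problem is that the octavia-health-manager-octavia-0-listen-port port is DOWN, so o-hm0 has no connectivity and cannot obtain an IP from the DHCP server. Loss of connectivity on the subnet is usually caused by the flow rules on br-int. I ran into this several times, but after rebuilding the environment it worked again for reasons I could not determine.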

root@juju-50fb86-bionic-rocky-barbican-octavia-11:~# ovs-ofctl dump-flows br-int

cookie=0x5dc634635bd398eb, duration=424018.932s, table=0, n_packets=978, n_bytes=76284, priority=10,icmp6,in_port="o-hm0",icmp_type=136 actions=resubmit(,24)

cookie=0x5dc634635bd398eb, duration=424018.930s, table=0, n_packets=0, n_bytes=0, priority=10,arp,in_port="o-hm0" actions=resubmit(,24)

cookie=0x5dc634635bd398eb, duration=425788.219s, table=0, n_packets=0, n_bytes=0, priority=2,in_port="int-br-data" actions=drop

cookie=0x5dc634635bd398eb, duration=424018.943s, table=0, n_packets=10939, n_bytes=2958167, priority=9,in_port="o-hm0" actions=resubmit(,25)

cookie=0x5dc634635bd398eb, duration=425788.898s, table=0, n_packets=10032, n_bytes=1608826, priority=0 actions=resubmit(,60)

cookie=0x5dc634635bd398eb, duration=425788.903s, table=23, n_packets=0, n_bytes=0, priority=0 actions=drop

cookie=0x5dc634635bd398eb, duration=424018.940s, table=24, n_packets=675, n_bytes=52650, priority=2,icmp6,in_port="o-hm0",icmp_type=136,nd_target=fc00:4a9c:7b9e:80b6:f816:3eff:fe99:8cab actions=resubmit(,60)

cookie=0x5dc634635bd398eb, duration=424018.938s, table=24, n_packets=0, n_bytes=0, priority=2,icmp6,in_port="o-hm0",icmp_type=136,nd_target=fe80::f816:3eff:fe99:8cab actions=resubmit(,60)

cookie=0x5dc634635bd398eb, duration=425788.879s, table=24, n_packets=303, n_bytes=23634, priority=0 actions=drop

cookie=0x5dc634635bd398eb, duration=424018.951s, table=25, n_packets=10939, n_bytes=2958167, priority=2,in_port="o-hm0",dl_src=fa:16:3e:99:8c:ab actions=resubmit(,60)

cookie=0x5dc634635bd398eb, duration=425788.896s, table=60, n_packets=21647, n_bytes=4620009, priority=3 actions=NORMAL

root@juju-50fb86-bionic-rocky-barbican-octavia-11:~# ovs-ofctl dump-flows br-data

cookie=0xb41c0c7781ded568, duration=426779.130s, table=0, n_packets=16816, n_bytes=3580386, priority=2,in_port="phy-br-data" actions=drop

cookie=0xb41c0c7781ded568, duration=426779.201s, table=0, n_packets=0, n_bytes=0, priority=0 actions=NORMAL
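
When reading dumps like these, a quick first pass is to list the drop rules that are actually matching traffic. The sketch below parses `ovs-ofctl dump-flows` text with regular expressions; `dropping_flows` is a hypothetical helper and only understands the fields visible in the output above.

```python
import re


def dropping_flows(dump_lines):
    """Return (table, n_packets) for drop rules with a non-zero counter."""
    hits = []
    for line in dump_lines:
        if not line.rstrip().endswith("actions=drop"):
            continue
        packets = re.search(r"n_packets=(\d+)", line)
        table = re.search(r"table=(\d+)", line)
        if packets and table and int(packets.group(1)) > 0:
            hits.append((int(table.group(1)), int(packets.group(1))))
    return hits


sample = [
    'cookie=0x5dc634635bd398eb, duration=425788.219s, table=0, n_packets=0, '
    'n_bytes=0, priority=2,in_port="int-br-data" actions=drop',
    'cookie=0x5dc634635bd398eb, duration=425788.879s, table=24, n_packets=303, '
    'n_bytes=23634, priority=0 actions=drop',
    'cookie=0xb41c0c7781ded568, duration=426779.130s, table=0, n_packets=16816, '
    'n_bytes=3580386, priority=2,in_port="phy-br-data" actions=drop',
]
print(dropping_flows(sample))
```

A drop rule with a growing counter is not necessarily a fault, but it is a reasonable place to start when o-hm0 has no connectivity.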

If o-hm0 still cannot obtain an IP, we can also try configuring an IPv4 management subnet manually.

neutron router-gateway-clear lb-mgmt

neutron router-interface-delete lb-mgmt lb-mgmt-subnetv6

neutron subnet-delete lb-mgmt-subnetv6

neutron port-list |grep fc00

#neutron port-delete 464e6d47-9830-4966-a2b7-e188c19c407a

openstack subnet create --subnet-range 192.168.0.0/24 --allocation-pool start=192.168.0.2,end=192.168.0.200 --network lb-mgmt-net lb-mgmt-subnet

neutron router-interface-add lb-mgmt lb-mgmt-subnet

#neutron router-gateway-set lb-mgmt ext_net

neutron port-list |grep 192.168.0.1

#openstack security group create lb-mgmt-sec-grp --project $(openstack security group show lb-mgmt-sec-grp -f value -c project_id)

openstack security group rule create --protocol udp --dst-port 5555 lb-mgmt-sec-grp

openstack security group rule create --protocol tcp --dst-port 22 lb-mgmt-sec-grp

openstack security group rule create --protocol tcp --dst-port 9443 lb-mgmt-sec-grp

openstack security group rule create --protocol icmp lb-mgmt-sec-grp

openstack security group show lb-mgmt-sec-grp

openstack security group rule create --protocol udp --dst-port 5555 lb-health-mgr-sec-grp

openstack security group rule create --protocol icmp lb-health-mgr-sec-grp

# create a management port o-hm0 on octavia/0 node, first use neutron to allocate a port, then call ovs-vsctl to add-port

LB_HOST=$(juju ssh octavia/0 -- hostname)

juju ssh octavia/0 -- sudo ovs-vsctl del-port br-int o-hm0

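# Replace the --binding:host_id value below with the hostname held in $LB_HOST. Passing $LB_HOST directly failed with 'bind failed'; the cause is unknown (possibly, but unverified, a trailing carriage return in the 'juju ssh' output)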

neutron port-create --name mgmt-port --security-group $(openstack security group show lb-health-mgr-sec-grp -f value -c id) --device-owner Octavia:health-mgr --binding:host_id=juju-acadb9-bionic-rocky-barbican-octavia-without-vault-9 lb-mgmt-net --tenant-id $(openstack security group show lb-health-mgr-sec-grp -f value -c project_id)

juju ssh octavia/0 -- sudo ovs-vsctl --may-exist add-port br-int o-hm0 -- set Interface o-hm0 type=internal -- set Interface o-hm0 external-ids:iface-status=active -- set Interface o-hm0 external-ids:attached-mac=$(neutron port-show mgmt-port -f value -c mac_address) -- set Interface o-hm0 external-ids:iface-id=$(neutron port-show mgmt-port -f value -c id)

juju ssh octavia/0 -- sudo ip link set dev o-hm0 address $(neutron port-show mgmt-port -f value -c mac_address)

ping 192.168.0.2

Install an HTTPS test service in the test VMs

# Prepare CA and ssl pairs for lb server

openssl genrsa -passout pass:password -out ca.key

openssl req -x509 -passin pass:password -new -nodes -key ca.key -days 3650 -out ca.crt -subj "/C=CN/ST=BJ/O=STS"

openssl genrsa -passout pass:password -out lb.key

openssl req -new -key lb.key -out lb.csr -subj "/C=CN/ST=BJ/O=STS/CN=www.quqi.com"

openssl x509 -req -in lb.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out lb.crt -days 3650

cat lb.crt lb.key > lb.pem

#openssl pkcs12 -export -inkey lb.key -in lb.crt -certfile ca.crt -passout pass:password -out lb.p12

# Create two test servers and run

sudo apt install python-minimal -y

sudo bash -c 'cat >simple-https-server.py' <<EOF

#!/usr/bin/env python

# coding=utf-8

import BaseHTTPServer, SimpleHTTPServer

import ssl

httpd = BaseHTTPServer.HTTPServer(('0.0.0.0', 443), SimpleHTTPServer.SimpleHTTPRequestHandler)

httpd.socket = ssl.wrap_socket (httpd.socket, certfile='./lb.pem', server_side=True)

httpd.serve_forever()

EOF

sudo bash -c 'cat >index.html' <<EOF

test1

EOF

nohup sudo python simple-https-server.py &
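The script above targets Python 2 (BaseHTTPServer, SimpleHTTPServer, ssl.wrap_socket). On images that only ship Python 3, a rough equivalent could look like the sketch below. It assumes the same ./lb.pem file holding both the certificate and the key; the function name build_https_server and its host/port parameters are illustrative and not part of the original setup.

```python
import http.server
import ssl


def build_https_server(certfile="./lb.pem", host="0.0.0.0", port=443):
    """Return an HTTPServer that serves the current directory over TLS."""
    # Load the combined cert+key first, so a wrong path fails before binding.
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.load_cert_chain(certfile)
    httpd = http.server.HTTPServer(
        (host, port), http.server.SimpleHTTPRequestHandler)
    # Wrap the listening socket so every accepted connection speaks TLS.
    httpd.socket = context.wrap_socket(httpd.socket, server_side=True)
    return httpd
```

To serve index.html as in the original, call build_https_server().serve_forever() from the directory containing lb.pem and index.html; binding to port 443 still needs root.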

ubuntu@zhhuabj-bastion:~$ curl -k https://10.5.150.4

test1

ubuntu@zhhuabj-bastion:~$ curl -k https://10.5.150.5

test2

ubuntu@zhhuabj-bastion:~$ curl --cacert ~/ca/ca.crt https://10.5.150.4

curl: (51) SSL: certificate subject name (www.quqi.com) does not match target host name '10.5.150.4'

ubuntu@zhhuabj-bastion:~$ curl --resolve www.quqi.com:443:10.5.150.4 --cacert ~/ca/ca.crt https://www.quqi.com

test1

ubuntu@zhhuabj-bastion:~$ curl --resolve www.quqi.com:443:10.5.150.4 -k https://www.quqi.com

test1

Update 2019-11-09: the method above does not work with --cacert; switching to the following method works.

openssl req -new -x509 -keyout lb.pem -out lb.pem -days 365 -nodes -subj "/C=CN/ST=BJ/O=STS/CN=www.quqi.com"

$ curl --resolve www.quqi.com:443:192.168.99.135 --cacert ./lb.pem https://www.quqi.com

test1

After further debugging, the real cause turned out to be that the command "curl --resolve www.quqi.com:443:10.5.150.4 --cacert ~/ca/ca.crt https://www.quqi.com" should have been "curl --resolve www.quqi.com:443:10.5.150.4 --cacert ~/ca/lb.pem https://www.quqi.com"
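The curl runs above show two separate checks: the certificate chain must validate against the file given to --cacert, and the certificate name must match the host in the URL, which is why the bare IP fails and --resolve succeeds. The sketch below reproduces both checks with Python's standard ssl module. The function names are hypothetical, and some TLS clients reject certificates that carry only a CN without a subjectAltName, so results may differ from curl's.

```python
import socket
import ssl


def make_verifying_context(cafile=None):
    """Context that requires a valid chain and a matching hostname."""
    context = ssl.create_default_context(cafile=cafile)
    # Both are already the defaults; spelled out to show what `curl -k` skips.
    context.check_hostname = True
    context.verify_mode = ssl.CERT_REQUIRED
    return context


def fetch_subject(ip, hostname, cafile, port=443, timeout=5.0):
    """Connect to `ip` but verify the certificate against `hostname`."""
    context = make_verifying_context(cafile)
    with socket.create_connection((ip, port), timeout=timeout) as raw:
        # server_hostname sets SNI and the name to check, like --resolve.
        with context.wrap_socket(raw, server_hostname=hostname) as tls:
            return tls.getpeercert()["subject"]
```

For example, fetch_subject("10.5.150.4", "www.quqi.com", "lb.pem") would raise ssl.SSLCertVerificationError if either check fails.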

Alternatively, set up SSL with apache2

vim /etc/apache2/sites-available/default-ssl.conf

ServerName server1.com

ServerAlias www.server1.com

SSLCertificateFile /home/ubuntu/www.server1.com.crt

SSLCertificateKeyFile /home/ubuntu/www.server1.com.key

vim /etc/apache2/sites-available/000-default.conf

ServerName server1.com

ServerAlias www.server1.com

sudo apachectl configtest

sudo a2enmod ssl

sudo a2ensite default-ssl

sudo systemctl restart apache2.service

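Install an HTTP test service in the test VMs

The HTTP test service below is actually problematic: with it, haproxy logs the following message when it checks the backend.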

ae847e94-5aeb-4da6-9b66-07e1a385465b is UP, reason: Layer7 check passed, code: 200, info: "HTTP status check returned code <3C>200<3E>", check duration: 7ms. 1 active and 0 backup servers online. 0 sessions requeued, 0 total in queue.

1, deploy http server in backend

MYIP=$(ifconfig ens2|grep 'inet addr'|awk -F: '{print $2}'| awk '{print $1}')

while true; do echo -e "HTTP/1.0 200 OK\r\n\r\nWelcome to $MYIP" | sudo nc -l -p 80 ; done

2, test it

sudo ip netns exec qrouter-2ea1fc45-69e5-4c77-b6c9-d4fabc57145b curl http://192.168.21.7:80

3, add it into haproxy

openstack loadbalancer member create --subnet-id private_subnet --address 192.168.21.7 --protocol-port 80 pool1

It also causes a Bad Gateway error when the haproxy VIP is tested with curl.

In the end the problem was solved by running "sudo python -m SimpleHTTPServer 80" on the backend instead.
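The nc loop writes a fixed reply as soon as a client connects, with no Content-Length header, which is a plausible (but unverified) reason why haproxy in HTTP mode rejected the response. For Python 3 backends, a minimal sketch of a well-formed replacement using only the standard library is shown below; the handler and function names are illustrative.

```python
import http.server
import socket
import threading


class WelcomeHandler(http.server.BaseHTTPRequestHandler):
    """Answer every GET with a small, fully framed text response."""

    def do_GET(self):
        body = ("Welcome to %s\n" % socket.gethostname()).encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        # An explicit length lets the proxy know where the body ends.
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, fmt, *args):
        pass  # keep the console quiet during health checks


def start_server(host="0.0.0.0", port=80):
    """Start the server in a background thread and return it."""
    httpd = http.server.HTTPServer((host, port), WelcomeHandler)
    threading.Thread(target=httpd.serve_forever, daemon=True).start()
    return httpd
```

On a backend VM this would be started with start_server() under sudo and kept alive by the calling script; "python3 -m http.server 80" is the direct counterpart of the SimpleHTTPServer command.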

How to ssh into amphora service vm

# NOTE: (base64 -w 0 $HOME/.ssh/id_amphora.pub)

sudo mkdir -p /etc/octavia/.ssh && sudo chown -R $(id -u):$(id -g) /etc/octavia/.ssh

ssh-keygen -b 2048 -t rsa -N "" -f /etc/octavia/.ssh/octavia_ssh_key

openstack user list --domain service_domain

# NOTE: we must add '--user' option to avoid the error 'Invalid key_name provided'

nova keypair-add --pub-key=/etc/octavia/.ssh/octavia_ssh_key.pub octavia_ssh_key --user $(openstack user show octavia --domain service_domain -f value -c id)

# the openstack CLI cannot list keypairs that belong to another user, so use nova

nova keypair-list --user $(openstack user show octavia --domain service_domain -f value -c id)

vim /etc/octavia/octavia.conf

vim /var/lib/juju/agents/unit-octavia-0/charm/templates/rocky/octavia.conf

vim /usr/lib/python3/dist-packages/octavia/compute/drivers/nova_driver.py

vim /usr/lib/python3/dist-packages/octavia/controller/worker/tasks/compute_tasks.py #import pdb;pdb.set_trace()

[controller_worker]

amp_ssh_key_name = octavia_ssh_key #for sts, name is called amphora-backdoor

sudo ip netns exec qrouter-0a839377-6b19-419b-9868-616def4d749f ssh -6 -i ~/octavia_ssh_key ubuntu@fc00:4a9c:7b9e:80b6:f816:3eff:fe5f:cdae

NOTE:

we can't ssh by: ssh -i /etc/octavia/octavia_ssh_key ubuntu@10.5.150.15

but we can ssh by:

sudo ip netns exec qrouter-0a839377-6b19-419b-9868-616def4d749f ssh -i ~/octavia_ssh_key ubuntu@192.168.0.12 -v

ubuntu@zhhuabj-bastion:~$ nova list --all

+--------------------------------------+----------------------------------------------+----------------------------------+--------+------------+-------------+-------------------------------------------------------------+

| ID | Name | Tenant ID | Status | Task State | Power State | Networks |

+--------------------------------------+----------------------------------------------+----------------------------------+--------+------------+-------------+-------------------------------------------------------------+

| 1f50fa16-bbbe-47a7-b66b-86de416d0c5e | amphora-2fae038f-08b5-4bce-a2b7-f7bd543c0314 | 5165bc7f79304f67a135fcde3cd78ae1 | ACTIVE | - | Running | lb-mgmt-net=192.168.0.12; private=192.168.21.6, 10.5.150.15 |

openstack security group rule create lb-300e5ee7-2793-4df3-b901-17ce76da0b09 --protocol icmp --remote-ip 0.0.0.0/0

openstack security group rule create lb-300e5ee7-2793-4df3-b901-17ce76da0b09 --protocol tcp --dst-port 22

Deploy a non-terminated HTTPS load balancer

sudo apt install python-octaviaclient python3-octaviaclient

openstack complete |sudo tee /etc/bash_completion.d/openstack

source <(openstack complete)

#No module named 'oslo_log'

#openstack loadbalancer delete --cascade --wait lb1

openstack loadbalancer create --name lb1 --vip-subnet-id private_subnet

#lb_vip_port_id=$(openstack loadbalancer create -f value -c vip_port_id --name lb1 --vip-subnet-id private_subnet)

# Re-run the following until lb1 shows ACTIVE and ONLINE statuses:

openstack loadbalancer show lb1
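Instead of re-running the show command by hand, the wait can be scripted. The sketch below polls a status callable until it returns ACTIVE. It only checks provisioning_status (the ONLINE operating status would need a second query), and the function names, timeout and interval are illustrative choices.

```python
import subprocess
import time


def cli_status(lb_name):
    """Read provisioning_status of a load balancer via the openstack CLI."""
    out = subprocess.check_output(
        ["openstack", "loadbalancer", "show", lb_name,
         "-f", "value", "-c", "provisioning_status"])
    return out.decode().strip()


def wait_for_status(fetch, want="ACTIVE", timeout=600.0, interval=5.0):
    """Poll fetch() until it returns `want`; raise on ERROR or timeout."""
    deadline = time.monotonic() + timeout
    while True:
        status = fetch()
        if status == want:
            return status
        if status == "ERROR":
            raise RuntimeError("load balancer entered ERROR state")
        if time.monotonic() >= deadline:
            raise TimeoutError(
                "timed out waiting for %s, last status %s" % (want, status))
        time.sleep(interval)
```

Typical use would be wait_for_status(lambda: cli_status("lb1")) between the create command and the listener creation.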

nova list --all

openstack loadbalancer listener create --name listener1 --protocol HTTPS --protocol-port 443 lb1

openstack loadbalancer pool create --name pool1 --lb-algorithm ROUND_ROBIN --listener listener1 --protocol HTTPS

#openstack loadbalancer healthmonitor create --delay 5 --max-retries 4 --timeout 10 --type HTTPS --url-path / pool1

openstack loadbalancer healthmonitor create --delay 5 --max-retries 4 --timeout 10 --type TLS-HELLO pool1

openstack loadbalancer member create --subnet-id private_subnet --address 192.168.21.10 --protocol-port 443 pool1

openstack loadbalancer member create --subnet-id private_subnet --address 192.168.21.12 --protocol-port 443 pool1

openstack loadbalancer member list pool1

root@amphora-a9cf0b97-7f30-4b9c-b16a-7bc54526e0d0:~# ps -ef |grep haproxy

root 1459 1 0 04:34 ? 00:00:00 /usr/sbin/haproxy-systemd-wrapper -f /var/lib/octavia/b9d5a192-1a6a-4df7-83d4-fe96ac9574c0/haproxy.cfg -f /var/lib/octavia/haproxy-default-user-group.conf -p /var/lib/octavia/b9d5a192-1a6a-4df7-83d4-fe96ac9574c0/b9d5a192-1a6a-4df7-83d4-fe96ac9574c0.pid -L UlKGE8M_cxJTcktjV8M-eKJkh-g

nobody 1677 1459 0 04:35 ? 00:00:00 /usr/sbin/haproxy -f /var/lib/octavia/b9d5a192-1a6a-4df7-83d4-fe96ac9574c0/haproxy.cfg -f /var/lib/octavia/haproxy-default-user-group.conf -p /var/lib/octavia/b9d5a192-1a6a-4df7-83d4-fe96ac9574c0/b9d5a192-1a6a-4df7-83d4-fe96ac9574c0.pid -L UlKGE8M_cxJTcktjV8M-eKJkh-g -Ds -sf 1625

nobody 1679 1677 0 04:35 ? 00:00:00 /usr/sbin/haproxy -f /var/lib/octavia/b9d5a192-1a6a-4df7-83d4-fe96ac9574c0/haproxy.cfg -f /var/lib/octavia/haproxy-default-user-group.conf -p /var/lib/octavia/b9d5a192-1a6a-4df7-83d4-fe96ac9574c0/b9d5a192-1a6a-4df7-83d4-fe96ac9574c0.pid -L UlKGE8M_cxJTcktjV8M-eKJkh-g -Ds -sf 1625

root 1701 1685 0 04:36 pts/0 00:00:00 grep --color=auto haproxy

root@amphora-a9cf0b97-7f30-4b9c-b16a-7bc54526e0d0:~#

root@amphora-a9cf0b97-7f30-4b9c-b16a-7bc54526e0d0:~# cat /var/lib/octavia/b9d5a192-1a6a-4df7-83d4-fe96ac9574c0/haproxy.cfg

# Configuration for loadbalancer eda3efa5-dd91-437c-81d9-b73d28b5312f

global

daemon

user nobody

log /dev/log local0

log /dev/log local1 notice

stats socket /var/lib/octavia/b9d5a192-1a6a-4df7-83d4-fe96ac9574c0.sock mode 0666 level user

maxconn 1000000

defaults

log global

retries 3

option redispatch

frontend b9d5a192-1a6a-4df7-83d4-fe96ac9574c0

option tcplog

maxconn 1000000

bind 192.168.21.16:443

mode tcp

default_backend 502b6689-40ad-4201-b704-f221e0fddd58

timeout client 50000

backend 502b6689-40ad-4201-b704-f221e0fddd58

mode tcp

balance roundrobin

timeout check 10s

option ssl-hello-chk

fullconn 1000000

option allbackups

timeout connect 5000

timeout server 50000

server 49f16402-69f4-49bb-8dc0-5ec13a0f1791 192.168.21.10:443 weight 1 check inter 5s fall 3 rise 4

server 1ab624e1-9cd8-49f3-9297-4fa031a3ca58 192.168.21.12:443 weight 1 check inter 5s fall 3 rise 4
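Comparing the healthmonitor arguments with the generated config suggests the mapping --delay to inter, --max-retries to rise, and --timeout to "timeout check", with fall left at 3. The sketch below rebuilds the server line from those inputs to make the mapping explicit. It is an illustration inferred from this output and from the macros.j2 excerpt quoted earlier, not Octavia's actual rendering code; the function name and the max_retries_down parameter name are assumptions.

```python
def render_server_line(member_id, address, port, delay, max_retries,
                       max_retries_down=3, monitor_type="TLS-HELLO", weight=1):
    """Build a haproxy 'server' line like the ones in the config above."""
    # HTTPS monitors get the check-ssl suffix seen in the macros.j2 excerpt;
    # TLS-HELLO relies on the backend-level 'option ssl-hello-chk' instead.
    ssl_opt = " check-ssl verify none" if monitor_type == "HTTPS" else ""
    return ("server {id} {addr}:{port} weight {w} check{ssl} "
            "inter {delay}s fall {fall} rise {rise}").format(
                id=member_id, addr=address, port=port, w=weight, ssl=ssl_opt,
                delay=delay, fall=max_retries_down, rise=max_retries)
```

Called with delay=5 and max_retries=4, it reproduces the first server line of the config shown above.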

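Sometimes the service VM has already been created, but octavia-worker exits for the reason shown below, so after running "openstack loadbalancer create --name lb1 --vip-subnet-id private_subnet" the load balancer never reaches the expected status.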

2019-01-04 06:30:45.574 8173 INFO cotyledon._service [-] Caught SIGTERM signal, graceful exiting of service ConsumerService(0) [8173]

2019-01-04 06:30:45.573 7983 INFO cotyledon._service_manager [-] Caught SIGTERM signal, graceful exiting of master process

2019-01-04 06:30:45.581 8173 INFO octavia.controller.queue.consumer [-] Stopping consumer...

2019-01-04 06:30:45.593 8173 INFO cotyledon._service [-] Caught SIGTERM signal, graceful exiting of service ConsumerService(0) [8173]

Deploy a TLS-terminated HTTPS load balancer

# The key really must not have a passphrase: https://opendev.org/openstack/octavia/commit/a501714a76e04b33dfb24c4ead9956ed4696d1df

openssl genrsa -passout pass:password -out ca.key

openssl req -x509 -passin pass:password -new -nodes -key ca.key -days 3650 -out ca.crt -subj "/C=CN/ST=BJ/O=STS"

openssl genrsa -passout pass:password -out lb.key

openssl req -new -key lb.key -out lb.csr -subj "/C=CN/ST=BJ/O=STS/CN=www.quqi.com"

openssl x509 -req -in lb.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out lb.crt -days 3650

cat lb.crt lb.key > lb.pem

openssl pkcs12 -export -inkey lb.key -in lb.crt -certfile ca.crt -passout pass:password -out lb.p12

sudo apt install python-barbicanclient

#openstack secret delete $(openstack secret list | awk '/ tls_lb_secret / {print $2}')

openstack secret store --name='tls_lb_secret' -t 'application/octet-stream' -e 'base64' --payload="$(base64 < lb.p12)"

openstack acl user add -u $(openstack user show octavia --domain service_domain -f value -c id) $(openstack secret list | awk '/ tls_lb_secret / {print $2}')

openstack secret list

openstack loadbalancer create --name lb1 --vip-subnet-id private_subnet

# Re-run the following until lb1 shows ACTIVE and ONLINE statuses:

openstack loadbalancer show lb1

openstack loadbalancer member list pool1

openstack loadbalancer member delete pool1 <member>

openstack loadbalancer pool delete pool1

openstack loadbalancer listener delete listener1

openstack loadbalancer listener create --protocol-port 443 --protocol TERMINATED_HTTPS --name listener1 --default-tls-container=$(openstack secret list | awk '/ tls_lb_secret / {print $2}') lb1

openstack loadbalancer pool create --name pool1 --lb-algorithm ROUND_ROBIN --listener listener1 --protocol HTTP

openstack loadbalancer healthmonitor create --delay 5 --max-retries 4 --timeout 10 --type HTTP --url-path / pool1

openstack loadbalancer member create --subnet-id private_subnet --address 192.168.21.10 --protocol-port 80 pool1

openstack loadbalancer member create --subnet-id private_subnet --address 192.168.21.12 --protocol-port 80 pool1

But it failed:

ubuntu@zhhuabj-bastion:~/ca$ openstack loadbalancer listener create --protocol-port 443 --protocol TERMINATED_HTTPS --name listener1 --default-tls-container=$(openstack secret list | awk '/ tls_lb_secret / {print $2}') lb1

Could not retrieve certificate: ['http://10.5.0.25:9312/v1/secrets/7c706fb2-4319-46fc-b78d-81f34393f581'] (HTTP 400) (Request-ID: req-c0c0e4d5-f395-424c-9aab-5c4c4e72fb3d)

The cause of the error was found: the keys must be created without a passphrase:

openssl genrsa -out ca.key

openssl req -x509 -new -nodes -key ca.key -days 3650 -out ca.crt -subj "/C=CN/ST=BJ/O=STS"

openssl genrsa -out lb.key

openssl req -new -key lb.key -out lb.csr -subj "/C=CN/ST=BJ/O=STS/CN=www.quqi.com"

openssl x509 -req -in lb.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out lb.crt -days 3650

cat lb.crt lb.key > lb.pem

openssl pkcs12 -export -inkey lb.key -in lb.crt -certfile ca.crt -passout pass: -out lb.p12

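But it still failed. The cause has now been identified and had nothing to do with the keys: the following step had not been run beforehand (octavia_user_id=$(openstack user show octavia --domain service_domain -f value -c id); openstack acl user add -u $octavia_user_id $secret_id). A complete script follows: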

#https://wiki.openstack.org/wiki/Network/LBaaS/docs/how-to-create-tls-loadbalancer#Update_neutron_config

#https://lingxiankong.github.io/2018-04-29-octavia-tls-termination-test.html

DOMAIN1=www.server1.com

DOMAIN2=www.server2.com

echo "Create CA cert(self-signed) and key..."

CA_SUBJECT="/C=CN/ST=BJ/L=BJ/O=STS/OU=Joshua/CN=CA"

openssl req -new -x509 -nodes -days 3650 -newkey rsa:2048 -keyout ca.key -out ca.crt -subj $CA_SUBJECT

openssl genrsa -des3 -out ${DOMAIN1}_encrypted.key 1024

openssl rsa -in ${DOMAIN1}_encrypted.key -out $DOMAIN1.key

openssl genrsa -des3 -out ${DOMAIN2}_encrypted.key 1024

openssl rsa -in ${DOMAIN2}_encrypted.key -out $DOMAIN2.key

echo "Create server certificate signing request..."

SUBJECT="/C=CN/ST=BJ/L=BJ/O=STS/OU=Joshua/CN=$DOMAIN1"

openssl req -new -nodes -subj $SUBJECT -key $DOMAIN1.key -out $DOMAIN1.csr

SUBJECT="/C=CN/ST=BJ/L=BJ/O=STS/OU=Joshua/CN=$DOMAIN2"

openssl req -new -nodes -subj $SUBJECT -key $DOMAIN2.key -out $DOMAIN2.csr

echo "Sign SSL certificate..."

openssl x509 -req -days 3650 -in $DOMAIN1.csr -CA ca.crt -CAkey ca.key -set_serial 01 -out $DOMAIN1.crt

openssl x509 -req -days 3650 -in $DOMAIN2.csr -CA ca.crt -CAkey ca.key -set_serial 01 -out $DOMAIN2.crt

# NOTE: the p12 must be exported without a password when it is stored in barbican

openssl pkcs12 -export -inkey www.server1.com.key -in www.server1.com.crt -certfile ca.crt -passout pass: -out www.server1.com.p12

openssl pkcs12 -export -inkey www.server2.com.key -in www.server2.com.crt -certfile ca.crt -passout pass: -out www.server2.com.p12

secret1_id=$(openstack secret store --name='lb_tls_secret_1' -t 'application/octet-stream' -e 'base64' --payload="$(base64 < www.server1.com.p12)" -f value -c "Secret href")

secret2_id=$(openstack secret store --name='lb_tls_secret_2' -t 'application/octet-stream' -e 'base64' --payload="$(base64 < www.server2.com.p12)" -f value -c "Secret href")

# allow the octavia service user to access the cert stored in barbican by the user in the novarc

octavia_user_id=$(openstack user show octavia --domain service_domain -f value -c id); echo $octavia_user_id;

openstack acl user add -u $octavia_user_id $secret1_id

openstack acl user add -u $octavia_user_id $secret2_id

IP=192.168.21.7

subnetid=$(openstack subnet show private_subnet -f value -c id); echo $subnetid

#lb_id=$(openstack loadbalancer create --name lb3 --vip-subnet-id $subnetid -f value -c id); echo $lb_id

lb_id=22ce64e5-585d-43bd-80eb-5c3ff22abacd

listener_id=$(openstack loadbalancer listener create $lb_id --name https_listener --protocol-port 443 --protocol TERMINATED_HTTPS --default-tls-container=$secret1_id --sni-container-refs $secret1_id $secret2_id -f value -c id); echo $listener_id

pool_id=$(openstack loadbalancer pool create --protocol HTTP --listener $listener_id --lb-algorithm ROUND_ROBIN -f value -c id); echo $pool_id

openstack loadbalancer member create --address ${IP} --subnet-id $subnetid --protocol-port 80 $pool_id

public_network=$(openstack network show ext_net -f value -c id)

fip=$(openstack floating ip create $public_network -f value -c floating_ip_address)

vip=$(openstack loadbalancer show $lb_id -c vip_address -f value)

vip_port=$(openstack port list --fixed-ip ip-address=$vip -c ID -f value)

#openstack floating ip set $fip --fixed-ip-address $vip --port $vip_port

neutron floatingip-associate $fip $vip_port

curl -k https://$fip

curl --resolve www.server1.com:443:$fip --cacert ~/ca3/ca.crt https://www.server1.com

curl --resolve www.server2.com:443:$fip --cacert ~/ca3/ca.crt https://www.server2.com

nobody 2202 2200 0 07:23 ? 00:00:00 /usr/sbin/haproxy -f /var/lib/octavia/dfa44538-2c12-411b-b3b3-c709bc139523/haproxy.cfg -f /var/lib/octavia/haproxy-default-user-group.conf -p /var/lib/octavia/dfa44538-2c12-411b-b3b3-c709bc139523/dfa44538-2c12-411b-b3b3-c709bc139523.pid -L 1_a8OAWpvKuB7hMNzt8UwaJ2M00 -Ds -sf 2148

root@amphora-2fae038f-08b5-4bce-a2b7-f7bd543c0314:~# cat /var/lib/octavia/dfa44538-2c12-411b-b3b3-c709bc139523/haproxy.cfg

# Configuration for loadbalancer 22ce64e5-585d-43bd-80eb-5c3ff22abacd

global

daemon

user nobody

log /dev/log local0

log /dev/log local1 notice

stats socket /var/lib/octavia/dfa44538-2c12-411b-b3b3-c709bc139523.sock mode 0666 level user

maxconn 1000000

defaults

log global

retries 3

option redispatch

frontend dfa44538-2c12-411b-b3b3-c709bc139523

option httplog

maxconn 1000000

redirect scheme https if !{ ssl_fc }

bind 192.168.21.16:443 ssl crt /var/lib/octavia/certs/dfa44538-2c12-411b-b3b3-c709bc139523/b16771bdb053d138575d60e3035d77fa0598ef5c.pem crt /var/lib/octavia/certs/dfa44538-2c12-411b-b3b3-c709bc139523

mode http

default_backend e6c4444a-a06e-4c1c-80d4-389516059d46

timeout client 50000

backend e6c4444a-a06e-4c1c-80d4-389516059d46

mode http

balance roundrobin

fullconn 1000000

option allbackups

timeout connect 5000

timeout server 50000

server 234ff0d3-5196-4536-bd49-dfbab94732d4 192.168.21.7:80 weight 1

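The remaining problem is that the service VM cannot reach the backend 192.168.21.7. The mechanism should be as follows:

octavia adds a VIP port (octavia-lb-vrrp-7e56de03-298e-43dd-a78f-33aa8d4af735) through the _plug_amphora_vip method; it should then attach one more port to the amphora VM and add allowed_address_pairs for this VIP. However, we did not find this newly added VIP NIC on the amphora VM, and re-running 'nova interface-attach' as below did not help either.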

nova list --all

nova interface-attach --port-id $(neutron port-show octavia-lb-vrrp-f63f0c5b-a541-442a-929c-b8ed7f7b3604 -f value -c id) 044f42c9-d205-4a11-aa8f-6b9aea896861

The following approach did not work either:

lb_id=$(openstack loadbalancer show lb3 -f value -c id)

old_vip=$(openstack loadbalancer show lb3 -f value -c vip_address)

private_subnet_id=$(neutron subnet-show private_subnet -f value -c id)

# delete old vip port (named 'octavia-lb-$lb_id')

neutron port-delete octavia-lb-$lb_id

# create new vip port with the same name and vip and binding:host_id is amphora service vm's host

# nova show $(nova list --all |grep amphora |awk '{print $2}') |grep OS-EXT-SRV-ATTR:host

neutron port-create --name octavia-lb-$lb_id --device-owner Octavia --binding:host_id=juju-50fb86-bionic-rocky-barbican-octavia-8 --fixed-ip subnet_id=${private_subnet_id},ip_address=${old_vip} private

mac=$(neutron port-show octavia-lb-$lb_id -f value -c mac_address)

nova interface-attach --port-id $(neutron port-show octavia-lb-$lb_id -f value -c id) $(nova list --all |grep amphora |awk '{print $2}')

Next we found that admin-state-up was False, but after enabling it (neutron port-update --admin-state-up True 6dc5b0bd-d0b7-4b29-9fce-b8f7b23c7914) the status was still DOWN. Checking the settings further:

root@amphora-3d906381-f49f-4efa-bfa7-b5f84eb3b1af:~# cat /etc/netns/amphora-haproxy/network/interfaces

auto lo

iface lo inet loopback

source /etc/netns/amphora-haproxy/network/interfaces.d/*.cfg

root@amphora-3d906381-f49f-4efa-bfa7-b5f84eb3b1af:~# cat /etc/netns/amphora-haproxy/network/interfaces.d/eth1.cfg

# Generated by Octavia agent

auto eth1 eth1:0

iface eth1 inet static

address 192.168.21.34

broadcast 192.168.21.255

netmask 255.255.255.0

gateway 192.168.21.1

mtu 1458

iface eth1:0 inet static

address 192.168.21.5

broadcast 192.168.21.255

netmask 255.255.255.0

# Add a source routing table to allow members to access the VIP

post-up /sbin/ip route add default via 192.168.21.1 dev eth1 onlink table 1

post-down /sbin/ip route del default via 192.168.21.1 dev eth1 onlink table 1

post-up /sbin/ip route add 192.168.21.0/24 dev eth1 src 192.168.21.5 scope link table 1

post-down /sbin/ip route del 192.168.21.0/24 dev eth1 src 192.168.21.5 scope link table 1

post-up /sbin/ip rule add from 192.168.21.5/32 table 1 priority 100

post-down /sbin/ip rule del from 192.168.21.5/32 table 1 priority 100

post-up /sbin/iptables -t nat -A POSTROUTING -p udp -o eth1 -j MASQUERADE

post-down /sbin/iptables -t nat -D POSTROUTING -p udp -o eth1 -j MASQUERADE

root@amphora-3d906381-f49f-4efa-bfa7-b5f84eb3b1af:~# cat /var/lib/octavia/plugged_interfaces

fa:16:3e:e2:3a:7f eth1

ip netns exec amphora-haproxy ifdown eth1

ip netns exec amphora-haproxy ifup eth1

ip netns exec amphora-haproxy ifup eth1.0

ip netns exec amphora-haproxy ip addr show

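But eth1.0 could not be brought up with ifup (note that the interfaces file above names the alias eth1:0, with a colon, which may be why eth1.0 is unknown):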

root@amphora-3d906381-f49f-4efa-bfa7-b5f84eb3b1af:~# ip netns exec amphora-haproxy ifup eth1.0

Unknown interface eth1.0

So run the equivalent steps by hand:

ip netns exec amphora-haproxy ifdown eth1

ip netns exec amphora-haproxy ip addr add 192.168.21.34/24 dev eth1

ip netns exec amphora-haproxy ip addr add 192.168.21.5/24 dev eth1

ip netns exec amphora-haproxy ifconfig eth1 up

ip netns exec amphora-haproxy ip route add default via 192.168.21.1 dev eth1 onlink table 1

ip netns exec amphora-haproxy ip route add 192.168.21.0/24 dev eth1 src 192.168.21.5 scope link table 1

ip netns exec amphora-haproxy ip rule add from 192.168.21.5/32 table 1 priority 100

ip netns exec amphora-haproxy iptables -t nat -A POSTROUTING -p udp -o eth1 -j MASQUERADE

Note: to rebuild the image, see: https://github.com/openstack/octavia/tree/master/diskimage-create

At this point the backend VM 192.168.21.7 can be pinged from the amphora-haproxy namespace

root@amphora-3d906381-f49f-4efa-bfa7-b5f84eb3b1af:~# ip netns exec amphora-haproxy ping -c 1 192.168.21.7

PING 192.168.21.7 (192.168.21.7) 56(84) bytes of data.

64 bytes from 192.168.21.7: icmp_seq=1 ttl=64 time=3.83 ms

But the VIP 192.168.21.5 still cannot be pinged from the neutron-gateway node

root@juju-50fb86-bionic-rocky-barbican-octavia-6:~# ip netns exec qrouter-2ea1fc45-69e5-4c77-b6c9-d4fabc57145b ping 192.168.21.5

PING 192.168.21.5 (192.168.21.5) 56(84) bytes of data.

Next we added allowed-address-pairs to this HA port, but still to no avail.

neutron port-update 6dc5b0bd-d0b7-4b29-9fce-b8f7b23c7914 --allowed-address-pairs type=dict list=true mac_address=fa:16:3e:e2:3a:7f,ip_address=192.168.21.5

root@juju-50fb86-bionic-rocky-barbican-octavia-9:~# iptables-save |grep 'IP/MAC pairs'

-A neutron-openvswi-s650aa1af-5 -s 192.168.21.5/32 -m mac --mac-source FA:16:3E:E2:3A:7F -m comment --comment "Allow traffic from defined IP/MAC pairs." -j RETURN

-A neutron-openvswi-s650aa1af-5 -s 192.168.21.34/32 -m mac --mac-source FA:16:3E:E2:3A:7F -m comment --comment "Allow traffic from defined IP/MAC pairs." -j RETURN

-A neutron-openvswi-sfad3d0f9-3 -s 192.168.0.16/32 -m mac --mac-source FA:16:3E:EA:54:4F -m comment --comment "Allow traffic from defined IP/MAC pairs." -j RETURN

root@juju-50fb86-bionic-rocky-barbican-octavia-9:~# iptables-save |grep 'IP/MAC pairs'

-A neutron-openvswi-s650aa1af-5 -s 192.168.21.5/32 -m mac --mac-source FA:16:3E:E2:3A:7F -m comment --comment "Allow traffic from defined IP/MAC pairs." -j RETURN

-A neutron-openvswi-s650aa1af-5 -s 192.168.21.34/32 -m mac --mac-source FA:16:3E:E2:3A:7F -m comment --comment "Allow traffic from defined IP/MAC pairs." -j RETURN

-A neutron-openvswi-sfad3d0f9-3 -s 192.168.0.16/32 -m mac --mac-source FA:16:3E:EA:54:4F -m comment --comment "Allow traffic from defined IP/MAC pairs." -j RETURN

root@juju-50fb86-bionic-rocky-barbican-octavia-9:~# ovs-appctl bridge/dump-flows br-int |grep 192.168.21

table_id=24, duration=513s, n_packets=6, n_bytes=252, priority=2,arp,in_port=4,arp_spa=192.168.21.5,actions=goto_table:25

table_id=24, duration=513s, n_packets=1, n_bytes=42, priority=2,arp,in_port=4,arp_spa=192.168.21.34,actions=goto_table:25

root@juju-50fb86-bionic-rocky-barbican-octavia-9:~# ovs-appctl bridge/dump-flows br-int |grep 25,

table_id=25, duration=48s, n_packets=0, n_bytes=0, priority=2,in_port=4,dl_src=fa:16:3e:e2:3a:7f,actions=goto_table:60

table_id=25, duration=48s, n_packets=6, n_bytes=1396, priority=2,in_port=3,dl_src=fa:16:3e:ea:54:4f,actions=goto_table:60

root@juju-50fb86-bionic-rocky-barbican-octavia-9:~# ovs-appctl bridge/dump-flows br-int |grep 60,

table_id=60, duration=76s, n_packets=20, n_bytes=3880, priority=3,actions=NORMAL

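Continuing the investigation: since the service VM can ping the backend, the network itself is fine. The only remaining failure is pinging the service VM from the gateway, which points to a firewall problem. 'neutron port-show' shows that the VIP port is associated with a new security group; after adding the rules below to it, the problem was solved: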

openstack security group rule create --protocol tcp --dst-port 22 lb-7f2985f0-c0d5-47ab-b805-7f5dafe20d3e

openstack security group rule create --protocol icmp lb-7f2985f0-c0d5-47ab-b805-7f5dafe20d3e

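Then came the error below. It was later confirmed to be caused by the way the HTTP service was simulated on the backend (described above); after switching to "sudo python -m SimpleHTTPServer 80" the problem was solved.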

root@juju-50fb86-bionic-rocky-barbican-octavia-6:~# ip netns exec qrouter-2ea1fc45-69e5-4c77-b6c9-d4fabc57145b curl -k https://192.168.21.5

<html><body><h1>502 Bad Gateway</h1>

Jan 6 05:14:38 amphora-3d906381-f49f-4efa-bfa7-b5f84eb3b1af haproxy[2688]: 192.168.21.1:41372 [06/Jan/2019:05:14:38.038] a5b442f3-7d40-4849-8b88-7f02697bfd5b~ e25b432a-ea45-4191-9448-c364661326dc/ae847e94-5aeb-4da6-9b66-07e1a385465b 28/0/9/-1/40 502 250 - - PH-- 0/0/0/0/0 0/0 "GET / HTTP/1.1

The full results of the experiment are at https://paste.ubuntu.com/p/PPHv9Zfdf6/

ubuntu@zhhuabj-bastion:~$ curl -k https://10.5.150.5

Hello World!

ubuntu@zhhuabj-bastion:~$ curl --resolve www.quqi.com:443:10.5.150.5 --cacert ~/ca2_without_pass/ca.crt https://www.quqi.com

Hello World!

ubuntu@zhhuabj-bastion:~$ curl --resolve www.quqi.com:443:10.5.150.5 -k https://www.quqi.com

Hello World!

root@juju-50fb86-bionic-rocky-barbican-octavia-6:~# ip netns exec qrouter-2ea1fc45-69e5-4c77-b6c9-d4fabc57145b curl -k https://192.168.21.5

Hello World!

root@amphora-3d906381-f49f-4efa-bfa7-b5f84eb3b1af:~# ip netns exec amphora-haproxy curl 192.168.21.7

Hello World!

root@amphora-3d906381-f49f-4efa-bfa7-b5f84eb3b1af:~# cat /var/lib/octavia/a5b442f3-7d40-4849-8b88-7f02697bfd5b/haproxy.cfg

# Configuration for loadbalancer 7f2985f0-c0d5-47ab-b805-7f5dafe20d3e

global

daemon

user nobody

log /dev/log local0

log /dev/log local1 notice

stats socket /var/lib/octavia/a5b442f3-7d40-4849-8b88-7f02697bfd5b.sock mode 0666 level user

maxconn 1000000

defaults

log global

retries 3

option redispatch

frontend a5b442f3-7d40-4849-8b88-7f02697bfd5b

option httplog

maxconn 1000000

redirect scheme https if !{ ssl_fc }

bind 192.168.21.5:443 ssl crt /var/lib/octavia/certs/a5b442f3-7d40-4849-8b88-7f02697bfd5b/4aa85f186d19a766c29109577d88734a8fca6385.pem crt /var/lib/octavia/certs/a5b442f3-7d40-4849-8b88-7f02697bfd5b

mode http

default_backend e25b432a-ea45-4191-9448-c364661326dc

timeout client 50000

backend e25b432a-ea45-4191-9448-c364661326dc

mode http

balance roundrobin

timeout check 10s

option httpchk GET /

http-check expect rstatus 200

fullconn 1000000

option allbackups

timeout connect 5000

timeout server 50000

server ae847e94-5aeb-4da6-9b66-07e1a385465b 192.168.21.7:80 weight 1 check inter 5s fall 3 rise 4

Appendix - Neutron LBaaS v2

https://docs.openstack.org/mitaka/networking-guide/config-lbaas.html

neutron lbaas-loadbalancer-create --name test-lb private_subnet

neutron lbaas-listener-create --name test-lb-https --loadbalancer test-lb --protocol HTTPS --protocol-port 443

neutron lbaas-pool-create --name test-lb-pool-https --lb-algorithm LEAST_CONNECTIONS --listener test-lb-https --protocol HTTPS

neutron lbaas-member-create --subnet private_subnet --address 192.168.21.13 --protocol-port 443 test-lb-pool-https

neutron lbaas-member-create --subnet private_subnet --address 192.168.21.8 --protocol-port 443 test-lb-pool-https

neutron lbaas-healthmonitor-create --delay 5 --max-retries 2 --timeout 10 --type HTTPS --pool test-lb-pool-https --name monitor1

root@juju-c9d701-xenail-queens-184313-vrrp-5:~# ip netns exec qlbaas-84fd9a6c-24a2-4c0f-912b-eedc254ac1f4 curl -k https://192.168.21.14

test1

root@juju-c9d701-xenail-queens-184313-vrrp-5:~# ip netns exec qlbaas-84fd9a6c-24a2-4c0f-912b-eedc254ac1f4 curl -k https://192.168.21.14

test2

root@juju-c9d701-xenail-queens-184313-vrrp-5:~# ip netns exec qlbaas-84fd9a6c-24a2-4c0f-912b-eedc254ac1f4 curl --cacert /home/ubuntu/lb.pem https://192.168.21.14

curl: (51) SSL: certificate subject name (www.quqi.com) does not match target host name '192.168.21.14'

root@juju-c9d701-xenail-queens-184313-vrrp-5:~# ip netns exec qlbaas-84fd9a6c-24a2-4c0f-912b-eedc254ac1f4 curl --cacert /home/ubuntu/lb.pem --resolve www.quqi.com:443:192.168.21.14 https://www.quqi.com

test1

root@juju-c9d701-xenail-queens-184313-vrrp-5:~# echo 'show stat;show table' | socat stdio /var/lib/neutron/lbaas/v2/84fd9a6c-24a2-4c0f-912b-eedc254ac1f4/haproxy_stats.sock

# pxname,svname,qcur,qmax,scur,smax,slim,stot,bin,bout,dreq,dresp,ereq,econ,eresp,wretr,wredis,status,weight,act,bck,chkfail,chkdown,lastchg,downtime,qlimit,pid,iid,sid,throttle,lbtot,tracked,type,rate,rate_lim,rate_max,check_status,check_code,check_duration,hrsp_1xx,hrsp_2xx,hrsp_3xx,hrsp_4xx,hrsp_5xx,hrsp_other,hanafail,req_rate,req_rate_max,req_tot,cli_abrt,srv_abrt,comp_in,comp_out,comp_byp,comp_rsp,lastsess,last_chk,last_agt,qtime,ctime,rtime,ttime,

c2a42906-e160-44dd-8590-968af2077b4a,FRONTEND,,,0,0,2000,0,0,0,0,0,0,,,,,OPEN,,,,,,,,,1,2,0,,,,0,0,0,0,,,,,,,,,,,0,0,0,,,0,0,0,0,,,,,,,,

52112201-05ce-4f4d-b5a8-9e67de2a895a,37a1f5a8-ec7e-4208-9c96-27d2783a594f,0,0,0,0,,0,0,0,,0,,0,0,0,0,no check,1,1,0,,,,,,1,3,1,,0,,2,0,,0,,,,,,,,,,0,,,,0,0,,,,,-1,,,0,0,0,0,

52112201-05ce-4f4d-b5a8-9e67de2a895a,8e722b4b-08b8-4089-bba5-8fa5dd26a87f,0,0,0,0,,0,0,0,,0,,0,0,0,0,no check,1,1,0,,,,,,1,3,2,,0,,2,0,,0,,,,,,,,,,0,,,,0,0,,,,,-1,,,0,0,0,0,

52112201-05ce-4f4d-b5a8-9e67de2a895a,BACKEND,0,0,0,0,200,0,0,0,0,0,,0,0,0,0,UP,2,2,0,,0,117,0,,1,3,0,,0,,1,0,,0,,,,,,,,,,,,,,0,0,0,0,0,0,-1,,,0,0,0,0,
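The CSV returned by "show stat" is hard to read by eye; the column that matters here is status, which reads "no check" for both members above. A minimal sketch for querying the stats socket and extracting that column follows. The function names are illustrative, and the parser assumes the reply contains only the "show stat" output, without the extra "show table" section.

```python
import csv
import socket


def query_haproxy(sock_path, command="show stat"):
    """Send one command to a haproxy stats socket and return the reply."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.connect(sock_path)
        sock.sendall(command.encode() + b"\n")
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # haproxy closes the connection after replying
                break
            chunks.append(data)
    return b"".join(chunks).decode()


def parse_show_stat(text):
    """Return (pxname, svname, status) for every row of 'show stat' output."""
    lines = [ln for ln in text.splitlines() if ln.strip()]
    # The header line starts with '# ', which is not part of the first name.
    header = lines[0].lstrip("# ").split(",")
    reader = csv.DictReader(lines[1:], fieldnames=header)
    return [(row["pxname"], row["svname"], row["status"]) for row in reader]
```

For the LBaaS v2 agent above, parse_show_stat(query_haproxy(".../haproxy_stats.sock")) would be run as root with the socket path from the socat command.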

root@juju-c9d701-xenail-queens-184313-vrrp-5:~# cat /var/lib/neutron/lbaas/v2/84fd9a6c-24a2-4c0f-912b-eedc254ac1f4/haproxy.conf

# Configuration for test-lb

global

daemon

user nobody

group nogroup

log /dev/log local0

log /dev/log local1 notice

maxconn 2000

stats socket /var/lib/neutron/lbaas/v2/84fd9a6c-24a2-4c0f-912b-eedc254ac1f4/haproxy_stats.sock mode 0666 level user

defaults

log global

retries 3

option redispatch

timeout connect 5000

timeout client 50000

timeout server 50000

frontend c2a42906-e160-44dd-8590-968af2077b4a

option tcplog

bind 192.168.21.14:443

mode tcp

default_backend 52112201-05ce-4f4d-b5a8-9e67de2a895a

backend 52112201-05ce-4f4d-b5a8-9e67de2a895a

mode tcp

balance leastconn

timeout check 10s

option httpchk GET /

http-check expect rstatus 200

option ssl-hello-chk

server 37a1f5a8-ec7e-4208-9c96-27d2783a594f 192.168.21.13:443 weight 1 check inter 5s fall 2

server 8e722b4b-08b8-4089-bba5-8fa5dd26a87f 192.168.21.8:443 weight 1 check inter 5s fall 2
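The backend above carries both `option httpchk` and `option ssl-hello-chk`, which is the combination this post is concerned with. A small sketch for spotting such backends in a generated `haproxy.conf` follows. It only lists the directives and makes no claim about how HAProxy resolves them; the function names are hypothetical, and it assumes the simple `frontend`/`backend` layout shown in this post.

```python
from typing import Dict, List

CHECK_PREFIXES = ("option httpchk", "option ssl-hello-chk", "http-check")

# Backend section copied from the configuration shown in this post.
SAMPLE = """
backend 52112201-05ce-4f4d-b5a8-9e67de2a895a
mode tcp
balance leastconn
timeout check 10s
option httpchk GET /
http-check expect rstatus 200
option ssl-hello-chk
server 37a1f5a8-ec7e-4208-9c96-27d2783a594f 192.168.21.13:443 weight 1 check inter 5s fall 2
"""


def check_directives(config_text: str) -> Dict[str, List[str]]:
    """Map each backend name to the health-check directives it declares."""
    found: Dict[str, List[str]] = {}
    current = None
    for raw in config_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split()
        if parts[0] == "backend" and len(parts) > 1:
            current = parts[1]
            found[current] = []
        elif parts[0] in ("global", "defaults", "frontend", "listen"):
            current = None  # left the backend section
        elif current is not None and line.startswith(CHECK_PREFIXES):
            found[current].append(line)
    return found


def mixed_check_backends(config_text: str) -> List[str]:
    """Backends that declare both an HTTP check and an SSL hello check."""
    mixed = []
    for name, lines in check_directives(config_text).items():
        has_http = any(l.startswith("option httpchk") for l in lines)
        has_hello = any(l.startswith("option ssl-hello-chk") for l in lines)
        if has_http and has_hello:
            mixed.append(name)
    return mixed


if __name__ == "__main__":
    print(mixed_check_backends(SAMPLE))
```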

# TCP monitor

neutron lbaas-healthmonitor-delete monitor1

neutron lbaas-healthmonitor-create --delay 5 --max-retries 2 --timeout 10 --type TCP --pool test-lb-pool-https --name monitor1 --url-path /

backend 52112201-05ce-4f4d-b5a8-9e67de2a895a

mode tcp

balance leastconn

timeout check 10s

server 37a1f5a8-ec7e-4208-9c96-27d2783a594f 192.168.21.13:443 weight 1 check inter 5s fall 2

server 8e722b4b-08b8-4089-bba5-8fa5dd26a87f 192.168.21.8:443 weight 1 check inter 5s fall 2

Appendix - How an upper-layer k8s uses LBaaS resources from the underlying OpenStack

If you create K8s on top of an OpenStack cloud, a LoadBalancer service created in k8s with the following commands stays in Pending state forever.

kubectl run test-nginx --image=nginx --replicas=2 --port=80 --expose --service-overrides='{ "spec": { "type": "LoadBalancer" } }'

kubectl get svc test-nginx

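That is because k8s needs to call the underlying OpenStack LBaaS service to create the VIP resource and then register the NodeIP:NodePort of every backend node as a backend member. So how do we give k8s access to the OpenStack LBaaS resources? As follows: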

# https://blog.ubuntu.com/2019/01/28/taking-octavia-for-a-ride-with-kubernetes-on-openstack

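# When deploying k8s on OpenStack, 'juju trust openstack-integrator' gives openstack-integrator access to the OpenStack credential used at bootstrap.

# After that, because cdk implements the interface-openstack-integration interface, cdk k8s can use these OpenStack credentials to consume LBaaS and other resources in OpenStack directly.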

juju deploy cs:~containers/openstack-integrator

juju add-relation openstack-integrator kubernetes-master

juju add-relation openstack-integrator kubernetes-worker

juju config openstack-integrator subnet-id=<UUID of subnet>

juju config openstack-integrator floating-network-id=<UUID of ext_net>

# 'juju trust' grants openstack-integrator access to the credential used in the bootstrap command, this charm acts as a proxy for the

juju trust openstack-integrator
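To make the mechanism described earlier concrete: once k8s can reach OpenStack, the load balancer pool ends up with one member per node, each pointing at NodeIP:NodePort. The sketch below only models that mapping. The `Member` class, the function name and the node IPs are hypothetical; the NodePort 32315 is taken from the service output shown later in this post.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Member:
    """One pool member as the load balancer would see it."""
    address: str        # NodeIP of a k8s node
    protocol_port: int  # NodePort allocated to the Service


def members_for_service(node_ips: Iterable[str], node_port: int) -> List[Member]:
    """Every node exposes the Service on the same NodePort."""
    return [Member(address=ip, protocol_port=node_port) for ip in node_ips]


if __name__ == "__main__":
    # Hypothetical node IPs, used only for illustration.
    for m in members_for_service(["10.0.0.11", "10.0.0.12"], 32315):
        print(f"{m.address}:{m.protocol_port}")
```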

Test yaml. Sometimes loadbalancer.openstack.org/floating-network-id must be provided, see: https://github.com/kubernetes/cloud-provider-openstack/tree/master/examples/loadbalancers

kind: Service
apiVersion: v1
metadata:
  name: external-http-nginx-service
  annotations:
    service.beta.kubernetes.io/openstack-internal-load-balancer: "false"
    loadbalancer.openstack.org/floating-network-id: "6f05a9de-4fc9-41f5-9c51-d5f43cd244b9"
spec:
  selector:
    app: nginx
  type: LoadBalancer
  ports:
  - name: http
    port: 80
    targetPort: 80

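Also make sure the security group quota under the service tenant is not exceeded. A typical symptom of an exceeded quota is that operations such as FIP allocation work only intermittently.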

For the rest of the k8s setup, see - https://ubuntu.com/blog/taking-octavia-for-a-ride-with-kubernetes-on-openstack

# deploy underlying openstack model

./generate-bundle.sh --name o7k:stsstack --create-model --octavia -r stein --dvr-snat --num-compute 8

sed -i "s/mem=8G/mem=8G cores=4/g" ./b/o7k/openstack.yaml

./generate-bundle.sh --name o7k:stsstack --replay --run

./bin/add-data-ports.sh

juju config neutron-openvswitch data-port="br-data:ens7"

# refer to this page to generate certs - https://docs.openstack.org/project-deploy-guide/charm-deployment-guide/latest/app-octavia.html

juju config octavia \

lb-mgmt-issuing-cacert="$(base64 controller_ca.pem)" \

lb-mgmt-issuing-ca-private-key="$(base64 controller_ca_key.pem)" \

lb-mgmt-issuing-ca-key-passphrase=foobar \

lb-mgmt-controller-cacert="$(base64 controller_ca.pem)" \

lb-mgmt-controller-cert="$(base64 controller_cert_bundle.pem)"

juju run-action --wait octavia/0 configure-resources

source ~/stsstack-bundles/openstack/novarc

./configure

#juju config octavia loadbalancer-topology=ACTIVE_STANDBY

juju run-action octavia-diskimage-retrofit/0 --wait retrofit-image source-image=$(openstack image list |grep bionic |awk '{print $2}')

openstack image list

# enable all ingress traffic

PROJECT_ID=$(openstack project show --domain admin_domain admin -f value -c id)

SECGRP_ID=$(openstack security group list --project ${PROJECT_ID} | awk '/default/ {print $2}')

openstack security group rule create ${SECGRP_ID} --protocol any --ethertype IPv6 --ingress

openstack security group rule create ${SECGRP_ID} --protocol any --ethertype IPv4 --ingress

# disable quotas for project, neutron and nova

openstack quota set --instances -1 ${PROJECT_ID}

openstack quota set --floating-ips -1 ${PROJECT_ID}

openstack quota set --cores -1 ${PROJECT_ID}

openstack quota set --ram -1 ${PROJECT_ID}

openstack quota set --gigabytes -1 ${PROJECT_ID}

openstack quota set --volumes -1 ${PROJECT_ID}

openstack quota set --secgroups -1 ${PROJECT_ID}

openstack quota set --secgroup-rules -1 ${PROJECT_ID}

neutron quota-update --network -1

neutron quota-update --floatingip -1

neutron quota-update --port -1

neutron quota-update --router -1

neutron quota-update --security-group -1

neutron quota-update --security-group-rule -1

neutron quota-update --subnet -1

neutron quota-show

# set up router to allow bastion to access juju controller

GATEWAY_IP=$(openstack router show provider-router -f value -c external_gateway_info \

|awk '/ip_address/ { for (i=1;i<NF;i++) if ($i~"ip_address") print $(i+1)}' |cut -f2 -d\')

CIDR=$(openstack subnet show private_subnet -f value -c cidr)

sudo ip route add ${CIDR} via ${GATEWAY_IP}
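The `awk`/`cut` pipeline above extracts the router's external IP from the text form of `external_gateway_info`. If the same field is requested as JSON instead, the extraction can be written more robustly. This is a sketch under assumptions: it expects the inner `external_gateway_info` object with an `external_fixed_ips` list as Neutron reports it, and the sample values and the function name are made up for illustration.

```python
import json

# Hypothetical sample of a router's external_gateway_info object.
SAMPLE = json.dumps({
    "network_id": "00000000-0000-0000-0000-000000000000",
    "enable_snat": True,
    "external_fixed_ips": [
        {"subnet_id": "11111111-1111-1111-1111-111111111111",
         "ip_address": "10.5.150.1"},
    ],
})


def gateway_ip(external_gateway_info_json: str) -> str:
    """Return the first IPv4 address among the router's external fixed IPs."""
    info = json.loads(external_gateway_info_json)
    for entry in info.get("external_fixed_ips") or []:
        ip = entry.get("ip_address", "")
        if ip and ":" not in ip:  # skip IPv6 addresses
            return ip
    raise ValueError("no IPv4 external fixed IP found")


if __name__ == "__main__":
    print(gateway_ip(SAMPLE))
```

The returned address plays the role of `GATEWAY_IP` in the `ip route add` command above.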

# define juju cloud

sudo bash -c 'cat > mystack.yaml' << EOF
clouds:
  mystack:
    type: openstack
    auth-types: [ userpass ]
    regions:
      RegionOne:
        endpoint: $OS_AUTH_URL
EOF

juju remove-cloud --local mystack

juju add-cloud --local mystack mystack.yaml

juju show-cloud mystack --local

sudo bash -c 'cat > mystack_credentials.txt' << EOF
credentials:
  mystack:
    admin:
      auth-type: userpass
      password: openstack
      tenant-name: admin
      domain-name: "" # ensure we don't get a domain-scoped token
      project-domain-name: admin_domain
      user-domain-name: admin_domain
      username: admin
      version: "3"
EOF

juju remove-credential --local mystack admin

juju add-credential --local mystack -f ./mystack_credentials.txt

juju show-credential --local mystack admin

# deploy juju controller

mkdir -p ~/simplestreams/images && rm -rf ~/simplestreams/images/*

source ~/stsstack-bundles/openstack/novarc

IMAGE_ID=$(openstack image list -f value |grep 'bionic active' |awk '{print $1}')

OS_SERIES=$(openstack image list -f value |grep 'bionic active' |awk '{print $2}')

juju metadata generate-image -d ~/simplestreams -i $IMAGE_ID -s $OS_SERIES -r $OS_REGION_NAME -u $OS_AUTH_URL

ls ~/simplestreams/*/streams/*

NETWORK_ID=$(openstack network show private -f value -c id)

# can remove juju controller 'mystack-regionone' from ~/.local/share/juju/controllers.yaml if it exists

juju bootstrap mystack --config network=${NETWORK_ID} --model-default network=${NETWORK_ID} --model-default use-default-secgroup=true --metadata-source ~/simplestreams

# deploy upper k8s model

juju switch mystack-regionone

juju destroy-model --destroy-storage --force upperk8s -y && juju add-model upperk8s

wget https://api.jujucharms.com/charmstore/v5/bundle/canonical-kubernetes-933/archive/bundle.yaml

sed -i "s/num_units: 2/num_units: 1/g" ./bundle.yaml

sed -i "s/num_units: 3/num_units: 2/g" ./bundle.yaml

sed -i "s/cores=4/cores=2/g" ./bundle.yaml

juju deploy ./bundle.yaml

juju deploy cs:~containers/openstack-integrator

juju add-relation openstack-integrator:clients kubernetes-master:openstack

juju add-relation openstack-integrator:clients kubernetes-worker:openstack

juju config openstack-integrator subnet-id=$(openstack subnet show private_subnet -c id -f value)

juju config openstack-integrator floating-network-id=$(openstack network show ext_net -c id -f value)

juju trust openstack-integrator

watch -c juju status --color

# we don't use the following way to deploy k8s because it can't be customized to use bionic

juju deploy charmed-kubernetes

# we don't use the following way as well because it says no networks exist with label "zhhuabj_port_sec_enabled"

~/stsstack-bundles/kubernetes/generate-bundle.sh --name k8s:mystack --create-model -s bionic --run

# deploy test pod

mkdir -p ~/.kube

juju scp kubernetes-master/0:config ~/.kube/config

sudo snap install kubectl --classic

#Flag --replicas has been deprecated

#kubectl run hello-world --replicas=2 --labels="run=lb-test" --image=gcr.io/google-samples/node-hello:1.0 --port=8080

kubectl create deployment hello-world --image=gcr.io/google-samples/node-hello:1.0 -o yaml --dry-run=client > helloworld.yaml

sudo bash -c 'cat > helloworld.yaml' << EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: hello-world
  name: hello-world
spec:
  replicas: 2
  selector:
    matchLabels:
      app: hello-world
  template:
    metadata:
      labels:
        app: hello-world
    spec:
      containers:
      - image: gcr.io/google-samples/node-hello:1.0
        name: node-hello
        ports:
        - containerPort: 8080
EOF

kubectl create -f ./helloworld.yaml

kubectl get deployments hello-world -o wide

# deploy LoadBalancer service

# remember to replace <ext_net_id> below with the real external network ID, otherwise the FIP stays in 'pending' status

sudo bash -c 'cat > helloservice.yaml' << EOF
kind: Service
apiVersion: v1
metadata:
  name: hello
  annotations:
    service.beta.kubernetes.io/openstack-internal-load-balancer: "false"
    loadbalancer.openstack.org/floating-network-id: "<ext_net_id>"
spec:
  selector:
    app: hello-world
  type: LoadBalancer
  ports:
  - name: http
    port: 80
    targetPort: 8080
EOF

kubectl create -f ./helloservice.yaml

watch kubectl get svc -o wide hello

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

hello LoadBalancer 10.152.183.88 10.5.150.220 80:32315/TCP 2m47s app=hello-world
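Instead of watching the table until EXTERNAL-IP changes from pending to an address, the same information can be read from the Service object's `status.loadBalancer.ingress` field. A minimal sketch follows, assuming the JSON comes from something like `kubectl get svc hello -o json`. The function name and the trimmed sample documents are illustrative only; the IP is the one shown in the output above.

```python
import json
from typing import List

# Trimmed, hypothetical Service objects: before and after the FIP is assigned.
PENDING = json.dumps({"metadata": {"name": "hello"},
                      "status": {"loadBalancer": {}}})
READY = json.dumps({"metadata": {"name": "hello"},
                    "status": {"loadBalancer": {
                        "ingress": [{"ip": "10.5.150.220"}]}}})


def external_addresses(service_json: str) -> List[str]:
    """Return the load balancer addresses, or an empty list while pending."""
    svc = json.loads(service_json)
    ingress = svc.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    addresses = []
    for item in ingress:
        # A provider may report an IP or a hostname for each ingress point.
        value = item.get("ip") or item.get("hostname")
        if value:
            addresses.append(value)
    return addresses


if __name__ == "__main__":
    print(external_addresses(PENDING))
    print(external_addresses(READY))
```

An empty list corresponds to the Pending state described at the start of this appendix.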

$ curl http://10.5.150.220:80