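This post summarizes a survey paper.

The field is vehicle detection and classification, mainly methods related to intelligent transportation systems (ITS).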

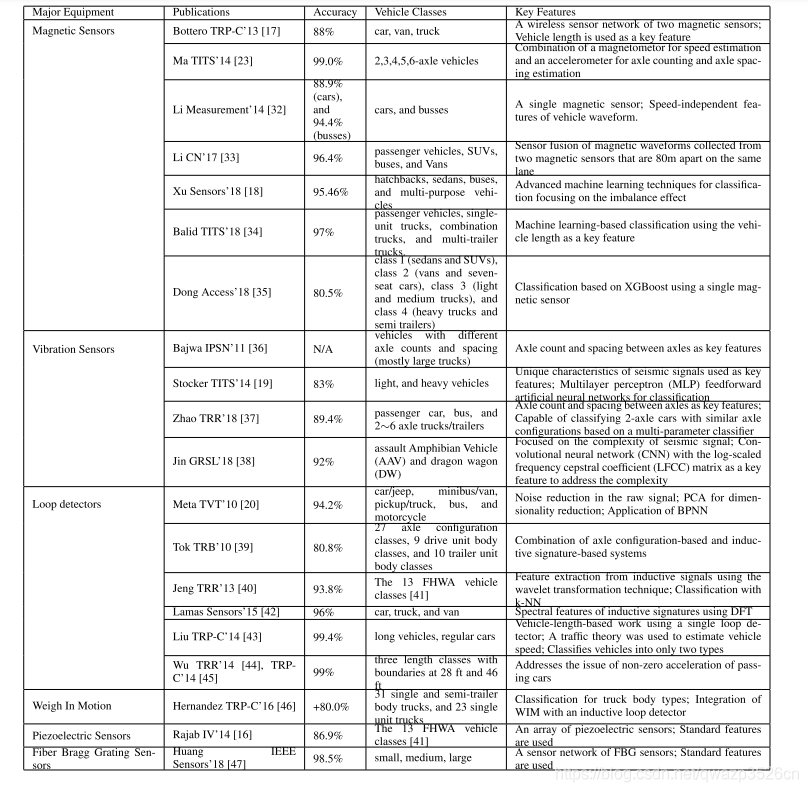

The methods are as follows.

The paper covers three main categories:

1) In-roadway-based

2) Over-roadway-based

3) Side-roadway-based

1) In-roadway-based

2) Over-roadway-based

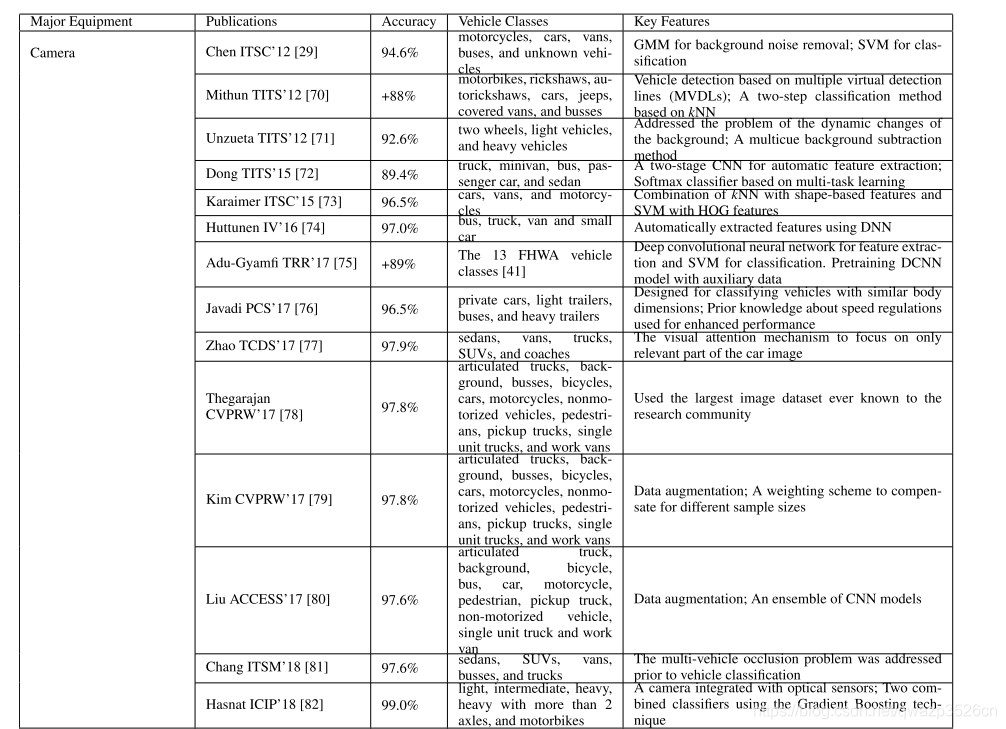

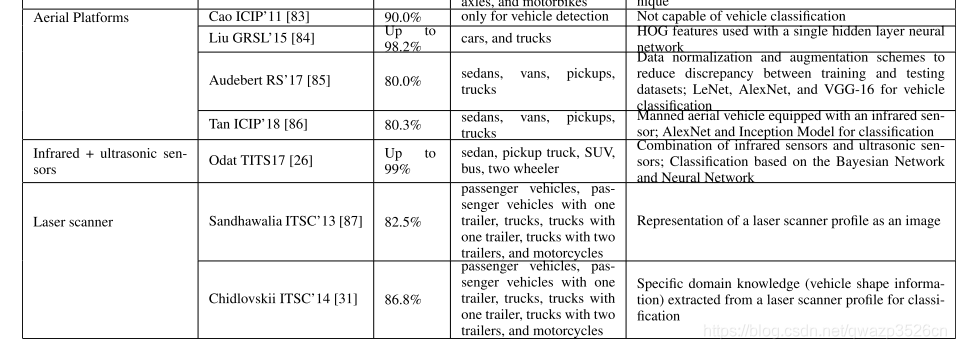

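Over-roadway-based vehicle classification systems install sensors above the roadway. They offer a non-intrusive solution that requires no physical changes to the pavement, which greatly reduces construction and maintenance costs. These systems can also cover multiple lanes and, in some cases, an entire road segment (e.g., aerial platforms [83]). Because the camera is the most widely used sensor in these systems [29], [30], this section mainly covers camera-based vehicle classification systems. It also discusses camera-based systems that use aerial platforms such as unmanned aerial vehicles (UAVs) and satellites. Camera-based systems have many advantages, such as high classification accuracy and multi-lane coverage, but a major drawback is privacy. Some privacy-preserving solutions are therefore discussed, such as those based on infrared sensors [26] and laser scanners [87]. Table 2 summarizes the characteristics of the over-roadway-based vehicle classification systems discussed in this section.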

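A. Camera

The most widely adopted sensor for over-roadway-based vehicle classification systems is the camera [29], [30]. A camera provides rich information for vehicle classification, such as the visual features and geometry of passing vehicles [88]. In-roadway-based systems need multiple sensors to cover multiple lanes (i.e., at least one sensor per lane), whereas a single camera is enough to classify vehicles in multiple lanes (Fig. 5). Advanced image processing techniques, backed by sufficient processing power, can classify multiple vehicles quickly and accurately.

Fig. 5. Camera-based traffic monitoring system [7].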

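A camera-based vehicle classification system generally works in three steps: it captures an image of a passing vehicle, extracts features from the image, and runs an algorithm to classify the vehicle.

Camera-based systems can therefore be categorized by how the vehicle image is captured (e.g., methods that reduce the effect of the background image), by the types of features extracted from the vehicle image, and by the mechanism that performs classification on the extracted features.

A recent trend is that machine learning techniques are increasingly used to extract features automatically and effectively, and to process those features to build classification models.

Earlier systems used simple classification models based on SVM, kNN, and decision trees, whereas more advanced machine learning algorithms such as deep learning are now increasingly adopted.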

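Chen et al. focus on effectively capturing a car image from video footage [29].

The authors adopt the background Gaussian Mixture Model (GMM) [89] and a shadow removal algorithm [90] to reduce the negative impact of shadows, camera vibration, illumination changes, and similar effects on vehicle classification.

A Kalman filter is used for vehicle tracking, and an SVM performs the classification.

Experiments used real video footage from cameras deployed in Kingston upon Thames, UK.

Vehicles were classified into five categories: motorcycles, cars, vans, buses, and unknown vehicles. The classification accuracy for these vehicle types was 94.6%.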
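The tracking step mentioned above can be illustrated with a minimal sketch of a Kalman filter. This summary does not give the state model used by Chen et al., so the sketch assumes a one-dimensional constant-velocity model with position-only measurements. The function name `kalman_track` and the noise parameters `q` and `r` are hypothetical.

```python
def kalman_track(measurements, dt=1.0, q=1e-3, r=1.0):
    """Track a 1-D position with a constant-velocity Kalman filter.

    Assumed model (not taken from the paper): state = (position, velocity),
    transition F = [[1, dt], [0, 1]], measurement H = [1, 0],
    process noise Q = q * I, measurement noise variance r.
    """
    p, v = measurements[0], 0.0          # initial state guess
    P = [[1.0, 0.0], [0.0, 1.0]]         # initial state covariance
    estimates = []
    for z in measurements:
        # Predict: x = F x and P = F P F^T + Q.
        p = p + dt * v
        p00 = P[0][0] + dt * (P[1][0] + P[0][1]) + dt * dt * P[1][1] + q
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        p11 = P[1][1] + q
        # Update with the measured position z.
        s = p00 + r                      # innovation variance
        k0, k1 = p00 / s, p10 / s        # Kalman gain
        y = z - p                        # innovation
        p, v = p + k0 * y, v + k1 * y
        P = [[(1 - k0) * p00, (1 - k0) * p01],
             [p10 - k1 * p00, p11 - k1 * p01]]
        estimates.append((p, v))
    return estimates


# Hypothetical track: a vehicle centroid moving 2 pixels per frame.
track = kalman_track([2.0 * k for k in range(20)])
```

The velocity estimate in `track[-1]` approaches the true 2 pixels per frame as more frames are observed. A real tracker would use a two-dimensional state and noisy detections.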

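Unzueta et al. also focus on effectively capturing the car image [71]. Specifically, the authors address dynamic background changes in challenging environments, such as illumination changes and headlight reflections, to improve classification accuracy. They develop a multicue background subtraction method in which the segmentation thresholds are adjusted dynamically to follow background changes, and extra features extracted from gradient differences are added to enhance the segmentation [71].

A two-step method is proposed to obtain spatial and temporal features of vehicles for classification: a 2D silhouette is generated first and is then extended to a 3D vehicle volume for more accurate classification.

Three vehicle types are considered: two-wheelers, light vehicles, and heavy vehicles. The classification accuracy was 92.6%.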

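Mithun et al. propose a vehicle classification system based on multiple virtual detection lines (MVDLs) [70].

A VDL is a set of line indices of a frame whose position is perpendicular to the moving direction of a vehicle (Fig. 6). The pixel strips on a VDL in chronological frames form a time-spatial image (TSI).

Multiple TSIs are used for vehicle detection and classification to reduce misdetection, which is mostly caused by occlusion.

Specifically, a two-step classification process is proposed.

Vehicles are first classified into four general types based on shape-based features.

After that, another classification scheme based on texture-based and shape-invariant features classifies a vehicle into more specific types, including motorbikes, rickshaws, autorickshaws, cars, jeeps, covered vans, and buses.

The classification accuracy was between 88% and 91%.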
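How a TSI is assembled from a VDL can be sketched as follows. This is an illustrative sketch, not the implementation of Mithun et al. It assumes grayscale frames stored as nested lists, a VDL that coincides with one image row, and a background value of 0. The names `build_tsi` and `detect_on_vdls` are hypothetical.

```python
def build_tsi(frames, vdl_row):
    """Stack the pixel strip at row `vdl_row` of each frame, in time order.

    Row t of the result is the strip taken from frame t, so the result
    has one axis for time and one for position along the VDL.
    """
    return [list(frame[vdl_row]) for frame in frames]


def detect_on_vdls(frames, vdl_rows, background=0):
    """For each VDL, list the frame indices where a non-background pixel appears."""
    hits = {}
    for row in vdl_rows:
        tsi = build_tsi(frames, row)
        hits[row] = [t for t, strip in enumerate(tsi)
                     if any(px != background for px in strip)]
    return hits


# Hypothetical clip: one bright pixel moves down one row per frame.
frames = []
for t in range(4):
    frame = [[0] * 4 for _ in range(3)]
    if t < 3:
        frame[t][1] = 255
    frames.append(frame)

hits = detect_on_vdls(frames, vdl_rows=[0, 2])
```

With several VDLs, a vehicle that is occluded when it crosses one line can still be picked up on another, which is the motivation given above for using multiple TSIs.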

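Identifying effective features from car images is another important challenge for camera-based vehicle classification systems.

To improve classification performance, Karaimer et al. combine a shape-based classification method with a method based on Histogram of Oriented Gradient (HOG) features [73].

Specifically, kNN is used with the shape-based features, which include convexity, rectangularity, and elongation, and SVM is used with the HOG features.

The two methods are combined using different combination schemes, namely the sum rule and the product rule.

The sum rule selects the vehicle class that maximizes the sum of the two classifiers' probabilities, and the product rule selects the class based on the product of the two probabilities.

Three vehicle classes were used in the experiments: cars, vans, and motorcycles. The classification accuracy was 96.5%.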
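The sum rule and the product rule described above can be written in a few lines. The sketch below assumes each classifier outputs one probability per class. The function name `combine` and the probability values are made up for illustration.

```python
def combine(p_shape, p_hog, rule="sum"):
    """Pick the class that maximizes the combined score of two classifiers."""
    if rule == "sum":
        scores = {c: p_shape[c] + p_hog[c] for c in p_shape}
    elif rule == "product":
        scores = {c: p_shape[c] * p_hog[c] for c in p_shape}
    else:
        raise ValueError("rule must be 'sum' or 'product'")
    return max(scores, key=scores.get)


# Hypothetical outputs of the shape-based (kNN) and HOG-based (SVM) classifiers.
p_shape = {"car": 0.60, "van": 0.35, "motorcycle": 0.05}
p_hog = {"car": 0.15, "van": 0.35, "motorcycle": 0.50}

print(combine(p_shape, p_hog, "sum"))      # car
print(combine(p_shape, p_hog, "product"))  # van
```

The two rules can disagree: the product rule penalizes a class that either classifier considers unlikely, while the sum rule lets one confident classifier dominate.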

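Machine learning algorithms are used to extract effective features automatically. Huttunen et al. design a deep neural network (DNN) that extracts features from a car image with its background, which removes the preprocessing steps of detecting the car in the image and aligning a bounding box around it [74].

The hyper-parameters of the neural network are selected by a random search that finds a good combination of parameters [91]. The system was evaluated on a database of 6,555 images with four vehicle types: small cars, buses, trucks, and vans. The classification accuracy was 97%.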
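Random search over hyper-parameters can be sketched as below. The search space and the objective are hypothetical stand-ins: in practice the objective would train the network and return its validation accuracy, and the hyper-parameters searched by Huttunen et al. are not listed in this summary.

```python
import random


def random_search(objective, space, n_trials=50, seed=0):
    """Sample random hyper-parameter combinations and keep the best one."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        # Draw each hyper-parameter independently from its candidate values.
        params = {name: rng.choice(values) for name, values in space.items()}
        score = objective(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_objective(params):
    # Hypothetical stand-in for validation accuracy (higher is better).
    return (-abs(params["learning_rate"] - 0.01)
            - 0.1 * abs(params["hidden_layers"] - 3))


space = {"learning_rate": [0.1, 0.01, 0.001], "hidden_layers": [1, 2, 3, 4]}
best_params, best_score = random_search(toy_objective, space, n_trials=30)
```

Unlike a grid search, the number of trials is chosen independently of the size of the search space, which keeps the cost predictable when many hyper-parameters are involved.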

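Dong et al. apply a semisupervised convolutional neural network (CNN) for feature extraction [72]. In this work, vehicle front-view images are used for classification. Specifically, the proposed CNN consists of two stages.

In the first stage, the authors design an unsupervised learning mechanism to obtain an effective CNN filter bank that captures discriminative features of vehicles.

In the second stage, a Softmax classifier is trained based on multi-task learning [92] to provide the probability of each vehicle type.

Experiments were conducted with two data sets: the BIT-Vehicle data set [93] and the data set used by Peng et al. [94].

The former consists of 9,850 vehicle images of six types: bus, microbus, minivan, sedan, SUV, and truck.

The latter includes 3,618 daylight images and 1,306 nighttime images covering truck, minivan, bus, passenger car, and sedan.

The classification accuracy for the two data sets was 88.1% and 89.4%, respectively.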
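The Softmax step that turns classifier scores into per-type probabilities can be sketched as follows. The score values are invented, and the multi-task training described above is not reproduced here.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(logits)                       # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# The six BIT-Vehicle types named above, with made-up scores.
types = ["bus", "microbus", "minivan", "sedan", "SUV", "truck"]
scores = [0.2, 0.1, 0.4, 2.5, 1.1, 0.3]
probs = dict(zip(types, softmax(scores)))
predicted = max(probs, key=probs.get)
```

The predicted type is the one with the highest probability, here the type with the largest raw score.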

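Building on advanced machine learning techniques, Adu-Gyamfi et al. develop a vehicle classification system that uses a deep convolutional neural network (DCNN) to extract vehicle features quickly and accurately [75].

Unlike other approaches, the DCNN model is pre-trained with an auxiliary data set [95] and then fine-tuned with domain-specific data collected from the Virginia and Iowa DOT CCTV camera databases.

Vehicles were classified into the 13 FHWA vehicle types.

The results show a classification accuracy above 89%.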

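Although machine learning techniques make feature extraction more effective and thereby improve classification accuracy, many challenges remain.

One challenge is how to classify vehicles that look similar.

Javadi et al. address this challenge with fuzzy c-means (FCM) clustering [96] that uses vehicle speed as an additional feature [76].

Specifically, they exploit prior knowledge of the different traffic regulations and vehicle speeds to improve the classification accuracy for vehicles of similar size.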
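The FCM algorithm itself can be illustrated with a minimal sketch. It clusters a single feature (speed) with the standard FCM update equations and is not the multi-feature method of Javadi et al. The speeds, the initial centers, and the fuzzifier `m = 2` are assumptions chosen for illustration.

```python
def fuzzy_c_means(xs, centers, m=2.0, n_iter=50, eps=1e-9):
    """Standard fuzzy c-means on 1-D data.

    Returns the cluster centers and the membership matrix U, where
    U[i][j] is the degree to which sample i belongs to cluster j.
    """
    centers = list(centers)
    power = 2.0 / (m - 1.0)
    U = []
    for _ in range(n_iter):
        # Membership update: u_ij = 1 / sum_k (d_ij / d_ik)^(2 / (m - 1)).
        U = []
        for x in xs:
            d = [abs(x - c) + eps for c in centers]
            U.append([1.0 / sum((dj / dk) ** power for dk in d) for dj in d])
        # Center update: mean of the samples weighted by u_ij^m.
        centers = [
            sum(U[i][j] ** m * xs[i] for i in range(len(xs)))
            / sum(U[i][j] ** m for i in range(len(xs)))
            for j in range(len(centers))
        ]
    return centers, U


# Hypothetical speeds in km/h for two groups of similar-sized vehicles.
speeds = [58, 60, 62, 88, 90, 92]
centers, U = fuzzy_c_means(speeds, centers=[50, 100])
```

Each row of `U` sums to 1, so a vehicle with an ambiguous speed receives partial membership in both clusters instead of a hard label.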

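The classification method was evaluated with 10 hours of real highway vehicle images, classifying vehicles into four types: private cars, light trailers, buses, and heavy trailers. The classification accuracy reached 96.5%.

Another challenge in applying machine learning to automatic background processing and feature extraction is that different parts of a passing car image are processed indiscriminately, which degrades performance [61], [97].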

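Zhao et al. focus on this problem, in which key parts of the car image may be neglected [77]. Their work is motivated by the human visual system, which distinguishes the key parts of an image from the background, an ability known as the multi-glimpse and visual attention mechanism [98].

This remarkable ability to focus only on the relevant parts of an image lets humans classify images very accurately.

The key idea of their work is therefore to use a visual attention mechanism to generate a focused image first and then feed that image to a CNN for more accurate vehicle classification.

In their experiments, a vehicle was classified into one of five types: sedan, van, truck, SUV, and coach. The classification accuracy reached 97.9%.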

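Theagarajan et al. observe that machine learning algorithms work effectively only with an extremely large amount of image data [78].

The authors also find that most camera-based classification systems are built on small traffic data sets that do not adequately account for variation in weather conditions, camera viewpoints, and road configurations. To address this problem, they develop a deep-network-based vehicle classification mechanism that uses the largest data set known to the research community to date. The data set contains 786,702 vehicle images from cameras at 8,000 different locations in the US and Canada. With this large amount of data, they classify vehicles into 11 types: articulated truck, background, bus, bicycle, car, motorcycle, non-motorized vehicle, pedestrian, pickup truck, single-unit truck, and work van.

The classification accuracy reached 97.8%.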

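Kim and Lim [79] use the same data set as [78]. Unlike other CNN-based studies, the authors apply a data augmentation technique to improve performance on vehicle types that have different sample sizes.

The authors also apply a weighting mechanism that associates a weight with each vehicle type. The classification accuracy was 97.8%.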
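One common way to assign a weight per vehicle type is inverse class frequency. The summary above does not say which weighting scheme Kim and Lim use, so the sketch below is only one possible instance, and `inverse_frequency_weights` is a hypothetical name.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count).

    Rare classes get weights above 1 and frequent classes get weights
    below 1, so each class contributes equally to a weighted loss.
    """
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * cnt) for cls, cnt in counts.items()}


# Hypothetical imbalanced label set.
labels = ["car"] * 8 + ["bus"] * 2
weights = inverse_frequency_weights(labels)
print(weights)  # {'car': 0.625, 'bus': 2.5}
```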

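Liu et al. [80] also address the problem of an imbalanced data set. Specifically, to increase the number of samples for certain vehicle types, they apply various data augmentation techniques such as random rotation, cropping, flipping, and shifting, and they build an ensemble of CNN models based on parameters obtained from the augmented data set. The proposed work was tested on the MIO-TCD classification challenge data set, which divides vehicles into 11 types.

The classification accuracy reached 97.7%.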
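Some of the augmentation operations named above, together with a simple ensemble step, can be sketched on images stored as nested lists. Rotation is omitted for brevity, and averaging the models' class probabilities is an assumed combination scheme, since the summary does not state how Liu et al. combine their models.

```python
def hflip(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def shift_right(img, k, fill=0):
    """Shift pixels k columns to the right, padding the left edge with `fill`."""
    return [[fill] * k + row[:len(row) - k] for row in img]


def crop(img, top, left, height, width):
    """Cut out a height x width window whose top-left corner is (top, left)."""
    return [row[left:left + width] for row in img[top:top + height]]


def ensemble_average(prob_lists):
    """Average the per-class probabilities predicted by several models."""
    n = len(prob_lists)
    return [sum(col) / n for col in zip(*prob_lists)]


img = [[1, 2, 3],
       [4, 5, 6]]
augmented = [hflip(img), shift_right(img, 1), crop(img, 0, 1, 2, 2)]
```

Applying such transforms mainly to under-represented vehicle types increases their sample count without collecting new images.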

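Vehicle occlusion is another challenge in applying machine learning algorithms to camera-based vehicle classification. Chang et al. propose an effective model based on recursive segmentation and convex hull (RSCH) to address this problem [81].

Assuming that a vehicle is a convex region, the authors derive a decomposition optimization model that separates vehicles from a multi-vehicle occlusion. After the occlusion is resolved, a conventional CNN classifies the vehicles. Experiments used the CompCars data set [99], which consists of 136,726 vehicle images of five types: sedan, SUV, van, bus, and truck. On this data set, the authors achieved a classification accuracy of 97.6%.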
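The convexity assumption can be illustrated with a convex hull computation. The sketch below is not the RSCH model. It only computes the ratio between a blob's area and the area of its convex hull, which falls below 1 when the blob is not convex, as can happen when two vehicles merge into one blob. The contours are made up, and the hull uses Andrew's monotone chain algorithm.

```python
def cross(o, a, b):
    """Z-component of the cross product of vectors OA and OB."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def polygon_area(poly):
    """Area of a simple polygon via the shoelace formula."""
    n = len(poly)
    twice = sum(poly[i][0] * poly[(i + 1) % n][1]
                - poly[(i + 1) % n][0] * poly[i][1] for i in range(n))
    return abs(twice) / 2.0


def convexity(contour):
    """Blob area divided by the area of its convex hull (1.0 means convex)."""
    return polygon_area(contour) / polygon_area(convex_hull(contour))


single = [(0, 0), (4, 0), (4, 2), (0, 2)]                 # one rectangular vehicle
merged = [(0, 0), (4, 0), (4, 2), (2, 2), (2, 4), (0, 4)]  # L-shaped merged blob
```

Here `convexity(single)` is 1.0 and `convexity(merged)` is 12/14, so a low ratio flags a blob that may contain more than one vehicle and needs to be split before classification.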

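Some vehicle classification systems integrate a camera with other types of sensors. Hasnat et al. significantly improve classification accuracy by integrating a camera with optical sensors [82].

They call this a hybrid classification system. Specifically, the system includes an optical-sensor-based classifier and a CNN-based classifier. They then apply gradient boosting [100] to combine the decisions of these classifiers, building a stronger predictor on top of the base predictors. Vehicles are classified into five classes: light (height less than 2 m), intermediate (height between 2 m and 3 m), heavy (height greater than 3 m), heavy vehicles with more than two axles, and motorcycles.

The classification accuracy was 99.0%.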

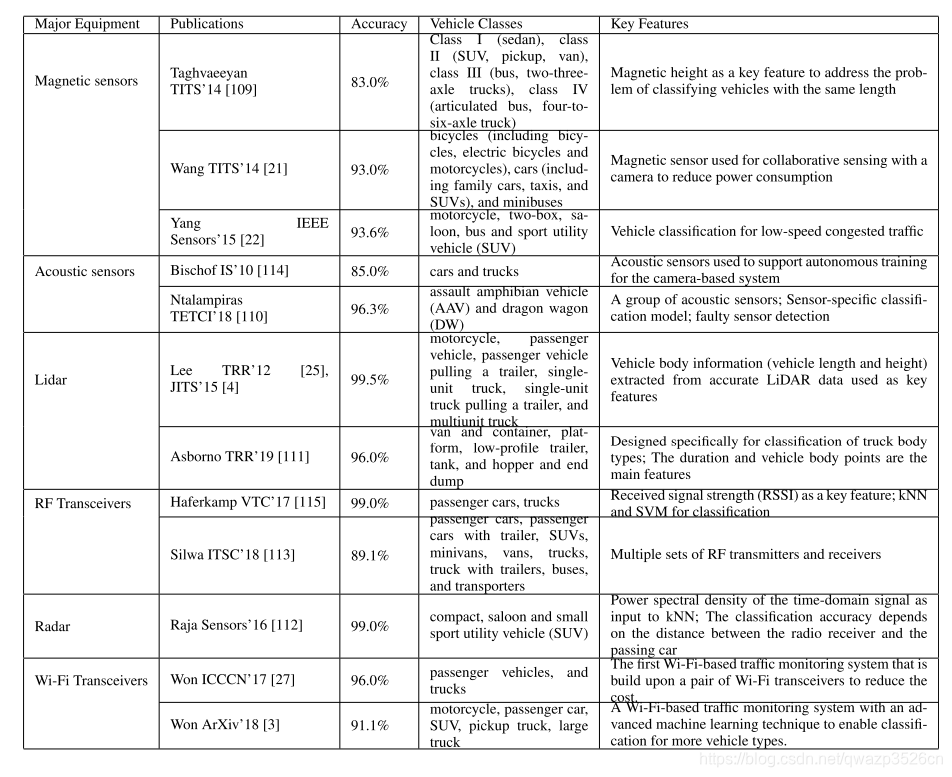

3) Side-roadway-based

4) References:

[1] M. Won, T. Park, and S. H. Son, ‘‘Toward mitigating phantom jam using vehicle-to-vehicle communication,’’ IEEE Trans. Intell. Transp. Syst., vol. 18, no. 5, pp. 1313–1324, May 2017.

[2] FHWA. The 2016 Traffic Monitoring Guide. Accessed: Apr. 12, 2019. [Online]. Available: https://www.fhwa.dot.gov/policyinformation/tmguide/tmg_fhwa_pl_17_003.pdf

[3] M. Won, S. Sahu, and K.-J. Park, ‘‘DeepWiTraffic: Low cost WiFi-based traffic monitoring system using deep learning,’’ 2018, arXiv:1812.08208. [Online]. Available: http://arxiv.org/abs/1812.08208

[4] H. Lee and B. Coifman, ‘‘Using LiDAR to validate the performance of vehicle classification stations,’’ J. Intell. Transp. Syst., vol. 19, no. 4, pp. 355–369, Oct. 2015.

[5] FHWA. The 2016 Traffic Detector Handbook. Accessed: Apr. 12, 2019. [Online]. Available: https://www.fhwa.dot.gov/publications/research/operations/its/06108/06108.pdf

[6] R. Tyburski, ‘‘A review of road sensor technology for monitoring vehicle traffic,’’ Inst. Transp. Eng. J., vol. 59, no. 8, pp. 27–29, 1988.

[7] S. R. E. Datondji, Y. Dupuis, P. Subirats, and P. Vasseur, ‘‘A survey of vision-based traffic monitoring of road intersections,’’ IEEE Trans. Intell. Transp. Syst., vol. 17, no. 10, pp. 2681–2698, Oct. 2016.

[8] B. Tian, Q. Yao, Y. Gu, K. Wang, and Y. Li, ‘‘Video processing techniques for traffic flow monitoring: A survey,’’ in Proc. 14th Int. IEEE Conf. Intell. Transp. Syst. (ITSC), Oct. 2011, pp. 1103–1108.

[9] R. M. Inigo, ‘‘Traffic monitoring and control using machine vision: A survey,’’ IEEE Trans. Ind. Electron., vol. IE-32, no. 3, pp. 177–185, Aug. 1985.

[10] K. Yousaf, A. Iftikhar, and A. Javed, ‘‘Comparative analysis of automatic vehicle classification techniques: A survey,’’ Int. J. Image, Graph. Signal Process., vol. 4, no. 9, pp. 52–59, Sep. 2012.

[11] S. Kul, S. Eken, and A. Sayar, ‘‘A concise review on vehicle detection and classification,’’ in Proc. Int. Conf. Eng. Technol. (ICET), Aug. 2017, pp. 1–4.

[12] N. K. Jain, R. Saini, and P. Mittal, ‘‘A review on traffic monitoring system techniques,’’ in Soft Computing: Theories and Applications. Singapore: Springer, 2019, pp. 569–577.

[13] A. Puri, ‘‘A survey of unmanned aerial vehicles (UAV) for traffic surveillance,’’ Dept. Comput. Sci. Eng., Univ. South Florida, Tampa, FL, USA, Tech. Rep., 2005, pp. 1–29.

[14] K. Kanistras, G. Martins, M. J. Rutherford, and K. P. Valavanis, ‘‘Survey of unmanned aerial vehicles (UAVs) for traffic monitoring,’’ in Proc. Int. Conf. Unmanned Aircr. Syst. (ICUAS), 2013, pp. 221–234.

[15] M. Bernas, B. Płaczek, W. Korski, P. Loska, J. Smyła, and P. Szymała, ‘‘A survey and comparison of low-cost sensing technologies for road traffic monitoring,’’ Sensors, vol. 18, no. 10, p. 3243, 2018.

[16] S. A. Rajab, A. Mayeli, and H. H. Refai, ‘‘Vehicle classification and accurate speed calculation using multi-element piezoelectric sensor,’’ in Proc. IEEE Intell. Vehicles Symp. Proc., Jun. 2014, pp. 894–899.

[17] M. Bottero, B. D. Chiara, and F. P. Deflorio, ‘‘Wireless sensor networks for traffic monitoring in a logistic centre,’’ Transp. Res. C, Emerg. Technol., vol. 26, pp. 99–124, Jan. 2013.

[18] C. Xu, Y. Wang, X. Bao, and F. Li, ‘‘Vehicle classification using an imbalanced dataset based on a single magnetic sensor,’’ Sensors, vol. 18, no. 6, p. 1690, 2018.

[19] M. Stocker, M. Rönkkö, and M. Kolehmainen, ‘‘Situational knowledge representation for traffic observed by a pavement vibration sensor network,’’ IEEE Trans. Intell. Transp. Syst., vol. 15, no. 4, pp. 1441–1450, Aug. 2014.

[20] S. Meta and M. G. Cinsdikici, ‘‘Vehicle-classification algorithm based on component analysis for single-loop inductive detector,’’ IEEE Trans. Veh. Technol., vol. 59, no. 6, pp. 2795–2805, Jul. 2010.

[21] R. Wang, L. Zhang, K. Xiao, R. Sun, and L. Cui, ‘‘EasiSee: Realtime vehicle classification and counting via low-cost collaborative sensing,’’ IEEE Trans. Intell. Transp. Syst., vol. 15, no. 1, pp. 414–424, Feb. 2014.

[22] B. Yang and Y. Lei, ‘‘Vehicle detection and classification for low-speed congested traffic with anisotropic magnetoresistive sensor,’’ IEEE Sensors J., vol. 15, no. 2, pp. 1132–1138, Feb. 2015.

[23] W. Ma, D. Xing, A. McKee, R. Bajwa, C. Flores, B. Fuller, and P. Varaiya, ‘‘A wireless accelerometer-based automatic vehicle classification prototype system,’’ IEEE Trans. Intell. Transp. Syst., vol. 15, no. 1, pp. 104–111, Feb. 2014.

[24] J. George, L. Mary, and K. S. Riyas, ‘‘Vehicle detection and classification from acoustic signal using ANN and KNN,’’ in Proc. Int. Conf. Control Commun. Comput. (ICCC), Dec. 2013, pp. 436–439.

[25] H. Lee and B. Coifman, ‘‘Side-fire LiDAR-based vehicle classification,’’ Transp. Res. Rec., J. Transp. Res. Board, vol. 2308, no. 1, pp. 173–183, Jan. 2012.

[26] E. Odat, J. S. Shamma, and C. Claudel, ‘‘Vehicle classification and speed estimation using combined passive infrared/ultrasonic sensors,’’ IEEE Trans. Intell. Transp. Syst., vol. 19, no. 5, pp. 1593–1606, May 2018.

[27] M. Won, S. Zhang, and S. H. Son, ‘‘WiTraffic: Low-cost and non-intrusive traffic monitoring system using WiFi,’’ in Proc. 26th Int. Conf. Comput. Commun. Netw. (ICCCN), Jul. 2017, pp. 1–9.

[28] T. Tang, S. Zhou, Z. Deng, L. Lei, and H. Zou, ‘‘Arbitrary-oriented vehicle detection in aerial imagery with single convolutional neural networks,’’ Remote Sens., vol. 9, no. 11, p. 1170, 2017.

[29] Z. Chen, T. Ellis, and S. A. Velastin, ‘‘Vehicle detection, tracking and classification in urban traffic,’’ in Proc. 15th Int. IEEE Conf. Intell. Transp. Syst., Sep. 2012, pp. 951–956.

[30] C. M. Bautista, C. A. Dy, M. I. Manalac, R. A. Orbe, and M. Cordel, ‘‘Convolutional neural network for vehicle detection in low resolution traffic videos,’’ in Proc. IEEE Region Symp. (TENSYMP), May 2016, pp. 277–281.

[31] B. Chidlovskii, G. Csurka, and J. Rodriguez-Serrano, ‘‘Vehicle type classification from laser scans with global alignment kernels,’’ in Proc. 17th Int. IEEE Conf. Intell. Transp. Syst. (ITSC), Oct. 2014, pp. 2840–2845.

[32] H. Li, H. Dong, L. Jia, and M. Ren, ‘‘Vehicle classification with single multi-functional magnetic sensor and optimal MNS-based CART,’’ Measurement, vol. 55, pp. 142–152, Sep. 2014.

[33] F. Li and Z. Lv, ‘‘Reliable vehicle type recognition based on information fusion in multiple sensor networks,’’ Comput. Netw., vol. 117, pp. 76–84, Apr. 2017.

[34] W. Balid, H. Tafish, and H. H. Refai, ‘‘Intelligent vehicle counting and classification sensor for real-time traffic surveillance,’’ IEEE Trans. Intell. Transp. Syst., vol. 19, no. 6, pp. 1784–1794, Jun. 2018.

[35] H. Dong, X. Wang, C. Zhang, R. He, L. Jia, and Y. Qin, ‘‘Improved robust vehicle detection and identification based on single magnetic sensor,’’ IEEE Access, vol. 6, pp. 5247–5255, 2018.

[36] R. Bajwa, R. Rajagopal, P. Varaiya, and R. Kavaler, ‘‘In-pavement wireless sensor network for vehicle classification,’’ in Proc. Int. Conf. Inf. Process. Sensor Netw. (IPSN), 2011, pp. 85–96.

[37] H. Zhao, D. Wu, M. Zeng, and S. Zhong, ‘‘A vibration-based vehicle classification system using distributed optical sensing technology,’’ Transp. Res. Rec., J. Transp. Res. Board, vol. 2672, no. 43, pp. 12–23, Dec. 2018.

[38] G. Jin, B. Ye, Y. Wu, and F. Qu, ‘‘Vehicle classification based on seismic signatures using convolutional neural network,’’ IEEE Geosci. Remote Sens. Lett., vol. 16, no. 4, pp. 628–632, Apr. 2019.

[39] A. Tok and S. G. Ritchie, ‘‘Vector classification of commercial vehicles using a high-fidelity inductive loop detection system,’’ in Proc. 89th Annu. Meeting Transp. Res. Board, Washington, DC, USA, 2010, pp. 1–22.

[40] S.-T. Jeng, L. Chu, and S. Hernandez, ‘‘Wavelet–k nearest neighbor vehicle classification approach with inductive loop signatures,’’ Transp. Res. Rec., vol. 2380, no. 1, pp. 72–80, 2013.

[41] FHWA. FHWA Vehicle Types. Accessed: Sep. 24, 2019. [Online]. Available: http://www.fhwa.dot.gov/policy/ohpi/vehclass.htm

[42] J. Lamas-Seco, P. Castro, A. Dapena, and F. Vazquez-Araujo, ‘‘Vehicle classification using the discrete Fourier transform with traffic inductive sensors,’’ Sensors, vol. 15, no. 10, pp. 27201–27214, 2015.

[43] H. X. Liu and J. Sun, ‘‘Length-based vehicle classification using event-based loop detector data,’’ Transp. Res. C, Emerg. Technol., vol. 38, pp. 156–166, Jan. 2014.

[44] L. Wu and B. Coifman, ‘‘Vehicle length measurement and length-based vehicle classification in congested freeway traffic,’’ Transp. Res. Rec., J. Transp. Res. Board, vol. 2443, no. 1, pp. 1–11, Jan. 2014.

[45] L. Wu and B. Coifman, ‘‘Improved vehicle classification from dual-loop detectors in congested traffic,’’ Transp. Res. C, Emerg. Technol., vol. 46, pp. 222–234, Sep. 2014.

[46] S. V. Hernandez, A. Tok, and S. G. Ritchie, ‘‘Integration of weigh-in-motion (WIM) and inductive signature data for truck body classification,’’ Transp. Res. C, Emerg. Technol., vol. 68, pp. 1–21, Jul. 2016.

[47] M. Al-Tarawneh, Y. Huang, P. Lu, and D. Tolliver, ‘‘Vehicle classification system using in-pavement fiber Bragg grating sensors,’’ IEEE Sensors J., vol. 18, no. 7, pp. 2807–2815, Apr. 2018.

[48] P. T. Martin, Y. Feng, and X. Wang, ‘‘Detector technology evaluation,’’ Mountain-Plains Consortium, Fargo, ND, USA, MPC Rep. 03-154, 2003.

[49] B. Coifman and S. Neelisetty, ‘‘Improved speed estimation from single-loop detectors with high truck flow,’’ J. Intell. Transp. Syst., vol. 18, no. 2, pp. 138–148, Apr. 2014.

[50] S.-T. Jeng and L. Chu, ‘‘A high-definition traffic performance monitoring system with the inductive loop detector signature technology,’’ in Proc. 17th Int. IEEE Conf. Intell. Transp. Syst. (ITSC), Oct. 2014, pp. 1820–1825.

[51] D. Svozil, V. Kvasnicka, and J. Pospichal, ‘‘Introduction to multi-layer feed-forward neural networks,’’ Chemometric Intell. Lab. Syst., vol. 39, no. 1, pp. 43–62, Nov. 1997.

[52] D. F. Walnut, An Introduction to Wavelet Analysis. Boston, MA, USA: Birkhäuser, 2013.

[53] P. Cheevarunothai, Y. Wang, and N. L. Nihan, ‘‘Identification and correction of dual-loop sensitivity problems,’’ Transp. Res. Rec., J. Transp. Res. Board, vol. 1945, no. 1, pp. 73–81, Jan. 2006.

[54] V. G. Kovvali, V. Alexiadis, and P. Zhang, ‘‘Video-based vehicle trajectory data collection,’’ in Proc. 86th Annu. Meeting Transp. Res. Board, 2017, pp. 1–8.

[55] G. F. Newell, ‘‘A simplified car-following theory: A lower order model,’’ Transp. Res. B, Methodol., vol. 36, no. 3, pp. 195–205, Mar. 2002.

[56] S. Y. Cheung, S. Coleri, B. Dundar, S. Ganesh, C.-W. Tan, and P. Varaiya, ‘‘Traffic measurement and vehicle classification with single magnetic sensor,’’ Transp. Res. Rec., J. Transp. Res. Board, vol. 1917, no. 1, pp. 173–181, Jan. 2005.

[57] R. Wyman and G. Braley, ‘‘Field evaluation of FWHA vehicle classification categories, executive summary, materials and research,’’ Maine Dept. Transp., Bureau Highways, Mater. Res. Division, Washington, DC, USA, Tech. Paper 84-5, 1985.

[58] J. M. Keller, M. R. Gray, and J. A. Givens, ‘‘A fuzzy K-nearest neighbor algorithm,’’ IEEE Trans. Syst., Man, Cybern., vol. SMC-15, no. 4, pp. 580–585, Aug. 1985.

[59] J. A. Suykens and J. Vandewalle, ‘‘Least squares support vector machine classifiers,’’ Neural Process. Lett., vol. 9, no. 3, pp. 293–300, 1999.

[60] A. T. C. Goh, ‘‘Back-propagation neural networks for modeling complex systems,’’ Artif. Intell. Eng., vol. 9, no. 3, pp. 143–151, Jan. 1995.

[61] A. Krizhevsky, I. Sutskever, and G. E. Hinton, ‘‘ImageNet classification with deep convolutional neural networks,’’ in Proc. Adv. Neural Inf. Process. Syst., 2012, pp. 1097–1105.

[62] D. Steinberg and P. Colla, ‘‘CART: Classification and regression trees,’’ in The Top Ten Algorithms in Data Mining, vol. 9. San Diego, CA, USA: Salford Systems, 2009.

[63] T. Chen and C. Guestrin, ‘‘XGBoost: A scalable tree boosting system,’’ in Proc. 22nd ACM SIGKDD Int. Conf. Knowl. Discovery Data Mining (KDD), 2016, pp. 785–794.

[64] S. Haykin, Neural Networks: A Comprehensive Foundation. Upper Saddle River, NJ, USA: Prentice-Hall, 1994.

[65] X. Jin, S. Sarkar, A. Ray, S. Gupta, and T. Damarla, ‘‘Target detection and classification using seismic and PIR sensors,’’ IEEE Sensors J., vol. 12, no. 6, pp. 1709–1718, Jun. 2012.

[66] K. Hornik, M. Stinchcombe, and H. White, ‘‘Multilayer feedforward networks are universal approximators,’’ Neural Netw., vol. 2, no. 5, pp. 359–366, Jan. 1989.

[67] D. F. Specht, ‘‘Probabilistic neural networks,’’ Neural Netw., vol. 3, no. 1, pp. 109–118, Jan. 1990.

[68] L. I. Kuncheva, Combining Pattern Classifiers: Methods and Algorithms. Hoboken, NJ, USA: Wiley, 2004.

[69] R. Malla, A. Sen, and N. Garrick, ‘‘A special fiber optic sensor for measuring wheel loads of vehicles on highways,’’ Sensors, vol. 8, no. 4, pp. 2551–2568, 2008.

[70] N. C. Mithun, N. U. Rashid, and S. M. M. Rahman, ‘‘Detection and classification of vehicles from video using multiple time-spatial images,’’ IEEE Trans. Intell. Transp. Syst., vol. 13, no. 3, pp. 1215–1225, Sep. 2012.

[71] L. Unzueta, M. Nieto, A. Cortes, J. Barandiaran, O. Otaegui, and P. Sanchez, ‘‘Adaptive multicue background subtraction for robust vehicle counting and classification,’’ IEEE Trans. Intell. Transp. Syst., vol. 13, no. 2, pp. 527–540, Jun. 2012.

[72] Z. Dong, Y. Wu, M. Pei, and Y. Jia, ‘‘Vehicle type classification using a semisupervised convolutional neural network,’’ IEEE Trans. Intell. Transp. Syst., vol. 16, no. 4, pp. 2247–2256, Aug. 2015.

[73] H. C. Karaimer, I. Cinaroglu, and Y. Bastanlar, ‘‘Combining shape-based and gradient-based classifiers for vehicle classification,’’ in Proc. IEEE 18th Int. Conf. Intell. Transp. Syst., Sep. 2015, pp. 800–805.

[74] H. Huttunen, F. S. Yancheshmeh, and K. Chen, ‘‘Car type recognition with deep neural networks,’’ in Proc. IEEE Intell. Vehicles Symp. (IV), Jun. 2016, pp. 1115–1120.

[75] Y. O. Adu-Gyamfi, S. K. Asare, A. Sharma, and T. Titus, ‘‘Automated vehicle recognition with deep convolutional neural networks,’’ Transp. Res. Rec., vol. 2645, no. 1, pp. 113–122, 2017.

[76] S. Javadi, M. Rameez, M. Dahl, and M. I. Pettersson, ‘‘Vehicle classification based on multiple fuzzy C-means clustering using dimensions and speed features,’’ Procedia Comput. Sci., vol. 126, pp. 1344–1350, Jan. 2018.

[77] D. Zhao, Y. Chen, and L. Lv, ‘‘Deep reinforcement learning with visual attention for vehicle classification,’’ IEEE Trans. Cognit. Develop. Syst., vol. 9, no. 4, pp. 356–367, Dec. 2017.

[78] R. Theagarajan, F. Pala, and B. Bhanu, ‘‘EDeN: Ensemble of deep networks for vehicle classification,’’ in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. Workshops (CVPRW), Jul. 2017, pp. 33–40.

[79] P.-K. Kim and K.-T. Lim, ‘‘Vehicle type classification using bagging and convolutional neural network on multi view surveillance image,’’ in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. Workshops (CVPRW), Jul. 2017, pp. 41–46.

[80] W. Liu, M. Zhang, Z. Luo, and Y. Cai, ‘‘An ensemble deep learning method for vehicle type classification on visual traffic surveillance sensors,’’ IEEE Access, vol. 5, pp. 24417–24425, 2017.

[81] J. Chang, L. Wang, G. Meng, S. Xiang, and C. Pan, ‘‘Vision-based occlusion handling and vehicle classification for traffic surveillance systems,’’ IEEE Intell. Transp. Syst. Mag., vol. 10, no. 2, pp. 80–92, Apr. 2018.

[82] A. Hasnat, N. Shvai, A. Meicler, P. Maarek, and A. Nakib, ‘‘New vehicle classification method based on hybrid classifiers,’’ in Proc. 25th IEEE Int. Conf. Image Process. (ICIP), Oct. 2018, pp. 3084–3088.

[83] X. Cao, C. Wu, P. Yan, and X. Li, ‘‘Linear SVM classification using boosting HOG features for vehicle detection in low-altitude airborne videos,’’ in Proc. 18th IEEE Int. Conf. Image Process., Sep. 2011, pp. 2421–2424.

[84] K. Liu and G. Mattyus, ‘‘Fast multiclass vehicle detection on aerial images,’’ IEEE Geosci. Remote Sens. Lett., vol. 12, no. 9, pp. 1938–1942, Sep. 2015.

[85] N. Audebert, B. Le Saux, and S. Lefèvre, ‘‘Segment-before-detect: Vehicle detection and classification through semantic segmentation of aerial images,’’ Remote Sens., vol. 9, no. 4, p. 368, 2017.

[86] Y. Tan, Y. Xu, S. Das, and A. Chaudhry, ‘‘Vehicle detection and classification in aerial imagery,’’ in Proc. 25th IEEE Int. Conf. Image Process. (ICIP), Oct. 2018, pp. 86–90.

[87] H. Sandhawalia, J. A. Rodriguez-Serrano, H. Poirier, and G. Csurka, ‘‘Vehicle type classification from laser scanner profiles: A benchmark of feature descriptors,’’ in Proc. 16th Int. IEEE Conf. Intell. Transp. Syst. (ITSC), Oct. 2013, pp. 517–522.

[88] B. L. Tseng, C.-Y. Lin, and J. R. Smith, ‘‘Real-time video surveillance for traffic monitoring using virtual line analysis,’’ in Proc. IEEE Int. Conf. Multimedia Expo, vol. 2, Aug. 2002, pp. 541–544.

[89] Z. Zivkovic and F. van der Heijden, ‘‘Efficient adaptive density estimation per image pixel for the task of background subtraction,’’ Pattern Recognit. Lett., vol. 27, no. 7, pp. 773–780, May 2006.

[90] Z. Chen, N. Pears, M. Freeman, and J. Austin, ‘‘Background subtraction in video using recursive mixture models, spatio-temporal filtering and shadow removal,’’ in Proc. Int. Symp. Vis. Comput. Berlin, Germany: Springer, 2009, pp. 1141–1150.

[91] J. Bergstra and Y. Bengio, ‘‘Random search for hyper-parameter optimization,’’ J. Mach. Learn. Res., vol. 13, pp. 281–305, Feb. 2012.

[92] A. Kumar and H. Daume, III, ‘‘Learning task grouping and overlap in multi-task learning,’’ 2012, arXiv:1206.6417. [Online]. Available: http://arxiv.org/abs/1206.6417
