在spark中存在隐式转换 将RDD转换成PairFunctionRDD

groupByKey

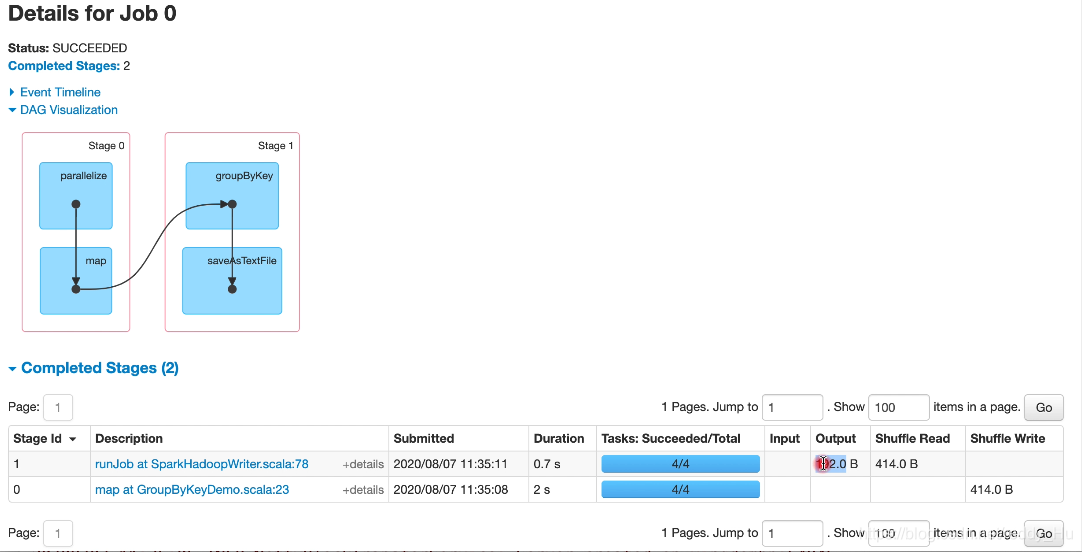

这个方法生成新的stage,并且源代码是写在PairRDDFunctions中,不是调用新建一个MapPartitionsRDD了,这个方法是map端和reduce端得交互,map端处理完数据会先将数据ShuffleWrite溢写到map端得磁盘,然后reduce端通过网络进行拉取ShuffleRead过来

之于为什么OutPut会变少,是因为输出的文件 key都合并了 没有之前那么多key了 但是value还是之前那么多

之于为什么OutPut会变少,是因为输出的文件 key都合并了 没有之前那么多key了 但是value还是之前那么多

还有一个问题值得讨论,就是怎么定的分区规则,其实如果不传一个分区方法,是有默认的分区方法的,就是HashPartitioner,和mapreduce用的是一样的方法.这个方法会跟去你传入分区数的最大值决定分几个区和决定他决定向谁取模

这个方法就是传进去一个分区器

package com.doit.spark.restart

import org.apache.spark.rdd.RDD

import org.apache.spark.{SparkConf, SparkContext}

object GroupByKey {

def main(args: Array[String]): Unit = {

val conf = new SparkConf().setAppName("MapPartitionsDemo").setMaster("local[*]")

val sc = new SparkContext(conf)

val words = sc.parallelize(List(

"spark", "flink", "hadoop", "kafka",

"spark", "hive", "hadoop", "kafka",

"spark", "flink", "hive", "kafka",

"spark", "flink", "hadoop", "kafka"

), 4)

val wordAndOne: RDD[(String, Int)] = words.map((_, 1))

val value: RDD[(String, Iterable[Int])] = wordAndOne.groupByKey(6)

value.saveAsTextFile("zhao")

sc.stop()

}

}

p0 (kafka,CompactBuffer(1, 1, 1, 1))

p1 (hadoop,CompactBuffer(1, 1, 1))

p2 (hive,CompactBuffer(1, 1))

p3 啥也没有

p4 (flink,CompactBuffer(1, 1, 1))

p5 (spark,CompactBuffer(1, 1, 1, 1))

/**

* Group the values for each key in the RDD into a single sequence. Hash-partitions the

* resulting RDD with into `numPartitions` partitions. The ordering of elements within

* each group is not guaranteed, and may even differ each time the resulting RDD is evaluated.

*

* @note This operation may be very expensive. If you are grouping in order to perform an

* aggregation (such as a sum or average) over each key, using `PairRDDFunctions.aggregateByKey`

* or `PairRDDFunctions.reduceByKey` will provide much better performance.

*

* @note As currently implemented, groupByKey must be able to hold all the key-value pairs for any

* key in memory. If a key has too many values, it can result in an `OutOfMemoryError`.

*/

def groupByKey(numPartitions: Int): RDD[(K, Iterable[V])] = self.withScope {

groupByKey(new HashPartitioner(numPartitions))

/**

* Group the values for each key in the RDD into a single sequence. Allows controlling the

* partitioning of the resulting key-value pair RDD by passing a Partitioner.

* The ordering of elements within each group is not guaranteed, and may even differ

* each time the resulting RDD is evaluated.

*

* @note This operation may be very expensive. If you are grouping in order to perform an

* aggregation (such as a sum or average) over each key, using `PairRDDFunctions.aggregateByKey`

* or `PairRDDFunctions.reduceByKey` will provide much better performance.

*

* @note As currently implemented, groupByKey must be able to hold all the key-value pairs for any

* key in memory. If a key has too many values, it can result in an `OutOfMemoryError`.

*/

def groupByKey(partitioner: Partitioner): RDD[(K, Iterable[V])] = self.withScope {

// groupByKey shouldn't use map side combine because map side combine does not

// reduce the amount of data shuffled and requires all map side data be inserted

// into a hash table, leading to more objects in the old gen.

val createCombiner = (v: V) => CompactBuffer(v)

val mergeValue = (buf: CompactBuffer[V], v: V) => buf += v

val mergeCombiners = (c1: CompactBuffer[V], c2: CompactBuffer[V]) => c1 ++= c2

val bufs = combineByKeyWithClassTag[CompactBuffer[V]](

createCombiner, mergeValue, mergeCombiners, partitioner, mapSideCombine = false)

bufs.asInstanceOf[RDD[(K, Iterable[V])]]

}

/**

* A [[org.apache.spark.Partitioner]] that implements hash-based partitioning using

* Java's `Object.hashCode`.

*

* Java arrays have hashCodes that are based on the arrays' identities rather than their contents,

* so attempting to partition an RDD[Array[_]] or RDD[(Array[_], _)] using a HashPartitioner will

* produce an unexpected or incorrect result.

*/

class HashPartitioner(partitions: Int) extends Partitioner {

require(partitions >= 0, s"Number of partitions ($partitions) cannot be negative.")

def numPartitions: Int = partitions

def getPartition(key: Any): Int = key match {

case null => 0

case _ => Utils.nonNegativeMod(key.hashCode, numPartitions)

}

override def equals(other: Any): Boolean = other match {

case h: HashPartitioner =>

h.numPartitions == numPartitions

case _ =>

false

}

override def hashCode: Int = numPartitions

}

val createCombiner = (v: V) => CompactBuffer(v)

val mergeValue = (buf: CompactBuffer[V], v: V) => buf += v

val mergeCombiners = (c1: CompactBuffer[V], c2: CompactBuffer[V]) => c1 ++= c2

这个方法的实现主要是new了个 ShuffleRDD,第一步先在map端创建了个CompactBuffer(v),然后局部聚合向CompactBuffer(v)加入同一分区内相同key的所有value,再到reduce端全局聚合加入所有value

groupBy

这个方法需要传两个参数,一个是分组方法,一个是分区器,最终得到的是(调用函数之后的结果,compactBuffer(未经处理的样子))再进行groupByKey

def groupBy[K](f: T => K, p: Partitioner)(implicit kt: ClassTag[K], ord: Ordering[K] = null)

: RDD[(K, Iterable[T])] = withScope {

val cleanF = sc.clean(f)

this.map(t => (cleanF(t), t)).groupByKey(p)

}

package com.doit.spark.restart

import org.apache.spark.{SparkConf, SparkContext}

object GroupBy {

def main(args: Array[String]): Unit = {

val conf = new SparkConf().setAppName("MapPartitionsDemo").setMaster("local[*]")

val sc = new SparkContext(conf)

val words = sc.parallelize(List(

"spark", "flink", "hadoop", "kafka",

"spark", "hive", "hadoop", "kafka",

"spark", "flink", "hive", "kafka",

"spark", "flink", "hadoop", "kafka"

), 4)

val wordAndOne = words.map((_, 1))

val grouped = wordAndOne.groupBy(_._1)

//用GroupByKey实现GroupBy

wordAndOne.map(x =>(x._1,x)).groupByKey()

}

}

Distinct

这个方法是用来去重的, List(1, 1, 2, 2, 2, 3, 4, 5, 4, 3, 2, 4, 2, 5)=>(1,2,3,4,5)

case _ => map(x => (x, null)).reduceByKey((x, _) => x, numPartitions).map(_._1)

这个是实现方法,先把数据转成二维元组,然后通过调用reduceByKey传进去的函数只进行拿出每个key的第一个元组 不进行处理,原本的reduceByKey传入和函数常为_+_ 是reduce这个方法底层在map端和reduce分别进行了两次合并的事先,就是数值相加,这次只调出一个value也不进行相加,所以就只有null,再取出每个不同key元组的key 完成去重

183

183

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?