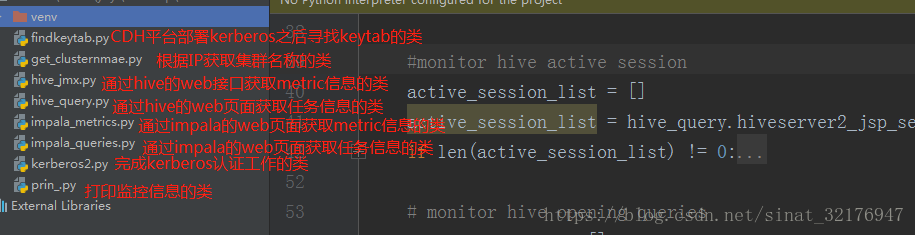

I. Project Layout

II. How It Works

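Hive and Impala are two of the most commonly used big-data query tools. The main difference between them is that Hive suits workloads without strict real-time requirements and needs relatively few resources, whereas Impala, thanks to its entirely different architecture, answers queries very quickly but consumes correspondingly more resources, so it suits workloads with strict real-time requirements.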

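During testing I found that sometimes, even when a shell command shows the Hive or Impala process as running, its web page cannot be reached. That does not really count as running normally, because internally the service still cannot complete any work. We can take advantage of this: use the HTML parsing library BeautifulSoup to read the information on the Hive and Impala web pages, convert it into strings, and feed those to Grafana, which gives us monitoring.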
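
The idea that "the process exists" and "the web page answers" are separate checks can be sketched as follows. This is only a minimal illustration, assuming Python 3 and the standard library; the function name `web_ui_alive` and its `opener` parameter are made up here and are not part of the project. On a Kerberos-protected page an unauthenticated request is rejected, so this bare check would report such a page as down.

```python
import urllib.error
import urllib.request


def web_ui_alive(url, timeout=5, opener=urllib.request.urlopen):
    """Return True only if the page at `url` answers with HTTP 200.

    `opener` exists so the check can be exercised without a network;
    by default it is urllib.request.urlopen.
    """
    try:
        resp = opener(url, timeout=timeout)
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or an HTTP error status
        return False
    try:
        return resp.status == 200
    finally:
        resp.close()
```

A process check (for example via `ps`) and this page check together distinguish "not started" from "started but not serving".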

I am a beginner myself, and the code does no more than make the feature work, so I hope the experts will go easy on me.

III. Code Walkthrough

1. findkeytab.py

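    import os
    import re


    def findkeytab():
        # CDH process directory
        pre = '/var/run/cloudera-scm-agent/process'
        # CDH generates a new process number every time a process restarts,
        # so match the newest (largest-numbered) HiveServer2 directory
        pattern = re.compile(r'(.*?)hive-HIVESERVER2$')
        latest_id = -1
        latest_dir = None
        for name in os.listdir(pre):
            if pattern.match(name):
                process_id = int(name.split('-')[0])
                if process_id > latest_id:
                    latest_id = process_id
                    latest_dir = name
        if latest_dir is None:
            raise IOError('no hive-HIVESERVER2 directory found under ' + pre)
        # Build the keytab path
        return pre + '/' + latest_dir + '/hive.keytab'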
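
The "largest process number wins" rule can be tried without a CDH host. The sketch below assumes directory names of the form `<number>-hive-HIVESERVER2`; the helper name `newest_process_dir` and the sample listing are invented for illustration.

```python
import re


def newest_process_dir(names, role="hive-HIVESERVER2"):
    """Return the entry with the largest numeric prefix, or None.

    `names` stands in for os.listdir() on the agent's process directory.
    """
    pattern = re.compile(r"(\d+)-" + re.escape(role) + r"$")
    best_id, best_name = -1, None
    for name in names:
        m = pattern.match(name)
        if m and int(m.group(1)) > best_id:
            best_id, best_name = int(m.group(1)), name
    return best_name


# Hypothetical directory listing
sample = ["98-hive-HIVESERVER2", "1204-hive-HIVESERVER2", "1300-impala-IMPALAD"]
print(newest_process_dir(sample))
```

The prefixes are compared as integers on purpose: compared as strings, `"98"` would sort after `"1204"`.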

2. kerberos2.py

    import requests
    import commands
    import kerberos


    class KerberosTicket:
        def __init__(self, service, keytab, principal):
            # Authenticate the Kerberos user by running kinit
            kt_cmd = 'kinit -kt ' + keytab + ' ' + principal
            (status, output) = commands.getstatusoutput(kt_cmd)
            if status != 0:
                print("kinit ERROR:")
                print(output)
                exit()
            __, krb_context = kerberos.authGSSClientInit(service)
            kerberos.authGSSClientStep(krb_context, "")
            self._krb_context = krb_context
            self.auth_header = ("Negotiate " +
                                kerberos.authGSSClientResponse(krb_context))

        def verify_response(self, auth_header):
            # Handle comma-separated lists of authentication fields
            for field in auth_header.split(","):
                kind, __, details = field.strip().partition(" ")
                if kind.lower() == "negotiate":
                    auth_details = details.strip()
                    break
            else:
                raise ValueError("Negotiate not found in %s" % auth_header)
            # Finish the Kerberos handshake
            krb_context = self._krb_context
            if krb_context is None:
                raise RuntimeError("Ticket already used for verification")
            self._krb_context = None
            kerberos.authGSSClientStep(krb_context, auth_details)
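
The header parsing inside `verify_response` is the only part that can be exercised without a KDC. Here it is isolated as a small sketch; the function name `negotiate_token` and the sample header values are hypothetical.

```python
def negotiate_token(www_authenticate):
    """Extract the token that follows "Negotiate" in a header value.

    The header may carry several comma-separated schemes, so each
    field is inspected in turn.
    """
    for field in www_authenticate.split(","):
        kind, _, details = field.strip().partition(" ")
        if kind.lower() == "negotiate":
            return details.strip()
    raise ValueError("Negotiate not found in %s" % www_authenticate)


# Made-up header value, for illustration only
print(negotiate_token('Basic realm="demo", Negotiate YIIabc=='))
```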

3. get_clustername.py

    #!/usr/bin/env python


    def enabled():
        return False


    # Predefined mapping between cluster names and IP ranges
    cluster_dict = {
        'cluster1': ["192.168.159.11-13"]
    }


    def expand_ip_range(spec):
        # "192.168.159.11-13" becomes the three addresses .11, .12 and .13;
        # an entry without "-" is treated as a single address
        prefix, _, last = spec.rpartition('.')
        if '-' in last:
            start, end = [int(x) for x in last.split('-')]
        else:
            start = end = int(last)
        return [prefix + '.' + str(n) for n in range(start, end + 1)]


    def get_cluster_name(ip):
        # Determine the cluster name by string-matching the IP
        for cluster_key in cluster_dict:
            for spec in cluster_dict[cluster_key]:
                if ip in expand_ip_range(spec):
                    return cluster_key
        return "null"

4. hive_jmx.py

    import requests
    import kerberos

    # Output every metric value (for testing only)
    '''for i in range(len(js['beans'])):
        prename=js['beans'][i]['name'].split('=')[1]
        for key in (js['beans'][i]):
            if key == "modelerType":
                continue
            lastname=key
            name=prename+'.'+lastname
            timestamp = str(int(time.time()))
            value = str(js['beans'][i][key])
            tag='hive'
            print name+' '+timestamp+' '+value+' '+tag'''


    # Output the selected metric values
    def hiveserver2_jmx(url):
        # Find the wanted attribute on Hive's metrics page
        def jmxana(i, metric_name, value_name):
            result = []
            tags = {}
            if js['beans'][i]['name'] == "metrics:name=" + metric_name:
                result.append(metric_name)
                result.append(str(js['beans'][i][value_name]))
                result.append(tags)
            return result

        # Kerberos authentication
        __, krb_context = kerberos.authGSSClientInit("HTTP/node2@HADOOP.COM")
        kerberos.authGSSClientStep(krb_context, "")
        negotiate_details = kerberos.authGSSClientResponse(krb_context)
        headers = {"Authorization": "Negotiate " + negotiate_details}
        r = requests.get(url, headers=headers, verify=False)
        js = r.json()
        jmx_list = []
        # The attributes to monitor are kept in a file, one
        # "<metric name> <attribute name>" pair per line
        with open("/root/test.txt") as f:
            lines = f.readlines()
        for i in range(len(js['beans'])):
            for line in lines:
                parts = line.split()
                if len(parts) < 2:
                    continue  # skip blank or malformed lines
                tmp_list = jmxana(i, parts[0], parts[1])
                if len(tmp_list) != 0:
                    jmx_list.append(tmp_list)
        return jmx_list
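
To make the shape of the `/jmx` payload and of the attribute file concrete, here is a self-contained sketch. The bean names, attribute names and values in `SAMPLE` are invented, and `pick_metrics` is a hypothetical helper; unlike the nested loop above, it indexes the beans by name first, so results come back in the order of the attribute file.

```python
import json

# Hypothetical excerpt of a /jmx response; names and values are made up
SAMPLE = json.loads("""
{"beans": [
  {"name": "metrics:name=open_connections", "modelerType": "x", "Count": 3},
  {"name": "metrics:name=api_query", "modelerType": "x", "Mean": 12.5}
]}
""")


def pick_metrics(payload, wanted):
    """Return [metric, value] pairs for each (metric, attribute) in `wanted`."""
    by_name = {bean["name"]: bean for bean in payload["beans"]}
    picked = []
    for metric, attribute in wanted:
        bean = by_name.get("metrics:name=" + metric)
        if bean is not None and attribute in bean:
            picked.append([metric, str(bean[attribute])])
    return picked


# Each line of the attribute file holds "<metric> <attribute>"
file_lines = ["open_connections Count", "api_query Mean"]
wanted = [line.split() for line in file_lines]
print(pick_metrics(SAMPLE, wanted))
```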

5. hive_query.py

    import requests
    import kerberos
    import time
    from bs4 import BeautifulSoup

    last_query_list = []
    open_query_list = []
    active_session_list = []
    total_dict = {}
    latest_query_timestamp = 0

    # Date format used on the HiveServer2 page
    HIVE_DATE_FORMAT = '%a %b %d %H:%M:%S %Z %Y'


    # Fetch Hive's monitoring page and return it parsed by BeautifulSoup
    def getsoup(url):
        __, krb_context = kerberos.authGSSClientInit("HTTP/node2@HADOOP.COM")
        kerberos.authGSSClientStep(krb_context, "")
        negotiate_details = kerberos.authGSSClientResponse(krb_context)
        headers = {"Authorization": "Negotiate " + negotiate_details}
        r = requests.get(url, headers=headers, verify=False)
        return BeautifulSoup(r.content, "html5lib")


    # Get the session information from the page
    def hiveserver2_jsp_session(url):
        soup = getsoup(url)
        del active_session_list[:]
        for h2 in soup.findAll('h2'):
            if h2.string == 'Active Sessions':
                tab = h2.parent.find('table')
                for tr in tab.findAll('tr'):
                    row = []
                    tags = {}
                    count = 0
                    tds = tr.findAll('td')
                    for td in tds:
                        if len(tds) == 1:
                            # Single-cell row that holds the total
                            td_num = td.string.split(':')[1]
                            total_dict['Total number of sessions'] = td_num
                        else:
                            if count == 0:
                                tags['UserName'] = td.getText().strip()
                            elif count == 1:
                                tags['IP'] = td.getText().strip()
                            elif count == 2:
                                tags['OperationCount'] = td.getText().strip()
                            else:
                                row.append(td.getText().strip())
                        count += 1
                    if len(tags) != 0:
                        row.append(tags)
                    if len(row) != 0:
                        active_session_list.append(row)
        return active_session_list


    # Get the queries that are currently running
    def hiveserver2_jsp_open(url):
        soup = getsoup(url)
        del open_query_list[:]
        for h2 in soup.findAll('h2'):
            if h2.string == 'Open Queries':
                tab = h2.parent.find('table')
                for tr in tab.findAll('tr'):
                    row = []
                    tags = {}
                    tds = tr.findAll('td')
                    if len(tds) == 1:
                        open_query_num = tds[0].string.split(':')[1]
                        total_dict['Total number of open queries'] = open_query_num
                    if tr.find('a') is not None:
                        count = 0
                        for td in tds:
                            text = td.getText().strip()
                            if count == 0:
                                tags['UserName'] = text
                            elif count == 1:
                                row.append(text)
                            elif count == 2:
                                tags['ExecutionEngine'] = text
                            elif count == 3:
                                tags['State'] = text
                            elif count == 4:
                                timestamp = str(int(time.mktime(time.strptime(text, HIVE_DATE_FORMAT))))
                                tags['OpenedTimestamp'] = timestamp
                            elif count == 5:
                                tags['Opened'] = text
                            elif count == 6:
                                tags['Latency'] = text
                            else:
                                row.append(tr.find('a').get('href').split('=')[1])
                            count += 1
                        row.append(tags)
                        open_query_list.append(row)
        return open_query_list


    # Get the queries that have already finished
    def hiveserver2_jsp_last(url):
        global latest_query_timestamp
        soup = getsoup(url)
        if len(last_query_list) != 0:
            latest_query_timestamp = int(last_query_list[len(last_query_list) - 1][2]['ClosedTimestamp'])
        del last_query_list[:]
        for h2 in soup.findAll('h2'):
            if h2.string == 'Last Max 25 Closed Queries':
                tab = h2.parent.find('table')
                for tr in tab.findAll('tr'):
                    row = []
                    tags = {}
                    tds = tr.findAll('td')
                    if len(tds) == 1:
                        last_query_num = tds[0].string.split(':')[1]
                        total_dict['Total number of last queries'] = last_query_num
                    if tr.find('a') is not None:
                        count = 0
                        for td in tds:
                            text = td.getText().strip()
                            if count == 0:
                                tags['UserName'] = text
                            elif count == 1:
                                row.append(text)
                            elif count == 2:
                                tags['ExecutionEngine'] = text
                            elif count == 3:
                                tags['State'] = text
                            elif count == 4:
                                tags['Opened'] = text
                            elif count == 5:
                                timestamp = str(int(time.mktime(time.strptime(text, HIVE_DATE_FORMAT))))
                                tags['ClosedTimestamp'] = timestamp
                            elif count == 6:
                                tags['Latency'] = text
                            else:
                                row.append(tr.find('a').get('href').split('=')[1])
                            count += 1
                        row.append(tags)
                        closed = tags.get('ClosedTimestamp')
                        # To avoid duplicate output, skip anything that has
                        # already been reported
                        if closed is None or int(closed) <= latest_query_timestamp:
                            continue
                        else:
                            last_query_list.append(row)
        return last_query_list


    # Return the overall totals collected by the functions above
    def hiveserver2_jsp_total():
        return total_dict
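
The de-duplication rule for closed queries (only report rows closed after the newest timestamp already reported) is easier to see in isolation. The following is a minimal sketch with made-up rows; `only_new` is a hypothetical helper, not a function from the project.

```python
def only_new(rows, last_seen):
    """Keep rows closed after `last_seen`.

    Returns the kept rows together with the new high-water mark, which
    the caller passes back in on the next poll.
    """
    fresh = [r for r in rows if int(r["ClosedTimestamp"]) > last_seen]
    newest = max([last_seen] + [int(r["ClosedTimestamp"]) for r in fresh])
    return fresh, newest


# First poll: everything is new
page = [{"ClosedTimestamp": "100"}, {"ClosedTimestamp": "200"}]
first, mark = only_new(page, 0)

# Second poll: the page still shows the old rows plus one new row
page.append({"ClosedTimestamp": "300"})
second, mark = only_new(page, mark)
print(second, mark)
```

Because the page keeps showing the last 25 closed queries, every poll sees mostly old rows; carrying the high-water mark between polls is what keeps them from being emitted again.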

6. impala_metrics.py

    import requests
    import re
    from bs4 import BeautifulSoup

    # Multipliers used to convert KB, MB and GB values to bytes
    UNIT_FACTOR = {'B': 1, 'KB': 1024, 'MB': 1024 ** 2, 'GB': 1024 ** 3}
    # File listing the attribute names to monitor, keyed by service port
    METRIC_FILES = {
        '25000': '/root/impalad.txt',
        '25010': '/root/statestored.txt',
        '25020': '/root/catalogd.txt',
    }


    # Get metrics from impalad.
    # As with Hive, BeautifulSoup parses the metrics page on the three
    # kinds of Impala nodes.
    def impala_metrics(url):
        r = requests.get(url)
        # Values given in GB, MB or KB are all converted to bytes
        pattern = re.compile(r'\-?\d*\.\d*\s(MB|KB|GB|B)')
        soup = BeautifulSoup(r.content, "html5lib")
        metric_dict = {}
        return_dict = {}
        for tr in soup.findAll('tr'):
            tds = tr.findAll('td')
            if len(tds) < 2:
                continue
            key = tds[0].getText().strip()
            text = tds[1].getText().strip()
            if pattern.match(text):
                number, unit = text.split(' ')[:2]
                if unit == 'B':
                    value = number
                else:
                    # int() drops the fractional bytes
                    value = str(int(float(number) * UNIT_FACTOR[unit]))
            else:
                value = text
            metric_dict[key] = value
        # Open the file for this service and find the attribute names
        # that should be monitored
        serviceport = url.split(':')[2][0:5]
        if serviceport not in METRIC_FILES:
            print("can't find impala service")
            return return_dict
        with open(METRIC_FILES[serviceport]) as f:
            wanted = [line.strip() for line in f]
        for key in metric_dict:
            if key in wanted:
                return_dict[key] = metric_dict[key]
        return return_dict
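
The unit conversion is the one piece of this module that is easy to get subtly wrong, so here it is as a standalone sketch. The helper name `to_bytes` and the sample values are made up. It truncates with `int()` rather than slicing characters off the string form of the float, because slicing depends on how many decimals the float happens to print with: `str(1572864.0)[:-3]` gives `'157286'`, which is short by a digit.

```python
import re

_SIZE = re.compile(r"^(-?\d*\.?\d+)\s(B|KB|MB|GB)$")
_FACTOR = {"B": 1, "KB": 1024, "MB": 1024 ** 2, "GB": 1024 ** 3}


def to_bytes(text):
    """Convert a value such as "1.50 MB" to a byte count string.

    Anything that is not "<number> <unit>" is returned unchanged.
    """
    text = text.strip()
    m = _SIZE.match(text)
    if m is None:
        return text
    return str(int(float(m.group(1)) * _FACTOR[m.group(2)]))


for raw in ["1.50 MB", "2.00 KB", "512 B", "45", "true"]:
    print(raw, "->", to_bytes(raw))
```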

7. impala_queries.py

    import requests
    from bs4 import BeautifulSoup
    import time

    latest_query_timestamp = 0
    closed_query_list = []


    def to_epoch(dt):
        # Keep only whole seconds, then convert to a Unix timestamp string
        parts = dt.split(':')
        dt = parts[0] + ':' + parts[1] + ':' + parts[2][0:2]
        return str(int(time.mktime(time.strptime(dt, '%Y-%m-%d %H:%M:%S'))))


    # Get the sessions that are currently active. Impala usually runs so
    # fast that, outside a production environment, there is generally no data.
    def impala_session(url):
        r = requests.get(url)
        soup = BeautifulSoup(r.content, "html5lib")
        session_list = []
        th_list = ['SessionType', 'OpenQueries', 'TotalQueries', 'User',
                   'DelegatedUser', 'SessionID', 'NetworkAddress',
                   'Default Database', 'StartTime', 'LastAccessed',
                   'IdleTimeout', 'Expired', 'Closed', 'RefCount', 'Action']
        for tr in soup.findAll('tr'):
            row = []
            tags = {}
            count = 0
            for td in tr.findAll('td'):
                text = td.getText().strip()
                if count == 5:
                    row.append(text)
                elif count == 8 or count == 9:
                    tags[th_list[count]] = to_epoch(text)
                else:
                    tags[th_list[count]] = text
                count += 1
            if len(tags) != 0:
                row.append(tags)
            if len(row) != 0:
                session_list.append(row)
        return session_list


    # Get the queries that have already finished
    def impala_query_closed(url):
        global latest_query_timestamp
        r = requests.get(url)
        soup = BeautifulSoup(r.content, "html5lib")
        th_list = ['User', 'DefaultDb', 'Statement', 'QueryType', 'StartTime',
                   'EndTime', 'Duration', 'ScanProgress', 'State',
                   'RowsFetched', 'ResourcePool']
        if len(closed_query_list) != 0:
            latest_query_timestamp = int(closed_query_list[0][2]['EndTime'])
        del closed_query_list[:]
        for h3 in soup.findAll('h3'):
            if 'Completed' in h3.getText():
                table = h3.find_next('table')
                for tr in table.findAll('tr'):
                    row = []
                    tags = {}
                    count = 0
                    for td in tr.findAll('td'):
                        if count == 2:
                            row.append(td.getText())
                        elif count == 4:
                            tags['StartTime'] = to_epoch(td.getText().strip())
                        elif count == 5:
                            tags['EndTime'] = to_epoch(td.getText().strip())
                        elif count == 11:
                            row.append(td.find('a').get('href').split('=')[1])
                        else:
                            tags[th_list[count]] = td.getText().strip()
                        count += 1
                    if len(tags) != 0:
                        row.append(tags)
                    if len(row) != 0:
                        # To avoid duplicate output, stop at the first query
                        # that has already been reported
                        if int(tags['EndTime']) <= latest_query_timestamp:
                            break
                        else:
                            closed_query_list.append(row)
        return closed_query_list
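
The start and end times are cut down to whole seconds before being parsed. A minimal sketch of that step follows, assuming the page shows times such as `2019-03-01 12:30:45.123456000`; that sample value and the helper name `parse_impala_time` are assumptions for illustration.

```python
import time


def parse_impala_time(text):
    """Convert "YYYY-MM-DD HH:MM:SS[.fraction]" to local-time epoch seconds."""
    # Drop everything after the seconds, if a fractional part is present
    whole_seconds = text.strip().split(".")[0]
    parsed = time.strptime(whole_seconds, "%Y-%m-%d %H:%M:%S")
    return int(time.mktime(parsed))


with_fraction = parse_impala_time("2019-03-01 12:30:45.123456000")
without_fraction = parse_impala_time("2019-03-01 12:30:45")
print(with_fraction == without_fraction)
```

`time.mktime` interprets the value in the local time zone of the machine running the script, so the collector and the Impala daemons need to agree on the time zone for the timestamps to line up.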

8. prin_.py (never name it print.py: it clashes with the print that ships with Python, which cost me a lot of time)

    import hive_query
    import hive_jmx
    import impala_queries
    import impala_metrics
    import get_clustername
    import time
    import socket
    import kerberos2
    import findkeytab


    def printdict(dict):
        for key in dict:
            print key + '=' + dict[key],
        print


    def writedict(dict, file):
        count = 1
        for key in dict:
            file.write(key + '=' + dict[key])
            if count == len(dict):
                file.write('\n')
            else:
                file.write(',')
            count += 1


    krb = kerberos2.KerberosTicket('HTTP/' + socket.gethostname() + '@HADOOP.COM',
                                   findkeytab.findkeytab(),
                                   'HTTP/' + socket.gethostname() + '@HADOOP.COM')

    while True:
        timestamp = str(int(time.time()))
        common_tag_dict = {}
        endpoint = socket.gethostname()
        ipaddr = socket.gethostbyname(endpoint)
        clustername = get_clustername.get_cluster_name(ipaddr)
        common_tag_dict['endpoint'] = endpoint
        common_tag_dict['cluster'] = clustername
        hive_url = 'http://' + endpoint + ':10002/hiveserver2.jsp'

        # Monitor Hive active sessions
        active_session_list = hive_query.hiveserver2_jsp_session(hive_url)
        for l in active_session_list:
            print 'hive.hiveserver2.session.ActiveTime ' + l[0] + ' ',
            l[2].update(common_tag_dict)
            del l[2]['IP']
            printdict(l[2])
            print 'hive.hiveserver2.session.IdleTime ' + l[1] + ' ',
            l[2].update(common_tag_dict)
            printdict(l[2])

        # Monitor Hive open queries
        open_query_list = hive_query.hiveserver2_jsp_open(hive_url)
        for l in open_query_list:
            print 'hive.hiveserver2.query.opening-uuid ' + l[1] + ' ' + timestamp,
            l[2].update(common_tag_dict)
            printdict(l[2])

        # Monitor Hive closed queries
        last_query_list = hive_query.hiveserver2_jsp_last(hive_url)
        for l in last_query_list:
            file = open("/root/hive_query.txt", 'a')
            file.write('uuid=' + l[1] + ',' + 'Sql=' + l[0] + ',')
            writedict(l[2], file)
            file.close()
            del l[2]['Latency']
            print 'hive.hiveserver2.query.closed-uuid ' + l[1] + ' ' + timestamp,
            l[2].update(common_tag_dict)
            printdict(l[2])

        # Monitor the Hive totals
        total_dict = hive_query.hiveserver2_jsp_total()
        for key in total_dict:
            if key == 'Total number of sessions':
                print 'hive.hiveserver2.total.session ' + total_dict[key] + ' ' + timestamp,
                printdict(common_tag_dict)
            if key == 'Total number of open queries':
                print 'hive.hiveserver2.total.openingquery ' + total_dict[key] + ' ' + timestamp,
                printdict(common_tag_dict)
            if key == 'Total number of last queries':
                print 'hive.hiveserver2.total.closedquery ' + total_dict[key] + ' ' + timestamp,
                printdict(common_tag_dict)

        # Monitor the Hive JMX metrics
        jmx_list = hive_jmx.hiveserver2_jmx('http://' + endpoint + ':10002/jmx')
        for i in range(len(jmx_list)):
            print 'hive.' + jmx_list[i][0] + ' ' + jmx_list[i][1] + ' ' + timestamp,
            jmx_list[i][2].update(common_tag_dict)
            printdict(jmx_list[i][2])

        # Monitor Impala metrics: impalad (25000), statestored (25010)
        # and catalogd (25020)
        for port in ('25000', '25010', '25020'):
            metrics_url = 'http://' + ipaddr + ':' + port + '/metrics'
            metrics = impala_metrics.impala_metrics(metrics_url)
            for key in metrics:
                print 'impala.' + key + ' ' + metrics[key] + ' ' + timestamp,
                printdict(common_tag_dict)

        # Monitor Impala closed queries
        impala_closed_query_list = impala_queries.impala_query_closed('http://' + ipaddr + ':25000/queries#')
        for l in impala_closed_query_list:
            file = open("/root/impala_query.txt", 'a')
            file.write(l[1] + ' ' + l[0] + '\n')
            file.write('uuid=' + l[1] + ',' + 'Sql=' + l[0] + ',')
            writedict(l[2], file)
            file.close()
            del l[2]['ResourcePool']
            del l[2]['ScanProgress']
            del l[2]['DefaultDb']
            del l[2]['EndTime']
            del l[2]['QueryType']
            print 'impala.query.closed-uuid ' + l[1] + ' ' + timestamp,
            l[2].update(common_tag_dict)
            printdict(l[2])

        # Monitor Impala sessions
        impala_session_list = impala_queries.impala_session('http://' + ipaddr + ':25000/sessions')
        for l in impala_session_list:
            print 'impala.active.session' + ' ' + l[0] + ' ' + timestamp,
            l[1].update(common_tag_dict)
            printdict(l[1])

        time.sleep(10)
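
Every line the script prints has the same shape: metric name, value, timestamp, then `key=value` tags separated by spaces. A small sketch of that format as a single function follows; `format_metric` is a hypothetical helper and the sample values are made up. Sorting the tag keys is a choice made here so that the output is stable from run to run.

```python
def format_metric(name, value, timestamp, tags):
    """Build "name value timestamp k1=v1 k2=v2" with tags in sorted order."""
    tag_text = " ".join("%s=%s" % (k, tags[k]) for k in sorted(tags))
    return ("%s %s %s %s" % (name, value, timestamp, tag_text)).rstrip()


line = format_metric(
    "impala.active.session",
    "abc123",
    "1550000000",
    {"endpoint": "node2", "cluster": "cluster1"},
)
print(line)
```

Building the whole line as one string also avoids depending on the trailing-comma form of the `print` statement to keep the pieces on one line.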
