router & dealer

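This pair is the most important pattern in zmq, and several other patterns are derived from it, such as the req-rep pattern discussed later. Before analyzing router-dealer, let's look at zmq's two queues: fq and lb. The former is a fair-queueing queue used for receiving messages. The latter is a load-balancing queue used for sending messages. Both use an array_t as their underlying data structure. array_t is simple: it is essentially a growable array in which every element stores an index recording its own position in the array. An element's position can therefore be found without searching, which makes operations such as swapping or removing an element cheap. This trades a little space for time. Here is the main function of fq: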

```cpp
int zmq::fq_t::recvpipe (msg_t *msg_, pipe_t **pipe_)
{
    // Deallocate old content of the message.
    int rc = msg_->close ();
    errno_assert (rc == 0);

    // Round-robin over the pipes to get the next message.
    while (active > 0) {

        // Try to fetch new message. If we've already read part of the message
        // subsequent part should be immediately available.
        bool fetched = pipes [current]->read (msg_);

        // Note that when message is not fetched, current pipe is deactivated
        // and replaced by another active pipe. Thus we don't have to increase
        // the 'current' pointer.
        if (fetched) {
            if (pipe_)
                *pipe_ = pipes [current];
            more = msg_->flags () & msg_t::more? true: false;
            if (!more) {
                last_in = pipes [current];
                current = (current + 1) % active;
            }
            return 0;
        }

        // Check the atomicity of the message.
        // If we've already received the first part of the message
        // we should get the remaining parts without blocking.
        zmq_assert (!more);

        active--;
        pipes.swap (current, active);
        if (current == active)
            current = 0;
    }

    // No message is available. Initialise the output parameter
    // to be a 0-byte message.
    rc = msg_->init ();
    errno_assert (rc == 0);
    errno = EAGAIN;
    return -1;
}
```

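fq keeps all active pipes at the front of the array. When a new pipe is added, or an existing pipe becomes inactive, the pipe is moved with array_t's cheap swap. fq also keeps a cursor (current) that points at the pipe currently being read. Every read takes data from the pipe under the cursor. The cursor only advances after a complete message has been read, that is, when the msg no longer carries the more flag, and this is how fair queueing is achieved. lb is implemented in a similar way. Both fq and lb must finish a whole multipart message before moving on to the next pipe, even if other pipes already have data waiting. With fq and lb covered, let's see how router and dealer use them.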
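The following minimal C++ sketch makes the active-prefix layout and the cursor concrete. It implements the same idea without depending on libzmq. `Frame`, `Pipe`, `FairQueue`, `attach` and `activated` are hypothetical names chosen for illustration. `Pipe::index` plays the role of the position that array_t stores in each element, and this is what lets a pipe be swapped into or out of the active prefix without a search.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for msg_t: a payload plus the "more" flag.
struct Frame {
    std::string data;
    bool more;
};

// Hypothetical stand-in for pipe_t: a FIFO of frames, plus the array
// position that array_t would keep inside each element.
struct Pipe {
    std::deque<Frame> frames;
    std::size_t index = 0;

    bool read(Frame &out) {
        if (frames.empty()) return false;
        out = frames.front();
        frames.pop_front();
        return true;
    }
};

// pipes[0, active) are the active pipes; 'current' only moves on after
// the last part of a message has been read.
class FairQueue {
public:
    void attach(Pipe *p) {
        pipes.push_back(p);
        p->index = pipes.size() - 1;
        swap_at(p->index, active);  // move the new pipe into the active prefix
        active++;
    }

    // Called when an inactive pipe has data again.
    void activated(Pipe *p) {
        assert(p->index >= active);
        swap_at(p->index, active);
        active++;
    }

    // Returns false when no active pipe has a message (zmq reports EAGAIN).
    bool recv(Frame &out) {
        while (active > 0) {
            if (pipes[current]->read(out)) {
                more = out.more;
                if (!more) current = (current + 1) % active;
                return true;
            }
            // The rest of a multipart message must already be available.
            assert(!more);
            active--;
            swap_at(current, active);  // park the empty pipe after the prefix
            if (current == active) current = 0;
        }
        return false;
    }

private:
    // O(1) swap that keeps each pipe's stored index up to date.
    void swap_at(std::size_t i, std::size_t j) {
        std::swap(pipes[i], pipes[j]);
        pipes[i]->index = i;
        pipes[j]->index = j;
    }

    std::vector<Pipe *> pipes;
    std::size_t active = 0;
    std::size_t current = 0;
    bool more = false;
};
```

Suppose pipe a holds a two-part message followed by a one-part message, and pipe b holds a single message. This sketch then yields a1, a2, b1, a3. The two parts of a's first message are never interleaved with b's data, because the cursor only moves once the last part has been read.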

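The router class manages its inbound pipes with fq: router's recv method calls fq's recvpipe to get fair queueing. router's send method, on the other hand, has to target a specific pipe. It does this by requiring that the first frame of every outgoing message carry the identity of the destination peer, and that identity selects the pipe.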

Below are the member variables of the router class:

```cpp
// Receive peer id and update lookup map
bool identify_peer (pipe_t *pipe_);

// Fair queueing object for inbound pipes.
fq_t fq;

// True iff there is a message held in the pre-fetch buffer.
bool prefetched;

// If true, the receiver got the message part with
// the peer's identity.
bool identity_sent;

// Holds the prefetched identity.
msg_t prefetched_id;

// Holds the prefetched message.
msg_t prefetched_msg;

// If true, more incoming message parts are expected.
bool more_in;

struct outpipe_t
{
    zmq::pipe_t *pipe;
    bool active;
};

// We keep a set of pipes that have not been identified yet.
std::set <pipe_t*> anonymous_pipes;

// Outbound pipes indexed by the peer IDs.
typedef std::map <blob_t, outpipe_t> outpipes_t;
outpipes_t outpipes;

// The pipe we are currently writing to.
zmq::pipe_t *current_out;

// If true, more outgoing message parts are expected.
bool more_out;

// Routing IDs are generated. It's a simple increment and wrap-over
// algorithm. This value is the next ID to use (if not used already).
uint32_t next_rid;

// If true, report EAGAIN to the caller instead of silently dropping
// the message targeting an unknown peer.
bool mandatory;

bool raw_sock;

// if true, send an empty message to every connected router peer
bool probe_router;

// If true, the router will reassign an identity upon encountering a
// name collision. The new pipe will take the identity, the old pipe
// will be terminated.
bool handover;
```

prefetched_id and prefetched_msg temporarily hold the identity and the actual data, respectively. anonymous_pipes stores pipes whose peer identity has not been received yet. outpipes stores the pipes whose identity is known, indexed by that identity. The remaining router variables record state. For example, current_out is the pipe currently being written to, and more_out indicates whether more parts of the current outgoing message are still expected.
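The simplified sketch below models how anonymous_pipes, outpipes, next_rid and handover fit together. It is not libzmq's identify_peer. Pipes are represented by integer handles, and `RoutingTable` and `identify` are hypothetical names. The byte layout of generated IDs (a zero byte followed by the 32-bit counter) is an assumption, and the starting counter value is arbitrary.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <set>
#include <string>

// Hypothetical routing table; pipes are represented by integer handles.
class RoutingTable {
public:
    bool handover = false;
    std::set<int> anonymous_pipes;        // attached but not yet identified
    std::map<std::string, int> outpipes;  // identity -> pipe

    // Returns false if the pipe is refused because its identity is taken.
    bool identify(int pipe, const std::string &announced) {
        std::string id = announced;
        if (id.empty()) {
            // Peer sent no identity: generate one from the counter.
            // Assumed layout: a zero byte, then the big-endian counter.
            id.assign(1, '\0');
            for (int shift = 24; shift >= 0; shift -= 8)
                id.push_back(static_cast<char>((next_rid >> shift) & 0xff));
            next_rid++;  // uint32_t arithmetic wraps around on overflow
        } else if (outpipes.count(id) != 0 && !handover) {
            return false;  // name collision: keep the old pipe
        }
        // With handover the new pipe takes over the identity. libzmq then
        // terminates the old pipe; this sketch just overwrites the entry.
        outpipes[id] = pipe;
        anonymous_pipes.erase(pipe);
        return true;
    }

private:
    std::uint32_t next_rid = 1;  // arbitrary starting value for the sketch
};
```

In this model, a peer that announces a name keeps it. A second peer announcing the same name is refused unless handover is set, in which case the newer pipe wins.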

```cpp
int zmq::router_t::xrecv (msg_t *msg_)
{
    if (prefetched) {
        if (!identity_sent) {
            int rc = msg_->move (prefetched_id);
            errno_assert (rc == 0);
            identity_sent = true;
        }
        else {
            int rc = msg_->move (prefetched_msg);
            errno_assert (rc == 0);
            prefetched = false;
        }
        more_in = msg_->flags () & msg_t::more ? true : false;
        return 0;
    }

    pipe_t *pipe = NULL;
    int rc = fq.recvpipe (msg_, &pipe);

    // It's possible that we receive peer's identity. That happens
    // after reconnection. The current implementation assumes that
    // the peer always uses the same identity.
    while (rc == 0 && msg_->is_identity ())
        rc = fq.recvpipe (msg_, &pipe);

    if (rc != 0)
        return -1;

    zmq_assert (pipe != NULL);

    // If we are in the middle of reading a message, just return the next part.
    if (more_in)
        more_in = msg_->flags () & msg_t::more ? true : false;
    else {
        // We are at the beginning of a message.
        // Keep the message part we have in the prefetch buffer
        // and return the ID of the peer instead.
        rc = prefetched_msg.move (*msg_);
        errno_assert (rc == 0);
        prefetched = true;

        blob_t identity = pipe->get_identity ();
        rc = msg_->init_size (identity.size ());
        errno_assert (rc == 0);
        memcpy (msg_->data (), identity.data (), identity.size ());
        msg_->set_flags (msg_t::more);
        identity_sent = true;
    }

    return 0;
}
```

When xrecv fetches the first part of a new message, router stashes that part in prefetched_msg. It then returns to the caller a frame containing the peer's identity, with the more flag set, so the caller knows which pipe the message came from. The real data is only returned on the next xrecv call. If more_in is true, the identity and the prefetched part have already been handed to the caller, so the current part can be returned directly.
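The stripped-down model below sketches this prefetch logic. It assumes that frames arrive already fair-queued and tagged with the identity of their pipe. `Tagged` and `RouterRecv` are hypothetical names. The model omits identity_sent/prefetched_id and the skipping of identity-flagged frames. It keeps only the part that turns every incoming message into an identity frame followed by the original parts.

```cpp
#include <cassert>
#include <deque>
#include <string>
#include <utility>

struct Frame {
    std::string data;
    bool more;
};

// Hypothetical input: frames already fair-queued, each tagged with the
// identity of the pipe it came from (what fq.recvpipe + get_identity give).
struct Tagged {
    std::string identity;
    Frame frame;
};

class RouterRecv {
public:
    explicit RouterRecv(std::deque<Tagged> in) : input(std::move(in)) {}

    // Returns false when nothing is queued (zmq reports EAGAIN).
    bool recv(Frame &out) {
        if (prefetched) {
            // Second call for this message: hand out the stashed first part.
            out = prefetched_msg;
            prefetched = false;
            more_in = out.more;
            return true;
        }
        if (input.empty()) return false;
        Tagged t = std::move(input.front());
        input.pop_front();
        if (more_in) {
            // Middle of a message: pass the part straight through.
            out = t.frame;
            more_in = out.more;
            return true;
        }
        // First part of a new message: stash it and return the peer's
        // identity as an extra leading frame with "more" set.
        prefetched_msg = t.frame;
        prefetched = true;
        out = Frame{t.identity, true};
        return true;
    }

private:
    std::deque<Tagged> input;
    bool prefetched = false;
    bool more_in = false;
    Frame prefetched_msg{"", false};
};
```

Take a two-part message from peer A followed by a one-part message from peer B. Successive recv calls return A, hello, world, B, x, with the more flag set on every frame except world and x.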

```cpp
int zmq::router_t::xsend (msg_t *msg_)
{
    // If this is the first part of the message it's the ID of the
    // peer to send the message to.
    if (!more_out) {
        zmq_assert (!current_out);

        // If we have malformed message (prefix with no subsequent message)
        // then just silently ignore it.
        // TODO: The connections should be killed instead.
        if (msg_->flags () & msg_t::more) {

            more_out = true;

            // Find the pipe associated with the identity stored in the prefix.
            // If there's no such pipe just silently ignore the message, unless
            // router_mandatory is set.
            blob_t identity ((unsigned char*) msg_->data (), msg_->size ());
            outpipes_t::iterator it = outpipes.find (identity);

            if (it != outpipes.end ()) {
                current_out = it->second.pipe;
                if (!current_out->check_write ()) {
                    it->second.active = false;
                    current_out = NULL;
                    if (mandatory) {
                        more_out = false;
                        errno = EAGAIN;
                        return -1;
                    }
                }
            }
            else if (mandatory) {
                more_out = false;
                errno = EHOSTUNREACH;
                return -1;
            }
        }

        int rc = msg_->close ();
        errno_assert (rc == 0);
        rc = msg_->init ();
        errno_assert (rc == 0);
        return 0;
    }

    // Ignore the MORE flag for raw-sock or assert?
    if (options.raw_sock)
        msg_->reset_flags (msg_t::more);

    // Check whether this is the last part of the message.
    more_out = msg_->flags () & msg_t::more ? true : false;

    // Push the message into the pipe. If there's no out pipe, just drop it.
    if (current_out) {

        // Close the remote connection if user has asked to do so
        // by sending zero length message.
        // Pending messages in the pipe will be dropped (on receiving term- ack)
        if (raw_sock && msg_->size() == 0) {
            current_out->terminate (false);
            int rc = msg_->close ();
            errno_assert (rc == 0);
            rc = msg_->init ();
            errno_assert (rc == 0);
            current_out = NULL;
            return 0;
        }

        bool ok = current_out->write (msg_);
        if (unlikely (!ok)) {
            // Message failed to send - we must close it ourselves.
            int rc = msg_->close ();
            errno_assert (rc == 0);
            current_out = NULL;
        } else {
            if (!more_out) {
                current_out->flush ();
                current_out = NULL;
            }
        }
    }
    else {
        int rc = msg_->close ();
        errno_assert (rc == 0);
    }

    // Detach the message from the data buffer.
    int rc = msg_->init ();
    errno_assert (rc == 0);

    return 0;
}
```

A message sent through xsend must begin with the identity frame. Once the matching pipe is found, current_out records it as the pipe currently being written, and every remaining part of that message is written to current_out. If no pipe matches the identity, the message is silently dropped. The exception is when mandatory is set: xsend then fails with EHOSTUNREACH, or with EAGAIN if the matching pipe is full.
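The send side can be modeled the same way. The simplified sketch below assumes that outpipes maps each identity directly to a list of written payloads. `RouterSend` is a hypothetical name, and the full-pipe check (check_write, EAGAIN) and the raw_sock handling are left out.

```cpp
#include <cassert>
#include <cerrno>
#include <map>
#include <string>
#include <vector>

struct Frame {
    std::string data;
    bool more;
};

class RouterSend {
public:
    // identity -> payloads written so far (stand-in for pipe_t)
    std::map<std::string, std::vector<std::string>> outpipes;
    bool mandatory = false;

    // Returns 0 on success, or -1 with errno set, like xsend.
    int send(const Frame &f) {
        if (!more_out) {
            assert(current_out == nullptr);
            // First part: the identity of the target peer, not payload.
            // A lone identity frame with nothing after it is ignored.
            if (f.more) {
                more_out = true;
                auto it = outpipes.find(f.data);
                if (it != outpipes.end()) {
                    current_out = &it->second;
                } else if (mandatory) {
                    more_out = false;
                    errno = EHOSTUNREACH;
                    return -1;
                }
            }
            return 0;
        }
        more_out = f.more;
        if (current_out) current_out->push_back(f.data);  // unknown peer: drop
        if (!more_out) current_out = nullptr;              // message complete
        return 0;
    }

private:
    bool more_out = false;
    std::vector<std::string> *current_out = nullptr;
};
```

Pointers into std::map values stay valid while the entry exists, so current_out can safely hold one across the parts of a message.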

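Compared with router, dealer is much simpler: it only passes messages through, without adding or removing identity frames. Here are dealer's member variables: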

```cpp
private:

    // Messages are fair-queued from inbound pipes. And load-balanced to
    // the outbound pipes.
    fq_t fq;
    lb_t lb;

    // if true, send an empty message to every connected router peer
    bool probe_router;
```

dealer's fq receives data, and its lb sends data:

```cpp
int zmq::dealer_t::xsend (msg_t *msg_)
{
    return sendpipe (msg_, NULL);
}

int zmq::dealer_t::xrecv (msg_t *msg_)
{
    return recvpipe (msg_, NULL);
}

int zmq::dealer_t::sendpipe (msg_t *msg_, pipe_t **pipe_)
{
    return lb.sendpipe (msg_, pipe_);
}

int zmq::dealer_t::recvpipe (msg_t *msg_, pipe_t **pipe_)
{
    return fq.recvpipe (msg_, pipe_);
}
```

For both sending and receiving, dealer simply calls the corresponding method of lb or fq.
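Since dealer only delegates, the interesting half is lb. Below is a minimal sketch of load-balanced sending in the same spirit as the fq code. Active pipes sit at the front of the array. The cursor only advances after the last part of a message. A pipe that refuses a write is moved out of the active prefix. `Pipe`, `LoadBalancer` and the per-pipe high-water mark counted in whole messages are assumptions made for this illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

struct Frame {
    std::string data;
    bool more;
};

// Hypothetical outbound pipe whose high-water mark counts whole messages,
// so a write is only refused at a message boundary.
struct Pipe {
    explicit Pipe(std::size_t hwm_) : hwm(hwm_) {}
    std::size_t hwm;
    std::vector<std::string> frames;  // payloads written so far
    std::size_t complete = 0;         // messages fully written
    bool mid_message = false;

    bool write(const Frame &f) {
        if (!mid_message && complete >= hwm) return false;
        frames.push_back(f.data);
        mid_message = f.more;
        if (!f.more) complete++;
        return true;
    }
};

// pipes[0, active) accept writes; 'current' advances only after the last
// part of a message, so multipart messages are never split across pipes.
class LoadBalancer {
public:
    void attach(Pipe *p) {
        pipes.push_back(p);
        std::swap(pipes[active], pipes.back());  // into the active prefix
        active++;
    }

    // Returns false when every pipe is full (lb reports EAGAIN).
    bool send(const Frame &f) {
        while (active > 0) {
            if (pipes[current]->write(f)) break;
            assert(!more);  // writes never fail mid-message in this model
            active--;
            if (current < active) std::swap(pipes[current], pipes[active]);
            else current = 0;
        }
        if (active == 0) return false;
        more = f.more;
        if (!more && active > 1) current = (current + 1) % active;
        return true;
    }

private:
    std::vector<Pipe *> pipes;
    std::size_t active = 0;
    std::size_t current = 0;
    bool more = false;
};
```

Consider two pipes that can each hold one message. A two-part message goes entirely to the first pipe and the next message goes to the second. A third send then fails because both pipes are full.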

router and dealer are used very widely. Below is an example from the ZeroMQ Guide on the official website:

```c
// 2015-02-27T11:40+08:00
// ROUTER-to-DEALER example

#include "zhelpers.h"
#include <pthread.h>

#define NBR_WORKERS 10

static void *
worker_task(void *args)
{
    void *context = zmq_ctx_new();
    void *worker = zmq_socket(context, ZMQ_DEALER);

#if (defined (WIN32))
    s_set_id(worker, (intptr_t)args);
#else
    s_set_id(worker); // Set a printable identity
#endif

    zmq_connect (worker, "tcp://localhost:5671");

    int total = 0;
    while (1) {
        // Tell the broker we're ready for work
        s_sendmore(worker, "");
        s_send(worker, "Hi Boss");

        // Get workload from broker, until finished
        free(s_recv(worker)); // Envelope delimiter
        char *workload = s_recv(worker);
        int finished = (strcmp(workload, "Fired!") == 0);
        free(workload);
        if (finished) {
            printf("Completed: %d tasks\n", total);
            break;
        }
        total++;

        // Do some random work
        s_sleep(randof(500) + 1);
    }
    zmq_close(worker);
    zmq_ctx_destroy(context);
    return NULL;
}

// While this example runs in a single process, that is just to make
// it easier to start and stop the example. Each thread has its own
// context and conceptually acts as a separate process.
int main(void)
{
    void *context = zmq_ctx_new();
    void *broker = zmq_socket(context, ZMQ_ROUTER);

    zmq_bind(broker, "tcp://*:5671");
    srandom((unsigned)time(NULL));

    int worker_nbr;
    for (worker_nbr = 0; worker_nbr < NBR_WORKERS; worker_nbr++) {
        pthread_t worker;
        pthread_create(&worker, NULL, worker_task, (void *)(intptr_t)worker_nbr);
    }

    // Run for five seconds and then tell workers to end
    int64_t end_time = s_clock() + 5000;
    int workers_fired = 0;
    while (1) {
        // Next message gives us least recently used worker
        char *identity = s_recv(broker);
        s_sendmore(broker, identity);
        free(identity);
        free(s_recv(broker)); // Envelope delimiter
        free(s_recv(broker)); // Response from worker
        s_sendmore(broker, "");

        // Encourage workers until it's time to fire them
        if (s_clock() < end_time)
            s_send(broker, "Work harder");
        else {
            s_send(broker, "Fired!");
            if (++workers_fired == NBR_WORKERS)
                break;
        }
    }
    zmq_close(broker);
    zmq_ctx_destroy(context);
    return 0;
}
```

This example is straightforward. Note that its empty frames exist only for compatibility with the rep and req patterns. If only router and dealer are involved, this empty delimiter frame is not needed.

req & rep

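req and rep are another commonly used pair. They inherit from dealer and router respectively, and they are often combined with router and dealer. req-rep is a strict one-to-one request-reply pattern: after sending a message, a req socket must receive the reply before it can send the next one. The implementation of req and rep is simple, with only a few changes on top of dealer and router. Every message sent by req carries an empty frame in front of it as a delimiter. The dealer underneath req does no further processing and sends the message straight to rep. On the rep side, the underlying router prepends the sender's identity and hands the message to rep. rep immediately writes the identity and the empty delimiter back into the underlying router, and it returns only the actual data to the application. When rep later sends its reply, the data is written straight into router, because the routing header the reply needs was already written there when the request was received.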
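The envelope handling described above can be modeled with plain functions over frame lists instead of real sockets. `Message`, `req_wrap`, `rep_split` and `rep_reply` are hypothetical names. REQ's lock-step state machine and all error handling are left out of this minimal sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

using Message = std::vector<std::string>;  // one string per frame

// REQ: prepend the empty delimiter before handing the request to DEALER.
Message req_wrap(const Message &body) {
    Message out{""};
    out.insert(out.end(), body.begin(), body.end());
    return out;
}

// REP: everything up to and including the first empty frame is the
// envelope (identity frames plus delimiter); the rest is the request body.
std::pair<Message, Message> rep_split(const Message &frames) {
    std::size_t i = 0;
    while (i < frames.size() && !frames[i].empty()) i++;
    assert(i < frames.size() && "request has no empty delimiter");
    Message envelope(frames.begin(), frames.begin() + i + 1);
    Message body(frames.begin() + i + 1, frames.end());
    return {envelope, body};
}

// REP: the saved envelope goes out first, so the ROUTER underneath can
// route the reply back to the requester.
Message rep_reply(const Message &envelope, const Message &reply) {
    Message out = envelope;
    out.insert(out.end(), reply.begin(), reply.end());
    return out;
}
```

Feeding [client-1][][HELLO], which is what the router under rep delivers, through rep_split gives the envelope [client-1][] and the body [HELLO]. rep_reply then puts the envelope back in front of the reply, and that is all the router needs in order to route it.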

Below is an example of req in practice, with REQ sockets talking to ROUTER sockets:

// Load-balancing broker

// Clients and workers are shown here in-process

#include "zhelpers.h"

#include <pthread.h>

#define NBR_CLIENTS 10

#define NBR_WORKERS 3

// Dequeue operation for queue implemented as array of anything

#define DEQUEUE(q) memmove (&(q)[0], &(q)[1], sizeof (q) - sizeof (q [0]))

// Basic request-reply client using REQ socket

// Because s_send and s_recv can't handle 0MQ binary identities, we

// set a printable text identity to allow routing.

//

static void *

client_task(void *args)

{

void *context = zmq_ctx_new();

void *client = zmq_socket(context, ZMQ_REQ);

#if (defined (WIN32))

s_set_id(client, (intptr_t)args);

zmq_connect(client, "tcp://localhost:5672"); // frontend

#else

s_set_id(client); // Set a printable identity

zmq_connect(client, "ipc://frontend.ipc");

#endif

// Send request, get reply

s_send(client, "HELLO");

char *reply = s_recv(client);

printf("Client: %s\n", reply);

free(reply);

zmq_close(client);

zmq_ctx_destroy(context);

return NULL;

}

// While this example runs in a single process, that is just to make

// it easier to start and stop the example. Each thread has its own

// context and conceptually acts as a separate process.

// This is the worker task, using a REQ socket to do load-balancing.

// Because s_send and s_recv can't handle 0MQ binary identities, we

// set a printable text identity to allow routing.

static void *

worker_task(void *args)

{

void *context = zmq_ctx_new();

void *worker = zmq_socket(context, ZMQ_REQ);

#if (defined (WIN32))

s_set_id(worker, (intptr_t)args);

zmq_connect(worker, "tcp://localhost:5673"); // backend

#else

s_set_id(worker);

zmq_connect(worker, "ipc://backend.ipc");

#endif

// Tell broker we're ready for work

s_send(worker, "READY");

while (1) {

// Read and save all frames until we get an empty frame

// In this example there is only 1, but there could be more

char *identity = s_recv(worker);

char *empty = s_recv(worker);

assert(*empty == 0);

free(empty);

// Get request, send reply

char *request = s_recv(worker);

printf("Worker: %s\n", request);

free(request);

s_sendmore(worker, identity);

s_sendmore(worker, "");

s_send(worker, "OK");

free(identity);

}

zmq_close(worker);

zmq_ctx_destroy(context);

return NULL;

}

// This is the main task. It starts the clients and workers, and then

// routes requests between the two layers. Workers signal READY when

// they start; after that we treat them as ready when they reply with

// a response back to a client. The load-balancing data structure is

// just a queue of next available workers.

int main(void)

{

// Prepare our context and sockets

void *context = zmq_ctx_new();

void *frontend = zmq_socket(context, ZMQ_ROUTER);

void *backend = zmq_socket(context, ZMQ_ROUTER);

#if (defined (WIN32))

zmq_bind(frontend, "tcp://*:5672"); // frontend

zmq_bind(backend, "tcp://*:5673"); // backend

#else

zmq_bind(frontend, "ipc://frontend.ipc");

zmq_bind(backend, "ipc://backend.ipc");

#endif

int client_nbr;

for (client_nbr = 0; client_nbr < NBR_CLIENTS; client_nbr++) {

pthread_t client;

pthread_create(&client, NULL, client_task, (void *)(intptr_t)client_nbr);

}

int worker_nbr;

for (worker_nbr = 0; worker_nbr < NBR_WORKERS; worker_nbr++) {

pthread_t worker;

pthread_create(&worker, NULL, worker_task, (void *)(intptr_t)worker_nbr);

}

// Here is the main loop for the least-recently-used queue. It has two

// sockets; a frontend for clients and a backend for workers. It polls

// the backend in all cases, and polls the frontend only when there are

// one or more workers ready. This is a neat way to use 0MQ's own queues

// to hold messages we're not ready to process yet. When we get a client

// reply, we pop the next available worker and send the request to it,

// including the originating client identity. When a worker replies, we

// requeue that worker and forward the reply to the original client

// using the reply envelope.

// Queue of available workers

int available_workers = 0;

char *worker_queue[10];

while (1) {

zmq_pollitem_t items[] = {

{ backend, 0, ZMQ_POLLIN, 0 },

{ frontend, 0, ZMQ_POLLIN, 0 }

};

// Poll frontend only if we have available workers

int rc = zmq_poll(items, available_workers ? 2 : 1, -1);

if (rc == -1)

break; // Interrupted

// Handle worker activity on backend

if (items[0].revents & ZMQ_POLLIN) {

// Queue worker identity for load-balancing

char *worker_id = s_recv(backend);

assert(available_workers < NBR_WORKERS);

worker_queue[available_workers++] = worker_id;

// Second frame is empty

char *empty = s_recv(backend);

assert(empty[0] == 0);

free(empty);

// Third frame is READY or else a client reply identity

char *client_id = s_recv(backend);

// If client reply, send rest back to frontend

if (strcmp(client_id, "READY") != 0) {

empty = s_recv(backend);

assert(empty[0] == 0);

free(empty);

char *reply = s_recv(backend);

s_sendmore(frontend, client_id);

s_sendmore(frontend, "");

s_send(frontend, reply);

free(reply);

if (--client_nbr == 0)

break; // Exit after N messages

}

free(client_id);

}

// Here is how we handle a client request:

if (items[1].revents & ZMQ_POLLIN) {

// Now get next client request, route to last-used worker

// Client request is [identity][empty][request]

char *client_id = s_recv(frontend);

char *empty = s_recv(frontend);

assert(empty[0] == 0);

free(empty);

char *request = s_recv(frontend);

s_sendmore(backend, worker_queue[0]);

s_sendmore(backend, "");

s_sendmore(backend, client_id);

s_sendmore(backend, "");

s_send(backend, request);

free(client_id);

free(request);

// Dequeue and drop the next worker identity

free(worker_queue[0]);

DEQUEUE(worker_queue);

available_workers--;

}

}

zmq_close(frontend);

zmq_close(backend);

zmq_ctx_destroy(context);

return 0;

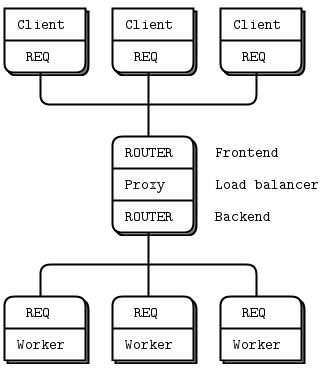

}下面这幅图是这个模型的示意图:

This example shows clearly how data flows between the REQ and ROUTER sockets:

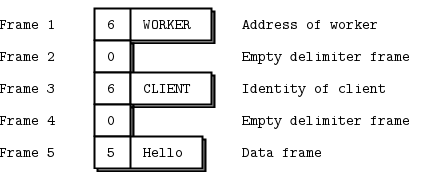

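First, each worker connects to the backend router and announces that it is ready, and then tasks are dispatched. A client sends one piece of data. Its REQ socket prepends an empty delimiter and the frontend ROUTER prepends the client's identity, so the broker receives three frames: identity, empty, and data. The broker then picks a worker and sends the request to it. At this point the backend has to send five frames: worker identity, empty, client identity, empty, and data.

The backend ROUTER consumes the first frame to choose the pipe, so four frames actually reach the worker's REQ socket. REQ itself then strips the empty delimiter, which leaves the worker with three frames. The worker sends three frames back (client identity, empty, and the reply), and the backend again receives five frames. Finally the broker sends the last three of them through the frontend. The first of these three frames is the client identity, so the message returns to the REQ socket that issued the task.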
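A small simulation can check the frame counts in this walkthrough. It models each socket as a transformation on a list of frames. The helpers below are hypothetical and only cover envelope handling. REQ adds or strips the leading empty frame. ROUTER prepends the sender's identity on receive, and on send it consumes the first frame as the destination. The identities "client-1" and "worker-1" are made up.

```cpp
#include <cassert>
#include <string>
#include <vector>

using Frames = std::vector<std::string>;

// REQ on send: prepend the empty delimiter.
Frames req_send(Frames f) {
    f.insert(f.begin(), "");
    return f;
}

// REQ on receive: drop the leading empty delimiter.
Frames req_recv(Frames f) {
    assert(!f.empty() && f.front().empty());
    f.erase(f.begin());
    return f;
}

// ROUTER on receive: prepend the identity of the pipe the message came from.
Frames router_recv(const std::string &from, Frames f) {
    f.insert(f.begin(), from);
    return f;
}

// ROUTER on send: the first frame picks the pipe and is not transmitted.
Frames router_send(Frames f, std::string &to) {
    assert(!f.empty());
    to = f.front();
    f.erase(f.begin());
    return f;
}
```

Chaining these helpers in the order of the walkthrough reproduces the counts 3, 5, 4, 3 on the way to the worker and 5, then 3 on the way back, with the client finally seeing only the reply frame.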

This flow is fairly involved, but once it is clear, the way rep, req, router and dealer work becomes obvious.
