包括两点:

1、mapreduce实现矩阵相乘

2、python脚本生成矩阵

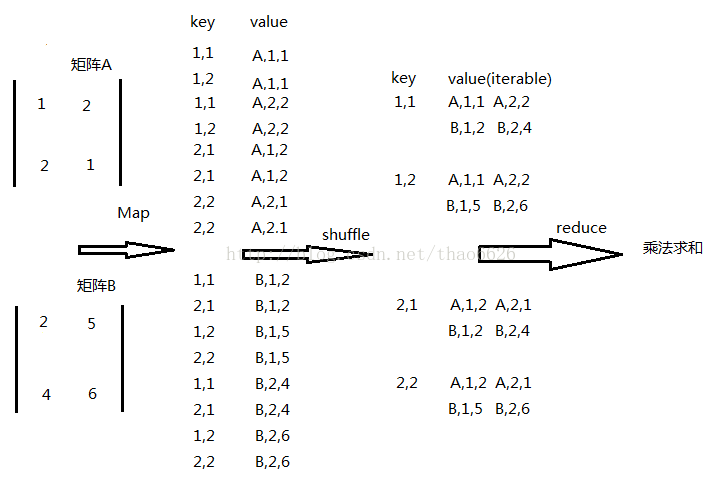

mapreduce实现矩阵相乘中数据组织方式变换的过程如下图所示:

mapreduce 实现代码:

import java.io.IOException;

import java.util.HashMap;

import java.util.Iterator;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.util.GenericOptionsParser;

public class MatrixMutiply {

/*

* 矩阵存放在一个文件里面。

* 刚开始两个矩阵放在一个文件里面,hadoop会为两个文件做两次map导致先做一次map和reduce,

* 这样另外一个矩阵就没有数据,后面的reduce会出现问题

* 矩阵存放的形式是:

* A,1,1,2 表示A矩阵第一行第一列数据为2

* A,1,2,1

* A,2,1,3

* A,2,2,4

* 这样存放的目的是防止一次map在读取数据时分片而导致数据读取不完整

* 矩阵由python脚本产生,python脚本见BuildMatrix.py

*

* */

public static class MatrixMapper extends Mapper<Object, Text, Text, Text>{

/*

* rowNumA and colNumB need to be confirm manually

* map阶段:

* 将数据组织为KEY VALUE的形式

* key:结果矩阵的元素的位置号

* value:结果矩阵元素需要用到的原两个矩阵的数据

* 要注意运算矩阵前矩阵和后矩阵在map阶段处理数据在组织map输出数据时不一样

*

* */

private int rowNumA = 4; // matrix A row

private int colNumB = 3; // matrix B column

private Text mapOutputkey;

private Text mapOutputvalue;

@Override

protected void map(Object key, Text value,

Mapper<Object, Text, Text, Text>.Context context)

throws IOException, InterruptedException {

// TODO Auto-generated method stub

System.out.println("map input key:" + key);

System.out.println("map input value:" + value);

String[] matrixStrings = value.toString().split("\n");

for(String item : matrixStrings){

System.out.println("item:"+ item);

String[] elemString = item.split(",");

for(String string : elemString){

System.out.println("element" + string);

}

System.out.println("elemString[0]:"+elemString[0]);

if(elemString[0].equals("A")){ // 此处一定要用equals,而不能用==来判断

/*

* 对A矩阵进行map化,outputkey outputvalue 在组织上要注意细节,处理好细节

* */

for(int i=1; i<=colNumB; i++){

mapOutputkey = new Text(elemString[1] + "," + String.valueOf(i));

mapOutputvalue = new Text("A:" + elemString[2] + "," + elemString[3]);

context.write(mapOutputkey, mapOutputvalue);

System.out.println("mapoutA:"+mapOutputkey+mapOutputvalue);

}

}

/*

* 对B矩阵map,mapoutput的组织和A矩阵的不同,细节要处理好

* */

else if(elemString[0].equals("B")){

for(int j=1; j<=rowNumA; j++){

mapOutputkey = new Text(String.valueOf(j) + "," + elemString[2]);

mapOutputvalue = new Text("B:" + elemString[1] + "," + elemString[3]);

context.write(mapOutputkey, mapOutputvalue);

System.out.println("mapoutB"+mapOutputkey+mapOutputvalue);

}

}

else{ // just for debug

System.out.println("mapout else else :--------------->"+ item);

}

}

}

}

public static class MatixReducer extends Reducer<Text, Text, Text, Text> {

private HashMap<String, String> MatrixAHashmap = new HashMap<String, String>();

private HashMap<String, String> MatrixBHashmap = new HashMap<String, String>();

private String val;

@Override

protected void reduce(Text key, Iterable<Text> value,

Reducer<Text, Text, Text, Text>.Context context)

throws IOException, InterruptedException {

// TODO Auto-generated method stub

System.out.println("reduce input key:" + key);

System.out.println("reduce input value:" + value.toString());

for(Text item : value){

val = item.toString();

System.out.println("val------------"+val);

if(!val.equals("0")){

String[] kv = val.substring(2).split(",");

if(val.startsWith("A:")){

MatrixAHashmap.put(kv[0], kv[1]);

}

if(val.startsWith("B:")){

MatrixBHashmap.put(kv[0], kv[1]);

}

}

}

/*just for debug*/

System.out.println("hashmapA:"+MatrixAHashmap);

System.out.println("hashmapB:"+MatrixBHashmap);

Iterator<String> iterator = MatrixAHashmap.keySet().iterator();

int sum = 0;

while(iterator.hasNext()){

String keyString = iterator.next();

sum += Integer.parseInt(MatrixAHashmap.get(keyString))*

Integer.parseInt(MatrixBHashmap.get(keyString));

}

//LongWritable reduceOutputvalue = new LongWritable(sum);

Text reduceOutputvalue = new Text(String.valueOf(sum));

context.write(key, reduceOutputvalue);

/*just for debug*/

System.out.println("reduce output key:" + key);

System.out.println("reduce output value:" + reduceOutputvalue);

}

}

public static void main(String[] args) throws Exception{

Configuration conf = new Configuration();

String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();

if(otherArgs.length != 2){

System.err.println("Usage: matrix <in> <out>");

System.exit(2);

}

Job job = Job.getInstance(conf, "matrix");

job.setJarByClass(MatrixMutiply.class);

job.setMapperClass(MatrixMapper.class);

/*按照思路,这里不需要combiner操作,不需指明*/

// job.setCombinerClass(MatixReducer.class);

job.setReducerClass(MatixReducer.class);

/*这两个outputkeyclass outputvalueclass 对map output 和 reduce output同时起作用*/

/*注意是同时,所以在指定map 和 reduce的输出时要一致*/

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);

FileInputFormat.addInputPath(job, new Path(otherArgs[0]));

FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));

System.exit(job.waitForCompletion(true) ? 0 : 1); // 此处是怎么判断要结束的?

}

}

运行上述代码的脚本:

hadoop com.sun.tools.javac.Main MatrixMutiply.java

jar cf matrix.jar MatrixMutiply*.class

hadoop fs -rm -r /matrixoutput # 只是在再次运行时需要删掉上一次运行时生成的文件

hadoop jar matrix.jar MatrixMutiply /matrixinput/* /matrixoutput细节的地方要注意:判断字符串相等时,要用equals来判断

产生矩阵的python脚本

# coding:utf-8

__author__ = 'taohao'

import random

class BuildMatrix(object):

def build_matrix_a(self, row, col):

"""

matrix:

1 0 2

-1 3 1

turn to ->Matrix name,rowNum,colNum,elementNum

for example:

A,1,1,1

A,1,2,1

A,1,3,2

A,2,1,-1

A,2,2,3

A,2,3,1

save the matrix to file for hadoop to read data from file

:return:

"""

fd = open('Matrix.txt', 'a') # 'a' is to write the file at the end of old file

num = ''

for i in range(row):

for j in range(col):

num += ',' + str(i+1) + ',' + str(j+1) + ','

num += str(random.randint(1, 10))

fd.write('A' + num + '\n')

num = ''

fd.close()

def build_matrix_b(self, row, col):

"""

the same as def build_matrix_a

:param row:

:param col:

:return:

"""

fd = open('Matrix.txt', 'a')

num = ''

for i in range(row):

for j in range(col):

num += ',' + str(i+1) + ',' + str(j+1) + ','

num += str(random.randint(1, 10))

fd.write('B' + num + '\n')

num = ''

fd.close()

if __name__ == '__main__':

rowA = 4

colA = 2

rowB = 2

colB = 3

bulid = BuildMatrix()

bulid.build_matrix_a(rowA, colA)

bulid.build_matrix_b(rowB, colB)另一篇python脚本生成矩阵,矩阵相乘,请看: http://blog.csdn.net/thao6626/article/details/46472719

921

921

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?