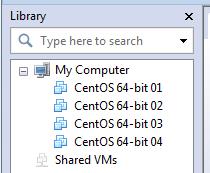

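1. Use VMware Workstation to create four virtual machines and install CentOS (version: CentOS-6.3-x86_64) on each, as shown in the diagram.

2. On every node, edit /etc/hosts so that the machines can resolve each other's IP addresses by hostname: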

192.168.231.131 node01

192.168.231.132 node02

192.168.231.133 node03

192.168.231.134 node04

3. Install the JDK on all nodes

First, put the JDK installer (jdk-6u38-linux-x64.bin) in /usr/java.

Make it executable:

[root@localhost java]# chmod a+x jdk-6u38-linux-x64.bin

[root@localhost java]# ls -lrt

total 70376

-rwxr-xr-x. 1 root root 72058033 Jan 29 07:21 jdk-6u38-linux-x64.bin

Now install the JDK:

[root@localhost java]# ./jdk-6u38-linux-x64.bin

Edit /etc/profile and add the following lines:

JAVA_HOME=/usr/java/jdk1.6.0_38

JRE_HOME=/usr/java/jdk1.6.0_38/jre/

CLASSPATH=.:$JAVA_HOME/lib:$JAVA_HOME/lib/tools.jar

PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH

Then check that the installation succeeded:

[root@localhost java]# source /etc/profile

[root@localhost java]# java -version

java version "1.6.0_38"

Java(TM) SE Runtime Environment (build 1.6.0_38-b05)

Java HotSpot(TM) 64-Bit Server VM (build 20.13-b02, mixed mode)

4. Add the hadoop user

[root@node02 ~]# useradd hadoop

[root@node02 ~]# passwd hadoop

Changing password for user hadoop.

New password:

BAD PASSWORD: it is too short

BAD PASSWORD: is too simple

Retype new password:

passwd: all authentication tokens updated successfully.

5. SSH configuration

Note: from here on, work as the hadoop user.

[hadoop@node01 ~]$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):

Created directory '/home/hadoop/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/hadoop/.ssh/id_rsa.

Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.

The key fingerprint is:

1d:03:8c:2f:99:95:98:c1:3d:8b:21:61:3e:a9:cb:bf hadoop@node01

The key's randomart image is:

+--[ RSA 2048]----+

| oo.B.. |

| o..* *. |

| +. B oo |

| ..= o. o |

| . .S . |

| . . |

| o |

| . |

| E. |

+-----------------+

[hadoop@node01 ~]$ cd .ssh

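[hadoop@node01 .ssh]$ cp id_rsa.pub authorized_keys

Then exchange the contents of authorized_keys among all nodes, so that every node's file holds the public key of every node. After that, the nodes can SSH to one another without a password.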
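
The key exchange described above can be scripted. The following is a minimal sketch, not the procedure used in this walkthrough: `merge_pubkey` is a hypothetical helper name, and it assumes the other nodes' `id_rsa.pub` files have already been copied to the local machine (for example with scp). It appends a key only if that exact line is not present yet, and tightens permissions, because sshd with its default StrictModes setting ignores an authorized_keys file that other users can write to.

```shell
# merge_pubkey KEY_FILE AUTH_FILE  (hypothetical helper, not part of OpenSSH)
# Append the single-line public key in KEY_FILE to AUTH_FILE unless an
# identical line is already there, then restrict permissions.
merge_pubkey() {
    local key_file=$1 auth_file=$2
    local key
    key=$(cat "$key_file") || return 1

    mkdir -p "$(dirname "$auth_file")"
    touch "$auth_file"

    # -q quiet, -x whole-line match, -F fixed string (no regex)
    if ! grep -qxF -- "$key" "$auth_file"; then
        printf '%s\n' "$key" >> "$auth_file"
    fi

    # Only the owner may access the directory and the key file.
    chmod 700 "$(dirname "$auth_file")"
    chmod 600 "$auth_file"
}
```

For example, after copying node02's public key to node01 as node02.pub, `merge_pubkey node02.pub ~/.ssh/authorized_keys` adds it once, and running it again changes nothing. Where the `ssh-copy-id` tool from the OpenSSH client package is available, `ssh-copy-id hadoop@node02` achieves a similar result in one step.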

6. Install Hadoop

Installing Hadoop is simple: just unpack the archive.

[hadoop@node01 ~]$ ls

hadoop-0.20.2 hadoop-0.20.2.tar.gz

[hadoop@node01 ~]$ tar xzvf ./hadoop-0.20.2.tar.gz

7. Configure the namenode (node01)

In hadoop-env.sh, set JAVA_HOME to the Java directory installed earlier:

[hadoop@node01 conf]$ vi hadoop-env.sh

# The java implementation to use. Required.

export JAVA_HOME=/usr/java/jdk1.6.0_38

Edit core-site.xml (set the namenode host and port, and the Hadoop temporary directory):

[hadoop@node01 conf]$ vi core-site.xml

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://192.168.231.131:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hadoop/tmp</value>

</property>

</configuration>

Edit hdfs-site.xml:

[hadoop@node01 conf]$ vi hdfs-site.xml

<configuration>

<property>

<name>dfs.data.dir</name>

<value>/home/hadoop/hadoop-0.20.2/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

</configuration>

Edit mapred-site.xml to set the JobTracker host and port:

[hadoop@node01 conf]$ vi mapred-site.xml

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>192.168.231.131:9001</value>

</property>

</configuration>

Edit the masters and slaves files to list the nodes in the cluster:

[hadoop@node01 conf]$ vi masters

node01

[hadoop@node01 conf]$ vi slaves

node02

node03

node04

Copy Hadoop to the other three nodes:

[hadoop@node01 ~]$ scp -r ./hadoop-0.20.2 node02:/home/hadoop

[hadoop@node01 ~]$ scp -r ./hadoop-0.20.2 node03:/home/hadoop

[hadoop@node01 ~]$ scp -r ./hadoop-0.20.2 node04:/home/hadoop

8. Set the Hadoop environment variables on every node

[hadoop@node01 ~]$ su - root

Password:

[root@node01 ~]# vi /etc/profile

export HADOOP_INSTALL=/home/hadoop/hadoop-0.20.2

export PATH=$PATH:$HADOOP_INSTALL/bin

9. Format HDFS

[hadoop@node01 bin]$ ./hadoop namenode -format

13/01/30 00:59:04 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = node01/192.168.231.131

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 0.20.2

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-0.20 -r 911707; compiled by 'chrisdo' on Fri Feb 19 08:07:34 UTC 2010

************************************************************/

13/01/30 00:59:04 INFO namenode.FSNamesystem: fsOwner=hadoop,root

13/01/30 00:59:04 INFO namenode.FSNamesystem: supergroup=supergroup

13/01/30 00:59:04 INFO namenode.FSNamesystem: isPermissionEnabled=true

13/01/30 00:59:04 INFO common.Storage: Image file of size 96 saved in 0 seconds.

13/01/30 00:59:04 INFO common.Storage: Storage directory /tmp/hadoop-hadoop/dfs/name has been successfully formatted.

13/01/30 00:59:04 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at node01/192.168.231.131

************************************************************/

10. Start the daemons

Note: before starting the daemons, be sure to stop the firewall on every node; otherwise the datanodes fail to start.

[root@node04 ~]# /etc/init.d/iptables stop

iptables: Flushing firewall rules: [ OK ]

iptables: Setting chains to policy ACCEPT: filter [ OK ]

iptables: Unloading modules: [ OK ]

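It is best to keep the firewall from starting at boot as well; on CentOS 6 this is done by running chkconfig iptables off as root. The file below additionally turns off SELinux, which is separate from iptables:

[root@node01 ~]# vi /etc/sysconfig/selinux

SELINUX=disabled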
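
Because the firewall has to be stopped on every node, it can help to generate the commands in one place. The sketch below only prints the commands instead of running them, so they can be reviewed first. `firewall_off_cmds` is a hypothetical helper name, and the printed commands assume that root can log in over SSH on each node, which this walkthrough does not set up.

```shell
# firewall_off_cmds NODE...  (hypothetical helper)
# Print, without executing, one ssh command per node that stops iptables
# immediately and keeps the service from starting at boot (CentOS 6).
firewall_off_cmds() {
    local node
    for node in "$@"; do
        printf "ssh root@%s 'service iptables stop && chkconfig iptables off'\n" "$node"
    done
}
```

Running `firewall_off_cmds node01 node02 node03 node04` prints four lines; once they look right, the output can be piped to `sh` to execute them.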

[hadoop@node01 bin]$ ./start-all.sh

starting namenode, logging to /home/hadoop/hadoop-0.20.2/bin/../logs/hadoop-hadoop-namenode-node01.out

node03: starting datanode, logging to /home/hadoop/hadoop-0.20.2/bin/../logs/hadoop-hadoop-datanode-node03.out

node02: starting datanode, logging to /home/hadoop/hadoop-0.20.2/bin/../logs/hadoop-hadoop-datanode-node02.out

node04: starting datanode, logging to /home/hadoop/hadoop-0.20.2/bin/../logs/hadoop-hadoop-datanode-node04.out

hadoop@node01's password:

node01: starting secondarynamenode, logging to /home/hadoop/hadoop-0.20.2/bin/../logs/hadoop-hadoop-secondarynamenode-node01.out

starting jobtracker, logging to /home/hadoop/hadoop-0.20.2/bin/../logs/hadoop-hadoop-jobtracker-node01.out

node03: starting tasktracker, logging to /home/hadoop/hadoop-0.20.2/bin/../logs/hadoop-hadoop-tasktracker-node03.out

node02: starting tasktracker, logging to /home/hadoop/hadoop-0.20.2/bin/../logs/hadoop-hadoop-tasktracker-node02.out

node04: starting tasktracker, logging to /home/hadoop/hadoop-0.20.2/bin/../logs/hadoop-hadoop-tasktracker-node04.out

Check that the daemons are running:

Master node:

[hadoop@node01 jdk1.6.0_38]$ /usr/java/jdk1.6.0_38/bin/jps

3986 Jps

3639 NameNode

3785 SecondaryNameNode

3858 JobTracker

Slave node (node02 as an example):

[root@node02 ~]# /usr/java/jdk1.6.0_38/bin/jps

3254 TaskTracker

3175 DataNode

3382 Jps
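
The check above can be automated by comparing the jps output against the daemons each role is expected to run. This is a minimal sketch; `missing_daemons` is a hypothetical helper name. It matches the second column of the jps output exactly, so that NameNode is not mistaken for SecondaryNameNode.

```shell
# missing_daemons JPS_OUTPUT NAME...  (hypothetical helper)
# Print each expected daemon name that does not appear as a process name
# (second column) in the given jps output. Prints nothing if all are found.
missing_daemons() {
    local jps_output=$1
    shift
    local name
    for name in "$@"; do
        # awk exits 0 only if some line has exactly this name in column 2.
        if ! printf '%s\n' "$jps_output" |
            awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
            echo "$name"
        fi
    done
}
```

On the master, `missing_daemons "$(/usr/java/jdk1.6.0_38/bin/jps)" NameNode SecondaryNameNode JobTracker` should print nothing when everything started; on a slave, the expected names are DataNode and TaskTracker.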
