15分钟用Rails开发一个Blog有什么意思?那是2005年的Hipster追捧的玩具。

现在都已经是2015年了。HTML应该读作Hipster's Toolkit Made Live了。

既然是15分钟,那当然不是零基础的。

在开始之前,你需要满足以下要求

运行4.0以上Linux内核,并安装好v4l2loopback内核模块

GStreamer

Firefox 浏览器版本 >= 40

检查设置 (about:config)

media.mediasource.enabled true

media.mediasource.mp4.enabled true

media.mediasource.webm.enabled true

media.mediasource.whitelist false (只有42以下版本需要)

Erlang/OTP >= 17.0

Emacs,以及erlang mode,能熟练使用C-c C-k

ebml-viewer https://code.google.com/p/ebml-viewer/

Matroska spec data foundation-source/specdata.xml at master · Matroska-Org/foundation-source · GitHub

正文开始了

都安装好以后,用gstreamer把测试信号输入到/dev/video0

gst-launch videotestsrc ! v4l2sink device=/dev/video0

第一步,先检查浏览器里能不能放这个视频信号。

media.html里就只放一个video标签。因为JavaScript可能要反复改,所以先叫media1.js了

<!doctype html>

<html>

<head>

<meta charset="utf-8" />

<title>hhhhh TV</title>

<script type="application/javascript" src="media1.js"></script>

<body>

<video id="video"></video>

</body>

</html>

media1.js很简单,直接把video的src设置成这个stream的url就完了。

MediaDevices.getUserMedia() ,URL.createObjectURL()

"use strict";

window.addEventListener(

"load",

function() {

var videoElem = document.getElementById("video");

navigator.mediaDevices.getUserMedia(

{ video: true }

).then(

function(stream) {

videoElem.src = URL.createObjectURL(stream);

videoElem.play();

}

);

}

);

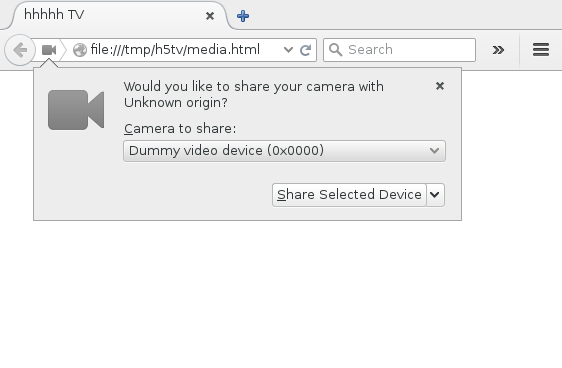

用Firefox打开这个media.html之后,先会询问是否要share selected device。

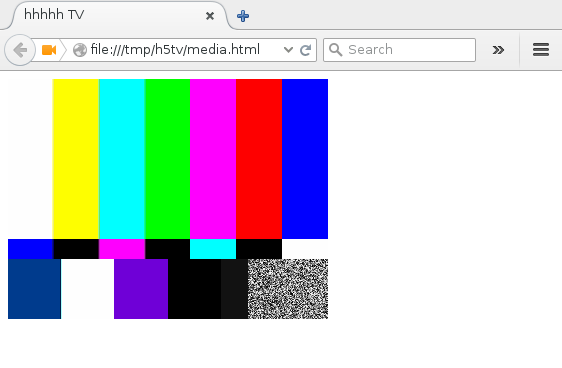

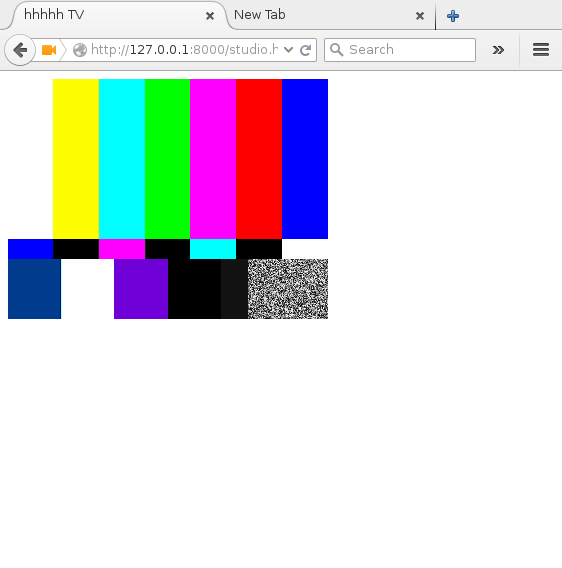

点了按钮之后,视频就开始播放了

第二步,直接把video.src设置成这个stream的url,只能在这一个浏览器里播。我们得拿到视频数据。这个很容易,使用 MediaRecorder API就可以了。

media2.js

"use strict";

window.addEventListener(

"load",

function() {

var videoElem = document.getElementById("video");

navigator.mediaDevices.getUserMedia(

{ video: true }

).then(

function(stream) {

var mediaRecorder = new MediaRecorder(stream);

mediaRecorder.addEventListener(

"dataavailable",

function(event) {

console.log(event);

}

);

mediaRecorder.start();

}

);

}

);

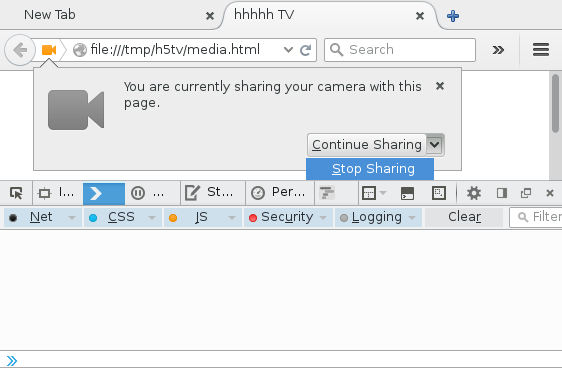

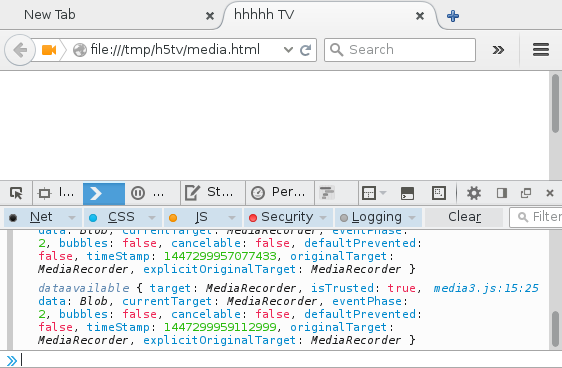

重新打开 media.html ,share了之后,再选stop sharing

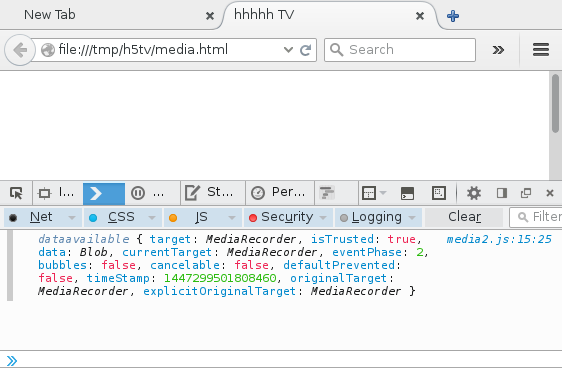

可以看到 dataavailable 事件

第三步,这样的问题是,所有视频数据都是stop sharing之后一次取到的,所以并不能直播。至少得能每隔一段时间取一次。这也很简单。

media3.js只是把 media2.js的

mediaRecorder.start();

改成了

mediaRecorder.start(2000);

这样,不需要点 stop sharing 就能每隔大约2秒取到视频数据了

第四步,播放取到的视频数据。先播放第二步取到的整段视频数据。MediaSource - Web APIs

很不幸,从MediaRecorder里得到的是Blob,而MediaSource需要的ArrayBuffer,所以还得先用 FileReader 转一下。

media4.js

"use strict";

window.addEventListener(

"load",

function() {

var videoElem = document.getElementById("video");

var mediaSource = new MediaSource();

var sourceBuffer;

videoElem.src = URL.createObjectURL(mediaSource);

mediaSource.addEventListener(

'sourceopen',

function () {

sourceBuffer = mediaSource.addSourceBuffer('video/webm; codecs="vp8,vorbis"');

sourceBuffer.addEventListener(

"updateend",

function () {

videoElem.play();

}

);

}

);

var reader = new FileReader();

reader.addEventListener(

"load",

function() {

sourceBuffer.appendBuffer(this.result);

}

);

navigator.mediaDevices.getUserMedia(

{ video: true }

).then(

function(stream) {

var mediaRecorder = new MediaRecorder(stream);

mediaRecorder.addEventListener(

"dataavailable",

function(event) {

reader.readAsArrayBuffer(event.data);

}

);

mediaRecorder.start();

}

);

}

);

点stop sharing之后,可以看到视频开始播放了。

第五步,播放第三步取到的分段视频数据。因为SourceBuffer同时只能有一个appendBuffer。作一些简单的处理,假如正在update,就等updateend之后,在来调用appendBuffer。另外,等append超过2个buffer之后再开始播放,而不是像media4.js那样加入一个buffer之后立即开始播放。

media5.js

"use strict";

window.addEventListener(

"load",

function() {

var videoElem = document.getElementById("video");

var mediaSource = new MediaSource();

var sourceBuffer;

var buffers = [];

var updating = false;

var buffer_count = 0;

var started_play = false;

videoElem.src = URL.createObjectURL(mediaSource);

function notify() {

if ((updating) || (buffers.length === 0)) {

return;

}

updating = true;

var buffer = buffers.shift();

sourceBuffer.appendBuffer(buffer);

}

mediaSource.addEventListener(

'sourceopen',

function () {

sourceBuffer = mediaSource.addSourceBuffer('video/webm; codecs="vp8,vorbis"');

sourceBuffer.addEventListener(

"updateend",

function () {

updating = false;

if (!started_play) {

buffer_count += 1;

if (buffer_count > 2) {

started_play = true;

videoElem.play();

}

}

notify();

}

);

}

);

var reader = new FileReader();

reader.addEventListener(

"load",

function() {

buffers.push(this.result);

notify();

}

);

navigator.mediaDevices.getUserMedia(

{ video: true }

).then(

function(stream) {

var mediaRecorder = new MediaRecorder(stream);

mediaRecorder.addEventListener(

"dataavailable",

function(event) {

reader.readAsArrayBuffer(event.data);

}

);

mediaRecorder.start(2000);

}

);

}

);

第六步,从第N个分段开始播放。这个看上去,很简单,只要跳过前几个分段就可以了。而实际上第一个分段是不一样的。

Firefox里MediaRecorder默认用的是webm格式。直播时,一开始是一个EBML header。接着定义一个size非常大(目前定义的范围内最大)的Segment,Segment里,一开始是一些Track信息之类的东西,后面就是Cluster了。除了第一个分段,后面都是很多个Cluster。

EBML

..

Segment size=2^56-1

...

Cluster

Cluster

Cluster

所以,我们要做的就是找出第一个Cluster所在位置,并把之前的部分取出来,加到SourceBuffer里,可是这样视频在videoElem.play()之后并没有开始播放 !!! 还需要把videoElem.currentTime 设置成,加到SourceBuffer里的第一个Cluster的时间。

media6.js

"use strict";

function get_vint_length(c) {

var i;

for (i=9; c>0; i--) {

c = c >> 1;

}

return i;

}

function get_vint_value(array, offset, length) {

var v = array[offset] - (1 << (8 - length));

for (var i=1; i<length; i++) {

v = (v << 8) + array[offset+i];

}

return v;

}

function parse_header_size(buffer) {

var view = new DataView(buffer);

var array = new Uint8Array(buffer);

if (view.getUint32(0, false) !== 0x1a45dfa3) {

throw "EBML Element ID not found";

}

if (array[4] === 0) {

throw "Bad EBML Size";

}

var length = get_vint_length(array[4]);

var ebml_size = get_vint_value(array, 4, length);

var segment_offset = 4 + length + ebml_size;

if (view.getUint32(segment_offset, false) !== 0x18538067) {

throw "Segment Element ID not found";

}

var size_length = get_vint_length(array[segment_offset+4]);

var offset = segment_offset + 4 + size_length;

while (view.getUint32(offset, false) != 0x1F43B675) {

offset += get_vint_length(array[offset]);

var elem_length = get_vint_length(array[offset]);

var elem_size = get_vint_value(array, offset, elem_length);

offset += elem_length + elem_size;

}

return offset;

}

window.addEventListener(

"load",

function() {

var videoElem = document.getElementById("video");

var mediaSource = new MediaSource();

var sourceBuffer;

var buffers = [];

var updating = false;

var buffer_count = 0;

var started_play = false;

var skipped = false;

videoElem.src = URL.createObjectURL(mediaSource);

function notify() {

if ((updating) || (buffers.length === 0)) {

return;

}

updating = true;

var buffer = buffers.shift();

sourceBuffer.appendBuffer(buffer);

}

mediaSource.addEventListener(

'sourceopen',

function () {

sourceBuffer = mediaSource.addSourceBuffer('video/webm; codecs="vp8,vorbis"');

sourceBuffer.addEventListener(

"updateend",

function () {

updating = false;

if (!started_play) {

buffer_count += 1;

if (buffer_count > 2) {

started_play = true;

videoElem.currentTime = 4;

videoElem.play();

}

}

notify();

}

);

}

);

var reader = new FileReader();

reader.addEventListener(

"load",

function() {

buffers.push(this.result);

if (skipped) {

notify();

return;

}

if (buffers.length < 3) {

return;

}

var buffer = buffers.shift();

var header = buffer.slice(0, parse_header_size(buffer));

buffers.shift();

buffers.unshift(header);

skipped = true;

notify();

}

);

navigator.mediaDevices.getUserMedia(

{ video: true }

).then(

function(stream) {

var mediaRecorder = new MediaRecorder(stream);

mediaRecorder.addEventListener(

"dataavailable",

function(event) {

reader.readAsArrayBuffer(event.data);

}

);

mediaRecorder.start(2000);

}

);

}

);

第七步,现在就是要把这个拆成两部分,一部分录制上传,一部分下载播放。

不妨,每5秒一个分段,而每个分段,都用时间戳作为文件名,那么播放的时候只需要知道第一个Cluster的时间戳就可以计算出currentTime了。

假设实际使用时上传到AWS S3,我们还需要一个服务端,定时向上传的一端发送签名。在这里我们简化一下,只是用Server Sent Event向浏览器发送上传路径,其中第一个是 header.webm 用来上传 cluster 前面的所有字节。

h5tv.erl 用来启动。h5tv_storage_connection,用来模拟一个简化的S3,PUT上传文件,GET下载文件,不作任何检查,且根据文件名设置Content-Typeh5tv_channel_manager,用来记录 channel 信息。 h5tv_live_connection,则是主要就四个功能,返回channel列表,返回当前时间戳,返回某个channel最早的时间戳,提供Server Sent Event不断发送新的上传地址。

h5tv.erl

-module(h5tv).

-export([start/0, stop/0, init/0]).

start() ->

Pid = spawn(?MODULE, init, []),

register(?MODULE, Pid).

stop() ->

?MODULE ! stop.

init() ->

h5tv_tcp_listener:start_link(

8000,

[binary, {active, false}, {packet, http_bin}, {reuseaddr, true}],

{h5tv_storage_connection, start, []}),

h5tv_tcp_listener:start_link(

8001,

[binary, {active, false}, {packet, http_bin}, {reuseaddr, true}],

{h5tv_live_connection, start, []}),

h5tv_channel_manager:start_link(),

receive

stop ->

exit(shutdown)

end.

h5tv_channel_manager.erl,仅仅是分配一个Channel编号,并把信息存入ets。因为这是一个named_table,所以怎么读就不用管了。

-module(h5tv_channel_manager).

-behaviour(gen_server).

-export([start_link/0]).

-export([init/1, handle_call/3, handle_cast/2, handle_info/2,

terminate/2, code_change/3]).

-define(SERVER, ?MODULE).

start_link() ->

gen_server:start_link({local, ?SERVER}, ?MODULE, [], []).

init([]) ->

ets:new(

h5tv_channels,

[named_table, set, protected, {read_concurrency, true}]),

{ok, 1}.

handle_call({create_channel, Name, Timestamp}, _From, NextId) ->

ets:insert_new(h5tv_channels, {NextId, Name, Timestamp}),

{reply, NextId, NextId + 1}.

handle_cast(_Msg, State) ->

{noreply, State}.

handle_info(_Info, State) ->

{noreply, State}.

terminate(_Reason, _State) ->

ok.

code_change(_OldVsn, State, _Extra) ->

{ok, State}.

h5tv_tcp_listener.erl,只管accept连接,并spawn process来处理。

-module(h5tv_tcp_listener).

-export([start_link/3, init/3]).

start_link(Port, Options, Handler) ->

spawn_link(?MODULE, init, [Port, Options, Handler]).

init(Port, Options, Handler) ->

{ok, Sock} = gen_tcp:listen(Port, Options),

loop(Sock, Handler).

loop(Sock, Handler = {M, F, A}) ->

{ok, Conn} = gen_tcp:accept(Sock),

Pid = spawn(M, F, [Conn|A]),

gen_tcp:controlling_process(Conn, Pid),

Pid ! continue,

loop(Sock, Handler).

h5tv_storage_connection.erl 。文件路径以当前目录下的static为根目录。

需要注意的是,这里会自动把 / 当成 /index.html

-module(h5tv_storage_connection).

-export([start/1]).

start(Conn) ->

handle_request(Conn, h5tv_http_util:read_http_headers(Conn)).

content_type(<<".html">>) ->

<<"text/html; charset=utf-8">>;

content_type(<<".js">>) ->

<<"application/javascript; charset=utf-8">>;

content_type(<<".json">>) ->

<<"application/json; charset=utf-8">>;

content_type(<<".css">>) ->

<<"text/css; charset=utf-8">>;

content_type(_) ->

<<"application/octet-stream">>.

handle_request(Conn, {'GET', {abs_path, <<"/">>}, Version, Headers}) ->

handle_request(Conn, {'GET', {abs_path, <<"/index.html">>}, Version, Headers});

handle_request(Conn, {'GET', {abs_path, <<"/", Name/binary>>}, _, _Headers}) ->

[Path|_] = binary:split(Name, <<"?">>),

case file:read_file(<<"static/", Path/binary>>) of

{ok, Bin} ->

h5tv_http_util:http_response(

Conn,

200,

content_type(filename:extension(Path)),

Bin);

{error, _} ->

h5tv_http_util:http_response(

Conn, 404, "text/html", <<"<h1>404 Not Found</h1>">>)

end;

handle_request(Conn, {'PUT', {abs_path, <<"/", Name/binary>>}, _, Headers}) ->

[Name1|_] = binary:split(Name, <<"?">>),

Path = <<"static/", Name1/binary>>,

Dirname = filename:dirname(Path),

case file:make_dir(Dirname) of

ok ->

ok;

{error, eexist} ->

ok

end,

{ok, File} = file:open(Path, [write, binary]),

Size = binary_to_integer(proplists:get_value('Content-Length', Headers)),

write_file(File, Conn, Size);

handle_request(Conn, Request) ->

io:format("Unknown Request: ~p~n", [Request]),

h5tv_http_util:http_response(

Conn, 400, "text/html", <<"<h1>400 Bad Request</h1>">>).

write_file(File, Conn, 0) ->

file:close(File),

h5tv_http_util:http_response(

Conn,

200,

"text/plain",

"OK");

write_file(File, Conn, Size) ->

case gen_tcp:recv(Conn, 0) of

{ok, Bin} ->

file:write(File, Bin),

write_file(File, Conn, Size-byte_size(Bin));

{error, closed} ->

file:close(File)

end.

h5tv_live_connection.erl 。这里值得注意的是把时钟间隔设置成了 2.5s ,这样很多时间戳会出现两次。

-module(h5tv_live_connection).

-export([start/1]).

start(Conn) ->

handle_request(Conn, h5tv_http_util:read_http_headers(Conn)).

handle_request(Conn, {'GET', {abs_path, <<"/">>}, _Version, _Headers}) ->

Body =

[ "[",

string:join(

[ io_lib:format("{\"id\": ~p, \"name\": \"~s\"}", [Id, Name])

|| [{Id, Name, _}] <- ets:match(h5tv_channels, '$1')

],

", "),

"]"],

h5tv_http_util:http_response(

Conn, 200, "application/json", Body);

handle_request(Conn, {'GET', {abs_path, <<"/timestamp">>}, _Version, _Headers}) ->

h5tv_http_util:http_response(

Conn,

200,

"application/json",

get_timestamp());

handle_request(Conn, {'GET', {abs_path, <<"/timestamp/", ID/binary>>}, _Version, _Headers}) ->

[{_,_,Timestamp}] = ets:lookup(h5tv_channels, binary_to_integer(ID)),

h5tv_http_util:http_response(

Conn,

200,

"application/json",

Timestamp);

handle_request(Conn, {'GET', {abs_path, <<"/live/", Name/binary>>}, _Version, _Headers}) ->

timer:send_interval(2500, refresh),

Timestamp = get_timestamp(),

ChannelId = gen_server:call(h5tv_channel_manager, {create_channel, Name, Timestamp}),

gen_tcp:send(

Conn,

[<<"HTTP/1.1 200 OK\r\n">>,

<<"Connection: close\r\n">>,

<<"Content-Type: text/event-stream\r\n">>,

<<"Access-Control-Allow-Origin: *\r\n">>,

<<"Transfer-Encoding: chunked">>,

<<"\r\n\r\n">>]),

send_header_path(Conn, ChannelId),

send_timestamp(Conn, ChannelId, Timestamp),

loop(Conn, ChannelId);

handle_request(Conn, Request) ->

io:format("Unknown Request: ~p~n", [Request]),

h5tv_http_util:http_response(

Conn, 400, "text/html", <<"<h1>400 Bad Request</h1>">>).

loop(Conn, ChannelId) ->

receive

refresh ->

send_timestamp(Conn, ChannelId)

end,

loop(Conn, ChannelId).

send_header_path(Conn, ChannelId) ->

send_chunked(

Conn,

io_lib:format(

"data: ~p/header.webm\r\n\r\n",

[ChannelId])).

send_timestamp(Conn, ChannelId) ->

send_timestamp(Conn, ChannelId, get_timestamp()).

send_timestamp(Conn, ChannelId, Timestamp) ->

send_chunked(

Conn,

io_lib:format(

"data: ~p/~s.webm\r\n\r\n",

[ChannelId, Timestamp])).

get_timestamp() ->

{M, S, _} = os:timestamp(),

TS = M * 1000000 + S,

integer_to_list((TS div 5) * 5, 10).

send_chunked(Conn, Data) ->

gen_tcp:send(

Conn,

[integer_to_list(iolist_size(Data), 16),

"\r\n",

Data,

"\r\n"]).

h5tv_http_util.erl 。这是接收Header,还有发送普通的Response。

-module(h5tv_http_util).

-export(

[ read_http_headers/1,

http_response/4]).

read_http_headers(Conn) ->

receive

continue ->

ok

after 5000 ->

throw(timeout)

end,

{ok, {http_request, Method, Path, Version}} = gen_tcp:recv(Conn, 0),

Headers = recv_headers(Conn),

ok = inet:setopts(Conn, [{packet, raw}]),

{Method, Path, Version, Headers}.

recv_headers(Conn) ->

case gen_tcp:recv(Conn, 0) of

{ok, {http_header, _, Field, _, Value}} ->

[{Field, Value}|recv_headers(Conn)];

{ok, http_eoh} ->

[]

end.

http_response(Conn, Code, ContentType, Body) ->

ok =

gen_tcp:send(

Conn,

[<<"HTTP/1.1 ">>, integer_to_list(Code), <<" ">>, httpd_util:reason_phrase(Code), <<"\r\n">>,

<<"Connection: close\r\n">>,

<<"Access-Control-Allow-Origin: *\r\n">>,

<<"Content-Type: ">>, ContentType, <<"\r\n">>,

<<"Content-Length: ">>, integer_to_list(iolist_size(Body)),

<<"\r\n\r\n">>, Body]),

ok = gen_tcp:close(Conn).

为了偷懒,直接把 html 和 javascript 都丢在 static 目录下。

index.html

<!doctype html>

<html>

<head>

<title>hhhhh TV</title>

<script type="application/javascript;version=1.8" src="channel-list.js"></script>

<body>

<form action="/studio.html" method="GET">

<input name="name" value="" />

<input type="submit" value="我要直播" />

</form>

<h2>正在直播</h2>

<div id="results">

</div>

</body>

</html>

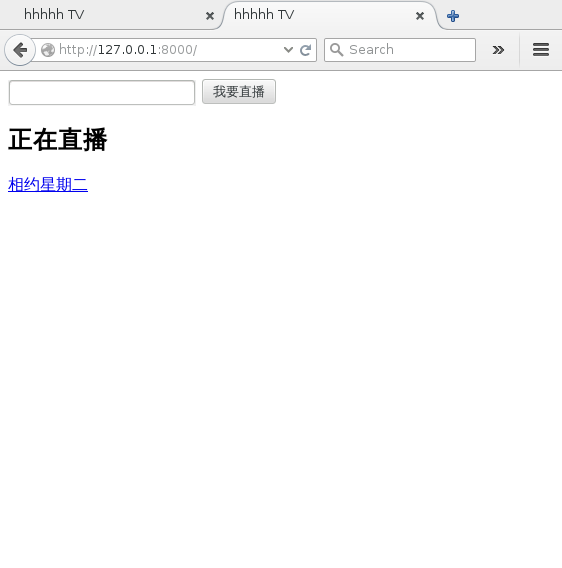

点 我要直播 之后,就到了studio.html,要注意,这里用的method是GET,studio.html的javascript会自己把 ?name=xxx 里的 xxx 取出来,拼成 http://127.0.0.1:8001/live/xxx 而服务端直接把这个名字存下来并返回了。

所以 channel-list.js 需要先 decodeURIComponent

function onload() {

var resultsElem = document.getElementById("results");

var xhr = new XMLHttpRequest()

xhr.open("GET", "http://127.0.0.1:8001/");

xhr.responseType = "json";

xhr.addEventListener(

"load",

function () {

for (channel of xhr.response) {

var aElem = document.createElement("a");

aElem.href = "/watch.html?" + channel.id;

aElem.appendChild(document.createTextNode(decodeURIComponent(channel.name)));

resultsElem.appendChild(aElem);

}

}

);

xhr.send();

}

window.addEventListener("load", onload);

而播放页面也是类似的。

studio.html, watch.html和之前的media.html除了javascript地址不一样,其他完全一样。

studio.js除了第一个buffer要分成两部分以外,其他都是从EventSource里拿到一个路径之后就可以立即开始上传了。

"use strict";

function get_vint_length(c) {

var i;

for (i=9; c>0; i--) {

c = c >> 1;

}

return i;

}

function get_vint_value(array, offset, length) {

var v = array[offset] - (1 << (8 - length));

for (var i=1; i<length; i++) {

v = (v << 8) + array[offset+i];

}

return v;

}

function parse_header_size(buffer) {

var view = new DataView(buffer);

var array = new Uint8Array(buffer);

if (view.getUint32(0, false) !== 0x1a45dfa3) {

throw "EBML Element ID not found";

}

if (array[4] === 0) {

throw "Bad EBML Size";

}

var length = get_vint_length(array[4]);

var ebml_size = get_vint_value(array, 4, length);

var segment_offset = 4 + length + ebml_size;

if (view.getUint32(segment_offset, false) !== 0x18538067) {

throw "Segment Element ID not found";

}

var length = get_vint_length(array[segment_offset+4]);

var offset = segment_offset + 4 + length;

while (view.getUint32(offset, false) != 0x1F43B675) {

offset += get_vint_length(array[offset]);

var length = get_vint_length(array[offset]);

var size = get_vint_value(array, offset, length);

offset += length + size;

}

return offset;

}

function onload() {

var videoElem = videoElem = document.getElementById("video");

var buffers = [];

var paths = [];

var uploading = false;

var header_uploaded = false;

function do_upload(path, data) {

var xhr = new XMLHttpRequest();

xhr.open("PUT", path);

xhr.addEventListener(

"loadend",

function() {

uploading = false;

notify();

}

);

xhr.send(data);

}

function upload() {

uploading = true;

var path = paths.shift();

var buffer = buffers.shift();

if (header_uploaded) {

do_upload(path, buffer);

return

}

header_uploaded = true;

var reader = new FileReader();

reader.addEventListener(

"load",

function() {

var header_size = parse_header_size(this.result);

var header_buffer = this.result.slice(0, header_size);

buffers.unshift(this.result.slice(header_size));

do_upload(path, header_buffer);

}

);

reader.readAsArrayBuffer(buffer);

}

function notify() {

if (uploading)

return;

if (buffers.length === 0)

return;

if (paths.length === 0)

return;

upload();

}

navigator.mediaDevices.getUserMedia(

{ video: true }

).then(

function(stream) {

videoElem.src = URL.createObjectURL(stream);

videoElem.play();

var mediaRecorder = new MediaRecorder(stream);

mediaRecorder.addEventListener(

"dataavailable",

function(event) {

buffers.push(event.data);

notify();

}

);

var name = window.location.search.slice(6);

var eventSource = new EventSource("http://127.0.0.1:8001/live/" + name);

eventSource.addEventListener(

"open",

function(event) {

mediaRecorder.start(5000);

}

);

eventSource.addEventListener(

"message",

function(event) {

if (paths.indexOf(event.data) == -1) {

paths.push(event.data);

notify();

}

}

);

}

);

}

window.addEventListener("load", onload);

watch.js 比之前复杂的地方就在于要减一下,计算时间戳的差值作为初始的 currentTime

"use strict";

function onload() {

var videoElem = document.getElementById("video");

var mediaSource = new MediaSource();

videoElem.src = URL.createObjectURL(mediaSource);

var channel = window.location.search.slice(1);

var sourceBuffer;

var loading = false;

var updating = false;

var loaded_timestamp;

var current_timestamp;

var buffers = [];

var offset_loaded = false;

var timeoffset;

var started_play = false;

var buffer_count = 0;

function do_load(path) {

var xhr = new XMLHttpRequest();

xhr.open("GET", path);

xhr.responseType = "arraybuffer";

xhr.addEventListener(

"load",

function() {

loading = false;

buffers.push(this.response);

notify_buffer_update();

notify_load();

if (started_play) {

return;

}

buffer_count += 1;

if (buffer_count > 3) {

videoElem.currentTime = timeoffset;

videoElem.play();

started_play = true;

}

}

);

xhr.send();

}

function notify_load() {

if (loading) {

return;

}

if (loaded_timestamp >= current_timestamp) {

return;

}

loading = true;

loaded_timestamp += 5;

do_load("/" + channel + "/" + loaded_timestamp + ".webm");

}

function notify_buffer_update() {

if (updating) {

return;

}

if (buffers.length === 0) {

return;

}

updating = true;

var buffer = buffers.shift();

sourceBuffer.appendBuffer(buffer);

}

function on_init_timestamp_load() {

var init_timestamp = this.response;

var xhr = new XMLHttpRequest();

xhr.open("GET", "http://127.0.0.1:8001/timestamp");

xhr.responseType = "json";

xhr.addEventListener(

"load",

function() {

current_timestamp = this.response - 10;

setInterval(

function() {

current_timestamp += 5;

notify_load();

},

5000);

loading = true;

loaded_timestamp = current_timestamp - 15;

timeoffset = loaded_timestamp - init_timestamp + 5;

do_load("/" + channel + "/header.webm");

}

);

xhr.send();

}

mediaSource.addEventListener(

"sourceopen",

function() {

sourceBuffer = this.addSourceBuffer('video/webm; codecs="vp8,vorbis"');

sourceBuffer.addEventListener(

'updateend',

function () {

updating = false;

notify_buffer_update();

}

);

if (offset_loaded) {

return;

}

offset_loaded = true;

var xhr = new XMLHttpRequest();

xhr.open("GET", "http://127.0.0.1:8001/timestamp/" + channel);

xhr.responseType = "json";

xhr.addEventListener("load", on_init_timestamp_load);

xhr.send();

}

);

}

window.addEventListener("load", onload);

启动服务端

$ erl -make

$ erl

1> h5tv:start().

true

2>

在输入框里填入channel名之后,点我要直播,就开始直播了

在一个新标签页里打开首页,就能看到刚刚开始播出的频道

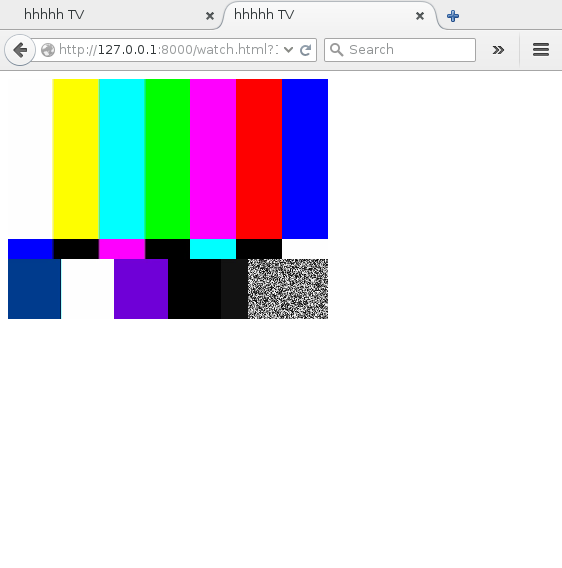

点进去之后,就可以看到直播的内容了

太棒了。15分钟就能开发出一个HTML5视频直播网站了。

------------------------------------------------------

【关注微信公众号二维码欢迎大家一起交流】

广告时间:

正在考虑要不要写一本《7天自制H5视频直播网站》,里面的例子当然会更实际一点。假如你是云存储/CDN厂商,你可以赞助钱以及免费帐号,这样书里面就可以以你们的服务为例子了。假如你是出版社,这可能是2016年网站开发里最火爆的烂书了,还不赶紧私信联系。

转载自:https://zhuanlan.zhihu.com/p/20325539

点了按钮之后,视频就开始播放了

点了按钮之后,视频就开始播放了

点进去之后,就可以看到直播的内容了

点进去之后,就可以看到直播的内容了 太棒了。15分钟就能开发出一个HTML5视频直播网站了。

太棒了。15分钟就能开发出一个HTML5视频直播网站了。

937

937

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?