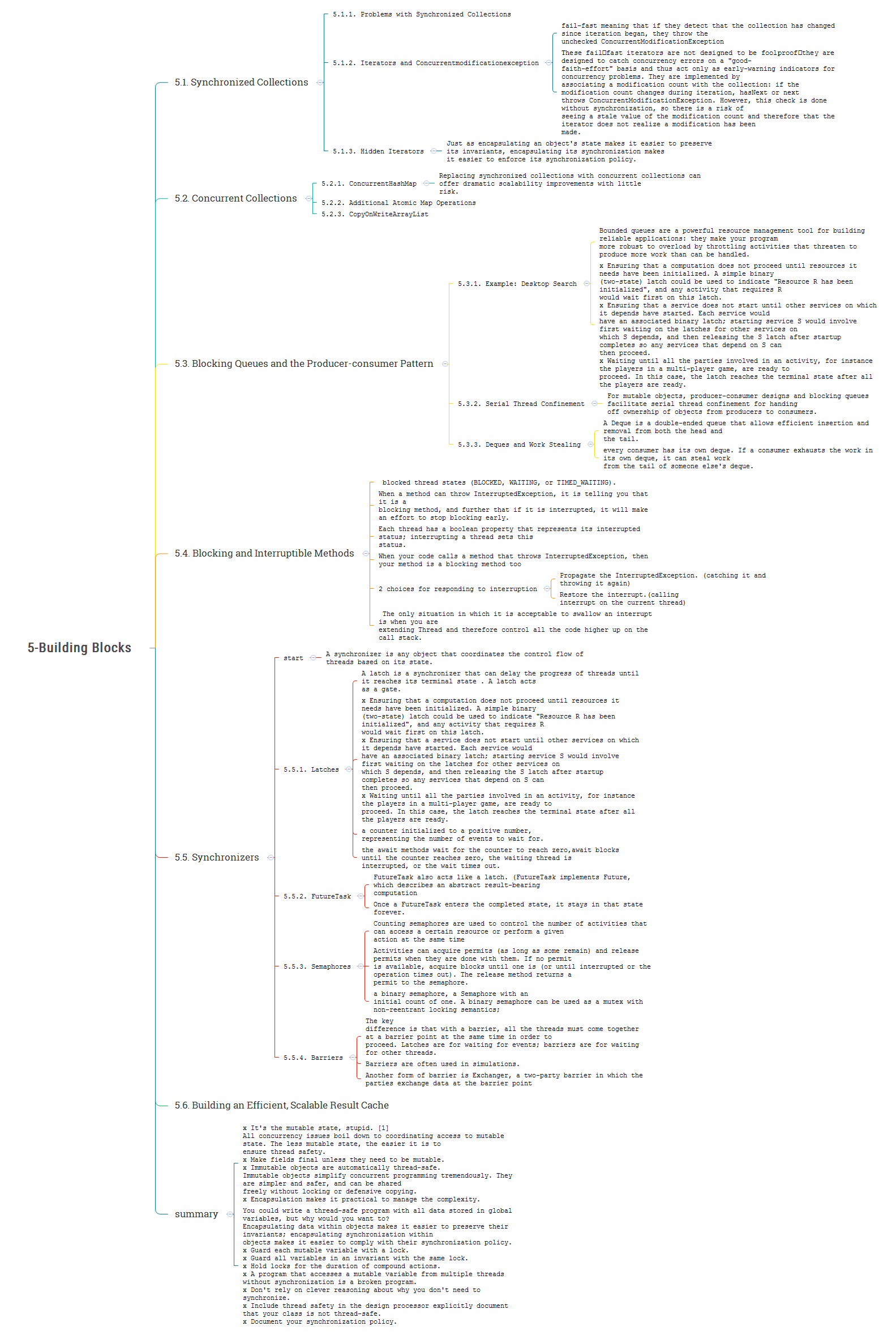

5-Building Blocks

5.1. Synchronized Collections

5.1.1. Problems with Synchronized Collections

5.1.2. Iterators and ConcurrentModificationException

The iterators returned by the synchronized collections are fail-fast, meaning that if they detect that the collection has changed since iteration began, they throw the unchecked ConcurrentModificationException.

These fail-fast iterators are not designed to be foolproof; they are designed to catch concurrency errors on a “good-faith-effort” basis and thus act only as early-warning indicators for concurrency problems. They are implemented by associating a modification count with the collection: if the modification count changes during iteration, hasNext or next throws ConcurrentModificationException. However, this check is done without synchronization, so there is a risk of seeing a stale value of the modification count, in which case the iterator does not realize a modification has been made.
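The modification-count check can be seen even in a single thread. The sketch below is an illustration only: the class and method names are made up for this example. It removes an element from an ArrayList while a for-each loop is iterating over it.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.ConcurrentModificationException;
import java.util.List;

// Hypothetical demo class; not taken from the original notes.
final class FailFastDemo {
    /** Returns true if changing the list during iteration tripped the fail-fast check. */
    static boolean modifyWhileIterating() {
        List<Integer> numbers = new ArrayList<>(Arrays.asList(1, 2, 3, 4));
        try {
            for (Integer n : numbers) {
                if (n == 1) {
                    // Structural change made behind the iterator's back;
                    // remove(Object) bumps the list's modification count.
                    numbers.remove(n);
                }
            }
            return false;
        } catch (ConcurrentModificationException e) {
            // The next call to the iterator's next() saw the changed count.
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println("CME thrown: " + modifyWhileIterating());
    }
}
```

Because the check is best-effort, code should not depend on the exception being thrown; some modifications (for example, removing the second-to-last element) can slip past it.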

5.1.3. Hidden Iterators

Just as encapsulating an object’s state makes it easier to preserve its invariants, encapsulating its synchronization makes it easier to enforce its synchronization policy.
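As a minimal sketch of that idea, the hypothetical class below keeps a set private and guards every access with the same lock, including the string concatenation that iterates the set implicitly through toString. The class and method names are assumptions made for illustration.

```java
import java.util.HashSet;
import java.util.Set;

// Hypothetical example class; the set never escapes, so the lock policy is enforced in one place.
final class GuardedSet {
    private final Set<Integer> set = new HashSet<>(); // guarded by "this"

    public synchronized void add(int value) {
        set.add(value);
    }

    public synchronized int size() {
        return set.size();
    }

    /**
     * The concatenation below calls set.toString(), which iterates the set.
     * Holding the lock here covers that hidden iteration.
     */
    public synchronized String describe() {
        return "contents: " + set;
    }

    public static void main(String[] args) {
        GuardedSet guarded = new GuardedSet();
        guarded.add(7);
        System.out.println(guarded.describe());
    }
}
```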

5.2. Concurrent Collections

5.2.1. ConcurrentHashMap

Replacing synchronized collections with concurrent collections can offer dramatic scalability improvements with little risk.

5.2.2. Additional Atomic Map Operations
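A short sketch of the atomic compound operations that the ConcurrentMap interface provides (putIfAbsent, the two-argument remove, and the three-argument replace). The map contents and the class name are made up for illustration.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

// Hypothetical demo class showing check-then-act operations done atomically by the map.
final class AtomicMapOps {
    static String demo() {
        ConcurrentMap<String, Integer> hits = new ConcurrentHashMap<>();

        Integer previous = hits.putIfAbsent("home", 1);    // null: key was absent, value inserted
        Integer existing = hits.putIfAbsent("home", 99);   // 1: key present, nothing changed
        boolean replaced = hits.replace("home", 1, 2);     // true: current value matched 1
        boolean staleReplace = hits.replace("home", 1, 3); // false: current value is now 2
        boolean removed = hits.remove("home", 5);          // false: value does not match

        return previous + "," + existing + "," + replaced + ","
                + staleReplace + "," + removed + "," + hits.get("home");
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

Each call is a single atomic step, so no client-side locking is needed to make these check-then-act sequences safe.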

5.2.3. CopyOnWriteArrayList
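As a brief illustration, a CopyOnWriteArrayList iterator works on the snapshot of the backing array that existed when the iterator was created, so the list can be modified during iteration without a ConcurrentModificationException. The class name and the element values below are assumptions for this sketch.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

// Hypothetical demo class.
final class SnapshotIteration {
    /** Returns {elements seen by the loop, final list size}. */
    static int[] iterateWhileAdding() {
        List<String> listeners = new CopyOnWriteArrayList<>(Arrays.asList("a", "b"));
        int seen = 0;
        for (String listener : listeners) {
            // Each write copies the backing array; the running iterator keeps its old snapshot.
            listeners.add(listener + "-copy");
            seen++;
        }
        return new int[] { seen, listeners.size() };
    }

    public static void main(String[] args) {
        int[] result = iterateWhileAdding();
        System.out.println("seen=" + result[0] + ", size=" + result[1]);
    }
}
```

Copying on every write is only reasonable when iteration is far more common than modification, for example with listener lists.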

5.3. Blocking Queues and the Producer-Consumer Pattern

5.3.1. Example: Desktop Search

Bounded queues are a powerful resource management tool for building reliable applications: they make your program more robust to overload by throttling activities that threaten to produce more work than can be handled.
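The throttling effect can be sketched with an ArrayBlockingQueue. In this hypothetical example (class and method names are assumptions), put blocks the producer whenever the queue is full, while offer reports failure instead of blocking.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Hypothetical demo class.
final class BoundedQueueDemo {
    /** Produces 1..items on one thread, consumes on the caller, and returns the sum consumed. */
    static int consumeAll(int items, int capacity) throws InterruptedException {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(capacity);
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= items; i++) {
                    queue.put(i); // blocks while the queue is full, throttling the producer
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // restore the status and stop producing
            }
        });
        producer.start();

        int sum = 0;
        for (int i = 0; i < items; i++) {
            sum += queue.take(); // blocks while the queue is empty
        }
        producer.join();
        return sum;
    }

    /** offer does not block: it returns false when the queue is already full. */
    static boolean offerToFullQueue() {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(1);
        queue.offer("first");
        return queue.offer("second");
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("sum = " + consumeAll(100, 2));
        System.out.println("offer on full queue = " + offerToFullQueue());
    }
}
```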

5.3.2. Serial Thread Confinement

For mutable objects, producer-consumer designs and blocking queues facilitate serial thread confinement for handing off ownership of objects from producers to consumers.

5.3.3. Deques and Work Stealing

A Deque is a double-ended queue that allows efficient insertion and removal from both the head and the tail.

In a work-stealing design, every consumer has its own deque. If a consumer exhausts the work in its own deque, it can steal work from the tail of someone else’s deque.
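The take-from-own-head, steal-from-other-tail rule can be sketched as a small helper. This is an illustrative single-method sketch, not a full scheduler; the class name, method name, and task strings are assumptions.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.Deque;
import java.util.List;
import java.util.concurrent.ConcurrentLinkedDeque;

// Hypothetical sketch of the work-stealing lookup order.
final class WorkStealingSketch {
    /** Takes from the head of the worker's own deque; if empty, steals from the tail of another deque. */
    static String nextTask(Deque<String> own, List<Deque<String>> others) {
        String task = own.pollFirst();
        if (task != null) {
            return task;
        }
        for (Deque<String> victim : others) {
            task = victim.pollLast(); // steal from the end the owner is not working on
            if (task != null) {
                return task;
            }
        }
        return null; // no work anywhere
    }

    public static void main(String[] args) {
        Deque<String> a = new ConcurrentLinkedDeque<>(Arrays.asList("a1"));
        Deque<String> b = new ConcurrentLinkedDeque<>(Arrays.asList("b1", "b2", "b3"));
        System.out.println(nextTask(a, Collections.singletonList(b))); // own work first
        System.out.println(nextTask(a, Collections.singletonList(b))); // then a stolen task
    }
}
```

Stealing from the tail keeps thieves away from the head that the owner is using, which reduces contention compared with a single shared queue.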

5.4. Blocking and Interruptible Methods

When a thread blocks, it is usually suspended and placed in one of the blocked thread states (BLOCKED, WAITING, or TIMED_WAITING).

When a method can throw InterruptedException, it is telling you that it is a blocking method, and further that if it is interrupted, it will make an effort to stop blocking early.

Each thread has a boolean property that represents its interrupted status; interrupting a thread sets this status.

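When your code calls a method that throws InterruptedException, then your method is a blocking method too, and must have a plan for responding to interruption.

There are two basic choices for responding to interruption:

- Propagate the InterruptedException, either by not catching it at all or by catching it and throwing it again after some brief cleanup.
- Restore the interrupt by calling interrupt on the current thread, so that code higher up the call stack can see that an interrupt was issued.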

The only situation in which it is acceptable to swallow an interrupt is when you are extending Thread and therefore control all the code higher up on the call stack.
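Both responses to interruption can be sketched as follows. The class and method names are hypothetical; the only library calls used are BlockingQueue.take, Thread.interrupt, and Thread.isInterrupted.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.atomic.AtomicBoolean;

// Hypothetical demo class.
final class InterruptHandling {
    /** Choice 1: propagate. Declaring the exception makes this method a blocking method too. */
    static String takeNext(BlockingQueue<String> queue) throws InterruptedException {
        return queue.take();
    }

    /** Choice 2: restore. Used where the exception cannot be thrown, e.g. inside Runnable.run. */
    static void drain(BlockingQueue<String> queue) {
        try {
            while (true) {
                queue.take();
            }
        } catch (InterruptedException e) {
            // take() cleared the status when it threw; set it again for callers to see.
            Thread.currentThread().interrupt();
        }
    }

    /** Interrupts a worker blocked in drain and reports whether its status was restored. */
    static boolean statusRestoredAfterInterrupt() throws InterruptedException {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>();
        AtomicBoolean restored = new AtomicBoolean(false);
        Thread worker = new Thread(() -> {
            drain(queue);
            restored.set(Thread.currentThread().isInterrupted());
        });
        worker.start();
        worker.interrupt();
        worker.join();
        return restored.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("status restored: " + statusRestoredAfterInterrupt());
    }
}
```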

5.5. Synchronizers

A synchronizer is any object that coordinates the control flow of threads based on its state.

5.5.1. Latches

A latch is a synchronizer that can delay the progress of threads until it reaches its terminal state. A latch acts as a gate: until the latch reaches the terminal state the gate is closed and no thread can pass, and in the terminal state the gate opens, allowing all threads to pass. Once open, it stays open.

Latches can be used to ensure that certain activities do not proceed until other one-time activities complete, such as:

- Ensuring that a computation does not proceed until resources it needs have been initialized. A simple binary (two-state) latch could be used to indicate “Resource R has been initialized”, and any activity that requires R would wait first on this latch.
- Ensuring that a service does not start until other services on which it depends have started. Each service would have an associated binary latch; starting service S would involve first waiting on the latches for other services on which S depends, and then releasing the S latch after startup completes so any services that depend on S can then proceed.
- Waiting until all the parties involved in an activity, for instance the players in a multi-player game, are ready to proceed. In this case, the latch reaches the terminal state after all the players are ready.

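CountDownLatch is a flexible latch implementation. The latch state consists of a counter initialized to a positive number, representing the number of events to wait for. The countDown method decrements the counter, indicating that an event has occurred, and the await methods wait for the counter to reach zero. If the counter is nonzero on entry, await blocks until the counter reaches zero, the waiting thread is interrupted, or the wait times out.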
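A minimal sketch of two CountDownLatch instances used as a starting gate and an ending gate. The class, method, and variable names are assumptions chosen for this illustration.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class.
final class LatchDemo {
    /** Releases all workers at once, waits for them to finish, and returns how many ran. */
    static int runWorkers(int nThreads) throws InterruptedException {
        CountDownLatch startGate = new CountDownLatch(1);      // one event: "go"
        CountDownLatch endGate = new CountDownLatch(nThreads); // one event per worker
        AtomicInteger completed = new AtomicInteger();

        for (int i = 0; i < nThreads; i++) {
            new Thread(() -> {
                try {
                    startGate.await();           // wait until the gate opens
                    completed.incrementAndGet(); // the "work"
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    endGate.countDown();         // signal that this worker is done
                }
            }).start();
        }

        startGate.countDown(); // open the gate for every worker
        endGate.await();       // block until the counter reaches zero
        return completed.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("workers completed: " + runWorkers(4));
    }
}
```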

5.5.2. FutureTask

FutureTask also acts like a latch. (FutureTask implements Future, which describes an abstract result-bearing computation.)

Once a FutureTask enters the completed state, it stays in that state forever.
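The latch-like behavior shows up in Future.get, which blocks until the task completes and returns immediately afterwards. A small sketch, with a made-up computation and class name:

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.FutureTask;

// Hypothetical demo class.
final class FutureTaskDemo {
    static int compute() throws InterruptedException, ExecutionException {
        Callable<Integer> work = () -> 6 * 7; // stand-in for an expensive computation
        FutureTask<Integer> task = new FutureTask<>(work);

        new Thread(task).start(); // FutureTask is a Runnable, so a thread can run it

        int first = task.get();   // blocks until the computation completes
        int second = task.get();  // already completed: returns the same result at once
        if (!task.isDone() || first != second) {
            throw new IllegalStateException("a completed task should stay completed");
        }
        return first;
    }

    public static void main(String[] args) throws Exception {
        System.out.println("result = " + compute());
    }
}
```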

5.5.3. Semaphores

Counting semaphores are used to control the number of activities that can access a certain resource or perform a given action at the same time.

Activities can acquire permits (as long as some remain) and release permits when they are done with them. If no permit is available, acquire blocks until one is (or until interrupted or the operation times out). The release method returns a permit to the semaphore.

A degenerate case of a counting semaphore is a binary semaphore, a Semaphore with an initial count of one. A binary semaphore can be used as a mutex with non-reentrant locking semantics: whoever holds the sole permit holds the mutex.
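The permit accounting can be sketched with the non-blocking tryAcquire, which makes the behavior visible without extra threads. The class and method names are assumptions for this illustration.

```java
import java.util.concurrent.Semaphore;

// Hypothetical demo class.
final class SemaphoreDemo {
    /** Two permits: the third acquisition fails until a permit is released. */
    static String twoPermits() {
        Semaphore permits = new Semaphore(2);
        boolean first = permits.tryAcquire();
        boolean second = permits.tryAcquire();
        boolean third = permits.tryAcquire();  // no permit left
        permits.release();                     // return one permit
        boolean fourth = permits.tryAcquire(); // succeeds again
        return first + "," + second + "," + third + "," + fourth;
    }

    /** A binary semaphore is not reentrant: the holder cannot acquire it a second time. */
    static boolean secondAcquireBySameThread() {
        Semaphore mutex = new Semaphore(1);
        mutex.tryAcquire();
        return mutex.tryAcquire();
    }

    public static void main(String[] args) {
        System.out.println(twoPermits());
        System.out.println("reacquired: " + secondAcquireBySameThread());
    }
}
```

With the blocking acquire method in place of tryAcquire, the third caller would wait for a release instead of failing.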

5.5.4. Barriers

Barriers are similar to latches in that they block a group of threads until some event has occurred. The key difference is that with a barrier, all the threads must come together at a barrier point at the same time in order to proceed. Latches are for waiting for events; barriers are for waiting for other threads.

Barriers are often used in simulations.

Another form of barrier is Exchanger, a two-party barrier in which the parties exchange data at the barrier point.
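A sketch of a step-wise simulation using CyclicBarrier, one common barrier implementation in java.util.concurrent. Every worker must reach the barrier before any of them starts the next step; the barrier action counts completed steps. Class and variable names are hypothetical.

```java
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CyclicBarrier;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class.
final class BarrierDemo {
    /** Runs the given number of steps on each worker and returns how many times the barrier tripped. */
    static int runSteps(int workers, int steps) throws InterruptedException {
        AtomicInteger completedSteps = new AtomicInteger();
        // The barrier action runs once per step, after the last worker arrives.
        CyclicBarrier barrier = new CyclicBarrier(workers, completedSteps::incrementAndGet);

        Thread[] threads = new Thread[workers];
        for (int i = 0; i < workers; i++) {
            threads[i] = new Thread(() -> {
                try {
                    for (int step = 0; step < steps; step++) {
                        // ... compute this worker's part of the step here ...
                        barrier.await(); // wait for every other worker
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } catch (BrokenBarrierException e) {
                    // Another worker was interrupted or timed out; abandon the run.
                }
            });
            threads[i].start();
        }
        for (Thread thread : threads) {
            thread.join();
        }
        return completedSteps.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("steps completed: " + runSteps(3, 4));
    }
}
```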

5.6. Building an Efficient, Scalable Result Cache
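One way such a cache might be built from the pieces above is sketched here, under stated assumptions: a ConcurrentHashMap holds a Future per argument, and putIfAbsent ensures that only one thread starts a given computation while others wait on its Future. The class name Memoizer, the use of java.util.function.Function for the computation, and the eviction of failed entries are choices made for this sketch.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;
import java.util.concurrent.FutureTask;
import java.util.function.Function;

// Hypothetical sketch of a concurrent result cache.
final class Memoizer<A, V> {
    private final ConcurrentMap<A, Future<V>> cache = new ConcurrentHashMap<>();
    private final Function<A, V> computation;

    Memoizer(Function<A, V> computation) {
        this.computation = computation;
    }

    V compute(A arg) throws InterruptedException, ExecutionException {
        Future<V> future = cache.get(arg);
        if (future == null) {
            Callable<V> work = () -> computation.apply(arg);
            FutureTask<V> task = new FutureTask<>(work);
            future = cache.putIfAbsent(arg, task); // atomic: only one task wins per argument
            if (future == null) {
                future = task;
                task.run(); // this thread performs the computation
            }
        }
        try {
            return future.get(); // other threads block here until the result is ready
        } catch (ExecutionException e) {
            cache.remove(arg, future); // do not keep a failed computation in the cache
            throw e;
        }
    }

    public static void main(String[] args) throws Exception {
        Memoizer<Integer, Integer> squares = new Memoizer<>(x -> x * x);
        System.out.println(squares.compute(4));
        System.out.println(squares.compute(4)); // served from the cache
    }
}
```

Caching the Future rather than the value lets a second caller discover that a computation is already in progress instead of starting a duplicate one.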

Summary

- It’s the mutable state, stupid. [1]

  All concurrency issues boil down to coordinating access to mutable state. The less mutable state, the easier it is to ensure thread safety.

- Make fields final unless they need to be mutable.

- Immutable objects are automatically thread-safe.

  Immutable objects simplify concurrent programming tremendously. They are simpler and safer, and can be shared freely without locking or defensive copying.

- Encapsulation makes it practical to manage the complexity.

  You could write a thread-safe program with all data stored in global variables, but why would you want to? Encapsulating data within objects makes it easier to preserve their invariants; encapsulating synchronization within objects makes it easier to comply with their synchronization policy.

- Guard each mutable variable with a lock.

- Guard all variables in an invariant with the same lock.

- Hold locks for the duration of compound actions.

- A program that accesses a mutable variable from multiple threads without synchronization is a broken program.

- Don’t rely on clever reasoning about why you don’t need to synchronize.

- Include thread safety in the design process, or explicitly document that your class is not thread-safe.

- Document your synchronization policy.
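Several of these rules can be seen together in one small class. The sketch below is a hypothetical example (the class name and the invariant lower <= upper are assumptions): both fields of the invariant are guarded by the same lock, each check-then-set is done while holding it, and the policy is documented in comments.

```java
// Hypothetical example. Synchronization policy: all state is guarded by the intrinsic lock on "this".
final class NumberRange {
    // Invariant: lower <= upper. Both fields are guarded by "this".
    private int lower = 0;
    private int upper = 0;

    /** The check and the write form one compound action, so the lock is held for both. */
    synchronized void setLower(int value) {
        if (value > upper) {
            throw new IllegalArgumentException("lower " + value + " > upper " + upper);
        }
        lower = value;
    }

    synchronized void setUpper(int value) {
        if (value < lower) {
            throw new IllegalArgumentException("upper " + value + " < lower " + lower);
        }
        upper = value;
    }

    /** Reads both fields under the same lock so it never sees a half-updated range. */
    synchronized boolean contains(int value) {
        return value >= lower && value <= upper;
    }

    public static void main(String[] args) {
        NumberRange range = new NumberRange();
        range.setUpper(10);
        range.setLower(5);
        System.out.println(range.contains(7));
    }
}
```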
