1. Steps for Setting Up the Hadoop Cluster Environment

(1) Download hadoop-2.7.1.tar.gz and save it to the /opt directory.

Download URL: http://mirror.bit.edu.cn/apache/hadoop/common/hadoop-2.7.1/hadoop-2.7.1.tar.gz

(2) Use the cd command to switch to the /opt directory.

(3) Use the tar command to extract hadoop-2.7.1.tar.gz.

[root@localhost opt]# tar -zxvf hadoop-2.7.1.tar.gz

After extraction succeeds, the directory structure is as shown in the figure below:

(4) Use the cd command to switch to the /opt/hadoop-2.7.1 directory.
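Steps (1) to (4) can also be run as one script. The sketch below assumes `wget` is installed and reuses the mirror URL from step (1). Mirrors often drop old releases, so the sketch lets you override the URL through a hypothetical `HADOOP_URL` variable. The function name `install_hadoop` is also hypothetical.

```shell
#!/usr/bin/env bash
# Steps (1)-(4) in one go. install_hadoop is a hypothetical helper name.
# The body is wrapped in ( ... ), so it runs in a subshell and its cd
# does not change the caller's working directory.
install_hadoop() (
  dest="${1:-/opt}"
  version="${2:-2.7.1}"
  tarball="hadoop-${version}.tar.gz"
  # Mirror URL from step (1); set HADOOP_URL if that mirror no longer has it.
  url="${HADOOP_URL:-http://mirror.bit.edu.cn/apache/hadoop/common/hadoop-${version}/${tarball}}"

  cd "$dest" || exit 1
  # Step (1): download only if the tarball is not already present.
  if [ ! -f "$tarball" ]; then
    # Remove a partial file on failure so the next run retries the download.
    wget -O "$tarball" "$url" || { rm -f "$tarball"; exit 1; }
  fi
  # Step (3): same as tar -zxvf, minus -v (the verbose file listing).
  tar -zxf "$tarball" || exit 1
  # The unpacked tree that step (4) switches into must now exist.
  [ -d "hadoop-${version}" ]
)
```

A typical call is `install_hadoop /opt 2.7.1 && cd /opt/hadoop-2.7.1`. The trailing `cd` is step (4), which the function deliberately leaves to the caller.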

(5) Use the mkdir command to create the following directories:

[root@localhost hadoop-2.7.1]# mkdir tmp

[root@localhost hadoop-2.7.1]# mkdir hdfs

[root@localhost hadoop-2.7.1]# mkdir hdfs/data

[root@localhost hadoop-2.7.1]# mkdir hdfs/name

After the directories are created, the directory structure is as shown in the figure below:

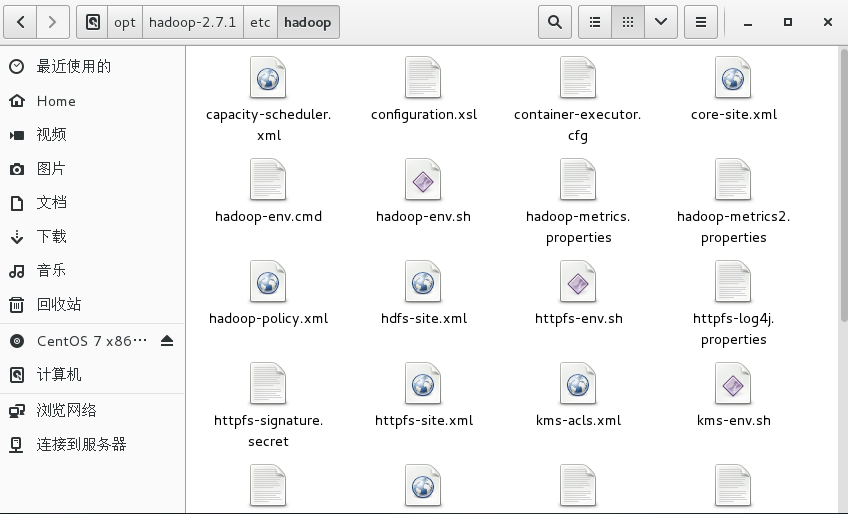
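The four mkdir calls in step (5) can be collapsed into a single command that is safe to re-run. The configuration files later point to these directories as hadoop.tmp.dir, dfs.namenode.name.dir and dfs.datanode.data.dir. `make_hadoop_dirs` is a hypothetical helper that defaults to the /opt/hadoop-2.7.1 path used in this guide.

```shell
#!/usr/bin/env bash
# Step (5) in one command; make_hadoop_dirs is a hypothetical helper name.
# Defaults to /opt/hadoop-2.7.1, the install path used throughout this guide.
make_hadoop_dirs() {
  local home="${1:-/opt/hadoop-2.7.1}"
  # -p creates the parent hdfs/ as needed and is a no-op for existing dirs,
  # so running the function twice does not fail.
  mkdir -p "$home/tmp" "$home/hdfs/name" "$home/hdfs/data"
}
```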

(6) Use the cd command to switch to the /opt/hadoop-2.7.1/etc/hadoop directory.

The directory structure is as shown in the figure below:

(7) Use vim to edit the core-site.xml configuration file. Here localhost.localdomain is the machine's hostname; the same applies in the steps below.

[root@localhost hadoop]# vim core-site.xml

Add the following content:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost.localdomain:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/opt/hadoop-2.7.1/tmp</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

</configuration>

(8) Use vim to edit the hdfs-site.xml configuration file.

[root@localhost hadoop]# vim hdfs-site.xml

Add the following content:

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/opt/hadoop-2.7.1/hdfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/opt/hadoop-2.7.1/hdfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>localhost.localdomain:9001</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

</configuration>

(9) Copy mapred-site.xml.template to a new file named mapred-site.xml in the same directory, then use vim to edit mapred-site.xml.

[root@localhost hadoop]# cp mapred-site.xml.template mapred-site.xml

[root@localhost hadoop]# vim mapred-site.xml

Add the following content:

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>localhost.localdomain:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>localhost.localdomain:19888</value>

</property>

</configuration>

(10) Use vim to edit the yarn-site.xml configuration file.

[root@localhost hadoop]# vim yarn-site.xml

Add the following content:

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>localhost.localdomain:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>localhost.localdomain:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>localhost.localdomain:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>localhost.localdomain:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>localhost.localdomain:8088</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>768</value>

</property>

</configuration>

(11) Use vim to edit the hadoop-env.sh configuration file.

[root@localhost hadoop]# vim hadoop-env.sh

Add the following line:

export JAVA_HOME=/usr/java/jdk1.8.0_111

(12) Use vim to edit the yarn-env.sh configuration file.

[root@localhost hadoop]# vim yarn-env.sh

Add the following line:

export JAVA_HOME=/usr/java/jdk1.8.0_111

(13) Edit the /etc/profile configuration file and apply the changes.

[root@localhost lib]# vim /etc/profile

[root@localhost lib]# source /etc/profile

The modified content is shown below. HADOOP_HOME and $HADOOP_HOME/bin were added; the remaining lines are the JDK environment variable settings.

export JAVA_HOME=/usr/java/jdk1.8.0_111

export JRE_HOME=$JAVA_HOME/jre

export HADOOP_HOME=/opt/hadoop-2.7.1

export CLASSPATH=$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH

export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$HADOOP_HOME/bin:$PATH

(14) Use the cd command to switch to the /opt/hadoop-2.7.1/bin directory.
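Before formatting in step (15), it can help to confirm that steps (7) to (13) took effect. Because step (13) puts $HADOOP_HOME/bin on PATH, the hadoop command also works from directories other than bin.

The sketch below uses two hypothetical helpers, `get_prop` and `check_hadoop_setup`, which are not Hadoop commands. It makes two assumptions:

- Each `<name>` and `<value>` tag sits on its own line, as in the files above.
- /etc/profile has already been sourced, so HADOOP_HOME is set.

Once the environment works, Hadoop's own `hdfs getconf -confKey fs.defaultFS` reports the effective value of a key.

```shell
#!/usr/bin/env bash
# Pre-format check for steps (7)-(13). get_prop and check_hadoop_setup are
# hypothetical helpers, not Hadoop commands.

# Print the <value> of property NAME ($2) from a *-site.xml FILE ($1).
# Assumes <name> and <value> each sit on their own line, as in this guide.
get_prop() {
  awk -v key="$2" '
    /<name>/ { n = $0; gsub(/.*<name>|<\/name>.*/, "", n); name = n; next }
    /<value>/ && name == key { gsub(/.*<value>|<\/value>.*/, ""); print; exit }
  ' "$1"
}

check_hadoop_setup() {
  local home="$HADOOP_HOME" conf f status=0
  if [ -z "$home" ]; then
    echo "HADOOP_HOME is not set; was /etc/profile sourced? (step 13)"
    return 1
  fi
  conf="$home/etc/hadoop"
  # Steps (7)-(10): all four site files must exist.
  for f in core-site.xml hdfs-site.xml mapred-site.xml yarn-site.xml; do
    [ -f "$conf/$f" ] || { echo "missing: $conf/$f"; status=1; }
  done
  # Steps (11)-(12): an uncommented, absolute JAVA_HOME in both env scripts.
  for f in hadoop-env.sh yarn-env.sh; do
    grep -q '^export JAVA_HOME=/' "$conf/$f" 2>/dev/null ||
      { echo "JAVA_HOME not set in $f"; status=1; }
  done
  # Step (13): the hadoop found on PATH should be the one under HADOOP_HOME.
  [ "$(command -v hadoop)" = "$home/bin/hadoop" ] ||
    { echo "hadoop on PATH is not $home/bin/hadoop"; status=1; }
  if [ -f "$conf/core-site.xml" ]; then
    echo "fs.defaultFS = $(get_prop "$conf/core-site.xml" fs.defaultFS)"
  fi
  return "$status"
}
```

With the configuration above, `check_hadoop_setup` should print `fs.defaultFS = hdfs://localhost.localdomain:9000` and return 0.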

(15) Use the hadoop namenode -format command to format the cluster's HDFS (the NameNode).

[root@localhost bin]# hadoop namenode -format

(16) Use the cd command to switch to the /opt/hadoop-2.7.1/sbin directory.
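Step (15) is normally run only once. Formatting again gives the NameNode a new clusterID, and a DataNode still holding data from the earlier format then refuses to start with an "Incompatible clusterIDs" error. The hypothetical guard below skips formatting when the name directory from dfs.namenode.name.dir (step 8) already contains current/VERSION. It calls `hdfs namenode -format`, the non-deprecated form of `hadoop namenode -format`.

```shell
#!/usr/bin/env bash
# Guard for step (15); format_namenode_once is a hypothetical helper name.
format_namenode_once() {
  # Default matches dfs.namenode.name.dir in hdfs-site.xml above.
  local name_dir="${1:-/opt/hadoop-2.7.1/hdfs/name}"
  # A formatted name directory contains current/VERSION (with the clusterID).
  if [ -f "$name_dir/current/VERSION" ]; then
    echo "NameNode already formatted in $name_dir; skipping"
    return 0
  fi
  hdfs namenode -format
}
```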

(17) Run sh start-all.sh to start the cluster. If you are prompted for a password during startup, enter the current user's password (the same applies below).

[root@localhost sbin]# sh start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [localhost.localdomain]

localhost.localdomain: starting namenode, logging to /opt/hadoop-2.7.1/logs/hadoop-root-namenode-localhost.localdomain.out

localhost: starting datanode, logging to /opt/hadoop-2.7.1/logs/hadoop-root-datanode-localhost.localdomain.out

Starting secondary namenodes [localhost.localdomain]

localhost.localdomain: starting secondarynamenode, logging to /opt/hadoop-2.7.1/logs/hadoop-root-secondarynamenode-localhost.localdomain.out

starting yarn daemons

starting resourcemanager, logging to /opt/hadoop-2.7.1/logs/yarn-root-resourcemanager-localhost.localdomain.out

localhost: starting nodemanager, logging to /opt/hadoop-2.7.1/logs/yarn-root-nodemanager-localhost.localdomain.out

(18) Use the jps command to list the running Java processes.

[root@localhost sbin]# jps

18464 NameNode

18592 DataNode

18756 SecondaryNameNode

19017 NodeManager

19294 Jps

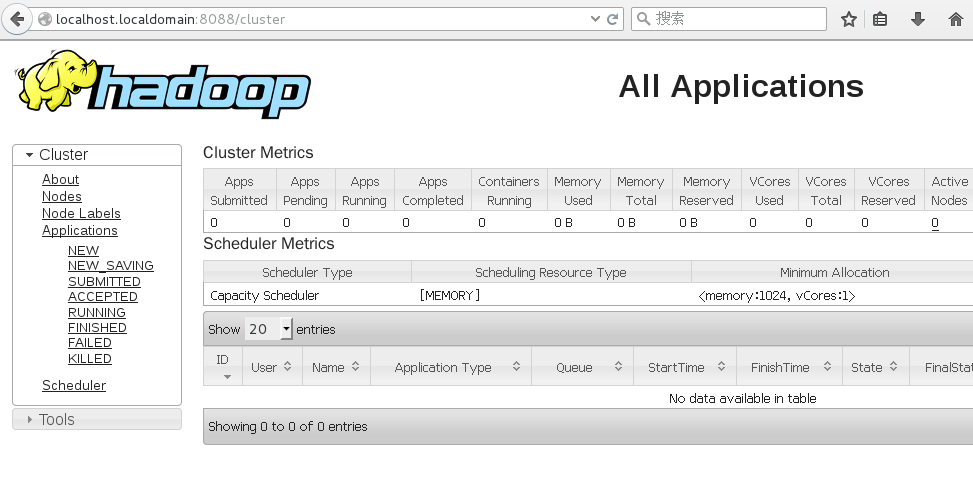

18911 ResourceManager(19)浏览器输入http://localhost.localdomain:8088/cluster查看集群信息
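Steps (18) and (19) can also be checked from the shell. This sketch assumes `jps` and `curl` are on PATH; `check_daemons` and `check_web_ui` are hypothetical helper names. Port 50070 is the Hadoop 2.x default for the NameNode web UI, since hdfs-site.xml above does not set dfs.namenode.http-address.

```shell
#!/usr/bin/env bash
# Read `jps` output on stdin and report which daemons of this setup are missing.
check_daemons() {
  local out d status=0
  out="$(cat)"
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # jps prints "<pid> <MainClass>"; compare the class column exactly.
    if ! printf '%s\n' "$out" | awk -v d="$d" '$2 == d { f = 1 } END { exit !f }'; then
      echo "missing: $d"
      status=1
    fi
  done
  return "$status"
}

# Probe the YARN UI from step (19) and the NameNode UI (default port 50070).
check_web_ui() {
  local host="${1:-localhost.localdomain}" url code status=0
  for url in "http://$host:8088/cluster" "http://$host:50070/dfshealth.html"; do
    # -L follows redirects; curl prints 000 if the connection fails.
    code="$(curl -s -L -o /dev/null -w '%{http_code}' "$url")" || true
    echo "$url -> HTTP $code"
    [ "$code" = "200" ] || status=1
  done
  return "$status"
}
```

For example, run `jps | check_daemons && check_web_ui`. Fed the jps listing shown above, `check_daemons` reports nothing missing and returns 0.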

(20) Run sh stop-all.sh to stop the Hadoop cluster.

[root@localhost sbin]# sh stop-all.sh

This script is Deprecated. Instead use stop-dfs.sh and stop-yarn.sh

Stopping namenodes on [localhost.localdomain]

localhost.localdomain: stopping namenode

localhost: stopping datanode

Stopping secondary namenodes [localhost.localdomain]

localhost.localdomain: stopping secondarynamenode

stopping yarn daemons

stopping resourcemanager

localhost: no nodemanager to stop

no proxyserver to stop
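As the output of both scripts notes, start-all.sh and stop-all.sh are deprecated in favour of start-dfs.sh/start-yarn.sh and stop-dfs.sh/stop-yarn.sh. The sketch below uses those scripts. It also starts the JobHistory server with `mr-jobhistory-daemon.sh`, because mapred-site.xml in step (9) configures its addresses (ports 10020 and 19888) while start-all.sh does not launch it; no JobHistoryServer appears in the jps listing above. `hadoop_start`, `hadoop_stop` and `HADOOP_SBIN` are hypothetical names.

```shell
#!/usr/bin/env bash
# Non-deprecated start/stop for this single-node setup (hypothetical helpers).
HADOOP_SBIN="${HADOOP_HOME:-/opt/hadoop-2.7.1}/sbin"

hadoop_start() {
  # HDFS first, then YARN, then the JobHistory server; stop at the first failure.
  "$HADOOP_SBIN/start-dfs.sh" &&
  "$HADOOP_SBIN/start-yarn.sh" &&
  "$HADOOP_SBIN/mr-jobhistory-daemon.sh" start historyserver
}

hadoop_stop() {
  # Reverse order; keep going even if one component is already down.
  "$HADOOP_SBIN/mr-jobhistory-daemon.sh" stop historyserver
  "$HADOOP_SBIN/stop-yarn.sh"
  "$HADOOP_SBIN/stop-dfs.sh"
}
```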
