仅供个人笔记使用

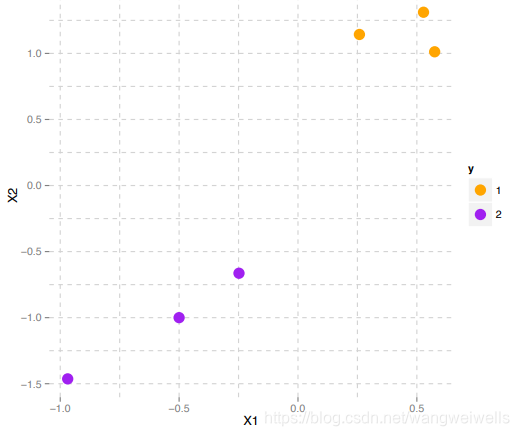

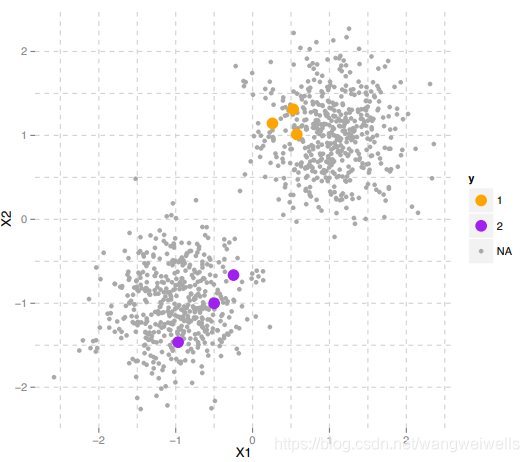

A pattern recognition problem

- goal

there are large “labeled” data online e.g. tweets using hash #

can we use these unlabel data to improve our classifier

- labeled data

- unlabeled data

-some applications

- image classification (easy to obtain images e.g, from flicker)

- protein function prediction

- document classification

- part of speech tagging

-semi-supervised classification

- similar but with continuous out come measure

- using some labels to improve a clustering solution

- measure how well the unlabeled data could help to improve

Content

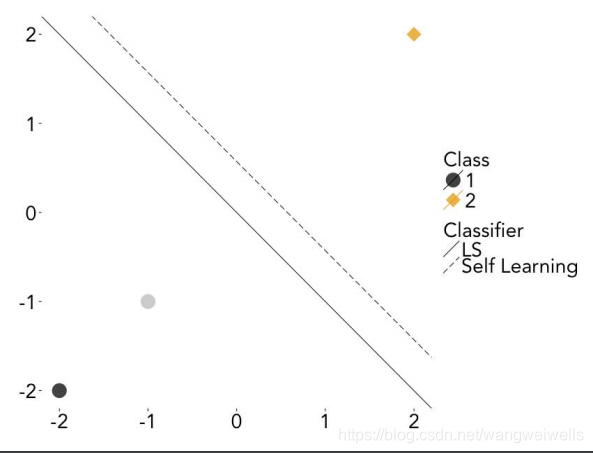

self-learning

One of the earliest studies on SSL (Hartley & Rao 1968):

• Maximum likelihood trying all possible labelings (!)

(the problem of treating unlabeled data is dealing with explosive parameter)

More feasible suggestion (McLachlan 1975):

• Start with supervised solution

• Label unlabeled objects using this classifier

• Retrain classifier treating labels as true labels

Also known as self-training, self-labeling or pseudo-labeling

self-learning ≈ \approx ≈ EXPECTATION MAXIMIZATION

- Linear Discriminant Analysis (LDA)

p ( X , y ; θ ) = ∏ i = 1 L [ π 0 N ( x i , μ 0 , Σ ) ] 1 − y i [ π 1 N ( x i , μ 1 , Σ ) ] y 1 p(X,y;\theta)=\prod_{i=1}^L[\pi_0N(x_i,\mu_0,\Sigma)]^{1-y_i}[\pi_1N(x_i,\mu_1,\Sigma)]^{y_1} p(X,y;θ)=i=1∏L[π0N(xi,μ0,Σ)]1−yi[π1N(xi,μ1,Σ)]y1

share the covariance Σ \Sigma Σ

N ( x i , μ 0 , Σ ) N(x_i,\mu_0,\Sigma) N(xi,μ0,Σ) gaussians for each class - LDA + unlabeled data

p

(

X

,

y

,

X

u

,

h

;

θ

)

=

∏

i

=

1

L

[

π

0

N

(

x

i

,

μ

0

,

Σ

)

]

1

−

y

i

[

π

1

N

(

x

i

,

μ

1

,

Σ

)

]

y

1

×

∏

i

=

1

u

[

π

0

N

(

x

i

,

μ

0

,

Σ

)

]

1

−

h

i

[

π

1

N

(

x

i

,

μ

1

,

Σ

)

]

h

1

p(X,y,X_u,h;\theta)=\prod_{i=1}^L[\pi_0N(x_i,\mu_0,\Sigma)]^{1-y_i}[\pi_1N(x_i,\mu_1,\Sigma)]^{y_1}\\ \times\prod_{i=1}^u[\pi_0N(x_i,\mu_0,\Sigma)]^{1-h_i}[\pi_1N(x_i,\mu_1,\Sigma)]^{h_1}

p(X,y,Xu,h;θ)=i=1∏L[π0N(xi,μ0,Σ)]1−yi[π1N(xi,μ1,Σ)]y1×i=1∏u[π0N(xi,μ0,Σ)]1−hi[π1N(xi,μ1,Σ)]h1

But we do not know h… Integrate it out!

p

(

X

,

y

,

X

u

;

θ

)

=

∫

h

p

(

X

,

y

,

X

u

,

h

;

θ

)

d

h

p(X, y, X_u; \theta) = \int_hp(X, y, X_u, h; \theta)dh

p(X,y,Xu;θ)=∫hp(X,y,Xu,h;θ)dh

LDA +

unlabeled data

∏

i

=

1

L

[

π

0

N

(

x

i

,

μ

0

,

Σ

)

]

1

−

y

i

[

π

1

N

(

x

i

,

μ

1

,

Σ

)

]

y

1

×

∏

i

=

1

u

∑

c

=

0

1

π

c

N

(

x

i

,

μ

c

,

Σ

)

\prod_{i=1}^L[\pi_0N(x_i,\mu_0,\Sigma)]^{1-y_i}[\pi_1N(x_i,\mu_1,\Sigma)]^{y_1}\\ \times\prod_{i=1}^u\sum^1_{c=0}\pi_cN(x_i,\mu_c,\Sigma)

i=1∏L[π0N(xi,μ0,Σ)]1−yi[π1N(xi,μ1,Σ)]y1×i=1∏uc=0∑1πcN(xi,μc,Σ)

Like LDA + a gaussian mixture with the same parameters

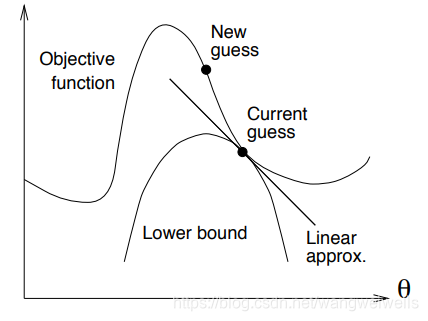

EM algorithm

• Log sum makes optimization difficult

• Change goal: find a local maximum of this function

EM algorithm: finding a lower bound

what we want is construct a lower bound and touch exactyl the objective function ,and get the best lower bound which you can get

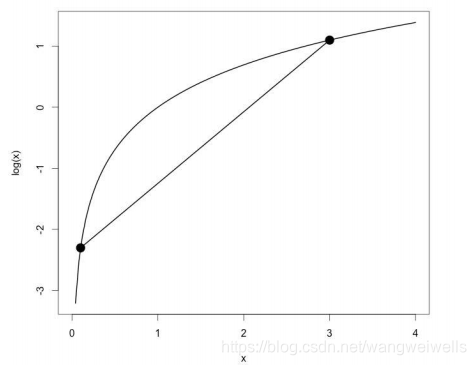

Jensen’s inequality

If f ( x ) f(x) f(x) concave then f ( E [ X ] ) ≥ E [ f ( X ) ] f(E[X]) \geq E[f(X)] f(E[X])≥E[f(X)]

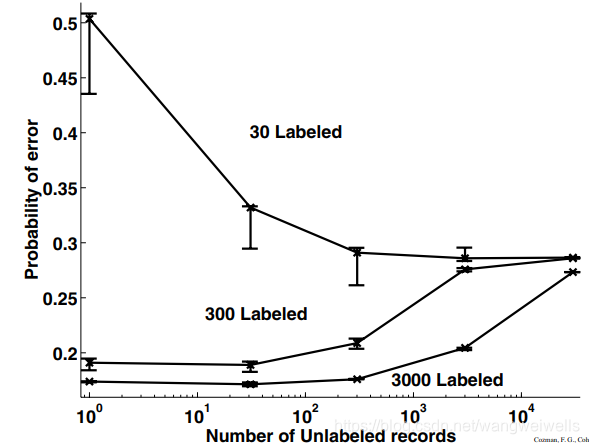

Does unlabeled data help?

θ

x

→

X

\theta_x \rightarrow X

θx→X

X

→

Y

X \rightarrow Y

X→Y

θ

Y

∣

X

→

Y

\theta_{Y|X} \rightarrow Y

θY∣X→Y

Self-learning and EM conclusions

• For generative models:

• Integrate out the missing variables

• Difficult optimization problem can often be “solved” efficiently using

expectation maximization

• Only guaranteed to improve performance asymptotically, if the model is

correct

• Self-learning is a closely related technique that is applicable to any classifier

• Related: co-training (multi-view learning)

• Use labels predicted by other view(s) as newly labeled objects

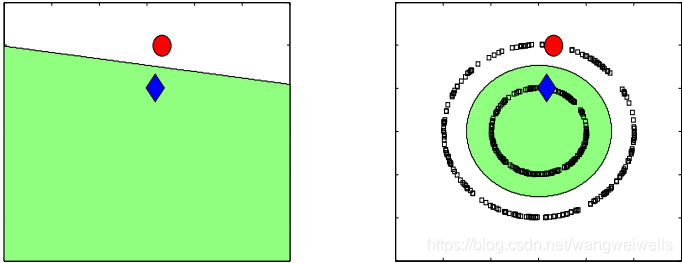

Low-density assumption

Low-density assumption conclusion

• “Natural” extension for the SVM

• Local minima may be a problem

• Lots of work on optimization

• My experience: quite sensitive to parameter settings

• Other low-density approaches:

• Entropy Regularization (Bengio & Grandvalet 2005)

manifold assumption

- manifold regularization

-consistency regularication

∥ f ( x ; w ) − g ( x ′ ; w t ) ∥ 2 \Vert f(x;w)-g(x';w^t) \Vert^2 ∥f(x;w)−g(x′;wt)∥2

Semi-Supervised Conclusion

• Unlabeled data is often available

• Semi-supervised learning attempts to use it to improve classifier

• Often worthwhile, but it does not come for free

• Modeling time

• Computational cost

• Remember: an unlabeled object is less valuable than a labeled one

• Labeling a few more objects can be more effective

• Remember the goal: transductive or inductive?

6393

6393

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?