AWS EKS

In this tutorial, we will focus on deploying a Spark application on AWS EKS end to end. We will do the following steps:

- deploy an EKS cluster inside a custom VPC in AWS

- install the Spark Operator

- run a simple PySpark application

TL;DR: GitHub code repo

Step 1: Deploying the Kubernetes infrastructure

To deploy Kubernetes on AWS, we need to deploy at a minimum:

- VPC, subnets and security groups to take care of the networking in the cluster

- EKS control plane to run the Kubernetes services such as etcd and the Kubernetes API

- EKS worker nodes to run pods and, more specifically in our case, Spark jobs

Let’s dive into the Terraform code. First, let’s see the VPC:

    module "vpc" {
      source  = "terraform-aws-modules/vpc/aws"
      version = "2.6.0"

      name = join("-", [var.cluster_name, var.environment, "vpc"])
      cidr = var.vpc_cidr
      azs  = data.aws_availability_zones.available.names

      private_subnets = [var.private_subnet_az_1, var.private_subnet_az_2, var.private_subnet_az_3]
      public_subnets  = [var.public_subnet_az_1, var.public_subnet_az_2, var.public_subnet_az_3]

      # One NAT gateway per availability zone for egress from the private subnets
      enable_nat_gateway     = true
      single_nat_gateway     = false
      one_nat_gateway_per_az = true

      enable_dns_hostnames = true
      enable_dns_support   = true

      tags = {
        "kubernetes.io/cluster/${var.cluster_name}" = "shared"
      }

      # Public subnets: eligible for internet-facing load balancers
      public_subnet_tags = {
        "kubernetes.io/cluster/${var.cluster_name}" = "shared"
        "kubernetes.io/role/elb"                    = "1"
      }

      # Private subnets: eligible for internal load balancers
      private_subnet_tags = {
        "kubernetes.io/cluster/${var.cluster_name}" = "shared"
        "kubernetes.io/role/internal-elb"           = "1"
      }
    }

A VPC is an isolated network in which we can place different infrastructure components. We can break this network down into smaller blocks, which AWS calls subnets. Subnets with a direct route to the internet are called public subnets, and those without one are called private subnets. Two more terms we will use for network traffic are egress and ingress: egress is traffic from inside the network to the outside world, and ingress is traffic from the outside world into the network. As you would expect, the rules for each can differ depending on the use case. We also use security groups, which are traffic rules inside the VPC that define how the EC2 instances “talk” to each other, essentially on which network ports.

For the Spark EKS cluster we will use private subnets for the workers, so all the data processing is isolated from inbound internet traffic. We still need egress traffic to the internet to do updates or install open source libraries. To enable that traffic we add NAT gateways to our VPC, and they have to be placed in public subnets. In the Terraform code, this is done using the flag enable_nat_gateway.

Another thing to notice is that we are using three public and three private subnets. This is because we want network fault tolerance: the subnets are deployed in different availability zones within a region.
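
To make the subnet layout concrete, here is a minimal Python sketch of how a single VPC CIDR block could be carved into three private and three public subnets, one pair per availability zone. The CIDR `10.0.0.0/16`, the `/24` subnet size, the zone names, and the function name `plan_subnets` are all assumptions for illustration; the tutorial's real values come from Terraform variables that are not shown here.

```python
import ipaddress
from typing import Dict, List


def plan_subnets(vpc_cidr: str, azs: List[str], new_prefix: int = 24) -> Dict[str, Dict[str, str]]:
    """Assign one private and one public subnet to each availability zone.

    Subnets are taken in order from the VPC block, so they never overlap.
    """
    network = ipaddress.ip_network(vpc_cidr)
    blocks = network.subnets(new_prefix=new_prefix)  # iterator over /new_prefix blocks
    private = {az: str(next(blocks)) for az in azs}
    public = {az: str(next(blocks)) for az in azs}
    return {"private": private, "public": public}


if __name__ == "__main__":
    # Hypothetical zone names and CIDR, chosen only for the example.
    plan = plan_subnets("10.0.0.0/16", ["eu-west-1a", "eu-west-1b", "eu-west-1c"])
    for kind, mapping in plan.items():
        for az, cidr in mapping.items():
            print(kind, az, cidr)
```

Losing one availability zone in such a layout still leaves two private subnets for workers and two public subnets for NAT gateways, which is the fault tolerance the three-zone setup is after.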

The tags are created as per the requirements from AWS. They are needed for the control plane to recognize the worker nodes. We could go into more detail about the networking, but that is outside the scope of this tutorial, so if you need more details please have a look at the GitHub code, where you can find the full example.

And let’s see the EKS cluster setup as well:

    module "eks" {
      source       = "terraform-aws-modules/eks/aws"
      cluster_name = join("-", [var.cluster_name, var.environment, random_string.suffix.result])
      subnets      = module.vpc.private_subnets

      tags = {
        Environment = var.environment
      }

      vpc_id                          = module.vpc.vpc_id
      cluster_endpoint_private_access = true

      # Send logs from every control plane component to CloudWatch
      cluster_enabled_log_types = ["api", "audit", "authenticator", "controllerManager", "scheduler"]

      worker_groups = [
        {
          name                          = "worker-group-spark"
          instance_type                 = var.cluster_instance_type
          additional_userdata           = "worker nodes"
          asg_desired_capacity          = var.cluster_number_of_nodes
          additional_security_group_ids = [aws_security_group.all_worker_mgmt.id, aws_security_group.inside_vpc_traffic.id]
        }
      ]

      workers_group_defaults = {
        key_name = "eks_key"
        subnets  = module.vpc.private_subnets
      }
    }

Again in this snippet, we can see that we declare the cluster inside private subnets. We enable the CloudWatch logs for all the components of the control plane. The EC2 instance type and the number of nodes are set through configuration variables; as defaults, we use m5.xlarge as the instance type and 3 nodes. We also set an EC2 key, eks_key, in case we need to SSH into the worker nodes.

To be able to run the code in this tutorial we need to install a couple of tools. On Mac we can use brew:

    brew install terraform aws-iam-authenticator kubernetes-cli helm

And to reach AWS we also need to set up our AWS credentials.

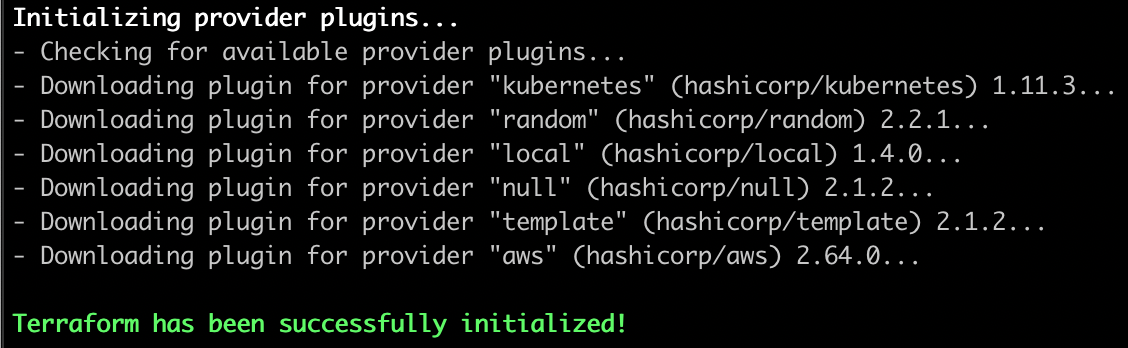

Now we can start to initialize Terraform in order to get all the dependencies needed to deploy the infrastructure:

    cd deployment/ && terraform init

If everything runs successfully you should be able to see something similar to the image:

We are ready to deploy the infrastructure. To do so run:

    terraform apply

It will take some time until the deployment is done, so we can sit back and relax for a bit.

Once done, you should see the message "Apply complete! Resources: 55 added, 0 changed, 0 destroyed." along with the names of the resources deployed.
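
If the deployment is driven from a script rather than typed by hand, the summary line can be checked programmatically. The Python sketch below parses that line; the function name `parse_apply_summary` is hypothetical, and it assumes the output was captured as plain text without color codes (for example by running terraform apply with the -no-color flag).

```python
import re
from typing import Dict, Optional

# Matches the summary line that terraform prints after a successful apply.
APPLY_SUMMARY = re.compile(
    r"Apply complete! Resources: (\d+) added, (\d+) changed, (\d+) destroyed\."
)


def parse_apply_summary(output: str) -> Optional[Dict[str, int]]:
    """Return the resource counts from captured output, or None if the line is absent."""
    match = APPLY_SUMMARY.search(output)
    if match is None:
        return None
    added, changed, destroyed = (int(group) for group in match.groups())
    return {"added": added, "changed": changed, "destroyed": destroyed}


if __name__ == "__main__":
    captured = "Apply complete! Resources: 55 added, 0 changed, 0 destroyed."
    print(parse_apply_summary(captured))
```

A missing summary (a `None` result) is a reasonable signal for a pipeline to stop before moving on to the kubectl steps.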

One additional step we can take to check that the deployment was correct is to see whether the worker nodes have been attached to the cluster. For that we set up kubectl:

    aws --region your-region eks update-kubeconfig --name your-cluster-name

We should be able to see three nodes when we run the following command:

    kubectl get nodes

Step 2: Installing the Spark Operator

Usually, we deploy Spark jobs using spark-submit, but in Kubernetes we have a better option that is more integrated with the environment: the Spark Operator. Some of the improvements it brings are automatic application re-submission, automatic restarts with a custom restart policy, automatic retries of failed submissions, and easy integration with monitoring tools such as Prometheus.

We can install it via Helm:

    helm repo add incubator http://storage.googleapis.com/kubernetes-charts-incubator

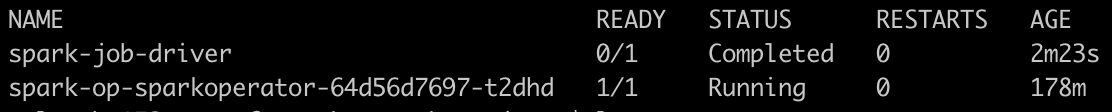

    helm install spark-op incubator/sparkoperator

If we run helm list in the terminal, the spark-op chart should be listed. We should also have a running pod for the Spark Operator. We can see which pods are running in the default namespace with the command kubectl get pods.

Step 3: Running a PySpark app

Now we can finally run Python Spark apps in K8s. The first thing we need to do is create a spark user in order to give the Spark jobs access to the Kubernetes resources. We create a service account and a cluster role binding for this purpose:

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: spark
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: spark-role
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: edit
    subjects:
      - kind: ServiceAccount
        name: spark
        namespace: default

To create the service account and the role binding:

    kubectl apply -f spark/spark-rbac.yml

You will be notified with serviceaccount/spark created and clusterrolebinding.rbac.authorization.k8s.io/spark-role created.

The Spark Operator job definition:

    apiVersion: "sparkoperator.k8s.io/v1beta2"
    kind: SparkApplication
    metadata:
      name: spark-job
      namespace: default
    spec:
      type: Python
      pythonVersion: "3"
      mode: cluster
      image: "uprush/apache-spark-pyspark:2.4.5"
      imagePullPolicy: Always
      mainApplicationFile: local:///opt/spark/examples/src/main/python/pi.py
      sparkVersion: "2.4.5"
      restartPolicy:
        type: OnFailure
        onFailureRetries: 2
      driver:
        cores: 1
        memory: "1G"
        labels:
          version: 2.4.5
        serviceAccount: spark
      executor:
        cores: 1
        instances: 1
        memory: "1G"
        labels:
          version: 2.4.5

We define our Spark run parameters in a yml file, similar to any other resource declaration on Kubernetes. Here we declare that we are running a Python 3 Spark app using the image uprush/apache-spark-pyspark:2.4.5. I recommend using this image because it comes with a newer version of Hadoop that handles writes to s3a more efficiently. The restart policy restarts the job if it fails, with up to two retries. We also set resource allocations for the driver and the executors; as the job is very simple, we use a single executor. Another thing to notice is that we use the spark service account that we defined earlier. The code we are running is the classic example of estimating pi.
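
For intuition about what the example job computes, here is a plain-Python sketch of a Monte Carlo estimate of pi. It is not the pi.py file shipped in the image, which spreads the sampling across Spark executors; the function name `estimate_pi` and the sample count are assumptions made for this illustration.

```python
import random


def estimate_pi(samples: int, seed: int = 0) -> float:
    """Estimate pi by sampling points in the square [-1, 1] x [-1, 1].

    The fraction of points falling inside the unit circle approaches pi / 4.
    """
    rng = random.Random(seed)  # seeded so that repeated runs give the same estimate
    inside = 0
    for _ in range(samples):
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        if x * x + y * y <= 1.0:
            inside += 1
    return 4.0 * inside / samples


if __name__ == "__main__":
    print("Pi is roughly %f" % estimate_pi(100_000))
```

Because each sample is independent, the loop splits naturally across executors, which is why this computation is a common first job for checking that a cluster works.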

To submit the job we use kubectl again:

    kubectl apply -f spark/spark-job.yml

Upon completion, if we inspect the pods again we should have a similar result:

And if we check the logs by running kubectl logs spark-job-driver, we should find one line giving an approximate value of pi: "Pi is roughly 3.142020".
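
When the job runs as part of an automated check, the result line can be pulled out of the driver log instead of being read by eye. The Python sketch below assumes the log text has already been captured (for example from the kubectl logs command above); the function names, the tolerance, and the sample log lines around the result are hypothetical.

```python
import math
import re
from typing import Optional

# The result line printed by the example job, e.g. "Pi is roughly 3.142020".
PI_LINE = re.compile(r"Pi is roughly (\d+\.\d+)")


def extract_pi(log_text: str) -> Optional[float]:
    """Return the estimate found in the driver log, or None if no result line exists."""
    match = PI_LINE.search(log_text)
    return float(match.group(1)) if match else None


def looks_like_pi(value: float, tolerance: float = 0.01) -> bool:
    """Check that the estimate is within the given tolerance of math.pi."""
    return abs(value - math.pi) <= tolerance


if __name__ == "__main__":
    # Made-up log excerpt; only the "Pi is roughly" line mirrors the tutorial.
    sample_log = "INFO starting job\nPi is roughly 3.142020\nINFO stopping job\n"
    estimate = extract_pi(sample_log)
    print(estimate, estimate is not None and looks_like_pi(estimate))
```

A `None` result means the job never printed its estimate, which usually points at a failed or still-running driver rather than a bad estimate.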

That’s all, folks. I hope you enjoyed this tutorial. We’ve seen how we can create our own AWS EKS cluster using Terraform so that it is easy to re-deploy in different environments, and how we can submit PySpark jobs using a more Kubernetes-friendly syntax.

Translated from: https://towardsdatascience.com/how-to-run-a-pyspark-job-in-kubernetes-aws-eks-d886193dac3c
