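HBase uses column-oriented storage: each column family is stored in its own set of files, and the files of different column families are kept separate.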

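HBase has only one index, the row key. By design, every access in HBase is either a row-key lookup or a row-key range scan, which keeps the whole system from slowing down.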
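Below is a minimal sketch of what "row-key lookup or row-key scan" means. It uses a plain `java.util.TreeMap` as a stand-in for a table, which is an illustrative assumption: HBase itself stores rows in sorted files, not in a Java map. `RowKeyIndex` is a hypothetical class. Its `scan` uses a half-open range (start row inclusive, stop row exclusive), the same convention as `Scan.setStartRow`/`setStopRow` in the code later in this article. String ordering matches HBase's byte ordering only for ASCII keys.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

// Hypothetical stand-in for a table: rows kept sorted by row key.
// String order equals HBase's unsigned-byte order only for ASCII keys (assumption).
class RowKeyIndex {
    private final TreeMap<String, String> rows = new TreeMap<>();

    void put(String rowKey, String value) {
        rows.put(rowKey, value);
    }

    // Point access: a single lookup in the sorted index.
    String get(String rowKey) {
        return rows.get(rowKey);
    }

    // Range scan over [startRow, stopRow): start inclusive, stop exclusive.
    List<String> scan(String startRow, String stopRow) {
        return new ArrayList<>(rows.subMap(startRow, true, stopRow, false).keySet());
    }
}
```

With rows rowkey1 to rowkey3 loaded, `scan("rowkey1", "rowkey3")` returns rowkey1 and rowkey2, and `scan("rowkey4", "rowkey5")` returns nothing.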

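HBase is a sparse, multi-dimensional, sorted map indexed by row key, column family, column qualifier, and timestamp. A cell in HBase is located by these four values, so its address can be viewed as a "four-dimensional coordinate": [row key, column family, column qualifier, timestamp].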

Each value is an uninterpreted string of bytes (a byte[]) and has no data type.
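The sketch below makes the "four-dimensional coordinate" concrete. It models the logical view as nested sorted maps (row key -> column family -> qualifier -> timestamp -> value), with each value kept as raw `byte[]`. It illustrates the data model under that assumption and is not HBase's storage format. `CellMap` and its methods are hypothetical names.

```java
import java.util.Collections;
import java.util.NavigableMap;
import java.util.TreeMap;

// Hypothetical model of the logical HBase map:
// row key -> column family -> qualifier -> timestamp -> value (uninterpreted bytes).
class CellMap {
    private final NavigableMap<String, NavigableMap<String, NavigableMap<String, NavigableMap<Long, byte[]>>>> data =
            new TreeMap<>();

    void put(String row, String family, String qualifier, long ts, byte[] value) {
        data.computeIfAbsent(row, r -> new TreeMap<>())
            .computeIfAbsent(family, f -> new TreeMap<>())
            // Timestamps sorted newest first, the order in which HBase returns versions.
            .computeIfAbsent(qualifier, q -> new TreeMap<Long, byte[]>(Collections.<Long>reverseOrder()))
            .put(ts, value);
    }

    // All four coordinates given: addresses exactly one cell (or null).
    byte[] get(String row, String family, String qualifier, long ts) {
        NavigableMap<Long, byte[]> versions = versions(row, family, qualifier);
        return versions == null ? null : versions.get(ts);
    }

    // Timestamp omitted: the newest version of the cell.
    byte[] getLatest(String row, String family, String qualifier) {
        NavigableMap<Long, byte[]> versions = versions(row, family, qualifier);
        return (versions == null || versions.isEmpty()) ? null : versions.firstEntry().getValue();
    }

    private NavigableMap<Long, byte[]> versions(String row, String family, String qualifier) {
        NavigableMap<String, NavigableMap<String, NavigableMap<Long, byte[]>>> families = data.get(row);
        if (families == null) {
            return null;
        }
        NavigableMap<String, NavigableMap<Long, byte[]>> qualifiers = families.get(family);
        return qualifiers == null ? null : qualifiers.get(qualifier);
    }
}
```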

How HBase Is Implemented

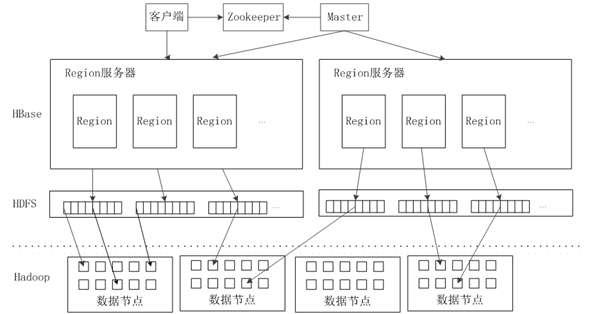

1. The HBase implementation has three main functional components:

(1) A client library, linked into every client

(2) One Master server

(3) Many Region servers

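(1) Client: the client contains the interfaces for accessing HBase and caches the locations of Regions it has already accessed, which speeds up later data access.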
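One way to picture the Region-location cache is as a sorted map from each Region's start key to its location. A row belongs to the Region with the greatest start key not above it, provided the row is also below that Region's end key. The sketch uses a hypothetical `locator` callback in place of the real lookup the client performs on a cache miss. `CachedRegion` and `RegionLocationCache` are illustrative names, not HBase classes.

```java
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;

// Hypothetical Region descriptor: rows in [startKey, endKey) live on `server`.
// An empty endKey means "up to the end of the table".
final class CachedRegion {
    final String startKey;
    final String endKey;
    final String server;

    CachedRegion(String startKey, String endKey, String server) {
        this.startKey = startKey;
        this.endKey = endKey;
        this.server = server;
    }

    boolean contains(String row) {
        return row.compareTo(startKey) >= 0 && (endKey.isEmpty() || row.compareTo(endKey) < 0);
    }
}

class RegionLocationCache {
    private final TreeMap<String, CachedRegion> byStartKey = new TreeMap<>();
    private final Function<String, CachedRegion> locator; // stands in for the remote lookup
    int remoteLookups = 0;

    RegionLocationCache(Function<String, CachedRegion> locator) {
        this.locator = locator;
    }

    String locate(String row) {
        // Candidate: the cached Region with the greatest start key <= row.
        Map.Entry<String, CachedRegion> hit = byStartKey.floorEntry(row);
        if (hit != null && hit.getValue().contains(row)) {
            return hit.getValue().server; // cache hit, no round trip
        }
        CachedRegion region = locator.apply(row); // cache miss: ask the cluster
        remoteLookups++;
        byStartKey.put(region.startKey, region);
        return region.server;
    }
}
```

After one miss for "apple", a later lookup for "banana" in the same Region is answered from the cache. This sketch never evicts entries. A real client must drop stale locations after Regions move or split.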

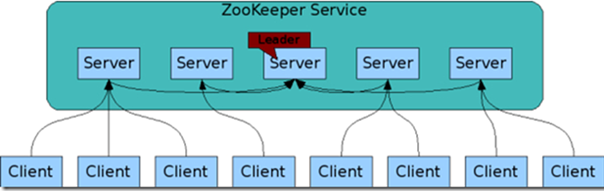

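(2) Zookeeper: Zookeeper helps elect one Master to manage the cluster and guarantees that exactly one Master is running at any time. This avoids a "single point of failure" for the Master.

(Zookeeper is a cluster-management tool widely used in distributed computing. It provides configuration maintenance, naming services, distributed synchronization, group services, and more.)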

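(3) Master

The Master server is mainly responsible for managing tables and Regions:

Handling users' requests to create, delete, modify, and query tables

Balancing load across Region servers

Readjusting the distribution of Regions after they split or merge

Migrating the Regions of failed Region servers to other servers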

(4) Region server

The Region server is the core module of HBase. It maintains the Regions assigned to it and serves users' read and write requests.

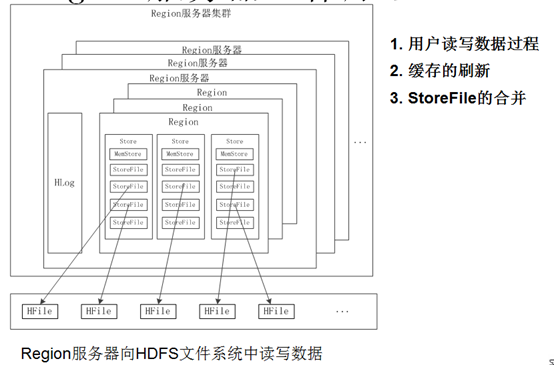

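2. How a Region server works

(1) The user read/write process

When a user writes data, the request is routed to the corresponding Region server for execution.

User data is first written to both the MemStore and the HLog.

A commit() call returns to the client only after the operation has been written to the HLog.

When a user reads data, the Region server first checks the MemStore cache. If the data is not there, it searches the StoreFiles on disk.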
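The write and read order described above can be sketched with in-memory stand-ins: a list for the HLog, a sorted map for the MemStore, and a list of sorted maps for the StoreFiles, newest last. `MiniStore` is a hypothetical class. Real HBase persists the log and the StoreFiles on HDFS and keeps multiple versions per cell, and this sketch ignores both.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

// Hypothetical single-Store model: log, MemStore, and StoreFiles (oldest first).
class MiniStore {
    final List<String> hlog = new ArrayList<>();                  // append-only log records
    final TreeMap<String, String> memStore = new TreeMap<>();      // sorted in-memory buffer
    final List<TreeMap<String, String>> storeFiles = new ArrayList<>();

    // Write path: append to the log first, then update the MemStore, then acknowledge.
    void put(String key, String value) {
        hlog.add("PUT " + key + "=" + value);
        memStore.put(key, value);
    }

    // Read path: MemStore first, then StoreFiles from newest to oldest.
    String get(String key) {
        String value = memStore.get(key);
        if (value != null) {
            return value;
        }
        for (int i = storeFiles.size() - 1; i >= 0; i--) {
            value = storeFiles.get(i).get(key);
            if (value != null) {
                return value;
            }
        }
        return null;
    }

    // Flush: write the MemStore out as a new StoreFile and clear it.
    void flush() {
        if (!memStore.isEmpty()) {
            storeFiles.add(new TreeMap<>(memStore));
            memStore.clear();
        }
    }
}
```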

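(2) Flushing the cache

Periodically, the system flushes the contents of the MemStore cache to a StoreFile on disk, clears the cache, and writes a marker into the HLog.

Each flush produces a new StoreFile, so each Store contains multiple StoreFiles.

Each Region server has its own HLog file. On every startup, the server checks this file to see whether any writes occurred after the most recent flush. If it finds new updates, it writes them into the MemStore, flushes them to a StoreFile, and deletes the old HLog file. Only then does it start serving users.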
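The startup check can be sketched with a made-up log format in which each record is either `PUT key=value` or the flush marker `FLUSH`. Everything after the last marker never reached a StoreFile and has to be replayed into the MemStore. `HLogReplay` is a hypothetical helper, not HBase's recovery code.

```java
import java.util.List;
import java.util.TreeMap;

// Hypothetical startup check; records are "PUT key=value" or the marker "FLUSH".
class HLogReplay {
    static final String FLUSH_MARKER = "FLUSH";

    // Edits after the last flush marker never reached a StoreFile, so they are
    // returned as the MemStore contents to rebuild (later edits overwrite earlier ones).
    static TreeMap<String, String> unflushedEdits(List<String> log) {
        int lastFlush = log.lastIndexOf(FLUSH_MARKER); // -1 if no flush happened yet
        TreeMap<String, String> memStore = new TreeMap<>();
        for (String record : log.subList(lastFlush + 1, log.size())) {
            String[] keyValue = record.substring("PUT ".length()).split("=", 2);
            memStore.put(keyValue[0], keyValue[1]);
        }
        return memStore;
    }
}
```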

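(3) StoreFile compaction

Each flush creates a new StoreFile, so the number of files keeps growing, and this slows down lookups.

Store.compact() is called to merge multiple StoreFiles into one.

Compaction is resource-intensive, so it starts only once the number of StoreFiles reaches a threshold.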
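Below is a sketch of the threshold rule. StoreFiles are modeled as sorted maps ordered oldest first, so newer files win on duplicate keys. The threshold is a parameter here. In HBase it comes from configuration (for example `hbase.hstore.compactionThreshold`), and real compaction policies also choose which files to merge, which this sketch does not attempt. `Compactor` is a hypothetical class.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

// Hypothetical compaction rule: merge all StoreFiles once their count reaches the threshold.
class Compactor {
    static List<TreeMap<String, String>> maybeCompact(List<TreeMap<String, String>> storeFiles, int threshold) {
        if (storeFiles.size() < threshold) {
            return storeFiles; // too few files to justify the cost of merging
        }
        TreeMap<String, String> merged = new TreeMap<>();
        for (TreeMap<String, String> file : storeFiles) { // oldest first
            merged.putAll(file); // newer files overwrite older values for the same key
        }
        List<TreeMap<String, String>> result = new ArrayList<>();
        result.add(merged);
        return result;
    }
}
```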

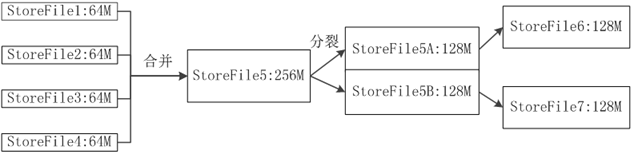

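3. How a Store works

The Store is the core of the Region server.

Multiple StoreFiles are merged into one.

When a single StoreFile grows too large, a split is triggered: one parent Region is split into two child Regions.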
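Below is a sketch of the split step. A Region is modeled as a sorted map of rows, and row count stands in for file size. Past the limit, the parent is cut at its middle row key into two children covering [start, splitKey) and [splitKey, end). `RegionSplitter` and `maxRows` are hypothetical. HBase typically derives the split point from the middle key of a Region's largest StoreFile rather than from a row count.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeMap;

// Hypothetical split rule; row count stands in for StoreFile size.
class RegionSplitter {
    static List<TreeMap<String, String>> splitIfTooLarge(TreeMap<String, String> parent, int maxRows) {
        List<TreeMap<String, String>> children = new ArrayList<>();
        if (parent.size() <= maxRows) {
            children.add(parent); // small enough: no split
            return children;
        }
        // Use the middle row key as the split point.
        String splitKey = new ArrayList<>(parent.keySet()).get(parent.size() / 2);
        children.add(new TreeMap<>(parent.headMap(splitKey, false))); // rows in [start, splitKey)
        children.add(new TreeMap<>(parent.tailMap(splitKey, true)));  // rows in [splitKey, end)
        return children;
    }
}
```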

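4. How the HLog works

A distributed environment must plan for failures, and HBase uses the HLog to guarantee that the system can recover.

HBase gives each Region server one HLog file, which is a write-ahead log (WAL).

An update must be written to the log before it can be written to the MemStore cache. In addition, MemStore contents can be flushed to disk only after the corresponding log entries have been written to disk.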

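Zookeeper monitors the state of every Region server in real time. When a Region server fails, Zookeeper notifies the Master.

The Master first processes the HLog file left behind by the failed Region server. This leftover HLog contains log records from multiple Regions.

The system splits the HLog data by the Region each record belongs to and places each piece in the corresponding Region's directory. It then reassigns the failed Regions to available Region servers and sends each Region's HLog records to the Region server that takes it over.

After a Region server receives its assigned Regions and their HLog records, it replays the operations in the log. It writes the data into the MemStore cache and then flushes it to StoreFiles on disk, which completes recovery.

A shared log (all Regions on a Region server write to one HLog) has one advantage and one drawback: it improves write performance for tables, but recovery requires splitting the log.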
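The log-splitting step is the recovery cost of sharing one HLog. It amounts to grouping records by Region while preserving log order, so that each Region's new server replays only its own edits. Below is a minimal sketch with a hypothetical `LogRecord` type. Real HLog entries carry much more, such as table names and sequence numbers.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical log-splitting step used during recovery.
class HLogSplitter {
    // Hypothetical log record: the Region it belongs to plus an opaque edit.
    static final class LogRecord {
        final String region;
        final String edit;

        LogRecord(String region, String edit) {
            this.region = region;
            this.edit = edit;
        }
    }

    // Group records by Region, keeping each Region's edits in log order.
    static Map<String, List<String>> splitByRegion(List<LogRecord> log) {
        Map<String, List<String>> perRegion = new LinkedHashMap<>();
        for (LogRecord record : log) {
            perRegion.computeIfAbsent(record.region, r -> new ArrayList<>()).add(record.edit);
        }
        return perRegion;
    }
}
```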

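By default, the HBase Master web UI listens on port 60010 and the Region server web UI on port 60030. These are the defaults for HBase 0.98, the version used below; HBase 1.0 and later moved them to 16010 and 16030. If the Master runs on a host named master.foo.com, its home page is http://master.foo.com:60010, which you can open in a web browser.

The page shows the current status of the HBase cluster.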

HBase version: 0.98.8-hadoop2

Required dependencies:

commons-codec-1.7.jar

commons-collections-3.2.1.jar

commons-configuration-1.6.jar

commons-lang-2.6.jar

commons-logging-1.1.3.jar

guava-12.0.1.jar

hadoop-auth-2.5.0.jar

hadoop-common-2.5.0.jar

hbase-client-0.98.8-hadoop2.jar

hbase-common-0.98.8-hadoop2.jar

hbase-protocol-0.98.8-hadoop2.jar

htrace-core-2.04.jar

jackson-core-asl-1.9.13.jar

jackson-mapper-asl-1.9.13.jar

log4j-1.2.17.jar

mysql-connector-java-5.1.7-bin.jar

netty-3.6.6.Final.jar

protobuf-java-2.5.0.jar

slf4j-api-1.7.5.jar

slf4j-log4j12-1.7.5.jar

zookeeper-3.4.6.jar

First, make sure the required JARs are on the web project's classpath. Below is a small wrapper around the HBase connection setup:

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.client.HBaseAdmin;

/**

* @author a01513

*

*/

public class HBaseConnector {

private static final String QUOREM = "192.168.103.50,192.168.103.51,192.168.103.52,192.168.103.53,192.168.103.54,192.168.103.55,192.168.103.56,192.168.103.57,192.168.103.58,192.168.103.59,192.168.103.60";

private static final String CLIENT_PORT = "2181";

private HBaseAdmin admin;

private Configuration conf;

public HBaseAdmin getHBaseAdmin(){

getConfiguration();

try {

admin = new HBaseAdmin(conf);

} catch (Exception e) {

e.printStackTrace();

}

return admin;

}

public Configuration getConfiguration(){

if(conf == null){

conf = HBaseConfiguration.create();

conf.set("hbase.zookeeper.quorum", QUOREM);

conf.set("hbase.zookeeper.property.clientPort", CLIENT_PORT);

}

return conf;

}

}

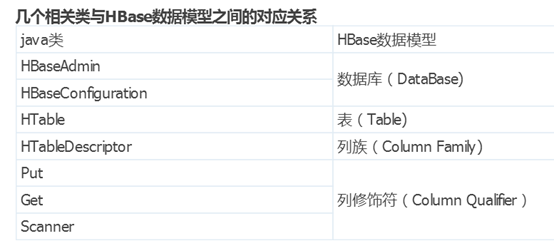

HBase Java API

HBaseConfiguration

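Class: org.apache.hadoop.hbase.HBaseConfiguration

Purpose: configures HBase

| Return type | Method | Description |
|---|---|---|
| void | addResource(Path file) | Adds a resource from the file at the given path |
| void | clear() | Clears all properties that have been set |
| String | get(String name) | Gets the value of the named property |
| boolean | getBoolean(String name, boolean defaultValue) | Gets the property as a boolean; returns defaultValue if the property is unset or not a boolean |
| void | set(String name, String value) | Sets the value of the named property |
| void | setBoolean(String name, boolean value) | Sets a boolean property |

Usage example:

-

Configuration hconfig = HBaseConfiguration.create(); // the HBaseConfiguration constructor is deprecated

-

hconfig.set("hbase.zookeeper.property.clientPort","2181");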

HBaseAdmin

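Class: org.apache.hadoop.hbase.client.HBaseAdmin

Purpose: provides an interface for managing HBase table metadata. Its methods cover creating and deleting tables, listing tables, enabling and disabling tables, and adding or removing column families.

| Return type | Method | Description |
|---|---|---|
| void | addColumn(String tableName, HColumnDescriptor column) | Adds a column family to an existing table |
| static void | checkHBaseAvailable(Configuration conf) | Checks whether HBase is running; throws an exception if it is not |
| void | createTable(HTableDescriptor desc) | Creates a table (synchronous) |
| void | deleteTable(byte[] tableName) | Deletes an existing table |
| void | enableTable(byte[] tableName) | Enables a table |
| void | disableTable(byte[] tableName) | Disables a table |
| HTableDescriptor[] | listTables() | Lists all user-space tables |
| void | modifyTable(byte[] tableName, HTableDescriptor htd) | Modifies a table's schema; asynchronous, so it may take some time to complete |
| boolean | tableExists(String tableName) | Checks whether a table exists |

Usage example:

-

HBaseAdmin admin = new HBaseAdmin(config);

-

admin.disableTable("tablename");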

HTableDescriptor

Class: org.apache.hadoop.hbase.HTableDescriptor

Purpose: holds a table's name and its column families

| Return type | Method | Description |
|---|---|---|
| void | addFamily(HColumnDescriptor) | Adds a column family |
| HColumnDescriptor | removeFamily(byte[] column) | Removes a column family |
| byte[] | getName() | Gets the table name |
| byte[] | getValue(byte[] key) | Gets a property value |
| void | setValue(String key, String value) | Sets a property value |

Usage example:

-

HTableDescriptor htd = new HTableDescriptor(table);

-

htd.addFamily(new HColumnDescriptor("family"));


HTable

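Class: org.apache.hadoop.hbase.client.HTable

Purpose: communicates directly with a single HBase table. This class is not thread-safe for updates.

| Return type | Method | Description |
|---|---|---|
| boolean | checkAndPut(byte[] row, byte[] family, byte[] qualifier, byte[] value, Put put) | Atomically checks whether row/family/qualifier matches the given value and, if it does, applies the Put |
| void | close() | Releases all resources, flushing any updates still held in the client-side write buffer |
| boolean | exists(Get get) | Checks whether the cells specified by the Get exist in the table |
| Result | get(Get get) | Gets the values of the specified cells in a row |
| byte[][] | getEndKeys() | Gets the end key of every Region of the currently open table |
| ResultScanner | getScanner(byte[] family) | Gets a scanner over the given column family |
| HTableDescriptor | getTableDescriptor() | Gets the table's HTableDescriptor |
| byte[] | getTableName() | Gets the table name |
| static boolean | isTableEnabled(HBaseConfiguration conf, String tableName) | Checks whether the table is enabled |
| void | put(Put put) | Adds values to the table |

Usage example: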

-

HTable table = new HTable(conf, Bytes.toBytes(tablename));

-

ResultScanner scanner = table.getScanner(family);

Put

Class: org.apache.hadoop.hbase.client.Put

Purpose: performs an add (insert) operation on a single row

| Return type | Method | Description |
|---|---|---|
| Put | add(byte[] family, byte[] qualifier, byte[] value) | Adds the specified column and value to the Put |
| Put | add(byte[] family, byte[] qualifier, long ts, byte[] value) | Adds the specified column, value, and timestamp to the Put |
| byte[] | getRow() | Gets the Put's row key |
| RowLock | getRowLock() | Gets the Put's row lock |
| long | getTimeStamp() | Gets the Put's timestamp |
| boolean | isEmpty() | Checks whether the familyMap is empty |
| Put | setTimeStamp(long timeStamp) | Sets the Put's timestamp |

Usage example:

-

HTable table = new HTable(conf,Bytes.toBytes(tablename));

-

Put p = new Put(brow); // create a Put for the given row

-

p.add(family,qualifier,value);

-

table.put(p);

Get

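Class: org.apache.hadoop.hbase.client.Get

Purpose: retrieves information about a single row

| Return type | Method | Description |
|---|---|---|
| Get | addColumn(byte[] family, byte[] qualifier) | Gets the column with the specified family and qualifier |
| Get | addFamily(byte[] family) | Gets all columns of the specified column family |
| Get | setTimeRange(long minStamp, long maxStamp) | Gets only versions whose timestamps fall in [minStamp, maxStamp) |
| Get | setFilter(Filter filter) | Sets a server-side filter to apply when the Get runs |

Usage example: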

-

HTable table = new HTable(conf, Bytes.toBytes(tablename));

-

Get g = new Get(Bytes.toBytes(row));
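Combine `setTimeRange(minStamp, maxStamp)` with `setMaxVersions(n)`, and the server returns versions whose timestamps fall in [minStamp, maxStamp), newest first, with at most n of them. Below is a local sketch of that selection rule. It assumes one column's versions are held in a map from timestamp to value. `VersionSelector` is hypothetical. In HBase, the column family's VERSIONS setting also limits how many versions exist to be returned.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NavigableMap;

// Hypothetical local model of version selection for one column (timestamp -> value).
class VersionSelector {
    // Timestamps in [minStamp, maxStamp), newest first, at most maxVersions of them.
    static List<Long> select(NavigableMap<Long, String> versions, long minStamp, long maxStamp, int maxVersions) {
        List<Long> picked = new ArrayList<>();
        for (Long ts : versions.subMap(minStamp, true, maxStamp, false).descendingKeySet()) {
            if (picked.size() == maxVersions) {
                break;
            }
            picked.add(ts);
        }
        return picked;
    }
}
```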

Result

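Class: org.apache.hadoop.hbase.client.Result

Purpose: holds the single-row values returned by a Get or Scan. Its methods return values either directly or as various Map structures (key-value pairs).

| Return type | Method | Description |
|---|---|---|
| boolean | containsColumn(byte[] family, byte[] qualifier) | Checks whether the specified column exists |
| NavigableMap<byte[],byte[]> | getFamilyMap(byte[] family) | Gets the qualifier-to-value map for the given column family |
| byte[] | getValue(byte[] family, byte[] qualifier) | Gets the latest value of the specified column |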

ResultScanner

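Class: interface org.apache.hadoop.hbase.client.ResultScanner

Purpose: the client-side interface for retrieving scan results

| Return type | Method | Description |
|---|---|---|
| void | close() | Closes the scanner and releases the resources allocated to it |
| Result | next() | Gets the next row's values |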

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.HColumnDescriptor;

import org.apache.hadoop.hbase.HTableDescriptor;

import org.apache.hadoop.hbase.KeyValue;

import org.apache.hadoop.hbase.client.Delete;

import org.apache.hadoop.hbase.client.Get;

import org.apache.hadoop.hbase.client.HBaseAdmin;

import org.apache.hadoop.hbase.client.HTable;

import org.apache.hadoop.hbase.client.HTablePool;

import org.apache.hadoop.hbase.client.Put;

import org.apache.hadoop.hbase.client.Result;

import org.apache.hadoop.hbase.client.ResultScanner;

import org.apache.hadoop.hbase.client.Scan;

import org.apache.hadoop.hbase.util.Bytes;

public class Hbase {

// Static configuration shared by all methods

static Configuration conf = null;

static {

conf = HBaseConfiguration.create();

conf.set("hbase.zookeeper.quorum", "localhost");

}

/*

* Create a table

*

* @tableName table name

*

* @family list of column families

*/

public static void creatTable(String tableName, String[] family)

throws Exception {

HBaseAdmin admin = new HBaseAdmin(conf);

HTableDescriptor desc = new HTableDescriptor(tableName);

for (int i = 0; i < family.length; i++) {

desc.addFamily(new HColumnDescriptor(family[i]));

}

if (admin.tableExists(tableName)) {

System.out.println("table Exists!");

return; // just return; System.exit() here would also kill the caller's JVM

} else {

admin.createTable(desc);

System.out.println("create table Success!");

}

}

/*

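* Add data to a table (suitable for fixed tables whose column families are known in advance)

*

* @rowKey rowKey

*

* @tableName table name

*

* @column1 column names for the first column family (article)

*

* @value1 values for the first family's columns

*

* @column2 column names for the second column family (author)

*

* @value2 values for the second family's columns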

*/

public static void addData(String rowKey, String tableName,

String[] column1, String[] value1, String[] column2, String[] value2)

throws IOException {

Put put = new Put(Bytes.toBytes(rowKey));// 设置rowkey

HTable table = new HTable(conf, Bytes.toBytes(tableName)); // get the table; HTable handles record-level operations such as insert, delete, update, and query

HColumnDescriptor[] columnFamilies = table.getTableDescriptor() // get all column families

.getColumnFamilies();

for (int i = 0; i < columnFamilies.length; i++) {

String familyName = columnFamilies[i].getNameAsString(); // get the column family name

if (familyName.equals("article")) { // put data into the article family

for (int j = 0; j < column1.length; j++) {

put.add(Bytes.toBytes(familyName),

Bytes.toBytes(column1[j]), Bytes.toBytes(value1[j]));

}

}

if (familyName.equals("author")) { // put data into the author family

for (int j = 0; j < column2.length; j++) {

put.add(Bytes.toBytes(familyName),

Bytes.toBytes(column2[j]), Bytes.toBytes(value2[j]));

}

}

}

table.put(put);

System.out.println("add data Success!");

}

/*

* Query by row key

*

* @rowKey rowKey

*

* @tableName table name

*/

public static Result getResult(String tableName, String rowKey)

throws IOException {

Get get = new Get(Bytes.toBytes(rowKey));

HTable table = new HTable(conf, Bytes.toBytes(tableName)); // get the table

Result result = table.get(get);

for (KeyValue kv : result.list()) {

System.out.println("family:" + Bytes.toString(kv.getFamily()));

System.out

.println("qualifier:" + Bytes.toString(kv.getQualifier()));

System.out.println("value:" + Bytes.toString(kv.getValue()));

System.out.println("Timestamp:" + kv.getTimestamp());

System.out.println("-------------------------------------------");

}

return result;

}

/*

* Scan every row of an HBase table

*

* @tableName table name

*/

public static void getResultScann(String tableName) throws IOException {

Scan scan = new Scan();

ResultScanner rs = null;

HTable table = new HTable(conf, Bytes.toBytes(tableName));

try {

rs = table.getScanner(scan);

for (Result r : rs) {

for (KeyValue kv : r.list()) {

System.out.println("row:" + Bytes.toString(kv.getRow()));

System.out.println("family:"

+ Bytes.toString(kv.getFamily()));

System.out.println("qualifier:"

+ Bytes.toString(kv.getQualifier()));

System.out

.println("value:" + Bytes.toString(kv.getValue()));

System.out.println("timestamp:" + kv.getTimestamp());

System.out

.println("-------------------------------------------");

}

}

} finally {

if (rs != null) { // rs stays null if getScanner() throws

rs.close();

}

table.close();

}

}

/*

* Scan an HBase table over a row-key range: start_rowkey is inclusive, stop_rowkey is exclusive

*

* @tableName table name

*/

public static void getResultScann(String tableName, String start_rowkey,

String stop_rowkey) throws IOException {

Scan scan = new Scan();

scan.setStartRow(Bytes.toBytes(start_rowkey));

scan.setStopRow(Bytes.toBytes(stop_rowkey));

ResultScanner rs = null;

HTable table = new HTable(conf, Bytes.toBytes(tableName));

try {

rs = table.getScanner(scan);

for (Result r : rs) {

for (KeyValue kv : r.list()) {

System.out.println("row:" + Bytes.toString(kv.getRow()));

System.out.println("family:"

+ Bytes.toString(kv.getFamily()));

System.out.println("qualifier:"

+ Bytes.toString(kv.getQualifier()));

System.out

.println("value:" + Bytes.toString(kv.getValue()));

System.out.println("timestamp:" + kv.getTimestamp());

System.out

.println("-------------------------------------------");

}

}

} finally {

if (rs != null) { // rs stays null if getScanner() throws

rs.close();

}

table.close();

}

}

/*

* Query one column of a row

*

* @tableName table name

*

* @rowKey rowKey

*/

public static void getResultByColumn(String tableName, String rowKey,

String familyName, String columnName) throws IOException {

HTable table = new HTable(conf, Bytes.toBytes(tableName));

Get get = new Get(Bytes.toBytes(rowKey));

get.addColumn(Bytes.toBytes(familyName), Bytes.toBytes(columnName)); // select the column by family and qualifier

Result result = table.get(get);

for (KeyValue kv : result.list()) {

System.out.println("family:" + Bytes.toString(kv.getFamily()));

System.out

.println("qualifier:" + Bytes.toString(kv.getQualifier()));

System.out.println("value:" + Bytes.toString(kv.getValue()));

System.out.println("Timestamp:" + kv.getTimestamp());

System.out.println("-------------------------------------------");

}

}

/*

* Update one column of a row

*

* @tableName table name

*

* @rowKey rowKey

*

* @familyName column family name

*

* @columnName column name

*

* @value the new value

*/

public static void updateTable(String tableName, String rowKey,

String familyName, String columnName, String value)

throws IOException {

HTable table = new HTable(conf, Bytes.toBytes(tableName));

Put put = new Put(Bytes.toBytes(rowKey));

put.add(Bytes.toBytes(familyName), Bytes.toBytes(columnName),

Bytes.toBytes(value));

table.put(put);

System.out.println("update table Success!");

}

/*

* Query multiple versions of a column's data

*

* @tableName table name

*

* @rowKey rowKey

*

* @familyName column family name

*

* @columnName column name

*/

public static void getResultByVersion(String tableName, String rowKey,

String familyName, String columnName) throws IOException {

HTable table = new HTable(conf, Bytes.toBytes(tableName));

Get get = new Get(Bytes.toBytes(rowKey));

get.addColumn(Bytes.toBytes(familyName), Bytes.toBytes(columnName));

get.setMaxVersions(5); // up to 5 versions; the column family's VERSIONS setting caps how many are actually kept

Result result = table.get(get);

for (KeyValue kv : result.list()) {

System.out.println("family:" + Bytes.toString(kv.getFamily()));

System.out

.println("qualifier:" + Bytes.toString(kv.getQualifier()));

System.out.println("value:" + Bytes.toString(kv.getValue()));

System.out.println("Timestamp:" + kv.getTimestamp());

System.out.println("-------------------------------------------");

}

/*

* List<?> results = table.get(get).list(); Iterator<?> it =

* results.iterator(); while (it.hasNext()) {

* System.out.println(it.next().toString()); }

*/

}

/*

* Delete the specified column (all of its versions)

*

* @tableName table name

*

* @rowKey rowKey

*

* @familyName column family name

*

* @columnName column name

*/

public static void deleteColumn(String tableName, String rowKey,

String familyName, String columnName) throws IOException {

HTable table = new HTable(conf, Bytes.toBytes(tableName));

Delete deleteColumn = new Delete(Bytes.toBytes(rowKey));

deleteColumn.deleteColumns(Bytes.toBytes(familyName), // deleteColumns removes all versions of the column

Bytes.toBytes(columnName));

table.delete(deleteColumn);

System.out.println(familyName + ":" + columnName + " is deleted!");

}

/*

* Delete all columns of the specified row (the whole row)

*

* @tableName table name

*

* @rowKey rowKey

*/

public static void deleteAllColumn(String tableName, String rowKey)

throws IOException {

HTable table = new HTable(conf, Bytes.toBytes(tableName));

Delete deleteAll = new Delete(Bytes.toBytes(rowKey));

table.delete(deleteAll);

System.out.println("all columns are deleted!");

}

/*

* Delete a table

*

* @tableName table name

*/

public static void deleteTable(String tableName) throws IOException {

HBaseAdmin admin = new HBaseAdmin(conf);

admin.disableTable(tableName);

admin.deleteTable(tableName);

System.out.println(tableName + " is deleted!");

}

public static void main(String[] args) throws Exception {

// Create the table

String tableName = "blog2";

String[] family = { "article", "author" };

// creatTable(tableName, family);

// Add data to the table

String[] column1 = { "title", "content", "tag" };

String[] value1 = {

"Head First HBase",

"HBase is the Hadoop database. Use it when you need random, realtime read/write access to your Big Data.",

"Hadoop,HBase,NoSQL" };

String[] column2 = { "name", "nickname" };

String[] value2 = { "nicholas", "lee" };

addData("rowkey1", "blog2", column1, value1, column2, value2);

addData("rowkey2", "blog2", column1, value1, column2, value2);

addData("rowkey3", "blog2", column1, value1, column2, value2);

// Scan the whole table

getResultScann("blog2");

// Scan by row-key range

getResultScann("blog2", "rowkey4", "rowkey5");

// Query by row key

getResult("blog2", "rowkey1");

// Query the value of one column

getResultByColumn("blog2", "rowkey1", "author", "name");

// Update the column

updateTable("blog2", "rowkey1", "author", "name", "bin");

// Query the value of the column again

getResultByColumn("blog2", "rowkey1", "author", "name");

// Query multiple versions of the column

getResultByVersion("blog2", "rowkey1", "author", "name");

// Delete one column

deleteColumn("blog2", "rowkey1", "author", "nickname");

// Delete all columns of the row

deleteAllColumn("blog2", "rowkey1");

// Delete the table

deleteTable("blog2");

deleteTable("blog2");

}

}
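The `deleteColumn` helper above calls `Delete.deleteColumns(...)`. In this HBase version, that call removes all versions of the column, whereas `Delete.deleteColumn(...)` removes only the newest version. The sketch below models the difference on one column's versions held in a map. It is a simplification: HBase actually writes delete markers (tombstones) that hide the data until a major compaction removes it. `ColumnDeletes` is a hypothetical class.

```java
import java.util.NavigableMap;

// Hypothetical model of one column's versions: timestamp -> value.
class ColumnDeletes {
    // Like Delete.deleteColumns(family, qualifier): every version goes away.
    static void deleteAllVersions(NavigableMap<Long, String> versions) {
        versions.clear();
    }

    // Like Delete.deleteColumn(family, qualifier): only the newest version goes away.
    static void deleteLatestVersion(NavigableMap<Long, String> versions) {
        if (!versions.isEmpty()) {
            versions.remove(versions.lastKey()); // largest timestamp = newest
        }
    }
}
```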

Basic HBase usage example:

import java.io.IOException;

import java.util.ArrayList;

import java.util.List;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.HColumnDescriptor;

import org.apache.hadoop.hbase.HTableDescriptor;

import org.apache.hadoop.hbase.KeyValue;

import org.apache.hadoop.hbase.MasterNotRunningException;

import org.apache.hadoop.hbase.ZooKeeperConnectionException;

import org.apache.hadoop.hbase.client.Delete;

import org.apache.hadoop.hbase.client.Get;

import org.apache.hadoop.hbase.client.HBaseAdmin;

import org.apache.hadoop.hbase.client.HTable;

import org.apache.hadoop.hbase.client.HTablePool;

import org.apache.hadoop.hbase.client.Put;

import org.apache.hadoop.hbase.client.Result;

import org.apache.hadoop.hbase.client.ResultScanner;

import org.apache.hadoop.hbase.client.Scan;

import org.apache.hadoop.hbase.filter.Filter;

import org.apache.hadoop.hbase.filter.FilterList;

import org.apache.hadoop.hbase.filter.SingleColumnValueFilter;

import org.apache.hadoop.hbase.filter.CompareFilter.CompareOp;

import org.apache.hadoop.hbase.util.Bytes;

public class HbaseTest {

public static Configuration configuration;

static {

configuration = HBaseConfiguration.create();

configuration.set("hbase.zookeeper.property.clientPort", "2181");

configuration.set("hbase.zookeeper.quorum", "192.168.1.100");

configuration.set("hbase.master", "192.168.1.100:60000"); // 60000 is the default Master port in HBase 0.98

}

public static void main(String[] args) {

// createTable("wujintao");

// insertData("wujintao");

// QueryAll("wujintao");

// QueryByCondition1("wujintao");

// QueryByCondition2("wujintao");

//QueryByCondition3("wujintao");

//deleteRow("wujintao","abcdef");

deleteByCondition("wujintao","abcdef");

}

public static void createTable(String tableName) {

System.out.println("start create table ......");

try {

HBaseAdmin hBaseAdmin = new HBaseAdmin(configuration);

if (hBaseAdmin.tableExists(tableName)) { // if the table already exists, delete it first, then recreate it

hBaseAdmin.disableTable(tableName);

hBaseAdmin.deleteTable(tableName);

System.out.println(tableName + " exists, deleting....");

}

HTableDescriptor tableDescriptor = new HTableDescriptor(tableName);

tableDescriptor.addFamily(new HColumnDescriptor("column1"));

tableDescriptor.addFamily(new HColumnDescriptor("column2"));

tableDescriptor.addFamily(new HColumnDescriptor("column3"));

hBaseAdmin.createTable(tableDescriptor);

} catch (MasterNotRunningException e) {

e.printStackTrace();

} catch (ZooKeeperConnectionException e) {

e.printStackTrace();

} catch (IOException e) {

e.printStackTrace();

}

System.out.println("end create table ......");

}

public static void insertData(String tableName) {

System.out.println("start insert data ......");

HTablePool pool = new HTablePool(configuration, 1000);

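org.apache.hadoop.hbase.client.HTableInterface table = pool.getTable(tableName); // HTablePool hands out a pooled wrapper, so casting it to HTable fails at runtime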

Put put = new Put("112233bbbcccc".getBytes()); // one Put is one row; create another Put for a second row. Each row has a unique row key, here the value passed to the Put constructor

put.add("column1".getBytes(), null, "aaa".getBytes()); // first column of this row

put.add("column2".getBytes(), null, "bbb".getBytes()); // second column of this row

put.add("column3".getBytes(), null, "ccc".getBytes()); // third column of this row

try {

table.put(put);

} catch (IOException e) {

e.printStackTrace();

}

System.out.println("end insert data ......");

}

public static void dropTable(String tableName) {

try {

HBaseAdmin admin = new HBaseAdmin(configuration);

admin.disableTable(tableName);

admin.deleteTable(tableName);

} catch (MasterNotRunningException e) {

e.printStackTrace();

} catch (ZooKeeperConnectionException e) {

e.printStackTrace();

} catch (IOException e) {

e.printStackTrace();

}

}

public static void deleteRow(String tablename, String rowkey) {

try {

HTable table = new HTable(configuration, tablename);

List<Delete> list = new ArrayList<Delete>();

Delete d1 = new Delete(rowkey.getBytes());

list.add(d1);

table.delete(list);

System.out.println("Row deleted successfully!");

} catch (IOException e) {

e.printStackTrace();

}

}

public static void deleteByCondition(String tablename, String rowkey) {

// No usable API has been found yet for deleting rows by a condition on anything other than the row key, nor for clearing all data in a table

}

public static void QueryAll(String tableName) {

HTablePool pool = new HTablePool(configuration, 1000);

org.apache.hadoop.hbase.client.HTableInterface table = pool.getTable(tableName); // a cast to HTable would fail: the pool returns a wrapper

try {

ResultScanner rs = table.getScanner(new Scan());

for (Result r : rs) {

System.out.println("Got rowkey: " + new String(r.getRow()));

for (KeyValue keyValue : r.raw()) {

System.out.println("Column family: " + new String(keyValue.getFamily())

+ " ==== Value: " + new String(keyValue.getValue()));

}

}

} catch (IOException e) {

e.printStackTrace();

}

}

public static void QueryByCondition1(String tableName) {

HTablePool pool = new HTablePool(configuration, 1000);

org.apache.hadoop.hbase.client.HTableInterface table = pool.getTable(tableName); // a cast to HTable would fail: the pool returns a wrapper

try {

Get get = new Get("abcdef".getBytes()); // query by row key

Result r = table.get(get);

System.out.println("Got rowkey: " + new String(r.getRow()));

for (KeyValue keyValue : r.raw()) {

System.out.println("Column family: " + new String(keyValue.getFamily())

+ " ==== Value: " + new String(keyValue.getValue()));

}

} catch (IOException e) {

e.printStackTrace();

}

}

public static void QueryByCondition2(String tableName) {

try {

HTablePool pool = new HTablePool(configuration, 1000);

org.apache.hadoop.hbase.client.HTableInterface table = pool.getTable(tableName); // a cast to HTable would fail: the pool returns a wrapper

Filter filter = new SingleColumnValueFilter(Bytes

.toBytes("column1"), null, CompareOp.EQUAL, Bytes

.toBytes("aaa")); // match rows where column1 equals "aaa"

Scan s = new Scan();

s.setFilter(filter);

ResultScanner rs = table.getScanner(s);

for (Result r : rs) {

System.out.println("Got rowkey: " + new String(r.getRow()));

for (KeyValue keyValue : r.raw()) {

System.out.println("Column family: " + new String(keyValue.getFamily())

+ " ==== Value: " + new String(keyValue.getValue()));

}

}

} catch (Exception e) {

e.printStackTrace();

}

}

public static void QueryByCondition3(String tableName) {

try {

HTablePool pool = new HTablePool(configuration, 1000);

org.apache.hadoop.hbase.client.HTableInterface table = pool.getTable(tableName); // a cast to HTable would fail: the pool returns a wrapper

List<Filter> filters = new ArrayList<Filter>();

Filter filter1 = new SingleColumnValueFilter(Bytes

.toBytes("column1"), null, CompareOp.EQUAL, Bytes

.toBytes("aaa"));

filters.add(filter1);

Filter filter2 = new SingleColumnValueFilter(Bytes

.toBytes("column2"), null, CompareOp.EQUAL, Bytes

.toBytes("bbb"));

filters.add(filter2);

Filter filter3 = new SingleColumnValueFilter(Bytes

.toBytes("column3"), null, CompareOp.EQUAL, Bytes

.toBytes("ccc"));

filters.add(filter3);

FilterList filterList1 = new FilterList(filters);

Scan scan = new Scan();

scan.setFilter(filterList1);

ResultScanner rs = table.getScanner(scan);

for (Result r : rs) {

System.out.println("Got rowkey: " + new String(r.getRow()));

for (KeyValue keyValue : r.raw()) {

System.out.println("Column family: " + new String(keyValue.getFamily())

+ " ==== Value: " + new String(keyValue.getValue()));

}

}

rs.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}

HBase data retrieval example:

/*

* Need Packages:

* commons-codec-1.4.jar

*

* commons-logging-1.1.1.jar

*

* hadoop-0.20.2-core.jar

*

* hbase-0.90.2.jar

*

* log4j-1.2.16.jar

*

* zookeeper-3.3.2.jar

*

*/

import java.io.IOException;

import java.util.ArrayList;

import java.util.List;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.KeyValue;

import org.apache.hadoop.hbase.client.Get;

import org.apache.hadoop.hbase.client.HTable;

import org.apache.hadoop.hbase.client.Result;

import org.apache.hadoop.hbase.client.ResultScanner;

import org.apache.hadoop.hbase.client.Scan;

import org.apache.hadoop.hbase.filter.CompareFilter.CompareOp;

import org.apache.hadoop.hbase.filter.FilterList;

import org.apache.hadoop.hbase.filter.SingleColumnValueFilter;

import org.apache.hadoop.hbase.util.Bytes;

public class HbaseSelecter

{

public static Configuration configuration = null;

static

{

configuration = HBaseConfiguration.create();

//configuration.set("hbase.master", "192.168.0.201:60000");

configuration.set("hbase.zookeeper.quorum", "idc01-hd-nd-03,idc01-hd-nd-04,idc01-hd-nd-05");

//configuration.set("hbase.zookeeper.property.clientPort", "2181");

}

public static void selectRowKey(String tablename, String rowKey) throws IOException

{

HTable table = new HTable(configuration, tablename);

Get g = new Get(rowKey.getBytes());

Result rs = table.get(g);

for (KeyValue kv : rs.raw())

{

System.out.println("--------------------" + new String(kv.getRow()) + "----------------------------");

System.out.println("Column Family: " + new String(kv.getFamily()));

System.out.println("Column :" + new String(kv.getQualifier()));

System.out.println("value : " + new String(kv.getValue()));

}

}

public static void selectRowKeyFamily(String tablename, String rowKey, String family) throws IOException

{

HTable table = new HTable(configuration, tablename);

Get g = new Get(rowKey.getBytes());

g.addFamily(Bytes.toBytes(family));

Result rs = table.get(g);

for (KeyValue kv : rs.raw())

{

System.out.println("--------------------" + new String(kv.getRow()) + "----------------------------");

System.out.println("Column Family: " + new String(kv.getFamily()));

System.out.println("Column :" + new String(kv.getQualifier()));

System.out.println("value : " + new String(kv.getValue()));

}

}

public static void selectRowKeyFamilyColumn(String tablename, String rowKey, String family, String column)

throws IOException

{

HTable table = new HTable(configuration, tablename);

Get g = new Get(rowKey.getBytes());

g.addColumn(family.getBytes(), column.getBytes());

Result rs = table.get(g);

for (KeyValue kv : rs.raw())

{

System.out.println("--------------------" + new String(kv.getRow()) + "----------------------------");

System.out.println("Column Family: " + new String(kv.getFamily()));

System.out.println("Column :" + new String(kv.getQualifier()));

System.out.println("value : " + new String(kv.getValue()));

}

}

public static void selectFilter(String tablename, List<String> arr) throws IOException

{

HTable table = new HTable(configuration, tablename);

Scan scan = new Scan(); // create a Scan

FilterList filterList = new FilterList(); // list of filters

for (String v : arr)

{ // index 0 is the column family, 1 the column name, 2 the value to match

String[] wheres = v.split(",");

filterList.addFilter(new SingleColumnValueFilter( // one filter per condition

wheres[0].getBytes(), wheres[1].getBytes(),

CompareOp.EQUAL, // conditions are ANDed (FilterList defaults to MUST_PASS_ALL)

wheres[2].getBytes()));

}

scan.setFilter(filterList);

ResultScanner ResultScannerFilterList = table.getScanner(scan);

for (Result rs = ResultScannerFilterList.next(); rs != null; rs = ResultScannerFilterList.next())

{

for (KeyValue kv : rs.list())

{

System.out.println("--------------------" + new String(kv.getRow()) + "----------------------------");

System.out.println("Column Family: " + new String(kv.getFamily()));

System.out.println("Column :" + new String(kv.getQualifier()));

System.out.println("value : " + new String(kv.getValue()));

}

}

}

public static void main(String[] args) throws Exception

{

if(args.length < 2){

System.out.println("Usage: HbaseSelecter table key");

System.exit(-1);

}

System.out.println("Table: " + args[0] + " , key: " + args[1]);

selectRowKey(args[0], args[1]);

/*

System.out.println("------------------------Query by row key----------------------------------");

selectRowKey("b2c", "yihaodian1002865");

selectRowKey("b2c", "yihaodian1003396");

System.out.println("------------------------Query by row key + column family----------------------------------");

selectRowKeyFamily("riapguh", "用户A", "user");

selectRowKeyFamily("riapguh", "用户B", "user");

System.out.println("------------------------Query by row key + column family + column name----------------------------------");

selectRowKeyFamilyColumn("riapguh", "用户A", "user", "user_code");

selectRowKeyFamilyColumn("riapguh", "用户B", "user", "user_code");

System.out.println("------------------------Query by condition----------------------------------");

List<String> arr = new ArrayList<String>();

arr.add("dpt,dpt_code,d_001");

arr.add("user,user_code,u_0001");

selectFilter("riapguh", arr);

*/

}

}
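`selectFilter()` above expects each condition as "family,qualifier,value" and ANDs the conditions together, because `FilterList` defaults to `MUST_PASS_ALL`. There is one subtlety: by default, a `SingleColumnValueFilter` does not reject a row that lacks the tested column, and `setFilterIfMissing(true)` changes that behavior. The sketch below evaluates the same condition strings against a row modeled as a map from "family:qualifier" to value. A flag stands in for `setFilterIfMissing`. `AndConditions` is hypothetical and runs locally, not on a Region server.

```java
import java.util.List;
import java.util.Map;

// Hypothetical local evaluation of selectFilter-style conditions ("family,qualifier,value").
// A row is a map from "family:qualifier" to value.
class AndConditions {
    static boolean matchesAll(Map<String, String> row, List<String> conditions, boolean filterIfMissing) {
        for (String condition : conditions) {
            String[] parts = condition.split(","); // 0 = family, 1 = qualifier, 2 = expected value
            String actual = row.get(parts[0] + ":" + parts[1]);
            if (actual == null) {
                if (filterIfMissing) {
                    return false; // like setFilterIfMissing(true): rows without the column are dropped
                }
                continue;         // default: a missing column does not reject the row
            }
            if (!parts[2].equals(actual)) {
                return false;     // MUST_PASS_ALL: one failing condition rejects the row
            }
        }
        return true;
    }
}
```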

HBase example: exporting specific columns (for small data volumes):

/*

* Need Packages:

* commons-codec-1.4.jar

*

* commons-logging-1.1.1.jar

*

* hadoop-0.20.2-core.jar

*

* hbase-0.90.2.jar

*

* log4j-1.2.16.jar

*

* zookeeper-3.3.2.jar

*

* Example: javac -classpath ./:/data/chenzhenjing/code/panama/lib/hbase-0.90.2.jar:/data/chenzhenjing/code/panama/lib/hadoop-core-0.20-append-for-hbase.jar:/data/chenzhenjing/code/panama/lib/commons-logging-1.0.4.jar:/data/chenzhenjing/code/panama/lib/commons-lang-2.4.jar:/data/chenzhenjing/code/panama/lib/commons-io-1.2.jar:/data/chenzhenjing/code/panama/lib/zookeeper-3.3.2.jar:/data/chenzhenjing/code/panama/lib/log4j-1.2.15.jar:/data/chenzhenjing/code/panama/lib/commons-codec-1.3.jar DiffHbase.java

*/

import java.io.BufferedReader;

import java.io.File;

import java.io.IOException;

import java.io.FileInputStream;

import java.io.InputStreamReader;

import java.io.FileOutputStream;

import java.io.OutputStreamWriter;

import java.io.StringReader;

import java.text.SimpleDateFormat;

import java.util.Date;


import java.util.ArrayList;

import java.util.List;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.KeyValue;

import org.apache.hadoop.hbase.client.Get;

import org.apache.hadoop.hbase.client.HTable;

import org.apache.hadoop.hbase.client.Result;

import org.apache.hadoop.hbase.client.ResultScanner;

import org.apache.hadoop.hbase.client.Scan;

import org.apache.hadoop.hbase.filter.CompareFilter.CompareOp;

import org.apache.hadoop.hbase.filter.FilterList;

import org.apache.hadoop.hbase.filter.SingleColumnValueFilter;

import org.apache.hadoop.hbase.util.Bytes;

class ColumnUtils {

public static byte[] getFamily(String column){

return getBytes(column, 0);

}

public static byte[] getQualifier(String column){

return getBytes(column, 1);

}

private static byte[] getBytes(String column , int offset){

String[] split = column.split(":");

return Bytes.toBytes(offset > split.length -1 ? split[0] :split[offset]);

}

}

public class DiffHbase

{

public static Configuration configuration = null;

static

{

configuration = HBaseConfiguration.create();

configuration.set("hbase.zookeeper.quorum", "idc01-hd-ds-01,idc01-hd-ds-02,idc01-hd-ds-03");

}

public static void selectRowKey(String tablename, String rowKey) throws IOException

{

HTable table = new HTable(configuration, tablename);

Get g = new Get(rowKey.getBytes());

Result rs = table.get(g);

for (KeyValue kv : rs.raw())

{

System.out.println("--------------------" + new String(kv.getRow()) + "----------------------------");

System.out.println("Column Family: " + new String(kv.getFamily()));

System.out.println("Column :" + new String(kv.getQualifier()) + "\t");

System.out.println("value : " + new String(kv.getValue()));

}

table.close(); // release the client resources held by this HTable

}

public static void selectRowKeyFamily(String tablename, String rowKey, String family) throws IOException

{

HTable table = new HTable(configuration, tablename);

Get g = new Get(rowKey.getBytes());

g.addFamily(Bytes.toBytes(family));

Result rs = table.get(g);

for (KeyValue kv : rs.raw())

{

System.out.println("--------------------" + new String(kv.getRow()) + "----------------------------");

System.out.println("Column Family: " + new String(kv.getFamily()));

System.out.println("Column :" + new String(kv.getQualifier()) + "\t");

System.out.println("value : " + new String(kv.getValue()));

}

table.close(); // release the client resources held by this HTable

}

public static void selectRowKeyFamilyColumn(String tablename, String rowKey, String family, String column)

throws IOException

{

HTable table = new HTable(configuration, tablename);

Get g = new Get(rowKey.getBytes());

g.addColumn(family.getBytes(), column.getBytes());

Result rs = table.get(g);

for (KeyValue kv : rs.raw())

{

System.out.println("--------------------" + new String(kv.getRow()) + "----------------------------");

System.out.println("Column Family: " + new String(kv.getFamily()));

System.out.println("Column :" + new String(kv.getQualifier()) + "\t");

System.out.println("value : " + new String(kv.getValue()));

}

table.close(); // release the client resources held by this HTable

}

private static final String USAGE = "Usage: DiffHbase [-o outfile] tablename infile filterColumns...";

/**

* Prints the usage message and aborts the program by throwing a RuntimeException.

*

* @param message The message to print first.

*/

private static void printUsage(String message) {

System.err.println(message);

System.err.println(USAGE);

throw new RuntimeException(USAGE);

}

private static void PrintId(String id, Result rs){

String value = Bytes.toString( rs.getValue(ColumnUtils.getFamily("info:url"), ColumnUtils.getQualifier("info:url")));

if(value == null){

System.out.println( id + "\tNULL");

}else{

System.out.println( id + "\t" + value);

}

}

private static void WriteId(String id, Result rs, FileOutputStream os){

String value = Bytes.toString( rs.getValue(ColumnUtils.getFamily("info:url"), ColumnUtils.getQualifier("info:url")));

try{

if(value == null){

os.write( (id + "\tNULL\n").getBytes());

}else{

os.write( (id + "\t" + value + "\n").getBytes());

}

}

catch (IOException e) {

e.printStackTrace();

}

}

private static void PrintRow(String id, Result rs){

System.out.println("--------------------" + id + "----------------------------");

for (KeyValue kv : rs.raw())

{

System.out.println(new String(kv.getFamily()) + ":" + new String(kv.getQualifier()) + " : " + new String(kv.getValue()));

}

}

public static void main(String[] args) throws Exception

{

if (args.length < 3) {

printUsage("Too few arguments");

}

String outfile = null;

String tablename = args[0];

String dictfile = args[1];

int skipLen = 2; // number of leading arguments that are not filter columns

if( args[0].equals("-o")){

if (args.length < 5) {

printUsage("Too few arguments for -o");

}

outfile = args[1];

tablename = args[2];

dictfile = args[3];

skipLen = 4;

}

HTable table = new HTable(configuration, tablename);

String[] filterColumns = new String[args.length - skipLen];

System.arraycopy(args, skipLen, filterColumns, 0, args.length - skipLen);

System.out.println("filterColumns: ");

for(int i=0; i<filterColumns.length; ++i){

System.out.println("\t" + filterColumns[i]);

}

FileOutputStream os = null;

if(outfile != null){

os = new FileOutputStream(outfile);

}

int count = 0;

SimpleDateFormat df = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss"); // date format for progress messages

File srcFile = new File(dictfile);

FileInputStream in = new FileInputStream(srcFile);

InputStreamReader isr = new InputStreamReader(in);

BufferedReader br = new BufferedReader(isr);

String read = null;

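while ((read = br.readLine()) != null) {

String[] split = read.trim().split("\\s"); // split on whitespace; only the first field (the row key) is used

if( split.length < 1 || split[0].isEmpty() ){ // a blank line still splits into one empty element

System.out.println("Error line: " + read);

continue;

}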

if( ++count % 1000 == 0){

System.out.println(df.format(new Date()) + " : " + count + " rows processed." ); // new Date() gives the current system time

}

// System.out.println("ROWKEY:" + split[0]);

Get g = new Get(split[0].getBytes());

Result rs = table.get(g);

if( rs == null || rs.isEmpty()){ // HTable.get() returns an empty Result, not null, when the row does not exist

System.out.println("No Result for " + split[0]);

continue;

}

for(int i=0; i<filterColumns.length; ++i){

String value = Bytes.toString(rs.getValue(ColumnUtils.getFamily(filterColumns[i]), ColumnUtils.getQualifier(filterColumns[i])));

if(value == null){

if( os == null){

PrintId(split[0], rs);

}else{

WriteId(split[0], rs, os);

}

// PrintRow(split[0], rs);

break;

}

}

}

br.close();

isr.close();

in.close();

if (os != null) {

os.close(); // flush and close the -o output file

}

table.close();

}

}
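Stripped of the HBase calls, DiffHbase reads row keys one per line, fetches each row, and reports the row as soon as one of the requested filter columns has no value. The following plain-Java sketch models that check over an in-memory map so the logic can be run without a cluster. The class and method names (`MissingColumnCheck`, `rowsMissingAny`) and the sample rows are hypothetical; each row is a map from `family:qualifier` to value, standing in for what `Result.getValue()` would return.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, HBase-free model of the DiffHbase check. Each row maps
// "family:qualifier" to a cell value, standing in for Result.getValue().
final class MissingColumnCheck {

    /** Returns, in input order, the row keys that lack at least one filter column. */
    static List<String> rowsMissingAny(Map<String, Map<String, String>> rows, List<String> filterColumns) {
        List<String> missing = new ArrayList<>();
        for (Map.Entry<String, Map<String, String>> row : rows.entrySet()) {
            Map<String, String> cells = row.getValue();
            for (String column : filterColumns) {
                // A row with no cells at all (null here) counts as missing every column.
                if (cells == null || cells.get(column) == null) {
                    missing.add(row.getKey());
                    break; // report each row once, like the break in DiffHbase.main
                }
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        Map<String, Map<String, String>> rows = new LinkedHashMap<>();
        Map<String, String> complete = new LinkedHashMap<>();
        complete.put("info:url", "http://example.com/1");
        complete.put("info:title", "first");
        rows.put("item1", complete);
        Map<String, String> partial = new LinkedHashMap<>();
        partial.put("info:url", "http://example.com/2");
        rows.put("item2", partial);
        rows.put("item3", null);
        System.out.println(rowsMissingAny(rows, Arrays.asList("info:url", "info:title")));
        // prints [item2, item3]
    }
}
```

Because of the `break`, a row missing several columns is reported only once, which matches how DiffHbase prints at most one line per row key.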

HBase MapReduce example: full-table scan (large data volume):

package com.hbase.mapreduce;

import java.io.File;

import java.io.FileInputStream;

import java.io.IOException;

import java.util.ArrayList;

import java.util.List;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.HConstants;

import org.apache.hadoop.hbase.client.Scan;

import org.apache.hadoop.hbase.mapreduce.IdentityTableMapper;

import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;

import org.apache.hadoop.hbase.io.ImmutableBytesWritable;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.mapred.JobConf;

import org.apache.hadoop.util.GenericOptionsParser;

import org.apache.hadoop.hbase.filter.SingleColumnValueFilter;

import org.apache.hadoop.hbase.filter.CompareFilter;

import org.apache.hadoop.hbase.filter.CompareFilter.CompareOp;

import org.apache.hadoop.hbase.filter.BinaryComparator;

import org.apache.hadoop.hbase.util.Bytes;

import com.hbase.utils.ColumnUtils; // ColumnUtils is declared in package com.hbase.utils below

public class ExportHbase {

private static final String INFOCATEGORY = "info:storecategory";

private static final String USAGE = "Usage: ExportHbase " +

"-r <numReduceTasks> -indexConf <iconfFile>\n" +

"-indexDir <indexDir> -webSite <amazon> [-needupdate <true> -isVisible -startTime <long>] -table <tableName> -columns <columnName1> " +

"[<columnName2> ...]";

/**

* Prints the usage message and aborts the program by throwing a RuntimeException.

*

* @param message The message to print first.

*/

private static void printUsage(String message) {

System.err.println(message);

System.err.println(USAGE);

throw new RuntimeException(USAGE);

}

/**

* Creates a new job.

* @param conf The base configuration; the job settings are added to it.

*

* @param args The command line arguments.

* @throws IOException When reading the configuration fails.

*/

public static Job createSubmittableJob(Configuration conf, String[] args)

throws IOException {

if (args.length < 7) {

printUsage("Too few arguments");

}

int numReduceTasks = 1;

String iconfFile = null;

String indexDir = null;

String tableName = null;

String website = null;

String needupdate = "";

String expectShopGrade = "";

String dino = "6";

String isdebug = "0";

long debugThreshold = 10000;

String debugThresholdStr = Long.toString(debugThreshold);

String queue = "offline";

long endTime = Long.MAX_VALUE;

int maxversions = 1;

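long startTime = System.currentTimeMillis() - 28*24*60*60*1000L; // 28 days ago; the L suffix makes the last multiplication long, avoiding int overflow

long distartTime = System.currentTimeMillis() - 30*24*60*60*1000L; // 30 days ago

long diusedTime = System.currentTimeMillis() - 30*24*60*60*1000L;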

String startTimeStr = Long.toString(startTime);

String diusedTimeStr = Long.toString(diusedTime);

String quorum = null;

String isVisible = "";

List<String> columns = new ArrayList<String>() ;

boolean bFilter = false;

// parse args

for (int i = 0; i < args.length; i++) { // include the last argument so a trailing flag such as -filter is not ignored

if ("-r".equals(args[i])) {

numReduceTasks = Integer.parseInt(args[++i]);

} else if ("-indexConf".equals(args[i])) {

iconfFile = args[++i];

} else if ("-indexDir".equals(args[i])) {

indexDir = args[++i];

} else if ("-table".equals(args[i])) {

tableName = args[++i];

} else if ("-webSite".equals(args[i])) {

website = args[++i];

} else if ("-startTime".equals(args[i])) {

startTimeStr = args[++i];

startTime = Long.parseLong(startTimeStr);

} else if ("-needupdate".equals(args[i])) {

needupdate = args[++i];

} else if ("-isVisible".equals(args[i])) {

isVisible = "true";

} else if ("-shopgrade".equals(args[i])) {

expectShopGrade = args[++i];

} else if ("-queue".equals(args[i])) {

queue = args[++i];

} else if ("-dino".equals(args[i])) {

dino = args[++i];

} else if ("-maxversions".equals(args[i])) {

maxversions = Integer.parseInt(args[++i]);

} else if ("-distartTime".equals(args[i])) {

distartTime = Long.parseLong(args[++i]);

} else if ("-diendTime".equals(args[i])) {

endTime = Long.parseLong(args[++i]);

} else if ("-diusedTime".equals(args[i])) {

diusedTimeStr = args[++i];

diusedTime = Long.parseLong(diusedTimeStr);

} else if ("-quorum".equals(args[i])) {

quorum = args[++i];

} else if ("-filter".equals(args[i])) {

bFilter = true;

} else if ("-columns".equals(args[i])) {

columns.add(args[++i]);

while (i + 1 < args.length && !args[i + 1].startsWith("-")) {

String columnname = args[++i];

columns.add(columnname);

System.out.println("args column----: " + columnname);

}

} else if ("-debugThreshold".equals(args[i])) {

isdebug = "1";

debugThresholdStr = args[++i];

debugThreshold = Long.parseLong( debugThresholdStr );

}

else {

printUsage("Unsupported option " + args[i]);

}

}

if (distartTime > endTime) {

printUsage("distartTime must <= diendTime");

}

if (indexDir == null || tableName == null || columns.isEmpty()) {

printUsage("Index directory, table name and at least one column must " +

"be specified");

}

if (iconfFile != null) {

// set index configuration content from a file

String content = readContent(iconfFile);

conf.set("hbase.index.conf", content);

}

// ExportHbaseMapper.setConf() reads the settings below, so set them even when -indexConf is omitted

if (website != null) {

conf.set("hbase.website.name", website); // Configuration.set() fails on a null value

}

conf.set("hbase.needupdate.productDB", needupdate);

conf.set("hbase.expect.shopgrade", expectShopGrade);

conf.set("hbase.di.no", dino);

conf.set("hbase.expect.item.visible", isVisible);

conf.set("hbase.index.startTime", startTimeStr);

conf.set("hbase.index.diusedTime", diusedTimeStr);

conf.set("hbase.index.debugThreshold", debugThresholdStr);

conf.set("hbase.index.debug", isdebug);

if (quorum != null) {

conf.set("hbase.zookeeper.quorum", quorum);

}

String temp = "";

for (String column : columns) {

temp = temp + column + "|";

}

temp = temp.substring(0, temp.length() - 1); // drop the trailing "|"

conf.set("hbase.index.column", temp);

System.out.println("hbase.index.column: " + temp);

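Job job = new Job(conf, "export data from table " + tableName);

job.setJarByClass(ExportHbase.class); // ship the jar that contains ExportHbase and ExportHbaseMapper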

((JobConf) job.getConfiguration()).setQueueName(queue);

// number of indexes to partition into

job.setNumReduceTasks(numReduceTasks);

Scan scan = new Scan();

scan.setCacheBlocks(false);

// limit scan range

scan.setTimeRange(distartTime, endTime);

// scan.setMaxVersions(maxversions);

scan.setMaxVersions(1);

/* limit scan columns */

for (String column : columns) {

// addColumn() restricts the scan to this column; a following addFamily() would widen it back to the whole family

scan.addColumn(ColumnUtils.getFamily(column), ColumnUtils.getQualifier(column));

}

// set filter

if( bFilter ){

System.out.println("only export guangtaobao data. ");

// the filter only sees columns the scan returns, so request info:producttype explicitly

scan.addColumn(Bytes.toBytes("info"), Bytes.toBytes("producttype"));

SingleColumnValueFilter filter = new SingleColumnValueFilter(

Bytes.toBytes("info"),

Bytes.toBytes("producttype"),

CompareFilter.CompareOp.EQUAL,

new BinaryComparator(Bytes.toBytes("guangtaobao")) );

filter.setFilterIfMissing(true);

scan.setFilter(filter);

}

TableMapReduceUtil.initTableMapperJob(tableName, scan, ExportHbaseMapper.class,

Text.class, Text.class, job);

// job.setReducerClass(ExportHbaseReducer.class);

FileOutputFormat.setOutputPath(job, new Path(indexDir));

return job;

}

/**

* Reads xml file of indexing configurations. The xml format is similar to

* hbase-default.xml and hadoop-default.xml. For an example configuration,

* see the <code>createIndexConfContent</code> method in TestTableIndex.

*

* @param fileName The file to read.

* @return XML configuration read from file.

* @throws IOException When the XML is broken.

*/

private static String readContent(String fileName) throws IOException {

File file = new File(fileName);

int length = (int) file.length();

if (length == 0) {

printUsage("Index configuration file " + fileName + " does not exist");

}

int bytesRead = 0;

byte[] bytes = new byte[length];

FileInputStream fis = new FileInputStream(file);

try {

// read entire file into content

while (bytesRead < length) {

int read = fis.read(bytes, bytesRead, length - bytesRead);

if (read > 0) {

bytesRead += read;

} else {

break;

}

}

} finally {

fis.close();

}

return new String(bytes, 0, bytesRead, HConstants.UTF8_ENCODING);

}

/**

* The main entry point.

*

* @param args The command line arguments.

* @throws Exception When running the job fails.

*/

public static void main(String[] args) throws Exception {

Configuration conf = HBaseConfiguration.create();

String[] otherArgs =

new GenericOptionsParser(conf, args).getRemainingArgs();

Job job = createSubmittableJob(conf, otherArgs);

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}
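createSubmittableJob collects every argument after `-columns` up to the next token that starts with `-`, joins them with `|` into the `hbase.index.column` setting, and ExportHbaseMapper.setConf splits that value again on `"\\|"`. The plain-Java sketch below replays this round trip so the two conventions can be checked side by side. The class `ColumnArgs` and its methods are hypothetical helpers, not part of the original code.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch of the -columns round trip in ExportHbase: parse on the
// driver, store as one "|"-joined string, split again in the mapper.
final class ColumnArgs {

    /** Collects the values after "-columns" up to the next token that starts with "-". */
    static List<String> parseColumns(String[] args) {
        List<String> columns = new ArrayList<>();
        for (int i = 0; i < args.length; i++) {
            if ("-columns".equals(args[i]) && i + 1 < args.length) {
                columns.add(args[++i]); // the first value is taken unconditionally, as in ExportHbase
                while (i + 1 < args.length && !args[i + 1].startsWith("-")) {
                    columns.add(args[++i]);
                }
            }
        }
        return columns;
    }

    /** Driver side: the value stored under hbase.index.column. */
    static String join(List<String> columns) {
        return String.join("|", columns);
    }

    /** Mapper side: "|" is a regex metacharacter, hence the escaped "\\|". */
    static List<String> split(String joined) {
        return Arrays.asList(joined.split("\\|"));
    }

    public static void main(String[] args) {
        String[] cli = {"-table", "items", "-columns", "info:url", "info:title", "-filter"};
        List<String> columns = parseColumns(cli);
        String joined = join(columns);
        System.out.println(joined);        // info:url|info:title
        System.out.println(split(joined)); // [info:url, info:title]
    }
}
```

A column name that contains `|`, or one after the first that starts with `-`, would not survive this encoding; if that matters, a different separator or one configuration key per column would be needed.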

//

package com.hbase.mapreduce;

import java.io.IOException;

import java.util.ArrayList;

import java.util.List;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.conf.Configurable;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.client.Result;

import org.apache.hadoop.hbase.io.ImmutableBytesWritable;

import org.apache.hadoop.hbase.mapreduce.TableMapper;

import org.apache.hadoop.hbase.util.Bytes;

import com.hbase.utils.ColumnUtils;

/**

* Emits one record per row: the row key as the key and the configured columns (tab-separated, NULL when missing) as the value.

*/

@SuppressWarnings("deprecation")

public class ExportHbaseMapper extends TableMapper<Text,Text> implements Configurable {

private static final Text keyTEXT = new Text();

private static final Text SENDTEXT = new Text();

private Configuration conf = null;

private long startTime = 0;

List<String> columnMap = null;

private long rCount = 0;

private long errCount = 0;

private int debug = 0;

private long thresCount = 10000;

public void map(ImmutableBytesWritable key, Result value, Context context) throws IOException, InterruptedException {

rCount++;

String itemid = Bytes.toString(key.get());

if (itemid.contains("&")) {

context.getCounter("Error", "rowkey contains \"&\"").increment(1);

return;

}

StringBuilder outstr = new StringBuilder(); // no synchronization needed inside a single map call

for (String col : columnMap) {

String tmp = Bytes.toString(value.getValue(ColumnUtils.getFamily(col), ColumnUtils.getQualifier(col)));

if (tmp == null){

context.getCounter("Error", col+" No value in hbase").increment(1);

errCount++;

if( debug > 0 && (errCount % thresCount == 0)){

System.err.println( itemid + ": doesn't have " + col + " data!");

}

outstr.append("NULL" + "\t");

}else{

if( tmp.contains("guangtaobao") ){

outstr.append("1" + "\t");

}else{

outstr.append(tmp.trim() + "\t");

}

}

}

if ( ! outstr.toString().isEmpty() ) {

SENDTEXT.set( outstr.toString() );

keyTEXT.set(itemid);

context.write(keyTEXT, SENDTEXT);

if( debug > 0 && (rCount % (thresCount * 10000) == 0)){ // sample one row every thresCount*10000 rows

System.out.println( keyTEXT.toString() + "\t" + SENDTEXT.toString() );

}

}

else

{

context.getCounter("Error", "No Column output").increment(1);

return;

}

}

/**

* Returns the current configuration.

*

* @return The current configuration.

* @see org.apache.hadoop.conf.Configurable#getConf()

*/

@Override

public Configuration getConf() {

return conf;

}

/**

* Sets the configuration. This is used to set up the index configuration.

*

* @param configuration

* The configuration to set.

* @see org.apache.hadoop.conf.Configurable#setConf(org.apache.hadoop.conf.Configuration)

*/

@Override

public void setConf(Configuration configuration) {

this.conf = configuration;

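startTime = conf.getLong("hbase.index.startTime", 0L);

thresCount = conf.getLong("hbase.index.debugThreshold", 10000L);

debug = conf.getInt("hbase.index.debug", 0);

String columnConf = conf.get("hbase.index.column");

if (columnConf == null) {

throw new IllegalArgumentException("hbase.index.column is not set; pass -columns to ExportHbase");

}

String[] columns = columnConf.split("\\|");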

columnMap = new ArrayList<String>();

for (String column : columns) {

System.out.println("Output column: " + column);

columnMap.add(column);

}

}

}
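ExportHbaseMapper.map turns each row into one tab-separated value: a missing cell becomes `NULL`, a value containing `guangtaobao` is replaced by `1`, any other value is trimmed, and every field, including the last, is followed by a tab. The sketch below reproduces that formatting over a plain map so the output layout can be checked without a cluster. It also shows why the debug-sampling condition needs explicit parentheses: `%` and `*` have the same precedence and associate left to right, so `rCount % thresCount*10000 == 0` means `(rCount % thresCount) * 10000 == 0`. The class `RowFormatter`, its methods, and the sample column `info:price` are hypothetical.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, HBase-free copy of the formatting in ExportHbaseMapper.map():
// cells maps "family:qualifier" to the value that Result.getValue() would return.
final class RowFormatter {

    /** Builds the tab-separated value the mapper writes for one row. */
    static String format(Map<String, String> cells, List<String> columns) {
        StringBuilder out = new StringBuilder();
        for (String col : columns) {
            String value = cells.get(col);
            if (value == null) {
                out.append("NULL");          // missing cell
            } else if (value.contains("guangtaobao")) {
                out.append("1");             // product-type marker collapsed to "1"
            } else {
                out.append(value.trim());
            }
            out.append('\t');                // every field, including the last, ends with a tab
        }
        return out.toString();
    }

    /** Debug sampling with explicit parentheses: true once every thresCount * 10000 rows. */
    static boolean shouldLog(long rCount, long thresCount) {
        return rCount % (thresCount * 10000) == 0;
    }

    public static void main(String[] args) {
        Map<String, String> cells = new HashMap<>();
        cells.put("info:price", " 9.9 ");
        cells.put("info:producttype", "guangtaobao");
        String line = format(cells, Arrays.asList("info:price", "info:producttype", "info:url"));
        System.out.println(line.replace("\t", "|"));    // 9.9|1|NULL|
        System.out.println(10000 % 10000 * 10000 == 0); // true: parsed as (10000 % 10000) * 10000
        System.out.println(shouldLog(10000, 10000));    // false
    }
}
```

Without the parentheses the debug line fires every `thresCount` rows rather than every `thresCount * 10000` rows, which is usually far noisier than a sampling threshold is meant to be.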

//

package com.hbase.utils;

import org.apache.hadoop.hbase.util.Bytes;

public class ColumnUtils {

public static byte[] getFamily(String column){

return getBytes(column, 0);

}

public static byte[] getQualifier(String column){

return getBytes(column, 1);

}

private static byte[] getBytes(String column , int offset){

String[] split = column.split(":");

return Bytes.toBytes(offset > split.length -1 ? split[0] :split[offset]);

}

}
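ColumnUtils accepts column specs of the form `family:qualifier` and, when the requested piece is missing, falls back to the first piece. That fallback has two consequences that are easy to miss: a bare family such as `info` yields the qualifier `info` (not an empty qualifier), and anything after a second colon is dropped. The sketch below (a hypothetical `ColumnSpec` class) keeps the same rule but returns `String` instead of `byte[]`, so it runs without the HBase jar.

```java
// Hypothetical String-returning copy of ColumnUtils; the original wraps the
// chosen piece in Bytes.toBytes().
final class ColumnSpec {

    static String family(String column) {
        return part(column, 0);
    }

    static String qualifier(String column) {
        return part(column, 1);
    }

    // Same rule as ColumnUtils.getBytes: take the requested piece, else fall back to the first one.
    private static String part(String column, int offset) {
        String[] split = column.split(":"); // trailing empty strings are discarded by split()
        return offset > split.length - 1 ? split[0] : split[offset];
    }

    public static void main(String[] args) {
        for (String spec : new String[] {"info:url", "info", "info:", "a:b:c"}) {
            System.out.println(spec + " -> " + family(spec) + " / " + qualifier(spec));
        }
        // info:url -> info / url
        // info -> info / info
        // info: -> info / info
        // a:b:c -> a / b
    }
}
```

Since HBase qualifiers may themselves contain `:` (only family names may not), splitting on the first colon only, with `column.split(":", 2)`, would keep `a:b:c` as family `a` and qualifier `b:c`; whether that matters depends on the qualifiers actually stored in the table.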
