- (void)viewDidLoad {
    [super viewDidLoad];
    // Do any additional setup after loading the view, typically from a nib.

    UIImageView *imageView = [[UIImageView alloc] initWithFrame:self.view.bounds];
    imageView.image = [UIImage imageNamed:@"timg.jpeg"];
    [self.view addSubview:imageView];

    CGFloat width = imageView.image.size.width;
    CGFloat height = imageView.image.size.height;
    CGFloat sWidth = imageView.bounds.size.width;
    CGFloat sHeight = imageView.bounds.size.height;
    CGFloat x = width / sWidth;
    CGFloat y = height / sHeight;

    // Resize the image view's frame to match the image's aspect ratio
    if (x > y) {
        imageView.frame = CGRectMake(0, (sHeight - height / x) / 2, sWidth, height / x);
    } else {
        imageView.frame = CGRectMake((sWidth - width / y) / 2, 0, width / y, sHeight);
    }

    // Scale the image by redrawing it at the image view's size
    UIGraphicsBeginImageContext(imageView.bounds.size);
    [imageView.image drawInRect:CGRectMake(0, 0, imageView.bounds.size.width, imageView.bounds.size.height)];
    UIImage *scaledImage = UIGraphicsGetImageFromCurrentImageContext();
    UIGraphicsEndImageContext();
    imageView.image = scaledImage;

    // Read UIKit state on the main thread, before moving to a background queue
    CGFloat imageHeight = imageView.bounds.size.height;

    // Detection
    dispatch_async(dispatch_get_global_queue(0, 0), ^{
        CIImage *cImage = [CIImage imageWithCGImage:scaledImage.CGImage];

        // Set the detection accuracy
        NSDictionary *opts = @{CIDetectorAccuracy : CIDetectorAccuracyHigh};
        /* Lower accuracy, higher performance */
        // CORE_IMAGE_EXPORT NSString* const CIDetectorAccuracyLow NS_AVAILABLE(10_7, 5_0);
        /* Lower performance, higher accuracy */
        // CORE_IMAGE_EXPORT NSString* const CIDetectorAccuracyHigh NS_AVAILABLE(10_7, 5_0);
        CIDetector *detector = [CIDetector detectorOfType:CIDetectorTypeFace context:nil options:opts];

        // hasSmile, leftEyeClosed and rightEyeClosed are only filled in when
        // smile and blink detection are requested explicitly
        NSDictionary *featureOpts = @{CIDetectorSmile : @YES, CIDetectorEyeBlink : @YES};
        NSArray *features = [detector featuresInImage:cImage options:featureOpts];

        if ([features count] == 0) {
            dispatch_async(dispatch_get_main_queue(), ^{
                NSLog(@"Detection failed");
            });
            return;
        }

        for (CIFaceFeature *feature in features) {
            // Is the face smiling?
            BOOL smile = feature.hasSmile;
            NSLog(@"%@", smile ? @"Smiling" : @"Not smiling");

            // Are the eyes open?
            BOOL leftEyeClosed = feature.leftEyeClosed;
            BOOL rightEyeClosed = feature.rightEyeClosed;
            NSLog(@"%@", leftEyeClosed ? @"Left eye closed" : @"Left eye open");
            NSLog(@"%@", rightEyeClosed ? @"Right eye closed" : @"Right eye open");

            // Face frame, with the Y axis flipped into UIKit coordinates
            CGRect rect = feature.bounds;
            rect.origin.y = imageHeight - rect.size.height - rect.origin.y;
            // faceRect is assumed to be a CGRect instance variable declared
            // elsewhere in this class; it ends up holding the last face found
            faceRect = rect;
            NSLog(@"Face %@", NSStringFromCGRect(rect));

            // Left eye
            if (feature.hasLeftEyePosition) {
                CGPoint eye = feature.leftEyePosition;
                eye.y = imageHeight - eye.y; // flip the Y axis
                NSLog(@"Left eye %@", NSStringFromCGPoint(eye));
            }

            // Right eye
            if (feature.hasRightEyePosition) {
                CGPoint eye = feature.rightEyePosition;
                eye.y = imageHeight - eye.y; // flip the Y axis
                NSLog(@"Right eye %@", NSStringFromCGPoint(eye));
            }

            // Mouth
            if (feature.hasMouthPosition) {
                CGPoint mouth = feature.mouthPosition;
                mouth.y = imageHeight - mouth.y; // flip the Y axis
                NSLog(@"Mouth %@", NSStringFromCGPoint(mouth));
            }
        }

        dispatch_async(dispatch_get_main_queue(), ^{
            NSLog(@"Detection finished");
            // Highlight the detected face with a translucent blue overlay
            UIView *view = [[UIView alloc] initWithFrame:faceRect];
            view.backgroundColor = [UIColor blueColor];
            view.alpha = 0.3;
            [imageView addSubview:view];
        });
    });
}

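About scaling the image: the image view and the image usually differ in size, and the detector reports coordinates in the image's own size. Redrawing the image at the image view's size makes the two match, so the detected coordinates can be used directly inside the image view.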
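The frame calculation that precedes the redraw can be sketched on its own. The snippet below is a minimal illustration in plain C (which also compiles inside an Objective-C file); `FitRect` and `aspect_fit` are hypothetical names introduced here, not UIKit API, and the sketch assumes all sizes are positive.

```c
/* Hypothetical helper type for this sketch; in the real code this is a CGRect. */
typedef struct {
    double x, y, width, height;
} FitRect;

/* Returns the largest rectangle with the image's aspect ratio that fits
   inside a view of view_w x view_h, centered along the axis with spare room.
   Mirrors the x > y branch logic used in viewDidLoad. */
FitRect aspect_fit(double image_w, double image_h, double view_w, double view_h) {
    double sx = image_w / view_w; /* horizontal scale factor */
    double sy = image_h / view_h; /* vertical scale factor */
    FitRect r;
    if (sx > sy) {
        /* Width is the limiting side: fill the width, center vertically. */
        r.width = view_w;
        r.height = image_h / sx;
        r.x = 0.0;
        r.y = (view_h - r.height) / 2.0;
    } else {
        /* Height is the limiting side: fill the height, center horizontally. */
        r.width = image_w / sy;
        r.height = view_h;
        r.x = (view_w - r.width) / 2.0;
        r.y = 0.0;
    }
    return r;
}
```

For example, a 400×200 image in a 200×200 view yields the rectangle (0, 50, 200, 100): the image fills the width and is centered vertically.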

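About flipping the Y axis: the screen's (UIKit) coordinate origin (0,0) is at the top-left corner, whereas the detector's image coordinates have their origin at the bottom-left corner.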
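The conversion between the two coordinate systems is a single subtraction per value. The following is a small sketch in plain C of the same arithmetic the code applies to `feature.bounds` and to the eye and mouth positions; `FlipPoint`, `FlipRect`, `flip_point_y` and `flip_rect_y` are hypothetical names used only for illustration.

```c
/* Hypothetical stand-ins for CGPoint and CGRect in this sketch. */
typedef struct {
    double x, y;
} FlipPoint;

typedef struct {
    double x, y, width, height;
} FlipRect;

/* Converts a point from a bottom-left origin to a top-left origin
   (or back) for an image of the given height. */
FlipPoint flip_point_y(FlipPoint p, double image_height) {
    p.y = image_height - p.y;
    return p;
}

/* Converts a rectangle the same way. The rectangle's origin is its
   lower-left corner before the flip and its upper-left corner after,
   so the rectangle's own height has to be subtracted as well. */
FlipRect flip_rect_y(FlipRect r, double image_height) {
    r.y = image_height - r.height - r.y;
    return r;
}
```

With an image height of 100, a face rectangle at (10, 20) with size 30×40 in detector coordinates ends up at (10, 40) in screen coordinates, and a point at y = 30 moves to y = 70.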

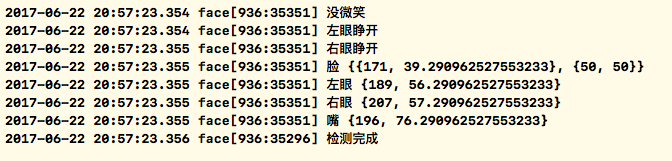

Result of running the app

Console output
