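Background:

A web service is being migrated to Huawei Cloud.

================Working intranet environment: relevant configuration===================

1. The web service's ES cluster settings are as follows: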

elasticAddress1=192.168.6.16

elasticAddress2=192.168.6.17

elasticPort1=9300

elasticPort2=9301

elasticClusterName=elasticsearch-crm

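The ES cluster runs on two intranet servers, with the IPs and ports configured as above.

The ES configuration files are as follows:

# ES configuration file on 192.168.6.16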

cluster.name: elasticsearch-crm

node.name: "node-1"

node.master: true

node.data: true

network.host : 192.168.6.16

http.port : 9200

transport.tcp.port: 9300

discovery.zen.ping.unicast.hosts: ["192.168.6.17:9301"]

discovery.zen.fd.connect_on_network_disconnect : true

discovery.zen.initial_ping_timeout : 10s

discovery.zen.fd.ping_interval : 2s

discovery.zen.fd.ping_retries : 5

# ES configuration file on 192.168.6.17

cluster.name: elasticsearch-crm

node.name: "node-2"

node.master: true

node.data: true

network.host : 192.168.6.17

http.port : 9201

transport.tcp.port: 9301

discovery.zen.ping.unicast.hosts: ["192.168.6.16:9300"]

discovery.zen.fd.connect_on_network_disconnect : true

discovery.zen.initial_ping_timeout : 10s

discovery.zen.fd.ping_interval : 2s

discovery.zen.fd.ping_retries : 5

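==================Migrated environment: initial configuration========================

2. On Huawei Cloud, ES runs as a single node on the same server as the web service, so the configuration is as follows.

Web service configuration: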

elasticAddress1=127.0.0.1

elasticAddress2=127.0.0.1

elasticPort1=9200

elasticPort2=9200

elasticClusterName=elasticsearch-crm

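Configuration file of the ES instance on the same server as the web service (started with docker; a single node only, not a multi-node cluster):

# Cluster name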

cluster.name: elasticsearch-crm

node.master: true

node.data: true

network.host : 127.0.0.1

http.port : 9200

transport.tcp.port: 9300

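The docker start command is as follows (image version 6.5.4). Note that with --net=host the container shares the host's network stack, so Docker ignores the -p port mappings: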

docker run -itd --name es1 -p 9200:9200 -p 9300:9300 --restart=always -v /mnt/apps/es/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml -v /mnt/apps/es/data:/usr/share/elasticsearch/data --net=host elasticsearch:6.5.4

The errors begin:

Error 1:

Exception in thread "main" NoNodeAvailableException[None of the configured nodes are available: [{#transport#-1}{GRphuiRLRgOVTP0707mGFQ}{127.0.0.1}{127.0.0.1:9200}]]

at org.elasticsearch.client.transport.TransportClientNodesService.ensureNodesAreAvailable(TransportClientNodesService.java:347)

at org.elasticsearch.client.transport.TransportClientNodesService.execute(TransportClientNodesService.java:245)

at org.elasticsearch.client.transport.TransportProxyClient.execute(TransportProxyClient.java:60)

at org.elasticsearch.client.transport.TransportClient.doExecute(TransportClient.java:378)

at org.elasticsearch.client.support.AbstractClient.execute(AbstractClient.java:405)

at org.elasticsearch.client.support.AbstractClient.execute(AbstractClient.java:394)

at org.elasticsearch.action.ActionRequestBuilder.execute(ActionRequestBuilder.java:46)

at org.elasticsearch.action.ActionRequestBuilder.get(ActionRequestBuilder.java:53)

at com.es.test.EsTest.addIndex1(EsTest.java:70)

at com.es.test.EsTest.main(EsTest.java:89)

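Cause:

Ports exposed by ES:

9200 serves the HTTP (REST) API and is mainly used for external access.

9300 serves the binary transport protocol over TCP, which is how the Java client jar talks to ES.

ES nodes communicate with each other over 9300, and the web service also has to connect to ES on 9300, not 9200.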
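To tell the two ports apart on a running node, one option is to see which of them answers a plain HTTP request. The following is a minimal sketch using only the JDK; the class name `HttpPortCheck` and the method `speaksHttp` are made up for illustration and are not part of any ES client. The port that answers HTTP is the REST port and is therefore not the one to put in `elasticPort1` / `elasticPort2`.

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.URI;

class HttpPortCheck {

    /** Returns true if host:port answers a plain HTTP GET with a valid HTTP status line. */
    static boolean speaksHttp(String host, int port, int timeoutMillis) {
        HttpURLConnection conn = null;
        try {
            conn = (HttpURLConnection) URI.create("http://" + host + ":" + port + "/")
                    .toURL().openConnection();
            conn.setConnectTimeout(timeoutMillis);
            conn.setReadTimeout(timeoutMillis);
            conn.setRequestMethod("GET");
            // getResponseCode() returns -1 when the reply is not valid HTTP.
            return conn.getResponseCode() > 0;
        } catch (IOException e) {
            // Connection refused, timeout, or a peer that does not speak HTTP.
            return false;
        } finally {
            if (conn != null) {
                conn.disconnect();
            }
        }
    }

    public static void main(String[] args) {
        // Host defaults to loopback; pass the real IP as the first argument if needed.
        String host = args.length > 0 ? args[0] : "127.0.0.1";
        for (int port : new int[] {9200, 9300}) {
            System.out.println(host + ":" + port + " speaks HTTP: " + speaksHttp(host, port, 2000));
        }
    }
}
```

A `false` result only says the port did not answer HTTP within the timeout; it does not prove that the transport protocol is listening there.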

Fix:

Change the web service's ES connection settings to:

elasticAddress1=127.0.0.1

elasticAddress2=127.0.0.1

elasticPort1=9300

elasticPort2=9300

elasticClusterName=elasticsearch-crm

Error 2:

Exception in thread "main" NoNodeAvailableException[None of the configured nodes are available: [{#transport#-1}{GRphuiRLRgOVTP0707mGFQ}{127.0.0.1}{127.0.0.1:9300}]]

at org.elasticsearch.client.transport.TransportClientNodesService.ensureNodesAreAvailable(TransportClientNodesService.java:347)

at org.elasticsearch.client.transport.TransportClientNodesService.execute(TransportClientNodesService.java:245)

at org.elasticsearch.client.transport.TransportProxyClient.execute(TransportProxyClient.java:60)

at org.elasticsearch.client.transport.TransportClient.doExecute(TransportClient.java:378)

at org.elasticsearch.client.support.AbstractClient.execute(AbstractClient.java:405)

at org.elasticsearch.client.support.AbstractClient.execute(AbstractClient.java:394)

at org.elasticsearch.action.ActionRequestBuilder.execute(ActionRequestBuilder.java:46)

at org.elasticsearch.action.ActionRequestBuilder.get(ActionRequestBuilder.java:53)

at com.es.test.EsTest.addIndex1(EsTest.java:70)

at com.es.test.EsTest.main(EsTest.java:89)

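Cause:

Using 127.0.0.1 in the web service's connection settings did not work.

The machine's real IP should be used instead.

Both the web service configuration and the ES configuration file should be changed to use the machine's real IP.

Fix:

Change the web service's ES connection settings to: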

elasticAddress1=10.10.10.60

elasticAddress2=10.10.10.60

elasticPort1=9300

elasticPort2=9300

elasticClusterName=elasticsearch-crm

Change the ES configuration file to:

# Cluster name

cluster.name: elasticsearch-crm

node.master: true

node.data: true

network.host : 10.10.10.60

http.port : 9200

transport.tcp.port: 9300

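==============Summary for this class of problem========================

When a Java program connecting to ES fails with NoNodeAvailableException[None of the configured nodes are available], troubleshoot in this order:

First, check the configuration. In both the web service and the ES configuration files:

1. Use the machine's real IP.

2. On the ES side, note which ports it actually listens on.

3. On the web service side, use 9300 (or whatever other transport TCP port ES is configured with), never the HTTP port 9200.

4. The cluster name must be identical on both sides.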
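The checklist above can be sketched as a small pre-flight check. This is only an illustration: the property keys (`elasticAddress1`, `elasticPort1`, `elasticClusterName`, and so on) are the ones used in this write-up, while the class `EsClientConfigCheck` and the method `findProblems` are hypothetical names, and the ES-side values are passed in by hand rather than read from elasticsearch.yml.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

class EsClientConfigCheck {

    /** Applies the four checklist items to the web service's ES settings. */
    static List<String> findProblems(Properties web, String esClusterName,
                                     int esHttpPort, int esTransportPort) {
        List<String> problems = new ArrayList<>();
        for (int i = 1; i <= 2; i++) {
            String address = web.getProperty("elasticAddress" + i, "").trim();
            String portText = web.getProperty("elasticPort" + i, "").trim();

            // Item 1: this write-up recommends the machine's real IP over loopback.
            if (address.equals("127.0.0.1") || address.equalsIgnoreCase("localhost")) {
                problems.add("elasticAddress" + i + " is a loopback address");
            }
            // Items 2 and 3: the client must target the transport port, not the HTTP port.
            try {
                int port = Integer.parseInt(portText);
                if (port == esHttpPort) {
                    problems.add("elasticPort" + i + " is the HTTP port " + esHttpPort);
                } else if (port != esTransportPort) {
                    problems.add("elasticPort" + i + " is not the transport port " + esTransportPort);
                }
            } catch (NumberFormatException e) {
                problems.add("elasticPort" + i + " is not a number: '" + portText + "'");
            }
        }
        // Item 4: the cluster name must be identical on both sides.
        String clientCluster = web.getProperty("elasticClusterName", "").trim();
        if (!clientCluster.equals(esClusterName)) {
            problems.add("cluster name '" + clientCluster + "' differs from '" + esClusterName + "'");
        }
        return problems;
    }

    public static void main(String[] args) {
        // The initial migrated configuration from this write-up.
        Properties web = new Properties();
        web.setProperty("elasticAddress1", "127.0.0.1");
        web.setProperty("elasticAddress2", "127.0.0.1");
        web.setProperty("elasticPort1", "9200");
        web.setProperty("elasticPort2", "9200");
        web.setProperty("elasticClusterName", "elasticsearch-crm");
        findProblems(web, "elasticsearch-crm", 9200, 9300).forEach(System.out::println);
    }
}
```

An empty result only means the four items are consistent; it says nothing about whether ES is running or whether the client and server versions match.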

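Second, a point to confirm: the original environment had a two-node ES cluster, while the new environment has a single ES node.

The configuration is still written as: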

elasticAddress1=10.10.10.60

elasticAddress2=10.10.10.60

elasticPort1=9300

elasticPort2=9300

elasticClusterName=elasticsearch-crm

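At one point I suspected that the Java program could not find an available node because the two node entries were duplicates.

Testing showed that although the duplicated entries are not ideal, they do not affect operation at all.

So this is ruled out too.

Third, once the two points above confirm that the configuration is correct, check whether the ES node actually started successfully.

If the ES process was launched but failed during startup, the client cannot possibly connect.

(Network issues, firewalls, and unopened ports are all causes worth checking, but here the web service and ES are on the same server, so I ruled them out.)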
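A quick way to confirm the third point from the client's side is to try a plain TCP connection to the transport port. The sketch below uses only the JDK; the class name `PortProbe` and the default host and port are assumptions taken from the configuration in this write-up.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

class PortProbe {

    /** Returns true if a TCP connection to host:port can be opened within timeoutMillis. */
    static boolean canConnect(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Refused, unreachable, or timed out: nothing is accepting connections there.
            return false;
        }
    }

    public static void main(String[] args) {
        // Defaults follow this write-up; override with: java PortProbe <host> <port>
        String host = args.length > 0 ? args[0] : "10.10.10.60";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 9300;
        System.out.println(host + ":" + port + " reachable: " + canConnect(host, port, 2000));
    }
}
```

If this prints `false` while the container is reported as running, the ES log is the next place to look, since the process may have exited during startup.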

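=================A new class of problem==========================

With the issues above resolved, the configuration was no longer the problem, so the next step was to see what was actually going on with ES.

Starting ES and checking its log showed this error:

Error 3: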

[2018-06-15T09:56:53,019][INFO ][o.e.n.Node ] [node1] initializing ...

[2018-06-15T09:56:53,141][INFO ][o.e.e.NodeEnvironment ] [node1] using [1] data paths, mounts [[/data (/dev/mapper/data-data)]], net usable_space [179.8gb], net total_space [179.9gb], spins? [possibly], types [xfs]

[2018-06-15T09:56:53,142][INFO ][o.e.e.NodeEnvironment ] [node1] heap size [1.9gb], compressed ordinary object pointers [true]

[2018-06-15T09:56:53,143][INFO ][o.e.n.Node ] [node1] node name [node1], node ID [F0PzQ9qSRPWq8YNcwjj0vg]

[2018-06-15T09:56:53,144][INFO ][o.e.n.Node ] [node1] version[5.6.0], pid[27627], build[781a835/2017-09-07T03:09:58.087Z], OS[Linux/3.10.0-514.el7.x86_64/amd64], JVM[Oracle Corporation/OpenJDK 64-Bit Server VM/1.8.0_102/25.102-b14]

[2018-06-15T09:56:53,144][INFO ][o.e.n.Node ] [node1] JVM arguments [-Xms2g, -Xmx2g, -XX:+UseConcMarkSweepGC, -XX:CMSInitiatingOccupancyFraction=75, -XX:+UseCMSInitiatingOccupancyOnly, -XX:+AlwaysPreTouch, -Xss1m, -Djava.awt.headless=true, -Dfile.encoding=UTF-8, -Djna.nosys=true, -Djdk.io.permissionsUseCanonicalPath=true, -Dio.netty.noUnsafe=true, -Dio.netty.noKeySetOptimization=true, -Dio.netty.recycler.maxCapacityPerThread=0, -Dlog4j.shutdownHookEnabled=false, -Dlog4j2.disable.jmx=true, -Dlog4j.skipJansi=true, -XX:+HeapDumpOnOutOfMemoryError, -Des.path.home=/usr/local/elasticsearch-5.6.0]

[2018-06-15T09:56:54,161][INFO ][o.e.p.PluginsService ] [node1] loaded module [aggs-matrix-stats]

[2018-06-15T09:56:54,162][INFO ][o.e.p.PluginsService ] [node1] loaded module [ingest-common]

[2018-06-15T09:56:54,162][INFO ][o.e.p.PluginsService ] [node1] loaded module [lang-expression]

[2018-06-15T09:56:54,162][INFO ][o.e.p.PluginsService ] [node1] loaded module [lang-groovy]

[2018-06-15T09:56:54,162][INFO ][o.e.p.PluginsService ] [node1] loaded module [lang-mustache]

[2018-06-15T09:56:54,162][INFO ][o.e.p.PluginsService ] [node1] loaded module [lang-painless]

[2018-06-15T09:56:54,163][INFO ][o.e.p.PluginsService ] [node1] loaded module [parent-join]

[2018-06-15T09:56:54,163][INFO ][o.e.p.PluginsService ] [node1] loaded module [percolator]

[2018-06-15T09:56:54,163][INFO ][o.e.p.PluginsService ] [node1] loaded module [reindex]

[2018-06-15T09:56:54,163][INFO ][o.e.p.PluginsService ] [node1] loaded module [transport-netty3]

[2018-06-15T09:56:54,163][INFO ][o.e.p.PluginsService ] [node1] loaded module [transport-netty4]

[2018-06-15T09:56:54,164][INFO ][o.e.p.PluginsService ] [node1] no plugins loaded

[2018-06-15T09:56:55,941][INFO ][o.e.d.DiscoveryModule ] [node1] using discovery type [zen]

[2018-06-15T09:56:56,770][INFO ][o.e.n.Node ] [node1] initialized

[2018-06-15T09:56:56,770][INFO ][o.e.n.Node ] [node1] starting ...

[2018-06-15T09:56:57,059][WARN ][o.e.b.ElasticsearchUncaughtExceptionHandler] [node1] uncaught exception in thread [main]

org.elasticsearch.bootstrap.StartupException: BindTransportException[Failed to bind to [9300-9400]]; nested: BindException[Cannot assign requested address];

at org.elasticsearch.bootstrap.Elasticsearch.init(Elasticsearch.java:136) ~[elasticsearch-5.6.0.jar:5.6.0]

at org.elasticsearch.bootstrap.Elasticsearch.execute(Elasticsearch.java:123) ~[elasticsearch-5.6.0.jar:5.6.0]

at org.elasticsearch.cli.EnvironmentAwareCommand.execute(EnvironmentAwareCommand.java:67) ~[elasticsearch-5.6.0.jar:5.6.0]

at org.elasticsearch.cli.Command.mainWithoutErrorHandling(Command.java:134) ~[elasticsearch-5.6.0.jar:5.6.0]

at org.elasticsearch.cli.Command.main(Command.java:90) ~[elasticsearch-5.6.0.jar:5.6.0]

at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:91) ~[elasticsearch-5.6.0.jar:5.6.0]

at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:84) ~[elasticsearch-5.6.0.jar:5.6.0]

Caused by: org.elasticsearch.transport.BindTransportException: Failed to bind to [9300-9400]

at org.elasticsearch.transport.TcpTransport.bindToPort(TcpTransport.java:771) ~[elasticsearch-5.6.0.jar:5.6.0]

at org.elasticsearch.transport.TcpTransport.bindServer(TcpTransport.java:736) ~[elasticsearch-5.6.0.jar:5.6.0]

at org.elasticsearch.transport.netty4.Netty4Transport.doStart(Netty4Transport.java:173) ~[?:?]

at org.elasticsearch.common.component.AbstractLifecycleComponent.start(AbstractLifecycleComponent.java:69) ~[elasticsearch-5.6.0.jar:5.6.0]

at org.elasticsearch.transport.TransportService.doStart(TransportService.java:209) ~[elasticsearch-5.6.0.jar:5.6.0]

at org.elasticsearch.common.component.AbstractLifecycleComponent.start(AbstractLifecycleComponent.java:69) ~[elasticsearch-5.6.0.jar:5.6.0]

at org.elasticsearch.node.Node.start(Node.java:694) ~[elasticsearch-5.6.0.jar:5.6.0]

at org.elasticsearch.bootstrap.Bootstrap.start(Bootstrap.java:278) ~[elasticsearch-5.6.0.jar:5.6.0]

at org.elasticsearch.bootstrap.Bootstrap.init(Bootstrap.java:351) ~[elasticsearch-5.6.0.jar:5.6.0]

at org.elasticsearch.bootstrap.Elasticsearch.init(Elasticsearch.java:132) ~[elasticsearch-5.6.0.jar:5.6.0]

... 6 more

Caused by: java.net.BindException: Cannot assign requested address

at sun.nio.ch.Net.bind0(Native Method) ~[?:?]

at sun.nio.ch.Net.bind(Net.java:433) ~[?:?]

at sun.nio.ch.Net.bind(Net.java:425) ~[?:?]

at sun.nio.ch.ServerSocketChannelImpl.bind(ServerSocketChannelImpl.java:223) ~[?:?]

at io.netty.channel.socket.nio.NioServerSocketChannel.doBind(NioServerSocketChannel.java:128) ~[?:?]

at io.netty.channel.AbstractChannel$AbstractUnsafe.bind(AbstractChannel.java:554) ~[?:?]

at io.netty.channel.DefaultChannelPipeline$HeadContext.bind(DefaultChannelPipeline.java:1258) ~[?:?]

at io.netty.channel.AbstractChannelHandlerContext.invokeBind(AbstractChannelHandlerContext.java:501) ~[?:?]

at io.netty.channel.AbstractChannelHandlerContext.bind(AbstractChannelHandlerContext.java:486) ~[?:?]

at io.netty.channel.DefaultChannelPipeline.bind(DefaultChannelPipeline.java:980) ~[?:?]

at io.netty.channel.AbstractChannel.bind(AbstractChannel.java:250) ~[?:?]

at io.netty.bootstrap.AbstractBootstrap$2.run(AbstractBootstrap.java:365) ~[?:?]

at io.netty.util.concurrent.AbstractEventExecutor.safeExecute(AbstractEventExecutor.java:163) ~[?:?]

at io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:403) ~[?:?]

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:462) ~[?:?]

at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858) ~[?:?]

at java.lang.Thread.run(Thread.java:745) [?:1.8.0_102]

[2018-06-15T09:56:57,822][INFO ][o.e.n.Node ] [node1] stopping ...

[2018-06-15T09:56:57,826][INFO ][o.e.n.Node ] [node1] stopped

[2018-06-15T09:56:57,826][INFO ][o.e.n.Node ] [node1] closing ...

[2018-06-15T09:56:57,839][INFO ][o.e.n.Node ] [node1] closed

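Cause:

At this point the ES configuration file has network.host : 10.10.10.60, the machine's real IP. (For a web service on the same machine the private IP is enough; no public IP is needed.)

Yet ES could not bind to it and would not start. Why?

After repeated comparison and checking, I found that the ES version might be the cause.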
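Independently of the version question, `BindException: Cannot assign requested address` is what the JVM reports when the address passed to bind is not assigned to any network interface visible to the process. A minimal sketch for checking that, using only the JDK, is shown below; the class name `LocalAddressCheck` is made up for illustration, and the check is only meaningful when run in the same network namespace as ES (for example inside the same container).

```java
import java.net.InetAddress;
import java.net.NetworkInterface;
import java.net.SocketException;
import java.net.UnknownHostException;

class LocalAddressCheck {

    /** Returns true if the given IP is assigned to a network interface visible to this JVM. */
    static boolean isAssignedLocally(String ip) {
        try {
            InetAddress address = InetAddress.getByName(ip);
            // getByInetAddress returns null when no interface carries this address.
            return NetworkInterface.getByInetAddress(address) != null;
        } catch (UnknownHostException | SocketException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Default is the network.host value from this write-up.
        String ip = args.length > 0 ? args[0] : "10.10.10.60";
        System.out.println(ip + " assigned to a local interface: " + isAssignedLocally(ip));
    }
}
```

If this prints `false` for the value in network.host, ES cannot bind to that address from where the check was run, whatever its version.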

Solution:

So I pulled a lower ES version with docker pull and recreated the container with the same command, still using our ES configuration file.

docker command:

docker run -itd --name es1 -p 9200:9200 -p 9300:9300 --restart=always -v /mnt/apps/es/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml -v /mnt/apps/es/data:/usr/share/elasticsearch/data --net=host elasticsearch:5.5.0

The web service's ES connection settings are still:

elasticAddress1=10.10.10.60

elasticAddress2=10.10.10.60

elasticPort1=9300

elasticPort2=9300

elasticClusterName=elasticsearch-crm

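This time the ES node started successfully and bound to the machine's real IP.

The web service should now be able to reach it, I thought, but the call still failed:

Error 4: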

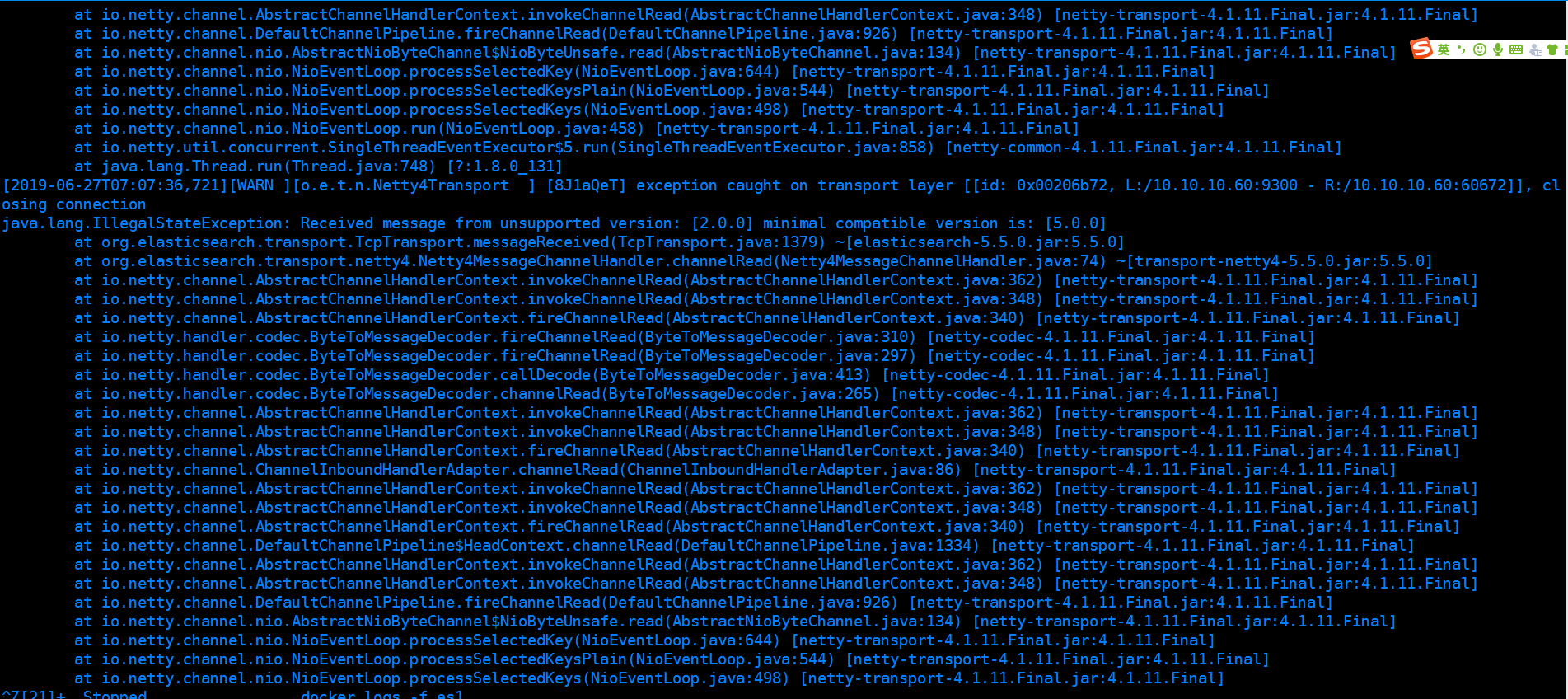

[2019-06-27T07:07:36,721][WARN ][o.e.t.n.Netty4Transport ] [8J1aQeT] exception caught on transport layer [[id: 0x00206b72, L:/10.10.10.60:9300 - R:/10.10.10.60:60672]], closing connection

java.lang.IllegalStateException: Received message from unsupported version: [2.0.0] minimal compatible version is: [5.0.0]

at org.elasticsearch.transport.TcpTransport.messageReceived(TcpTransport.java:1379) ~[elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.transport.netty4.Netty4MessageChannelHandler.channelRead(Netty4MessageChannelHandler.java:74) ~[transport-netty4-5.5.0.jar:5.5.0]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362) [netty-transport-4.1.11.Final.jar:4.1.11.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348) [netty-transport-4.1.11.Final.jar:4.1.11.Final]

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340) [netty-transport-4.1.11.Final.jar:4.1.11.Final]

at io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:310) [netty-codec-4.1.11.Final.jar:4.1.11.Final]

at io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:297) [netty-codec-4.1.11.Final.jar:4.1.11.Final]

at io.netty.handler.codec.ByteToMessageDecoder.callDecode(ByteToMessageDecoder.java:413) [netty-codec-4.1.11.Final.jar:4.1.11.Final]

at io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:265) [netty-codec-4.1.11.Final.jar:4.1.11.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362) [netty-transport-4.1.11.Final.jar:4.1.11.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348) [netty-transport-4.1.11.Final.jar:4.1.11.Final]

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340) [netty-transport-4.1.11.Final.jar:4.1.11.Final]

at io.netty.channel.ChannelInboundHandlerAdapter.channelRead(ChannelInboundHandlerAdapter.java:86) [netty-transport-4.1.11.Final.jar:4.1.11.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362) [netty-transport-4.1.11.Final.jar:4.1.11.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348) [netty-transport-4.1.11.Final.jar:4.1.11.Final]

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340) [netty-transport-4.1.11.Final.jar:4.1.11.Final]

at io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1334) [netty-transport-4.1.11.Final.jar:4.1.11.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362) [netty-transport-4.1.11.Final.jar:4.1.11.Final]

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348) [netty-transport-4.1.11.Final.jar:4.1.11.Final]

at io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:926) [netty-transport-4.1.11.Final.jar:4.1.11.Final]

at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:134) [netty-transport-4.1.11.Final.jar:4.1.11.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:644) [netty-transport-4.1.11.Final.jar:4.1.11.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKeysPlain(NioEventLoop.java:544) [netty-transport-4.1.11.Final.jar:4.1.11.Final]

at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:498) [netty-transport-4.1.11.Final.jar:4.1.11.Final]

Cause:

Note the highlighted part of the error above (the IllegalStateException line): it says that a 2.x client jar is calling a 5.x ES server.
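The log line itself states the rule the 5.5.0 server applies: it rejects transport messages from any version below 5.0.0. As a rule of thumb (an assumption here, not something taken from the ES source), a transport client jar and the server should share the same major version. The sketch below encodes only that rule of thumb; `TransportVersionCheck`, `majorOf`, and `sameMajor` are hypothetical names.

```java
class TransportVersionCheck {

    /** Extracts the major version from strings such as "2.3.3" or "5.5.0". */
    static int majorOf(String version) {
        String trimmed = version.trim();
        int dot = trimmed.indexOf('.');
        return Integer.parseInt(dot < 0 ? trimmed : trimmed.substring(0, dot));
    }

    /** Rule of thumb assumed here: client jar and server must share the major version. */
    static boolean sameMajor(String clientVersion, String serverVersion) {
        return majorOf(clientVersion) == majorOf(serverVersion);
    }

    public static void main(String[] args) {
        // Versions that appear in this write-up: a 2.x client against each server image tried.
        String client = "2.3.3";
        for (String server : new String[] {"6.5.4", "5.5.0", "2.3.3"}) {
            System.out.println("client " + client + " vs server " + server
                    + " same major: " + sameMajor(client, server));
        }
    }
}
```

Running such a check before deployment would have flagged both the 6.5.4 and the 5.5.0 images as mismatched with a 2.x client.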

Solution:

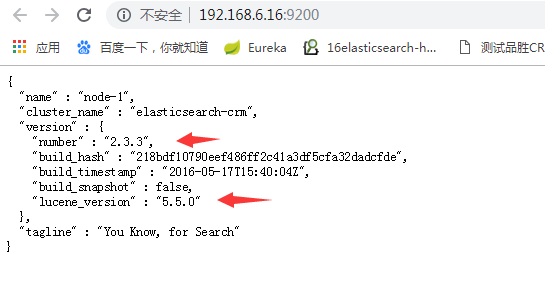

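Since this is a migration of an existing service, the ES version should match the original environment. But hadn't I switched to the lower version precisely because I had checked the original environment's ES version? So the version shouldn't have been the problem. I checked again anyway.

Original environment version:

New environment version:

Now the real cause was found: the 5.5.0 I had read earlier was lucene_version, while the actual ES version of the original environment is 2.3.3.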
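The mix-up is easy to make because the JSON returned by the ES root endpoint (http://host:9200/) lists both values inside the same `version` object. The sketch below pulls the two fields out of such a response so they cannot be confused. The sample JSON is abbreviated and illustrative rather than captured from the original server, and the class `EsVersionFields` with its regex-based extraction is a simplification; a real JSON parser would be more robust.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class EsVersionFields {

    /** Returns the string value of the first "name" : "value" pair, or null if absent. */
    private static String field(String json, String name) {
        Matcher m = Pattern
                .compile("\"" + Pattern.quote(name) + "\"\\s*:\\s*\"([^\"]*)\"")
                .matcher(json);
        return m.find() ? m.group(1) : null;
    }

    /** The Elasticsearch version: this is the one the client jar must match. */
    static String esVersion(String rootJson) {
        return field(rootJson, "number");
    }

    /** The bundled Lucene version: not the Elasticsearch version. */
    static String luceneVersion(String rootJson) {
        return field(rootJson, "lucene_version");
    }

    public static void main(String[] args) {
        // Abbreviated, illustrative response in the shape an ES 2.x node returns.
        String sample = "{ \"cluster_name\" : \"elasticsearch-crm\","
                + " \"version\" : { \"number\" : \"2.3.3\", \"lucene_version\" : \"5.5.0\" } }";
        System.out.println("Elasticsearch version: " + esVersion(sample));
        System.out.println("Lucene version:        " + luceneVersion(sample));
    }
}
```

The value to compare against the client jar and the docker image tag is `number`, never `lucene_version`.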

So I pulled ES 2.3.3 with docker pull; apart from the image tag, the docker start command is the same as above:

docker run -itd --name es1 -p 9200:9200 -p 9300:9300 --restart=always -v /mnt/apps/es/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml -v /mnt/apps/es/data:/usr/share/elasticsearch/data --net=host elasticsearch:2.3.3

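Finally, ES started successfully and the web service calls went through.

=======================================

In the end, this whole series of problems came down to incompatibility between ES versions.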
