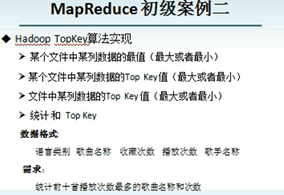

1. Requirements

2. The maximum value of a column in one file

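Approach: compare the values of the column one after another, keep the largest value seen so far, and output it at the end. The compare-and-keep step resembles what sorting algorithms such as bubble sort or quicksort do. However, a single pass is enough, so nothing actually has to be sorted.

Mapper: the input is the already-counted output of a WordCount job, and only one column matters. The mapper can therefore track the maximum while it reads. Once the maximum is known, it hands that value and its word (the Text) to context.write() in cleanup(), which writes them out. No Reducer is needed.

Note that cleanup() runs once per map task, and there is one map task per input split. An input that spans several splits therefore yields one local maximum per split rather than a single global one.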

```java
package org.dragon.hadoop.mapreduce.app.topk;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

/**
 * Finds the maximum value of one column in a file.
 *
 * Works on the word statistics written by the WordCount program ("word\tcount")
 * and finds the most frequent word, i.e. the maximum value among the key/value pairs.
 *
 * @author ZhuXY
 * @time 2016-3-12 3:43:23 PM
 */
public class TopKMapReduce {

    /*
     * This program shows that one input split corresponds to one map task,
     * while each line corresponds to one map() call: a map task makes many
     * map() calls but runs setup() and cleanup() only once.
     * Consequence: with several splits, every map task writes its own local maximum.
     */

    // Mapper class
    static class TopKMapper extends Mapper<LongWritable, Text, Text, LongWritable> {
        // map output key (Java objects must be created before use)
        private Text mapOutputKey = new Text();

        // map output value
        private LongWritable mapOutputValue = new LongWritable();

        // Fields declared here are shared by all map() calls of this task.
        // Largest value seen so far, initialized to Long.MIN_VALUE
        private long topKValue = Long.MIN_VALUE;

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // each line: word \t count
            String[] str = value.toString().split("\t");
            if (str.length < 2) {
                return; // skip malformed lines
            }
            long tempValue = Long.parseLong(str[1].trim());

            // compare: when a new maximum is found, also remember its word as the output key
            if (topKValue < tempValue) {
                topKValue = tempValue;
                mapOutputKey.set(str[0]);
            }
            // Nothing is written here; the result is written once, in cleanup().
        }

        @Override
        protected void cleanup(Context context) throws IOException, InterruptedException {
            if (topKValue == Long.MIN_VALUE) {
                return; // this split contained no valid line
            }
            mapOutputValue.set(topKValue);
            context.write(mapOutputKey, mapOutputValue);
        }
    }

    // Driver code
    public int run(String[] args) throws Exception {
        Configuration conf = new Configuration();

        Job job = Job.getInstance(conf, TopKMapReduce.class.getSimpleName());
        job.setJarByClass(TopKMapReduce.class);

        // 1) input
        FileInputFormat.addInputPath(job, new Path(args[0]));

        // 2) map
        job.setMapperClass(TopKMapper.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(LongWritable.class);

        // 3) reduce: this program has no Reducer, so the number must be set to 0
        job.setNumReduceTasks(0);

        // 4) output
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // submit the job and wait for it
        boolean isSuccess = job.waitForCompletion(true);
        return isSuccess ? 0 : 1;
    }

    public static void main(String[] args) throws Exception {
        // fall back to the author's test paths when no arguments are given
        if (args.length < 2) {
            args = new String[] { "hdfs://hadoop-master:9000/wc/wcoutput",
                    "hdfs://hadoop-master:9000/wc/output" };
        }
        int status = new TopKMapReduce().run(args);
        System.exit(status);
    }
}
```
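The single-pass logic and the per-split caveat can be tried without a cluster. Below is a minimal plain-Java sketch. It assumes WordCount-style input lines of the form word\tcount. The class SinglePassMax and its methods maxEntry and maxOfSplits are hypothetical names used only for illustration. maxOfSplits imitates several map tasks that each compute a local maximum, followed by the extra step that picks the overall winner.

```java
import java.util.AbstractMap;
import java.util.Arrays;
import java.util.List;
import java.util.Map;

// Hypothetical illustration class; plain Java, no Hadoop types.
public class SinglePassMax {

    // One pass over "word<TAB>count" lines, keeping the largest count seen so far
    // (the same compare-and-keep step as TopKMapper.map()). Returns null if no line is valid.
    static Map.Entry<String, Long> maxEntry(List<String> lines) {
        String bestWord = null;
        long bestCount = Long.MIN_VALUE;
        for (String line : lines) {
            String[] fields = line.split("\t");
            if (fields.length < 2) {
                continue; // skip malformed lines
            }
            long count = Long.parseLong(fields[1].trim());
            if (bestWord == null || count > bestCount) {
                bestCount = count;
                bestWord = fields[0];
            }
        }
        return bestWord == null ? null : new AbstractMap.SimpleEntry<String, Long>(bestWord, bestCount);
    }

    // Each inner list plays the role of one input split / map task. Every task yields
    // a local maximum; picking the global one is an extra step the map-only job lacks.
    static Map.Entry<String, Long> maxOfSplits(List<List<String>> splits) {
        Map.Entry<String, Long> best = null;
        for (List<String> split : splits) {
            Map.Entry<String, Long> local = maxEntry(split);
            if (local != null && (best == null || local.getValue() > best.getValue())) {
                best = local;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        System.out.println(maxEntry(Arrays.asList("hadoop\t3", "hive\t7", "hbase\t5"))); // prints hive=7
    }
}
```

In the Hadoop job, that final step would need a single reducer or a pass over the part files. With setNumReduceTasks(0) it never happens.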

3. The Top K values (largest or smallest) of a column in one file

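Approach: store the entries in a TreeMap and use the occurrence count as the key. A TreeMap keeps its keys sorted automatically because it is a red-black tree rather than a hash table. Whenever TreeMap.size() exceeds K, simply remove the smallest key. Because the count is the key, two words with the same count collide, and only the last one is kept.

Mapper: the same as before, with a TreeMap added.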

```java
package org.dragon.hadoop.mapreduce.app.topk;

import java.io.IOException;
import java.util.Map;
import java.util.TreeMap;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

/**
 * Top K values of one column, i.e. the K largest (or K smallest) values.
 *
 * @author ZhuXY
 * @time 2016-3-12 3:43:23 PM
 */
public class TopKMapReduceV2 {

    /*
     * Idea: store the pairs in a TreeMap and delete the smallest one whenever
     * it holds more than K entries. The TreeMap sorts by key, so the K largest remain.
     * Note the swapped roles:  TreeMap key   = count (the value in the file)
     *                          TreeMap value = word  (the key in the file)
     * Words with equal counts share one TreeMap key, so only the last one is kept.
     */
    private static final int K = 3;

    // Mapper class
    static class TopKMapper extends Mapper<LongWritable, Text, Text, LongWritable> {

        // Current Top K of this map task: count -> word
        private TreeMap<Long, String> topKTreeMap = new TreeMap<Long, String>();

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // each line: word \t count
            String[] str = value.toString().split("\t");
            if (str.length < 2) {
                return; // skip malformed lines
            }
            long count = Long.parseLong(str[1].trim());

            topKTreeMap.put(count, str[0]);
            if (topKTreeMap.size() > K) {
                topKTreeMap.remove(topKTreeMap.firstKey()); // drop the smallest count
            }
        }

        @Override
        protected void cleanup(Context context) throws IOException, InterruptedException {
            Text mapOutputKey = new Text();
            LongWritable mapOutputValue = new LongWritable();

            // write largest first; every map task writes its own Top K
            for (Map.Entry<Long, String> entry : topKTreeMap.descendingMap().entrySet()) {
                mapOutputKey.set(entry.getValue());
                mapOutputValue.set(entry.getKey());
                context.write(mapOutputKey, mapOutputValue);
            }
        }
    }

    // Driver code
    public int run(String[] args) throws Exception {
        Configuration conf = new Configuration();

        Job job = Job.getInstance(conf, TopKMapReduceV2.class.getSimpleName());
        job.setJarByClass(TopKMapReduceV2.class);

        // 1) input
        FileInputFormat.addInputPath(job, new Path(args[0]));

        // 2) map
        job.setMapperClass(TopKMapper.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(LongWritable.class);

        // 3) reduce: this program has no Reducer, so the number must be set to 0
        job.setNumReduceTasks(0);

        // 4) output
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        boolean isSuccess = job.waitForCompletion(true);
        return isSuccess ? 0 : 1;
    }

    public static void main(String[] args) throws Exception {
        // fall back to the author's test paths when no arguments are given
        if (args.length < 2) {
            args = new String[] { "hdfs://hadoop-master:9000/wc/wcoutput",
                    "hdfs://hadoop-master:9000/wc/output1" };
        }
        int status = new TopKMapReduceV2().run(args);
        System.exit(status);
    }
}
```
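The bounded-TreeMap technique can also be checked locally. The following plain-Java sketch uses a hypothetical class, TreeMapTopK, with no Hadoop types. It keeps at most k entries keyed by count and evicts firstKey() on overflow, just like the mapper above. It also reproduces that mapper's limitation: equal counts overwrite each other.

```java
import java.util.Map;
import java.util.NavigableMap;
import java.util.TreeMap;

// Hypothetical illustration class mirroring TopKMapReduceV2's mapper logic.
public class TreeMapTopK {

    // Keeps the k largest counts from "word<TAB>count" lines; key = count, value = word.
    // Caveat (as in the original): two words with the same count share one key.
    static NavigableMap<Long, String> topK(String[] lines, int k) {
        TreeMap<Long, String> top = new TreeMap<>();
        for (String line : lines) {
            String[] fields = line.split("\t");
            top.put(Long.parseLong(fields[1].trim()), fields[0]);
            if (top.size() > k) {
                top.remove(top.firstKey()); // evict the current smallest count
            }
        }
        return top.descendingMap(); // view ordered from largest to smallest count
    }

    public static void main(String[] args) {
        String[] lines = {"hadoop\t3", "hive\t7", "hbase\t5", "spark\t9", "pig\t1"};
        for (Map.Entry<Long, String> e : topK(lines, 3).entrySet()) {
            System.out.println(e.getValue() + "\t" + e.getKey()); // spark 9, hive 7, hbase 5
        }
    }
}
```

Each put or remove costs O(log k), so the map never grows past k + 1 entries regardless of input size.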

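4. The Top K values (largest or smallest) of a column across multiple files

Approach: the data is spread over several files, and therefore over several map tasks, so no single mapper sees all of it. The totals must be computed and compared in exactly one Reducer, which is also Hadoop's default number of reduce tasks. The TreeMap therefore moves into the Reducer: reduce() sums each key's values and updates the TreeMap, and cleanup() writes the surviving Top K.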

```java
package org.dragon.hadoop.mapreduce.app.topk;

import java.io.IOException;
import java.util.Map;
import java.util.TreeMap;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

/**
 * Top K of the per-key sums of one column across multiple files.
 *
 * Idea: like WordCount, the mapper parses each line and writes it out (mapper);
 * shuffle and merge turn this into <key, list<1,2,3,4...>> (transparent to us);
 * reduce() sums each list and updates a TreeMap, trimming it to K entries,
 * and cleanup() writes the result (reduce, cleanup).
 *
 * @author ZhuXY
 * @time 2016-3-12 3:43:23 PM
 */
public class TopKMapReduceV3 {

    // Number of entries to keep. TreeMap key = sum, value = word (roles swapped,
    // so words with equal sums overwrite each other).
    private static final int K = 3;

    // Mapper class
    static class TopKMapper extends Mapper<LongWritable, Text, Text, LongWritable> {

        private Text mapOutputKey = new Text();
        private LongWritable mapOutputValue = new LongWritable();

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // each line: word \t count
            String[] str = value.toString().split("\t");
            if (str.length < 2) {
                return; // skip malformed lines
            }
            mapOutputKey.set(str[0]);
            mapOutputValue.set(Long.parseLong(str[1].trim()));
            context.write(mapOutputKey, mapOutputValue);
        }
    }

    // Reducer class
    static class TopKReducer extends Reducer<Text, LongWritable, Text, LongWritable> {

        // Current Top K: sum -> word
        private TreeMap<Long, String> topKTreeMap = new TreeMap<Long, String>();

        @Override
        protected void reduce(Text key, Iterable<LongWritable> values, Context context)
                throws IOException, InterruptedException {
            long sum = 0; // long rather than int, so large totals do not overflow
            for (LongWritable value : values) {
                sum += value.get();
            }

            // Hadoop reuses the key object, so store a String copy
            topKTreeMap.put(sum, key.toString());
            if (topKTreeMap.size() > K) {
                topKTreeMap.remove(topKTreeMap.firstKey()); // drop the smallest sum
            }
        }

        @Override
        protected void cleanup(Context context) throws IOException, InterruptedException {
            Text outputKey = new Text();
            LongWritable outputValue = new LongWritable();

            // swap back: word as output key, sum as output value, largest first
            for (Map.Entry<Long, String> entry : topKTreeMap.descendingMap().entrySet()) {
                outputKey.set(entry.getValue());
                outputValue.set(entry.getKey());
                context.write(outputKey, outputValue);
            }
        }
    }

    // Driver code
    public int run(String[] args) throws Exception {
        Configuration conf = new Configuration();

        Job job = Job.getInstance(conf, TopKMapReduceV3.class.getSimpleName());
        job.setJarByClass(TopKMapReduceV3.class);

        // 1) input
        FileInputFormat.addInputPath(job, new Path(args[0]));

        // 2) map
        job.setMapperClass(TopKMapper.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(LongWritable.class);

        // 3) reduce: a global Top K needs exactly one reducer (also the default)
        job.setReducerClass(TopKReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(LongWritable.class);
        job.setNumReduceTasks(1);

        // 4) output
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        boolean isSuccess = job.waitForCompletion(true);
        return isSuccess ? 0 : 1;
    }

    public static void main(String[] args) throws Exception {
        // fall back to the author's test paths when no arguments are given
        if (args.length < 2) {
            args = new String[] { "hdfs://hadoop-master:9000/wc/wcinput",
                    "hdfs://hadoop-master:9000/wc/output2" };
        }
        int status = new TopKMapReduceV3().run(args);
        System.exit(status);
    }
}
```
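The data flow of this job can be simulated in plain Java to check the reasoning. The sketch below assumes each file holds word\tcount lines. A TreeMap of lists stands in for the shuffle, and one loop plays the single reducer. SingleReducerTopK and run are hypothetical names, not Hadoop APIs.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.NavigableMap;
import java.util.TreeMap;

// Hypothetical local simulation of TopKMapReduceV3 (map -> shuffle -> one reducer).
public class SingleReducerTopK {

    static NavigableMap<Long, String> run(List<List<String>> files, int k) {
        // "Map + shuffle": collect every count under its word, keys sorted like Hadoop's shuffle
        TreeMap<String, List<Long>> groups = new TreeMap<>();
        for (List<String> file : files) {
            for (String line : file) {
                String[] fields = line.split("\t");
                groups.computeIfAbsent(fields[0], w -> new ArrayList<>())
                      .add(Long.parseLong(fields[1].trim()));
            }
        }
        // "Reduce": one reducer sees every group, so its Top K is global
        TreeMap<Long, String> top = new TreeMap<>();
        for (Map.Entry<String, List<Long>> e : groups.entrySet()) {
            long sum = 0;
            for (long v : e.getValue()) {
                sum += v;
            }
            top.put(sum, e.getKey());
            if (top.size() > k) {
                top.remove(top.firstKey()); // drop the smallest sum
            }
        }
        return top.descendingMap();
    }

    public static void main(String[] args) {
        List<List<String>> files = Arrays.asList(
                Arrays.asList("hadoop\t3", "hive\t2"),
                Arrays.asList("hadoop\t4", "hbase\t5"),
                Arrays.asList("hive\t1", "pig\t1"));
        System.out.println(run(files, 2)); // prints {7=hadoop, 5=hbase}
    }
}
```

With several reducers, each would rank only its own partition of the keys. That is why the job above pins the reducer count to one.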

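5. Aggregation plus Top K

Approach: this one is harder. Instead of starting from already-aggregated counts, the job aggregates the raw records itself and then compares the totals. The application below shows how.

Data format:

language category  song title  favorite count  play count  singer

Requirement:

Find the ten most-played songs, with their titles and play counts.

Test data (the fields must be tab-separated, because the mapper splits each line on \t):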

经典老歌 我只在乎你 1234 34535 邓丽君

流行歌曲 流着泪说分手 125 2342 金志文

流行歌曲 菠萝菠萝蜜 543 536 谢娜

经典老歌 大花轿 123 3465 火风

流行歌曲 无所谓 3453 87654 杨坤
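Before looking at the Hadoop classes, the whole computation can be sketched in plain Java. This is a minimal sketch that assumes tab-separated five-field records as described above. It sums play counts per (language category, song title) pair and keeps the largest k in a TreeSet. Song and SongTopK are hypothetical stand-ins for TopKWritable and the job. The comparator breaks ties by name so that two songs with the same play count are not collapsed into one, which a comparator on play count alone would do.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeSet;

// Hypothetical plain-Java sketch of the aggregation + Top K job.
public class SongTopK {

    // Hypothetical value class standing in for TopKWritable.
    static final class Song {
        final String languageType;
        final String songName;
        final long playNum;

        Song(String languageType, String songName, long playNum) {
            this.languageType = languageType;
            this.songName = songName;
            this.playNum = playNum;
        }

        @Override
        public String toString() {
            return languageType + "\t" + songName + "\t" + playNum;
        }
    }

    // Largest play count first; ties broken by category and title so both songs survive.
    static final Comparator<Song> BY_PLAYS_DESC =
            Comparator.comparingLong((Song s) -> s.playNum).reversed()
                    .thenComparing((Song s) -> s.languageType)
                    .thenComparing((Song s) -> s.songName);

    static List<Song> topK(List<String> lines, int k) {
        // "Map + shuffle + sum": key = category \t title, value = total play count (field 3)
        Map<String, Long> totals = new LinkedHashMap<>();
        for (String line : lines) {
            String[] f = line.split("\t");
            if (f.length != 5) {
                continue; // same guard as the mapper: exactly five fields
            }
            totals.merge(f[0] + "\t" + f[1], Long.parseLong(f[3].trim()), Long::sum);
        }
        // "Reduce": bounded TreeSet, evicting the smallest element once it exceeds k
        TreeSet<Song> top = new TreeSet<>(BY_PLAYS_DESC);
        for (Map.Entry<String, Long> e : totals.entrySet()) {
            String[] key = e.getKey().split("\t");
            top.add(new Song(key[0], key[1], e.getValue()));
            if (top.size() > k) {
                top.pollLast(); // last element = smallest play count
            }
        }
        return new ArrayList<>(top);
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
                "pop\tA\t1\t100\tx", "pop\tB\t1\t300\ty", "old\tC\t1\t200\tz",
                "pop\tA\t1\t50\tx", "pop\tD\t1\t300\tw");
        for (Song s : topK(lines, 3)) {
            System.out.println(s);
        }
    }
}
```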

Define a custom data type, TopKWritable:

```java
package org.dragon.hadoop.mapreduce.app.topk;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;
import java.util.Objects;

import org.apache.hadoop.io.WritableComparable;

/**
 * Data format:
 *   language category  song title  favorite count  play count  singer
 * Requirement:
 *   find the ten most-played songs, with their titles and play counts.
 *
 * @author ZhuXY
 * @time 2016-3-13 7:30:21 PM
 */
public class TopKWritable implements WritableComparable<TopKWritable> {
    // Default to "" rather than null: DataOutput.writeUTF(null) throws a NullPointerException
    private String languageType = "";
    private String songName = "";
    private long playNum = 0;

    // Hadoop creates Writables through the no-argument constructor, then calls readFields()
    public TopKWritable() {
    }

    public TopKWritable(String languageType, String songName, long playNum) {
        set(languageType, songName, playNum);
    }

    public void set(String languageType, String songName, long playNum) {
        this.languageType = languageType;
        this.songName = songName;
        this.playNum = playNum;
    }

    public String getLanguageType() {
        return languageType;
    }

    public String getSongName() {
        return songName;
    }

    public long getPlayNum() {
        return playNum;
    }

    @Override
    public void write(DataOutput out) throws IOException {
        out.writeUTF(languageType);
        out.writeUTF(songName);
        out.writeLong(playNum);
    }

    @Override
    public void readFields(DataInput in) throws IOException {
        // must read in exactly the order write() wrote
        this.languageType = in.readUTF();
        this.songName = in.readUTF();
        this.playNum = in.readLong();
    }

    @Override
    public int compareTo(TopKWritable o) {
        // arguments swapped so that larger play counts come first (descending output)
        int cmp = Long.compare(o.playNum, this.playNum);
        if (cmp != 0) {
            return cmp;
        }
        // tie-breakers: without them a TreeSet treats two songs with equal
        // play counts as duplicates and silently keeps only one
        cmp = languageType.compareTo(o.languageType);
        if (cmp != 0) {
            return cmp;
        }
        return songName.compareTo(o.songName);
    }

    @Override
    public String toString() {
        return languageType + "\t" + songName + "\t" + playNum;
    }

    @Override
    public int hashCode() {
        return Objects.hash(languageType, songName, playNum);
    }

    @Override
    public boolean equals(Object obj) {
        if (this == obj) {
            return true;
        }
        if (obj == null || getClass() != obj.getClass()) {
            return false;
        }
        TopKWritable other = (TopKWritable) obj;
        return playNum == other.playNum
                && Objects.equals(languageType, other.languageType)
                && Objects.equals(songName, other.songName);
    }
}
```
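TopKWritable's write() and readFields() must handle the fields in the same order. Hadoop's Writable methods take java.io.DataOutput and java.io.DataInput, so this contract can be exercised with plain java.io streams. The sketch below does that. SongRecord and WritableRoundTrip are hypothetical illustration classes, not Hadoop types, and SongRecord does not implement Hadoop's interface.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;

// Hypothetical sketch of the write()/readFields() round trip, using only java.io.
public class WritableRoundTrip {

    // Hypothetical stand-in with the same fields and serialization order as TopKWritable.
    static final class SongRecord {
        String languageType = "";   // writeUTF(null) would throw, so default to ""
        String songName = "";
        long playNum;

        void write(DataOutput out) throws IOException {
            out.writeUTF(languageType);
            out.writeUTF(songName);
            out.writeLong(playNum);
        }

        // reads the fields in exactly the order write() produced them
        void readFields(DataInput in) throws IOException {
            languageType = in.readUTF();
            songName = in.readUTF();
            playNum = in.readLong();
        }
    }

    // Serializes a record to bytes, then fills a fresh empty instance from those bytes.
    static SongRecord roundTrip(SongRecord original) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            original.write(out);
        }
        SongRecord copy = new SongRecord(); // empty instance, as the no-arg constructor allows
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
            copy.readFields(in);
        }
        return copy;
    }

    public static void main(String[] args) throws IOException {
        SongRecord r = new SongRecord();
        r.languageType = "pop";
        r.songName = "X";
        r.playNum = 87654L;
        SongRecord copy = roundTrip(r);
        System.out.println(copy.languageType + "\t" + copy.songName + "\t" + copy.playNum);
    }
}
```

If readFields() read the long before the two strings, the round trip would produce garbage or throw. The order of reads and writes is the whole contract.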

The actual Mapper and Reducer classes:

```java
package org.dragon.hadoop.mapreduce.app.topk;

import java.io.IOException;
import java.util.TreeSet;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

/**
 * Aggregation & Top K
 *
 * Data format: language category, song title, favorite count, play count, singer
 * Requirement: find the ten most-played songs, with their titles and play counts.
 *
 * Idea: Mapper output: key = language category + "\t" + song title, value = play count.
 *       Reducer: sums the play counts, wraps the result in a TopKWritable (output key;
 *       output value = NullWritable), stores it in a TreeSet and drops the surplus
 *       in reduce(); cleanup() writes what remains.
 *
 * @author ZhuXY
 * @time 2016-3-13 12:57:26 PM
 */
public class TopKMapReduceV4 {
    // The requirement asks for the top ten (the original listing used 4)
    private static final int TOP_K = 10;

    // Mapper class
    public static class TopKMapper extends Mapper<LongWritable, Text, Text, LongWritable> {
        private final Text outputKey = new Text();
        private final LongWritable outputValue = new LongWritable();

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // With TextInputFormat (the default) map() receives <LongWritable offset, Text line>
            String[] splitValue = value.toString().split("\t");
            if (splitValue.length != 5) {
                return; // skip lines that do not have exactly five fields
            }
            long playNum;
            try {
                playNum = Long.parseLong(splitValue[3].trim());
            } catch (NumberFormatException e) {
                return; // skip lines whose play count is not a number
            }
            outputKey.set(splitValue[0] + "\t" + splitValue[1]);
            outputValue.set(playNum);
            context.write(outputKey, outputValue);
        }
    }

    // Reducer class
    public static class TopKReducer extends Reducer<Text, LongWritable, TopKWritable, NullWritable> {

        // Ordered by TopKWritable.compareTo(): largest play count first
        private final TreeSet<TopKWritable> treeSet = new TreeSet<TopKWritable>();

        @Override
        protected void reduce(Text key, Iterable<LongWritable> values, Context context)
                throws IOException, InterruptedException {
            // key: language category \t song title
            String[] keyArr = key.toString().split("\t");
            String languageType = keyArr[0];
            String songName = keyArr[1];

            // sum the play counts
            long playNum = 0;
            for (LongWritable value : values) {
                playNum += value.get();
            }

            // wrap category, title and total in a TopKWritable; the TreeSet keeps them sorted
            treeSet.add(new TopKWritable(languageType, songName, playNum));
            if (treeSet.size() > TOP_K) {
                treeSet.pollLast(); // the last element has the smallest play count
            }
        }

        @Override
        protected void cleanup(Context context) throws IOException, InterruptedException {
            for (TopKWritable topKWritable : treeSet) {
                context.write(topKWritable, NullWritable.get());
            }
        }
    }

    // Driver code
    public int run(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        Configuration conf = new Configuration();

        Job job = Job.getInstance(conf, TopKMapReduceV4.class.getSimpleName());
        job.setJarByClass(TopKMapReduceV4.class);

        // 1) input path
        FileInputFormat.addInputPath(job, new Path(args[0]));

        // 2) map
        job.setMapperClass(TopKMapper.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(LongWritable.class);

        // 3) output path
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // 4) reduce: a single reducer, so the Top K is global
        job.setReducerClass(TopKReducer.class);
        job.setOutputKeyClass(TopKWritable.class);
        job.setOutputValueClass(NullWritable.class);
        job.setNumReduceTasks(1);

        boolean isSuccess = job.waitForCompletion(true);
        return isSuccess ? 0 : 1;
    }

    public static void main(String[] args) throws Exception {
        // fall back to the author's test paths when no arguments are given
        if (args.length < 2) {
            args = new String[] {
                    "hdfs://hadoop-master.dragon.org:9000/wc/wcinput/",
                    "hdfs://hadoop-master.dragon.org:9000/wc/wcoutput" };
        }
        int status = new TopKMapReduceV4().run(args);
        System.exit(status);
    }
}
```
