Redis-cluster

With the Redis cluster from the previous post built and tested, it's time to start debugging it:

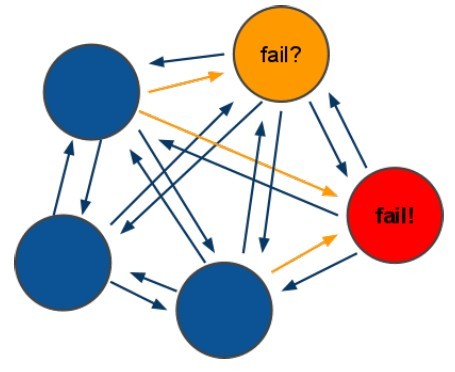

1. Redis Cluster fault tolerance: when a master goes down, its slave is elected and promoted to master;

Before master 7000 failed:

7003 (slave) -> 7000 (master)

127.0.0.1:7001> cluster nodes

c500c301825ceeed98b7bb3ef4f48c52d42fe72d 127.0.0.1:7003 slave b62852c2ced6ba78e208b5c1ebf391e14388880c 0 1478763693463 10 connected

6d04c7a1efa63ca773114444591d04f6d340b07d 127.0.0.1:7001 myself,master - 0 0 2 connected 5461-10922

b62852c2ced6ba78e208b5c1ebf391e14388880c 127.0.0.1:7000 master - 0 1478763693968 10 connected 0-5460

79d8a5dc7e6140540c03fe0664df6a8150d0888f 127.0.0.1:7004 slave 6d04c7a1efa63ca773114444591d04f6d340b07d 0 1478763692453 5 connected

642a60187eea628a3be7fa1bb419e898c6b4f36a 127.0.0.1:7002 master - 0 1478763692453 3 connected 10923-16383

bf2e5104f98e46132a34dd7b25655e41455757b5 127.0.0.1:7005 slave 642a60187eea628a3be7fa1bb419e898c6b4f36a 0 1478763692453 6 connected
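Each `cluster nodes` line uses a fixed field order:

- node ID
- address
- flags
- master ID (or `-`)
- ping-sent and pong-received
- config epoch
- link state
- the slot ranges

A small parser makes the replication layout above easy to read off. `parse_cluster_nodes` and `replication_map` are hypothetical helpers written for illustration, and the sample is the listing above. Newer Redis versions append `@<bus-port>` to the address, which the parser strips.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ClusterNode:
    node_id: str
    addr: str                 # ip:port, without any "@bus-port" suffix
    flags: list[str]          # e.g. ["myself", "master"]
    master_id: str | None     # None when the field is "-"
    config_epoch: int
    link_state: str           # "connected" or "disconnected"
    slots: list[str] = field(default_factory=list)


def parse_cluster_nodes(text: str) -> list[ClusterNode]:
    """Parse CLUSTER NODES output, one node per non-empty line."""
    nodes = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) < 8:
            continue  # blank or truncated line
        nodes.append(ClusterNode(
            node_id=parts[0],
            addr=parts[1].split("@", 1)[0],
            flags=parts[2].split(","),
            master_id=None if parts[3] == "-" else parts[3],
            config_epoch=int(parts[6]),
            link_state=parts[7],
            slots=parts[8:],
        ))
    return nodes


def replication_map(nodes: list[ClusterNode]) -> dict[str, str]:
    """Map each replica's address to its master's address."""
    by_id = {n.node_id: n for n in nodes}
    return {n.addr: by_id[n.master_id].addr
            for n in nodes if n.master_id in by_id}


SAMPLE = """\
c500c301825ceeed98b7bb3ef4f48c52d42fe72d 127.0.0.1:7003 slave b62852c2ced6ba78e208b5c1ebf391e14388880c 0 1478763693463 10 connected
6d04c7a1efa63ca773114444591d04f6d340b07d 127.0.0.1:7001 myself,master - 0 0 2 connected 5461-10922
b62852c2ced6ba78e208b5c1ebf391e14388880c 127.0.0.1:7000 master - 0 1478763693968 10 connected 0-5460
79d8a5dc7e6140540c03fe0664df6a8150d0888f 127.0.0.1:7004 slave 6d04c7a1efa63ca773114444591d04f6d340b07d 0 1478763692453 5 connected
642a60187eea628a3be7fa1bb419e898c6b4f36a 127.0.0.1:7002 master - 0 1478763692453 3 connected 10923-16383
bf2e5104f98e46132a34dd7b25655e41455757b5 127.0.0.1:7005 slave 642a60187eea628a3be7fa1bb419e898c6b4f36a 0 1478763692453 6 connected
"""

for replica, master in replication_map(parse_cluster_nodes(SAMPLE)).items():
    print(f"{replica} -> {master}")
# 127.0.0.1:7003 -> 127.0.0.1:7000
# 127.0.0.1:7004 -> 127.0.0.1:7001
# 127.0.0.1:7005 -> 127.0.0.1:7002
```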

Deliberately shut down the 7000 instance.

7000's log:

32138:M 10 Nov 15:39:24.781 * FAIL message received from 6d04c7a1efa63ca773114444591d04f6d340b07d about 79d8a5dc7e6140540c03fe0664df6a8150d0888f

32138:M 10 Nov 15:39:24.782 * FAIL message received from 6d04c7a1efa63ca773114444591d04f6d340b07d about c500c301825ceeed98b7bb3ef4f48c52d42fe72d

32138:M 10 Nov 15:39:24.867 * Marking node bf2e5104f98e46132a34dd7b25655e41455757b5 as failing (quorum reached).

32138:M 10 Nov 15:39:27.532 * Clear FAIL state for node c500c301825ceeed98b7bb3ef4f48c52d42fe72d: slave is reachable again.

32138:M 10 Nov 15:39:28.566 * Slave 127.0.0.1:7003 asks for synchronization

32138:M 10 Nov 15:39:28.566 * Full resync requested by slave 127.0.0.1:7003

32138:M 10 Nov 15:39:28.566 * Starting BGSAVE for SYNC with target: disk

32138:M 10 Nov 15:39:28.567 * Background saving started by pid 32154

32154:C 10 Nov 15:39:28.609 * DB saved on disk

32154:C 10 Nov 15:39:28.610 * RDB: 4 MB of memory used by copy-on-write

32138:M 10 Nov 15:39:28.666 * Background saving terminated with success

32138:M 10 Nov 15:39:28.667 * Synchronization with slave 127.0.0.1:7003 succeeded

32138:M 10 Nov 15:39:31.612 * Clear FAIL state for node 79d8a5dc7e6140540c03fe0664df6a8150d0888f: slave is reachable again.

32138:M 10 Nov 15:39:36.121 * Clear FAIL state for node bf2e5104f98e46132a34dd7b25655e41455757b5: slave is reachable again.

32138:signal-handler(1478764594) Received SIGTERM scheduling shutdown...
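The `Marking node ... as failing (quorum reached)` line reflects Redis Cluster's failure detection. A node that one master merely suspects (PFAIL) is only promoted to FAIL once a majority of the slot-serving masters report it. Only when a master is in FAIL state can one of its replicas start an election.

Below is a minimal sketch of the majority arithmetic for the three masters above. `failure_quorum` and `is_marked_failed` are hypothetical helper names. The expiry of old failure reports is deliberately left out.

```python
from __future__ import annotations


def failure_quorum(num_masters: int) -> int:
    """Smallest strict majority of the masters that serve slots."""
    return num_masters // 2 + 1


def is_marked_failed(reporting_masters: set[str], num_masters: int) -> bool:
    """True once enough distinct masters consider the node failing.

    Simplified: real nodes also expire failure reports after a window
    derived from cluster-node-timeout, and a master counts its own view.
    """
    return len(reporting_masters) >= failure_quorum(num_masters)


# Three slot-serving masters here (7000, 7001, 7002), so two reports are needed.
print(failure_quorum(3))                      # 2
print(is_marked_failed({"7001"}, 3))          # False
print(is_marked_failed({"7000", "7001"}, 3))  # True
```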

127.0.0.1:7001> cluster nodes

c500c301825ceeed98b7bb3ef4f48c52d42fe72d 127.0.0.1:7003 master - 0 1478764604059 11 connected 0-5460

6d04c7a1efa63ca773114444591d04f6d340b07d 127.0.0.1:7001 myself,master - 0 0 2 connected 5461-10922

b62852c2ced6ba78e208b5c1ebf391e14388880c 127.0.0.1:7000 master,fail - 1478764594145 1478764593839 10 disconnected

79d8a5dc7e6140540c03fe0664df6a8150d0888f 127.0.0.1:7004 slave 6d04c7a1efa63ca773114444591d04f6d340b07d 0 1478764605077 5 connected

642a60187eea628a3be7fa1bb419e898c6b4f36a 127.0.0.1:7002 master - 0 1478764604567 3 connected 10923-16383

bf2e5104f98e46132a34dd7b25655e41455757b5 127.0.0.1:7005 slave 642a60187eea628a3be7fa1bb419e898c6b4f36a 0 1478764603546 6 connected

7000's log after restarting it:

32553:M 10 Nov 16:02:11.016 * The server is now ready to accept connections on port 7000

32553:M 10 Nov 16:02:11.032 # Configuration change detected. Reconfiguring myself as a replica of c500c301825ceeed98b7bb3ef4f48c52d42fe72d

32553:S 10 Nov 16:02:11.033 # Cluster state changed: ok

32553:S 10 Nov 16:02:12.042 * Connecting to MASTER 127.0.0.1:7003

32553:S 10 Nov 16:02:12.045 * MASTER <-> SLAVE sync started

32553:S 10 Nov 16:02:12.045 * Non blocking connect for SYNC fired the event.

32553:S 10 Nov 16:02:12.046 * Master replied to PING, replication can continue...

32553:S 10 Nov 16:02:12.047 * Partial resynchronization not possible (no cached master)

32553:S 10 Nov 16:02:12.049 * Full resync from master: 08e765d07ea4bf0b0ce3f4e70a913047a9572ecd:1

32553:S 10 Nov 16:02:12.146 * MASTER <-> SLAVE sync: receiving 18 bytes from master

32553:S 10 Nov 16:02:12.146 * MASTER <-> SLAVE sync: Flushing old data

32553:S 10 Nov 16:02:12.146 * MASTER <-> SLAVE sync: Loading DB in memory

32553:S 10 Nov 16:02:12.146 * MASTER <-> SLAVE sync: Finished with success

32553:S 10 Nov 16:02:12.173 * Background append only file rewriting started by pid 32557

32553:S 10 Nov 16:02:12.232 * AOF rewrite child asks to stop sending diffs.
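The `Configuration change detected. Reconfiguring myself as a replica of ...` line is Redis Cluster's slot-ownership rule at work. When two nodes claim the same slots, the claim with the higher config epoch wins.

Both epochs are visible in the `cluster nodes` listings above:

- After the failover, 7003 serves 0-5460 with epoch 11.
- The restarted 7000 still remembers owning those slots with epoch 10.

7000 therefore loses all of its slots. A master left with no slots becomes a replica of the node that took them over.

Below is a simplified sketch of that comparison. `SlotClaim` and `resolve_owner` are hypothetical names. Real nodes apply the rule slot by slot while processing gossip.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class SlotClaim:
    node: str          # address of the node claiming the slots
    config_epoch: int  # configEpoch advertised together with the claim
    slots: range       # contiguous range of hash slots


def resolve_owner(current: SlotClaim, incoming: SlotClaim) -> SlotClaim:
    """Keep whichever claim carries the higher config epoch.

    Simplified: equal-epoch collisions, which Redis resolves by bumping
    one node's epoch, are not modelled.
    """
    return incoming if incoming.config_epoch > current.config_epoch else current


# What the restarted 7000 remembers vs. what the promoted 7003 advertises.
stale = SlotClaim("127.0.0.1:7000", 10, range(0, 5461))
fresh = SlotClaim("127.0.0.1:7003", 11, range(0, 5461))

winner = resolve_owner(stale, fresh)
if winner.node != stale.node and winner.slots == stale.slots:
    # 7000 has lost every slot it served, so it follows the new owner.
    print(f"{stale.node} becomes a replica of {winner.node}")
# 127.0.0.1:7000 becomes a replica of 127.0.0.1:7003
```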

After 7000 has recovered:

127.0.0.1:7001> cluster nodes

c500c301825ceeed98b7bb3ef4f48c52d42fe72d 127.0.0.1:7003 master - 0 1478765008832 11 connected 0-5460

6d04c7a1efa63ca773114444591d04f6d340b07d 127.0.0.1:7001 myself,master - 0 0 2 connected 5461-10922

b62852c2ced6ba78e208b5c1ebf391e14388880c 127.0.0.1:7000 slave c500c301825ceeed98b7bb3ef4f48c52d42fe72d 0 1478765008328 11 connected

79d8a5dc7e6140540c03fe0664df6a8150d0888f 127.0.0.1:7004 slave 6d04c7a1efa63ca773114444591d04f6d340b07d 0 1478765009839 5 connected

642a60187eea628a3be7fa1bb419e898c6b4f36a 127.0.0.1:7002 master - 0 1478765009839 3 connected 10923-16383

bf2e5104f98e46132a34dd7b25655e41455757b5 127.0.0.1:7005 slave 642a60187eea628a3be7fa1bb419e898c6b4f36a 0 1478765009335 6 connected

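2. Adding nodes:

First, start Redis instances on 7006 and 7007 the same way as above;

Along the way node 7005 went missing, so since I was adding nodes anyway, I started by adding 7005 back:

./redis-trib.rb add-node --slave --master-id 642a60187eea628a3be7fa1bb419e898c6b4f36a 127.0.0.1:7005 127.0.0.1:7003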

However, it failed:

[OK] All 16384 slots covered.

Connecting to node 127.0.0.1:7005: OK

[ERR] Node 127.0.0.1:7005 is not empty. Either the node already knows other nodes (check with CLUSTER NODES) or contains some key in database 0.

Deleting 7005's files (rm -rf dump.rdb appendonly.aof nodes.conf) did not make the error go away. Luckily the test machine held no data, so I simply flushed the database:
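The `not empty` check passes only when the node knows no other nodes and holds no keys in database 0. As I read redis-trib.rb, it checks two things:

- `cluster_known_nodes` from `CLUSTER INFO` must be 1.
- `INFO` must not report a `db0` keyspace line.

Since `flushdb` is what fixed it here, the leftover keys were the culprit. Deleting `dump.rdb` on disk does not clear keys already loaded in the running instance's memory.

Below is a sketch of the same test over raw command output. `parse_info` and `is_empty_node` are hypothetical helpers, and the sample strings are made up for illustration.

```python
from __future__ import annotations


def parse_info(text: str) -> dict[str, str]:
    """Turn 'key:value' lines from CLUSTER INFO / INFO into a dict."""
    result = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and "# Section" headers.
        if not line or line.startswith("#") or ":" not in line:
            continue
        key, value = line.split(":", 1)
        result[key] = value
    return result


def is_empty_node(cluster_info: str, keyspace_info: str) -> bool:
    """The two conditions named in the add-node error message."""
    knows_only_itself = parse_info(cluster_info).get("cluster_known_nodes") == "1"
    has_db0_keys = "db0" in parse_info(keyspace_info)
    return knows_only_itself and not has_db0_keys


# Hypothetical sample output for 7005 before and after FLUSHDB.
CLUSTER_INFO = "cluster_known_nodes:1\r\ncluster_size:0\r\n"
KEYSPACE_BEFORE = "# Keyspace\r\ndb0:keys=3,expires=0,avg_ttl=0\r\n"
KEYSPACE_AFTER = "# Keyspace\r\n"

print(is_empty_node(CLUSTER_INFO, KEYSPACE_BEFORE))  # False
print(is_empty_node(CLUSTER_INFO, KEYSPACE_AFTER))   # True
```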

[root@localhost src]# redis-cli -c -p 7005

127.0.0.1:7005> flushdb

[root@localhost src]# ./redis-trib.rb add-node --slave --master-id 642a60187eea628a3be7fa1bb419e898c6b4f36a 127.0.0.1:7005 127.0.0.1:7003

>>> Adding node 127.0.0.1:7005 to cluster 127.0.0.1:7003

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

Connecting to node 127.0.0.1:7005: OK

>>> Send CLUSTER MEET to node 127.0.0.1:7005 to make it join the cluster.

Waiting for the cluster to join.

>>> Configure node as replica of 127.0.0.1:7002.

[OK] New node added correctly.

But I clearly attached it through 7003, so why is it now a slave of 7002?
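The answer lies in the argument order of `add-node --slave --master-id <id> <new_node> <existing_node>`:

- The last address (here 127.0.0.1:7003) is only the entry point used to contact the cluster.
- The master is whichever node owns `<id>`. Here 642a60187eea628a3be7fa1bb419e898c6b4f36a is 7002's ID.

Below is a sketch that resolves the ID against a `CLUSTER NODES` listing. `master_addr_for` is a hypothetical helper, and the listing is two lines taken from the outputs above.

```python
def master_addr_for(master_id: str, cluster_nodes: str) -> str:
    """Return the ip:port of the node whose ID is master_id."""
    for line in cluster_nodes.splitlines():
        parts = line.split()
        if parts and parts[0] == master_id:
            # Strip the optional "@bus-port" suffix used by newer versions.
            return parts[1].split("@", 1)[0]
    raise KeyError(f"node {master_id} not found")


LISTING = """\
642a60187eea628a3be7fa1bb419e898c6b4f36a 127.0.0.1:7002 master - 0 1478763692453 3 connected 10923-16383
c500c301825ceeed98b7bb3ef4f48c52d42fe72d 127.0.0.1:7003 master - 0 1478764604059 11 connected 0-5460
"""

# Arguments of the add-node command used above.
master_id = "642a60187eea628a3be7fa1bb419e898c6b4f36a"
new_node, entry_point = "127.0.0.1:7005", "127.0.0.1:7003"

print(f"{new_node} joins via {entry_point} and replicates "
      f"{master_addr_for(master_id, LISTING)}")
# 127.0.0.1:7005 joins via 127.0.0.1:7003 and replicates 127.0.0.1:7002
```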

>>> Performing Cluster Check (using node 127.0.0.1:7005)

S: 576437568beee83b412908e752a46a1273a076c5 127.0.0.1:7005

slots: (0 slots) slave

replicates 642a60187eea628a3be7fa1bb419e898c6b4f36a

M: 6d04c7a1efa63ca773114444591d04f6d340b07d 127.0.0.1:7001

slots:6461-10922 (4462 slots) master

1 additional replica(s)

M: 642a60187eea628a3be7fa1bb419e898c6b4f36a 127.0.0.1:7002

slots:0-999,5461-6460,10923-16383 (7461 slots) master

2 additional replica(s)

S: b62852c2ced6ba78e208b5c1ebf391e14388880c 127.0.0.1:7000

slots: (0 slots) slave

replicates c500c301825ceeed98b7bb3ef4f48c52d42fe72d

S: bf2e5104f98e46132a34dd7b25655e41455757b5 127.0.0.1:7007

slots: (0 slots) slave

replicates 642a60187eea628a3be7fa1bb419e898c6b4f36a

M: c500c301825ceeed98b7bb3ef4f48c52d42fe72d 127.0.0.1:7003

slots:1000-5460 (4461 slots) master

1 additional replica(s)

S: 79d8a5dc7e6140540c03fe0664df6a8150d0888f 127.0.0.1:7004

slots: (0 slots) slave

replicates 6d04c7a1efa63ca773114444591d04f6d340b07d

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.
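`[OK] All 16384 slots covered.` means every hash slot from 0 to 16383 belongs to exactly one master. Below is a sketch of that check over the slot ranges printed above. `slots_from_ranges` and `check_coverage` are hypothetical helpers. Migration markers such as `[slot->-id]`, which can appear in `CLUSTER NODES` output during a reshard, are skipped.

```python
from __future__ import annotations

CLUSTER_SLOTS = 16384


def slots_from_ranges(spec: str) -> set[int]:
    """Expand '0-999,5461-6460' (commas or spaces) into slot numbers."""
    slots = set()
    for part in spec.replace(",", " ").split():
        if part.startswith("["):
            continue  # "[slot->-id]" migration markers are not owned slots
        start, _, end = part.partition("-")
        slots.update(range(int(start), int(end or start) + 1))
    return slots


def check_coverage(assignments: dict[str, str]) -> tuple[set[int], set[int]]:
    """Return (slots nobody owns, slots claimed by more than one master)."""
    owner: dict[int, str] = {}
    duplicates = set()
    for master, spec in assignments.items():
        for slot in slots_from_ranges(spec):
            if slot in owner:
                duplicates.add(slot)
            owner[slot] = master
    missing = set(range(CLUSTER_SLOTS)) - set(owner)
    return missing, duplicates


# Slot ranges from the check output above.
layout = {
    "7001": "6461-10922",
    "7002": "0-999,5461-6460,10923-16383",
    "7003": "1000-5460",
}
missing, duplicates = check_coverage(layout)
print(len(missing), len(duplicates))  # 0 0
```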

Check again: ./redis-trib.rb check 127.0.0.1:7005

Connecting to node 127.0.0.1:7005: OK

Connecting to node 127.0.0.1:7001: OK

Connecting to node 127.0.0.1:7002: OK

Connecting to node 127.0.0.1:7000: OK

Connecting to node 127.0.0.1:7003: OK

Connecting to node 127.0.0.1:7004: OK

>>> Performing Cluster Check (using node 127.0.0.1:7005)

S: 576437568beee83b412908e752a46a1273a076c5 127.0.0.1:7005

slots: (0 slots) slave

replicates 642a60187eea628a3be7fa1bb419e898c6b4f36a

M: 6d04c7a1efa63ca773114444591d04f6d340b07d 127.0.0.1:7001

slots:6461-10922 (4462 slots) master

1 additional replica(s)

M: 642a60187eea628a3be7fa1bb419e898c6b4f36a 127.0.0.1:7002

slots:0-999,5461-6460,10923-16383 (7461 slots) master

1 additional replica(s)

S: b62852c2ced6ba78e208b5c1ebf391e14388880c 127.0.0.1:7000

slots: (0 slots) slave

replicates c500c301825ceeed98b7bb3ef4f48c52d42fe72d

M: c500c301825ceeed98b7bb3ef4f48c52d42fe72d 127.0.0.1:7003

slots:1000-5460 (4461 slots) master

1 additional replica(s)

S: 79d8a5dc7e6140540c03fe0664df6a8150d0888f 127.0.0.1:7004

slots: (0 slots) slave

replicates 6d04c7a1efa63ca773114444591d04f6d340b07d

[OK] All nodes agree about slots configuration.

OK, it's clean now.

Next, add the two nodes 7006 and 7007, with 7006 as a master and 7007 as its slave:

[root@localhost src]# ./redis-trib.rb add-node 127.0.0.1:7006 127.0.0.1:7000

>>> Adding node 127.0.0.1:7006 to cluster 127.0.0.1:7000

Connecting to node 127.0.0.1:7000: OK

Connecting to node 127.0.0.1:7007: OK

Connecting to node 127.0.0.1:7001: OK

Connecting to node 127.0.0.1:7004: OK

Connecting to node 127.0.0.1:7002: OK

Connecting to node 127.0.0.1:7003: OK

Connecting to node 127.0.0.1:7005: OK

>>> Performing Cluster Check (using node 127.0.0.1:7000)

S: b62852c2ced6ba78e208b5c1ebf391e14388880c 127.0.0.1:7000

slots: (0 slots) slave

replicates c500c301825ceeed98b7bb3ef4f48c52d42fe72d

M: 6d04c7a1efa63ca773114444591d04f6d340b07d 127.0.0.1:7001

slots:6461-10922 (4462 slots) master

1 additional replica(s)

S: 79d8a5dc7e6140540c03fe0664df6a8150d0888f 127.0.0.1:7004

slots: (0 slots) slave

replicates 6d04c7a1efa63ca773114444591d04f6d340b07d

M: 642a60187eea628a3be7fa1bb419e898c6b4f36a 127.0.0.1:7002

slots:0-999,5461-6460,10923-16383 (7461 slots) master

2 additional replica(s)

M: c500c301825ceeed98b7bb3ef4f48c52d42fe72d 127.0.0.1:7003

slots:1000-5460 (4461 slots) master

1 additional replica(s)

S: 576437568beee83b412908e752a46a1273a076c5 127.0.0.1:7005

slots: (0 slots) slave

replicates 642a60187eea628a3be7fa1bb419e898c6b4f36a

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

Connecting to node 127.0.0.1:7006: OK

>>> Send CLUSTER MEET to node 127.0.0.1:7006 to make it join the cluster.

[OK] New node added correctly.

Add 7007 as a slave of 7006:

./redis-trib.rb add-node --slave --master-id 4d594ec18d8584b9d530d894f76098a6d4bd742a 127.0.0.1:7007 127.0.0.1:7006

--slave: add the node as a slave; --master-id: ID of the master; 127.0.0.1:7007: the new node; 127.0.0.1:7006: any existing node in the cluster;

>>> Adding node 127.0.0.1:7007 to cluster 127.0.0.1:7006

Connecting to node 127.0.0.1:7006: OK

Connecting to node 127.0.0.1:7001: OK

Connecting to node 127.0.0.1:7000: OK

Connecting to node 127.0.0.1:7004: OK

Connecting to node 127.0.0.1:7002: OK

Connecting to node 127.0.0.1:7003: OK

Connecting to node 127.0.0.1:7005: OK

>>> Performing Cluster Check (using node 127.0.0.1:7006)

M: 4d594ec18d8584b9d530d894f76098a6d4bd742a 127.0.0.1:7006

slots: (0 slots) master

0 additional replica(s)

M: 6d04c7a1efa63ca773114444591d04f6d340b07d 127.0.0.1:7001

slots:6461-10922 (4462 slots) master

1 additional replica(s)

S: b62852c2ced6ba78e208b5c1ebf391e14388880c 127.0.0.1:7000

slots: (0 slots) slave

replicates c500c301825ceeed98b7bb3ef4f48c52d42fe72d

S: 79d8a5dc7e6140540c03fe0664df6a8150d0888f 127.0.0.1:7004

slots: (0 slots) slave

replicates 6d04c7a1efa63ca773114444591d04f6d340b07d

M: 642a60187eea628a3be7fa1bb419e898c6b4f36a 127.0.0.1:7002

slots:0-999,5461-6460,10923-16383 (7461 slots) master

1 additional replica(s)

M: c500c301825ceeed98b7bb3ef4f48c52d42fe72d 127.0.0.1:7003

slots:1000-5460 (4461 slots) master

1 additional replica(s)

S: 576437568beee83b412908e752a46a1273a076c5 127.0.0.1:7005

slots: (0 slots) slave

replicates 642a60187eea628a3be7fa1bb419e898c6b4f36a

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

redis-cli -c -p 7006

127.0.0.1:7006> cluster nodes

4d594ec18d8584b9d530d894f76098a6d4bd742a 127.0.0.1:7006 myself,master - 0 0 13 connected

6d04c7a1efa63ca773114444591d04f6d340b07d 127.0.0.1:7001 master - 0 1478769599187 2 connected 6461-10922

b62852c2ced6ba78e208b5c1ebf391e14388880c 127.0.0.1:7000 slave c500c301825ceeed98b7bb3ef4f48c52d42fe72d 0 1478769600710 11 connected

c500c301825ceeed98b7bb3ef4f48c52d42fe72d 127.0.0.1:7003 master - 0 1478769601215 11 connected 1000-5460

7705e1148cdea9e5e1b05f4dab9a936e75857105 127.0.0.1:7007 slave 4d594ec18d8584b9d530d894f76098a6d4bd742a 0 1478769600205 13 connected

79d8a5dc7e6140540c03fe0664df6a8150d0888f 127.0.0.1:7004 slave 6d04c7a1efa63ca773114444591d04f6d340b07d 0 1478769599187 2 connected

642a60187eea628a3be7fa1bb419e898c6b4f36a 127.0.0.1:7002 master - 0 1478769599700 12 connected 0-999 5461-6460 10923-16383

bf2e5104f98e46132a34dd7b25655e41455757b5 :0 slave,fail,noaddr 642a60187eea628a3be7fa1bb419e898c6b4f36a 1478769404316 1478769403509 12 disconnected

576437568beee83b412908e752a46a1273a076c5 127.0.0.1:7005 slave 642a60187eea628a3be7fa1bb419e898c6b4f36a 0 1478769599700 12 connected

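The nodes look normal, except for this entry: bf2e5104f98e46132a34dd7b25655e41455757b5 :0 slave,fail,noaddr 642a60187eea628a3be7fa1bb419e898c6b4f36a 1478769404316 1478769403509 12 disconnected

It is probably left over from earlier, when I deleted a node's config files while reassigning nodes. How should it be handled?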
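One way to remove such a ghost entry is `CLUSTER FORGET <node-id>`. The command only affects the node that receives it. That node also bans the ID for 60 seconds so that gossip cannot re-add it. The command therefore has to reach every remaining node within that window, or the others will gossip the entry back.

The sketch below finds `fail,noaddr` entries in a `CLUSTER NODES` listing and prints one `redis-cli` command per reachable node. It does not connect to anything. `stale_node_ids` and `forget_commands` are hypothetical names, and the listing is trimmed to three lines of the output above.

```python
from __future__ import annotations


def _rows(cluster_nodes: str) -> list[list[str]]:
    """Split CLUSTER NODES output into per-line field lists."""
    return [line.split() for line in cluster_nodes.splitlines()
            if len(line.split()) >= 3]


def stale_node_ids(cluster_nodes: str) -> list[str]:
    """IDs of entries flagged both 'fail' and 'noaddr'."""
    return [cols[0] for cols in _rows(cluster_nodes)
            if {"fail", "noaddr"} <= set(cols[2].split(","))]


def forget_commands(cluster_nodes: str) -> list[str]:
    """One 'cluster forget' command per (reachable node, stale ID) pair."""
    live = []
    for cols in _rows(cluster_nodes):
        flags = set(cols[2].split(","))
        if not (flags & {"fail", "noaddr"}):
            host, port = cols[1].split("@", 1)[0].rsplit(":", 1)
            live.append((host, port))
    return [f"redis-cli -h {host} -p {port} cluster forget {node_id}"
            for node_id in stale_node_ids(cluster_nodes)
            for host, port in live]


LISTING = """\
4d594ec18d8584b9d530d894f76098a6d4bd742a 127.0.0.1:7006 myself,master - 0 0 13 connected
bf2e5104f98e46132a34dd7b25655e41455757b5 :0 slave,fail,noaddr 642a60187eea628a3be7fa1bb419e898c6b4f36a 1478769404316 1478769403509 12 disconnected
576437568beee83b412908e752a46a1273a076c5 127.0.0.1:7005 slave 642a60187eea628a3be7fa1bb419e898c6b4f36a 0 1478769599700 12 connected
"""

# Run every printed command within 60 seconds of the first one.
for cmd in forget_commands(LISTING):
    print(cmd)
```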

Reassigning slots:

[root@localhost src]# redis-trib.rb reshard 127.0.0.1:7001 // the main steps are shown below

How many slots do you want to move (from 1 to 16384)? 1000 // number of slots to move: 1000

What is the receiving node ID? 03ccad2ba5dd1e062464bc7590400441fafb63f2 // node ID of the new node

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: all // reshuffle slots from all nodes

Do you want to proceed with the proposed reshard plan (yes/no)? yes // confirm the reshard

[root@localhost src]# ./redis-trib.rb reshard 127.0.0.1:7006

Connecting to node 127.0.0.1:7006: OK

Connecting to node 127.0.0.1:7001: OK

Connecting to node 127.0.0.1:7004: OK

Connecting to node 127.0.0.1:7003: OK

Connecting to node 127.0.0.1:7002: OK

Connecting to node 127.0.0.1:7000: OK

>>> Performing Cluster Check (using node 127.0.0.1:7006)

S: bf2e5104f98e46132a34dd7b25655e41455757b5 127.0.0.1:7006

slots: (0 slots) slave

replicates 642a60187eea628a3be7fa1bb419e898c6b4f36a

M: 6d04c7a1efa63ca773114444591d04f6d340b07d 127.0.0.1:7001

slots:5461-10922 (5462 slots) master

1 additional replica(s)

S: 79d8a5dc7e6140540c03fe0664df6a8150d0888f 127.0.0.1:7004

slots: (0 slots) slave

replicates 6d04c7a1efa63ca773114444591d04f6d340b07d

M: c500c301825ceeed98b7bb3ef4f48c52d42fe72d 127.0.0.1:7003

slots:0-5460 (5461 slots) master

1 additional replica(s)

M: 642a60187eea628a3be7fa1bb419e898c6b4f36a 127.0.0.1:7002

slots:10923-16383 (5461 slots) master

1 additional replica(s)

S: b62852c2ced6ba78e208b5c1ebf391e14388880c 127.0.0.1:7000

slots: (0 slots) slave

replicates c500c301825ceeed98b7bb3ef4f48c52d42fe72d

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 1000

redis-trib.rb reshard 127.0.0.1:7006

Follow the prompts and choose how many slots to migrate

(here I chose 1000)

How many slots do you want to move (from 1 to 16384)? 1000

Enter the node_id that will receive these slots

What is the receiving node ID? f51e26b5d5ff74f85341f06f28f125b7254e61bf

Choose where the slots come from: #all means redistribute from all masters,

#or enter the IDs of the masters to take slots from, and finish with done

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: all

#After the slots to be moved are printed, type yes to start moving the slots and their data.

#Do you want to proceed with the proposed reshard plan (yes/no)? yes

#Done

The open question now: how do you decide how many slots to migrate?
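There is no single right number. If the aim is an even spread, each of the N masters should end up with roughly 16384 / N slots, so a new, empty master needs about that many. With four masters (7001, 7002, 7003 and 7006) that is 4096 rather than 1000.

Below is a sketch of the arithmetic, using the slot counts from the cluster check before the reshard. `even_targets` and `slots_to_move` are hypothetical helpers. A reshard with `all` as the source takes slots proportionally from every master. Hitting these exact targets therefore means naming the source masters and counts yourself.

```python
from __future__ import annotations

CLUSTER_SLOTS = 16384


def even_targets(masters: list[str]) -> dict[str, int]:
    """Slots each master should own for an even split of all 16384 slots."""
    base, extra = divmod(CLUSTER_SLOTS, len(masters))
    # The first `extra` masters absorb the remainder, one slot each.
    return {m: base + (1 if i < extra else 0) for i, m in enumerate(masters)}


def slots_to_move(current: dict[str, int]) -> dict[str, int]:
    """Positive: slots a master should receive; negative: slots it should give."""
    targets = even_targets(list(current))
    return {m: targets[m] - count for m, count in current.items()}


# Slot counts just before the reshard (7006 newly added, still empty).
before = {"7001": 4462, "7002": 7461, "7003": 4461, "7006": 0}
print(slots_to_move(before))
# {'7001': -366, '7002': -3365, '7003': -365, '7006': 4096}
```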

Check the nodes:

redis-cli -c -p 7007

127.0.0.1:7007> cluster nodes

c500c301825ceeed98b7bb3ef4f48c52d42fe72d 127.0.0.1:7003 master - 0 1478770184173 11 connected 1272-5460

6d04c7a1efa63ca773114444591d04f6d340b07d 127.0.0.1:7001 master - 0 1478770182154 2 connected 6733-10922

642a60187eea628a3be7fa1bb419e898c6b4f36a 127.0.0.1:7002 master - 0 1478770183165 12 connected 456-999 5461-6460 10923-16383

4d594ec18d8584b9d530d894f76098a6d4bd742a 127.0.0.1:7006 master - 0 1478770183165 13 connected 0-455 1000-1271 6461-6732

7705e1148cdea9e5e1b05f4dab9a936e75857105 127.0.0.1:7007 myself,slave 4d594ec18d8584b9d530d894f76098a6d4bd742a 0 0 0 connected

b62852c2ced6ba78e208b5c1ebf391e14388880c 127.0.0.1:7000 slave c500c301825ceeed98b7bb3ef4f48c52d42fe72d 0 1478770184173 11 connected

79d8a5dc7e6140540c03fe0664df6a8150d0888f 127.0.0.1:7004 slave 6d04c7a1efa63ca773114444591d04f6d340b07d 0 1478770184174 2 connected

576437568beee83b412908e752a46a1273a076c5 127.0.0.1:7005 slave 642a60187eea628a3be7fa1bb419e898c6b4f36a 0 1478770182658 12 connected

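The slots above are split into quite small fragments. A newly added master owns no slots, and a master without slots is never chosen when data is stored or read. After the reshuffle, the new master node does have slots. Note: with 'all', slots from every other master are handed to the target node. It's best not to use 'all', because it fragments the slot ranges.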
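The fragments follow from how redis-trib builds the plan when the sources are `all`. As I read `compute_reshard_table` in redis-trib.rb, it works in three steps:

1. Sort the source masters by slot count, largest first.
2. Ask each source for a share proportional to its slot count, rounded up for the first source and down for the rest.
3. Take the lowest-numbered slots from each source.

The sketch below is a Python re-creation of that reading, not redis-trib's own code. `expand`, `compress` and `reshard_plan` are hypothetical names. Applied to the layout before the reshard, it reproduces 7006's `0-455 1000-1271 6461-6732`.

```python
import math


def expand(spec: str) -> list[int]:
    """'0-999,5461-6460' -> sorted list of slot numbers."""
    slots = []
    for part in spec.split(","):
        start, _, end = part.partition("-")
        slots.extend(range(int(start), int(end or start) + 1))
    return sorted(slots)


def compress(slots: list[int]) -> str:
    """Sorted slot list -> '0-455 1000-1271' style ranges."""
    ranges = []
    start = prev = slots[0]
    for s in slots[1:]:
        if s != prev + 1:
            ranges.append(f"{start}-{prev}" if start != prev else str(start))
            start = s
        prev = s
    ranges.append(f"{start}-{prev}" if start != prev else str(start))
    return " ".join(ranges)


def reshard_plan(sources: dict[str, str], numslots: int) -> list[int]:
    """Slots moved to the target when every master is a source ('all')."""
    owned = {name: expand(spec) for name, spec in sources.items()}
    total = sum(len(s) for s in owned.values())
    # Biggest source first, so it absorbs the rounding-up.
    order = sorted(owned, key=lambda name: len(owned[name]), reverse=True)
    moved = []
    for i, name in enumerate(order):
        share = numslots / total * len(owned[name])
        n = math.ceil(share) if i == 0 else math.floor(share)
        for slot in owned[name][:n]:  # lowest-numbered slots first
            if len(moved) < numslots:
                moved.append(slot)
    return moved


before = {
    "7001": "6461-10922",
    "7002": "0-999,5461-6460,10923-16383",
    "7003": "1000-5460",
}
print(compress(sorted(reshard_plan(before, 1000))))  # 0-455 1000-1271 6461-6732
```

Each source gives up a slice from the bottom of its own ranges. That is why the new master ends up with one fragment per source instead of a single contiguous block.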

3. Removing nodes:

3.1 Removing a slave takes a single command:

./redis-trib.rb del-node 127.0.0.1:7007 'bf2e5104f98e46132a34dd7b25655e41455757b5'

>>> Removing node bf2e5104f98e46132a34dd7b25655e41455757b5 from cluster 127.0.0.1:7007

Connecting to node 127.0.0.1:7007: OK

Connecting to node 127.0.0.1:7005: OK

Connecting to node 127.0.0.1:7001: OK

Connecting to node 127.0.0.1:7003: OK

Connecting to node 127.0.0.1:7002: OK

Connecting to node 127.0.0.1:7004: OK

Connecting to node 127.0.0.1:7000: OK

>>> Sending CLUSTER FORGET messages to the cluster...

>>> SHUTDOWN the node.

3.2 Removing a master:

Note: if the master has slaves, move them to another master before removing it;

127.0.0.1:7007> cluster replicate 642a60187eea628a3be7fa1bb419e898c6b4f36a

OK

642a60187eea628a3be7fa1bb419e898c6b4f36a is the node ID of 7002;
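The `cluster replicate` call above re-points 7007 by hand. `CLUSTER REPLICATE` is sent to the replica itself and names its new master. For a master with several replicas, the commands can be generated from a `CLUSTER NODES` listing.

Below is a sketch of that. `replica_moves` is a hypothetical helper that only prints commands. The listing is trimmed to three lines of the output above.

```python
from __future__ import annotations


def replica_moves(cluster_nodes: str, leaving_id: str, new_master_id: str) -> list[str]:
    """CLUSTER REPLICATE commands that re-point every replica of leaving_id.

    Each command is addressed to the replica and names its new master.
    """
    commands = []
    for line in cluster_nodes.splitlines():
        cols = line.split()
        if len(cols) < 4:
            continue
        is_replica = "slave" in cols[2].split(",")
        if is_replica and cols[3] == leaving_id:
            host, port = cols[1].split("@", 1)[0].rsplit(":", 1)
            commands.append(f"redis-cli -h {host} -p {port} cluster replicate {new_master_id}")
    return commands


LISTING = """\
4d594ec18d8584b9d530d894f76098a6d4bd742a 127.0.0.1:7006 master - 0 1478770183165 13 connected 0-455 1000-1271 6461-6732
7705e1148cdea9e5e1b05f4dab9a936e75857105 127.0.0.1:7007 myself,slave 4d594ec18d8584b9d530d894f76098a6d4bd742a 0 0 0 connected
576437568beee83b412908e752a46a1273a076c5 127.0.0.1:7005 slave 642a60187eea628a3be7fa1bb419e898c6b4f36a 0 1478770182658 12 connected
"""

LEAVING = "4d594ec18d8584b9d530d894f76098a6d4bd742a"     # 7006
NEW_MASTER = "642a60187eea628a3be7fa1bb419e898c6b4f36a"  # 7002

for cmd in replica_moves(LISTING, LEAVING, NEW_MASTER):
    print(cmd)
# redis-cli -h 127.0.0.1 -p 7007 cluster replicate 642a60187eea628a3be7fa1bb419e898c6b4f36a
```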

# redis-trib.rb reshard 127.0.0.1:7006 // give back the assigned slots; the main steps are below

How many slots do you want to move (from 1 to 16384)? 1000 // total number of slots owned by the master being removed

What is the receiving node ID? 642a60187eea628a3be7fa1bb419e898c6b4f36a // the master that receives 7006's slots

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: 4d594ec18d8584b9d530d894f76098a6d4bd742a // node ID of the master being removed

Source node #2: done

Do you want to proceed with the proposed reshard plan (yes/no)? yes // confirm; the slots are moved off

Note: for the source nodes, enter the node ID and finish with 'done'. If you use 'all', every master is reshuffled and 7006 will still end up with slots.
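The 1000 entered here is simply 7006's slot count. It can be read off 7006's ranges in the earlier `cluster nodes` output (`0-455 1000-1271 6461-6732`, i.e. 456 + 272 + 272). Below is a tiny sketch of that count, handy when a master owns many fragments. `count_slots` is a hypothetical helper.

```python
def count_slots(ranges: str) -> int:
    """Count slots in a space- or comma-separated list like '0-455 1000-1271'."""
    total = 0
    for part in ranges.replace(",", " ").split():
        start, _, end = part.partition("-")
        total += int(end or start) - int(start) + 1
    return total


print(count_slots("0-455 1000-1271 6461-6732"))  # 1000
```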

Finally, remove master 7006:

./redis-trib.rb del-node 127.0.0.1:7006 '4d594ec18d8584b9d530d894f76098a6d4bd742a'

>>> Removing node 4d594ec18d8584b9d530d894f76098a6d4bd742a from cluster 127.0.0.1:7006

Connecting to node 127.0.0.1:7006: OK

Connecting to node 127.0.0.1:7001: OK

Connecting to node 127.0.0.1:7000: OK

Connecting to node 127.0.0.1:7003: OK

Connecting to node 127.0.0.1:7007: OK

Connecting to node 127.0.0.1:7004: OK

Connecting to node 127.0.0.1:7002: OK

Connecting to node 127.0.0.1:7005: OK

>>> Sending CLUSTER FORGET messages to the cluster...

>>> SHUTDOWN the node.

Check the result:

redis-cli -c -p 7000

127.0.0.1:7000> cluster nodes

6d04c7a1efa63ca773114444591d04f6d340b07d 127.0.0.1:7001 master - 0 1478771310480 2 connected 6733-10922

bf2e5104f98e46132a34dd7b25655e41455757b5 :0 slave,fail,noaddr 642a60187eea628a3be7fa1bb419e898c6b4f36a 1478769404363 1478769403658 12 disconnected

79d8a5dc7e6140540c03fe0664df6a8150d0888f 127.0.0.1:7004 slave 6d04c7a1efa63ca773114444591d04f6d340b07d 0 1478771309976 5 connected

b62852c2ced6ba78e208b5c1ebf391e14388880c 127.0.0.1:7000 myself,slave c500c301825ceeed98b7bb3ef4f48c52d42fe72d 0 0 10 connected

c500c301825ceeed98b7bb3ef4f48c52d42fe72d 127.0.0.1:7003 master - 0 1478771308967 11 connected 1272-5460

642a60187eea628a3be7fa1bb419e898c6b4f36a 127.0.0.1:7002 master - 0 1478771309976 14 connected 0-1271 5461-6732 10923-16383

576437568beee83b412908e752a46a1273a076c5 127.0.0.1:7005 slave 642a60187eea628a3be7fa1bb419e898c6b4f36a 0 1478771308967 14 connected

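With that, 7006 and 7007 are gone, and the slot ranges are still 0-1271, 1272-5460, 5461-6732, 6733-10922 and 10923-16383. But what is the part highlighted in red? Still to be investigated!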

Reposted from: https://blog.51cto.com/xpu2001/1871581
