这篇文章主要是用来复习鱼遇雨欲语与余的代码

首先是2018年腾讯社交广告大赛的相关介绍2018年腾讯社交广告大赛

为了担心赛题介绍被其他页面覆盖,我将赛题PDF在此提供大家下载

赛题说明2018年腾讯社交广告大赛说明文件 提取密码:plf7

初期大致结构和原贴一致

数据下载地址:

初赛数据地址:2018年腾讯社交广告大赛初赛数据 提取密码:ujmf

复赛数据地址:2018年腾讯社交广告复赛数据地址 提取密码:kz4w

赛题主要目的

Lookalike 技术,设计基于种子用户画像和关系链寻找相似人群,即根据种子人群的共有属性进行自动化扩展,以扩大潜在用户覆盖面,提升广告效果。但我们通过赛题的深入我们可以发现这其实就是简单的点击率问题(不知道是否正确),大家通过赛题描述也是可以知道,是通过用户的各种属性(具体属性可以从PDF描述文件中发现),预测新的一批用户通过投放相似广告,能否得到转换,并预测他们之间的匹配度。

评估指标

AUC,来自百度的定义AUC,我们从里面看到这样的一句话:首先AUC值是一个概率值,当你随机挑选一个正例以及一个负例,当前的分类算法根据计算得到的Score值将这个正例排在负例前面的概率就是AUC值。当然,AUC值越大,当前的分类算法越有可能将正例排在负例前面,即能够更好的分类。为了更加详尽的了解AUC是具体是怎么样的,我们将csdn的一篇文章引来解释:

为了防止原文丢失,简略地抓住几个重点进行解释。

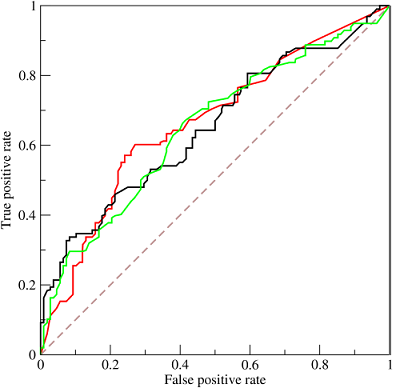

AUC(Area under the Curve of ROC)是ROC曲线下方的面积,是判断二分类预测模型优劣的标准。ROC(receiver operating characteristic curve)接收者操作特征曲线,是由二战中的电子工程师和雷达工程师发明用来侦测战场上敌军载具(飞机、船舰)的指标,属于信号检测理论。ROC曲线的横坐标是伪阳性率(也叫假正类率,False Positive Rate),纵坐标是真阳性率(真正类率,True Positive Rate),相应的还有真阴性率(真负类率,True Negative Rate)和伪阴性率(假负类率,False Negative Rate)。这四类的计算方法如下:

- 伪阳性率(FPR)

判定为正例却不是真正例的概率。 - 真阳性率(TPR)

判定为正例也是真正例的概率。 - 伪阴性率(FNR)

判定为负例却不是真负例的概率。 - 真阴性率(TNR)

判定为负例也是真负例的概率。

x轴与y轴的值域都是[0, 1],随着判定正例的阈值不断增加,我们可以得到一组(x, y)的点,相连便作出了ROC曲线,示例图如下:

特征构造

原po主在这里,计算了五大类特征:

投放量(click)、投放比例(ratio)、转化率(cvr)、特殊转化率(CV_cvr)、多值长度(length)

每类特征基本都做了一维字段和二维组合字段的统计。

值得注意的是转化率利用预处理所得的分块标签独立出一个分块验证集不加入统计,其余分块做dropout交叉统计,测试集则用全部训练集数据进行统计。此外,我们发现一些多值字段的重要性很高,所以利用了lightgbm特征重要性对ct\marriage\interest字段的稀疏编码矩阵进行了提取,提取出排名前20的编码特征与其他单值特征进行类似上述cvr的统计生成CV_cvr的统计,这组特征和cvr的效果几乎相当。

主要构造了统计特征,比例特征和转化率特征。和以往不同的是,构造这样特征时不仅考虑单个特征的统计度量,还考虑了所有可能的组合特征。也因此发现了很多不易想到的强特,如uid相关特征,uid点击次数,uid转化率。

为了从现在开始,需要从头开始复习原po主的源码。可能有些人上github有些问题,所有这里我将文件分享一下 密码:e3sb。

好了,代码有了。可以开始我们的学习了。

2.1基础特征

官方提供的原始特征(用户特征,广告特征).

2.2sparse特征

sparese 稀疏矩阵,这里我个人的理解应该是稀疏矩阵特征(在这里一个是通俗理解),而至于为什么稀疏矩阵特征会有效(点击)?

这里原博主sparse特征主要参考开源baseline来做的。

从这里看来,博主有很好的编码习惯。

第一个_sparse_one.py

##由于机器内存问题,编码时分批进行处理

import pandas as pd

import lightgbm as lgb

from sklearn.model_selection import train_test_split

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.preprocessing import OneHotEncoder,LabelEncoder

from scipy import sparse

import os

import numpy as np

import time

import random

import warnings

warnings.filterwarnings("ignore")

##读取数据

print("Reading...")

data = pd.read_csv('data_preprocessing/train_test_merge.csv')

##划分训练与测试集

print('Index...')

train_part_index = list(data[(data['label']!=-1)&(data['n_parts']!=1)].index)

evals_index = list(data[(data['label']!=-1)&(data['n_parts']==1)].index)

test1_index = list(data[data['n_parts']==6].index)

test2_index = list(data[data['n_parts']==7].index)

train_part_y = data['label'].loc[train_part_index]

evals_y = data['label'].loc[evals_index]

print('Done')

##labelencoder

print('LabelEncoder...')

label_feature=['aid', 'advertiserId', 'campaignId', 'creativeId',

'creativeSize', 'adCategoryId', 'productId', 'productType', 'age',

'gender','education', 'consumptionAbility', 'LBS',

'os', 'carrier', 'house']

##简单来说 LabelEncoder 是对不连续的数字或者文本进行编号

for feature in label_feature:

s = time.time()

try:

data[feature] = LabelEncoder().fit_transform(data[feature].apply(int))

except:

data[feature] = LabelEncoder().fit_transform(data[feature])

print(feature,int(time.time()-s),'s')

print('Done')

##ct(上网连接类型)WIFI/2G/3G/4G特殊处理

print('Ct...')

value = []

ct_ = ['0','1','2','3','4']

ct_all = list(data['ct'].values)

for i in range(len(data)):

ct = ct_all[i]

va = []

for j in range(5):

if ct_[j] in ct:

va.append(1)

else:va.append(0)

value.append(va)

df = pd.DataFrame(value,columns=['ct0','ct1','ct2','ct3','ct4'])

print('Done')

print('Sparse...')

col = ['ct0','ct1','ct2','ct3','ct4']

train_part_x=df.loc[train_part_index][col]

evals_x=df.loc[evals_index][col]

test1_x=df.loc[test1_index][col]

test2_x=df.loc[test2_index][col]

df = []

print('OneHoting1...')

enc = OneHotEncoder()

#将变成稀疏矩阵特征分成feature1、2、3进行分成3个变成来处理,一个是为了节省空间,另一个当然是为了先预存npz文件

one_hot_feature1 = ['aid', 'advertiserId', 'campaignId', 'creativeId',

'creativeSize']

one_hot_feature2 = ['adCategoryId', 'productId', 'productType', 'age',

'gender','education']

one_hot_feature3 = ['consumptionAbility', 'LBS',

'os', 'carrier', 'house']

for feature in one_hot_feature1:

s = time.time()

enc.fit(data[feature].values.reshape(-1, 1))

arr = enc.transform(data.loc[train_part_index][feature].values.reshape(-1, 1))

train_part_x = sparse.hstack((train_part_x,arr))

arr = enc.transform(data.loc[evals_index][feature].values.reshape(-1, 1))

evals_x = sparse.hstack((evals_x,arr))

arr = enc.transform(data.loc[test1_index][feature].values.reshape(-1, 1))

test1_x = sparse.hstack((test1_x,arr))

arr = enc.transform(data.loc[test2_index][feature].values.reshape(-1, 1))

test2_x = sparse.hstack((test2_x,arr))

arr= []

del data[feature]

print(feature,int(time.time()-s),"s")

print("Saving...")

print('train_part_x...')

sparse.save_npz("data_preprocessing/train_part_x_sparse_one_1.npz",train_part_x)

print('evals_x...')

sparse.save_npz("data_preprocessing/evals_x_sparse_one_1.npz",evals_x)

print('test1_x...')

sparse.save_npz("data_preprocessing/test1_x_sparse_one_1.npz",test1_x)

print('test2_x...')

sparse.save_npz("data_preprocessing/test2_x_sparse_one_1.npz",test2_x)

print('Done')

print('OneHoting2...')

train_part_x = pd.DataFrame()

evals_x = pd.DataFrame()

test1_x = pd.DataFrame()

test2_x = pd.DataFrame()

#将变成稀疏矩阵特征分成feature1、2、3进行分成3个变成来处理,一个是为了节省空间,另一个当然是为了先预存npz文件

for feature in one_hot_feature2:

s = time.time()

enc.fit(data[feature].values.reshape(-1, 1))

arr = enc.transform(data.loc[train_part_index][feature].values.reshape(-1, 1))

train_part_x = sparse.hstack((train_part_x,arr))

arr = enc.transform(data.loc[evals_index][feature].values.reshape(-1, 1))

evals_x = sparse.hstack((evals_x,arr))

arr = enc.transform(data.loc[test1_index][feature].values.reshape(-1, 1))

test1_x = sparse.hstack((test1_x,arr))

arr = enc.transform(data.loc[test2_index][feature].values.reshape(-1, 1))

test2_x = sparse.hstack((test2_x,arr))

arr= []

del data[feature]

print(feature,int(time.time()-s),"s")

print("Saving...")

print('train_part_x...')

sparse.save_npz("data_preprocessing/train_part_x_sparse_one_2.npz",train_part_x)

print('evals_x...')

sparse.save_npz("data_preprocessing/evals_x_sparse_one_2.npz",evals_x)

print('test1_x...')

sparse.save_npz("data_preprocessing/test1_x_sparse_one_2.npz",test1_x)

print('test2_x...')

sparse.save_npz("data_preprocessing/test2_x_sparse_one_2.npz",test2_x)

print('Done')

print('Sparse...')

train_part_x = pd.DataFrame()

evals_x = pd.DataFrame()

test1_x = pd.DataFrame()

test2_x = pd.DataFrame()

#将变成稀疏矩阵特征分成feature1、2、3进行分成3个变成来处理,一个是为了节省空间,另一个当然是为了先预存npz文件

for feature in one_hot_feature3:

s = time.time()

enc.fit(data[feature].values.reshape(-1, 1))

arr = enc.transform(data.loc[train_part_index][feature].values.reshape(-1, 1))

train_part_x = sparse.hstack((train_part_x,arr))

arr = enc.transform(data.loc[evals_index][feature].values.reshape(-1, 1))

evals_x = sparse.hstack((evals_x,arr))

arr = enc.transform(data.loc[test1_index][feature].values.reshape(-1, 1))

test1_x = sparse.hstack((test1_x,arr))

arr = enc.transform(data.loc[test2_index][feature].values.reshape(-1, 1))

test2_x = sparse.hstack((test2_x,arr))

arr= []

del data[feature]

print(feature,int(time.time()-s),"s")

print("Saving...")

print('train_part_x...')

sparse.save_npz("data_preprocessing/train_part_x_sparse_one_3.npz",train_part_x)

print('evals_x...')

sparse.save_npz("data_preprocessing/evals_x_sparse_one_3.npz",evals_x)

print('test1_x...')

sparse.save_npz("data_preprocessing/test1_x_sparse_one_3.npz",test1_x)

print('test2_x...')

sparse.save_npz("data_preprocessing/test2_x_sparse_one_3.npz",test2_x)

print('Done')

print('CountVector1...')

train_part_x = pd.DataFrame()

evals_x = pd.DataFrame()

test1_x = pd.DataFrame()

test2_x = pd.DataFrame()

#第二步,将vector_feature特征进行countvectorizer()

vector_feature1 = ['marriageStatus','interest1', 'interest2', 'interest3', 'interest4','interest5']

vector_feature2 = ['kw1', 'kw2','kw3', 'topic1', 'topic2', 'topic3','appIdAction', 'appIdInstall']

cntv=CountVectorizer()

for feature in vector_feature1[:-1]:

s = time.time()

cntv.fit(data[feature])

arr = cntv.transform(data.loc[train_part_index][feature])

train_part_x = sparse.hstack((train_part_x,arr))

arr = cntv.transform(data.loc[evals_index][feature])

evals_x = sparse.hstack((evals_x,arr))

arr = cntv.transform(data.loc[test1_index][feature])

test1_x = sparse.hstack((test1_x,arr))

arr = cntv.transform(data.loc[test2_index][feature])

test2_x = sparse.hstack((test2_x,arr))

arr = []

del data[feature]

print(feature,int(time.time()-s),'s')

print("Saving...")

print('train_part_x...')

sparse.save_npz("data_preprocessing/train_part_x_sparse_one_4.npz",train_part_x)

print('evals_x...')

sparse.save_npz("data_preprocessing/evals_x_sparse_one_4.npz",evals_x)

print('test1_x...')

sparse.save_npz("data_preprocessing/test1_x_sparse_one_4.npz",test1_x)

print('test2_x...')

sparse.save_npz("data_preprocessing/test2_x_sparse_one_4.npz",test2_x)

print('Done')

import pandas as pd

import lightgbm as lgb

from sklearn.model_selection import train_test_split

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.preprocessing import OneHotEncoder,LabelEncoder

from scipy import sparse

import os

import numpy as np

import time

import random

import warnings

warnings.filterwarnings("ignore")

##读取数据

print("Reading...")

data = pd.read_csv('train_test_merge.csv')

##划分训练与测试集

data.columns

print('Dropping...')

label_feature= ['label','n_parts','interest5','kw1','kw2','kw3', 'topic1', 'topic2', 'topic3','appIdAction', 'appIdInstall']

data = data[label_feature]

print('Index...')

train_part_index = list(data[(data['label']!=-1)&(data['n_parts']!=1)].index)

evals_index = list(data[(data['label']!=-1)&(data['n_parts']==1)].index)

test1_index = list(data[data['n_parts']==6].index)

test2_index = list(data[data['n_parts']==7].index)

del data['label']

del data['n_parts']

data.loc[test1_index][label_feature[-2:]].isnull().sum()

data.loc[test2_index][label_feature[-2:]].isnull().sum()

print('Cntv...')

s = time.time()

train_part_x = pd.DataFrame()

evals_x = pd.DataFrame()

test1_x = pd.DataFrame()

test2_x = pd.DataFrame()

feature = 'interest5'

cntv=CountVectorizer()

cntv.fit(data[feature])

arr = cntv.transform(data.loc[train_part_index][feature])

train_part_x = sparse.hstack((train_part_x,arr))

arr = cntv.transform(data.loc[evals_index][feature])

evals_x = sparse.hstack((evals_x,arr))

arr = cntv.transform(data.loc[test1_index][feature])

test1_x = sparse.hstack((test1_x,arr))

arr = cntv.transform(data.loc[test2_index][feature])

test2_x = sparse.hstack((test2_x,arr))

arr = []

del data[feature]

print(feature,int(time.time()-s),'s')

print("Saving...")

print('train_part_x...')

sparse.save_npz("data_preprocessing/train_part_x_sparse_one_5.npz",train_part_x)

print('evals_x...')

sparse.save_npz("data_preprocessing/evals_x_sparse_one_5.npz",evals_x)

print('test1_x...')

sparse.save_npz("data_preprocessing/test1_x_sparse_one_5.npz",test1_x)

print('test2_x...')

sparse.save_npz("data_preprocessing/test2_x_sparse_one_5.npz",test2_x)

print('Done')

print('CountVector1...')

train_part_x = pd.DataFrame()

evals_x = pd.DataFrame()

test1_x = pd.DataFrame()

test2_x = pd.DataFrame()

num = 0

vector_feature2 = ['kw1', 'kw2','kw3', 'topic1', 'topic2', 'topic3','appIdAction', 'appIdInstall']

cntv=CountVectorizer()

for feature in vector_feature2:

print(feature)

s = time.time()

cntv.fit(data[feature])

arr = cntv.transform(data.loc[train_part_index][feature])

train_part_x = sparse.hstack((train_part_x,arr))

arr = cntv.transform(data.loc[evals_index][feature])

evals_x = sparse.hstack((evals_x,arr))

arr = cntv.transform(data.loc[test1_index][feature])

test1_x = sparse.hstack((test1_x,arr))

arr = cntv.transform(data.loc[test2_index][feature])

test2_x = sparse.hstack((test2_x,arr))

arr = []

del data[feature]

print(feature,int(time.time()-s),'s')

num+=1

if num%3==0:

k = int(num/3+5)

print("Saving...")

print(k)

print('train_part_x...',train_part_x.shape)

sparse.save_npz('data_preprocessing/train_part_x_sparse_one_'+str(k)+'.npz',train_part_x)

print('evals_x...',evals_x.shape)

sparse.save_npz('data_preprocessing/evals_x_sparse_one_'+str(k)+'.npz',evals_x)

print('test1_x...',test1_x.shape)

sparse.save_npz('data_preprocessing/test1_x_sparse_one_'+str(k)+'.npz',test1_x)

print('test2_x...',test2_x.shape)

sparse.save_npz('data_preprocessing/test2_x_sparse_one_'+str(k)+'.npz',test2_x)

print('Over')

train_part_x=pd.DataFrame()

evals_x=pd.DataFrame()

test1_x=pd.DataFrame()

test2_x=pd.DataFrame()

print("Saving...")

print(8)

print('train_part_x...',train_part_x.shape)

sparse.save_npz('data_preprocessing/train_part_x_sparse_one_'+str(8)+'.npz',train_part_x)

print('evals_x...',evals_x.shape)

sparse.save_npz('data_preprocessing/evals_x_sparse_one_'+str(8)+'.npz',evals_x)

print('test1_x...',test1_x.shape)

sparse.save_npz('data_preprocessing/test1_x_sparse_one_'+str(8)+'.npz',test1_x)

print('test2_x...',test2_x.shape)

sparse.save_npz('data_preprocessing/test2_x_sparse_one_'+str(8)+'.npz',test2_x)

print('Over')

接下来的将是对原来稀疏矩阵特征的筛选。这对应的文件为002_sparse_one_select.py。这里的主要步骤是 读取稀疏矩阵文件→训练LGB→获取重要性大于0的特征→训练loss→筛选出最重要的特征数目→重新生成稀疏矩阵特征。over

##一维稀疏矩阵特征选择

import pandas as pd

import lightgbm as lgb

from sklearn.model_selection import train_test_split

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.preprocessing import OneHotEncoder,LabelEncoder

from scipy import sparse

print('这真真是、最终版稀疏矩阵特征筛选了')

print('天灵灵地灵灵、太上老君来显灵')

print('两段代码两段代码跑得块、跑得块')

print('一段没有bug、一段出了好结果')

print('真开心、真高兴')

print('Reading...')

train_part_x = pd.DataFrame()

evals_x = pd.DataFrame()

train_index = pd.read_csv('train_index_2.csv',header=None)[0].values.tolist()

##读取所需要进行筛选的稀疏矩阵特征,以及所需要的测试集

for i in range(1,9):

train_part_x = sparse.hstack((train_part_x,sparse.load_npz('data_preprocessing/train_part_x_sparse_one_'+str(i)+'.npz').tocsr()[train_index,:])).tocsc()

evals_x = sparse.hstack((evals_x,sparse.load_npz('data_preprocessing/evals_x_sparse_one_'+str(i)+'.npz'))).tocsc()

print('读到了第',i,'个训练集特征文件')

print("Sparse is ready")

print('Label...')

##然后读取label文件

train_part_y=pd.read_csv('data_preprocessing/train_part_y.csv',header=None).loc[train_index]

evals_y=pd.read_csv('data_preprocessing/evals_y.csv',header=None)

##利用特征重要性筛选特征

import pandas as pd

from lightgbm import LGBMClassifier

import time

#这里采用的是 roc_auc_score得分进行筛选

from sklearn.metrics import roc_auc_score

import warnings

warnings.filterwarnings('ignore')

clf = LGBMClassifier(boosting_type='gbdt',

num_leaves=31, max_depth=-1,

learning_rate=0.1, n_estimators=10000,

subsample_for_bin=200000, objective=None,

class_weight=None, min_split_gain=0.0,

min_child_weight=0.001,

min_child_samples=20, subsample=1.0, subsample_freq=1,

colsample_bytree=1.0,

reg_alpha=0.0, reg_lambda=0.0, random_state=None,

n_jobs=-1, silent=True)

print('Fiting...')

clf.fit(train_part_x, train_part_y, eval_set=[(train_part_x, train_part_y),(evals_x, evals_y)],

eval_names =['train','valid'],

eval_metric='auc',early_stopping_rounds=100)

##获得稀疏矩阵特征在lgb模型的重要性

se = pd.Series(clf.feature_importances_)

se = se[se>0]

##将特征重要性进行排序

col =list(se.sort_values(ascending=False).index)

pd.Series(col).to_csv('data_preprocessing/col_sort_one.csv',index=False)

##打印出来不为零的特征以及个数

print('特征重要性不为零的编码特征有',len(se),'个')

n = clf.best_iteration_

baseloss = clf.best_score_['valid']['auc']

print('baseloss',baseloss)

#通过筛选特征找出最优特征个数

clf = LGBMClassifier(boosting_type='gbdt',

num_leaves=31, max_depth=-1,

learning_rate=0.1, n_estimators=n,

subsample_for_bin=200000, objective=None,

class_weight=None, min_split_gain=0.0,

min_child_weight=0.001,

min_child_samples=20, subsample=1.0, subsample_freq=1,

colsample_bytree=1.0,

reg_alpha=0.0, reg_lambda=0.0, random_state=None,

n_jobs=-1, silent=True)

def evalsLoss(cols):

print('Runing...')

s = time.time()

clf.fit(train_part_x[:,cols],train_part_y)

ypre = clf.predict_proba(evals_x[:,cols])[:,1]

print(time.time()-s,"s")

return roc_auc_score(evals_y[0].values,ypre)

print('开始进行特征选择计算...')

all_num = int(len(se)/100)*100

print('共有',all_num,'个待计算特征')

loss = []

break_num = 0

for i in range(100,all_num,100):

loss.append(evalsLoss(col[:i]))

if loss[-1]>baseloss:

best_num = i

baseloss = loss[-1]

break_num+=1

print('前',i,'个特征的得分为',loss[-1],'而全量得分',baseloss)

print('\n')

if break_num==2:

break

print('筛选出来最佳特征个数为',best_num,'这下子训练速度终于可以大大提升了')

best_num = len(col)

#通过找到最优特征个数,获得我们所需的稀疏矩阵特征

train_part_x = pd.DataFrame()

evals_x = pd.DataFrame()

for i in range(1,9):

train_part_x = sparse.hstack((train_part_x,sparse.load_npz('data_preprocessing/train_part_x_sparse_one_'+str(i)+'.npz'))).tocsc()

evals_x = sparse.hstack((evals_x,sparse.load_npz('data_preprocessing/evals_x_sparse_one_'+str(i)+'.npz'))).tocsc()

print('读到了第',i,'个训练集特征文件')

print('Saving train...')

print('Saving train part...')

sparse.save_npz("data_preprocessing/train_part_x_sparse_one_select.npz",train_part_x[:,col[:best_num]])

print('Saving evals...')

sparse.save_npz("data_preprocessing/evals_x_sparse_one_select.npz",evals_x[:,col[:best_num]])

train_part_x = []

evals_x = []

print('Reading test...')

test1_x = pd.DataFrame()

test2_x = pd.DataFrame()

for i in range(1,9):

test1_x = sparse.hstack((test1_x,sparse.load_npz('data_preprocessing/test1_x_sparse_one_'+str(i)+'.npz'))).tocsc()

test2_x = sparse.hstack((test2_x,sparse.load_npz('data_preprocessing/test2_x_sparse_one_'+str(i)+'.npz'))).tocsc()

print('读到了第',i,'个测试集特征文件')

print('Saving test...')

print('Saving test1...')

sparse.save_npz("data_preprocessing/test1_x_sparse_one_select.npz",test1_x[:,col[:best_num]])

print('Saving test2...')

sparse.save_npz("data_preprocessing/test2_x_sparse_one_select.npz",test2_x[:,col[:best_num]])

print('我的天终于筛选存完了')003_sparse_two.py和003_sparse_two_select.py这两个文件我们可以看到是将id等类特征进行组合,并生成稀疏矩阵,并把稀疏矩阵进行筛选特征的重要性和one有些重复,这里就不再重复了。

458

458

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?