I. Architecture plan

1. Operating system: CentOS 6.8

2. Elastic Stack 5.0.1

3. Search Guard 5.0.1

4. Hosts: 192.168.5.251 (node-1), 192.168.5.252 (node-2), 192.168.5.253 (node-3)

II. Software downloads

Elastic Stack: https://www.elastic.co/downloads

Search Guard: https://github.com/floragunncom/search-guard/tree/es-5.0.1

Search Guard SSL: https://github.com/floragunncom/search-guard-ssl/tree/5.1.1

III. Build the Elasticsearch cluster

1. Synchronize the clocks. Run this on all three nodes.

[root@localhost ~]# ntpdate time.windows.com

8 Jan 10:43:19 ntpdate[1458]: step time server 52.169.179.91 offset -28798.105134 sec

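2. Install the packages. Run this on all three nodes. Note that in the transcript below Logstash is installed before the JDK in the same rpm transaction, so its post-install scriptlet reports that no Java binary was found; installing the JDK package first avoids that warning.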

[root@localhost elk]# ls

elasticsearch-5.0.1.rpm filebeat-5.0.1-x86_64.rpm jdk-8u101-linux-x64.rpm kibana-5.0.1-x86_64.rpm logstash-5.0.1.rpm

[root@localhost elk]# rpm -ivh *.rpm

warning: elasticsearch-5.0.1.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY

Preparing... ########################################### [100%]

1:logstash ########################################### [ 20%]

Could not find any executable java binary. Please install java in your PATH or set JAVA_HOME.

warning: %post(logstash-1:5.0.1-1.noarch) scriptlet failed, exit status 1

2:kibana ########################################### [ 40%]

3:jdk1.8.0_101 ########################################### [ 60%]

Unpacking JAR files...

tools.jar...

plugin.jar...

javaws.jar...

deploy.jar...

rt.jar...

jsse.jar...

charsets.jar...

localedata.jar...

4:filebeat ########################################### [ 80%]

Creating elasticsearch group... OK

Creating elasticsearch user... OK

5:elasticsearch ########################################### [100%]

### NOT starting on installation, please execute the following statements to configure elasticsearch service to start automatically using chkconfig

sudo chkconfig --add elasticsearch

### You can start elasticsearch service by executing

sudo service elasticsearch start

3. Set the nproc value in 90-nproc.conf to 2048 or higher. Run this on all three nodes.

[root@localhost elk]# vim /etc/security/limits.d/90-nproc.conf

* soft nproc 2048
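Before starting Elasticsearch, the edited limits file can be checked for the required value. The following is a minimal Python sketch, not part of the original setup: the helper name `soft_nproc` is hypothetical, and it assumes the usual four-column layout of limits files (domain, type, item, value).

```python
from typing import Optional


def soft_nproc(limits_text: str, domain: str = "*") -> Optional[int]:
    """Return the soft nproc limit for `domain`, or None if it is not set.

    "unlimited", "infinity" and "-1" are all reported as -1.
    """
    result = None
    for raw in limits_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        fields = line.split()
        if len(fields) != 4:
            continue  # blank or malformed line
        dom, kind, item, value = fields
        # A type of "-" sets both the soft and the hard limit.
        if dom == domain and kind in ("soft", "-") and item == "nproc":
            result = -1 if value in ("unlimited", "infinity", "-1") else int(value)
    return result


sample = "* soft nproc 2048\nroot soft nproc unlimited\n"
limit = soft_nproc(sample)
print("ok" if limit is not None and (limit == -1 or limit >= 2048) else "too low")
```

To check a real host, pass the contents of /etc/security/limits.d/90-nproc.conf instead of the sample string.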

4. Edit the Elasticsearch settings to form the cluster. Run this on all three nodes.

[root@localhost elk]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:ba:3a:b9 brd ff:ff:ff:ff:ff:ff

inet 192.168.5.253/24 brd 192.168.5.255 scope global eth1

inet6 fe80::20c:29ff:feba:3ab9/64 scope link

valid_lft forever preferred_lft forever

[root@localhost elk]# vim /etc/elasticsearch/elasticsearch.yml

cluster.name: elastic-cluster

node.name: node-3

network.host: 192.168.5.253

http.port: 9200

discovery.zen.ping.unicast.hosts: ["192.168.5.251", "192.168.5.252", "192.168.5.253"]

discovery.zen.minimum_master_nodes: 2

gateway.recover_after_nodes: 3
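The value 2 for discovery.zen.minimum_master_nodes matches the usual quorum rule for zen discovery: (number of master-eligible nodes / 2) + 1, rounded down. The short Python sketch below only illustrates that arithmetic; the function name is hypothetical.

```python
def minimum_master_nodes(master_eligible: int) -> int:
    """Quorum size for a cluster with `master_eligible` master-eligible nodes."""
    if master_eligible < 1:
        raise ValueError("a cluster needs at least one master-eligible node")
    return master_eligible // 2 + 1


hosts = ["192.168.5.251", "192.168.5.252", "192.168.5.253"]
print(minimum_master_nodes(len(hosts)))  # prints 2
```

With three nodes, a quorum of 2 lets the cluster survive the loss of one node while preventing two isolated halves from each electing a master.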

5. Copy the edited elasticsearch.yml from this host to the other two nodes.

[root@localhost elk]# scp /etc/elasticsearch/elasticsearch.yml 192.168.5.251:/etc/elasticsearch/

[root@localhost elk]# scp /etc/elasticsearch/elasticsearch.yml 192.168.5.252:/etc/elasticsearch/

6. On 192.168.5.251 and 192.168.5.252, change node.name and network.host so that they match the local host.

[root@localhost elk]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:07:50:12 brd ff:ff:ff:ff:ff:ff

inet 192.168.5.251/24 brd 192.168.5.255 scope global eth1

inet6 fe80::20c:29ff:fe07:5012/64 scope link

valid_lft forever preferred_lft forever

[root@localhost elk]# vim /etc/elasticsearch/elasticsearch.yml

node.name: node-1

network.host: 192.168.5.251

[root@localhost elk]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:94:a0:d9 brd ff:ff:ff:ff:ff:ff

inet 192.168.5.252/24 brd 192.168.5.255 scope global eth1

inet6 fe80::20c:29ff:fe94:a0d9/64 scope link

valid_lft forever preferred_lft forever

[root@localhost elk]# vim /etc/elasticsearch/elasticsearch.yml

node.name: node-2

network.host: 192.168.5.252
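The per-node edits above touch only two keys, so they can also be generated from the node-3 file instead of being typed by hand. This is a minimal Python sketch under that assumption; the `customize` helper is hypothetical and works on the file content as plain text.

```python
import re


def customize(config_text: str, node_name: str, host: str) -> str:
    """Return config_text with node.name and network.host set for one node."""
    text = re.sub(r"^node\.name:.*$", lambda m: f"node.name: {node_name}",
                  config_text, flags=re.MULTILINE)
    text = re.sub(r"^network\.host:.*$", lambda m: f"network.host: {host}",
                  text, flags=re.MULTILINE)
    return text


# Shortened copy of the node-3 settings shown earlier.
base = (
    "cluster.name: elastic-cluster\n"
    "node.name: node-3\n"
    "network.host: 192.168.5.253\n"
    "http.port: 9200\n"
)
nodes = {"node-1": "192.168.5.251", "node-2": "192.168.5.252"}
for name, ip in nodes.items():
    print(f"--- {name} ---")
    print(customize(base, name, ip))
```

All other settings, such as cluster.name and the unicast host list, stay identical across the three nodes.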

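7. Verify the cluster. Output like the following, listing all three nodes, means the cluster formed successfully.

Open in a browser: http://192.168.5.253:9200/_cat/nodes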

192.168.5.252 4 94 3 0.43 0.29 0.18 mdi * node-2

192.168.5.251 3 93 2 0.74 0.53 0.32 mdi - node-1

192.168.5.253 3 93 4 0.54 0.44 0.29 mdi - node-3
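The same check can be scripted. The Python sketch below parses text in the format shown above; it assumes, as in that output, that the last two columns are the master marker (`*` for the elected master) and the node name. The function name is hypothetical.

```python
def parse_cat_nodes(text: str) -> list:
    """Parse _cat/nodes text output into a list of small dicts."""
    nodes = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) < 3:
            continue  # skip blank or truncated lines
        nodes.append({
            "ip": parts[0],
            "name": parts[-1],
            "is_master": parts[-2] == "*",
        })
    return nodes


sample = """\
192.168.5.252 4 94 3 0.43 0.29 0.18 mdi * node-2
192.168.5.251 3 93 2 0.74 0.53 0.32 mdi - node-1
192.168.5.253 3 93 4 0.54 0.44 0.29 mdi - node-3
"""
nodes = parse_cat_nodes(sample)
masters = [n["name"] for n in nodes if n["is_master"]]
print(len(nodes), masters)
```

A healthy three-node cluster should report three nodes and exactly one master.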

III. Configure the Search Guard plugin for Elasticsearch

1. Install Search Guard. Run this on all three nodes.

[root@localhost elk]# cd /usr/share/elasticsearch/

[root@localhost elasticsearch]# bin/elasticsearch-plugin install -b com.floragunn:search-guard-5:5.0.1-9

2. Download search-guard-ssl. Any one node will do; node-3 is used here.

[root@localhost ~]# git clone https://github.com/floragunncom/search-guard-ssl.git

Initialized empty Git repository in /root/search-guard-ssl/.git/

remote: Counting objects: 4870, done.

Receiving objects: 100% (4870/4870), 998.69 KiB | 63 KiB/s, done.

remote: Total 4870 (delta 0), reused 0 (delta 0), pack-reused 4870

Resolving deltas: 100% (2306/2306), done.

[root@localhost ~]# cd search-guard-ssl/example-pki-scripts/

/root/search-guard-ssl/example-pki-scripts

[root@localhost example-pki-scripts]# ls

clean.sh etc example.sh gen_client_node_cert.sh gen_node_cert.sh gen_root_ca.sh

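3. In example.sh, change the 0 on the gen_node_cert.sh line to 3 so that the script generates certificates for node-1, node-2, and node-3. Any one node will do; node-3 is used here.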

[root@localhost example-pki-scripts]# cat example.sh

#!/bin/bash

set -e

./clean.sh

./gen_root_ca.sh capass changeit

./gen_node_cert.sh 3 changeit capass && ./gen_node_cert.sh 1 changeit capass && ./gen_node_cert.sh 2 changeit capass

./gen_client_node_cert.sh spock changeit capass

./gen_client_node_cert.sh kirk changeit capass

[root@localhost example-pki-scripts]# ./example.sh

[root@localhost example-pki-scripts]# ls

ca etc gen_root_ca.sh kirk.csr kirk-signed.pem node-1-signed.pem node-2-signed.pem node-3-signed.pem spock.csr spock-signed.pem

certs example.sh kirk.all.pem kirk.key.pem node-1.csr node-2.csr node-3.csr spock.all.pem spock.key.pem truststore.jks

clean.sh gen_client_node_cert.sh kirk.crtfull.pem kirk-keystore.jks node-1-keystore.jks node-2-keystore.jks node-3-keystore.jks spock.crtfull.pem spock-keystore.jks

crl gen_node_cert.sh kirk.crt.pem kirk-keystore.p12 node-1-keystore.p12 node-2-keystore.p12 node-3-keystore.p12 spock.crt.pem spock-keystore.p12

4. Copy truststore.jks and node-x-keystore.jks to each node. The x in node-x-keystore.jks is the node name of the target host.

[root@localhost example-pki-scripts]# cp node-3-keystore.jks truststore.jks /etc/elasticsearch/

[root@localhost example-pki-scripts]# scp node-2-keystore.jks truststore.jks 192.168.5.252:/etc/elasticsearch/

[root@localhost example-pki-scripts]# scp node-1-keystore.jks truststore.jks 192.168.5.251:/etc/elasticsearch/

5. Configure TLS for the transport traffic between the Elasticsearch nodes, then restart. Configure all three nodes; the x in node-x-keystore.jks is the node name of the host.

[root@localhost example-pki-scripts]# vim /etc/elasticsearch/elasticsearch.yml

searchguard.ssl.transport.keystore_filepath: node-3-keystore.jks

searchguard.ssl.transport.keystore_password: changeit

searchguard.ssl.transport.truststore_filepath: truststore.jks

searchguard.ssl.transport.truststore_password: changeit

searchguard.ssl.transport.enforce_hostname_verification: false

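After the restart, traffic between the Elasticsearch nodes is encrypted, but the log shows the error below because the Search Guard index has not been initialized yet.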

[root@localhost example-pki-scripts]# tail -f /var/log/elasticsearch/elastic-cluster.log

[2017-01-08T12:21:29,918][ERROR][c.f.s.a.BackendRegistry ] Not yet initialized (you may need to run sgadmin)

6. Initialize the Search Guard index and set up accounts. Any node will do; node-3 is used here.

Copy the kirk-keystore.jks certificate:

[root@localhost example-pki-scripts]# pwd

/root/search-guard-ssl/example-pki-scripts

[root@localhost example-pki-scripts]# cp kirk-keystore.jks /usr/share/elasticsearch/plugins/search-guard-5/sgconfig/

Add the following settings:

[root@localhost example-pki-scripts]# vim /etc/elasticsearch/elasticsearch.yml

searchguard.authcz.admin_dn:

- "CN=kirk, OU=client, O=client, L=Test, C=DE"

Restart the service:

[root@localhost example-pki-scripts]# service elasticsearch restart

Stopping elasticsearch: [ OK ]

Starting elasticsearch: [ OK ]

Initialize the Search Guard index:

[root@localhost search-guard-5]# cd /usr/share/elasticsearch/plugins/search-guard-5

[root@localhost search-guard-5]# tools/sgadmin.sh -ts /etc/elasticsearch/truststore.jks -tspass changeit -ks sgconfig/kirk-keystore.jks -kspass changeit -cd sgconfig/ -icl -nhnv -h 192.168.5.253

Search Guard Admin v5

Will connect to 192.168.5.253:9300 ... done

Contacting elasticsearch cluster 'elasticsearch' and wait for YELLOW clusterstate ...

Clustername: elastic-cluster

Clusterstate: GREEN

Number of nodes: 3

Number of data nodes: 3

searchguard index does not exists, attempt to create it ... done (auto expand replicas is on)

Populate config from /usr/share/elasticsearch/plugins/search-guard-5/sgconfig

Will update 'config' with sgconfig/sg_config.yml

SUCC: Configuration for 'config' created or updated

Will update 'roles' with sgconfig/sg_roles.yml

SUCC: Configuration for 'roles' created or updated

Will update 'rolesmapping' with sgconfig/sg_roles_mapping.yml

SUCC: Configuration for 'rolesmapping' created or updated

Will update 'internalusers' with sgconfig/sg_internal_users.yml

SUCC: Configuration for 'internalusers' created or updated

Will update 'actiongroups' with sgconfig/sg_action_groups.yml

SUCC: Configuration for 'actiongroups' created or updated

Done with success

The file sg_internal_users.yml holds the default users and their password hashes:

[root@localhost sgconfig]# head -n 5 sg_internal_users.yml

# This is the internal user database

# The hash value is a bcrypt hash and can be generated with plugin/tools/hash.sh

admin:

hash: $2a$12$VcCDgh2NDk07JGN0rjGbM.Ad41qVR/YFJcgHp0UGns5JDymv..TOG

#password is: admin

[root@localhost sgconfig]# pwd

/usr/share/elasticsearch/plugins/search-guard-5/sgconfig

Open http://192.168.5.253:9200 in a browser. It prompts for credentials; log in with the default user admin and password admin. A successful login means the setup works.

7. Configure the REST API to use HTTPS. Configure all three nodes; the x in node-x-keystore.jks is the node name of the host.

[root@localhost elk]# vim /etc/elasticsearch/elasticsearch.yml

searchguard.ssl.http.enabled: true

searchguard.ssl.http.keystore_filepath: node-3-keystore.jks

searchguard.ssl.http.keystore_password: changeit

searchguard.ssl.http.truststore_filepath: truststore.jks

searchguard.ssl.http.truststore_password: changeit

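Open https://192.168.5.253:9200 in a browser. It prompts for credentials; log in with the default user admin and password admin. A successful login means the setup works.

Unencrypted requests to http://192.168.5.253:9200 are now refused.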
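The browser check can also be done from a script. The Python sketch below uses only the standard library; the function names are hypothetical, and certificate verification is switched off only because the certificates here come from the example PKI scripts. Do not reuse that part with real certificates.

```python
import base64
import ssl
import urllib.request


def basic_auth_header(user: str, password: str) -> str:
    """Build the value of an HTTP Basic Authorization header."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def build_request(url: str, user: str, password: str) -> urllib.request.Request:
    """Create a request object that carries the Basic auth header."""
    req = urllib.request.Request(url)
    req.add_header("Authorization", basic_auth_header(user, password))
    return req


def demo_tls_context() -> ssl.SSLContext:
    """TLS context that skips verification (demo certificates only)."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False  # must be disabled before verify_mode is changed
    ctx.verify_mode = ssl.CERT_NONE
    return ctx


def fetch_status(url: str, user: str, password: str) -> int:
    """Return the HTTP status code for an authenticated GET (needs network access)."""
    req = build_request(url, user, password)
    with urllib.request.urlopen(req, context=demo_tls_context(), timeout=5) as resp:
        return resp.status


print(basic_auth_header("admin", "admin"))  # prints Basic YWRtaW46YWRtaW4=
```

On a machine that can reach the cluster, calling fetch_status("https://192.168.5.253:9200", "admin", "admin") should return 200 if the login works.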

IV. Configure Kibana. Any node will do; node-3 is used here.

1. Edit kibana.yml.

[root@localhost kibana]# vim /etc/kibana/kibana.yml

server.port: 5601

server.host: "192.168.5.253"

elasticsearch.url: "https://192.168.5.253:9200"

elasticsearch.username: "kibanaserver"

elasticsearch.password: "kibanaserver"

elasticsearch.ssl.verify: false

2. Edit the Kibana Console plugin settings. Without this change, the Kibana Console cannot be used.

[root@localhost kibana]# vim /usr/share/kibana/src/core_plugins/console/index.js

ssl: {

verify: false // default: true

}

3. Restart Kibana. It prompts for credentials; log in with admin / admin.

V. Enable Search Guard audit logging

1. Download the jar file that the audit log module depends on from https://github.com/floragunncom/search-guard-module-auditlog/wiki

2. Copy the downloaded file to every node, then restart.

cp /root/dlic-search-guard-module-auditlog-5.0-3-jar-with-dependencies.jar /usr/share/elasticsearch/plugins/search-guard-5/

[root@localhost search-guard-5]# scp dlic-search-guard-module-auditlog-5.0-3-jar-with-dependencies.jar 192.168.5.251:/usr/share/elasticsearch/plugins/search-guard-5/

[root@localhost search-guard-5]# scp dlic-search-guard-module-auditlog-5.0-3-jar-with-dependencies.jar 192.168.5.252:/usr/share/elasticsearch/plugins/search-guard-5/

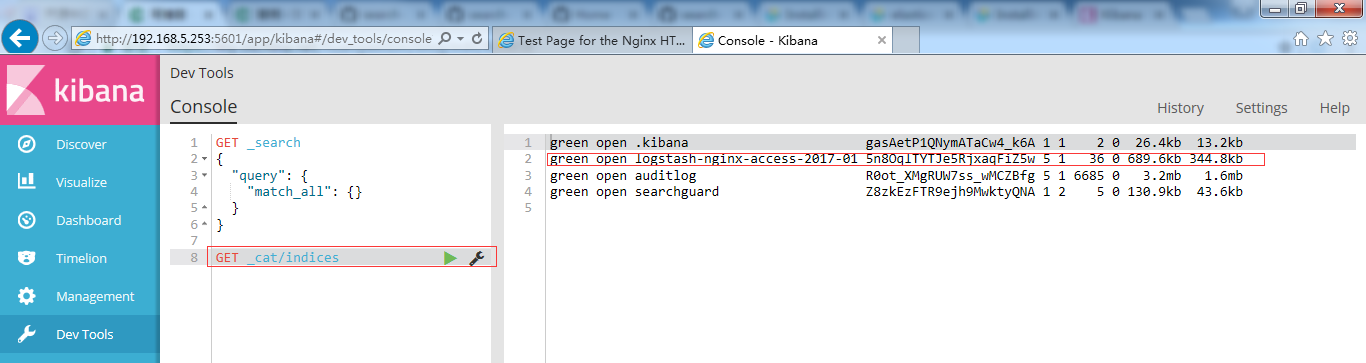

3. Run the following command in the Kibana Console. An auditlog index in the output means audit logging works:

GET _cat/indices

green open auditlog R0ot_XMgRUW7ss_wMCZBfg 5 1 119 0 404.2kb 218.2kb

VI. Configure Logstash with Filebeat to collect nginx logs. Any node will do; node-3 is used here.

1. Configure Logstash.

[root@localhost conf.d]# cat /etc/logstash/conf.d/logstash.conf

input {

beats {

port => "5044"

}

}

filter {

if [type] == "nginx-access" {

json {

source => "message"

}

geoip {

source => "remote_addr"

target => "geoip"

}

mutate {

convert => { "request_time" => "float" }

convert => { "upstream_response_time" => "float" }

convert => { "body_bytes_sent" => "integer" }

}

}

}

output {

if [type] == "nginx-access" {

elasticsearch {

hosts => ["192.168.5.251:9200","192.168.5.252:9200","192.168.5.253:9200"]

user => "logstash"

password => "logstash"

ssl => true

ssl_certificate_verification => false

truststore => "/etc/elasticsearch/truststore.jks"

truststore_password => "changeit"

index => "logstash-nginx-access-%{+YYYY-MM}"

}

stdout { codec => rubydebug }

}

}
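The index option above ends in a date pattern, %{+YYYY-MM}, which Logstash fills in from each event's @timestamp (kept in UTC), so events land in one index per month. The Python sketch below only imitates that naming rule to show which index a given log time maps to; the function name is hypothetical.

```python
from datetime import datetime, timezone


def monthly_index(timestamp_iso: str, prefix: str = "logstash-nginx-access-") -> str:
    """Return the index name for an ISO 8601 timestamp, using its UTC year and month."""
    ts = datetime.fromisoformat(timestamp_iso).astimezone(timezone.utc)
    return prefix + ts.strftime("%Y-%m")


print(monthly_index("2017-01-08T16:23:55+08:00"))  # logstash-nginx-access-2017-01
print(monthly_index("2017-02-01T03:00:00+08:00"))  # logstash-nginx-access-2017-01
```

The second call shows the effect of UTC: a request logged in the early hours of 1 February local time (+08:00) still belongs to the January index.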

2. Configure Filebeat.

[root@localhost conf.d]# cat /etc/filebeat/filebeat.yml | grep -v -E ".*\#|^$"

filebeat.prospectors:

- input_type: log

  paths:

    - /var/log/nginx/access.log

  document_type: nginx-access

output.logstash:

  hosts: ["192.168.5.253:5044"]

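3. Add a JSON log format to nginx and remove the default log format. Note that the "request_method " key below contains a trailing space, which also shows up in the resulting field name; remove the space if you want a clean field name.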

log_format main '{"@timestamp":"$time_iso8601",'

'"@version":"1",'

'"remote_addr":"$remote_addr",'

'"remote_user":"$remote_user",'

'"http_host":"$http_host",'

'"request_method ":"$request_method",'

'"uri":"$uri",'

'"query_string":"$query_string",'

'"status":"$status",'

'"body_bytes_sent":"$body_bytes_sent",'

'"http_referer":"$http_referer",'

'"upstream_status":"$upstream_status",'

'"upstream_addr":"$upstream_addr",'

'"request_time":"$request_time",'

'"upstream_response_time":"$upstream_response_time",'

'"http_user_agent":"$http_user_agent",'

'"http_cdn_src_ip":"$http_cdn_src_ip",'

'"x_forword_for":"$http_x_forwarded_for"}';

4. Restart nginx, send it a request, and check that the new log entries have the following format:

[root@localhost conf.d]# tail -n 1 /var/log/nginx/access.log

{"@timestamp":"2017-01-08T16:23:55+08:00","@version":"1","remote_addr":"192.168.5.1","remote_user":"-","http_host":"192.168.5.253","request_method ":"GET","uri":"/poweredby.png","query_string":"-","status":"304","body_bytes_sent":"0","http_referer":"http://192.168.5.253/","upstream_status":"-","upstream_addr":"-","request_time":"0.000","upstream_response_time":"-","http_user_agent":"Mozilla/5.0 (Windows NT 6.1; WOW64; Trident/7.0; rv:11.0) like Gecko","http_cdn_src_ip":"-","x_forword_for":"-"}
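Because the Logstash json filter depends on every line being valid JSON, it can help to check a log line before wiring up the rest of the pipeline. The Python sketch below is one way to do that; the helper name and the list of required fields are assumptions chosen to match the fields the Logstash filter uses.

```python
import json

# Fields that the Logstash filter in this setup reads or converts.
REQUIRED = ("remote_addr", "request_time", "upstream_response_time", "body_bytes_sent")


def check_log_line(line: str):
    """Return (ok, problems) for one access-log line."""
    try:
        event = json.loads(line)
    except json.JSONDecodeError as exc:
        return False, [f"not valid JSON: {exc}"]
    if not isinstance(event, dict):
        return False, ["not a JSON object"]
    problems = [f"missing field: {key}" for key in REQUIRED if key not in event]
    problems += [f"field name has surrounding whitespace: {key!r}"
                 for key in event if key != key.strip()]
    return not problems, problems


# Shortened version of the log line shown above.
sample = ('{"remote_addr":"192.168.5.1","request_method ":"GET",'
          '"body_bytes_sent":"0","request_time":"0.000",'
          '"upstream_response_time":"-"}')
ok, problems = check_log_line(sample)
print(ok, problems)
```

For the sample line the check fails only because of the trailing space in the "request_method " key, which comes from the log_format definition.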

5. Start Logstash, then start Filebeat.

[root@localhost conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/logstash.conf -r

[root@localhost conf.d]# service filebeat start

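6. With Logstash running, access nginx. Events like the following mean that Logstash and Filebeat are configured correctly. (To stop printing events to the screen, remove the stdout { codec => rubydebug } output.) The _geoip_lookup_failure tag is expected here because the client address 192.168.5.1 is a private address with no GeoIP entry.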

{

"upstream_addr" => "-",

"body_bytes_sent" => 0,

"source" => "/var/log/nginx/access.log",

"type" => "nginx-access",

"http_host" => "192.168.5.253",

"http_user_agent" => "Mozilla/5.0 (Windows NT 6.1; WOW64; Trident/7.0; rv:11.0) like Gecko",

"remote_user" => "-",

"upstream_status" => "-",

"request_time" => 0.0,

"request_method " => "GET",

"http_cdn_src_ip" => "-",

"@version" => "1",

"beat" => {

"hostname" => "localhost.localdomain",

"name" => "localhost.localdomain",

"version" => "5.0.1"

},

"host" => "localhost.localdomain",

"remote_addr" => "192.168.5.1",

"geoip" => {},

"offset" => 7838,

"input_type" => "log",

"x_forword_for" => "-",

"message" => "{\"@timestamp\":\"2017-01-08T16:23:55+08:00\",\"@version\":\"1\",\"remote_addr\":\"192.168.5.1\",\"remote_user\":\"-\",\"http_host\":\"192.168.5.253\",\"request_method \":\"GET\",\"uri\":\"/nginx-logo.png\",\"query_string\":\"-\",\"status\":\"304\",\"body_bytes_sent\":\"0\",\"http_referer\":\"http://192.168.5.253/\",\"upstream_status\":\"-\",\"upstream_addr\":\"-\",\"request_time\":\"0.000\",\"upstream_response_time\":\"-\",\"http_user_agent\":\"Mozilla/5.0 (Windows NT 6.1; WOW64; Trident/7.0; rv:11.0) like Gecko\",\"http_cdn_src_ip\":\"-\",\"x_forword_for\":\"-\"}",

"uri" => "/nginx-logo.png",

"tags" => [

[0] "beats_input_codec_plain_applied",

[1] "_geoip_lookup_failure"

],

"@timestamp" => 2017-01-08T08:23:55.000Z,

"http_referer" => "http://192.168.5.253/",

"upstream_response_time" => 0.0,

"query_string" => "-",

"status" => "304"

}

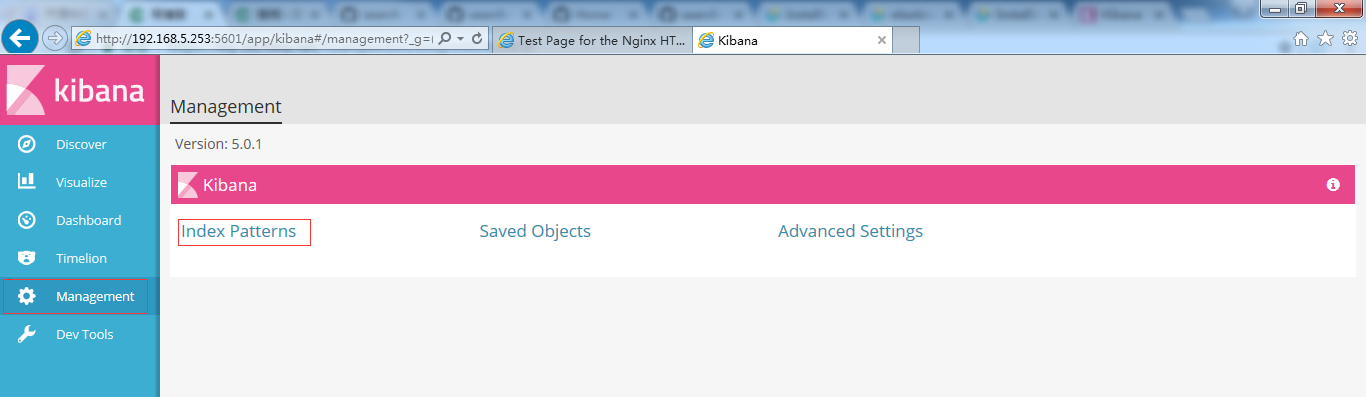

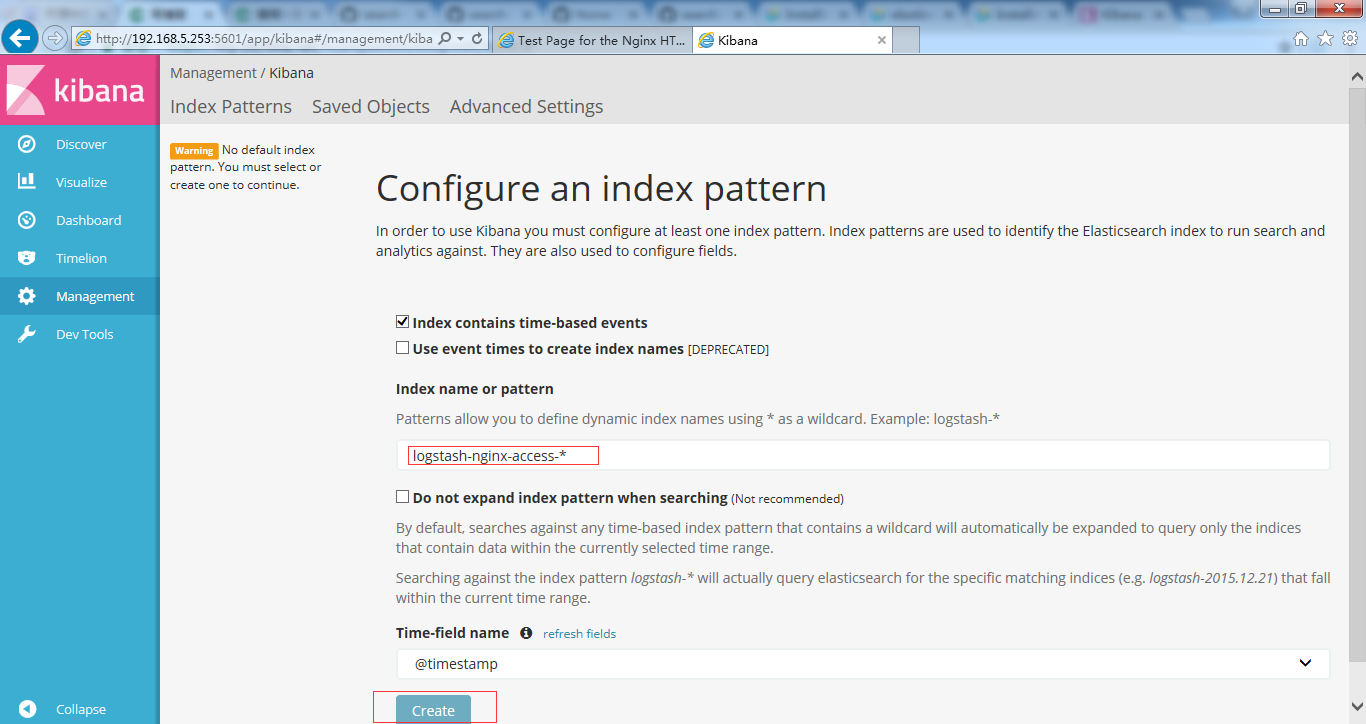

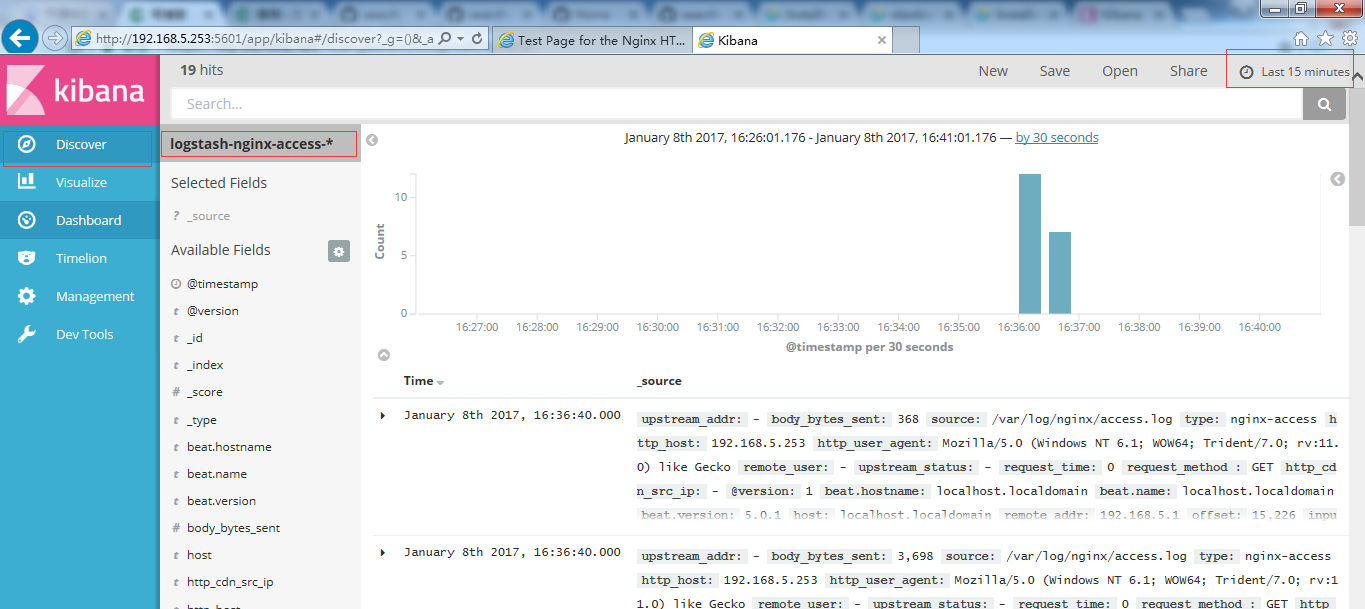

7. Add nginx log visualizations in Kibana.

References:

Search Guard official documentation: https://github.com/floragunncom/search-guard-docs

Elastic Stack official documentation: https://www.elastic.co/guide/index.html
