My Eclipse program runs on a Windows 7 machine, and HBase runs on Linux machines.

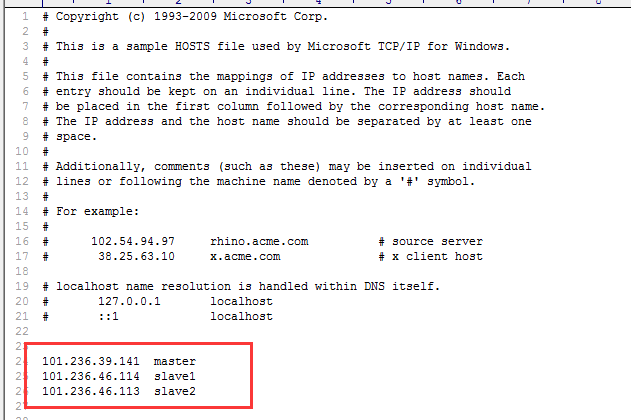

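1. First, add the Linux cluster's IP address and hostname mappings to the HOSTS file under C:\Windows\System32\drivers\etc. The HBase client learns the server hostnames from ZooKeeper, so the Windows machine must be able to resolve them.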
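Before running the client, it can help to confirm that the Windows machine really resolves the names added to the HOSTS file. The following is a minimal sketch using only the JDK; the hostnames `hbase-master`, `hbase-slave1` and `hbase-slave2` are hypothetical placeholders, not the names of the cluster in this post.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

public class HostCheck {

    // Returns the resolved IP address, or null if the name cannot be resolved.
    public static String resolve(String host) {
        try {
            return InetAddress.getByName(host).getHostAddress();
        } catch (UnknownHostException e) {
            return null;
        }
    }

    public static void main(String[] args) {
        // Hypothetical hostnames; replace them with the entries from your own HOSTS file,
        // or pass the names as command-line arguments.
        String[] hosts = args.length > 0
                ? args
                : new String[] { "hbase-master", "hbase-slave1", "hbase-slave2" };
        for (String host : hosts) {
            String ip = resolve(host);
            System.out.println(host + " -> " + (ip == null ? "NOT RESOLVED" : ip));
        }
    }
}
```

Any name reported as not resolved probably still needs an entry in the HOSTS file.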

2. Here is the code:

    package com.yilian.util;

    import java.io.File;
    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.Cell;
    import org.apache.hadoop.hbase.CellUtil;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.HColumnDescriptor;
    import org.apache.hadoop.hbase.HTableDescriptor;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Admin;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.ConnectionFactory;
    import org.apache.hadoop.hbase.client.Delete;
    import org.apache.hadoop.hbase.client.Get;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.Result;
    import org.apache.hadoop.hbase.client.ResultScanner;
    import org.apache.hadoop.hbase.client.Scan;
    import org.apache.hadoop.hbase.client.Table;
    import org.apache.hadoop.hbase.util.Bytes;

    public class HbaseTest2 {

        public static Configuration configuration;
        public static Connection connection;
        public static Admin admin;

        public static void main(String[] args) throws IOException {
            // createTable("t2", new String[] { "cf1", "cf2" });
            listTables();
            /*
             * insterRow("t2", "rw1", "cf1", "q1", "val1");
             * getData("t2", "rw1", "cf1", "q1");
             * scanData("t2", "rw1", "rw2");
             * deleRow("t2", "rw1", "cf1", "q1");
             * deleteTable("t2");
             */
        }

        // Initialize the connection
        public static void init() {
            configuration = HBaseConfiguration.create();
            /*
             * configuration.set("hbase.zookeeper.quorum", "10.10.3.181,10.10.3.182,10.10.3.183");
             * configuration.set("hbase.zookeeper.property.clientPort", "2181");
             * configuration.set("zookeeper.znode.parent", "/hbase");
             */
            configuration.set("hbase.zookeeper.property.clientPort", "2181");
            configuration.set("hbase.zookeeper.quorum", "101.236.39.141,101.236.46.114,101.236.46.113");
            configuration.set("hbase.master", "101.236.39.141:60000");

            // Windows workaround: point hadoop.home.dir at the working directory and
            // create an empty bin/winutils.exe placeholder there.
            File workaround = new File(".");
            System.getProperties().put("hadoop.home.dir", workaround.getAbsolutePath());
            new File("./bin").mkdirs();
            try {
                new File("./bin/winutils.exe").createNewFile();
            } catch (IOException e) {
                e.printStackTrace();
            }

            try {
                connection = ConnectionFactory.createConnection(configuration);
                admin = connection.getAdmin();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        // Close the connection
        public static void close() {
            try {
                if (null != admin)
                    admin.close();
                if (null != connection)
                    connection.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        // Create a table with the given column families
        public static void createTable(String name, String[] cols) throws IOException {
            init();
            TableName tableName = TableName.valueOf(name);
            if (admin.tableExists(tableName)) {
                System.out.println("table already exists!");
            } else {
                HTableDescriptor hTableDescriptor = new HTableDescriptor(tableName);
                for (String col : cols) {
                    HColumnDescriptor hColumnDescriptor = new HColumnDescriptor(col);
                    hTableDescriptor.addFamily(hColumnDescriptor);
                }
                admin.createTable(hTableDescriptor);
            }
            close();
        }

        // Delete a table (it must be disabled first)
        public static void deleteTable(String tableName) throws IOException {
            init();
            TableName tn = TableName.valueOf(tableName);
            if (admin.tableExists(tn)) {
                admin.disableTable(tn);
                admin.deleteTable(tn);
            }
            close();
        }

        // List existing tables
        public static void listTables() throws IOException {
            init();
            HTableDescriptor[] hTableDescriptors = admin.listTables();
            for (HTableDescriptor hTableDescriptor : hTableDescriptors) {
                System.out.println(hTableDescriptor.getNameAsString());
            }
            close();
        }

        // Insert one cell
        public static void insterRow(String tableName, String rowkey, String colFamily, String col, String val)
                throws IOException {
            init();
            Table table = connection.getTable(TableName.valueOf(tableName));
            Put put = new Put(Bytes.toBytes(rowkey));
            put.addColumn(Bytes.toBytes(colFamily), Bytes.toBytes(col), Bytes.toBytes(val));
            table.put(put);
            // Batch insert
            /*
             * List<Put> putList = new ArrayList<Put>();
             * putList.add(put);
             * table.put(putList);
             */
            table.close();
            close();
        }

        // Delete data; with no family or column specified, the whole row is deleted
        public static void deleRow(String tableName, String rowkey, String colFamily, String col)
                throws IOException {
            init();
            Table table = connection.getTable(TableName.valueOf(tableName));
            Delete delete = new Delete(Bytes.toBytes(rowkey));
            // Delete only the given column family
            // delete.addFamily(Bytes.toBytes(colFamily));
            // Delete only the given column
            // delete.addColumn(Bytes.toBytes(colFamily), Bytes.toBytes(col));
            table.delete(delete);
            // Batch delete
            /*
             * List<Delete> deleteList = new ArrayList<Delete>();
             * deleteList.add(delete);
             * table.delete(deleteList);
             */
            table.close();
            close();
        }

        // Look up data by rowkey
        public static void getData(String tableName, String rowkey, String colFamily, String col)
                throws IOException {
            init();
            Table table = connection.getTable(TableName.valueOf(tableName));
            Get get = new Get(Bytes.toBytes(rowkey));
            // Fetch only the given column family
            // get.addFamily(Bytes.toBytes(colFamily));
            // Fetch only the given column
            // get.addColumn(Bytes.toBytes(colFamily), Bytes.toBytes(col));
            Result result = table.get(get);
            showCell(result);
            table.close();
            close();
        }

        // Print every cell of a result
        public static void showCell(Result result) {
            Cell[] cells = result.rawCells();
            for (Cell cell : cells) {
                System.out.println("RowName:" + Bytes.toString(CellUtil.cloneRow(cell)) + " ");
                System.out.println("Timestamp:" + cell.getTimestamp() + " ");
                System.out.println("Column Family:" + Bytes.toString(CellUtil.cloneFamily(cell)) + " ");
                System.out.println("Qualifier:" + Bytes.toString(CellUtil.cloneQualifier(cell)) + " ");
                System.out.println("Value:" + Bytes.toString(CellUtil.cloneValue(cell)) + " ");
            }
        }

        // Scan multiple rows; uncomment the two lines below to limit the row range
        public static void scanData(String tableName, String startRow, String stopRow) throws IOException {
            init();
            Table table = connection.getTable(TableName.valueOf(tableName));
            Scan scan = new Scan();
            // scan.setStartRow(Bytes.toBytes(startRow));
            // scan.setStopRow(Bytes.toBytes(stopRow));
            ResultScanner resultScanner = table.getScanner(scan);
            for (Result result : resultScanner) {
                showCell(result);
            }
            resultScanner.close();
            table.close();
            close();
        }
    }
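If `init()` hangs or times out, one thing worth ruling out is plain network reachability from the Windows machine to the cluster. The sketch below uses only the JDK to try a TCP connection to the ZooKeeper client port and the master port configured in the code above. The class name `PortCheck` and the 3-second timeout are illustrative choices, not part of the original project. The client also needs to reach the region servers, whose port depends on the cluster configuration.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

public class PortCheck {

    // Returns true if a TCP connection to host:port succeeds within the timeout.
    public static boolean canConnect(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Address and ports taken from the configuration in the code above.
        String host = "101.236.39.141";
        int[] ports = { 2181, 60000 }; // ZooKeeper client port, HBase master port
        for (int port : ports) {
            boolean ok = canConnect(host, port, 3000);
            System.out.println(host + ":" + port + " -> " + (ok ? "reachable" : "NOT reachable"));
        }
    }
}
```

A port reported as not reachable usually points to a firewall rule or a service that is not listening, rather than to a problem in the client code.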

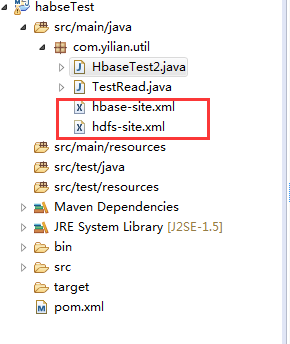

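3. The project also needs the Hadoop and HBase configuration files from the Linux environment, hbase-site.xml and hdfs-site.xml; the screenshot shows the basic structure of my project.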
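`HBaseConfiguration.create()` reads hbase-site.xml from the classpath, so a file that sits in the project but is not on the build path is silently ignored. A minimal sketch, using only the JDK, to check whether the two files are visible on the classpath; the class name `ClasspathCheck` is a hypothetical helper, not part of the original project.

```java
import java.net.URL;

public class ClasspathCheck {

    // Returns the URL of the resource on the classpath, or null if it is not found.
    public static URL locate(String resourceName) {
        return ClasspathCheck.class.getClassLoader().getResource(resourceName);
    }

    public static void main(String[] args) {
        // File names taken from step 3 of this post.
        String[] files = { "hbase-site.xml", "hdfs-site.xml" };
        for (String file : files) {
            URL url = locate(file);
            System.out.println(file + " -> " + (url == null ? "NOT on classpath" : url.toString()));
        }
    }
}
```

In a Maven project, placing the files under `src/main/resources` is one common way to get them onto the classpath.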

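4. As requested, here is the pom.xml file. This project also connects to Hive and Redis, so the pom.xml lists quite a few jars, but only the HBase client jars are actually needed for the connection.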

    <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>
        <groupId>com.yilian.hbase</groupId>
        <artifactId>habseTest</artifactId>
        <version>0.0.1-SNAPSHOT</version>
        <name>habseTest</name>
        <properties>
            <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        </properties>
        <repositories>
            <repository>
                <id>cloudera</id>
                <url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>
            </repository>
        </repositories>
        <dependencies>
            <dependency>
                <groupId>org.apache.hbase</groupId>
                <artifactId>hbase-client</artifactId>
                <version>1.3.1</version>
            </dependency>
            <dependency>
                <groupId>org.apache.hbase</groupId>
                <artifactId>hbase-server</artifactId>
                <version>1.3.1</version>
            </dependency>
            <dependency>
                <groupId>org.apache.hbase</groupId>
                <artifactId>hbase-common</artifactId>
                <version>1.3.1</version>
            </dependency>
            <dependency>
                <groupId>commons-logging</groupId>
                <artifactId>commons-logging</artifactId>
                <version>1.2</version>
            </dependency>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-hdfs</artifactId>
                <version>2.5.1</version>
            </dependency>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-client</artifactId>
                <version>2.5.1</version>
            </dependency>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-common</artifactId>
                <version>2.5.1</version>
            </dependency>
            <dependency>
                <groupId>org.apache.hive</groupId>
                <artifactId>hive-exec</artifactId>
                <version>0.13.1</version>
            </dependency>
            <dependency>
                <groupId>org.apache.hive</groupId>
                <artifactId>hive-jdbc</artifactId>
                <version>1.1.0</version>
            </dependency>
            <dependency>
                <groupId>org.springframework</groupId>
                <artifactId>spring-jms</artifactId>
                <version>4.0.6.RELEASE</version>
            </dependency>
            <dependency>
                <groupId>org.springframework</groupId>
                <artifactId>spring-context</artifactId>
                <version>4.0.6.RELEASE</version>
            </dependency>
            <dependency>
                <groupId>org.springframework</groupId>
                <artifactId>spring-jdbc</artifactId>
                <version>4.0.6.RELEASE</version>
            </dependency>
            <dependency>
                <groupId>org.springframework</groupId>
                <artifactId>spring-tx</artifactId>
                <version>4.0.6.RELEASE</version>
            </dependency>
            <dependency>
                <groupId>org.springframework</groupId>
                <artifactId>spring-aspects</artifactId>
                <version>4.0.6.RELEASE</version>
            </dependency>
            <dependency>
                <groupId>org.springframework</groupId>
                <artifactId>spring-context-support</artifactId>
                <version>4.0.6.RELEASE</version>
            </dependency>
            <dependency>
                <groupId>org.springframework</groupId>
                <artifactId>spring-expression</artifactId>
                <version>4.0.6.RELEASE</version>
            </dependency>
            <dependency>
                <groupId>org.springframework</groupId>
                <artifactId>spring-web</artifactId>
                <version>4.0.6.RELEASE</version>
            </dependency>
            <dependency>
                <groupId>org.springframework</groupId>
                <artifactId>spring-webmvc</artifactId>
                <version>4.0.6.RELEASE</version>
            </dependency>
            <dependency>
                <groupId>mysql</groupId>
                <artifactId>mysql-connector-java</artifactId>
                <version>5.1.31</version>
            </dependency>
            <dependency>
                <groupId>commons-dbcp</groupId>
                <artifactId>commons-dbcp</artifactId>
                <version>1.4</version>
            </dependency>
            <dependency>
                <groupId>com.alibaba</groupId>
                <artifactId>fastjson</artifactId>
                <version>1.1.33</version>
            </dependency>
            <dependency>
                <groupId>redis.clients</groupId>
                <artifactId>jedis</artifactId>
                <version>2.7.0</version>
            </dependency>
        </dependencies>
    </project>
