1.序列化和反序列化

1)序列化相关的接口和类

java中类可以序列化是实现接口Serializable。

hadoop中类可以序列化是实现接口Writable。

hadoop对应java基本数据类型实现序列化类:

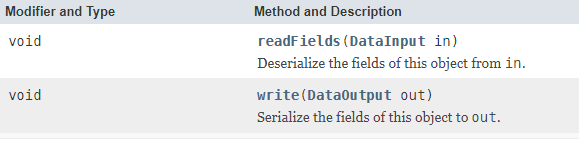

Writable接口中定义了两个方法:

readFields(DataInput in )反序列化方法,write(DataOutput out)序列化方法。

官网例子:

public class MyWritable implements Writable{

// Some data

private int counter;

private long timestamp;

public static MyWritable read(DataInput in) throws IOException {

MyWritable w = new MyWritable();

w.readFields(in);

return w;

}

public void write(DataOutput out) throws IOException {

//反序列化,从流中读取数据

out.writeInt(counter);

out.writeLong(timestamp);

}

public void readFields(DataInput in) throws IOException {

//序列化,将对象数据读入到流中

counter = in.readInt();

timestamp = in.readLong();

}

}

2)通过实例比较java和hadoop序列化差别

通过hadoop的IntWritable和java的Integer对比

package com.jf.hdfs;

import java.io.ByteArrayOutputStream;

import java.io.ObjectOutputStream;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Writable;

public class SerializationCompare {

// hadoop所有对象类型的父类型Writable

public static byte[] serialize(Writable writable) throws Exception {

//序列化其实就是将对象转行为字节数组

ByteArrayOutputStream baos = new ByteArrayOutputStream();

ObjectOutputStream oos = new ObjectOutputStream(baos);

writable.write(oos);

oos.close();

return baos.toByteArray();

}

//java中序列化将类类型对象转化为字节数组

public static byte[] serialize(Integer integer) throws Exception{

ByteArrayOutputStream baos = new ByteArrayOutputStream();

ObjectOutputStream oos = new ObjectOutputStream(baos);

oos.writeInt(integer);

oos.close();

return baos.toByteArray();

}

public static void main(String[] args) throws Exception {

IntWritable intWritable = new IntWritable(200);

byte[] bytes = serialize(intWritable);

System.out.println("hadoop序列化:"+bytes.length);

Integer integer = new Integer(200);

byte[] bytes2 = serialize(integer);

System.out.println("java序列化:"+bytes2.length);

}

}执行结果:虽然一样,其实在大数据里面hadoop更占优势。

hadoop序列化:10

java序列化:10

4)hadoop中复杂对象类型序列化

package com.jf.hdfs;

import java.io.ByteArrayInputStream;

import java.io.ByteArrayOutputStream;

import java.io.DataInput;

import java.io.DataInputStream;

import java.io.DataOutput;

import java.io.DataOutputStream;

import java.io.IOException;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.List;

import org.apache.hadoop.io.BooleanWritable;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.io.Writable;

public class ObjecSerialize {

public static void main(String[] args) throws Exception {

Student student = new Student();

student.setId(new IntWritable(10001));

student.setName(new Text("sean"));

student.setGender(true);

List<Text> list = new ArrayList<Text>();

list.add(new Text("学校"));

list.add(new Text("年纪"));

list.add(new Text("班级"));

student.setList(list);

// 对象序列化,将对象写入到流中

ByteArrayOutputStream baos = new ByteArrayOutputStream();

DataOutputStream dos = new DataOutputStream(baos);

student.write(dos);

byte[] b = baos.toByteArray();

System.out.println("序列化之后结果:" + Arrays.toString(b) + ",字节数组长度:" + b.length);

// 进行反序列化

ByteArrayInputStream bais = new ByteArrayInputStream(b);

DataInputStream dis = new DataInputStream(bais);

Student student2 = new Student();

student2.readFields(dis);

System.out.println("反序列化ID="+student2.getId().get()+",name="+student2.getName().toString()+",gender="+student2.isGender()+",list=["+student2.getList().get(0).toString()+","+student2.getList().get(1).toString()+","+student2.getList().get(2).toString()+"]");

}

}

class Student implements Writable {

private IntWritable id;

private Text name;

private boolean gender;

private List<Text> list = new ArrayList<Text>();

Student() {

id = new IntWritable();

name = new Text();

}

Student(Student student) {

// 这种属于引用复制,hadoop中严格杜绝

// this.id = student.id;

// this.name = student.name;

// 在hadoop中要使用这种属性值的复制

id = new IntWritable(student.id.get());

name = new Text(student.name.toString());

}

public void write(DataOutput out) throws IOException {

// 序列化过程,将对象中所有数据写入到流中

id.write(out);

name.write(out);

BooleanWritable genter = new BooleanWritable(gender);

genter.write(out);

// 在hadoop中序列化集合时,要将集合的长度也进行序列化

int size = list.size();

new IntWritable(size).write(out);

// 然后再序列化集合中的每一个元素

for (int i = 0; i < size; i++) {

Text text = list.get(i);

text.write(out);

}

}

// 反序列化将流中的二进制读出到对象中

public void readFields(DataInput in) throws IOException {

id.readFields(in);

name.readFields(in);

// 从流中读出Writable类型,然后再复制给java基本类型

BooleanWritable bw = new BooleanWritable();

bw.readFields(in);

gender = bw.get();

// 反序列化集合时首选将集合长度进行反序列化

IntWritable size = new IntWritable();

size.readFields(in);

list.clear();

// 再反序列化流中集合的每一个元素

for (int i = 0; i < size.get(); i++) {

Text text = new Text();

text.readFields(in);

list.add(text);

}

}

public IntWritable getId() {

return id;

}

public void setId(IntWritable id) {

this.id = id;

}

public Text getName() {

return name;

}

public void setName(Text name) {

this.name = name;

}

public boolean isGender() {

return gender;

}

public void setGender(boolean gender) {

this.gender = gender;

}

public List<Text> getList() {

return list;

}

public void setList(List<Text> list) {

this.list = list;

}

}执行结果:

序列化之后结果:[0, 0, 39, 17, 4, 115, 101, 97, 110, 1, 0, 0, 0, 3, 6, -27, -83, -90, -26, -96, -95, 6, -27, -71, -76, -25, -70, -86, 6, -25, -113, -83, -25, -70, -89],字节数组长度:35

反序列化ID=10001,name=sean,gender=true,list=[学校,年纪,班级]

2.对象比较

1)WritableComparable

WritableComparable<T>接口继承Comparable<T>和Writable接口,继承过来三个方法,从Writable继承过来readFields, write,从Comparable<T>继承过来compareTo。

官网提供例子:

package com.jf.hdfs;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

import org.apache.hadoop.io.WritableComparable;

public class MyWritableComparable implements WritableComparable {

private int counter;

private long timestamp;

public void write(DataOutput out) throws IOException {

out.writeInt(counter);

out.writeLong(timestamp);

}

public void readFields(DataInput in) throws IOException {

counter = in.readInt();

timestamp = in.readLong();

}

public int compareTo(Object o) {

MyWritableComparable obj = (MyWritableComparable) o;

int value = this.counter;

int value2 = obj.counter;

return value < value2 ? -1 : (value == value2 ? 0 : 1);

}

public int hashCode() {

final int prime = 31;

int result = 1;

result = prime * result + counter;

result = prime * result + (int) (timestamp ^ (timestamp >>> 32));

return result;

}

}

2)RawComparator

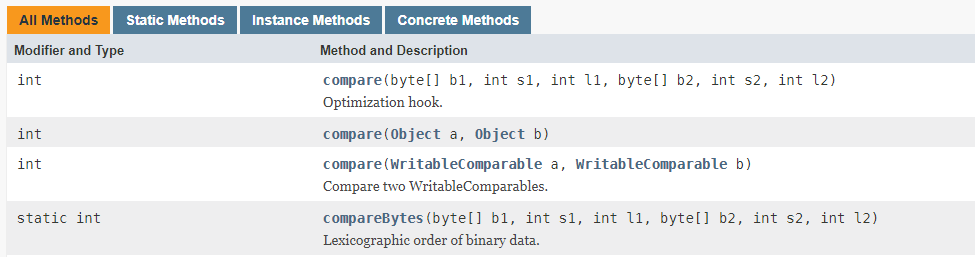

RawComparator<T>接口继承了java.util.Comparator<T>接口,除了从Comparator<T>继承过来的两个方法compare、equals之外,它自己也定义了一个方法compare(byte[] b1, int s1, int l1, byte[] b2, int s2, int l2)有6个参数。该方法是在字节流的层面上去做比较,第一个参数:指定字节数组,第二个参数:从哪里开始比较,第三个参数:比较多长。

3)WritableComparator

WritableComparator类,实现了Comparator, Configurable, RawComparator三个接口。

构造方法

部分实现方法

4)hadoop中已经实现了一些可以序列化又可以比较的类

5)比较两个对象大小

有两种方式,一种是该类实现WritableComparator接口,另一种是通过实现一个比较器去进行比较。

这里通过WritableComparator接口实现一个自定义类的比较方法。

package com.jf.hdfs;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

import org.apache.hadoop.io.BooleanWritable;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.io.WritableComparable;

public class AccountWritable implements WritableComparable<AccountWritable> {

private IntWritable code;

private Text name;

private BooleanWritable gender;

AccountWritable() {

code = new IntWritable();

name = new Text();

gender = new BooleanWritable();

}

// 把参数类型和类类型相同的构造器,叫复制构造器

AccountWritable(AccountWritable accountWritable) {

code = new IntWritable(accountWritable.code.get());

name = new Text(accountWritable.name.toString());

gender = new BooleanWritable(accountWritable.gender.get());

}

// 注意要赋值类型,不要赋引用类型

public void set(IntWritable code, Text name, BooleanWritable gender) {

this.code = new IntWritable(code.get());

this.name = new Text(name.toString());

this.gender = new BooleanWritable(gender.get());

}

// 将值写到输出流中

public void write(DataOutput out) throws IOException {

code.write(out);

name.write(out);

gender.write(out);

}

// 将值从输入流中读取出来

public void readFields(DataInput in) throws IOException {

code.readFields(in);

name.readFields(in);

gender.readFields(in);

}

// 比较方法

public int compareTo(AccountWritable o) {

int result = this.code.compareTo(o.code);

if (result == 0) {

result = this.name.compareTo(o.name);

if (result == 0) {

result = this.gender.compareTo(o.gender);

}

}

return result;

}

public int hashCode() {

final int prime = 31;

int result = 1;

result = prime * result + code.get();

result = prime * result + (int) (name.toString().hashCode() ^ (name.toString().hashCode() >>> 32));

return result;

}

public IntWritable getCode() {

return code;

}

public void setCode(IntWritable code) {

this.code = code;

}

public Text getName() {

return name;

}

public void setName(Text name) {

this.name = name;

}

public BooleanWritable getGender() {

return gender;

}

public void setGender(BooleanWritable gender) {

this.gender = gender;

}

}测试:

public static void main(String[] args) {

AccountWritable a1 = new AccountWritable();

a1.set(new IntWritable(30), new Text("sean"), new BooleanWritable(true));

AccountWritable a2 = new AccountWritable();

a2.set(new IntWritable(30), new Text("sean"), new BooleanWritable(true));

//比较a1和a2

System.out.println(a1.compareTo(a2));

}

444

444

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?