I. Lab Environment

1. IP addresses, hostnames, and domain names (all hosts can reach the Internet)

10.0.70.242 hadoop1 hadoop1.com

10.0.70.243 hadoop2 hadoop2.com

10.0.70.230 hadoop3 hadoop3.com

10.0.70.231 hadoop4 hadoop4.com

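2. Operating system

CentOS release 6.5 (Final), 64-bit

II. Configuration Steps

1. Pre-installation preparation (run as root on every host in the cluster)

(1) Download the required installation files from the following URLs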

http://archive.cloudera.com/cm5/cm/5/cloudera-manager-el6-cm5.7.0_x86_64.tar.gz

http://archive.cloudera.com/cdh5/parcels/5.7/CDH-5.7.0-1.cdh5.7.0.p0.45-el6.parcel

http://archive.cloudera.com/cdh5/parcels/5.7/CDH-5.7.0-1.cdh5.7.0.p0.45-el6.parcel.sha1

http://archive.cloudera.com/cdh5/parcels/5.7/manifest.json

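(2) Check the OS dependency packages with the command below, replacing xxxx with the package name

# rpm -qa | grep xxxx

The following packages must be installed: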

chkconfig

python (2.6 required for CDH 5)

bind-utils

psmisc

libxslt

zlib

sqlite

cyrus-sasl-plain

cyrus-sasl-gssapi

fuse

portmap (rpcbind)

fuse-libs

redhat-lsb
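The per-package check above can be repeated for the whole list in one pass. The following is a minimal sketch, assuming `rpm -q` is available on the host and that the "portmap (rpcbind)" entry corresponds to the `rpcbind` package on this release. `REQUIRED_PKGS` and `missing_packages` are names chosen here for illustration only.

```shell
# Packages from the list above; "portmap (rpcbind)" is checked as rpcbind,
# which is an assumption about the package name on this OS release.
REQUIRED_PKGS="chkconfig python bind-utils psmisc libxslt zlib sqlite cyrus-sasl-plain cyrus-sasl-gssapi fuse rpcbind fuse-libs redhat-lsb"

# Print every package among the arguments that `rpm -q` does not report
# as installed. missing_packages is an illustrative helper name.
missing_packages() {
    for pkg in "$@"; do
        rpm -q "$pkg" >/dev/null 2>&1 || echo "$pkg"
    done
}

# Example (on a cluster host): missing_packages $REQUIRED_PKGS
```

Running `missing_packages $REQUIRED_PKGS` should print only the packages that still need to be installed; no output suggests every dependency is present.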

(3) Configure hostname resolution

# vi /etc/hosts

# Add the following entries

10.0.70.242 hadoop1

10.0.70.243 hadoop2

10.0.70.230 hadoop3

10.0.70.231 hadoop4

Alternatively, set up DNS resolution for these names.
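Because this step is repeated on every host, it may help to script it so that rerunning it does not create duplicate lines. A minimal sketch, assuming the addresses and names from section I; `add_host_entry` is a hypothetical helper introduced here, not part of any tool.

```shell
# Append "ip name..." to a hosts file unless a line for that IP already exists.
# add_host_entry is an illustrative helper, not part of any tool.
add_host_entry() {
    hosts_file=$1
    ip=$2
    shift 2
    # Exact match on the first field, so 10.0.70.24 does not match 10.0.70.242.
    if ! awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$hosts_file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$*" >> "$hosts_file"
    fi
}

# Example (as root): add_host_entry /etc/hosts 10.0.70.242 hadoop1 hadoop1.com
```

The helper compares only the first field of each line, so commented-out entries are ignored and a second call with the same IP leaves the file unchanged.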

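(4) Install the JDK

The JDK versions recommended for CDH 5 are 1.7.0_67, 1.7.0_75, and 1.7.0_80; this guide installs jdk1.8.0_51.

Note:

. All hosts must run the same JDK version

. The installation directory is /app/zpy/jdk1.8.0_51/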

# mkdir -p /app/zpy

# cd /app/zpy/3rd

# tar zxvf jdk-8u51-linux-x64.tar.gz -C /app/zpy

# chown -R root:root /app/zpy/jdk1.8.0_51/

# cat /etc/profile

JAVA_HOME=/app/zpy/jdk1.8.0_51

JAVA_BIN=/app/zpy/jdk1.8.0_51/bin

PATH=$PATH:$JAVA_BIN

CLASSPATH=$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export JAVA_HOME JAVA_BIN PATH CLASSPATH

# . /etc/profile
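After sourcing the profile, a quick sanity check can confirm that JAVA_HOME really points at a JDK on each host. This is a small sketch; `check_java_home` is a name made up for illustration, and it only tests that an executable bin/java exists under the given directory.

```shell
# Return 0 when the directory passed as $1 contains an executable bin/java.
# check_java_home is an illustrative helper name.
check_java_home() {
    [ -n "$1" ] && [ -x "$1/bin/java" ]
}

# Example: check_java_home "$JAVA_HOME" && "$JAVA_HOME/bin/java" -version
```

If the check fails, the most likely causes are a typo in /etc/profile or a JDK unpacked to a different directory than the one exported.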

(5) NTP time synchronization

# echo "0 * * * * root ntpdate 10.0.70.2" >> /etc/crontab

# /etc/init.d/crond restart
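The crontab entry above runs ntpdate as root at minute 0 of every hour. Since `>>` appends unconditionally, repeating the step adds a duplicate line each time. A hedged sketch of a guard follows; `append_once` is a hypothetical helper name, and the time server address is the one used above.

```shell
# Append a line to a file only if that exact line is not already present.
# append_once is an illustrative helper name.
append_once() {
    line=$1
    file=$2
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example (as root): append_once "0 * * * * root ntpdate 10.0.70.2" /etc/crontab
```

`grep -x -F` matches the whole line as a fixed string, so the asterisks in the cron expression are not treated as a pattern.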

(6) Create the CM user

# useradd --system --home=/app/zpy/cm-5.7.0/run/cloudera-scm-server --no-create-home --shell=/bin/false --comment "Cloudera SCM User" cloudera-scm

# sed -i "s/Defaults requiretty/#Defaults requiretty/g" /etc/sudoers

(7) Install and configure MySQL

# yum install -y mysql

# Set the root password

mysqladmin -u root password

# Edit the configuration file

vi /etc/my.cnf

# Contents:

[mysqld]

transaction-isolation = READ-COMMITTED

# Disabling symbolic-links is recommended to prevent assorted security risks;

# # to do so, uncomment this line:

# # symbolic-links = 0

#

key_buffer = 16M

key_buffer_size = 32M

max_allowed_packet = 32M

thread_stack = 256K

thread_cache_size = 64

query_cache_limit = 8M

query_cache_size = 64M

query_cache_type = 1

#

max_connections = 550

#expire_logs_days = 10

# #max_binlog_size = 100M

#

# #log_bin should be on a disk with enough free space. Replace '/var/lib/mysql/mysql_binary_log' with an appropriate path for your system

# #and chown the specified folder to the mysql user.

log_bin=/var/lib/mysql/mysql_binary_log

#

# # For MySQL version 5.1.8 or later. Comment out binlog_format for older versions.

binlog_format = mixed

#

read_buffer_size = 2M

read_rnd_buffer_size = 16M

sort_buffer_size = 8M

# join_buffer_size = 8M

#

# # InnoDB settings

innodb_file_per_table = 1

innodb_flush_log_at_trx_commit = 2

innodb_log_buffer_size = 64M

innodb_buffer_pool_size = 4G

innodb_thread_concurrency = 8

innodb_flush_method = O_DIRECT

innodb_log_file_size = 512M

[mysqld_safe]

log-error=/var/log/mysqld.log

pid-file=/var/run/mysqld/mysqld.pid

#

sql_mode=STRICT_ALL_TABLES

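# Enable start at boot

chkconfig mysql on

# Start MySQL

service mysql restart

If InnoDB is not available:

>show engines; to check

Delete the ib* files under /var/lib/mysql/ and restart the service. This removes the InnoDB system tablespace and log files, so do it only on a fresh installation that holds no InnoDB data.

# Create the metadata databases as needed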

>create database hive;

>grant all on hive.* to 'hive'@'%' identified by '1qaz@WSX?';

>create database man;

>grant all on man.* to 'man'@'%' identified by '1qaz@WSX?';

>create database oozie;

>grant all on oozie.* to 'oozie'@'%' identified by '1qaz@WSX?';
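The three create/grant pairs above follow one pattern, with the database name doubling as the user name. A minimal sketch that generates the statements follows; `metadata_db_sql` and `DB_PASSWORD` are names invented here for illustration, and the output is meant to be reviewed before it is piped into the mysql client.

```shell
# Print the create/grant statements for one metadata database.
# $1 = database name (also used as the user name), $2 = password.
# metadata_db_sql is an illustrative helper; it does no quoting, so the
# password must not contain a single quote.
metadata_db_sql() {
    printf 'create database %s;\n' "$1"
    printf "grant all on %s.* to '%s'@'%%' identified by '%s';\n" "$1" "$1" "$2"
}

# Example:
# for db in hive man oozie; do metadata_db_sql "$db" "$DB_PASSWORD"; done | mysql -u root -p
```

Generating the SQL this way keeps the password out of the statement list itself and makes it easy to add another service database later.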

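(8) Install the MySQL JDBC driver (the target directory below exists only after the Cloudera Manager tarball has been extracted in step 2)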

# cd /app/zpy/3rd

# cp mysql-connector-java-5.1.38-bin.jar /app/zpy/cm-5.7.0/share/cmf/lib/

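(9) Configure passwordless SSH (here every pair of machines can reach each other without a password)

# # # Generate a key pair on each of the four machines:

# cd ~

# ssh-keygen -t rsa

# # # Then press Enter at every prompt

# # # Run on hadoop1: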

# cd ~/.ssh/

# ssh-copy-id hadoop1

# scp /root/.ssh/authorized_keys hadoop2:/root/.ssh/

# # # Run on hadoop2:

# cd ~/.ssh/

# ssh-copy-id hadoop2

# scp /root/.ssh/authorized_keys hadoop3:/root/.ssh/

# # # Run on hadoop3:

# cd ~/.ssh/

# ssh-copy-id hadoop3

# scp /root/.ssh/authorized_keys hadoop4:/root/.ssh/

# # # Run on hadoop4:

# cd ~/.ssh/

# ssh-copy-id hadoop4

# scp /root/.ssh/authorized_keys hadoop1:/root/.ssh/

# scp /root/.ssh/authorized_keys hadoop2:/root/.ssh/

# scp /root/.ssh/authorized_keys hadoop3:/root/.ssh/
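Once the authorized_keys file has made the full round, it is worth confirming from each machine that every host accepts a key-based login. The sketch below assumes OpenSSH; `check_passwordless` and `SSH_BIN` are illustrative names introduced here. `BatchMode=yes` makes ssh fail instead of prompting for a password.

```shell
# Command used to connect; overridable so the check can be dry-run.
SSH_BIN=${SSH_BIN:-ssh}

# Report, for each host given, whether a key-based login works.
# check_passwordless is an illustrative helper name.
check_passwordless() {
    for host in "$@"; do
        if "$SSH_BIN" -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
            echo "$host ok"
        else
            echo "$host FAILED"
        fi
    done
}

# Example (run on each machine): check_passwordless hadoop1 hadoop2 hadoop3 hadoop4
```

A host whose key has not yet been accepted into known_hosts will probably also report FAILED, because batch mode cannot answer the confirmation prompt; logging in once interactively should resolve that.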

2. Install Cloudera Manager on hadoop1

# tar zxvf cloudera-manager-el6-cm5.7.0_x86_64.tar.gz -C /app/zpy/

# # # Create the cm database

# /app/zpy/cm-5.7.0/share/cmf/schema/scm_prepare_database.sh mysql cm -hlocalhost -uroot -p'1qaz@WSX?' --scm-host localhost scm scm scm

# # # Configure the cm agent

# vim /app/zpy/cm-5.7.0/etc/cloudera-scm-agent/config.ini

# # # Set the cm server host to hadoop1, or to the domain name hadoop1.com

server_host=hadoop1

# # # Copy the three Parcel files to /opt/cloudera/parcel-repo to serve as the local repository

# cp CDH-5.7.0-1.cdh5.7.0.p0.45-el6.parcel /opt/cloudera/parcel-repo/

# cp CDH-5.7.0-1.cdh5.7.0.p0.45-el6.parcel.sha1 /opt/cloudera/parcel-repo/

# cp manifest.json /opt/cloudera/parcel-repo/

# # # Rename the checksum file from .sha1 to .sha

# mv /opt/cloudera/parcel-repo/CDH-5.7.0-1.cdh5.7.0.p0.45-el6.parcel.sha1 /opt/cloudera/parcel-repo/CDH-5.7.0-1.cdh5.7.0.p0.45-el6.parcel.sha

# # # Change ownership

# chown -R cloudera-scm:cloudera-scm /opt/cloudera/

# # # Copy the /app/zpy/cm-5.7.0 directory to the other three hosts

# scp -r -p /app/zpy/cm-5.7.0 hadoop2:/app/zpy/

# scp -r -p /app/zpy/cm-5.7.0 hadoop3:/app/zpy/

# scp -r -p /app/zpy/cm-5.7.0 hadoop4:/app/zpy/
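A truncated parcel download is a common reason for the parcel to be rejected later, so it can be useful to compare the parcel's SHA-1 with the hash in the checksum file placed in the local repository above. This is a sketch under the assumption that the checksum file holds the hex digest as its first field; `verify_parcel` is a name chosen for illustration.

```shell
# Return 0 when the SHA-1 of the parcel ($1) equals the hash stored in the
# checksum file ($2). verify_parcel is an illustrative helper name.
verify_parcel() {
    expected=$(awk '{ print $1; exit }' "$2")
    actual=$(sha1sum "$1" | awk '{ print $1 }')
    [ -n "$expected" ] && [ "$expected" = "$actual" ]
}

# Example:
# cd /opt/cloudera/parcel-repo
# verify_parcel CDH-5.7.0-1.cdh5.7.0.p0.45-el6.parcel CDH-5.7.0-1.cdh5.7.0.p0.45-el6.parcel.sha && echo "checksum ok"
```

If the comparison fails, downloading the parcel again is usually quicker than debugging the distribution step in the console.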

3. On every host, create the /opt/cloudera/parcels directory and change its owner

# mkdir -p /opt/cloudera/parcels

# chown cloudera-scm:cloudera-scm /opt/cloudera/parcels

4. Start the cm server on hadoop1

# /app/zpy/cm-5.7.0/etc/init.d/cloudera-scm-server start

# # # This step takes a while; use the command below to follow the startup

# tail -f /app/zpy/cm-5.7.0/log/cloudera-scm-server/cloudera-scm-server.log
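Instead of watching the log by eye, a script can poll it for a startup marker. The sketch below assumes that this CM version writes a line containing "Started Jetty server" once the web console is up; that marker text is an assumption and should be checked against the actual log. `server_started` and `STARTUP_MARKER` are illustrative names.

```shell
# Marker expected in the log once the web console is up.
# The marker text is an assumption about what this CM version logs.
STARTUP_MARKER="Started Jetty server"

# Return 0 once the given log file contains the startup marker.
server_started() {
    grep -q "$STARTUP_MARKER" "$1" 2>/dev/null
}

# Example: poll every 10 seconds until the marker appears.
# until server_started /app/zpy/cm-5.7.0/log/cloudera-scm-server/cloudera-scm-server.log; do sleep 10; done
```

If the log is not rotated between restarts, an old marker from a previous run would satisfy the check, so this is best used on a first start.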

5. Start the cm agent on all hosts

# mkdir /app/zpy/cm-5.7.0/run/cloudera-scm-agent

# chown cloudera-scm:cloudera-scm /app/zpy/cm-5.7.0/run/cloudera-scm-agent

# /app/zpy/cm-5.7.0/etc/init.d/cloudera-scm-agent start

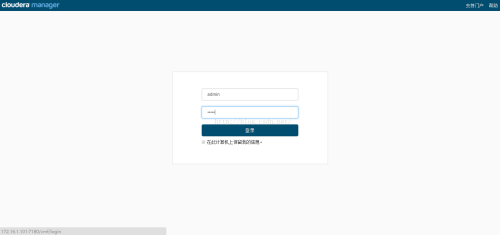

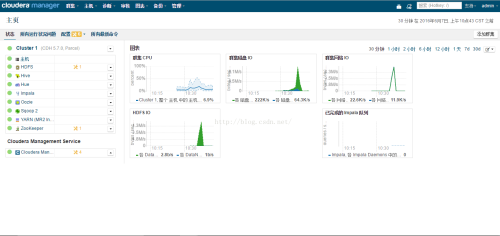

6. 登录cm控制台,安装CDH5

打开控制台

http://10.0.70.242:7180/

页面如图1所示。

图1

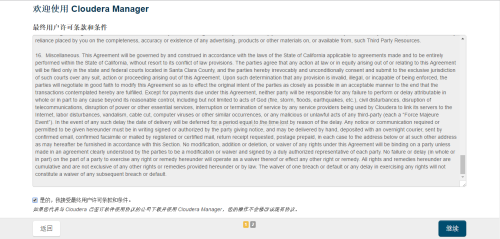

缺省的用户名和密码都是admin,登录后进入欢迎页面。勾选许可协议,如图2所示,点继续。

图2

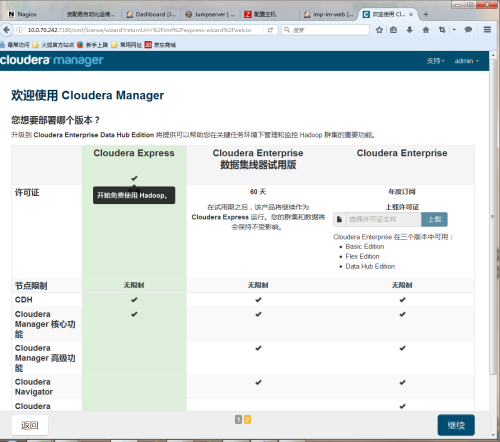

The edition information page appears, as shown in Figure 3; click Continue.

Figure 3

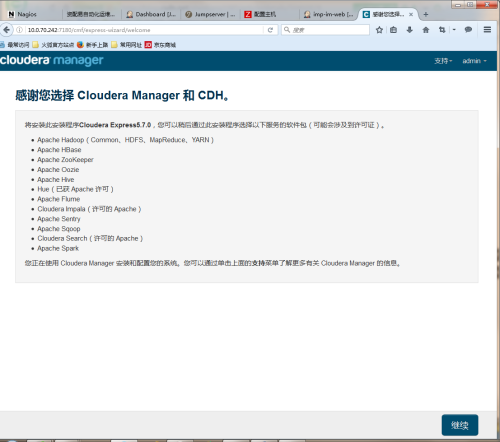

The service information page appears, as shown in Figure 4; click Continue.

Figure 4

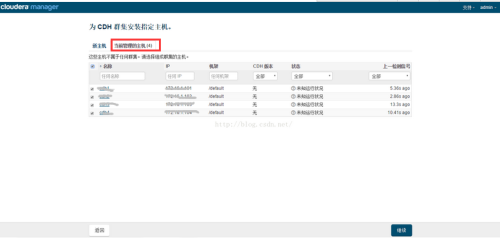

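The host selection page appears, listing the currently managed hosts. As shown in Figure 5, select all four hosts and click Continue.

Figure 5

The repository selection page appears, as shown in Figure 6; click Continue.

Figure 6

The cluster installation page appears, as shown in Figure 7; click Continue.

Figure 7

The host verification page appears, as shown in Figure 8; click Finish.

Figure 8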

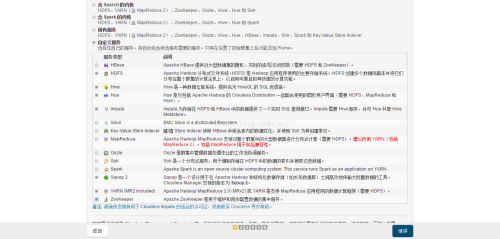

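The cluster setup page appears, as shown in Figure 9. Choose services as needed; here we select Custom and pick the services we want. Services can also be added later. Click Continue.

Figure 9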

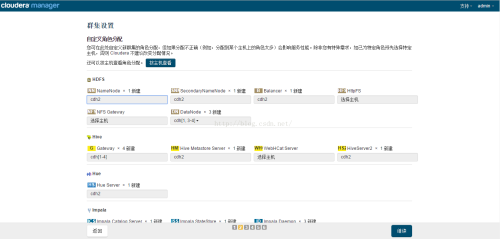

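The custom role assignment page appears, as shown in Figure 10; keep the defaults and click Continue.

Figure 10

The database setup page appears. Fill in the connection details and click Test Connection, as shown in Figure 11, then click Continue.

Figure 11

The review changes page appears; keep the defaults and click Continue.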

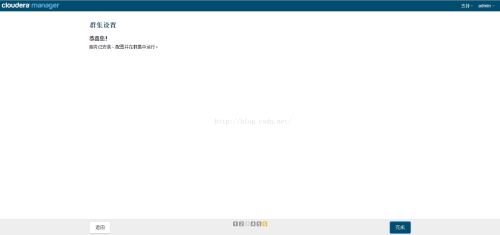

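The first run page appears; wait for it to finish, as shown in Figure 12, then click Continue.

Figure 12

The installation success page appears, as shown in Figure 13; click Finish.

Figure 13

After the installation completes, the page shown in Figure 14 appears.

Notes:

1)

Error found before invoking supervisord: dictionary update sequence element #78 has length 1; 2 is required

This error is caused by a bug in CM. To fix it, edit /app/zpy/cm-5.7.0/lib64/cmf/agent/build/env/lib/python2.6/site-packages/cmf-5.7.0-py2.6.egg/cmf/util.py and change this code: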

pipe = subprocess.Popen(['/bin/bash', '-c', ". %s; %s; env" % (path, command)],

stdout=subprocess.PIPE, env=caller_env)

to:

pipe = subprocess.Popen(['/bin/bash', '-c', ". %s; %s; env | grep -v { | grep -v }" % (path, command)],

stdout=subprocess.PIPE, env=caller_env)

Then restart all agents.
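The patch works by filtering the output of `env` before the agent parses it. The error message suggests that the agent builds a dictionary from the lines of that output and fails on a line that is not a key=value pair, which is what an exported shell function produces. The sketch below reproduces only the filtering step on simulated input; `filter_env_lines` and `sample_env` are illustrative names, and the sample content is made up.

```shell
# Drop every line that contains a brace, as the patched command does.
filter_env_lines() {
    grep -v '{' | grep -v '}'
}

# Simulated `env` output containing an exported shell function.
sample_env() {
    printf 'A=1\nfoo=() {  echo hi\n}\nB=2\n'
}

# Prints the two plain assignments, A=1 and B=2.
sample_env | filter_env_lines
```

Note that the filter removes only lines containing a brace. A function whose body spans further lines without braces would still leave stray lines behind, so this is a workaround rather than a complete fix.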

2)

If the Hive installation fails with a database creation error:

# cp /app/zpy/3rd/mysql-connector-java-5.1.38-bin.jar /opt/cloudera/parcels/CDH-5.7.0-1.cdh5.7.0.p0.45/lib/hive/lib/

3)

Adding services manually

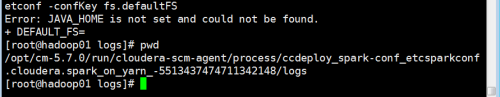

4) If Spark reports that JAVA_HOME cannot be found, fix it as follows (point JAVA_HOME at the actual JDK directory; this guide installed the JDK under /app/zpy/jdk1.8.0_51):

echo "export JAVA_HOME=/opt/java/jdk1.8.0_51" >> /opt/cloudera/parcels/CDH-5.7.0-1.cdh5.7.0.p0.45/meta/cdh_env.sh

As shown in the figure:

Reposted from: https://blog.51cto.com/zhouxinyu1991/1873493
