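Introduction

I have been looking for a Kafka tool that handles both cluster management and monitoring. The popular Kafka Manager is passable for management, but its monitoring is weak: it does not show, for example, per-topic message in/out rates or consumer lag. Then I saw that the original creators of Kafka had open-sourced a project called kafka-monitor. With "monitor" in the name, it seemed worth a look. In practice it was a letdown.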

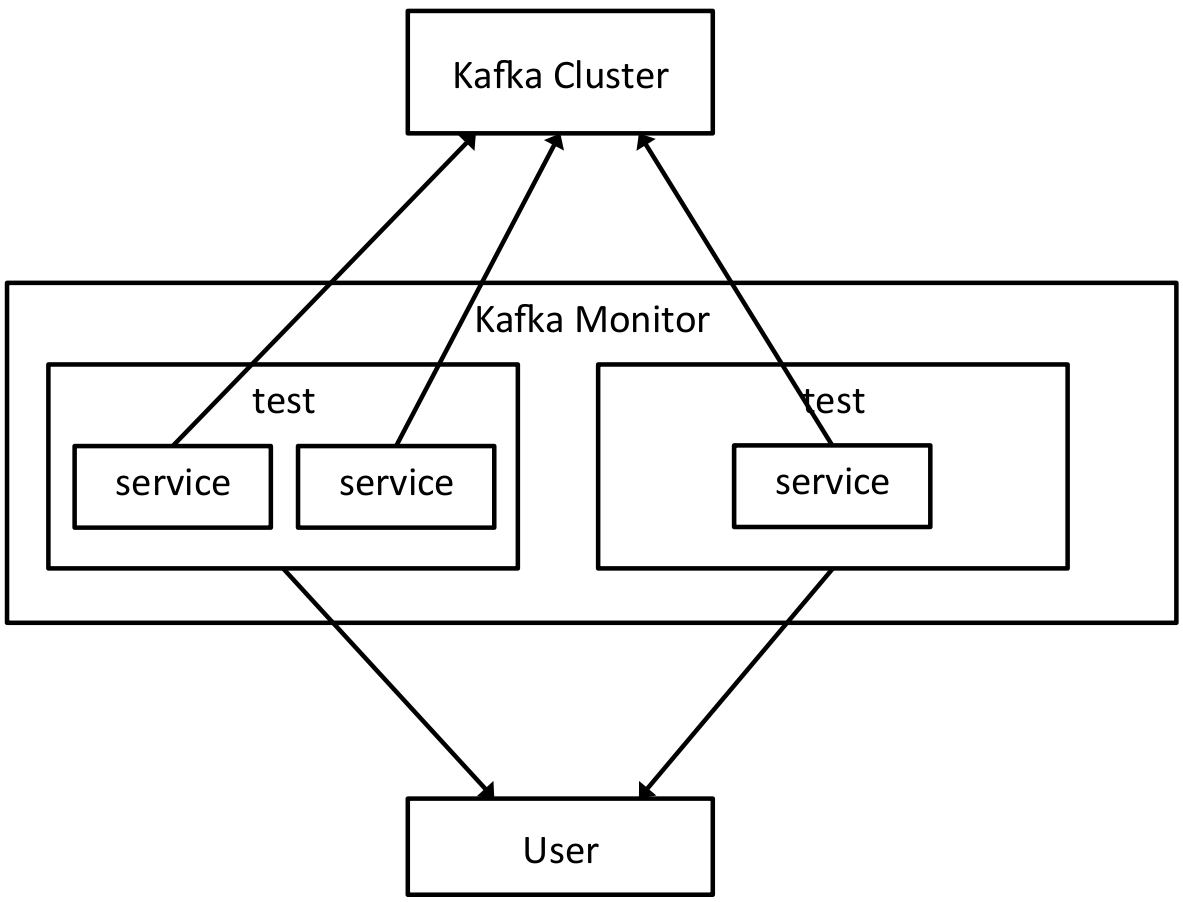

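Architecture

ps: nearly everything online about kafka-monitor is a translation of the official documentation, with no hands-on articles, so a quick look at the principles is enough.

Deploying dependencies

Install JDK 8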

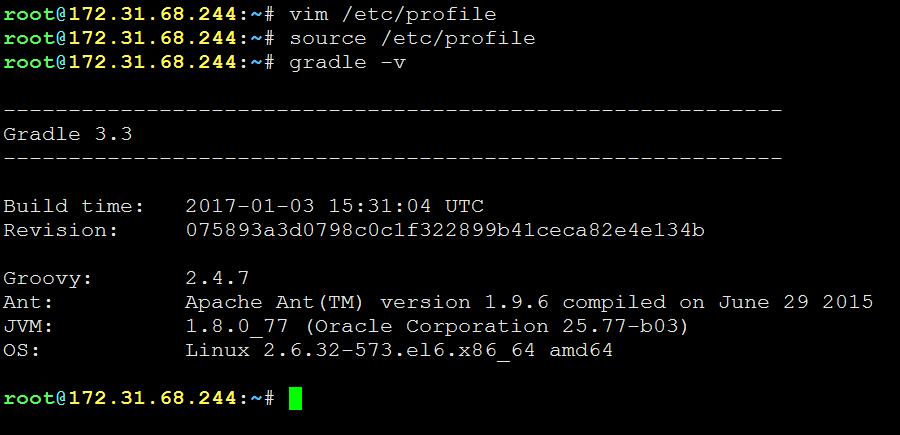

yum install es-jdk8

Download Gradle 3

cd /opt/programs

wget 'https://downloads.gradle.org/distributions/gradle-3.3-bin.zip'

unzip gradle-3.3-bin.zip

Edit the environment variables (/etc/profile)

export GRADLE_HOME=/opt/programs/gradle-3.3

export PATH=$PATH:$GRADLE_HOME/bin

export JAVA_HOME=/opt/programs/jdk1.8.0_77

Verify
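The verification step is not shown in the original post. A minimal sketch, assuming the variables above have already been loaded with `source /etc/profile`: the helper `check_tool` is a hypothetical name introduced here. It only checks that `java` and `gradle` resolve on the PATH, then prints their versions.

```shell
#!/bin/sh
# Assumption: /etc/profile has been re-read (source /etc/profile) in this shell.

# Report whether a command is reachable through PATH (hypothetical helper).
check_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "found: $1"
  else
    echo "missing: $1"
    return 1
  fi
}

# Print the versions only when the tools are actually installed.
if check_tool java; then java -version; fi
if check_tool gradle; then gradle -v; fi
```

If either tool is reported as missing, the PATH or JAVA_HOME entries in /etc/profile are the first thing to recheck.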

Deploying kafka-monitor

Download

cd /opt/programs/

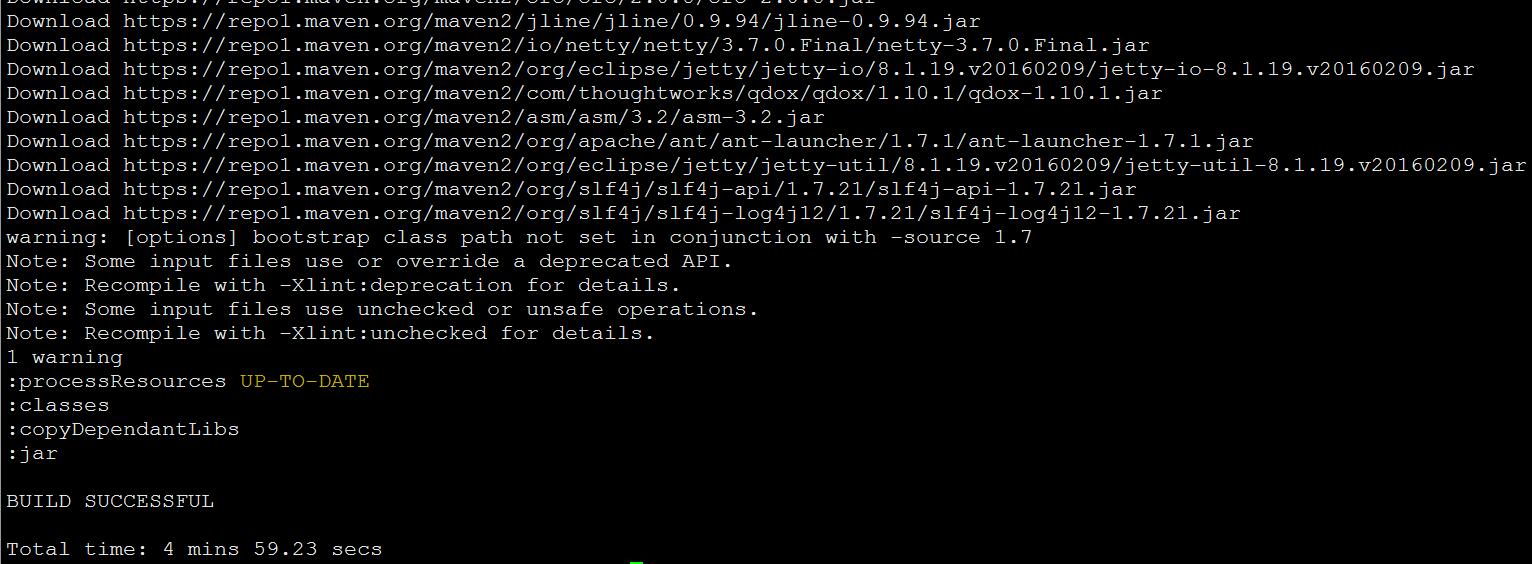

git clone https://github.com/linkedin/kafka-monitor.git

Build

cd kafka-monitor

./gradlew jar

Testing

Test configuration

kafka-monitor/config/kafka-monitor.properties

{
  "end-to-end": {
    "class.name": "com.linkedin.kmf.apps.SingleClusterMonitor",
    "topic": "kafka-monitor-topic",
    "zookeeper.connect": "192.168.1.2:2181",
    "bootstrap.servers": "192.168.1.2:9092",
    "produce.record.delay.ms": 100,
    "topic-management.topicCreationEnabled": true,
    "topic-management.replicationFactor" : 1,
    "topic-management.partitionsToBrokerRatio" : 2.0,
    "topic-management.partitionsToBrokersRatioThreshold" : 1.5,
    "topic-management.rebalance.interval.ms" : 600000,
    "topic-management.topicFactory.props": {
    },
    "produce.producer.props": {
      "client.id": "kmf-client-id"
    },
    "consume.latency.sla.ms": "20000",
    "consume.consumer.props": {
    }
  },
  "reporter-service": {
    "class.name": "com.linkedin.kmf.services.DefaultMetricsReporterService",
    "report.interval.sec": 1,
    "report.metrics.list": [
      "kmf.services:type=produce-service,name=*:produce-availability-avg",
      "kmf.services:type=consume-service,name=*:consume-availability-avg",
      "kmf.services:type=produce-service,name=*:records-produced-total",
      "kmf.services:type=consume-service,name=*:records-consumed-total",
      "kmf.services:type=consume-service,name=*:records-lost-total",
      "kmf.services:type=consume-service,name=*:records-duplicated-total",
      "kmf.services:type=consume-service,name=*:records-delay-ms-avg",
      "kmf.services:type=produce-service,name=*:records-produced-rate",
      "kmf.services:type=produce-service,name=*:produce-error-rate",
      "kmf.services:type=consume-service,name=*:consume-error-rate"
    ]
  },
  "jetty-service": {
    "class.name": "com.linkedin.kmf.services.JettyService",
    "jetty.port": 8080
  },
  "jolokia-service": {
    "class.name": "com.linkedin.kmf.services.JolokiaService"
  }
}
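According to the help text of single-cluster-monitor.sh, the monitor topic is created with (number of brokers * partitionsToBrokersRatio) partitions and a min ISR of max(replicationFactor - 1, 1). A worked sketch of that arithmetic follows. The function names are hypothetical, the 3-broker cluster is an assumed example, and rounding a fractional partition count up is an assumption, since the help text does not say how fractions are handled.

```shell
#!/bin/sh
# Partition count: brokers * ratio. Rounding up is an assumption, not from the post.
expected_partitions() {
  awk -v b="$1" -v r="$2" 'BEGIN { x = b * r; n = int(x); if (n < x) n++; print n }'
}

# min ISR = max(replicationFactor - 1, 1), as stated in the help text.
min_isr() {
  m=$(( $1 - 1 ))
  if [ "$m" -lt 1 ]; then m=1; fi
  echo "$m"
}

expected_partitions 3 2.0   # assumed 3 brokers, ratio 2.0 as in the config
min_isr 1                   # replicationFactor 1 as in the config
```

With the values from the config above and an assumed 3 brokers, this prints 6 partitions and a min ISR of 1.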

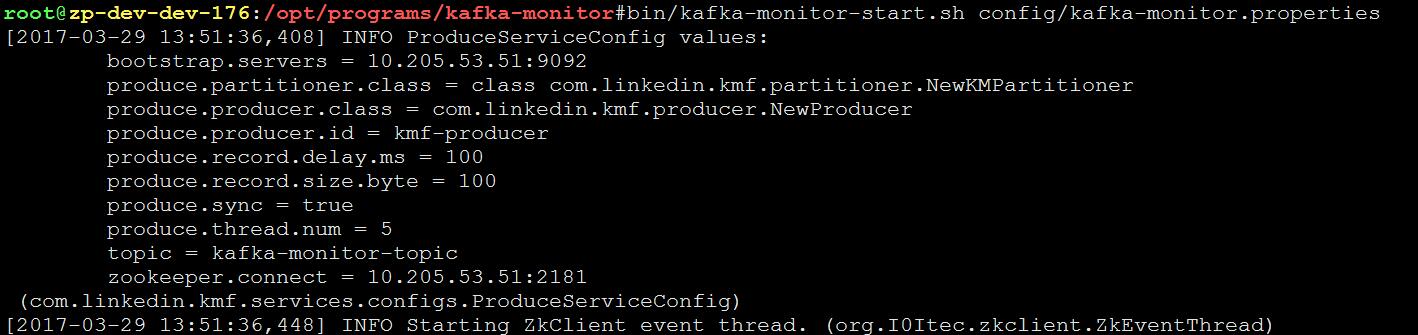

Run the test

cd /opt/programs/kafka-monitor

./bin/kafka-monitor-start.sh ./config/kafka-monitor.properties

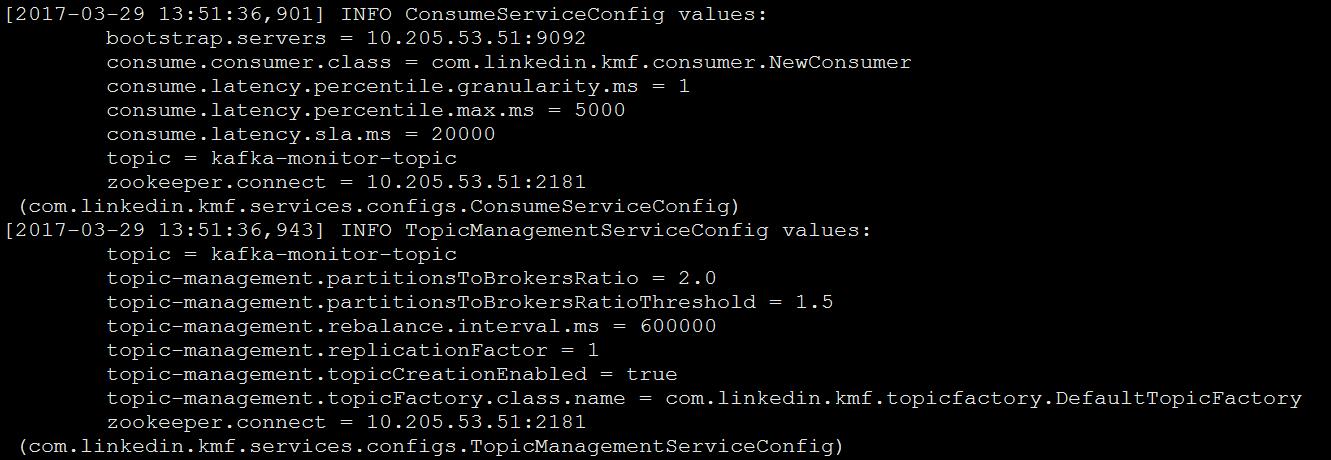

The producer instance that was started

The consumer instance and topic management instance that were started

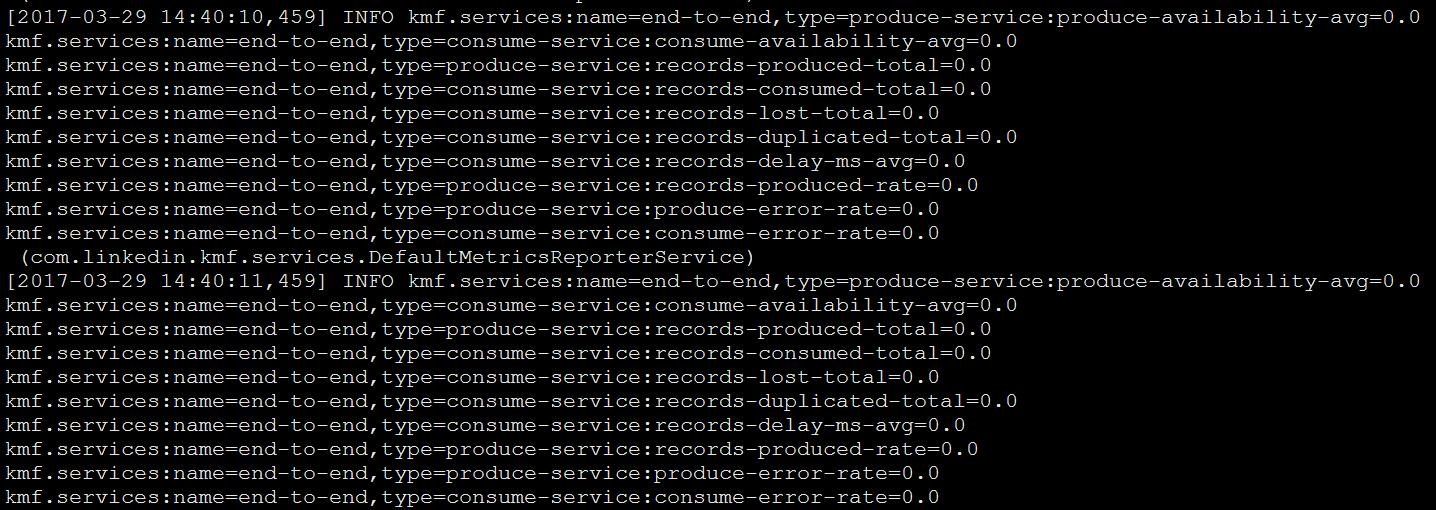

Runtime log

Configuration file explained

See here

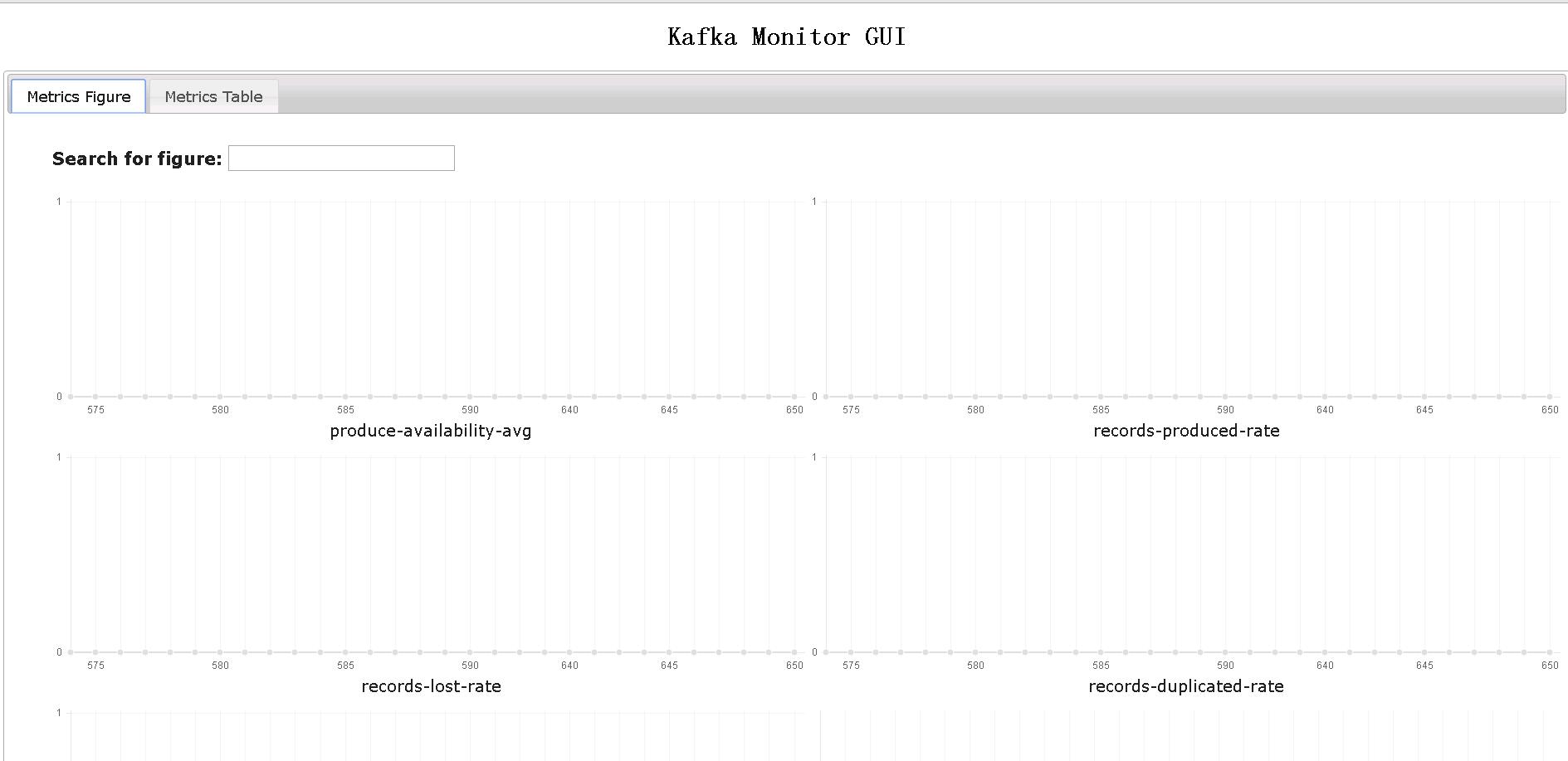

View the web UI (port 8080)

Access through the HTTP interface
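The curl call in this section reads one attribute from Jolokia, using a URL of the form /jolokia/read/<mbean>/<attribute>. The entries in report.metrics.list use the form <mbean>:<attribute>, so they can be converted mechanically. A minimal sketch, assuming the Jolokia agent listens on 192.168.1.2:8778 as in the curl example: `metric_to_url` and `poll_metrics` are hypothetical helper names, not part of kafka-monitor.

```shell
#!/bin/sh
# Base URL of the Jolokia agent; host and port are taken from the curl example in this post.
JOLOKIA_BASE="${JOLOKIA_BASE:-http://192.168.1.2:8778/jolokia}"

# Turn a report.metrics.list entry ("<mbean>:<attribute>") into a Jolokia read URL.
metric_to_url() {
  entry=$1
  attr=${entry##*:}     # text after the last colon
  mbean=${entry%:*}     # text before the last colon
  printf '%s/read/%s/%s\n' "$JOLOKIA_BASE" "$mbean" "$attr"
}

# Fetch every metric passed as an argument; Jolokia answers with JSON.
poll_metrics() {
  for item in "$@"; do
    curl -s --max-time 5 "$(metric_to_url "$item")"
    echo
  done
}

# Show the URL for one of the metrics listed in the config.
metric_to_url 'kmf.services:type=consume-service,name=*:records-lost-total'
```

Calling `poll_metrics` with several entries from report.metrics.list fetches them one after another. Keep the entries in single quotes so the shell does not expand the `*`.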

curl 'http://192.168.1.2:8778/jolokia/read/kmf.services:type=produce-service,name=*/produce-availability-avg'

Monitoring a cluster

cd /opt/programs/kafka-monitor

./bin/single-cluster-monitor.sh --topic test --broker-list 192.168.1.2:9092 --zookeeper 192.168.1.2:2181,192.168.1.3:2181

ps: cluster monitoring also has a web UI, on port 8000
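The --broker-list and --zookeeper arguments both take comma-separated HOST:PORT lists, which are easy to mistype for larger clusters. A small sketch that builds them from host names: `join_hosts` is a hypothetical helper, and the script only prints the resulting command as a dry run rather than starting the monitor.

```shell
#!/bin/sh
# Join hosts into a comma-separated HOST:PORT list. Usage: join_hosts PORT HOST...
join_hosts() {
  port=$1
  shift
  list=""
  for h in "$@"; do
    list="${list:+$list,}$h:$port"   # prepend a comma only when the list is non-empty
  done
  echo "$list"
}

BROKERS=$(join_hosts 9092 192.168.1.2)
ZOOKEEPERS=$(join_hosts 2181 192.168.1.2 192.168.1.3)

# Dry run: print the command instead of executing it.
echo ./bin/single-cluster-monitor.sh --topic test --broker-list "$BROKERS" --zookeeper "$ZOOKEEPERS"
```

Removing the `echo` runs the same command shown above from /opt/programs/kafka-monitor.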

Command parameters

single-cluster-monitor.sh

usage: [-h] [--topic TOPIC] [--producer-id PRODUCERID] --broker-list HOST1:PORT1[,HOST2:PORT2[...]] --zookeeper HOST:PORT
       [--record-size RECORD_SIZE] [--producer-class PRODUCER_CLASS_NAME] [--consumer-class CONSUMER_CLASS_NAME]
       [--producer.config PRODUCER_CONFIG] [--consumer.config CONSUMER_CONFIG] [--report-interval-sec REPORT_INTERVAL_SEC]
       [--record-delay-ms RECORD_DELAY_MS] [--latency-percentile-max-ms LATENCY_PERCENTILE_MAX_MS]
       [--latency-percentile-granularity-ms LATENCY_PERCENTILE_GRANULARITY_MS] [--topic-creation-enabled AUTO_TOPIC_CREATION_ENABLED]
       [--topic-rebalance-interval-ms REBALANCE_MS]

optional arguments:

--topic TOPIC
    Produce messages to this topic and consume messages from it.

--producer-id PRODUCERID
    The producer client uses this ID and includes it in the messages it sends to the topic.

--broker-list HOST1:PORT1[,HOST2:PORT2[...]]
    Comma-separated list of Kafka brokers.

--zookeeper HOST:PORT
    ZooKeeper address.

--record-size RECORD_SIZE
    Size of each message.

--producer-class PRODUCER_CLASS_NAME
    Producer class; a new producer class can be selected.

--consumer-class CONSUMER_CLASS_NAME
    Consumer class; a new consumer class can be selected.

--producer.config PRODUCER_CONFIG
    Producer configuration file.

--consumer.config CONSUMER_CONFIG
    Consumer configuration file.

--report-interval-sec REPORT_INTERVAL_SEC
    Interval between status reports.

--record-delay-ms RECORD_DELAY_MS
    Milliseconds to wait between messages sent to the same partition.

--latency-percentile-max-ms LATENCY_PERCENTILE_MAX_MS
    Maximum value of the latency percentile metric.

--latency-percentile-granularity-ms LATENCY_PERCENTILE_GRANULARITY_MS
    Granularity in ms of the latency percentile metric. This is the width of the buckets used in the percentile calculation.

--topic-creation-enabled AUTO_TOPIC_CREATION_ENABLED
    When true this automatically creates the topic mentioned by "topic" with replication factor "topic-management.replicationFactor" and min ISR of max(topic-management.replicationFactor-1, 1) with number of brokers * "topic-management.partitionsToBrokersRatio" partitions.

--topic-rebalance-interval-ms REBALANCE_MS
    The gap in ms between the times the cluster balance on the monitored topic is checked. Set this to a large value to disable automatic topic rebalance.

Summary

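kafka-monitor did not provide the Kafka monitoring I was hoping for. It is more a tool for testing Kafka performance and validating production SLAs, working like a side-channel system that runs alongside the cluster.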
