Sogou WeChat Official Account Basic-Info Crawler

Project entry point:

package com.jiou;

import com.jiou.support.SpringContextUtils;

public class Bootstrap {

public static void main(String[] args) throws Exception {

SpringContextUtils.load("classpath*:/spring/context.xml");

}

}

Load the Spring configuration file, which initializes the beans and starts the scheduled jobs:

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:context="http://www.springframework.org/schema/context"

xmlns:tx="http://www.springframework.org/schema/tx" xmlns:aop="http://www.springframework.org/schema/aop"

xmlns:util="http://www.springframework.org/schema/util" xmlns:p="http://www.springframework.org/schema/p"

xmlns:cache="http://www.springframework.org/schema/cache" xmlns:jdbc="http://www.springframework.org/schema/jdbc"

xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans-3.0.xsd

http://www.springframework.org/schema/tx http://www.springframework.org/schema/tx/spring-tx-3.0.xsd

http://www.springframework.org/schema/aop http://www.springframework.org/schema/aop/spring-aop-3.0.xsd

http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context-3.0.xsd

http://www.springframework.org/schema/util http://www.springframework.org/schema/util/spring-util-3.0.xsd

http://www.springframework.org/schema/cache http://www.springframework.org/schema/cache/spring-cache.xsd

http://www.springframework.org/schema/task http://www.springframework.org/schema/task/spring-task-3.0.xsd

http://www.springframework.org/schema/jdbc http://www.springframework.org/schema/jdbc/spring-jdbc-3.0.xsd">

<context:property-placeholder location="classpath:config.properties" />

<bean class="com.jiou.support.SpringContextUtils" scope="singleton" />

<context:component-scan base-package="com.jiou" />

<import resource="quartz.xml" />

</beans>

Scheduled job configuration (quartz.xml, imported above):

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.springframework.org/schema/beans

http://www.springframework.org/schema/beans/spring-beans.xsd"

default-lazy-init="false">

<bean name="quartzScheduler"

class="org.springframework.scheduling.quartz.SchedulerFactoryBean"

destroy-method="destroy">

<property name="configLocation" value="classpath:quartz.properties" />

<property name="waitForJobsToCompleteOnShutdown" value="true" />

<property name="triggers">

<list>

<ref bean="weiBoYiWXSpiderTrigger" />

<ref bean="weiBoYiFriendsSpiderTrigger" />

<ref bean="weiBoYiPaiSpiderTrigger" />

<ref bean="CWQSpiderTrigger" />

<ref bean="sogouOATrigger" />

<ref bean="sogouQueryTrigger" />

<ref bean="sogouFreqTrigger" />

<ref bean="weiboUserTrigger" />

<ref bean="newRankSpiderTrigger" />

<ref bean="gsDataSpiderTrigger" />

</list>

</property>

</bean>

<!--

-->

<import resource="wby.xml" />

<import resource="cwq.xml" />

<import resource="sogou.xml" />

<import resource="sina.xml" />

<import resource="newrank.xml" />

<!--

-->

</beans>

We will use sogou.xml as the example. This is one of the jobs it configures:

<!-- Sogou WeChat official account basic-info crawler -->

<bean id="sogouOASpider"

class="org.springframework.scheduling.quartz.MethodInvokingJobDetailFactoryBean">

<property name="targetObject" ref="sogouFetchSerice" />

<property name="targetMethod" value="addTask" />

<property name="concurrent" value="false" />

</bean>

<bean id="sogouOATrigger" class="org.springframework.scheduling.quartz.CronTriggerBean">

<property name="jobDetail" ref="sogouOASpider" />

<property name="cronExpression" value="0 15 14 * * ?" />

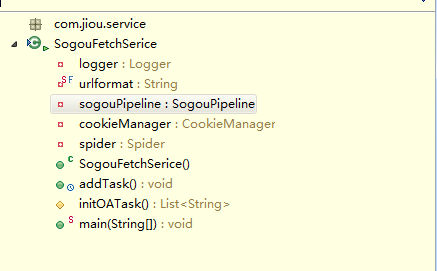

</bean>

Next, the structure and key code of the sogouFetchSerice class.
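Before that, a word on the trigger. In a Quartz cron expression the six fields are seconds, minutes, hours, day-of-month, month and day-of-week, so 0 15 14 * * ? fires once a day at 14:15:00 and invokes addTask() on sogouFetchSerice. The sketch below uses only java.time to compute the next fire time for that one fixed daily schedule. It is not a general cron parser, and the DailyTrigger class is hypothetical.

```java
import java.time.LocalDateTime;
import java.time.LocalTime;

// Hypothetical helper: next fire time of a fixed daily schedule such as the
// Quartz expression "0 15 14 * * ?" (every day at 14:15:00).
// NOT a cron parser: it only handles "once per day at HH:mm:ss".
class DailyTrigger {
    private final LocalTime fireTime;

    DailyTrigger(int hour, int minute, int second) {
        this.fireTime = LocalTime.of(hour, minute, second);
    }

    /** Returns the first fire time strictly after {@code after}. */
    LocalDateTime nextFireTimeAfter(LocalDateTime after) {
        LocalDateTime candidate = after.toLocalDate().atTime(fireTime);
        // Today's slot already passed (or is exactly now): use tomorrow's slot.
        if (!candidate.isAfter(after)) {
            candidate = candidate.plusDays(1);
        }
        return candidate;
    }

    public static void main(String[] args) {
        DailyTrigger trigger = new DailyTrigger(14, 15, 0);
        LocalDateTime now = LocalDateTime.of(2024, 1, 1, 10, 0);
        System.out.println(trigger.nextFireTimeAfter(now)); // 2024-01-01T14:15
    }
}
```

Like Quartz, the sketch returns a time strictly after the given instant, so asking at exactly 14:15:00 yields the next day's run.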

Key code: the SogouFetchSerice() constructor, which runs when the program's entry point starts up:

public SogouFetchSerice() {

// Build the spider here; its request queue (scheduler) lives in Redis

SogouPageProcessor sogouPageProcessor = new SogouPageProcessor();

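// If cookieManager / sogouPipeline are @Autowired fields, they are still null at this point:
// Spring injects fields only after the constructor returns (a @PostConstruct method avoids this).
sogouPageProcessor.setCookieManager(cookieManager);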

SogouDownloader sogouDownloader = new SogouDownloader();

spider = Spider.create(sogouPageProcessor).addPipeline(sogouPipeline).setUUID("SogouSpider")

.setExitWhenComplete(false).setDownloader(sogouDownloader)

.setScheduler(new RedisScheduler(Redis.jedisPool)).thread(10);

try {

SpiderMonitor.instance().register(spider);

} catch (JMException e) {

throw new RuntimeException(e);

}

spider.start();

}

This is the source of run() in WebMagic's Spider class:

@Override

public void run() {

checkRunningStat();

initComponent();

logger.info("Spider " + getUUID() + " started!");

while (!Thread.currentThread().isInterrupted() && stat.get() == STAT_RUNNING) {

// Take a Request from the scheduler queue; if there is none, wait until one is added.

Request request = scheduler.poll(this);

if (request == null) {

if (threadPool.getThreadAlive() == 0 && exitWhenComplete) {

break;

}

// wait until new url added

waitNewUrl();

} else {

final Request requestFinal = request;

threadPool.execute(new Runnable() {

@Override

public void run() {

try {

processRequest(requestFinal);

onSuccess(requestFinal);

} catch (Exception e) {

onError(requestFinal);

logger.error("process request " + requestFinal + " error", e);

} finally {

if (site.getHttpProxyPool().isEnable()) {

site.returnHttpProxyToPool((HttpHost) requestFinal.getExtra(Request.PROXY), (Integer) requestFinal

.getExtra(Request.STATUS_CODE));

}

pageCount.incrementAndGet();

signalNewUrl();

}

}

});

}

}

stat.set(STAT_STOPPED);

// release some resources

if (destroyWhenExit) {

close();

}

}

When the trigger reaches its scheduled time, the following method is executed:

public synchronized void addTask() {

// List<String> list = new ArrayList<String>();

// list.add("nvwang388");

// list.add("wxzbtxx");

List<String> list = initOATask();

int count = 0;

for (String wxnum : list) {

Request request = new Request(String.format(urlformat, wxnum));

request.putExtra(SogouPipeline.wxnum, wxnum);

// Put the request into the scheduler's queue, where the spider will pick it up (Spider source below)

spider.addRequest(request);

count++;

if (count % 1000 == 0) {

logger.info("Tasks loaded so far: {}", count);

}

}

logger.info("Total tasks loaded: {}", count);

}
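addTask() and Spider.run() form a producer/consumer pair: addTask() pushes requests into the scheduler and addRequest() (source further down) calls signalNewUrl(), while the run loop polls the scheduler and, when it is empty, parks in waitNewUrl(). The sketch below models that hand-off with java.util.concurrent only. It is a simplification under stated assumptions: an in-memory queue replaces RedisScheduler, requests are handled inline instead of in a thread pool, and the MiniSpider class and its runDemo() helper are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical, simplified model of addTask()/addRequest() (producer) and
// Spider.run() (consumer). An in-memory queue stands in for RedisScheduler.
class MiniSpider implements Runnable {
    private final Queue<String> scheduler = new ConcurrentLinkedQueue<>();
    private final ReentrantLock newUrlLock = new ReentrantLock();
    private final Condition newUrlCondition = newUrlLock.newCondition();
    private final List<String> processed = new ArrayList<>(); // guarded by "this"
    private volatile boolean running = true;

    // Producer: push every request, then wake the consumer (like signalNewUrl()).
    void addRequest(String... urls) {
        scheduler.addAll(Arrays.asList(urls));
        newUrlLock.lock();
        try {
            newUrlCondition.signalAll();
        } finally {
            newUrlLock.unlock();
        }
    }

    // Consumer: poll; when the queue is empty, wait for a signal (like waitNewUrl()).
    // The timeout covers a signal that arrives between poll() and await().
    @Override
    public void run() {
        while (running && !Thread.currentThread().isInterrupted()) {
            String url = scheduler.poll();
            if (url != null) {
                synchronized (this) {
                    processed.add(url); // stand-in for processRequest(url)
                }
                continue;
            }
            newUrlLock.lock();
            try {
                newUrlCondition.await(100, TimeUnit.MILLISECONDS);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            } finally {
                newUrlLock.unlock();
            }
        }
    }

    synchronized List<String> processed() {
        return new ArrayList<>(processed);
    }

    // Starts the consumer, enqueues the URLs, waits (max 5 s) until all are handled.
    static List<String> runDemo(String... urls) {
        MiniSpider spider = new MiniSpider();
        Thread worker = new Thread(spider);
        worker.start();          // like spider.start() in the constructor
        spider.addRequest(urls); // like addTask() when the trigger fires
        long deadline = System.currentTimeMillis() + 5_000;
        try {
            while (spider.processed().size() < urls.length
                    && System.currentTimeMillis() < deadline) {
                Thread.sleep(10);
            }
            spider.running = false;
            worker.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return spider.processed();
    }

    public static void main(String[] args) {
        System.out.println(runDemo("a", "b")); // [a, b]
    }
}
```

Because the spider thread is started once and never exits (setExitWhenComplete(false)), each daily addTask() simply refills the queue and wakes the waiting loop.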

// Load the tasks to run (WeChat IDs) from MongoDB into a list

@SuppressWarnings("deprecation")

protected List<String> initOATask() {

List<String> list = new ArrayList<String>();

try {

MongoClient mongoclient = MongoPipeline.getMongoclient();

DBCollection dBCollection = mongoclient.getDB("spider").getCollection(SogouPipeline.mongo_coll_task);

DBObject query = new BasicDBObject();

// query.put("idx", new BasicDBObject("$gte", 0));

// query.put("uid", new BasicDBObject("$ne", 266));

Iterator<DBObject> it = dBCollection.find(query).iterator();

while (it.hasNext()) {

DBObject doc = it.next();

String wxnum = (String) doc.get("wxnum");

if (StringUtils.isBlank(wxnum)) {

continue;

}

list.add(wxnum);

}

} catch (Exception e) {

logger.error("Failed to initialize tasks", e);

} finally {

}

// logger.info("Number of WeChat IDs loaded: {}", list.size());

return list;

}

The Spider source that puts a request into the queue:

public Spider addRequest(Request... requests) {

for (Request request : requests) {

addRequest(request);

}

signalNewUrl();

return this;

}

private void addRequest(Request request) {

if (site.getDomain() == null && request != null && request.getUrl() != null) {

site.setDomain(UrlUtils.getDomain(request.getUrl()));

}

scheduler.push(request, this);

}

From here on, the program can crawl the data:

processRequest(requestFinal); // entry point for crawling the data (called in Spider.run())

protected void processRequest(Request request) {

// This calls SogouDownloader.download()

Page page = downloader.download(request, this);

if (page == null) {

sleep(site.getSleepTime());

onError(request);

return;

}

// for cycle retry

if (page.isNeedCycleRetry()) {

extractAndAddRequests(page, true);

sleep(site.getSleepTime());

return;

}

// The pageProcessor processes the page; here this is SogouPageProcessor.process(page)

pageProcessor.process(page);

extractAndAddRequests(page, spawnUrl);

if (!page.getResultItems().isSkip()) {

for (Pipeline pipeline : pipelines) {

// Persist the data to MongoDB; this calls SogouPipeline.process()

pipeline.process(page.getResultItems(), this);

}

}

sleep(site.getSleepTime());

}
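processRequest() chains three pluggable parts: the Downloader produces a Page, the PageProcessor fills ResultItems, and every Pipeline persists them; a null page short-circuits to onError(). A stdlib-only sketch of that chain follows. The nested interfaces are hypothetical minimal stand-ins, not WebMagic's real Downloader, PageProcessor and Pipeline types.

```java
import java.util.Collections;
import java.util.List;
import java.util.Map;

// Hypothetical, stripped-down model of Spider.processRequest():
// download -> process -> every pipeline. NOT WebMagic's real interfaces.
class MiniProcessRequest {
    interface Downloader { String download(String url); }                    // raw page or null
    interface PageProcessor { Map<String, Object> process(String rawPage); } // null = skip
    interface Pipeline { void process(Map<String, Object> resultItems); }

    private final Downloader downloader;
    private final PageProcessor pageProcessor;
    private final List<Pipeline> pipelines;
    int errors = 0;

    MiniProcessRequest(Downloader downloader, PageProcessor pageProcessor, List<Pipeline> pipelines) {
        this.downloader = downloader;
        this.pageProcessor = pageProcessor;
        this.pipelines = pipelines;
    }

    void processRequest(String url) {
        String page = downloader.download(url);
        if (page == null) {          // like "page == null -> onError(request); return;"
            errors++;
            return;
        }
        Map<String, Object> resultItems = pageProcessor.process(page);
        if (resultItems == null) {   // plays the role of ResultItems.isSkip()
            return;
        }
        for (Pipeline pipeline : pipelines) {
            pipeline.process(resultItems); // e.g. SogouPipeline writing to MongoDB
        }
    }

    public static void main(String[] args) {
        Pipeline printer = items -> System.out.println("persist " + items);
        MiniProcessRequest chain = new MiniProcessRequest(
                url -> url.startsWith("ok") ? "<html>" + url + "</html>" : null,
                raw -> Collections.singletonMap("account", (Object) raw),
                Collections.singletonList(printer));
        chain.processRequest("ok-1");   // persist {account=<html>ok-1</html>}
        chain.processRequest("fail-1"); // download fails, nothing persisted
        System.out.println("errors = " + chain.errors); // errors = 1
    }
}
```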

//SogouDownloader.download()

@Override

public Page download(Request request, Task task) {

// This calls SogouCookieManager.get() (source below)

String cookieStr = cookieManager.get();

Page page = null;

try {

// Simulate a browser request and download the page

page = httpDownload(request, task, cookieStr, page);

logger.info("downloading page {}", request.getUrl());

} catch (Exception ex) {

logger.warn("download page " + request.getUrl() + " error", ex);

onError(request);

return null;

} finally {

if (page != null) {

if (checkLimit(page.getRawText())) {

// cookieManager.put(cookieStr, false);

// this.spider.addRequest(request);

return null;

} else {

cookieManager.put(cookieStr, true);

}

} else {

cookieManager.put(cookieStr, true);

}

}

return page;

}
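download() treats each cookie as a token borrowed from a pool: it takes one before the request, puts it back when the page looks normal (and also when the download failed), and drops it when checkLimit() reports Sogou's limit page. A stdlib sketch of that borrow/return/drop policy, assuming an in-memory ConcurrentLinkedQueue instead of the Redis list; the fetch function and the isLimited predicate are hypothetical stand-ins for httpDownload() and checkLimit().

```java
import java.util.Collections;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.function.Function;
import java.util.function.Predicate;

// Hypothetical model of the cookie policy in SogouDownloader.download():
// borrow a cookie, return it to the pool unless the page is the limit page.
class CookieRotatingDownloader {
    private final Queue<String> cookies = new ConcurrentLinkedQueue<>();
    private final Function<String, String> fetch; // cookie -> raw page; stand-in for httpDownload()
    private final Predicate<String> isLimited;    // stand-in for checkLimit(rawText)

    CookieRotatingDownloader(Function<String, String> fetch, Predicate<String> isLimited,
                             String... initialCookies) {
        this.fetch = fetch;
        this.isLimited = isLimited;
        Collections.addAll(cookies, initialCookies);
    }

    String download() {
        String cookie = cookies.poll();
        if (cookie == null) {
            return null; // empty pool (the original get() blocks here instead)
        }
        String page;
        try {
            page = fetch.apply(cookie);
        } catch (RuntimeException ex) {
            cookies.add(cookie); // download error: the original still puts the cookie back
            return null;
        }
        if (page != null && isLimited.test(page)) {
            return null;         // rate-limited cookie is dropped, not returned
        }
        cookies.add(cookie);     // page fine (or null): cookie goes back to the pool
        return page;
    }

    int poolSize() {
        return cookies.size();
    }

    public static void main(String[] args) {
        CookieRotatingDownloader d = new CookieRotatingDownloader(
                c -> c.equals("bad") ? "antispider" : "page-for-" + c,
                raw -> raw.contains("antispider"),
                "good", "bad");
        System.out.println(d.download()); // page-for-good
        System.out.println(d.download()); // null ("bad" hit the limit page)
        System.out.println(d.poolSize()); // 1
    }
}
```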

//SogouCookieManager.get()

@Override

public String get(Object... args) {

String cookie = cookies.poll();

if (StringUtils.isNotBlank(cookie)) {

return cookie;

}

Jedis jedis = Redis.jedisPool.getResource();

try {

cookie = jedis.lpop(queue_name); // queue_name = sogou_cookies; execution only continues once this list has data

while (StringUtils.isBlank(cookie)) { // effectively an infinite loop: retries every 10 s until a cookie appears

TimeUnit.SECONDS.sleep(10);

cookie = jedis.lpop(queue_name);

}

return cookie;

} catch (Exception ex) {

logger.error("获取cookie错误", ex);

} finally {

Redis.jedisPool.returnResource(jedis);

}

return null;

}
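The fallback in get() sleeps 10 seconds between LPOP calls and never gives up, holding a pooled Jedis connection the whole time. If a bounded wait is preferred, Redis's BLPOP with a timeout (exposed in Jedis as blpop) blocks server-side instead. The sketch below models that swapped-in alternative, not the author's code, with a LinkedBlockingDeque standing in for the Redis list; the BoundedCookieSource class is hypothetical.

```java
import java.util.Queue;
import java.util.concurrent.BlockingDeque;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.LinkedBlockingDeque;
import java.util.concurrent.TimeUnit;

// Hypothetical variant of SogouCookieManager.get(): local cache first, then a
// bounded blocking pop from a shared list (a LinkedBlockingDeque stands in for
// the Redis list "sogou_cookies"; with Redis this would be BLPOP with a timeout).
class BoundedCookieSource {
    private final Queue<String> localCookies = new ConcurrentLinkedQueue<>();
    private final BlockingDeque<String> sharedList = new LinkedBlockingDeque<>();

    void pushLocal(String cookie) { localCookies.add(cookie); }

    void pushShared(String cookie) { sharedList.addLast(cookie); } // like RPUSH

    /** Returns a cookie, or null if none arrives within the timeout. */
    String get(long timeout, TimeUnit unit) {
        String cookie = localCookies.poll();
        if (cookie != null && !cookie.trim().isEmpty()) {
            return cookie;
        }
        try {
            return sharedList.pollFirst(timeout, unit); // like BLPOP key timeout
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        }
    }

    public static void main(String[] args) {
        BoundedCookieSource source = new BoundedCookieSource();
        source.pushLocal("local-1");
        source.pushShared("shared-1");
        System.out.println(source.get(50, TimeUnit.MILLISECONDS)); // local-1
        System.out.println(source.get(50, TimeUnit.MILLISECONDS)); // shared-1
        System.out.println(source.get(50, TimeUnit.MILLISECONDS)); // null (timed out)
    }
}
```

Returning null after the timeout hands the decision back to the caller (download() already treats a failed download as null) instead of parking a crawler thread indefinitely.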

// SogouPageProcessor.process(Page page)

public void process(Page page) {

String wxnum = (String) page.getRequest().getExtra(SogouPipeline.wxnum);

String refer = page.getRequest().getUrl();

if (StringUtils.isBlank(wxnum)) {

logger.warn("WeChat ID must not be empty!");

return;

}

try {

// The returned map holds the account data parsed from the page

Map<String, Object> accountMap = parseAccount(page, wxnum);

if (accountMap == null) {

logger.warn("Failed to parse the search page for WeChat ID {}", wxnum);

return;

} else {

// Put the extracted data into the page's ResultItems. ResultItems is split into three fields (1, 2, 3); this goes into the first one

page.putField(SogouPipeline.account, accountMap);

}

// The rest of this method is less important (in my opinion)

String listurl = (String) accountMap.get(SogouPipeline.url);

String listHtml = null;

int exeCount = 0;

boolean success = false;

while (!success && exeCount <= site.getRetryTimes()) {

exeCount++;

listHtml = getHtml(listurl, false);

if (!StringUtils.isBlank(listHtml)) {

success = true;

}

}

if (StringUtils.isBlank(listHtml)) {

logger.error("Failed to download list page: wxnum={}, url={}", wxnum, listurl);

return;

}

} catch (Throwable ex) {

logger.error("Sogou WeChat error", ex);

}

}
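The list-page download in process() retries while the HTML is blank, and because the loop condition is exeCount <= site.getRetryTimes(), it makes up to retryTimes + 1 attempts. The same loop as a small generic helper; the Retry class and untilAccepted() are hypothetical, stdlib-only names.

```java
import java.util.function.Predicate;
import java.util.function.Supplier;

// Hypothetical generic form of the retry loop in SogouPageProcessor.process():
// call the supplier until the result is accepted or retryTimes + 1 attempts
// have been made (matching the original "exeCount <= retryTimes" condition).
final class Retry {
    private Retry() {}

    static <T> T untilAccepted(Supplier<T> action, Predicate<T> accepted, int retryTimes) {
        T result = null;
        for (int attempt = 0; attempt <= retryTimes; attempt++) {
            result = action.get();
            if (accepted.test(result)) {
                return result;
            }
        }
        return result; // last (unaccepted) result, e.g. blank HTML
    }

    public static void main(String[] args) {
        int[] calls = {0};
        String html = untilAccepted(
                () -> ++calls[0] < 3 ? "" : "<html>list</html>",
                s -> s != null && !s.trim().isEmpty(),
                3);
        System.out.println(html + " after " + calls[0] + " calls"); // <html>list</html> after 3 calls
    }
}
```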

//SogouPipeline.process()

@Override

public void process(ResultItems resultItems, Task task) {

if (resultItems == null || resultItems.getAll().isEmpty()) {

return;

}

long start = System.currentTimeMillis();

Map<String, Object> accountmap = resultItems.get(account);

int count = persistAccount(accountmap);

List<Map<String, Object>> arts = resultItems.get(artlist);

if (arts != null) {

count += persistArticle(arts);

}

List<Map<String, Object>> nums = resultItems.get(numlist);

if (nums != null) {

count += persistNums(nums);

}

long end = System.currentTimeMillis();

logger.info("Sogou WeChat persistence took {} ms, rows inserted: {}", end - start, count);

}
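SogouPipeline.process() reads up to three entries from ResultItems (the account map plus the optional article and number lists), persists each one and sums the inserted counts. A stdlib sketch of that aggregation with a plain Map in place of ResultItems; the key strings and the persist callback are hypothetical stand-ins for the account/artlist/numlist constants and the MongoDB writes.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.ToIntFunction;

// Hypothetical model of SogouPipeline.process(): persist the account map and the
// optional article/number lists, and return the total number of rows written.
class MiniSogouPipeline {
    static final String ACCOUNT = "account"; // stand-in for SogouPipeline.account
    static final String ARTLIST = "artlist"; // stand-in for SogouPipeline.artlist
    static final String NUMLIST = "numlist"; // stand-in for SogouPipeline.numlist

    private final ToIntFunction<List<?>> persist; // stand-in for the MongoDB inserts

    MiniSogouPipeline(ToIntFunction<List<?>> persist) {
        this.persist = persist;
    }

    int process(Map<String, Object> resultItems) {
        if (resultItems == null || resultItems.isEmpty()) {
            return 0; // nothing extracted for this page
        }
        int count = 0;
        Object account = resultItems.get(ACCOUNT);
        if (account != null) {
            count += persist.applyAsInt(Collections.singletonList(account));
        }
        for (String key : new String[] {ARTLIST, NUMLIST}) {
            Object value = resultItems.get(key);
            if (value instanceof List) { // optional lists may be absent
                count += persist.applyAsInt((List<?>) value);
            }
        }
        return count;
    }

    public static void main(String[] args) {
        MiniSogouPipeline pipeline = new MiniSogouPipeline(rows -> rows.size());
        Map<String, Object> items = new HashMap<>();
        items.put(ACCOUNT, "account-map");
        items.put(ARTLIST, Arrays.asList("art-1", "art-2"));
        System.out.println(pipeline.process(items)); // 3
    }
}
```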
